Reading Execution Plans

Urh Srecnik

Urh SrecnikI routinely do a "rehearsal" of my presentations with our DBA team and any other willing participants (they tend to be developers) to receive feedback and to share knowledge. In the last one, I've got quite an interesting question, which requires understanding of execution plans - specifically, the order in which operations are executed. This post is my attempt to explain the misconception.

The Query

The query which raised the question doesn't need much explaining. It's a simple example for demonstration purposes:

select /*+ ORDERED */ *

from tab_first tf

join tab_second ts on ts.id_first=tf.id_first;

---------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 4 (100)| |

|* 1 | HASH JOIN | | 6 | 978 | 4 (0)| 00:00:01 |

| 2 | TABLE ACCESS FULL| TAB_FIRST | 3 | 225 | 2 (0)| 00:00:01 |

| 3 | TABLE ACCESS FULL| TAB_SECOND | 6 | 528 | 2 (0)| 00:00:01 |

---------------------------------------------------------------------------------

The Question

Which of the two tables is accessed first?

Is it tab_first because it is explicitly requested to be the leading table or is it tab_second because it is displayed as the last line in execution plan output?

Well, the correct answer is tab_first, but what prompted the question was actually the awareness of the general rule of the order in which execution plans are supposed to be read: from bottom up - starting with the deepest entries (leaf nodes). And, if we'd follow this rule to the letter, the tab_second would be accessed first.

This general rule comes from the way the execution plan is executed. Maybe it can help thinking of it this way: it all starts with root operation (id=0), but in order to complete this root operation it needs full or partial results from its children operations. And the same goes for all children that have children until we get to leaf nodes. That is why we're generally reading the plan as discussed earlier.

Let's first understand how Hash Join and Nested Loops operations work in order to better understand when is which table accessed.

Hash Join

This join method is generally used when joining two larger row sources (two tables in our example) using equality operator.

The optimizer will use statistics to determine which of the two row sources is smaller than the other and proclaim it to be the build table. We can also force specific table to be the build table by explicitly specifying the join order using hints (ORDERED or LEADING).

The other row source (generally the bigger one) is considered the probe table.

In our example, first_table is the build table and second_table is the probe table.

Hash Join will read the whole build table ("smaller one") first and calculate hash on joined columns for each row. The hashes are stored in PGA where each hash value has associated linked list of rows for which the same hash has been calculated.

The probe table (bigger one) is being read only after this hash -> linked list memory structure is built in PGA. This memory structure is probed by each record in the probe table.

So, in our example, tab_first is read first, and after that the tab_second is read.

Nested Loops

Let's now consider an execution plan with Nested Loops such as this one:

---------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

---------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 6 | 102 | 6 (0)| 00:00:01 |

| 1 | NESTED LOOPS | | 6 | 102 | 6 (0)| 00:00:01 |

| 2 | TABLE ACCESS FULL| TAB_FIRST | 3 | 30 | 2 (0)| 00:00:01 |

|* 3 | TABLE ACCESS FULL| TAB_SECOND | 2 | 26 | 1 (0)| 00:00:01 |

---------------------------------------------------------------------------------

Nested loops are probably the easiest to understand: for each row in tab_first loop over all rows in tab_second to find matching rows. tab_first would be considered outer table and tab_second would be considered the inner table in terms of Nested loops terminology.

In regards of when is which table accessed:

- first, a batch of rows is read from

tab_first - for each of the rows in this batch the matching rows in

tab_secondare found

So, tab_first is accessed first, then tab_second is iterated as many times as there is rows in first batch, then tab_first is accessed again for the next batch.

To put it differently, full table scan of tab_first is done once. But by the time it is done, the full table scan of tab_second was already done as many times as there is rows in tab_first.

Connecting the Dots

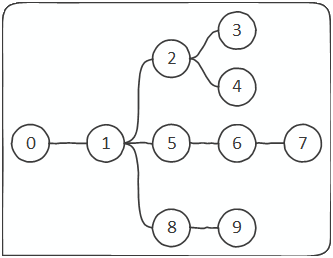

In the similar manner, if we'd have a multi-table join with nested loops, then all three tables would be accessed in described alternating manner and the execution plan would look like this:

----------------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

----------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 8 | 2008 | 12 (0)| 00:00:01 |

| 1 | NESTED LOOPS | | 8 | 2008 | 12 (0)| 00:00:01 |

| 2 | NESTED LOOPS | | 6 | 978 | 6 (0)| 00:00:01 |

| 3 | TABLE ACCESS FULL| TAB_FIRST | 3 | 225 | 2 (0)| 00:00:01 |

|* 4 | TABLE ACCESS FULL| TAB_SECOND | 2 | 176 | 1 (0)| 00:00:01 |

|* 5 | TABLE ACCESS FULL | TAB_THIRD | 1 | 88 | 1 (0)| 00:00:01 |

----------------------------------------------------------------------------------

Hopefully, I managed to explain that reading the execution plan from bottom up, starting with the deepest entries (leaf nodes), is correct. But it does not mean that one operation must complete for the next to begin. And the operations displayed as siblings (tab_first and tab_second in our examples), don't necessarily execute in any specific order.

And if you need more food for thought on the subject - consider thinking about execution plans of parallel queries, pipelined functions and adaptive plans.

References

- Official doc: Oracle Documentation - Joins

Subscribe to my newsletter

Read articles from Urh Srecnik directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Urh Srecnik

Urh Srecnik

I'm an Oracle DBA and a developer at Abakus Plus d.o.o.. My team is responsible for pro-active maintenance of many Oracle Databases and their infrastructure. I am co-author of Abakus's solutions for monitoring Oracle Database performance, APPM, also available as a Free Edition. for backup and recovery: Backup Server for quick provisioning of test & development databases: Deja Vu Also author of open-source DDLFS filesystem which is available on GitHub. I am: OCP Database Administrator OCP Java SE Programmer OCIS Exadata Database Machine and a few more, check my LinkedIn profile for the complete list.