A Comprehensive Overview of Neural Networks and Their Applications in Modern AI

Indu Jawla

Neural networks and deep learning are at the forefront of artificial intelligence (AI) and machine learning (ML), changing how machines perceive, interpret, and interact with data. This article looks at the fundamental neural network architectures, their components, and their applications, along with the practical considerations to keep in mind when training them.

1. Introduction to Neural Networks

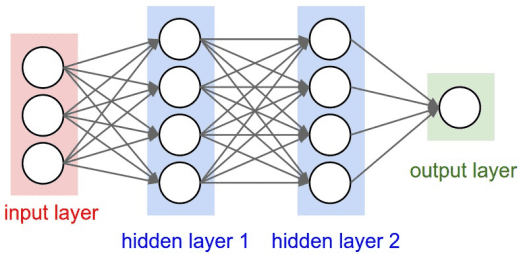

Neural networks are computational models inspired by the human brain’s structure and function. They consist of interconnected nodes, or neurons, organized in layers:

Input Layer: Receives input data.

Hidden Layers: Intermediate layers that process the input through weighted connections.

Output Layer: Produces the final output.

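Neural networks learn by adjusting the weights of their connections to reduce the error between the network's output and the expected result. Backpropagation computes how much each weight contributed to that error, and an optimizer such as gradient descent uses those gradients to update the weights.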

Weights and Biases: Each connection has a weight, which signifies the strength of the connection. Biases allow the model to fit the data better by shifting the activation function.

Activation Functions: Functions that introduce non-linearity into the model, allowing it to learn complex patterns. Common activation functions include sigmoid, hyperbolic tangent (tanh), and the rectified linear unit (ReLU).
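As a minimal sketch of how weights, biases, and activation functions fit together, the NumPy snippet below computes the output of a single neuron. The input, weight, and bias values are made up for illustration, and `neuron` is a hypothetical helper name rather than a library function.

```python
import numpy as np

def sigmoid(z):
    """Squash values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    """Squash values into the range (-1, 1)."""
    return np.tanh(z)

def relu(z):
    """Pass positive values through and clamp negatives to 0."""
    return np.maximum(0.0, z)

def neuron(x, w, b, activation):
    """One neuron: weighted sum of the inputs plus a bias, then an activation."""
    return activation(np.dot(w, x) + b)

x = np.array([1.0, 2.0])     # illustrative input
w = np.array([0.5, -0.25])   # illustrative weights
b = 0.0                      # illustrative bias

# Weighted sum: 0.5 * 1.0 + (-0.25) * 2.0 + 0.0 = 0.0
print(neuron(x, w, b, sigmoid))  # 0.5
print(neuron(x, w, b, relu))     # 0.0
```

Changing `b` shifts the weighted sum before the activation is applied, which is the sense in which a bias shifts the activation function.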

2. Feedforward Neural Networks

Feedforward Neural Networks (FNNs) are the simplest type of neural network. Data flows in one direction — from the input layer through the hidden layers to the output layer — without any cycles or loops.


Structure: Typically consists of an input layer, one or more hidden layers, and an output layer.

Training: Typically uses stochastic gradient descent (SGD) or one of its variants to minimize a loss function, which measures how far the predicted output is from the actual output. The gradients that SGD needs are computed with backpropagation.

Applications: Used in various applications like pattern recognition, regression tasks, and function approximation.
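The structure and training procedure described above can be sketched in plain NumPy. This is an illustrative toy, not a reference implementation: the network size, the tanh hidden layer, the mean squared error loss, the learning rate, and the synthetic regression target (the product of the two inputs) are all assumptions chosen to keep the example small.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_params(n_in, n_hidden, n_out):
    """Small random weights and zero biases for a one-hidden-layer network."""
    return {
        "W1": rng.normal(0.0, 0.5, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(params, X):
    """Data flows one way: input -> hidden (tanh) -> linear output."""
    h = np.tanh(X @ params["W1"] + params["b1"])
    y_hat = h @ params["W2"] + params["b2"]
    return h, y_hat

def mse(y_hat, y):
    """Loss: mean squared distance between prediction and target."""
    return np.mean((y_hat - y) ** 2)

def gradients(params, X, y):
    """Backpropagation: push the loss gradient from the output back to each weight."""
    h, y_hat = forward(params, X)
    d_out = 2.0 * (y_hat - y) / y.size                 # dLoss / dy_hat
    d_h = (d_out @ params["W2"].T) * (1.0 - h ** 2)    # through tanh
    return {
        "W1": X.T @ d_h,
        "b1": d_h.sum(axis=0),
        "W2": h.T @ d_out,
        "b2": d_out.sum(axis=0),
    }

def sgd_step(params, X_batch, y_batch, lr):
    """One SGD update on a mini-batch."""
    grads = gradients(params, X_batch, y_batch)
    for name in params:
        params[name] -= lr * grads[name]

# Synthetic regression task (assumption): learn y = x1 * x2.
X = rng.uniform(-1.0, 1.0, size=(64, 2))
y = X[:, :1] * X[:, 1:]

params = init_params(n_in=2, n_hidden=8, n_out=1)
loss_before = mse(forward(params, X)[1], y)

for epoch in range(200):
    order = rng.permutation(len(X))          # reshuffle every epoch
    for start in range(0, len(X), 16):       # mini-batches of 16
        idx = order[start:start + 16]
        sgd_step(params, X[idx], y[idx], lr=0.1)

loss_after = mse(forward(params, X)[1], y)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

In practice a framework such as TensorFlow or PyTorch would compute the gradients automatically. Writing them out by hand here is only meant to show what an SGD step does.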

3. Convolutional Neural Networks (CNNs)

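CNNs are specialized neural networks used primarily for image processing tasks. They exploit the spatial structure of images: each filter is applied across the whole image with the same weights, which greatly reduces the number of parameters and computations compared with a fully connected network.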

Convolutional Layers: These layers apply convolution operations to input data, using filters (or kernels) that learn to detect features such as edges and textures.

Pooling Layers: Down-sampling layers that reduce the spatial dimensions of the data, helping to achieve invariance to small translations and reducing computational load.

Fully Connected Layers: After several convolutional and pooling layers, the final layers are typically fully connected, where each neuron is connected to every neuron in the previous layer.
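To make the convolution and pooling steps concrete, here is a small NumPy sketch. It assumes a single-channel image, no padding, a stride of 1, and a hand-written edge filter. In a real CNN the filter values are learned during training, and `conv2d` and `max_pool2d` here are illustrative helpers, not library functions.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows and columns that do not fit are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# 5x5 image: dark (0) on the left, bright (1) on the right.
image = np.array([
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
], dtype=float)

# Hand-written filter that responds where brightness increases left to right.
kernel = np.array([[-1.0, 1.0]])

feature_map = conv2d(image, kernel)   # shape (5, 4), each row is [0, 1, 0, 0]
pooled = max_pool2d(feature_map)      # shape (2, 2)
print(pooled)
# [[1. 0.]
#  [1. 0.]]
```

The filter has only two weights no matter how large the image is, and the pooled map still marks the edge in its left column after being reduced to a quarter of the size.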

Applications

Image Classification: Identifying the main objects in an image.

Object Detection: Locating and identifying multiple objects within an image.

Image Segmentation: Dividing an image into segments for more precise analysis.

4. Recurrent Neural Networks (RNNs)

RNNs are designed for sequential data, where the output depends on previous inputs. This architecture allows RNNs to maintain memory of previous inputs, making them suitable for tasks like time series prediction and natural language processing.

Hidden States: RNNs maintain hidden states that capture information about past inputs.

Backpropagation Through Time (BPTT): An extension of backpropagation that adjusts weights in RNNs by considering the time dimension.

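Limitations: Traditional RNNs struggle with long-term dependencies because gradients tend to vanish or explode as they are propagated back through many time steps. Specialized architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks address this with gating mechanisms.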
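The role of the hidden state can be illustrated with a minimal vanilla RNN forward pass in NumPy. The weight shapes, the tanh non-linearity, and the random weights below are assumptions made for the sketch. A trained network would have learned its weights through BPTT.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return the hidden state at every step."""
    h = np.zeros(W_hh.shape[0])          # initial hidden state
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
W_xh = rng.normal(0.0, 0.5, size=(2, 4))   # input -> hidden (illustrative values)
W_hh = rng.normal(0.0, 0.5, size=(4, 4))   # hidden -> hidden
b_h = np.zeros(4)

# Two sequences that end with the same input but start differently.
seq_a = np.array([[1.0, 0.0], [0.0, 1.0]])
seq_b = np.array([[0.0, 0.0], [0.0, 1.0]])

states_a = rnn_forward(seq_a, W_xh, W_hh, b_h)
states_b = rnn_forward(seq_b, W_xh, W_hh, b_h)

print(states_a[-1])
print(states_b[-1])
```

Although both sequences end with the same input, their final hidden states differ, because the state carries information about what came earlier.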

Applications

Speech Recognition: Converting spoken language into text.

Text Generation: Predicting the next word in a sentence based on previous words.

Time Series Forecasting: Predicting future values based on historical data.

5. Generative Adversarial Networks (GANs)

GANs are a type of deep learning model that consists of two neural networks — a generator and a discriminator — that compete against each other. This framework allows GANs to generate new data samples.

Generator: Creates new data samples from random noise.

Discriminator: Evaluates whether a given sample is real (from the training dataset) or fake (generated by the generator).

Training Process: The generator aims to produce realistic samples to fool the discriminator, while the discriminator learns to differentiate between real and fake samples. As training progresses, each network's improvement pushes the other to improve as well.
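The competing objectives can be written down as two loss functions. The sketch below assumes the discriminator outputs a probability that a sample is real, uses binary cross-entropy, and uses the common "non-saturating" generator loss. These are assumptions made for illustration, and the probability values are invented rather than produced by trained networks.

```python
import numpy as np

def bce(pred, target, eps=1e-12):
    """Binary cross-entropy between predicted probabilities and 0/1 targets."""
    pred = np.clip(pred, eps, 1.0 - eps)
    return -np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) -> 1 and D(fake) -> 0."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

def generator_loss(d_fake):
    """The generator wants the discriminator to output 1 for its samples."""
    return bce(d_fake, np.ones_like(d_fake))

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = np.array([0.9, 0.8])
d_fake_caught = np.array([0.1, 0.2])   # the discriminator spots the fakes
d_fake_fooled = np.array([0.9, 0.8])   # the discriminator is fooled

print(discriminator_loss(d_real, d_fake_caught), generator_loss(d_fake_caught))
print(discriminator_loss(d_real, d_fake_fooled), generator_loss(d_fake_fooled))
```

When the fakes are caught, the discriminator's loss is low and the generator's is high. When the discriminator is fooled, the situation reverses. A training loop would alternate gradient updates on the two losses.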

Applications

Image Generation: Creating realistic images from random noise.

Data Augmentation: Generating additional training data to improve model performance.

Style Transfer: Altering images to adopt the style of other images while maintaining their content.

6. Key Considerations in Neural Networks

While neural networks are powerful tools, there are several important considerations to keep in mind:

Overfitting and Underfitting

Overfitting: When a model learns the training data too well, including noise and outliers, leading to poor generalization on unseen data.

Underfitting: When a model is too simple to capture the underlying patterns in the data.
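A quick way to see both failure modes is to fit polynomials of different degrees to the same noisy data and compare the error on the training points with the error on held-out points. The sine-shaped target, the noise level, and the degrees used below are assumptions chosen for this illustration. Polynomial fitting stands in for a neural network only because it is small enough to run instantly.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_fn(x):
    """Underlying pattern the model should recover (assumed for this demo)."""
    return np.sin(2.0 * np.pi * x)

# A small noisy training set and a separate validation set.
x_train = np.linspace(0.0, 1.0, 12)
y_train = true_fn(x_train) + rng.normal(0.0, 0.2, size=x_train.shape)
x_val = np.linspace(0.02, 0.98, 50)
y_val = true_fn(x_val) + rng.normal(0.0, 0.2, size=x_val.shape)

def fit_and_score(degree):
    """Least-squares polynomial fit; returns (training MSE, validation MSE)."""
    coeffs = np.polyfit(x_train, y_train, degree)
    train_mse = np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)
    val_mse = np.mean((np.polyval(coeffs, x_val) - y_val) ** 2)
    return train_mse, val_mse

for degree in (1, 3, 9):
    train_mse, val_mse = fit_and_score(degree)
    print(f"degree {degree}: train MSE {train_mse:.4f}, validation MSE {val_mse:.4f}")
```

The straight line (degree 1) is too simple and does poorly on both sets, which is underfitting. Training error keeps falling as the degree grows, but a falling training error paired with a validation error that stops improving or rises is the typical signature of overfitting.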

Regularization Techniques

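Dropout: A technique that randomly sets a fraction of neuron outputs to zero at each training step, so the network cannot rely too heavily on any single neuron. This helps prevent overfitting.

L1 and L2 Regularization: Adding a penalty term based on the size of the weights (their absolute values for L1, their squares for L2) to the loss function to discourage overly complex models.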
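Both techniques are short enough to sketch directly. The snippet below assumes the widely used "inverted" form of dropout, which rescales the surviving activations so their expected value is unchanged, and a penalty strength named `lam`. The function names and example values are illustrative.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability drop_prob, rescale the rest."""
    if not training or drop_prob == 0.0:
        return activations                 # dropout is switched off at inference time
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

def l1_penalty(weights, lam):
    """L1 term: lam times the sum of absolute weight values."""
    return lam * np.sum(np.abs(weights))

def l2_penalty(weights, lam):
    """L2 term: lam times the sum of squared weight values."""
    return lam * np.sum(weights ** 2)

rng = np.random.default_rng(0)
hidden = np.ones(8)                        # illustrative hidden-layer activations
print(dropout(hidden, drop_prob=0.5, rng=rng))

weights = np.array([1.0, -2.0, 3.0])       # illustrative weights
data_loss = 0.25                           # illustrative unregularized loss
total_loss = data_loss + l2_penalty(weights, lam=0.1)
print(total_loss)
```

With `drop_prob=0.5`, each surviving activation is doubled, so the layer's expected output matches what it produces at inference time when nothing is dropped.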

Hyperparameter Tuning

Adjusting hyperparameters, such as learning rate, batch size, and number of layers, is crucial for optimizing model performance. Techniques like grid search and random search can be employed for this purpose.
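The difference between the two search strategies can be sketched as follows. Here `validation_loss` is a hypothetical stand-in for "train a model with these settings and measure its validation loss". It is a made-up formula so that the example runs instantly, and the search ranges are likewise assumptions.

```python
import itertools
import math
import random

def validation_loss(learning_rate, batch_size, num_layers):
    """Hypothetical stand-in for training a model and returning its validation loss."""
    return ((math.log10(learning_rate) + 2.0) ** 2
            + abs(batch_size - 32) / 32.0
            + (num_layers - 3) ** 2)

def grid_search(space):
    """Try every combination of the listed values and keep the best one."""
    names = list(space)
    best = None
    for values in itertools.product(*(space[name] for name in names)):
        config = dict(zip(names, values))
        loss = validation_loss(**config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best

def random_search(n_trials, seed=0):
    """Sample configurations at random from ranges instead of a fixed grid."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        config = {
            "learning_rate": 10 ** rng.uniform(-4, -1),   # sampled on a log scale
            "batch_size": rng.choice([16, 32, 64, 128]),
            "num_layers": rng.randint(1, 5),
        }
        loss = validation_loss(**config)
        if best is None or loss < best[0]:
            best = (loss, config)
    return best

space = {
    "learning_rate": [0.1, 0.01, 0.001],
    "batch_size": [16, 32, 64],
    "num_layers": [2, 3, 4],
}

print(grid_search(space))
print(random_search(n_trials=20))
```

Grid search evaluates all 27 combinations here, and the count grows multiplicatively with every added hyperparameter. Random search uses a fixed budget of trials and can try learning rates that fall between the grid values.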

Conclusion

Neural networks and deep learning have transformed fields ranging from computer vision to natural language processing. Understanding the main architectures, their applications, and the key concepts behind them makes it easier to choose the right model for a problem and to train it well. Whether you are a beginner or an experienced practitioner, exploring these topics will deepen your understanding and help you apply deep learning in your own projects.

For those interested in delving into the world of artificial intelligence, I highly recommend exploring Airoman’s AI (Artificial Intelligence) course. This comprehensive program provides a solid foundation in AI concepts, including machine learning, deep learning, natural language processing, and computer vision. The course emphasizes practical application, featuring hands-on projects that allow you to implement algorithms and build AI models using popular frameworks such as TensorFlow and PyTorch. You will learn how to analyze data, train models, and deploy AI solutions effectively. Additionally, the course covers ethical considerations and the latest advancements in the field, ensuring you are well-versed in both the technical and social implications of AI. Whether you are a beginner seeking to start your journey in AI or an experienced professional aiming to enhance your skill set, this course equips you with the knowledge and practical experience necessary to thrive in the rapidly evolving AI landscape.
