Unlocking Selection Sort: Techniques, Applications, and Efficiency Explained

Keerthi Ravilla Subramanyam

In our exploration of sorting algorithms, we've already covered bubble sort and its mechanism. Now, let’s focus on another fundamental and insightful algorithm: Selection Sort. As with all our discussions, we aim to build a solid foundation before moving on to more advanced techniques.

What Is Selection Sort?

In Selection Sort, the focus is on finding and selecting the smallest (or largest) element from the unsorted part of the list and then swapping it into its correct position in the sorted part. The algorithm repeatedly selects the smallest element from the remaining unsorted portion and moves it to the beginning, growing the sorted section one element at a time.

Key Idea: Select the smallest unsorted element and move it to the correct spot.

Each pass performs a single swap, and only after the smallest element has been found.
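To make the key idea concrete, here is a minimal sketch of one selection step in isolation. The helper name `select_min_into_place` is hypothetical, chosen for illustration; it assumes a mutable Python list and an index `i` marking where the unsorted part begins.

```python
def select_min_into_place(arr, i):
    """Perform one selection step (hypothetical helper for illustration).

    Finds the smallest element in the unsorted part arr[i:] and swaps it
    into position i, which extends the sorted prefix arr[:i] by one.
    """
    # Index of the smallest element among positions i .. len(arr) - 1
    min_index = min(range(i, len(arr)), key=lambda k: arr[k])
    # A single swap moves that element to the front of the unsorted part
    arr[i], arr[min_index] = arr[min_index], arr[i]
    return arr


data = [64, 25, 12, 22, 11]
select_min_into_place(data, 0)
print(data)  # [11, 25, 12, 22, 64]
```

Calling this helper for i = 0, 1, 2, ... in turn is exactly what the full algorithm does.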

How Selection Sort Works

Selection sort is a straightforward sorting algorithm that sorts an array by repeatedly finding the minimum element in the unsorted portion and moving it to the beginning of that portion. Here’s a step-by-step breakdown of how it operates:

Initial Setup:

- Treat the entire array as unsorted.

Finding the Minimum:

- For each pass, starting from the first index, identify the minimum element in the unsorted section of the array.

Swapping:

- Once the minimum element is found, swap it with the first unsorted element. This adds the minimum element to the sorted section of the array.

Incrementing the Index:

- Move to the next index and repeat the process. This involves finding the minimum in the remaining unsorted elements and swapping it with the current index.

Repeating the Process:

- Continue repeating the finding, swapping, and incrementing steps until the entire array is sorted. The process ends when only one unsorted element remains, since that element must already be the largest.
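One way to check the steps above is to assert the property they maintain: after each pass, the sorted section at the front is in order, and none of its elements is larger than anything still unsorted. The sketch below (the name `selection_sort_checked` is our own, for illustration) runs the steps and asserts that invariant after every pass.

```python
def selection_sort_checked(arr):
    """Selection sort that asserts its loop invariant after every pass."""
    n = len(arr)
    for i in range(n - 1):
        # Finding the Minimum: scan the unsorted section arr[i:]
        min_index = i
        for j in range(i + 1, n):
            if arr[j] < arr[min_index]:
                min_index = j
        # Swapping: move the minimum to the first unsorted position
        arr[i], arr[min_index] = arr[min_index], arr[i]
        # Invariant: the sorted section arr[:i + 1] is in order ...
        sorted_part, unsorted_part = arr[: i + 1], arr[i + 1 :]
        assert sorted_part == sorted(sorted_part)
        # ... and its largest element is no bigger than any unsorted one
        assert not unsorted_part or sorted_part[-1] <= min(unsorted_part)
        # Incrementing the Index: handled by the outer for loop
    return arr


print(selection_sort_checked([29, 10, 14, 37, 13]))  # [10, 13, 14, 29, 37]
```

The assertions are only there to make the invariant visible; a production version would drop them.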

Selection Sort Example and Visualization

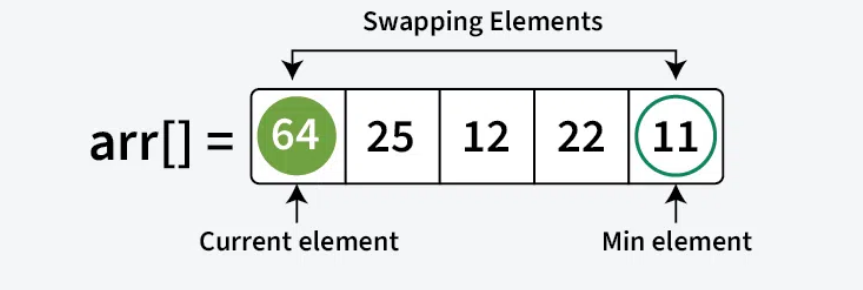

Let’s visualize selection sort with an example. Consider an unsorted array: [64, 25, 12, 22, 11]

First Pass:

Find the minimum element in [64, 25, 12, 22, 11], which is 11. Swap 11 with the first element (64), resulting in: [11, 25, 12, 22, 64]

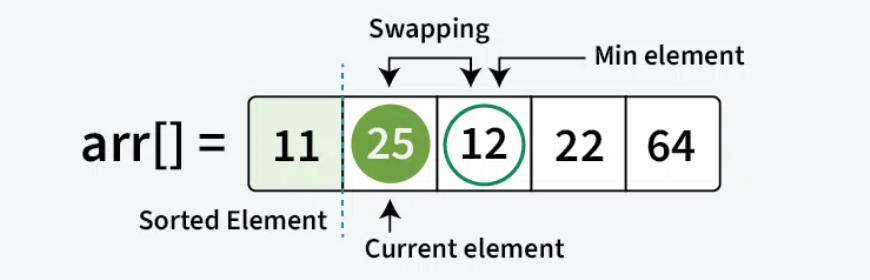

Second Pass:

Find the minimum in [25, 12, 22, 64], which is 12. Swap 12 with 25: [11, 12, 25, 22, 64]

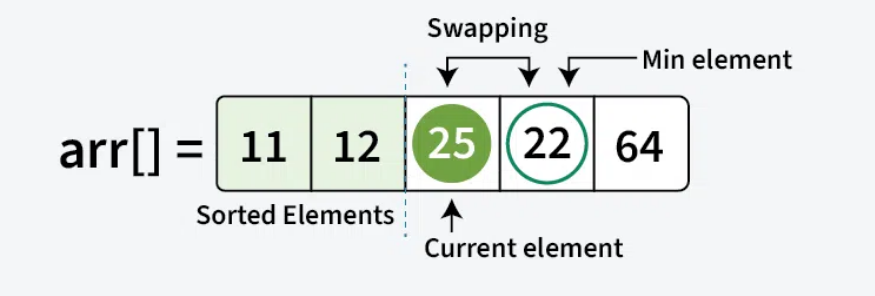

Third Pass:

The minimum in [25, 22, 64] is 22. Swap 22 with 25: [11, 12, 22, 25, 64]

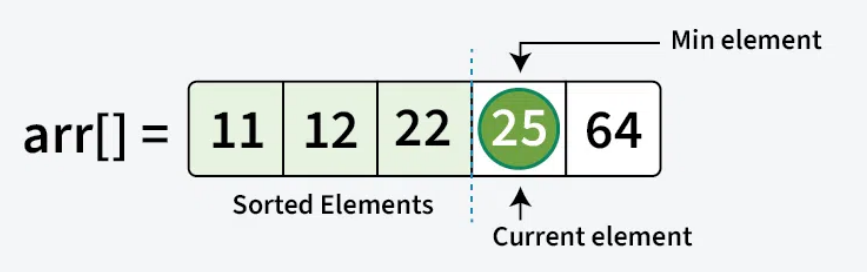

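Fourth Pass:

The minimum in the remaining part [25, 64] is 25, which is already in its correct position, so nothing moves. Only one unsorted element (64) is left, and it must be the largest, so the process stops.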

The final sorted array is:

[11, 12, 22, 25, 64]
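To reproduce the passes above programmatically, here is a small sketch that records the list after every pass. The function name `selection_sort_trace` is hypothetical; it sorts a copy so the input list is left unchanged.

```python
def selection_sort_trace(arr):
    """Return a snapshot of the list after each selection-sort pass."""
    arr = list(arr)  # work on a copy so the caller's list is untouched
    snapshots = []
    for i in range(len(arr) - 1):
        min_index = i
        for j in range(i + 1, len(arr)):
            if arr[j] < arr[min_index]:
                min_index = j
        arr[i], arr[min_index] = arr[min_index], arr[i]
        snapshots.append(list(arr))  # copy, so later passes don't alter it
    return snapshots


for number, state in enumerate(selection_sort_trace([64, 25, 12, 22, 11]), start=1):
    print(f"Pass {number}: {state}")
# Pass 1: [11, 25, 12, 22, 64]
# Pass 2: [11, 12, 25, 22, 64]
# Pass 3: [11, 12, 22, 25, 64]
# Pass 4: [11, 12, 22, 25, 64]
```

The fourth snapshot equals the third because 25 is already the minimum of [25, 64], matching the walkthrough.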

Pseudocode of Selection Sort

1. Set MIN to the first element's index (start at location 0).
2. Search through the unsorted part of the list to find the minimum element.
3. Swap the minimum element with the element at the MIN position.
4. Increment MIN to the next element's index.
5. Repeat steps 2-4 until the entire list is sorted.

Python Implementation

Here’s the Python code implementing the selection sort algorithm:

```python
def selection_sort(arr):
    n = len(arr)
    # Each pass fixes one position; after n - 1 passes the last element
    # is already in place, so the outer loop can stop one index early
    for i in range(n - 1):
        # Assume the first unsorted element is the smallest
        min_index = i
        # Check the rest of the unsorted array
        for j in range(i + 1, n):
            # Update the index of the smallest element if a smaller one is found
            if arr[j] < arr[min_index]:
                min_index = j
        # Swap the smallest element found with the first unsorted element
        arr[i], arr[min_index] = arr[min_index], arr[i]
    # The list is sorted in place; returning it is a convenience
    return arr


# Example usage
arr = [64, 25, 12, 22, 11]
sorted_arr = selection_sort(arr)
print("Sorted array:", sorted_arr)  # Sorted array: [11, 12, 22, 25, 64]
```
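Since selection sort can select either the smallest or the largest element, here is a minimal sketch of the descending variant. The name `selection_sort_descending` is our own; the only change from the implementation above is that the inner loop tracks the largest remaining element.

```python
def selection_sort_descending(arr):
    """Selection sort that selects the largest remaining element each pass."""
    n = len(arr)
    for i in range(n - 1):
        max_index = i
        for j in range(i + 1, n):
            # Track the largest element instead of the smallest
            if arr[j] > arr[max_index]:
                max_index = j
        arr[i], arr[max_index] = arr[max_index], arr[i]
    return arr


print(selection_sort_descending([64, 25, 12, 22, 11]))  # [64, 25, 22, 12, 11]
```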

Time Complexity

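The time complexity of selection sort is O(n²) because of its two nested loops: the outer loop visits each position once, and the inner loop scans the remaining unsorted elements to find the minimum. This gives (n - 1) + (n - 2) + ... + 1 = n(n - 1)/2 comparisons no matter how the input is ordered, so every case has the same complexity: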

Best Case: O(n²)

Average Case: O(n²)

Worst Case: O(n²)
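A quick way to see that the input order does not matter is to count comparisons directly. The sketch below uses a hypothetical helper, `count_comparisons`, which runs selection sort on a copy and tallies one comparison per inner-loop iteration; for any 5-element list it should report n(n - 1)/2 = 10.

```python
def count_comparisons(arr):
    """Run selection sort on a copy of arr and count element comparisons."""
    arr = list(arr)
    n = len(arr)
    comparisons = 0
    for i in range(n - 1):
        min_index = i
        for j in range(i + 1, n):
            comparisons += 1  # one comparison per inner-loop iteration
            if arr[j] < arr[min_index]:
                min_index = j
        arr[i], arr[min_index] = arr[min_index], arr[i]
    return comparisons


# Already sorted, reversed, and shuffled inputs all need 10 comparisons
for data in ([1, 2, 3, 4, 5], [5, 4, 3, 2, 1], [3, 1, 4, 5, 2]):
    print(data, count_comparisons(data))
```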

Advantages and Disadvantages

| Advantages | Disadvantages |
| --- | --- |
| Simplicity: Easy to understand and implement. | Inefficiency with Large Datasets: O(n²) time complexity makes it slow for large lists. |
| In-Place Sorting: Requires only a constant amount of additional memory (O(1)). | Not Adaptive: Always performs the same number of comparisons, regardless of the initial order. |
| Fewer Swaps: Performs at most n - 1 swaps, one per pass. | Limited Practical Use: Rarely used in practice for sorting large datasets. |
| Predictable Performance: Consistent O(n²) time complexity across all cases. | Stability Issues: The standard swap-based version is not stable, but it can be modified to be stable. |

Conclusion

Although selection sort has a quadratic time complexity, it remains relevant in specific scenarios where the cost of swapping elements outweighs that of comparing them. Because it performs at most n - 1 swaps, selection sort can be a practical choice in contexts where writes are expensive.
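As a rough illustration of the swap advantage, the sketch below counts swaps made by a basic bubble sort and by selection sort on the same reversed list. Both helper names are hypothetical, and the selection-sort counter skips swaps where the minimum is already in place.

```python
def bubble_sort_swaps(arr):
    """Count swaps made by a basic bubble sort on a copy of arr."""
    arr = list(arr)
    swaps = 0
    for end in range(len(arr) - 1, 0, -1):
        for j in range(end):
            if arr[j] > arr[j + 1]:
                arr[j], arr[j + 1] = arr[j + 1], arr[j]
                swaps += 1
    return swaps


def selection_sort_swaps(arr):
    """Count swaps made by selection sort, skipping self-swaps."""
    arr = list(arr)
    swaps = 0
    for i in range(len(arr) - 1):
        min_index = min(range(i, len(arr)), key=arr.__getitem__)
        if min_index != i:
            arr[i], arr[min_index] = arr[min_index], arr[i]
            swaps += 1
    return swaps


data = [5, 4, 3, 2, 1]
print("bubble:", bubble_sort_swaps(data))        # bubble: 10
print("selection:", selection_sort_swaps(data))  # selection: 2
```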

As we continue our DSA series: Sorting Algorithms Explained, we aim to enrich our understanding of sorting techniques. This journey will provide a solid foundation for tackling more complex algorithms. We invite you to join us as we explore additional sorting methods, their efficiencies, and their real-world applications in the articles to come.

Written by

Keerthi Ravilla Subramanyam

Hi, I'm Keerthi Ravilla Subramanyam, a passionate tech enthusiast with a Master's in Computer Science. I love diving deep into topics like Data Structures, Algorithms, and Machine Learning. With a background in cloud engineering and experience working with AWS and Python, I enjoy solving complex problems and sharing what I learn along the way. On this blog, you’ll find articles focused on breaking down DSA concepts, exploring AI, and practical coding tips for aspiring developers. I’m also on a journey to apply my skills in real-world projects like predictive maintenance and data analysis. Follow along for insightful discussions, tutorials, and code snippets to sharpen your technical skills.