LLM tracing in AI systems

Tom X Nguyen


When

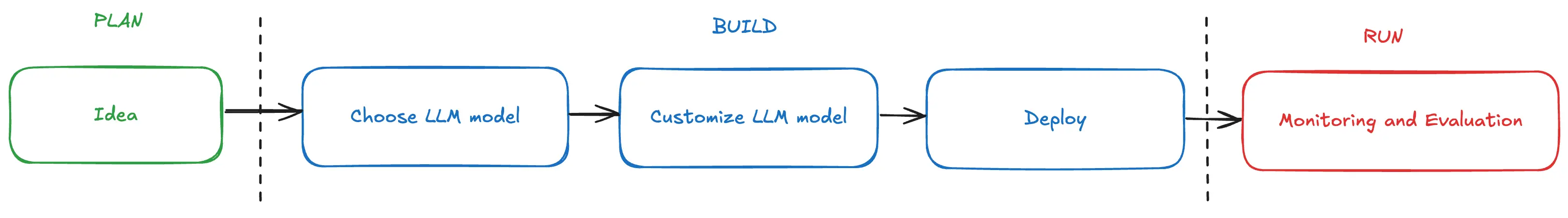

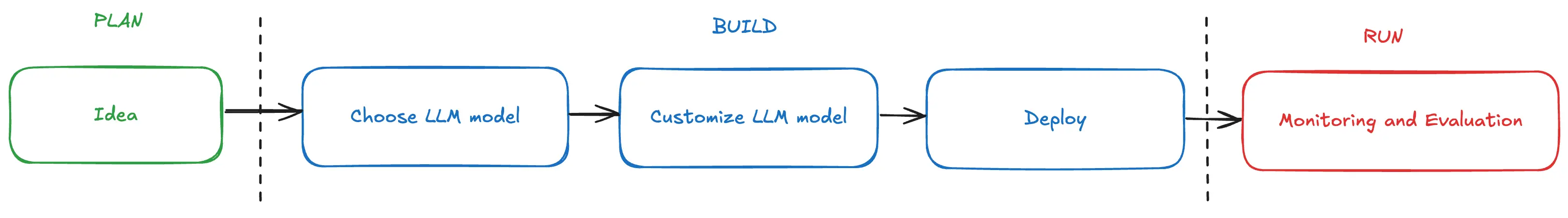

Building software with Large Language Models (LLMs) involves several steps, from planning to deployment. LLM tracing comes at the end of this process: once the application is running, tracing provides ongoing insight into its behavior and supports continuous improvement.

Why

Before diving into tracing, it's important to understand the fundamental difference between traditional software and LLM-powered applications:

- Traditional software: Deterministic, based on explicit instructions written by programmers.

- LLM-powered applications: Probabilistic, based on neural networks whose weights are determined through training.
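The contrast above can be illustrated with a toy sketch. Nothing here calls a real model: `sample_next_token` and `toy_logits` are hypothetical stand-ins for one decoding step, assuming the model samples from a softmax over scores rather than always taking the top one.

```python
import math
import random
from typing import Dict


def deterministic_add(a: int, b: int) -> int:
    """Traditional software: the same input gives the same output every time."""
    return a + b


def sample_next_token(logits: Dict[str, float], temperature: float,
                      rng: random.Random) -> str:
    """Toy stand-in for one LLM decoding step: sample from softmax(logits / T)."""
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    max_s = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - max_s) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the next token after some prompt.
toy_logits = {"Paris": 2.0, "Lyon": 1.0, "Berlin": 0.5}

rng = random.Random()  # unseeded, so repeated runs can differ
samples = [sample_next_token(toy_logits, temperature=1.0, rng=rng) for _ in range(20)]

print(deterministic_add(2, 3))  # identical on every run
print(samples)                  # the mix of tokens can change between runs
```

Running the script twice will usually print the same sum but a different list of sampled tokens, which is the behavior tracing has to account for.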

Why LLM Tracing is Necessary:

- Unpredictable Outputs: LLMs can produce different outputs for the same input due to their probabilistic nature.

- Black Box Nature: The decision-making process of an LLM is opaque.

- Complex Interactions: LLMs often interact with multiple components (e.g., retrieval systems, filters, classifiers, external APIs) in ways that aren't immediately obvious.

- Performance Variability: Performance can vary significantly based on input complexity, model size, and hardware.

- Evolving Behavior: An LLM's behavior can shift after fine-tuning or when prompts change.

- Error Diagnosis: "Errors" in LLMs might be subtle, like hallucinations or biased responses.

- Continuous Improvement: LLMs can be improved through better prompts, fine-tuning, or model updates.
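To make the points above concrete, here is a minimal sketch of what a trace can capture, using only the Python standard library. It is not the API of any particular tracing tool: `Span`, `traced`, `SPANS`, and the stubbed `retrieve` and `generate` steps are hypothetical names chosen for illustration, and a real application would call an actual retriever and model in their place.

```python
import functools
import time
import uuid
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Span:
    """One recorded step of a request: what went in, what came out, how long it took."""
    name: str
    trace_id: str
    inputs: Dict[str, Any]
    output: Any = None
    error: Optional[str] = None
    start: float = 0.0
    end: float = 0.0

    @property
    def latency_s(self) -> float:
        return self.end - self.start


SPANS: List[Span] = []  # in-memory sink; a real setup would export spans somewhere


def traced(name: str) -> Callable:
    """Decorator that records a Span for every call of the wrapped function."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, trace_id: str, **kwargs: Any) -> Any:
            span = Span(name=name, trace_id=trace_id,
                        inputs={"args": args, "kwargs": kwargs},
                        start=time.perf_counter())
            try:
                span.output = fn(*args, **kwargs)
                return span.output
            except Exception as exc:
                span.error = repr(exc)  # keep the failure visible in the trace
                raise
            finally:
                span.end = time.perf_counter()
                SPANS.append(span)
        return wrapper
    return decorator


@traced("retrieve")
def retrieve(query: str) -> List[str]:
    return [f"doc about {query}"]  # stub for a retrieval system


@traced("generate")
def generate(query: str, context: List[str]) -> str:
    return f"Answer to {query!r} using {len(context)} document(s)"  # stub for an LLM call


def answer(query: str) -> str:
    trace_id = uuid.uuid4().hex  # ties all spans of one request together
    docs = retrieve(query, trace_id=trace_id)
    return generate(query, docs, trace_id=trace_id)


result = answer("LLM tracing")
for span in SPANS:
    print(span.trace_id[:8], span.name, f"{span.latency_s * 1000:.3f} ms", span.error)
```

Because every span stores its inputs, output, error, and timing under a shared trace ID, a surprising answer can be followed back through the retrieval and generation steps that produced it.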

Key Metrics

| Basic | Evaluating |
| --- | --- |
| Latency | Factual Accuracy |
| Throughput | Relevance |
| Error Rate | Bias Detection and Fairness |
| Resource Utilization | Hallucination Rate |
| Execution Time | Coherence |
| ... | ... |

While basic metrics like latency and throughput measure operational performance, evaluative metrics dig deeper into the actual output and behavior of the LLM.
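As a rough sketch of how a few of these metrics could be derived from trace records, the example below computes latency, throughput, error rate, and a hallucination rate. The record layout is an assumption made for illustration: `latency_s`, `error`, and `hallucinated` are hypothetical field names, and the `hallucinated` label is assumed to come from a separate evaluation step (a human reviewer or an automated evaluator), which is not shown.

```python
import math
from statistics import mean
from typing import Any, Dict, List


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records: List[Dict[str, Any]], window_s: float) -> Dict[str, float]:
    """Compute basic and evaluative metrics over records collected in a time window."""
    latencies = [r["latency_s"] for r in records]
    failed = [r for r in records if r["error"] is not None]
    # Only responses that were actually evaluated count toward the hallucination rate.
    labeled = [r for r in records if r["hallucinated"] is not None]
    return {
        "mean_latency_s": mean(latencies),
        "p95_latency_s": percentile(latencies, 95),
        "throughput_rps": len(records) / window_s,
        "error_rate": len(failed) / len(records),
        "hallucination_rate": sum(r["hallucinated"] for r in labeled) / len(labeled),
    }


# Made-up records for illustration: four requests observed over a 2-second window.
records = [
    {"latency_s": 0.5, "error": None, "hallucinated": False},
    {"latency_s": 1.0, "error": None, "hallucinated": True},
    {"latency_s": 1.5, "error": None, "hallucinated": False},
    {"latency_s": 2.0, "error": "TimeoutError()", "hallucinated": None},
]

metrics = summarize(records, window_s=2.0)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

The basic metrics need only timing and error fields, while the evaluative one depends on a judgment about the output itself, which is why it requires an extra labeling step.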

Tools

Some popular tools that support various aspects of LLM tracing:

References

- https://colab.research.google.com/github/Arize-ai/phoenix/blob/main/tutorials/llm_ops_overview.ipynb

- https://arize.com/blog-course/llm-evaluation-the-definitive-guide/

- https://docs.smith.langchain.com/how_to_guides/tracing

- https://karpathy.medium.com/software-2-0-a64152b37c35

- Demo: https://colab.research.google.com/gist/tienan92it/490dd65748518a9abc73cdf4bd84583d/welcome-to-colab.ipynb

