print(result) "Part 3 of NotADev"

Isa

Enriching Data with Technical Indicators

With the stock data successfully fetched and initial error handling in place, it was time to delve deeper into the data to make it more informative for our predictive models. The idea was to enrich the data with technical indicators—tools that traders use to analyse past market data to predict future price movements.

Calculating Technical Indicators

The AI assistant suggested utilising the ta library, a comprehensive technical analysis library in Python. This library provides a wide range of technical indicators ready to be used with minimal setup.

We aimed to calculate several key indicators:

Simple Moving Average (SMA)

Exponential Moving Average (EMA)

Relative Strength Index (RSI)

Moving Average Convergence Divergence (MACD)

Bollinger Bands

Average True Range (ATR)

On-Balance Volume (OBV)

Here's how we implemented the function to add these indicators:

import ta

def add_technical_indicators(data):
    # Simple Moving Average
    data['SMA'] = ta.trend.SMAIndicator(data['Close'], window=14).sma_indicator()

    # Exponential Moving Average
    data['EMA'] = ta.trend.EMAIndicator(data['Close'], window=14).ema_indicator()

    # Relative Strength Index
    data['RSI'] = ta.momentum.RSIIndicator(data['Close'], window=14).rsi()

    # MACD
    macd = ta.trend.MACD(data['Close'])
    data['MACD'] = macd.macd()
    data['MACD_Signal'] = macd.macd_signal()

    # Bollinger Bands
    bb = ta.volatility.BollingerBands(data['Close'], window=20, window_dev=2)
    data['BB_High'] = bb.bollinger_hband()
    data['BB_Low'] = bb.bollinger_lband()

    # Average True Range
    data['ATR'] = ta.volatility.AverageTrueRange(data['High'], data['Low'], data['Close'], window=14).average_true_range()

    # On-Balance Volume
    data['OBV'] = ta.volume.OnBalanceVolumeIndicator(data['Close'], data['Volume']).on_balance_volume()

    # Drop initial rows with NaN values
    data.dropna(inplace=True)

    return data
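For intuition about what the first two indicators measure, here is a minimal sketch in plain pandas rather than ta. The toy prices and the window of 3 are made up for illustration, and the ta classes may treat the warm-up rows differently, so read this as a conceptual picture and not a reimplementation of the library.

```python
import pandas as pd

# Toy closing prices (invented for illustration, not real market data)
close = pd.Series([10.0, 11.0, 12.0, 13.0, 14.0, 15.0])

window = 3  # deliberately small so the effect is easy to follow

# Simple moving average: the plain mean of the last `window` closes
sma = close.rolling(window=window).mean()

# Exponential moving average: recent closes carry more weight than older ones
ema = close.ewm(span=window, adjust=False).mean()

print(pd.DataFrame({"Close": close, "SMA": sma, "EMA": ema}))
```

The SMA has no value until a full window of prices is available, while the EMA reacts faster to the latest close because older prices are discounted.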

Adding the indicators introduced NaN values, particularly at the beginning of the dataset. This was expected, since some indicators need a certain number of periods before they can produce a value. We dropped the rows containing NaN values to clean the dataset, using data.dropna(inplace=True).

This adjustment ensured that the dataset was free of missing values and ready for further analysis.
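A small sketch of why those leading rows go missing, and how many are lost. It uses rolling means as stand-ins for the indicators; the 30-row toy frame and the column names `SMA_14` and `SMA_20` are assumptions for illustration only.

```python
import pandas as pd

# 30 rows of made-up closing prices
prices = pd.DataFrame({"Close": [float(p) for p in range(100, 130)]})

# Two rolling indicators with different look-back windows
prices["SMA_14"] = prices["Close"].rolling(window=14).mean()
prices["SMA_20"] = prices["Close"].rolling(window=20).mean()

# Count the warm-up NaN values in each column
nan_per_column = prices.isna().sum()
print(nan_per_column)

# dropna() without inplace=True returns a new frame and leaves `prices` intact
cleaned = prices.dropna()
rows_lost = len(prices) - len(cleaned)
print(f"Rows lost to warm-up: {rows_lost}")
```

In this sketch the longest window decides how many rows are dropped, which is worth keeping in mind when the history is short.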

Additional Consideration: To make the dataset even richer, the AI suggested adding more technical indicators like Momentum, Chaikin Money Flow (CMF), and Money Flow Index (MFI).

# Momentum
data['Momentum'] = data['Close'].diff(4)

# Chaikin Money Flow
data['CMF'] = ta.volume.ChaikinMoneyFlowIndicator(
    high=data['High'], low=data['Low'], close=data['Close'], volume=data['Volume'], window=20
).chaikin_money_flow()

# Money Flow Index
data['MFI'] = ta.volume.MFIIndicator(
    high=data['High'], low=data['Low'], close=data['Close'], volume=data['Volume'], window=14
).money_flow_index()
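The Momentum line above is the only one of the three that does not go through ta, so here is a hedged sketch of what `diff(4)` computes. The prices are invented for illustration.

```python
import pandas as pd

# Toy closing prices (invented for illustration)
close = pd.Series([100.0, 102.0, 101.0, 105.0, 107.0, 110.0])

# diff(4) subtracts the close from four rows earlier
momentum = close.diff(4)

# The same calculation written out explicitly
manual = close - close.shift(4)

print(pd.DataFrame({"Close": close, "Momentum": momentum}))
```

The first four rows are NaN because there is no close four rows back, so this column also contributes warm-up rows that need to be dropped.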

Feature Engineering with Lag Features

To capture temporal dependencies and provide the model with more context, we decided to create lag features. Lag features are previous time steps' values of a time series, which can help the model understand how past values influence future ones.

Temporal dependencies refer to the relationships and patterns between data points in a time series, where the value at a given time is influenced by its previous values. These dependencies are crucial in time series analysis and forecasting, as they help in understanding how past events affect future outcomes.

def create_lag_features(data, numeric_cols, lags):
    for col in numeric_cols:
        for lag in lags:
            data[f'{col}_lag{lag}'] = data[col].shift(lag)

    data.dropna(inplace=True)

    return data

We applied this function to our data, specifying the numeric columns and the lags we wanted to create (e.g., lags of 1 and 2 periods).
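As a rough usage sketch, here is the function applied to a five-row toy frame. The function body is repeated so the snippet runs on its own; the `toy` data and the choice of columns are assumptions for illustration, not the real dataset.

```python
import pandas as pd

def create_lag_features(data, numeric_cols, lags):
    # Same logic as the function above, repeated so this snippet is self-contained
    for col in numeric_cols:
        for lag in lags:
            data[f'{col}_lag{lag}'] = data[col].shift(lag)
    data.dropna(inplace=True)
    return data

# Toy data (invented for illustration)
toy = pd.DataFrame({
    "Close": [10.0, 11.0, 12.0, 13.0, 14.0],
    "Volume": [100.0, 110.0, 120.0, 130.0, 140.0],
})

# Pass a copy, because the function modifies the frame it is given
lagged = create_lag_features(toy.copy(), numeric_cols=["Close", "Volume"], lags=[1, 2])
print(lagged)
```

Each row now carries the previous one and two values of every listed column, and the first two rows are dropped because their lagged values do not exist.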

Indicators and lag features derived from the same price series tend to move together, which introduces multicollinearity. Multicollinearity refers to a statistical phenomenon in which two or more predictor variables in a multiple regression model are highly correlated, meaning that one can be linearly predicted from the others with a substantial degree of accuracy. This inflates the variance of the coefficient estimates, making them unreliable and unstable, and makes it difficult to determine the individual effect of each predictor variable on the dependent variable. To reduce it, we dropped features whose pairwise correlation exceeded a threshold:

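import numpy as np

def remove_highly_correlated_features(data, threshold=0.9):
    # Absolute pairwise correlations between all columns
    corr_matrix = data.corr().abs()

    # Keep only the upper triangle so each pair is considered once
    upper_tri = corr_matrix.where(np.triu(np.ones(corr_matrix.shape), k=1).astype(bool))

    # Drop any column that is highly correlated with a column before it
    to_drop = [column for column in upper_tri.columns if any(upper_tri[column] > threshold)]
    data.drop(columns=to_drop, inplace=True)

    return data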

Implementing this function helped in reducing the feature set to a more manageable size while retaining the most informative variables.
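A minimal sketch of the filter in action on a toy frame. The function is repeated so the snippet runs on its own, and the three columns (`Close`, `Close_lag1`, `Noise`) are invented so that one pair is perfectly correlated and the third column is unrelated.

```python
import numpy as np
import pandas as pd

def remove_highly_correlated_features(data, threshold=0.9):
    # Same logic as the function above, repeated so this snippet is self-contained
    corr_matrix = data.corr().abs()
    upper_tri = corr_matrix.where(np.triu(np.ones(corr_matrix.shape), k=1).astype(bool))
    to_drop = [column for column in upper_tri.columns if any(upper_tri[column] > threshold)]
    data.drop(columns=to_drop, inplace=True)
    return data

# Toy data (invented): Close_lag1 tracks Close exactly, Noise does not
toy = pd.DataFrame({
    "Close":      [2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0],
    "Close_lag1": [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0],
    "Noise":      [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0],
})

# Pass a copy, because the function modifies the frame it is given
reduced = remove_highly_correlated_features(toy.copy(), threshold=0.9)
print(list(reduced.columns))
```

Because only the upper triangle is inspected, the earlier column of a correlated pair is kept and the later one is dropped. Column order therefore matters, and it may be worth making sure the target column cannot be removed this way.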

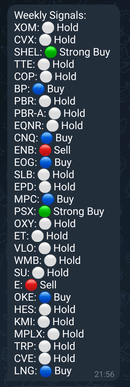

So far so good! I started pushing the output to my Telegram. Bear in mind that the entirety of the code is regenerated each time, so at each step the model works and produces results. Changes come from me noticing issues with the output or errors that come up in the terminal.

Output of signals to Telegram

That’s it for this week, see you on the next one.

pxng0lin.

Written by

Isa

Former analyst with expertise in data, forecasting, and resource modeling, transitioned to cybersecurity over the past 4 years (as of May 2024). Passionate about security and problem-solving, utilising skills in data and analysis, for cybersecurity challenges. Experience: Extensive background in data analytics, forecasting, and predictive modelling. Experience with platforms like Bugcrowd, Intigriti, and HackerOne. Transitioned to Web3 cybersecurity with Immunefi, exploring smart contract vulnerabilities. Spoken languages: English (Native, British), Arabic (Fus-ha)