Step-by-Step Guide to Seamless Live Streaming Integration with VideoSDK in React

Riya Chudasama


Live streaming has grown steadily in popularity over the past decade and has become a powerful tool for engaging audiences in real time. Whether it’s for webinars, gaming, or online events, integrating live streaming into your application can significantly enhance the user experience.

VideoSDK aims to simplify integrating video calls, live streaming, real-time transcription, and more with minimal effort. In this blog post, we will walk you through integrating VideoSDK into a React application for live streaming.

Why choose VideoSDK

When considering live streaming solutions, many platforms offer standard streaming capabilities where the audience can only watch and chat, lacking real-time interaction with the speaker. In contrast, VideoSDK provides a robust interactive live streaming experience that enhances audience engagement through various interactive features.

Advantages of VideoSDK's Interactive Live Streaming:

Real-Time Interaction:

VideoSDK enables viewers to engage actively with the content and with each other during live streams, through features such as chat, live polling, and Q&A sessions. This creates a dynamic, interactive environment.

Flexible Layouts:

Developers can customize the interactive livestream layout to suit their needs, choosing from prebuilt layouts or creating their own templates.

Adaptive Streaming:

VideoSDK supports adaptive bitrate streaming, which adjusts video quality to each viewer’s network conditions. This improves the overall user experience by reducing buffering while maintaining high-quality playback where bandwidth allows.

Seamless Integration:

With simple API calls such as `startHls()` and `stopHls()`, adding interactive live streaming to your React application is straightforward.

Scalability:

VideoSDK is designed to handle large audiences, making it suitable for events with thousands of participants. The platform’s infrastructure is built to deliver reliable performance even during peak usage.
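Adaptive bitrate streaming publishes the same stream at several bitrates (renditions) and lets the player switch between them. At its core, the player picks the highest rendition whose bitrate fits within a safety fraction of the measured bandwidth. The sketch below is a generic illustration of that step, not VideoSDK’s or hls.js’s actual algorithm. The rendition ladder and the 0.8 safety factor are hypothetical values.

```javascript
// Hypothetical rendition ladder; bitrates are in bits per second.
const renditions = [
  { name: "240p", bitrate: 400_000 },
  { name: "480p", bitrate: 1_000_000 },
  { name: "720p", bitrate: 2_500_000 },
  { name: "1080p", bitrate: 5_000_000 },
];

// Pick the highest rendition whose bitrate fits within a safety fraction
// of the estimated bandwidth; fall back to the lowest one if none fits.
function pickRendition(estimatedBps, ladder, safetyFactor = 0.8) {
  const budget = estimatedBps * safetyFactor;
  // Sort ascending so the last rendition that fits is the best choice.
  const sorted = [...ladder].sort((a, b) => a.bitrate - b.bitrate);
  let choice = sorted[0];
  for (const r of sorted) {
    if (r.bitrate <= budget) choice = r;
  }
  return choice;
}

console.log(pickRendition(3_500_000, renditions).name); // 720p
console.log(pickRendition(200_000, renditions).name); // 240p
```

The safety factor leaves headroom so that a small dip in bandwidth does not immediately cause rebuffering.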

Defining User Roles

For our application, there will be two types of users:

Speaker: creates a meeting, or enters an existing meeting ID and clicks the Join as Host button. Speakers have all the media controls, i.e. they can toggle their webcam and mic, and they can also start the HLS stream.

Viewer: enters a meeting ID and clicks the Join as Viewer button. Viewers have no media controls; they simply watch the VideoSDK HLS stream started by the speaker.
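The two roles map directly onto the participant `mode` that the app passes to `MeetingProvider`: `CONFERENCE` for hosts and `VIEWER` for viewers. A small lookup table, such as the hypothetical `capabilitiesFor` helper below, keeps these role rules in one place. The capability names are illustrative and are not part of the VideoSDK API.

```javascript
// Illustrative role table. The keys mirror the mode strings this guide
// passes to MeetingProvider; the capability names are hypothetical.
const CAPABILITIES = {
  CONFERENCE: { toggleMic: true, toggleWebcam: true, startHls: true, watchesHls: false },
  VIEWER: { toggleMic: false, toggleWebcam: false, startHls: false, watchesHls: true },
};

// Look up what a participant in the given mode is allowed to do.
function capabilitiesFor(mode) {
  if (!Object.prototype.hasOwnProperty.call(CAPABILITIES, mode)) {
    throw new Error(`Unknown participant mode: ${mode}`);
  }
  return CAPABILITIES[mode];
}

console.log(capabilitiesFor("VIEWER").startHls); // false
```

In a component, a check such as `capabilitiesFor(localParticipant.mode).startHls` could then decide whether to render the Start HLS button.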

Guide to building your own live streaming application

Prerequisites:

Before you get started, ensure you have the following prerequisites:

A basic understanding of React and Hooks (useState, useRef, useEffect)

Node.js and npm installed

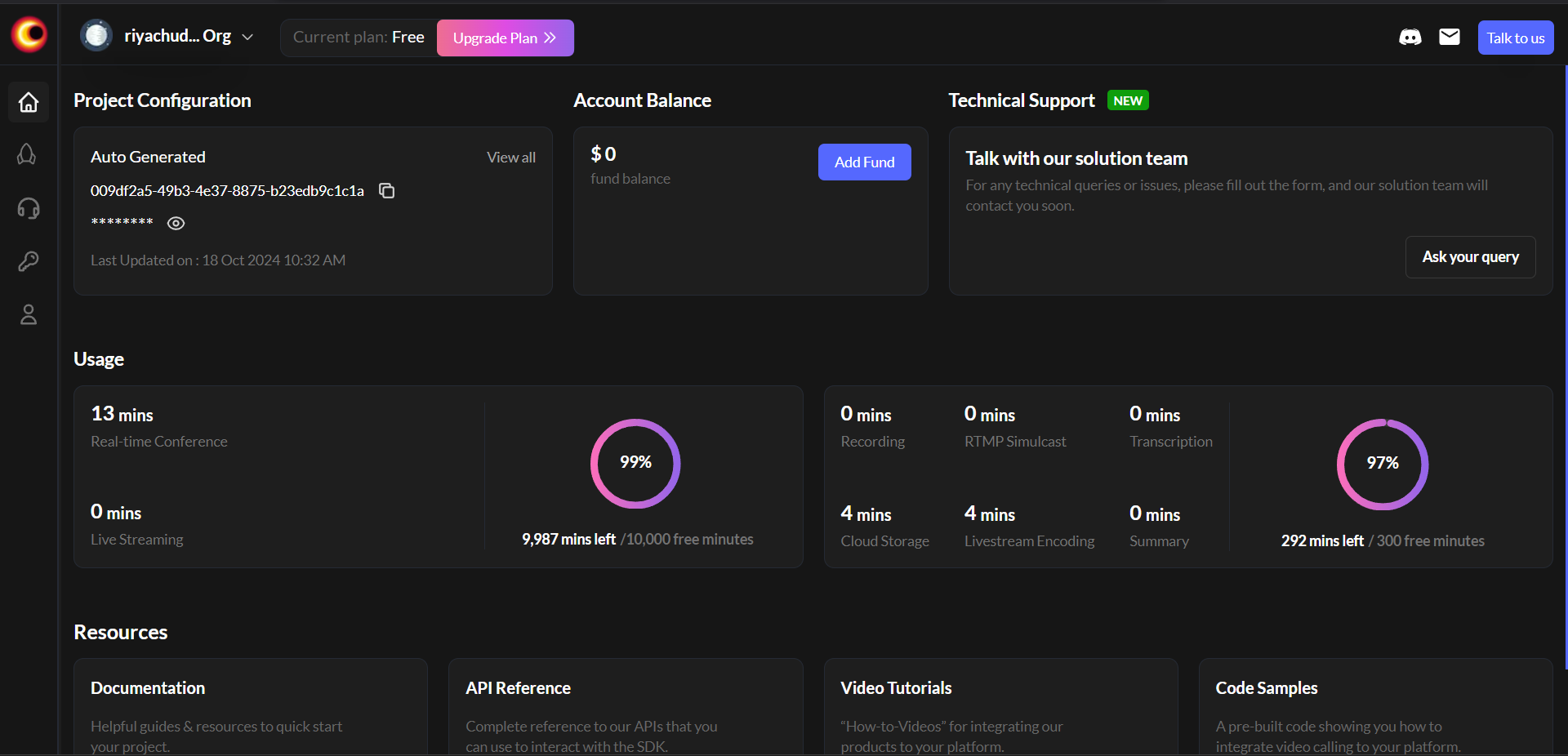

An active VideoSDK account (you can sign up for free).

Step 1: Getting Started

Create a new React app

```bash
$ npx create-react-app videosdk-rtc-react-app
$ cd videosdk-rtc-react-app
```

Install VideoSDK and Dependencies

```bash
$ npm install @videosdk.live/react-sdk react-player hls.js
```

Structure of the Project

```
root/
├── node_modules/
├── public/
├── src/
│   ├── API.js
│   ├── App.js
│   ├── index.js
│   └── components/
│       ├── Controls.js
│       ├── JoinScreen.js
│       ├── Container.js
│       ├── ParticipantView.js
│       ├── SpeakerView.js
│       └── ViewerView.js
└── package.json
```

Step 2: Set Up Your API Token

Creating a new meeting requires an authenticated API call. You can either use a temporary auth token from the VideoSDK dashboard or a token generated by your own server. We recommend the latter, since a server can issue short-lived tokens instead of shipping a long-lived one in your client code.
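If you generate the token on your own server, a minimal Node.js sketch looks like this. It signs an HS256 JWT with your API secret using only the built-in `crypto` module.

- The payload fields (`apikey` and `permissions: ["allow_join"]`) follow VideoSDK’s authentication docs as we understand them. Treat them as an assumption and check the current docs.
- `generateVideoSdkToken`, `YOUR_API_KEY` and `YOUR_SECRET` are hypothetical placeholders.
- The secret must never be sent to the browser.

```javascript
const nodeCrypto = require("crypto");

// Encode a string or Buffer as base64url (no padding), as JWTs require.
function base64url(input) {
  return Buffer.from(input).toString("base64url");
}

// Sign a minimal HS256 JWT. The payload shape is an assumption based on
// VideoSDK's auth docs; verify the field names against the current docs.
function generateVideoSdkToken(apiKey, secret, ttlSeconds = 7200) {
  const now = Math.floor(Date.now() / 1000);
  const header = { alg: "HS256", typ: "JWT" };
  const payload = {
    apikey: apiKey,
    permissions: ["allow_join"],
    iat: now,
    exp: now + ttlSeconds, // short-lived token
  };
  const signingInput =
    `${base64url(JSON.stringify(header))}.${base64url(JSON.stringify(payload))}`;
  const signature = nodeCrypto
    .createHmac("sha256", secret)
    .update(signingInput)
    .digest(); // raw HMAC bytes as a Buffer
  return `${signingInput}.${base64url(signature)}`;
}

// Placeholder credentials, for illustration only.
const token = generateVideoSdkToken("YOUR_API_KEY", "YOUR_SECRET");
console.log(token.split(".").length); // 3
```

In practice you would expose this behind an authenticated endpoint and have the React app fetch the token instead of hard-coding `authToken`.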

Create a new file named API.js in the src directory (the name must match the ./API import in App.js) and add the following code:

```javascript
// Auth token we will use to create a meeting and connect to it
export const authToken = "<Generated-from-dashboard>";

// API call to create a meeting
export const createMeeting = async ({ token }) => {
  const res = await fetch(`https://api.videosdk.live/v2/rooms`, {
    method: "POST",
    headers: {
      // Use the token passed in by the caller
      authorization: `${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({}),
  });
  // Destructure the roomId from the response
  const { roomId } = await res.json();
  return roomId;
};
```
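The function above assumes the request always succeeds. A more defensive variant checks `res.ok` before reading `roomId`.

- `createMeetingSafe` and its `fetchImpl` parameter are hypothetical additions. `fetchImpl` defaults to the global `fetch` (available in browsers and Node 18+) and makes the function easy to exercise with a stub.
- The endpoint, the `authorization` header and the `roomId` field are the ones this guide already uses.

```javascript
// Create a room and return its ID, failing loudly on HTTP errors.
// `fetchImpl` is a hypothetical injection point; it defaults to global fetch.
async function createMeetingSafe({ token, fetchImpl = globalThis.fetch }) {
  const res = await fetchImpl("https://api.videosdk.live/v2/rooms", {
    method: "POST",
    headers: {
      authorization: token,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({}),
  });
  if (!res.ok) {
    // Surface the HTTP status so the UI can show a useful error.
    throw new Error(`Room creation failed with HTTP ${res.status}`);
  }
  const { roomId } = await res.json();
  if (!roomId) throw new Error("Response did not contain a roomId");
  return roomId;
}

// Usage with a stub in place of the real network call (dummy room ID):
const stubFetch = async () => ({
  ok: true,
  status: 200,
  json: async () => ({ roomId: "demo-room-id" }),
});
createMeetingSafe({ token: "dummy-token", fetchImpl: stubFetch }).then(console.log);
```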

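Step 3: Main App Component

The snippets in Steps 3 to 7 can all live in src/App.js, which is why App.js imports everything the later components use. If you split them into the components/ files shown above instead, export each component from its own file and import it where it is used.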

```javascript
import "./App.css";
import React, { useEffect, useMemo, useRef, useState } from "react";
import {
  MeetingProvider,
  MeetingConsumer,
  useMeeting,
  useParticipant,
  Constants,
} from "@videosdk.live/react-sdk";
import ReactPlayer from "react-player";
// hls.js is used by ViewerView (Step 7) to play the HLS stream
import Hls from "hls.js";
import { authToken, createMeeting } from "./API";

function App() {
  const [meetingId, setMeetingId] = useState(null);

  // Mode of the participant: CONFERENCE (host) or VIEWER
  const [mode, setMode] = useState("CONFERENCE");

  // Create a new meeting when no ID is given, otherwise use the given ID
  const getMeetingAndToken = async (id) => {
    const meetingId =
      id == null ? await createMeeting({ token: authToken }) : id;
    setMeetingId(meetingId);
  };

  const onMeetingLeave = () => {
    setMeetingId(null);
  };

  return authToken && meetingId ? (
    <MeetingProvider
      config={{
        meetingId,
        micEnabled: true,
        webcamEnabled: true,
        name: "C.V. Raman",
        // Mode of the participant: CONFERENCE or VIEWER
        mode: mode,
      }}
      token={authToken}
    >
      <MeetingConsumer>
        {() => (
          <Container meetingId={meetingId} onMeetingLeave={onMeetingLeave} />
        )}
      </MeetingConsumer>
    </MeetingProvider>
  ) : (
    <JoinScreen getMeetingAndToken={getMeetingAndToken} setMode={setMode} />
  );
}

export default App;
```

Step 4: Join Screen Component

JoinScreen is the first screen that appears in the UI when you start the application. From here you can create a new meeting or join an existing one by entering its meeting ID.

```javascript
function JoinScreen({ getMeetingAndToken, setMode }) {
  const [meetingId, setMeetingId] = useState(null);

  // Set the joining participant's mode, then create or join the meeting.
  // Passing id = null always creates a new meeting, even if an ID was typed.
  const onClick = async (mode, id = meetingId) => {
    setMode(mode);
    await getMeetingAndToken(id);
  };

  return (
    <div className="container">
      <button onClick={() => onClick("CONFERENCE", null)}>Create Meeting</button>
      <br />
      <br />
      {" or "}
      <br />
      <br />
      <input
        type="text"
        placeholder="Enter Meeting Id"
        onChange={(e) => {
          setMeetingId(e.target.value);
        }}
      />
      <br />
      <br />
      <button onClick={() => onClick("CONFERENCE")}>Join as Host</button>
      {" | "}
      <button onClick={() => onClick("VIEWER")}>Join as Viewer</button>
    </div>
  );
}
```
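JoinScreen’s three buttons boil down to one decision: which mode to use, and whether to create a room or reuse a typed ID. The hypothetical `resolveJoinRequest` helper below expresses that decision as a pure function that can be tested on its own.

- The `action` names are illustrative.
- The `CONFERENCE`/`VIEWER` modes and the convention that a `null` ID means "create a new meeting" match `getMeetingAndToken` in this guide.
- Trimming whitespace and rejecting an empty ID are small hypothetical additions.

```javascript
// Map a JoinScreen action to the mode and meeting ID to use.
// A null meetingId tells getMeetingAndToken to create a new room.
function resolveJoinRequest(action, typedId) {
  const id = typeof typedId === "string" ? typedId.trim() : "";
  switch (action) {
    case "create":
      return { mode: "CONFERENCE", meetingId: null };
    case "joinAsHost":
    case "joinAsViewer":
      if (!id) throw new Error("Enter a meeting ID first");
      return {
        mode: action === "joinAsHost" ? "CONFERENCE" : "VIEWER",
        meetingId: id,
      };
    default:
      throw new Error(`Unknown action: ${action}`);
  }
}

console.log(resolveJoinRequest("joinAsViewer", "  demo-id ")); // { mode: 'VIEWER', meetingId: 'demo-id' }
```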

Step 5: Container Component

Next, the app switches to the Container component, which displays the meeting ID along with a Join button for entering the meeting.

```javascript
function Container(props) {
  // Join status: null (not joined yet), "JOINING", or "JOINED"
  const [joined, setJoined] = useState(null);

  // Get the method that will be used to join the meeting
  const { join } = useMeeting();

  const mMeeting = useMeeting({
    // Callback for when the meeting is joined successfully
    onMeetingJoined: () => {
      setJoined("JOINED");
    },
    // Callback for when the meeting is left
    onMeetingLeft: () => {
      props.onMeetingLeave();
    },
    // Callback for when there is an error in the meeting
    onError: (error) => {
      alert(error.message);
    },
  });

  const joinMeeting = () => {
    setJoined("JOINING");
    join();
  };

  return (
    <div className="container">
      <h3>Meeting Id: {props.meetingId}</h3>
      {joined === "JOINED" ? (
        mMeeting.localParticipant.mode === Constants.modes.CONFERENCE ? (
          <SpeakerView />
        ) : mMeeting.localParticipant.mode === Constants.modes.VIEWER ? (
          <ViewerView />
        ) : null
      ) : joined === "JOINING" ? (
        <p>Joining the meeting...</p>
      ) : (
        <button onClick={joinMeeting}>Join</button>
      )}
    </div>
  );
}
```
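The Container’s nested ternary is effectively a small state machine: not joined, then joining, then joined as either a speaker or a viewer. The same branching can be pulled out into a hypothetical `selectScreen` function, shown below. The returned screen names are illustrative, and the mode strings are the ones this guide passes to `MeetingProvider`.

```javascript
// Decide which screen Container should render.
// joinState: null (not joined yet), "JOINING", or "JOINED".
function selectScreen(joinState, localMode) {
  if (joinState === "JOINING") return "JOINING_MESSAGE";
  if (joinState !== "JOINED") return "JOIN_BUTTON";
  if (localMode === "CONFERENCE") return "SPEAKER_VIEW";
  if (localMode === "VIEWER") return "VIEWER_VIEW";
  return null; // unknown mode: render nothing, like the original ternary
}

console.log(selectScreen("JOINED", "VIEWER")); // VIEWER_VIEW
```

Keeping the branching in one plain function makes it easy to add further states later, such as a "LEFT" screen, without deepening the JSX ternary.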

Step 6: Implement SpeakerView

After clicking Join, the speaker enters the meeting. From there they can leave, mute and unmute the mic, toggle the webcam, and start or stop HLS.

SpeakerView.js

```javascript
function SpeakerView() {
  // Get the participants and HLS state from useMeeting
  const { participants, hlsState } = useMeeting();

  // Filter the hosts/speakers from all the participants
  const speakers = useMemo(() => {
    const speakerParticipants = [...participants.values()].filter(
      (participant) => {
        return participant.mode === Constants.modes.CONFERENCE;
      }
    );
    return speakerParticipants;
  }, [participants]);

  return (
    <div>
      <p>Current HLS State: {hlsState}</p>
      {/* Controls for the meeting */}
      <Controls />
      {/* Render all the HOST participants */}
      {speakers.map((participant) => (
        <ParticipantView participantId={participant.id} key={participant.id} />
      ))}
    </div>
  );
}
```

Implement controls that allow the speaker to start and stop live streaming:

```javascript
function Controls() {
  const { leave, toggleMic, toggleWebcam, startHls, stopHls } = useMeeting();
  return (
    <div>
      <button onClick={() => leave()}>Leave</button>
      {" | "}
      <button onClick={() => toggleMic()}>toggleMic</button>
      <button onClick={() => toggleWebcam()}>toggleWebcam</button>
      {" | "}
      <button
        onClick={() => {
          // Start HLS in SPOTLIGHT layout with PIN priority
          // so that only speakers are visible in the HLS stream
          startHls({
            layout: {
              type: "SPOTLIGHT",
              priority: "PIN",
              gridSize: "20",
            },
            theme: "LIGHT",
            mode: "video-and-audio",
            quality: "high",
            orientation: "landscape",
          });
        }}
      >
        Start HLS
      </button>
      <button onClick={() => stopHls()}>Stop HLS</button>
    </div>
  );
}
```

ParticipantView.js

```javascript
function ParticipantView(props) {
  const micRef = useRef(null);
  const { webcamStream, micStream, webcamOn, micOn, isLocal, displayName } =
    useParticipant(props.participantId);

  // Wrap the webcam track in a MediaStream that ReactPlayer can play
  const videoStream = useMemo(() => {
    if (webcamOn && webcamStream) {
      const mediaStream = new MediaStream();
      mediaStream.addTrack(webcamStream.track);
      return mediaStream;
    }
  }, [webcamStream, webcamOn]);

  // Play the participant's audio in the <audio> element
  useEffect(() => {
    if (micRef.current) {
      if (micOn && micStream) {
        const mediaStream = new MediaStream();
        mediaStream.addTrack(micStream.track);
        micRef.current.srcObject = mediaStream;
        micRef.current
          .play()
          .catch((error) =>
            console.error("micRef.current.play() failed", error)
          );
      } else {
        micRef.current.srcObject = null;
      }
    }
  }, [micStream, micOn]);

  return (
    <div>
      <p>
        Participant: {displayName} | Webcam: {webcamOn ? "ON" : "OFF"} | Mic:{" "}
        {micOn ? "ON" : "OFF"}
      </p>
      {/* Mute the local participant's own audio to avoid echo */}
      <audio ref={micRef} autoPlay playsInline muted={isLocal} />
      {webcamOn && (
        <ReactPlayer
          playsinline // extremely crucial prop
          pip={false}
          light={false}
          controls={false}
          muted={true}
          playing={true}
          url={videoStream}
          height={"300px"}
          width={"300px"}
          onError={(err) => {
            console.log(err, "participant video error");
          }}
        />
      )}
    </div>
  );
}
```

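Step 7: Implement ViewerView

A viewer can join the meeting at any time, but the stream only plays once the host has started HLS and its state becomes HLS_PLAYABLE. Until then, the viewer sees a status message.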
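`hlsState` passes through several values while the host starts and stops the stream. We assume the state names reported by VideoSDK are `HLS_STARTING`, `HLS_STARTED`, `HLS_PLAYABLE`, `HLS_STOPPING` and `HLS_STOPPED`; check `Constants.hlsEvents` in your SDK version. With those states, a viewer could see a more specific status than a single "not started" message. The `viewerStatus` helper below is a hypothetical illustration.

```javascript
// Map an HLS state string to a viewer-facing status message.
// The state names are assumed to match VideoSDK's hlsState values.
function viewerStatus(hlsState) {
  switch (hlsState) {
    case "HLS_STARTING":
    case "HLS_STARTED":
      // Started, but segments may not be playable yet
      return "The stream is starting, please wait...";
    case "HLS_PLAYABLE":
      // Nothing to show: render the <video> player instead
      return null;
    case "HLS_STOPPING":
      return "The stream is ending...";
    default:
      // HLS_STOPPED, or no state reported yet
      return "HLS has not started yet or is stopped.";
  }
}

console.log(viewerStatus("HLS_STARTED")); // The stream is starting, please wait...
```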

```javascript
// Requires `import Hls from "hls.js";` at the top of App.js
function ViewerView() {
  // Ref to the <video> element that plays the HLS stream
  const playerRef = useRef(null);
  // Get the HLS URLs and the current HLS state
  const { hlsUrls, hlsState } = useMeeting();

  // Play the HLS stream once playbackHlsUrl is present and playable
  useEffect(() => {
    if (hlsUrls.playbackHlsUrl && hlsState === "HLS_PLAYABLE") {
      if (Hls.isSupported()) {
        const hls = new Hls({
          maxLoadingDelay: 1, // max video loading delay used in automatic start level selection
          defaultAudioCodec: "mp4a.40.2", // default audio codec (AAC-LC)
          maxBufferLength: 0, // load a new fragment when the buffer falls below this many seconds
          maxMaxBufferLength: 1, // hls.js will never buffer more than this many seconds
          startLevel: 0, // start playback at the lowest quality level
          startPosition: -1, // -1: start at time 0 for VoD, near the live edge for live streams
          maxBufferHole: 0.001, // max inter-fragment buffer hole tolerated when searching for the next fragment
          highBufferWatchdogPeriod: 0, // seconds of stalled playhead before hls.js jumps buffer gaps or nudges the playhead
          nudgeOffset: 0.05, // extra seconds added to currentTime on each further nudge retry
          nudgeMaxRetry: 1, // nudge retries before hls.js raises a fatal BUFFER_STALLED_ERROR
          maxFragLookUpTolerance: 0.1, // tolerance used during fragment lookup
          liveSyncDurationCount: 1, // start from fragment N-1, N being the last fragment of the live playlist
          abrEwmaFastLive: 1, // half-life of the fast bandwidth EWMA for live streams
          abrEwmaSlowLive: 3, // half-life of the slow bandwidth EWMA for live streams
          abrEwmaFastVoD: 1, // half-life of the fast bandwidth EWMA for VoD streams
          abrEwmaSlowVoD: 3, // half-life of the slow bandwidth EWMA for VoD streams
          maxStarvationDelay: 1, // ABR tries to keep forecast rebuffering below this many seconds
        });
        hls.loadSource(hlsUrls.playbackHlsUrl);
        hls.attachMedia(playerRef.current);
        // Release the hls.js instance when the URL/state changes or on unmount
        return () => hls.destroy();
      } else if (typeof playerRef.current?.play === "function") {
        // Fallback for browsers that play HLS natively (e.g. Safari on iOS)
        playerRef.current.src = hlsUrls.playbackHlsUrl;
        playerRef.current
          .play()
          .catch((error) => console.error("HLS playback failed", error));
      }
    }
  }, [hlsUrls, hlsState]);

  return (
    <div>
      {/* Show a message if HLS has not started or was stopped by the HOST */}
      {hlsState !== "HLS_PLAYABLE" ? (
        <div>
          <p>HLS has not started yet or is stopped</p>
        </div>
      ) : (
        <div>
          <video
            ref={playerRef}
            id="hlsPlayer"
            autoPlay={true}
            controls
            style={{ width: "100%", height: "100%" }}
            playsInline
            muted={true}
            onError={(err) => {
              console.log(err, "hls video error");
            }}
          ></video>
        </div>
      )}
    </div>
  );
}
```
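The `abrEwmaFastLive` and `abrEwmaSlowLive` options above are half-lives for two exponentially weighted moving averages (EWMAs) of measured bandwidth.

- Each sample is weighted by its download time, and older data decays by `0.5^(weight / halfLife)`.
- The conservative estimate is the smaller of the fast and slow averages, so a sudden drop is picked up quickly.

The sketch below is modeled loosely on hls.js’s bandwidth estimator. It is a simplified illustration rather than hls.js’s exact code, and the sample values are made up.

```javascript
// Exponentially weighted moving average parameterized by half-life.
class Ewma {
  constructor(halfLife) {
    // Per-unit-weight decay: a sample loses half its influence after `halfLife`.
    this.alpha = Math.pow(0.5, 1 / halfLife);
    this.estimate = 0;
    this.totalWeight = 0;
  }

  sample(weight, value) {
    const adjAlpha = Math.pow(this.alpha, weight);
    this.estimate = value * (1 - adjAlpha) + adjAlpha * this.estimate;
    this.totalWeight += weight;
  }

  getEstimate() {
    // Correct the bias toward the initial estimate of 0.
    const zeroFactor = 1 - Math.pow(this.alpha, this.totalWeight);
    return zeroFactor > 0 ? this.estimate / zeroFactor : 0;
  }
}

// Fast and slow estimators with the half-lives from the config above.
const fast = new Ewma(1);
const slow = new Ewma(3);

// Made-up samples: [download time in seconds, measured bits per second].
const samples = [[2, 3_000_000], [2, 3_200_000], [2, 800_000]];
for (const [seconds, bps] of samples) {
  fast.sample(seconds, bps);
  slow.sample(seconds, bps);
}

// Take the smaller estimate so the player reacts quickly to a drop.
const bandwidth = Math.min(fast.getEstimate(), slow.getEstimate());
console.log(Math.round(bandwidth));
```

Short half-lives like the ones in this config make the player switch quality quickly, at the cost of more frequent level changes on a noisy connection.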

Step 8: Run Your Application

Now that everything is set up, you can run your application using:

```bash
$ npm start
```

Open your browser and navigate to http://localhost:3000. You should see your application running with options to create or join a meeting.

That’s a Wrap

Bingo! You have successfully integrated live streaming into your React application using VideoSDK.

Now that you know how easy it is to use VideoSDK, we leave the rest to your creativity and skills: go build something amazing.

Happy Hacking!

Resources

React Interactive Live Streaming with Video SDK

%[https://youtu.be/L1x7wtH-ok8?feature=shared]
