Exploring Amazon EFS: Usage, Storage Classes, and Cross-Region Replication

Venkata Pavan Vishnu Rachapudi


Amazon Elastic File System (EFS) is a fully managed, scalable, and highly available file storage service designed for use with Amazon EC2 instances. EFS automatically grows and shrinks as files are added or removed, so your applications always have the storage they need. In this post, we will explore the EFS storage classes, key file system settings, performance and throughput modes, access points, common use cases, and the steps to enable cross-region replication.

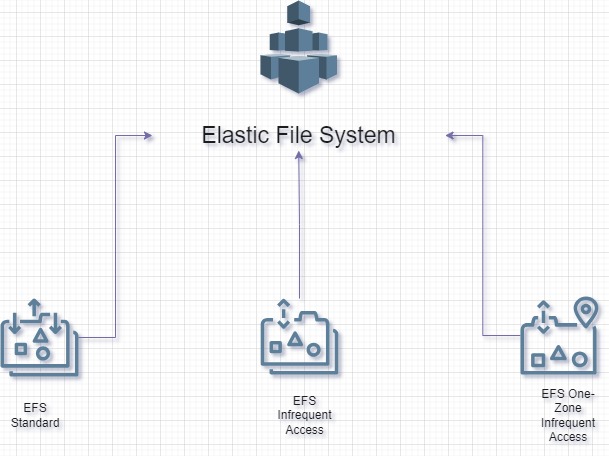

EFS Storage Classes

Amazon EFS offers multiple storage classes to help optimize both performance and cost based on your file access patterns. These storage classes automatically scale up and down with your data and include:

1. EFS Standard

Description: The default storage class for frequently accessed files.

Use Case: Ideal for applications that need low-latency access to large amounts of data, like web services, media processing, or content management systems.

Cost: Higher than the Infrequent Access classes, but optimized for low-latency access.

2. EFS Infrequent Access (IA)

Description: Designed for files that are not frequently accessed but need to be available quickly when required.

Use Case: Perfect for files that are not in everyday use but should still be available on demand, such as backup data or archived logs.

Cost: Lower storage cost compared to the EFS Standard tier, but incurs a small fee when accessing data.

3. EFS One-Zone Infrequent Access (One-Zone IA)

Description: Stores files in a single Availability Zone (AZ) and is more cost-effective than the EFS IA class.

Use Case: Suitable for non-critical workloads that do not require multi-AZ resilience, like development or test environments.

Cost: The lowest-cost of the three storage classes described here, with performance similar to EFS IA.

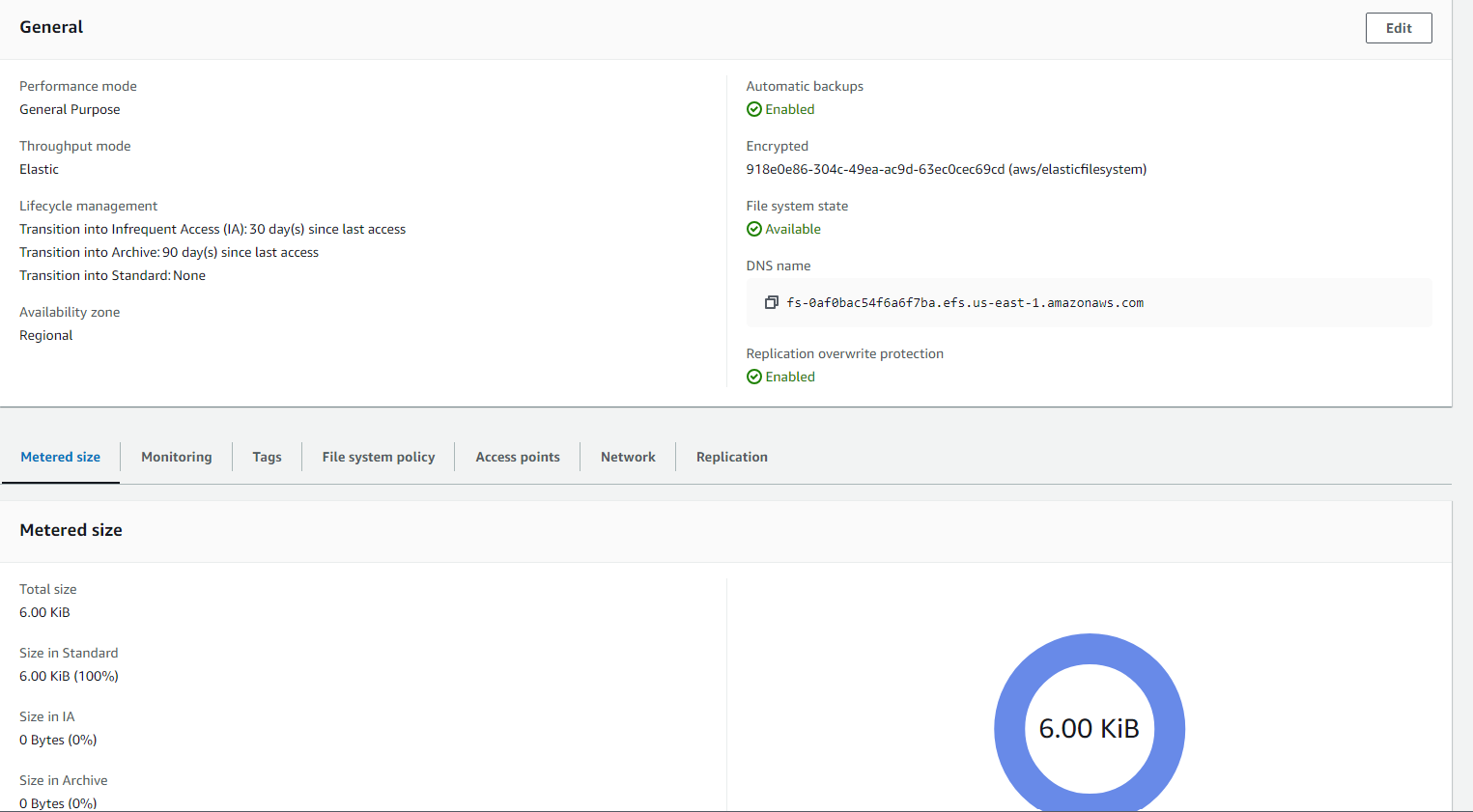

Let’s take a closer look at the following EFS file system settings.

Replication overwrite protection

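When enabled, replication overwrite protection prevents the file system from being used as the destination file system in a replication configuration. To replicate into an existing file system, you must first turn this protection off.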
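If you manage EFS from code rather than the console, this setting maps to the `UpdateFileSystemProtection` API. The Python sketch below only builds the request parameters, so it runs without AWS credentials; the file system ID is a hypothetical placeholder, and you would pass the result to `boto3.client("efs").update_file_system_protection(**request)`.

```python
# Sketch: parameters for the EFS UpdateFileSystemProtection API.
# "fs-0123456789abcdef0" is a hypothetical placeholder ID.


def protection_request(file_system_id: str, enabled: bool) -> dict:
    """Build keyword arguments for boto3's efs.update_file_system_protection()."""
    return {
        "FileSystemId": file_system_id,
        # ENABLED blocks the file system from being a replication destination;
        # DISABLED allows an existing file system to be used as one.
        "ReplicationOverwriteProtection": "ENABLED" if enabled else "DISABLED",
    }


# Turn protection off so this file system can receive replicated data.
request = protection_request("fs-0123456789abcdef0", enabled=False)
print(request)
```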

Lifecycle management

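Lifecycle management saves money automatically as access patterns change: it moves files that haven't been accessed for a set period into the Infrequent Access (IA) or Archive storage class, and it can move them back to Standard when they are accessed again.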
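Lifecycle rules are expressed as a list of single-rule objects for the `PutLifecycleConfiguration` API. The sketch below builds that list; the accepted day values are assumed from the API reference at the time of writing (check the current docs), and the 30/90-day thresholds are example choices, not recommendations.

```python
from typing import List, Optional

# Day values accepted by the EFS lifecycle API (assumed from the API
# reference at the time of writing; verify against the current docs).
ALLOWED_DAYS = {1, 7, 14, 30, 60, 90, 180, 270, 365}


def _after(days: int) -> str:
    """Convert a day count into the API's AFTER_N_DAYS string."""
    if days not in ALLOWED_DAYS:
        raise ValueError(f"unsupported transition period: {days} days")
    return "AFTER_1_DAY" if days == 1 else f"AFTER_{days}_DAYS"


def lifecycle_policies(ia_after_days: int,
                       archive_after_days: Optional[int] = None,
                       back_to_standard_on_access: bool = True) -> List[dict]:
    """Build the LifecyclePolicies list; each entry holds exactly one rule."""
    policies = [{"TransitionToIA": _after(ia_after_days)}]
    if archive_after_days is not None:
        policies.append({"TransitionToArchive": _after(archive_after_days)})
    if back_to_standard_on_access:
        # Move a file back to Standard the first time it is accessed again.
        policies.append({"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"})
    return policies


policies = lifecycle_policies(30, archive_after_days=90)
print(policies)
```

You would then call `boto3.client("efs").put_lifecycle_configuration(FileSystemId="fs-...", LifecyclePolicies=policies)` with your own file system ID.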

File System Policy

A file system policy is an IAM resource-based policy that controls access to your file system at the resource level. It defines permissions for AWS Identity and Access Management (IAM) principals, such as IAM users, roles, or services, and specifies which actions they can take on the file system.

Example of EFS File System Policy

Here is an example file system policy that grants a specific IAM role permission to mount and write to the file system, but only when the client connects through one of the file system's mount targets (which are all created in the file system's VPC):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": { "AWS": "arn:aws:iam::example:role/EFSAccessRole" },
          "Action": [
            "elasticfilesystem:ClientMount",
            "elasticfilesystem:ClientWrite"
          ],
          "Resource": "arn:aws:elasticfilesystem:us-west-2:example:file-system/fs-12345678",
          "Condition": {
            "Bool": { "elasticfilesystem:AccessedViaMountTarget": "true" }
          }
        }
      ]
    }

Metered Size

The metered size is the amount of storage your file system actually uses, and it is what you are billed for. It is determined by the following factors:

Stored Data: The total size of the files stored in the file system, including any metadata and directory information.

Storage Class: The metered size is tracked separately for each storage class, for example:

Standard Storage: For frequently accessed files.

Infrequent Access (IA) Storage: For files that are not accessed frequently. Storing data in this class costs less, but retrieving it incurs additional costs.

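Data Replication: For Regional file systems, EFS automatically stores data redundantly across multiple Availability Zones in an AWS Region. This built-in redundancy does not affect your metered size or billing: you are charged only for the data you store, not for the redundant copies. A cross-Region replica created with EFS Replication, however, is a separate file system with its own metered size.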

Lifecycle Management: If you enable lifecycle policies, data can automatically transition to the Infrequent Access storage class after a certain number of days. This lowers your bill without changing the total metered size; a larger share of it is simply billed at the lower IA rate.
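The per-class breakdown is also visible through the API: in boto3, `describe_file_systems()` returns a `SizeInBytes` field with the total `Value` plus `ValueInStandard`, `ValueInIA`, and `ValueInArchive` (field names as documented at the time of writing). The sketch below summarizes such a field; the byte counts are made-up sample values, not real measurements.

```python
# Sketch: summarize the metered size of one file system by storage class.
# `sample` mimics the SizeInBytes field of boto3's efs.describe_file_systems();
# the numbers are hypothetical.
GIB = 1024 ** 3


def size_breakdown(size_in_bytes: dict) -> dict:
    """Return GiB per storage class plus the total metered size."""
    classes = {
        "Standard": size_in_bytes.get("ValueInStandard", 0),
        "IA": size_in_bytes.get("ValueInIA", 0),
        "Archive": size_in_bytes.get("ValueInArchive", 0),
    }
    breakdown = {name: value / GIB for name, value in classes.items()}
    breakdown["Total"] = size_in_bytes["Value"] / GIB
    return breakdown


sample = {
    "Value": 150 * GIB,
    "ValueInStandard": 40 * GIB,
    "ValueInIA": 100 * GIB,
    "ValueInArchive": 10 * GIB,
}
for name, gib in size_breakdown(sample).items():
    print(f"{name:>8}: {gib:.1f} GiB")
```

When lifecycle management moves files to IA, bytes shift from `ValueInStandard` to `ValueInIA`, but `Value` stays the same.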

EFS Performance Modes

Amazon EFS offers two performance modes: General Purpose and Max I/O. These modes are designed to optimize performance based on your workload requirements.

1. General Purpose (Default Mode)

Low Latency: General Purpose mode is optimized for low-latency operations and is suitable for the majority of applications, including web servers, development environments, and content management systems.

Metadata Operations: This mode provides the best performance for workloads with frequent metadata operations (e.g., file open, close, or access checks).

Use Case: Ideal for use cases requiring low-latency file operations, such as web serving or content management.

2. Max I/O (Maximize Throughput)

Higher Latency, Higher Throughput: Max I/O mode is designed for applications that need the highest possible throughput and can tolerate slightly higher latencies.

Scalability: This mode scales to thousands of EC2 instances accessing the same file system simultaneously, making it ideal for big data, analytics, and media processing workloads.

Use Case: Best for large-scale data analytics, machine learning training, and workloads that involve massive parallel access.
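The performance mode is chosen when the file system is created and cannot be changed afterwards, so it is worth setting explicitly. This sketch builds parameters for boto3's `efs.create_file_system()`; the `Name` tag values and the choice to enable encryption are hypothetical examples.

```python
import uuid

# Performance mode values accepted by the CreateFileSystem API.
PERFORMANCE_MODES = {"generalPurpose", "maxIO"}


def create_file_system_request(performance_mode: str = "generalPurpose",
                               name: str = "demo-efs") -> dict:
    """Build keyword arguments for boto3's efs.create_file_system()."""
    if performance_mode not in PERFORMANCE_MODES:
        raise ValueError(f"unknown performance mode: {performance_mode!r}")
    return {
        # Idempotency token: retrying with the same token will not create
        # a second file system.
        "CreationToken": str(uuid.uuid4()),
        # Fixed for the life of the file system.
        "PerformanceMode": performance_mode,
        # Encryption at rest, enabled here as an example choice.
        "Encrypted": True,
        "Tags": [{"Key": "Name", "Value": name}],
    }


analytics_fs = create_file_system_request("maxIO", name="analytics-efs")
print(analytics_fs["PerformanceMode"])
```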

EFS Throughput Modes

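EFS throughput modes control how much throughput a file system can drive. This post covers two of them, Bursting Throughput and Provisioned Throughput; EFS also offers an Elastic Throughput mode, which scales throughput automatically with your workload.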

1. Bursting Throughput (Default Mode)

Burst Credits: In Bursting mode, throughput scales with the amount of data stored in the file system. The file system accumulates burst credits while it runs below its baseline throughput and spends them to burst above the baseline when needed.

Baseline Throughput: The baseline is determined by the size of your file system. The more data stored, the higher the baseline throughput.

Use Case: Suitable for most workloads with unpredictable or spiky traffic patterns. Small file systems will be able to burst occasionally, while larger file systems have sustained higher throughput.
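To make "the more data stored, the higher the baseline" concrete, here's a small calculation. It assumes the Bursting-mode figures AWS has documented (a baseline of 50 MiB/s per TiB of data in the Standard storage class, and bursts of 100 MiB/s per TiB with a floor of 100 MiB/s). Treat these numbers as assumptions and check the current EFS quotas documentation, since AWS revises them.

```python
from typing import Tuple

# Assumed Bursting-mode figures; verify against the current EFS docs.
BASELINE_MIBPS_PER_TIB = 50
BURST_MIBPS_PER_TIB = 100
MIN_BURST_MIBPS = 100


def bursting_throughput(standard_storage_gib: float) -> Tuple[float, float]:
    """Return (baseline, burst) throughput in MiB/s for a given data size."""
    tib = standard_storage_gib / 1024
    baseline = BASELINE_MIBPS_PER_TIB * tib
    # Small file systems can still burst to the minimum burst rate.
    burst = max(MIN_BURST_MIBPS, BURST_MIBPS_PER_TIB * tib)
    return baseline, burst


for size in (100, 1024, 10 * 1024):
    baseline, burst = bursting_throughput(size)
    print(f"{size:>6} GiB -> baseline {baseline:7.2f} MiB/s, "
          f"burst up to {burst:7.1f} MiB/s")
```

Under these assumptions a 100 GiB file system has a baseline under 5 MiB/s but can still burst to 100 MiB/s while it has credits, which is why small file systems "burst occasionally".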

2. Provisioned Throughput

Fixed Throughput: Provisioned Throughput allows you to specify the exact throughput required, independent of the file system size.

Consistent Performance: This mode is ideal for workloads that require consistent, high throughput, even when the file system is small or under heavy load.

Use Case: Ideal for workloads such as media processing or scientific computing that need sustained high throughput but store too little data for Bursting mode to provide it.
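The decision above can be sketched as a simple comparison: if the sustained throughput you need exceeds what Bursting mode's baseline would give for your data size, switch to Provisioned mode through boto3's `efs.update_file_system()`. The 50 MiB/s-per-TiB baseline is an assumption to verify in the current EFS docs, and the workload numbers and file system ID are hypothetical.

```python
from typing import Optional

# Assumption: Bursting mode gives roughly 50 MiB/s of baseline throughput per
# TiB stored (check the current EFS documentation before relying on it).
BASELINE_MIBPS_PER_TIB = 50


def bursting_baseline_mibps(standard_storage_gib: float) -> float:
    """Baseline throughput Bursting mode would sustain for this much data."""
    return BASELINE_MIBPS_PER_TIB * standard_storage_gib / 1024


def provisioned_throughput_request(file_system_id: str, required_mibps: float,
                                   standard_storage_gib: float) -> Optional[dict]:
    """Return kwargs for efs.update_file_system(), or None when the Bursting
    baseline already covers the required sustained throughput."""
    if required_mibps <= bursting_baseline_mibps(standard_storage_gib):
        return None
    return {
        "FileSystemId": file_system_id,
        "ThroughputMode": "provisioned",
        "ProvisionedThroughputInMibps": float(required_mibps),
    }


# Hypothetical workload: 200 GiB of data that needs 150 MiB/s sustained.
request = provisioned_throughput_request("fs-0123456789abcdef0", 150, 200)
print(request)
```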

EFS Usage Scenarios

Amazon EFS can be used in a variety of scenarios that require scalable file storage, including:

Content Management Systems (CMS): EFS supports the storage of large media files that need to be shared across multiple servers.

Web Serving & Application Hosting: Ideal for storing shared assets like images, videos, and other media files that are accessed by multiple instances.

Big Data Analytics: You can process large volumes of data in a distributed manner, with EFS providing a shared file system accessible by all instances.

Backup and Restore: EFS can be used as a scalable solution to store backup data, and it integrates well with services like AWS Backup for automated backups.

Disaster Recovery: Using the EFS replication feature, businesses can keep a backup copy of their file systems in another region for disaster recovery.

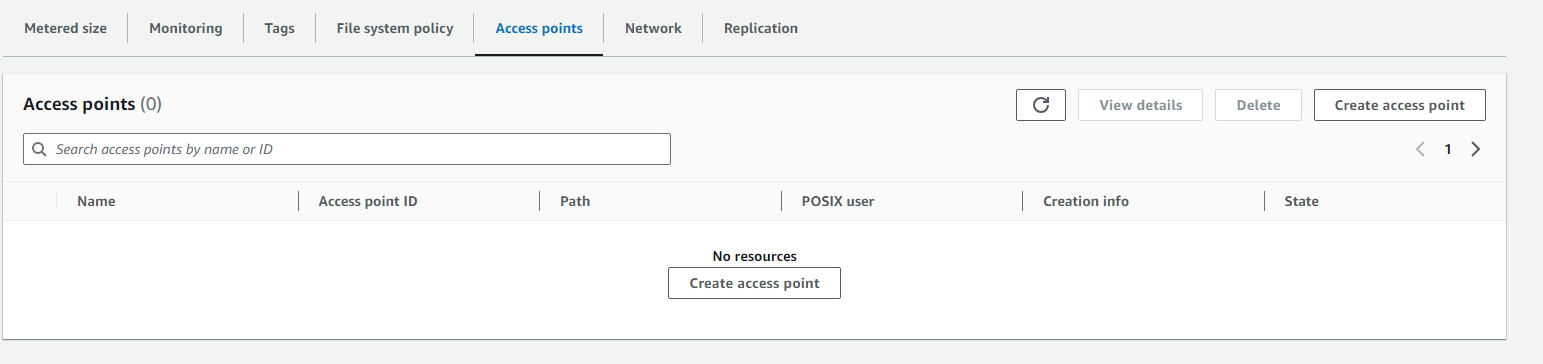

EFS Access Points

EFS Access Points are application-specific entry points that simplify the management of permissions for EFS file systems. They provide an abstraction over file system paths, which allows you to configure user permissions for different applications or workloads.

Key Features of EFS Access Points

Simplified Access Management: You can specify different access points for different applications using the same file system, each with its own root directory and POSIX permissions.

User Identity Enforcement: Access points let you specify a POSIX user and group ID that replace the identity supplied by the NFS client for every request made through that access point. This ensures consistent file ownership and access control, even across different clients or applications.

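Automated Directory Creation: If an access point's root directory doesn't exist yet, EFS can create it automatically, with the owner and permissions you specify, the first time a client connects. This simplifies setting up multi-user environments.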

Use Case:

EFS Access Points are great for multi-tenant environments where each application or user needs isolated access to different parts of the file system with different permissions. For example, a web server might use an access point to store logs, while a machine learning application uses another to store training datasets.

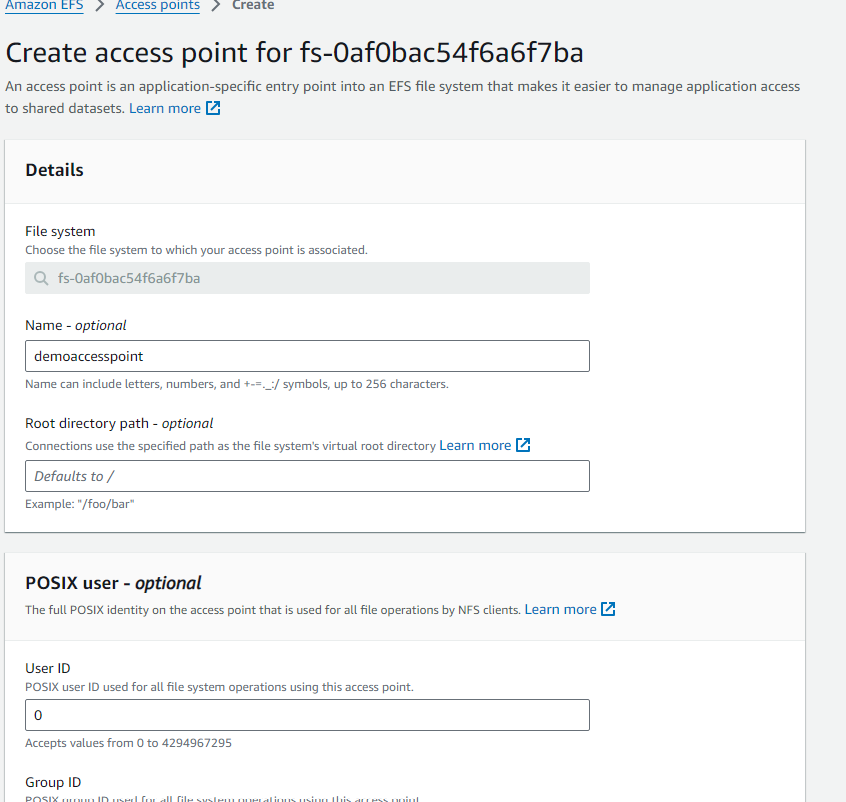

When creating an Amazon EFS Access Point, you have several options to configure POSIX permissions, root directory settings, and tags. Here's a breakdown of these options:

1. POSIX User (Optional)

User ID: The POSIX user ID (UID) used for all file system operations by clients that connect through this access point. Valid values range from 0 to 4294967295; for example, 0 is the root user.

Group ID: The POSIX group ID (GID) applied to all file operations made through the access point, with the same range of 0 to 4294967295.

Secondary Group IDs: Additional POSIX group IDs (GIDs) used for access control, specified as a comma-separated list. This lets you associate a user with the permissions of multiple groups.

2. Root Directory Creation Permissions (Optional)

This section configures the root directory associated with the access point. EFS can automatically create this directory if it doesn’t exist when accessed for the first time.

Owner User ID: The user ID that will own the root directory if it doesn't exist. Again, valid values range from 0 to 4294967295.

Owner Group ID: The group ID that will own the root directory. The same value range applies.

Access Point Permissions: You can specify the POSIX permissions for the root directory in octal format. For example:

0755: Grants read, write, and execute permissions to the owner, and read and execute permissions to the group and to others (a common setting for directories).

You can adjust these values to suit your security and access requirements, as shown below:

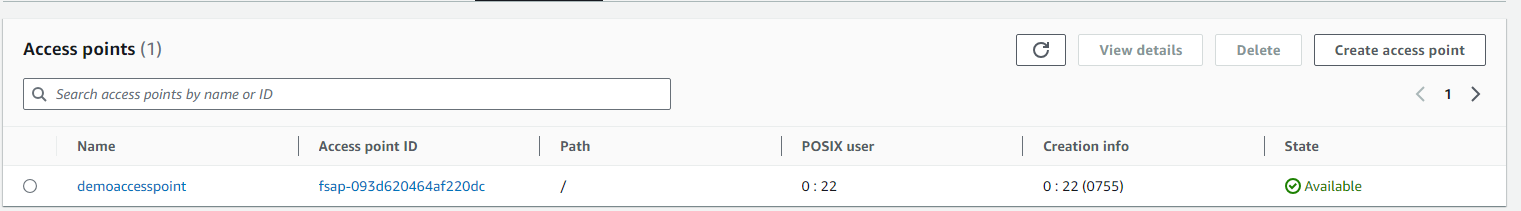

The access point for our EFS file system has now been created successfully!

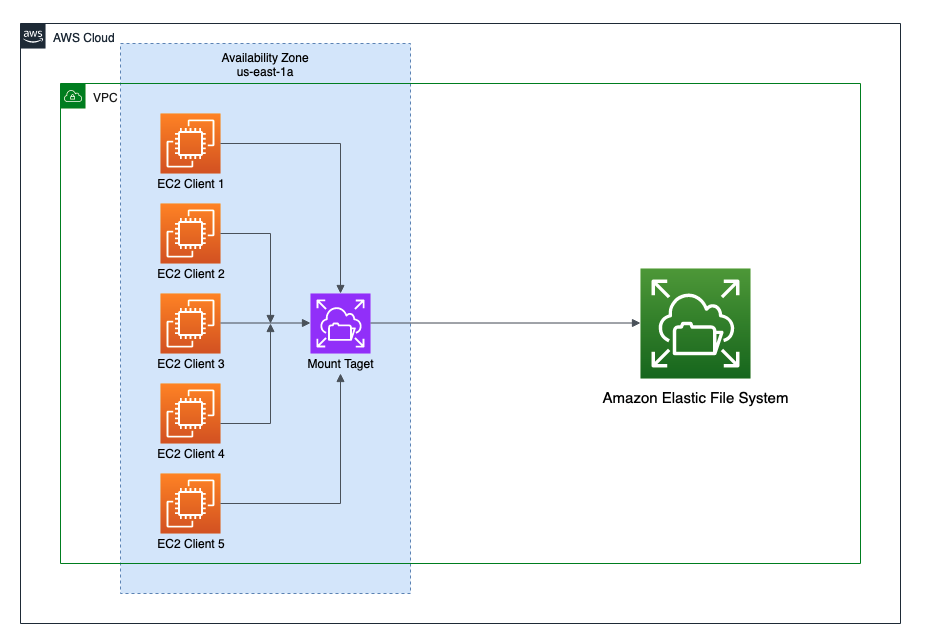

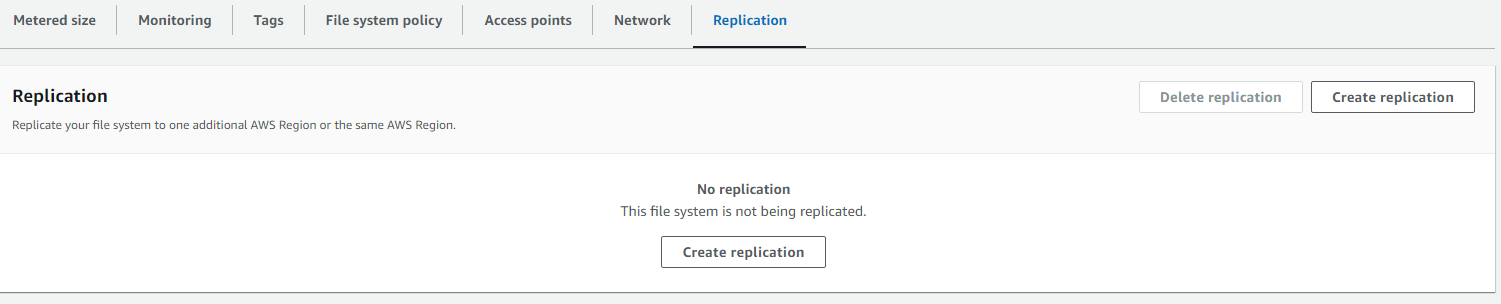
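The same access point settings can be expressed as a request to the `CreateAccessPoint` API. This sketch builds keyword arguments for boto3's `efs.create_access_point()` and checks the UID/GID range and octal permissions described above. The file system ID, path, IDs, and tag name are hypothetical, and making the enforced POSIX user the owner of the created directory is a simplification of this sketch.

```python
import re
import uuid
from typing import Iterable, Optional

MAX_POSIX_ID = 4294967295  # upper bound for access point UIDs/GIDs


def _posix_id(value: int, label: str) -> int:
    if not 0 <= value <= MAX_POSIX_ID:
        raise ValueError(f"{label} must be between 0 and {MAX_POSIX_ID}")
    return value


def access_point_request(file_system_id: str, path: str, uid: int, gid: int,
                         secondary_gids: Iterable[int] = (),
                         permissions: str = "0755",
                         name: Optional[str] = None) -> dict:
    """Build keyword arguments for boto3's efs.create_access_point()."""
    if not re.fullmatch(r"[0-7]{3,4}", permissions):
        raise ValueError("permissions must be an octal string such as '0755'")
    posix_user = {"Uid": _posix_id(uid, "Uid"), "Gid": _posix_id(gid, "Gid")}
    extra = [_posix_id(g, "SecondaryGid") for g in secondary_gids]
    if extra:
        posix_user["SecondaryGids"] = extra
    request = {
        "ClientToken": str(uuid.uuid4()),  # idempotency token
        "FileSystemId": file_system_id,
        "PosixUser": posix_user,
        "RootDirectory": {
            "Path": path,
            # Applied only if `path` does not exist yet; this sketch makes the
            # enforced POSIX user the owner of the new directory.
            "CreationInfo": {
                "OwnerUid": uid,
                "OwnerGid": gid,
                "Permissions": permissions,
            },
        },
    }
    if name:
        request["Tags"] = [{"Key": "Name", "Value": name}]
    return request


# Hypothetical access point for web server logs.
logs_ap = access_point_request("fs-0123456789abcdef0", "/web/logs",
                               uid=1001, gid=1001, secondary_gids=[2000],
                               name="web-logs")
print(logs_ap["PosixUser"], logs_ap["RootDirectory"])
```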

Cross-Region Replication for Disaster Recovery

One of the key advantages of Amazon EFS is its ability to replicate data across AWS regions. Replicating EFS file systems ensures high availability and data protection in case of region-wide outages. Here's how you can set up EFS Replication step by step.

Steps to Set Up Amazon EFS Replication

1. Create Your Primary EFS File System

If you haven't set up your primary EFS file system yet, follow these steps:

Go to the AWS Management Console.

Open the Amazon EFS dashboard.

Click on Create file system and configure your settings like Throughput mode, Lifecycle management, and Performance mode.

Set up mount targets across the necessary Availability Zones (AZs).
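Mount targets are created one per Availability Zone, each in a subnet of that AZ. The sketch below builds keyword arguments for boto3's `efs.create_mount_target()`; the subnet, security group, and file system IDs are hypothetical placeholders.

```python
from typing import Dict, List


def mount_target_requests(file_system_id: str, subnets_by_az: Dict[str, str],
                          security_group_id: str) -> List[dict]:
    """Build one set of kwargs for efs.create_mount_target() per AZ.

    A file system can have at most one mount target per Availability Zone,
    so the mapping is keyed by AZ name. The security group must allow
    inbound NFS traffic (TCP port 2049) from your clients.
    """
    return [
        {
            "FileSystemId": file_system_id,
            "SubnetId": subnet_id,
            "SecurityGroups": [security_group_id],
        }
        for _az, subnet_id in sorted(subnets_by_az.items())
    ]


# Hypothetical subnet and security group IDs in us-west-2.
mt_requests = mount_target_requests(
    "fs-0123456789abcdef0",
    {"us-west-2a": "subnet-aaaa1111", "us-west-2b": "subnet-bbbb2222"},
    "sg-0123456789abcdef0",
)
for r in mt_requests:
    print(r)
```

In practice you would wait until the new file system's `LifeCycleState` is `available` before creating its mount targets.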

2. Start the Replication Process

Amazon EFS makes it easy to start replication to another AWS Region. Here’s how:

Open the Amazon EFS Console.

Select the source file system you want to replicate.

Click on Actions and then select Replicate.

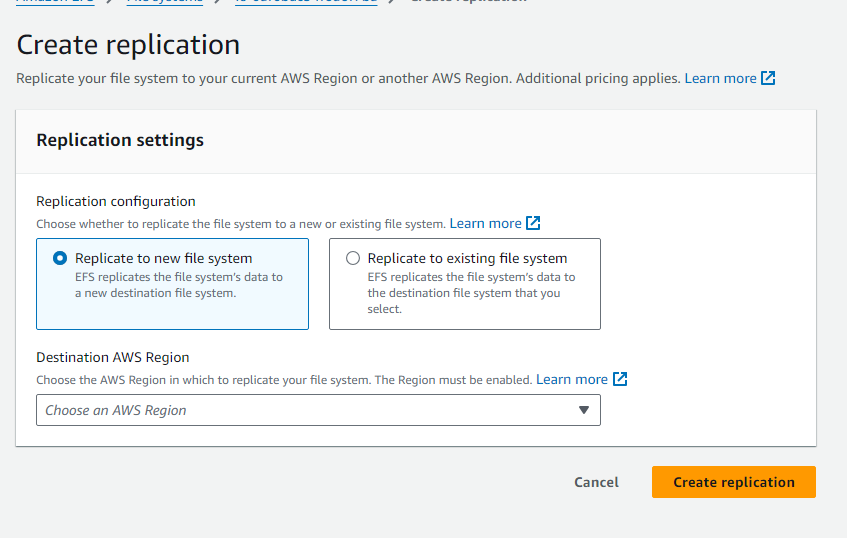

3. Configure Your Replication Target

In the replication wizard:

Choose the Destination Region where you want the replicated file system.

By default, EFS creates a new destination file system in the destination Region, and you can choose settings such as its availability (Regional or One Zone) and encryption. Alternatively, you can replicate into an existing file system, provided its replication overwrite protection is turned off. The destination options look like this:

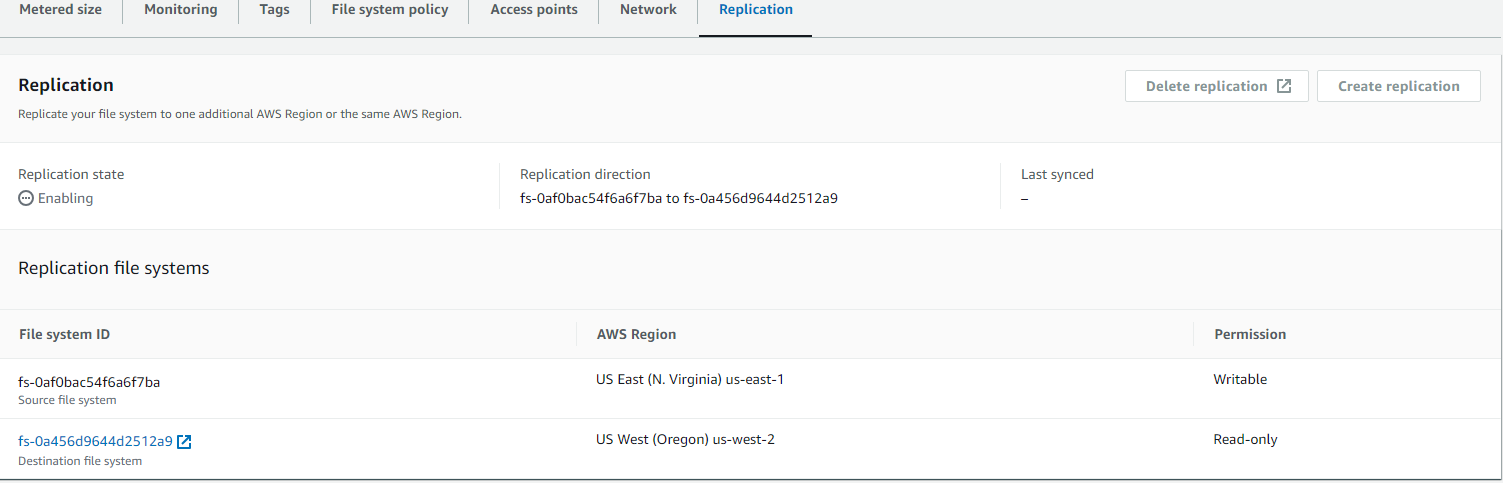

Cross-Region replication is now set up! The initial copy to the destination file system can take a while, depending on how much data the source holds.
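For automation, the same choice (new destination versus existing file system) maps to the `Destinations` entry of the `CreateReplicationConfiguration` API. This sketch builds keyword arguments for boto3's `efs.create_replication_configuration()`; the IDs and Regions are hypothetical, and EFS allows only one destination per configuration.

```python
from typing import Optional


def replication_request(source_fs_id: str, destination_region: str,
                        destination_fs_id: Optional[str] = None,
                        kms_key_id: Optional[str] = None,
                        availability_zone: Optional[str] = None) -> dict:
    """Build kwargs for efs.create_replication_configuration()."""
    destination = {"Region": destination_region}
    if destination_fs_id:
        # Replicate into an existing file system; its replication overwrite
        # protection must be disabled first.
        destination["FileSystemId"] = destination_fs_id
    else:
        # Settings for the new destination file system that EFS creates.
        if kms_key_id:
            destination["KmsKeyId"] = kms_key_id
        if availability_zone:
            # An AZ name makes the new destination a One Zone file system.
            destination["AvailabilityZoneName"] = availability_zone
    return {"SourceFileSystemId": source_fs_id, "Destinations": [destination]}


new_dest = replication_request("fs-0123456789abcdef0", "us-east-1")
existing_dest = replication_request("fs-0123456789abcdef0", "us-east-1",
                                    destination_fs_id="fs-0fedcba9876543210")
print(new_dest)
print(existing_dest)
```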

4. Monitor Replication

Once replication starts, you can monitor it in the Amazon EFS console, which shows the replication status and when the last sync completed, or in Amazon CloudWatch through the TimeSinceLastSync metric.
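If you prefer a script, boto3's `efs.describe_replication_configurations()` returns each destination's `Status` and `LastReplicatedTimestamp` (field names as documented at the time of writing). This sketch computes replication lag from such a response; the sample response and the fixed `now` are hypothetical so the example is deterministic.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


def replication_lag(response: dict, now: datetime
                    ) -> List[Tuple[str, str, Optional[timedelta]]]:
    """Return (destination file system, status, time since last sync)."""
    results = []
    for replication in response.get("Replications", []):
        for dest in replication.get("Destinations", []):
            last = dest.get("LastReplicatedTimestamp")
            # None means no sync has completed yet (e.g. initial copy running).
            lag = now - last if last else None
            results.append((dest["FileSystemId"], dest["Status"], lag))
    return results


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
sample = {
    "Replications": [{
        "SourceFileSystemId": "fs-0123456789abcdef0",
        "Destinations": [{
            "FileSystemId": "fs-0fedcba9876543210",
            "Region": "us-east-1",
            "Status": "ENABLED",
            "LastReplicatedTimestamp": now - timedelta(minutes=7),
        }],
    }]
}
for fs_id, status, lag in replication_lag(sample, now):
    print(fs_id, status, lag)
```

Against a live account you would pass the real API response and `datetime.now(timezone.utc)` instead.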

5. Failover and Failback

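In case of a regional outage, you can fail over to the replicated file system in the destination Region:

Delete the replication configuration. The destination file system is read-only while replication is active, and deleting the configuration makes it writable.

Mount the replicated EFS file system on your EC2 instances in the destination Region.

After the outage is resolved, you can fail back by reversing the replication: replicate from the former destination into your original file system (with the original file system's replication overwrite protection turned off), then move your applications back.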
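The failover and failback sequence can be written down as an ordered list of EFS API calls. This sketch only produces the plan (Region, boto3 `efs` method name, keyword arguments) rather than executing it; the IDs and Regions are hypothetical, and calling the delete step from the destination Region during an outage is an assumption to confirm in the EFS documentation.

```python
from typing import List, Tuple


def failover_plan(source_fs_id: str, source_region: str,
                  destination_fs_id: str, destination_region: str
                  ) -> List[Tuple[str, str, dict]]:
    """Ordered (region, efs method name, kwargs) steps."""
    return [
        # Failover: deleting the replication configuration makes the
        # read-only destination writable (assumed callable from the
        # destination Region while the source Region is down).
        (destination_region, "delete_replication_configuration",
         {"SourceFileSystemId": source_fs_id}),
        # Applications now mount destination_fs_id in destination_region.
        # Failback, once the original Region is healthy: allow the original
        # file system to be overwritten...
        (source_region, "update_file_system_protection",
         {"FileSystemId": source_fs_id,
          "ReplicationOverwriteProtection": "DISABLED"}),
        # ...and replicate the current data back into it.
        (destination_region, "create_replication_configuration",
         {"SourceFileSystemId": destination_fs_id,
          "Destinations": [{"Region": source_region,
                            "FileSystemId": source_fs_id}]}),
    ]


plan = failover_plan("fs-0123456789abcdef0", "us-west-2",
                     "fs-0fedcba9876543210", "us-east-1")
for region, method, kwargs in plan:
    print(f"{region}: efs.{method}(**{kwargs})")
```

Once the reverse replication has caught up, you would delete it and recreate the original configuration from us-west-2 to us-east-1.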

6. Stop Replication (Optional)

If you no longer require replication:

Open the Amazon EFS Console.

Select the source file system and delete its replication configuration.

This stops replication. The destination file system keeps the data replicated up to that point and becomes a standalone, writable file system.

Conclusion

Amazon EFS provides a robust and scalable file storage solution for a wide range of use cases, from web serving to big data analytics. By using EFS’s various storage classes, you can optimize costs based on your data’s access patterns. Additionally, with EFS Replication, you can ensure your data is protected and available even in the event of regional disasters.

Setting up cross-region replication ensures data availability and business continuity, making Amazon EFS an excellent choice for enterprises requiring scalable, reliable, and highly available storage.

Want to learn more or collaborate on AWS topics? Feel free to get in touch!


Written by

Venkata Pavan Vishnu Rachapudi


I'm Venkata Pavan Vishnu, a cloud enthusiast with a strong passion for sharing knowledge and exploring the latest in cloud technology. With 3 years of hands-on experience in AWS Cloud, I specialize in leveraging cloud services to deliver practical solutions and insights for real-world scenarios. Whether it's through engaging content, cloud security best practices, or deep dives into storage solutions, I'm dedicated to helping others succeed in the ever-evolving world of cloud computing. Let's connect and explore the cloud together!