Intro to Docker: Why Containerization Matters for Developers

Hemanth Gangula

Have you ever felt the frustration of an app that works perfectly on your machine but falls flat when you try to run it elsewhere?

You spend hours troubleshooting, only to find that the issue is due to differences in environments—mismatched dependencies, conflicting configurations, or incompatible operating systems. It’s a common problem in software development that wastes time and energy.

What If Your App Worked Everywhere?

Imagine if you could package your application so it runs flawlessly no matter where it’s deployed. What if “it works on my machine” actually meant “it works everywhere”? That’s where Docker steps in.

VM Architecture vs. Containerization Architecture:

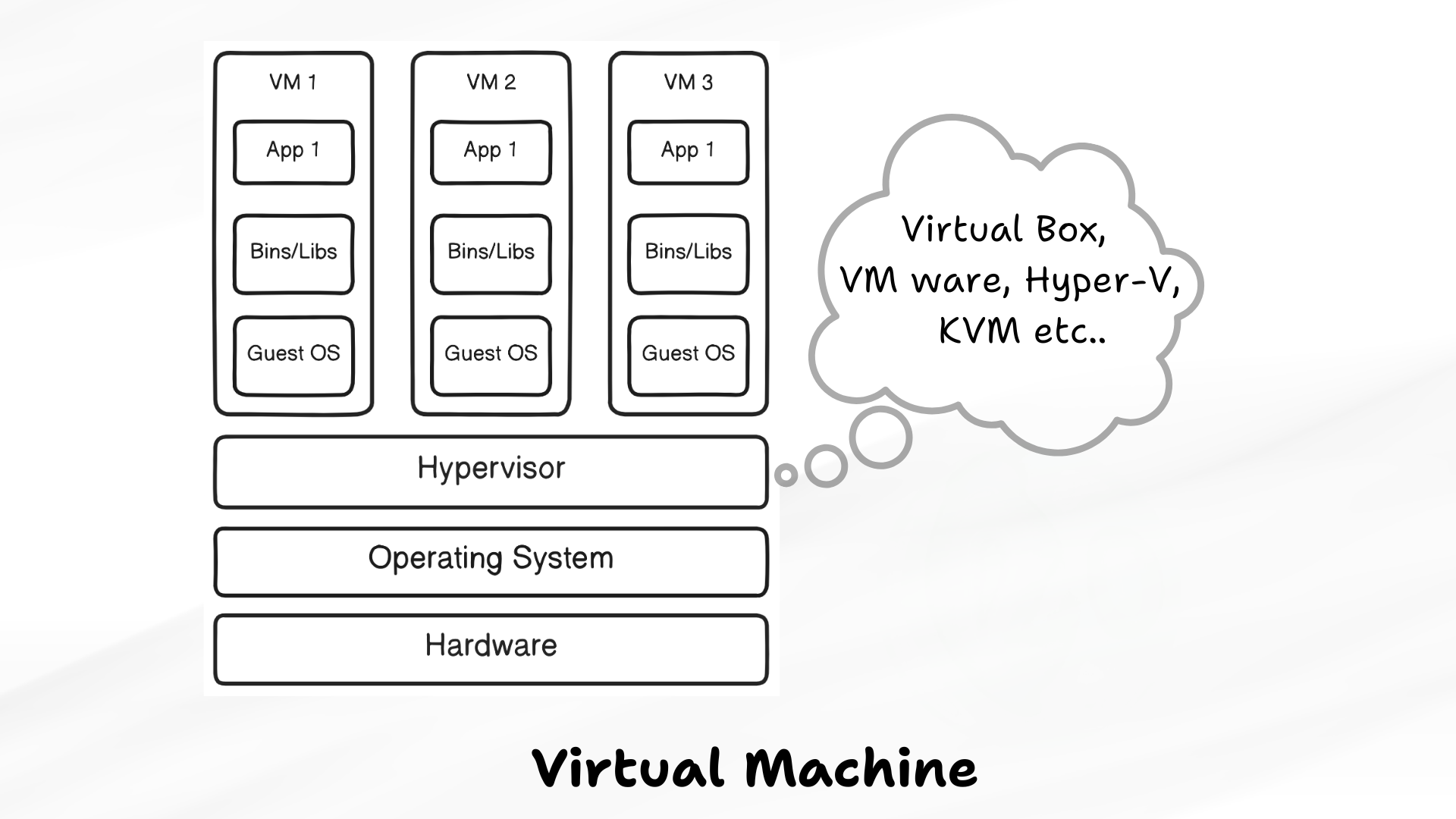

Before we dive deep into Docker, it’s important to understand the difference between how we used to deploy applications with Virtual Machines (VMs) and how we do it now with containers. Let’s break it down simply.

What Is VM Architecture?

Think of a Virtual Machine as a complete computer running inside your computer. Each VM comes with its own operating system, storage, and applications. It’s like giving each app its own mini-world to live in, but here’s the catch: each world carries the weight of an entire operating system, which takes up a lot of resources.

Example:

Say you’re running two apps on a single server using VMs. Each VM needs its own full operating system, so you end up duplicating the OS for each app. This means you’re using extra CPU, memory, and storage for every VM—even though they’re doing similar tasks. Not very efficient, right?

Key Points:

Resource Hog: Each VM includes a full OS, making them resource-heavy.

Full Isolation: VMs are fully isolated, which is great for security but not so great for your machine’s resources.

Slow Start: VMs can take a while to start up because they have to boot up their own OS.

Hard to Move: VMs are big and clunky to move from one place to another.

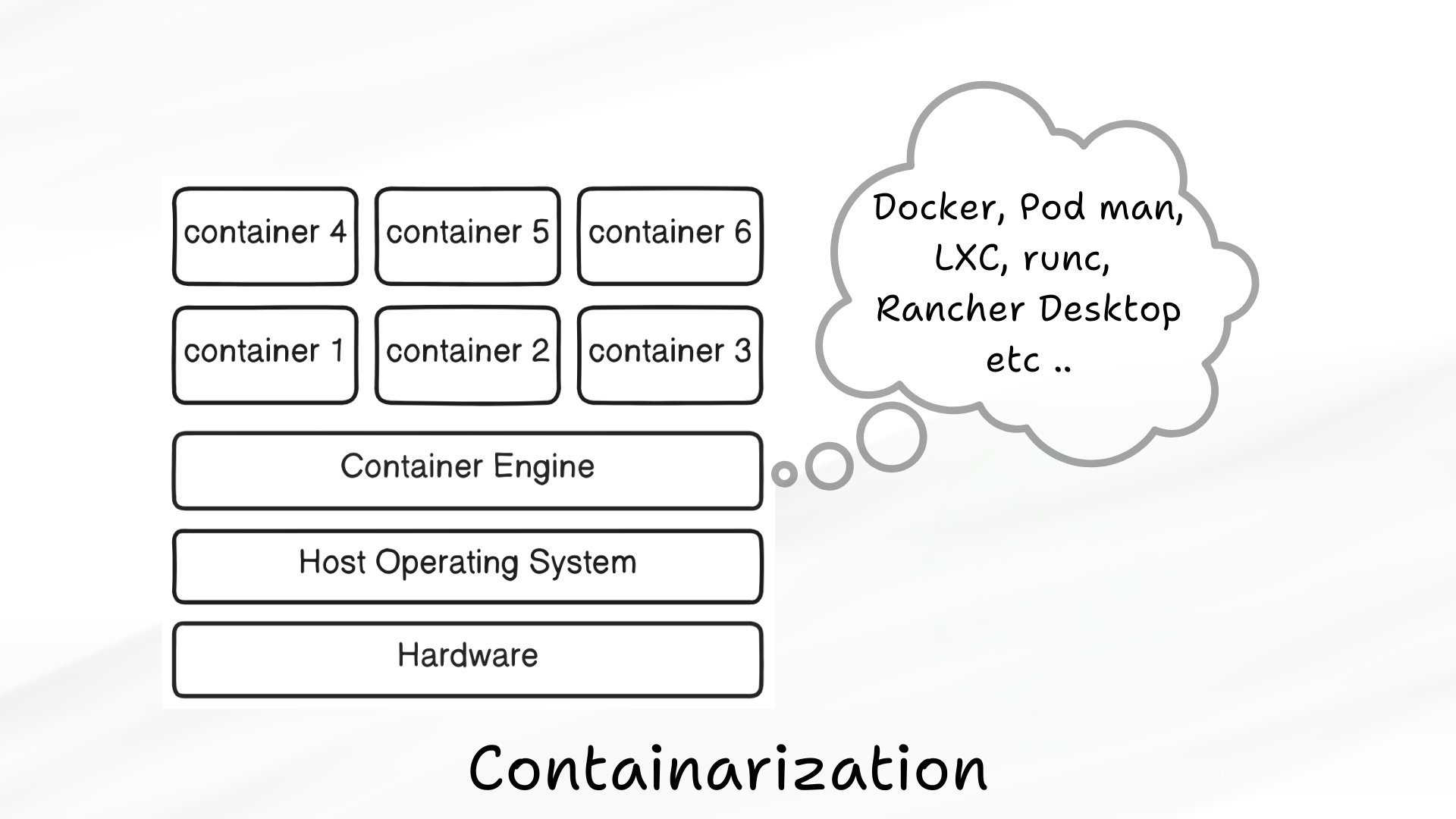

What About Containers?

Now let’s talk about containers. Unlike VMs, containers don’t need their own OS; they share the host operating system’s kernel. Containers are like lightweight, self-contained boxes that hold just the app and its essentials. They use far fewer resources because they don’t carry the extra baggage of a full OS. We'll return to this in the sections below.

In the image above, each container represents an application or a feature of an application, which is particularly useful in a microservices architecture. This allows individual features to be scaled independently. For example, in an e-commerce platform, many users browse products, but only a few proceed to purchase. In this case, the container handling payments can be scaled differently from the one managing product views. Each container includes only the necessary libraries and dependencies, which makes it lightweight and resource-efficient.
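To make the independent-scaling idea concrete, here is a minimal sketch of how you might size each service separately. The traffic figures, the per-container capacity, and the `replicas_needed` helper are all assumptions made up for illustration; they are not measurements and not part of any Docker API.

```python
import math

# Hypothetical capacity: requests per second one container can serve (assumed).
REQUESTS_PER_REPLICA = 100


def replicas_needed(requests_per_second: int, per_replica: int = REQUESTS_PER_REPLICA) -> int:
    """Return how many container replicas cover the given load (always at least one)."""
    return max(1, math.ceil(requests_per_second / per_replica))


# Assumed traffic: many users browse products, few reach checkout.
load = {"product-views": 950, "payments": 40}

# Each service gets its own replica count, independent of the others.
plan = {service: replicas_needed(rps) for service, rps in load.items()}

for service, count in plan.items():
    print(f"{service}: {count} replica(s)")
```

With these assumed numbers, the product-views service gets ten replicas while payments stays at one, which is the kind of per-service sizing that is awkward when every feature lives in one large deployment.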

Example:

If you run the same two apps with Docker containers, both apps share the same OS. This means you avoid duplicating the operating system, and your apps run in their own little bubbles, using just what they need.

Key Points:

Light and Fast: Containers share the host OS, so they use fewer resources than VMs.

Quick to Start: Containers start almost instantly because there’s no OS to boot.

Portable: Containers are easy to move around, making deployment a breeze.
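The two-app example can be turned into rough arithmetic. The sketch below uses made-up memory figures (they are assumptions, not benchmarks) and leaves out the host OS itself, since it is needed in both setups.

```python
# All figures are assumptions chosen for illustration, not measurements.
GUEST_OS_MB = 1024  # assumed memory a full guest OS needs inside each VM
APP_MB = 256        # assumed memory each app needs
NUM_APPS = 2        # the two apps from the example above


def vm_total_mb(apps: int) -> int:
    """Each VM carries its own guest OS in addition to the app."""
    return apps * (GUEST_OS_MB + APP_MB)


def container_total_mb(apps: int) -> int:
    """Containers share the host kernel, so only the apps themselves are counted."""
    return apps * APP_MB


vm = vm_total_mb(NUM_APPS)
containers = container_total_mb(NUM_APPS)
print(f"VMs: {vm} MB, containers: {containers} MB, saved: {vm - containers} MB")
```

The exact savings depend entirely on the real workloads, but the shape of the calculation stays the same: the guest OS cost is paid once per VM and not at all per container.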

The Big Picture: VM vs. Containers

For beginners, here’s the takeaway:

VMs are like heavy-duty suitcases that carry everything, including an OS, which makes them slow and resource-hungry.

Containers, on the other hand, are like carry-on bags—light, fast, and perfect for getting your apps where they need to go without all the extra weight.

Meet Docker: The Game-Changer

Docker is a game-changer in the world of software development. It’s a platform that uses containers—self-contained environments that bundle everything your app needs to run smoothly. With Docker, you ensure that your app behaves the same in development, testing, and production, putting an end to the "works on my machine" issue.

Why Docker is Taking Over

Docker’s popularity isn’t a fluke. It’s become essential in modern software development, especially for DevOps and cloud-native applications. In this article, we’ll dive into why Docker is so valuable, how it tackles key issues in software deployment, and why you should be excited to use it. Whether you're new to Docker or looking to deepen your understanding, you’re in the right place. Let’s explore how Docker can revolutionize the way you build and deploy applications.

Is Docker the Only Containerization Tool?

Not at all! While Docker is currently the superstar of containerization, it’s important to remember that in DevOps, the focus is on the concept of containerization, not just the tool. There are other powerful players in the game, like Podman, Buildah, LXC (Linux Containers), and CRI-O. Each has its own strengths and quirks, offering developers different ways to manage containers.

The key takeaway? It’s not just about learning Docker—it's about understanding how containerization works. Tools will come and go, evolving as technology advances, but the core concept remains essential. So while Docker is a great place to start, the skills you pick up will serve you well, no matter which tool becomes the next big thing.

Importance of Understanding Docker

Imagine a world where your applications run smoothly, no matter where they’re deployed—be it your local machine, a staging server, or the cloud. Docker makes this possible by eliminating environment inconsistencies, ensuring your code works everywhere.

Understanding Docker is crucial because it simplifies deployments, enhances collaboration, and unlocks new levels of scalability. Whether you're working with microservices, cloud-native architectures, or just need a reliable development environment, Docker provides the tools to streamline your workflow and reduce deployment risks.

Mastering Docker positions you as a versatile and valuable contributor, equipped to handle everything from local development to production deployments. It's not just a tool—it's a game-changer in modern software development, and learning it opens doors to efficiency, consistency, and innovation.

Overview of Containerization

Imagine if you could package your entire application (code, libraries, dependencies, and all) into a single, portable unit that runs consistently across any environment. That’s the essence of containerization, a breakthrough that has revolutionized how we deploy software.

What is Containerization?

Containerization is a method of bundling an application and its dependencies into a "container," ensuring that it runs the same way regardless of where it’s deployed. Unlike traditional software deployment, where applications are tied to specific operating systems and configurations, containers are lightweight, portable, and isolated. This means no more “it worked on my machine” problems: containers bring consistency and reliability to software development.

In traditional deployments, applications might be affected by differences in environments, leading to compatibility issues. Containerization solves this by encapsulating everything the application needs to function, creating a self-sufficient unit that works in any environment—from a developer's laptop to a cloud server.
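To show what "encapsulating everything the application needs" can look like, here is a small sketch that prints a minimal Dockerfile for a Python app. The `render_dockerfile` helper and the file names `requirements.txt` and `app.py` are hypothetical and exist only for this example; the instructions it emits (`FROM`, `WORKDIR`, `COPY`, `RUN`, `CMD`) are standard Dockerfile instructions.

```python
def render_dockerfile(base_image: str, dependency_file: str, entrypoint: str) -> str:
    """Return the text of a minimal Dockerfile for a Python application."""
    lines = [
        f"FROM {base_image}",                                    # base image with the runtime
        "WORKDIR /app",                                          # working directory in the image
        f"COPY {dependency_file} .",                             # copy the dependency list first
        f"RUN pip install --no-cache-dir -r {dependency_file}",  # install dependencies
        "COPY . .",                                              # copy the application code
        f'CMD ["python", "{entrypoint}"]',                       # command run at container start
    ]
    return "\n".join(lines) + "\n"


# Assumed inputs: an official Python base image and two hypothetical project files.
dockerfile = render_dockerfile("python:3.12-slim", "requirements.txt", "app.py")
print(dockerfile)
```

The runtime, the libraries, and the code all end up inside one image, so whoever runs it does not need to install any of them separately.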

Understanding containerization is crucial because it forms the backbone of modern software deployment, enabling faster, more efficient, and more reliable application delivery. As you dive into Docker, you’ll see just how transformative this approach can be.

History and Evolution of Containerization

To truly appreciate Docker, it’s helpful to understand the journey that brought us here. Containerization may seem like a modern innovation, but its roots stretch back decades.

Brief History of Containerization

The concept of isolating applications in lightweight environments dates back to 1979, when chroot was introduced in Unix and made it possible to give a process its own isolated view of the file system. This laid the groundwork for more advanced tools that arrived in the 2000s, such as Solaris Zones and Linux Containers (LXC), which offered better process isolation and resource management.

Key Milestones Leading to Docker

The real game-changer came in 2013 with the introduction of Docker. Building on the foundations of earlier technologies, Docker made containerization accessible and practical for developers everywhere. Its ease of use, combined with powerful features like Docker Hub for sharing container images, rapidly propelled Docker to the forefront of modern software development.

Docker’s introduction marked a significant evolution in the software industry, transforming how applications are built, shipped, and run. It took containerization from a niche tool to a mainstream technology, essential for DevOps, microservices, and cloud-native applications.

Understanding this history gives you insight into why Docker is so impactful today, and how it became the go-to solution for containerization in the tech world.

How Containerization Works

Containerization is a method of packaging an application and its dependencies into a self-contained unit known as a container. Here's a brief overview:

Self-Contained Packages: Containers bundle everything your application needs (code, runtime, libraries, and dependencies) into a single, portable unit.

Operating System Sharing: Unlike traditional virtual machines that require their own OS, containers share the host system’s operating system kernel. This makes them lightweight and efficient, reducing overhead and speeding up execution.

Isolation: Each container runs in its own isolated environment, ensuring that it doesn’t interfere with other containers or the host system. This isolation is crucial for maintaining the consistency and stability of applications.

Portability: Containers are highly portable, meaning the same image can move across development, testing, and production without changes, as long as each host provides a compatible kernel and CPU architecture. This keeps the application's behavior consistent from one environment to the next.
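One way to see the operating system sharing described above is to compare the kernel version on the host with the one reported inside a container. The sketch below is only an illustration: the `docker_run_command` helper is a hypothetical name, the `alpine` image is an assumed choice, and the container is started only if the Docker CLI is installed.

```python
import platform
import shutil
import subprocess


def docker_run_command(image: str, *args: str) -> list[str]:
    """Build a `docker run` invocation that removes the container when it exits."""
    return ["docker", "run", "--rm", image, *args]


host_kernel = platform.release()
print(f"Host kernel: {host_kernel}")

# Ask a small container for its kernel release with `uname -r`.
cmd = docker_run_command("alpine", "uname", "-r")
print("Command:", " ".join(cmd))

# Only attempt the run when the Docker CLI is available; otherwise skip it.
if shutil.which("docker"):
    try:
        result = subprocess.run(cmd, capture_output=True, text=True, timeout=120)
    except subprocess.TimeoutExpired:
        print("docker run timed out; skipping the comparison.")
    else:
        if result.returncode == 0:
            print(f"Kernel seen inside the container: {result.stdout.strip()}")
        else:
            print("docker run failed:", result.stderr.strip())
else:
    print("Docker CLI not found; skipping the container run.")
```

On a Linux host the two values should match, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, the container reports the kernel of the Linux virtual machine that Docker runs behind the scenes.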

Benefits of Docker Over Traditional Virtual Machines (VMs)

Docker offers several advantages over traditional virtual machines (VMs), making it a preferred choice for modern software development and deployment:

Resource Efficiency:

Lightweight: Docker containers share the host OS kernel, eliminating the need for a full guest OS, which reduces CPU, memory, and storage usage.

Higher Density: You can run more containers on the same hardware compared to VMs, maximizing resource utilization.

Faster Startup Times:

Instant Initialization: Containers can start almost instantly, unlike VMs that require time to boot an entire operating system.

Quick Scaling: This speed allows for rapid scaling of applications to meet demand.

Portability and Consistency:

Write Once, Run Anywhere: Docker containers are portable across different environments, ensuring your application runs consistently on any platform—be it your laptop, a testing environment, or production servers.

Environment Consistency: Docker eliminates the "it works on my machine" problem by providing a consistent environment across the development lifecycle.

Simplified CI/CD Pipelines:

Streamlined Workflows: Docker integrates smoothly into continuous integration/continuous deployment (CI/CD) pipelines, automating the building, testing, and deployment of containers.

Reduced Complexity: By using Docker, teams can avoid the complexities associated with managing multiple VMs for different stages of the development process.

Scalability and Flexibility:

Microservices Architecture: Docker is ideal for deploying microservices, allowing you to scale individual components of your application independently.

Dynamic Resource Allocation: Docker's lightweight nature enables dynamic allocation of resources, making it easier to manage large-scale applications.

Simplified Maintenance and Updates:

Rolling Updates: Docker makes it easy to update applications with minimal downtime. In Swarm mode, for example, services support rolling updates and automated rollback.

Isolation: Each container is isolated, so updating one service doesn’t affect others, reducing the risk of system-wide failures.
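The rolling update idea from the list above can be sketched as a toy model. This is not how Docker implements it; the `rolling_update` function, the version labels, and the health check are all hypothetical and only show the logic of replacing replicas in batches and rolling back when a batch turns out to be unhealthy.

```python
from typing import Callable


def rolling_update(
    replicas: list[str],
    new_version: str,
    is_healthy: Callable[[str], bool],
    batch_size: int = 1,
) -> list[str]:
    """Replace versions batch by batch; return the original list if a batch is unhealthy."""
    original = list(replicas)
    current = list(replicas)
    for start in range(0, len(current), batch_size):
        # Update one batch while the remaining replicas keep serving traffic.
        for i in range(start, min(start + batch_size, len(current))):
            current[i] = new_version
        # If the new version fails its health check, roll everything back.
        if not is_healthy(new_version):
            return original
    return current


fleet = ["v1", "v1", "v1"]

# A healthy release replaces every replica.
print(rolling_update(fleet, "v2", is_healthy=lambda version: True))

# A release that fails its (assumed) health check leaves the fleet on v1.
print(rolling_update(fleet, "v3-broken", is_healthy=lambda version: "broken" not in version))
```

Because only one batch changes at a time, a bad release is caught early and the remaining replicas never leave the known-good version.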

Conclusion

In this article, we've explored the foundational concepts of Docker and containerization, highlighting why they are transformative in modern software development.

Recap of Key Points:

Containerization offers a powerful way to package applications and their dependencies into lightweight, portable units, ensuring consistent performance across different environments.

Docker takes containerization to the next level, making it accessible, efficient, and integral to DevOps practices.

Benefits Over VMs: Docker’s advantages over traditional virtual machines are clear—faster startup times, resource efficiency, and portability make it a game-changer for deploying and scaling applications.

With Docker, 'It works on my machine!' is no longer an excuse—now it works everywhere, so you're officially out of alibis!

Next, we'll explore Docker's key concepts—Images, Containers, and Docker Engine—and how they work together. Stay tuned for practical insights and examples to help you start using Docker effectively!

Written by

Hemanth Gangula

🚀 Passionate about cloud and DevOps, I'm a technical writer at Hashnode, dedicated to crafting insightful blogs on cutting-edge topics in cloud computing and DevOps methodologies. Actively seeking opportunities in the DevOps domain, I bring a blend of expertise in AWS, Docker, CI/CD pipelines, and Kubernetes, coupled with a knack for automation and innovation. With a strong foundation in shell scripting and GitHub collaboration, I aspire to contribute effectively to forward-thinking teams, revolutionizing development pipelines with my skills and drive for excellence. #DevOps #AWS #Docker #CI/CD #Kubernetes #CloudComputing #TechnicalWriter