Phaser World Issue 202

Richard Davey

Welcome to Issue 202 of Phaser World. As we enter the final weeks of October, we bring you new releases, new games, and yet another vast Dev Log section. An incredible amount is going on at Phaser Studio right now and we do our best to sum it all up in this newsletter each week. So please read, and if you’ve any questions just ask us on Discord - we’re only too happy to chat.

🌟 Phaser Editor 4.5.1 Released

An important point release of Phaser Editor that solves several high-priority issues.

We are happy to announce the immediate availability of Phaser Editor 4.5.1. We felt it was important to release this update to address some priority issues.

Fixes the 'white screen of death' issue, where the Editor would hang on a white screen after opening a project if the server was slow to start.

Fixes tilemap pixel rounding issue in the Scene Editor.

Fixes the npm install command when the proxy is configured but disabled.

Fixes the variable name generation.

We have also taken the opportunity to update the editor to the latest version of Phaser, version 3.86.

🎮 Honey Snap

Guide bees to victory in this sweet, honey-filled challenge that will keep you buzzing for more.

Honey Snap, besides sounding like a tasty breakfast cereal, is an addictive new game from Black Moon Design. You're presented with a grid of hexagons set into the bark of a tree. Below it are honey-filled shapes that you must drag into the grid. If you manage to complete a line in any direction, it vanishes in exchange for lots of points.

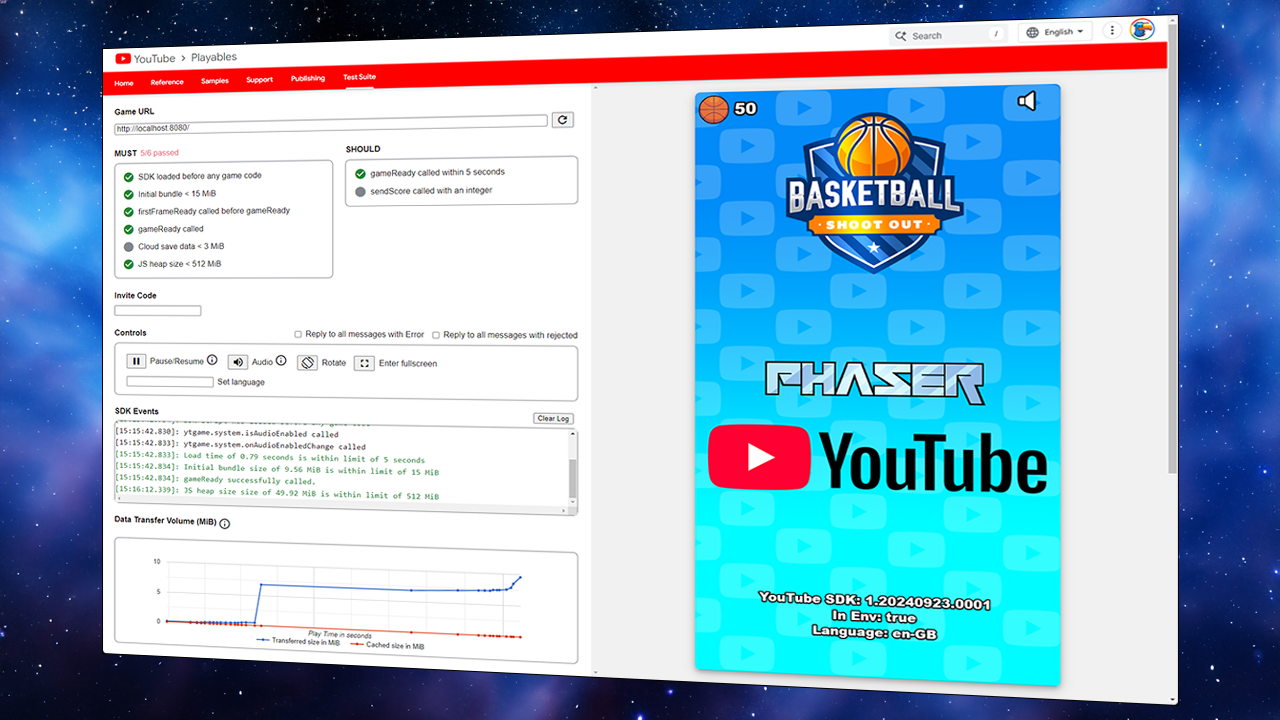

🎙️ YouTube Playables Phaser Tutorial

Learn how to create YouTube Playables with our extensive new tutorial.

Who would have thought YouTube would become such a popular web gaming platform? That's precisely what it has done with its YouTube Playables service. After a successful beta period, users of the YouTube site or app worldwide can now engage in an extensive range of web gaming titles, and developers we've spoken to have seen great success with them.

Of course, being web-first, Phaser is an excellent choice for creating games for submission to the YouTube Playables content team. We have published a brand new YouTube Playables Template and a comprehensive tutorial to make that process as easy as possible. The template includes a complete game, our Playables SDK wrapper, and full documentation. It is now available directly from the Google web samples repository.

To help you get the most from our template, we've also written a detailed new tutorial that covers the SDK and the Test Suite and how to use them in depth. So now you have the perfect reason to enhance your Phaser games into compelling YouTube Playables and introduce them to millions of new gamers.

Read the YouTube Playables Tutorial

🎮 Gloomyvania

A nostalgic platformer filled with challenging enemies and classic arcade vibes.

Gloomyvania is a lovely homage to the classic NES Castlevania series. In this arcade platformer, you run and 'gun' to destroy a variety of creepy enemies as you explore the castle.

💰 Let us publish your Phaser Games

Got a Phaser game you’d like published? Get in touch!

At Phaser Studio, we’re all about empowering developers to create great games. By focusing on the framework and tools to allow this, we’ve seen thousands of games released over the years, many of which went on to critical and commercial success.

And we’ve noticed the publishing landscape is changing yet again. New platforms are opening up that offer real opportunities for the right games, and it’s something we’re deeply invested in.

So - if you’ve developed a Phaser game you feel would benefit from wider distribution to exciting new platforms, then we’d love to hear from you. Please email a link to your game or games portfolio to bizdev@phaser.io, and we’ll be in touch.

Phaser Studio Developer Logs

👨💻 Rich

When I sat down to write my Dev Log, I thought about all the really exciting things that had happened this week. Then I realized I can’t talk about most of them yet! We sign NDAs with our partners for a reason - so I’ll just have to bottle it up until such time as we can shout about it. Back in the realm of things I can actually discuss, we are getting close to the release of several new products. Can talks about his Discord Activities tool in his Dev Log this issue, and once we complete some more testing and documentation, we will release version 1 of it. There are many cool things this tool will evolve to do in the future, but for now, it’ll be good to have the first release live.

I’ve also been working on the new documentation site with Zeke. This has been a huge amount of work, but it will be so worth it. We have a couple of loose ends to tie up on Monday, and then we can make it live. The site is a combination of lots of things. It’s a replacement for our API Documentation that currently lives at newdocs.phaser.io - we have redone all of this, and it’s now easier to browse and search than before. We have also brought together lots of written content and authored pages and pages of new material. This includes guides that I’ve written, and we’re also using some of the fantastic work of Samme and RexRainbow, obviously with full permission and attribution. This revised content allows developers to read about concepts, internal systems, and ‘how things work’ in one single location, without having to try and extract it all from the API docs, which isn’t even possible in many cases. The docs site even has an ‘AI Search’, so you can use natural language prompts to query the contents. Having this live will be massive and I cannot wait.

Remaining on the tutorial front, I also finished my comprehensive tutorial on creating YouTube Playables using Phaser. This was featured earlier in this newsletter, so I won’t go over it again here; suffice to say it was great to finally publish it. The tutorial comes with a feature-packed template and a full sample game. It was fun to actually work on a game again, and even more fun to then see it merged into the official Google repositories 😀 Working with the team at YouTube has been a great experience. We’re excited to see where that will evolve in the future.

This week I’ve also been working with the team at Werplay. They’ve years of experience creating amazing games, and as they are one of Phaser’s enterprise customers, we are helping them build a playable ads template that fits their specific requirements. Doing this has been a nice mental shift for me, and it has also led to some new features landing in the Phaser codebase that will benefit everyone, so it’s a double win! We create private Slack or Discord channels for our enterprise clients so they can get direct support from the team for their projects. If this is something that would interest you, please drop me an email.

In the coming days, we should have a new Technical Preview of Phaser Beam, the new docs site live, and the first release of the Discord Activities tool. Oh... and just maybe something else pretty cool 😎

That’s it for now. This is yet another huge newsletter, so keep scrolling to read what the rest of the team has been doing — it’s pretty epic!

👨💻 Zeke - New Phaser Docs Site

This week was focused on adding the remaining documentation and cleaning up all previous documentation!

Documents added:

- Blend Mode, Blitter, Color, Container, Device, DOM Element, FX, Game, Group, Image, Layer, Light, Mask, Nine Slice, Particles, Plane, Render Texture, Rope, Scale Manager, Shader, Sprite, Text, Tile Sprite and Video.

The clean-up has been completed by:

- going through every page to make sure everything makes sense and is readable
- correcting spelling and grammar
- checking links to ensure they all work and point to the right destination
- checking examples to ensure the code actually works
- standardizing formatting across all pages on the site

That's all for the updates this week. If everything goes according to plan, the new documentation site should be ready by the end of the week.

👨💻 Can - Discord Activities Tooling

This week, I've made significant strides in finalizing the Discord Activities integration.

Upgrade to Tauri v2

I upgraded the project from Tauri v1 to v2. The biggest challenge was the new permission system for plugins, especially on macOS. It took more time than expected due to limited documentation. But rest assured, everything is now running smoothly!

Streamlined Discord Developer Portal Access

I've added a direct link to your Discord Developer Portal within the app. It fetches your Discord APP_ID and takes you straight to the URL mapping page. The generated proxy URL is auto-copied, just paste and save to profit!

More Polishing

To improve user experience, I've implemented alert boxes that inform you of any errors or successful events without disrupting your workflow.

Almost There

We're wrapping up the installer systems. With a few more tweaks, it will be ready for its first release!

Until then, happy coding everyone!

👨💻 Pete - Box2D Physics

On Monday I tracked down some strange numbers in the dynamic tree, where it appeared to be faster if the tree balancing was always an even split. This was (of course) not the case, and eventually I found a nasty bug. The tumbler 1000 demo doubled in speed with this fix, so that was a lovely start to the week!

I continued to reduce the transient memory allocations, concentrating mostly on a structure called b2ManifoldPoint. I managed to reduce its allocations from over 5000 per world step to 0. The current design uses a handful of these objects, which are allocated only once, when the world is first created. In long-term mobile tests following the tree fix and the reduced garbage accumulation, we now see a solid 60 fps with 1000 boxes in the tumbler on iPhone 14 and 15. On iPhone 13 we regularly touch 60 fps, but then it drops; I think there may still be work needed on the garbage for those older devices.
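Getting transient allocations to zero usually comes down to creating objects once and recycling them every step. The TypeScript sketch below shows that pattern; ManifoldPoint here is a hypothetical stand-in with illustrative fields (not the Phaser Box2D b2ManifoldPoint), and the fixed-capacity pool is an assumption about how such reuse might be arranged.

```typescript
// Hypothetical stand-in for a contact manifold point; fields are illustrative.
class ManifoldPoint {
  x = 0;
  y = 0;
  normalImpulse = 0;
  tangentImpulse = 0;
  id = 0;

  reset(): void {
    this.x = 0;
    this.y = 0;
    this.normalImpulse = 0;
    this.tangentImpulse = 0;
    this.id = 0;
  }
}

// Fixed-capacity pool: every point is created once, in the constructor,
// and recycled on every world step afterwards.
class ManifoldPointPool {
  private readonly points: ManifoldPoint[];
  private used = 0;

  constructor(capacity: number) {
    this.points = Array.from({ length: capacity }, () => new ManifoldPoint());
  }

  // Hand out the next unused point, cleared of last step's data.
  acquire(): ManifoldPoint {
    if (this.used >= this.points.length) {
      throw new Error("ManifoldPointPool exhausted; increase capacity");
    }
    const point = this.points[this.used++];
    point.reset();
    return point;
  }

  // Start of a new world step: everything becomes reusable, nothing is freed.
  releaseAll(): void {
    this.used = 0;
  }

  get inUse(): number {
    return this.used;
  }
}
```

Because releaseAll only rewinds a counter, a step that needs no more points than the pool holds creates no new objects for the garbage collector to track.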

I've revisited the frame timing system and given it the ability to catch up a dropped frame or two if the previous frame ran at or above the physics simulation's fixed frame rate (typically 60 fps). This approach smooths out temporary glitches like the ones we see on mobile while the device 'does something else', without getting into a death spiral where each catch-up frame triggers another one.
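A fixed-timestep loop with bounded catch-up can be sketched as follows. This is not Phaser Box2D's code: the FixedStepper name, the default cap of two extra steps, the rule "catch up only if the previous frame kept pace" and the 10% jitter tolerance are all assumptions chosen to illustrate the idea.

```typescript
// Sketch of a fixed-timestep stepper with limited catch-up (hypothetical names).
class FixedStepper {
  private accumulator = 0;
  private previousFrameOnTime = true;
  private readonly step: (dt: number) => void;
  private readonly fixedDt: number;
  private readonly maxCatchUpSteps: number;

  constructor(step: (dt: number) => void, fixedDt = 1 / 60, maxCatchUpSteps = 2) {
    this.step = step;
    this.fixedDt = fixedDt;
    this.maxCatchUpSteps = maxCatchUpSteps;
  }

  // frameDt: real time in seconds since the last frame.
  // Returns the number of physics steps taken this frame.
  advance(frameDt: number): number {
    this.accumulator += frameDt;

    // One step is always allowed; extra catch-up steps only if the previous
    // frame kept pace. After a slow frame the next frame gets no catch-up,
    // so catch-up work cannot keep feeding itself (no death spiral).
    const allowed = this.previousFrameOnTime ? 1 + this.maxCatchUpSteps : 1;

    let steps = 0;
    while (this.accumulator >= this.fixedDt && steps < allowed) {
      this.step(this.fixedDt);
      this.accumulator -= this.fixedDt;
      steps++;
    }

    // Drop any backlog we were not allowed to simulate instead of carrying it over.
    if (this.accumulator >= this.fixedDt) {
      this.accumulator = 0;
    }

    // "On time" allows 10% jitter over the fixed step (assumed tolerance).
    this.previousFrameOnTime = frameDt <= this.fixedDt * 1.1;
    return steps;
  }
}
```

Dropping the surplus time means the simulation briefly runs slower than real time during a long stall, which is usually preferable to a burst of catch-up steps that makes the next frame slower still.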

More optimisations, more memory management changes... tumbler 1000 is no longer a useful test and I've stepped up to tumbler 1250 even on mobile devices.

A note about mobile devices: performance on iPhone is significantly better than on Android. Even the more powerful Android devices on BrowserStack are significantly slower than the older iPhones, and I'm not sure how to rectify this yet. Everything runs well on both Android and iPhone, but on Android the timing values are almost double. My understanding (from a Tom's Hardware phone comparison) is that the CPU should be entirely capable of keeping up, so maybe I'm hitting RAM too hard, or maybe there's another element at play. I'll continue to experiment and see if I can work out how to reach parity.

Later in the week I ran the full range of tests to make sure nothing had broken during my heavy-handed optimisation passes. Three of the eight were broken, so I spent some time with GitHub, reverting to older commits and retesting to isolate the commit that broke things. In this way I located four 'silly' bugs (x instead of y, min instead of max, that type of thing) and one extremely sneaky bug that was well hidden inside the manifold creation system. With these all fixed, all my tests are running again, although I am facing an issue where things rarely and randomly explode upwards. I'm calling it the 'popcorn bug' and I'll dig into it next week (with plenty of salt).

I took two days to experiment with WASM via AssemblyScript. I'd love to squeeze a bit more speed out of the engine, and the code structure (translated almost verbatim from the original C code) should be ideal. TL;DR: it was not good. The long version: I had only a short period of time for testing, so I chose a suitably quick goal: replace the AABB system with a drop-in, 100% WASM system. The AABB (axis-aligned bounding box) system is called a phenomenal number of times, but only from 30-40 unique places. It takes in tiny amounts of data, does some processing, then returns tiny answers. It's almost a perfect test, except that the work it does is minimal. Ideally we'd find a system with the other properties but which does some very grunty processing inside, e.g. in-situ pixel manipulation (the large amounts of data going in can be controlled by the WASM side, processing the data where it lies means we don't have to do slow conversions on the way out, and pixel manipulations are often relatively expensive per pixel).
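To show how little work each AABB query does, here is a minimal overlap test in TypeScript. The AABB interface and its field names are illustrative, not Phaser Box2D's own types. Four comparisons go in and one boolean comes out, which helps explain why a fixed per-call boundary cost could easily dominate.

```typescript
// Illustrative axis-aligned bounding box (not Phaser Box2D's type).
interface AABB {
  minX: number;
  minY: number;
  maxX: number;
  maxY: number;
}

// Boxes overlap unless one lies entirely to one side of the other.
// Touching edges count as overlapping.
function aabbOverlaps(a: AABB, b: AABB): boolean {
  return !(
    a.maxX < b.minX ||
    b.maxX < a.minX ||
    a.maxY < b.minY ||
    b.maxY < a.minY
  );
}
```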

After I'd built the entire system in AssemblyScript and compiled it to WASM, linked the modules, and modified the 30+ places that called AABB-related functions, the timing test came back 4x slower than JS alone. I put this down to the relatively high overhead of switching from JS to WASM and back again. I'd read some internet discussions that downplayed this enormously, but I think I hadn't read deeply or widely enough. On the basis of these results, I'd say you don't want to call out to WASM more than once or twice per frame, which makes the AABB system possibly one of the worst test cases I could have chosen. I would like to revisit this, but before I do, I'll need to locate a more suitable function, with much heavier internal processing, only one or two calls per frame, and a suitable data format or data requirements. To do this properly, substantial changes will need to be made to the data format used by the engine's core functions. Changing the data format will require rewriting a lot of the code that uses that data, and in a physics simulation almost all of the systems rely on the same fundamental world representation. Throwing the original C at Emscripten might be a quicker solution, although I expect there could be a host of other problems going in that direction.
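One way to respect a "once or twice per frame" budget is to change the data format so that many queries cross the boundary together. The sketch below is plain TypeScript and only illustrates the shape of such an interface: boxes packed as flat [minX, minY, maxX, maxY] runs in typed arrays, with every pair tested in a single call. The function name and layout are assumptions; in a real WASM build the loop would run on the WASM side over its own linear memory.

```typescript
// Each box occupies 4 consecutive floats: [minX, minY, maxX, maxY].
const FLOATS_PER_BOX = 4;

// Tests pair i (box i of `a` against box i of `b`) for every pair in one call,
// writing 1 (overlap) or 0 (separated) into `out`.
function overlapPairsBatch(a: Float32Array, b: Float32Array, out: Uint8Array): void {
  const pairCount = out.length;
  if (a.length < pairCount * FLOATS_PER_BOX || b.length < pairCount * FLOATS_PER_BOX) {
    throw new Error("input arrays are too short for out.length pairs");
  }
  for (let i = 0; i < pairCount; i++) {
    const o = i * FLOATS_PER_BOX;
    const separated =
      a[o + 2] < b[o] ||     // a.maxX < b.minX
      b[o + 2] < a[o] ||     // b.maxX < a.minX
      a[o + 3] < b[o + 1] || // a.maxY < b.minY
      b[o + 3] < a[o + 1];   // b.maxY < a.minY
    out[i] = separated ? 0 : 1;
  }
}
```

The per-call cost is now paid once per batch rather than once per box pair, at the price of the engine keeping its boxes in a flat, boundary-friendly layout.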

Plans for next week:

- fix the 'popcorn' bug
- make some new tests and demos stretching the engine and hunting for anything that's broken in there
- add new helper functions to the growing collection and make sure there's a meaningful example for each one
- polygon decomposition into triangle connected hulls would be lovely to have
- think about the engine in gaming terms: what features will be needed, and which ones are particularly awkward in Phaser Box2D right now

👨💻 Francisco - Phaser Launcher

Hello, everyone!

I’ve been working on adding more features to Phaser Launcher and running internal tests to explore how we can create our own packages, allowing for greater flexibility such as the option to use TypeScript if desired (though this doesn’t mean we’ll be using it).

The packaging tests with Rust have been successful; we managed to build packages for small test projects. However, there’s still a lot of work to do in this area, so we’ll continue these experiments and revisit them in the future.

As for our initial code editor, we’ve already implemented several commands like copy, paste, and delete. The key to these commands is the structure I’ve developed to handle everything smoothly.

Thanks to the use of states, we’ve separated the state management of the file system, tabs, the contextual menu, and options like reserving files for copy-paste operations. Slowly but surely, our editor is taking shape.

We also have an internal roadmap for this tool, crafted by the great Richard, filled with interesting ideas that make this application a compelling option for those just starting with our powerful framework.

👨💻 Arian - Phaser Editor

Hello friends.

It's been a while since I last wrote here. In Florida, many of us had to prepare for the impact of Hurricane Milton and then deal with the damage it caused. However, this forced pause let me step away from the computer and recharge my batteries for the next job: the map editor.

Having a tilemap editor built into Phaser Editor is a feature I've wanted for years, and we're finally working on it. Many of the games you make with Phaser are based on tilemaps, and I think this new tool will eventually replace the need to use third-party map editors, such as Tiled.

There is definitely a huge advantage to having a map editor built into the Scene Editor. In many cases, levels use not only maps but also parallax backgrounds, images of environment elements, and character sprites, and now you can handle all of this in a single tool with immediate visual feedback.

A map editor is a tool with many features and use cases, so it will take time to develop. However, I believe that we will have a first version very soon. Here are some images of what we have done this week.

Tales from the Pixel Mines

October 20th 2024, Ben Richards

This week, filters get up to speed. I've implemented several filters to check the system, and it's looking good. Let's talk about the changes you'll see in Phaser Beam when it comes to filters.

Foundations

This has been a long time coming. Phaser Beam is built around a whole set of upgrades and changes that were always intended to make it easier to use filters. But it took a while to get everything ready for the new system, so I'm ecstatic that the design is finally working.

The DrawingContext forms the backbone of rendering in Beam, but it was actually designed to keep things consistent with filters. It holds a reference to a framebuffer, and all the settings such as blend modes, camera, scissor mode, etc. When we render, the DrawingContext applies all these settings to make sure everything is properly lined up.

The WebGLGlobalWrapper is a vital companion. It performs state management, ensuring that we always know what all the bindings in WebGL are set to. It also eliminates redundant calls: if we try to set a value that wouldn't change the state, we don't do anything. Thus the DrawingContext can track everything, but doesn't need to update everything.
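The redundant-call elimination described above can be sketched as a small state cache in TypeScript. GLStateCache and GLLike are hypothetical names, and only three pieces of state are tracked; the real WebGLGlobalWrapper covers far more. GLLike mirrors the matching WebGLRenderingContext method signatures, so a real context could be passed in.

```typescript
// Only the slice of WebGL this sketch touches. The signatures match the
// corresponding WebGLRenderingContext methods, so a real context fits too.
interface GLLike {
  enable(cap: number): void;
  disable(cap: number): void;
  blendFunc(sfactor: number, dfactor: number): void;
  viewport(x: number, y: number, width: number, height: number): void;
}

const GL_BLEND = 0x0be2; // numeric value of WebGLRenderingContext.BLEND

class GLStateCache {
  private readonly gl: GLLike;
  // null / -1 mean "unknown", so the first call always reaches WebGL.
  private blendEnabled: boolean | null = null;
  private blendSrc = -1;
  private blendDst = -1;
  private currentViewport: [number, number, number, number] | null = null;

  constructor(gl: GLLike) {
    this.gl = gl;
  }

  setBlendEnabled(enabled: boolean): void {
    if (this.blendEnabled === enabled) return; // no change: skip the GL call
    if (enabled) {
      this.gl.enable(GL_BLEND);
    } else {
      this.gl.disable(GL_BLEND);
    }
    this.blendEnabled = enabled;
  }

  setBlendFunc(src: number, dst: number): void {
    if (this.blendSrc === src && this.blendDst === dst) return;
    this.gl.blendFunc(src, dst);
    this.blendSrc = src;
    this.blendDst = dst;
  }

  setViewport(x: number, y: number, width: number, height: number): void {
    const v = this.currentViewport;
    if (v && v[0] === x && v[1] === y && v[2] === width && v[3] === height) return;
    this.gl.viewport(x, y, width, height);
    this.currentViewport = [x, y, width, height];
  }
}
```

This only works if every state change goes through the cache; any code that calls WebGL directly would leave the cached values stale.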

As discussed in previous installments, I've also developed the RenderFilters object to substitute for the old FX component. This acts as a wrapper for a game object, via an internal Camera. It's the Camera that does most of the work when it comes to filters. The wrapper mainly just provides an extra transform matrix, necessary for separating the internal and external worlds of the Camera.

RenderFilters Child Transforms

The short of it: the child wrapped by RenderFilters keeps its transform. The RenderFilters' internal camera snaps to the child when it's added. This has a number of consequences.

Why do we need to focus the internal camera on the child? Well, we want the child to appear just like a texture, not as an object oriented in the game world. There are actually two ways to ensure this. The first way is to remove all transforms from the object, returning it to the origin. The second is to transform the camera to focus on the object.

Of course, I tried the worst way first. I removed transforms from the object. In hindsight, this was fighting against the natural shape of rendering. It's just so much more confusing to try to map everything back to an un-transformed object.

Focusing the camera is much better. There are problems, though.

Framebuffer Resizing

Depending on the size of the child object, a filter requests a specific size of framebuffer. But that could mean a whole lot of different sizes, across different objects and filters. Too many framebuffers could cause a crash.

We reduce framebuffer count by using a pool. Unused framebuffers can be resized to meet demand.

It turns out it's important to manually delete framebuffer and renderbuffer objects when rebuilding them. WebGL's own garbage collection, if it exists, is inadequate for frequent resizing.
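The pooling strategy above might look something like this in TypeScript. FramebufferPool and FramebufferLike are hypothetical, and the WebGL objects are abstracted away so the reuse logic stands on its own. The rebuild method is where a real implementation would explicitly call gl.deleteFramebuffer and gl.deleteRenderbuffer before creating replacements at the new size.

```typescript
interface FramebufferLike {
  width: number;
  height: number;
  inUse: boolean;
}

class FramebufferPool {
  private readonly entries: FramebufferLike[] = [];
  created = 0; // buffers ever allocated
  resizes = 0; // expensive rebuilds performed

  acquire(width: number, height: number): FramebufferLike {
    // 1. Best case: an idle buffer that already has the requested size.
    let fb: FramebufferLike | undefined = this.entries.find(
      (f) => !f.inUse && f.width === width && f.height === height,
    );

    // 2. Otherwise, resize any idle buffer rather than allocating a new one.
    if (!fb) {
      fb = this.entries.find((f) => !f.inUse);
      if (fb) {
        this.rebuild(fb, width, height);
      }
    }

    // 3. Only grow the pool when every buffer is busy.
    if (!fb) {
      fb = { width, height, inUse: false };
      this.entries.push(fb);
      this.created++;
    }

    fb.inUse = true;
    return fb;
  }

  release(fb: FramebufferLike): void {
    fb.inUse = false;
  }

  private rebuild(fb: FramebufferLike, width: number, height: number): void {
    // Real WebGL: delete the old framebuffer/renderbuffer explicitly,
    // then create and attach new ones at the new size.
    fb.width = width;
    fb.height = height;
    this.resizes++;
  }
}
```

Preferring an exact size match before resizing an idle buffer keeps the expensive rebuild path for when it is genuinely needed.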

But we don't want to resize if we don't have to. The resize operation can take half a millisecond or more, because it's creating a new framebuffer behind the scenes. In a 16 millisecond frame, that's a huge cost: just a dozen resizes already eat 6 milliseconds or more, well over a third of the frame.

So by default, we resize just once, when a child is added to a RenderFilters wrapper.

However, some objects change their size every frame. For example, a ParticleEmitter has bounds defined by its particles as they fly about. How can we deal with this?

I've added a flag to automatically resize every frame. However, because of the above reasons, this flag is disabled by default. It's not the best solution.

I've also added a flag for running preUpdate on the child every frame. This flag is on by default, though, because preUpdate is necessary for all sorts of things, from particle movement to sprite animation.

Camera Focusing

Rather than relying on automatic resizing, we can instead give the user the ability to set the size of the RenderFilters. I've added a focus(x, y, width, height) method. This focuses the internal camera such that the child is at x, y within a view sized to width, height.
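As a rough illustration of what a focus call has to work out, here is the arithmetic in TypeScript. computeFocus and FocusSettings are hypothetical, and the formulas assume a Phaser-style camera where a world point appears on screen at its position minus the camera scroll. Beam's actual focus method calls setSize, setScroll and setOrigin, and its internals may differ.

```typescript
interface FocusSettings {
  width: number;   // view / framebuffer size
  height: number;
  scrollX: number; // internal camera scroll
  scrollY: number;
  originX: number; // wrapper origin as a fraction of the view
  originY: number;
}

// childX/childY: the child's world position.
// x/y: where the child should appear inside a view of width x height.
function computeFocus(
  childX: number,
  childY: number,
  x: number,
  y: number,
  width: number,
  height: number,
): FocusSettings {
  return {
    width,
    height,
    // A world point p appears at p - scroll, so scroll = child - target.
    scrollX: childX - x,
    scrollY: childY - y,
    // Putting the wrapper's origin at (x, y) in the view lets the wrapper sit
    // on the child's world position with the child drawn where it was.
    originX: x / width,
    originY: y / height,
  };
}
```

For instance, an emitter at (400, 300) focused into the centre of a 1920 x 1080 view gives a scroll of (-560, -240) and an origin of (0.5, 0.5).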

For example, the troublesome ParticleEmitter can be set to draw to a screen-sized framebuffer, and positioned within that screen-sized view. If you know what you need the RenderFilters for, you can really customize the way it works.

It's also possible to manipulate both the camera and the child after the camera is focused. The system is flexible. Just be aware that the automatic focusing will affect the transforms and origin of the RenderFilters, and the camera perspective. The focus method is actually a wrapper around setSize, setScroll and setOrigin calls. But in most circumstances, I hope focus is the simpler choice!

This gives you more control over internal filter coverage than ever before.

Camera Alpha and Compositing Changes

What should happen when you set RenderFilters.alpha? I think it should treat the RenderFilters as a texture, and change the alpha of that texture. If the alpha is 0.5, we should see the background behind the texture at 0.5.

That's not how Phaser 3 Pipelines handle alpha on cameras. There, camera alpha multiplies the alpha of every child being rendered by the camera. This means that objects blend together. If the alpha is 0.5, two objects overlapping will add to 0.75, and we would see the background behind them at just 0.25.

Here's an example of alpha on child objects. Observe how the text is bright in some places and dark in others, depending on the game objects behind it.

And here's an example of alpha on the final composited texture. See how everything is the same level of brightness, because it's blended before alpha.

In Beam, I've changed cameras to composite their children before applying alpha. This produces more consistent results. Because filters run through a Camera, it also makes filters more consistent: anything in a RenderFilters wrapper is composited to a framebuffer texture before alpha is applied.
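The difference between the two approaches can be checked with the standard source-over rule, where a layer with alpha a lets 1 - a of whatever is behind it show through. The TypeScript below (function names are illustrative) computes how much of the background remains visible where two opaque children overlap under a camera alpha of 0.5.

```typescript
// Fraction of the background still visible after drawing layers with the
// given alphas over it, using source-over compositing (colour ignored).
function backgroundVisible(alphas: number[]): number {
  return alphas.reduce((visible, a) => visible * (1 - a), 1);
}

// Phaser 3 Pipelines style: camera alpha multiplies every child's alpha.
function fadeEachChild(childAlphas: number[], cameraAlpha: number): number {
  return backgroundVisible(childAlphas.map((a) => a * cameraAlpha));
}

// Beam style: composite the children first, then apply camera alpha once.
function compositeThenFade(childAlphas: number[], cameraAlpha: number): number {
  const coverage = 1 - backgroundVisible(childAlphas);
  return 1 - coverage * cameraAlpha;
}

// Two opaque children overlapping, camera alpha 0.5:
console.log(fadeEachChild([1, 1], 0.5));     // 0.25
console.log(compositeThenFade([1, 1], 0.5)); // 0.5
```

With a single child both functions return 0.5, so only the composite-first version gives the same result in overlapping and non-overlapping regions.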

This means you can use RenderFilters to not render filters at all! You can use it as a compositing tool, rendering several objects in a Container or Layer, then adjusting the alpha of the composite so you can't see one object through another. I think that's pretty useful.

Auto Padding

Some filters (and FX before them) have effects that spread out beyond a single texel. For example, a blur wants to blur the left and the right together. But what happens when a texel is on the very edge of a texture?

Well, by default the blur cuts off, and you see a sharp edge. That's OK if the edge is on the edge of the screen, or is otherwise obscured. But if the edge is in clear view, it's pretty obvious.

So FX had padding added. This was a manual override to add room for extra spread. It was applied by the FX controller and affected every FX on the object.

Filters are more sophisticated. Instead of a manual padding value, each filter controller has its own padding override, which is a Rectangle describing the left, top, right, and bottom sides of the filter. By default they are all 0, so no padding is added. You can change these to any values necessary.

But if you set the override to null, the filter controller uses auto padding. This is coded into filters which have spread, and it computes the necessary padding from the controller's settings. (For filters without spread, auto padding is still 0.)

This should take the guesswork out of padding filters!

You can also override padding to inset, removing the edges of the texture. This could be useful for trimming down a filtered object during the rendering sequence.
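The padding rules above can be summarised in a short TypeScript sketch. Padding, FilterSketch and BlurSketch are hypothetical stand-ins: Beam uses a Rectangle for the override, and its blur will compute spread in its own way. What the sketch shows is the resolution order: a manual override wins, null falls back to auto padding, and negative values inset the texture.

```typescript
interface Padding {
  left: number;
  top: number;
  right: number;
  bottom: number;
}

class FilterSketch {
  // Manual override. Defaults to all zeros (no padding); null means "auto".
  paddingOverride: Padding | null = { left: 0, top: 0, right: 0, bottom: 0 };

  // Filters without spread need no padding; filters with spread override this.
  protected autoPadding(): Padding {
    return { left: 0, top: 0, right: 0, bottom: 0 };
  }

  resolvePadding(): Padding {
    return this.paddingOverride ?? this.autoPadding();
  }
}

class BlurSketch extends FilterSketch {
  radius: number;

  constructor(radius: number) {
    super();
    this.radius = radius;
  }

  // Hypothetical rule: the blur spreads up to `radius` texels on every side.
  protected autoPadding(): Padding {
    const r = Math.ceil(this.radius);
    return { left: r, top: r, right: r, bottom: r };
  }
}

// Framebuffer size needed for a texture once padding is applied.
// Negative padding insets (trims) the texture instead of growing it.
function paddedSize(width: number, height: number, p: Padding): [number, number] {
  return [width + p.left + p.right, height + p.top + p.bottom];
}
```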

More Filters

A lot of this work was precipitated by discoveries made while converting FX into filters. We've got a small collection now!

Pixelate

This is a mech walking through a forest. It looks very creepy in motion. I swear it's just a robot, though.

Bokeh

The classic Bokeh effect applied to a ParticleEmitter. This example used RenderFilters.focus to ensure that the particles were all rendered, and the emitter was at a particular place on screen, without running expensive resize operations.

Tilt Shift

A variant Bokeh which blurs out the top and bottom of the screen.

Blur

Blur, applied to the Phaser logo. This is the filter that really needs padding. Getting padding working correctly, for both internal and external filters, was a real mission, but it paid off.

Displacement

Displacement uses one image as a source to warp another image. We've got an exciting enhancement for this one: it now supports normal maps! Previously, it used the red channel from the displacement map to drive the length of the vector. I've changed it to use red for X and green for Y. This allows you to warp the image in two directions at once. Put a filter on the input, and you could animate the warp!
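Here is a rough sketch of how a two-channel displacement texel might be decoded, in TypeScript. decodeDisplacement, displacedSample and the strength parameters are hypothetical, and the sketch assumes the common normal-map convention where each 8-bit channel maps 0..255 to -1..1, so mid-grey (128) means almost no movement. The text does not say exactly which mapping Beam's Displacement filter uses.

```typescript
// Decode one displacement-map texel into an (x, y) offset.
// Red drives X and green drives Y; blue and alpha are ignored.
function decodeDisplacement(
  r: number,         // 0..255
  g: number,         // 0..255
  strengthX: number, // hypothetical scale, in texels
  strengthY: number,
): [number, number] {
  const dx = (r / 255) * 2 - 1; // -1..1
  const dy = (g / 255) * 2 - 1;
  return [dx * strengthX, dy * strengthY];
}

// Offset a sample position by the decoded vector.
function displacedSample(
  u: number,
  v: number,
  r: number,
  g: number,
  strengthX: number,
  strengthY: number,
): [number, number] {
  const [dx, dy] = decodeDisplacement(r, g, strengthX, strengthY);
  return [u + dx, v + dy];
}
```

Because X and Y come from separate channels, a single texel can push the image diagonally, which is what allows warping in two directions at once.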

Next Up

My main objective is a Minimum Viable Product for filters. We're very close! All the filters listed above proved something different and vital. Pixelation tested axis-aligned filters, showing the difference between internal and external states. Bokeh tested filter padding. Blur tested composite filter operations, running several shader passes inside the filter. Displacement tested texture inputs to filter controllers.

I have just two more MVP tasks: Masks and Samplers. But you'll see more about those next week!

Share your content with 18,000+ readers

Created a game, tutorial, code snippet, video, or anything you feel Phaser World readers would like? Then please send it to us!

Until the next issue, happy coding!
