The Science Behind AI's First Nobel Prize

Ahmad W Khan

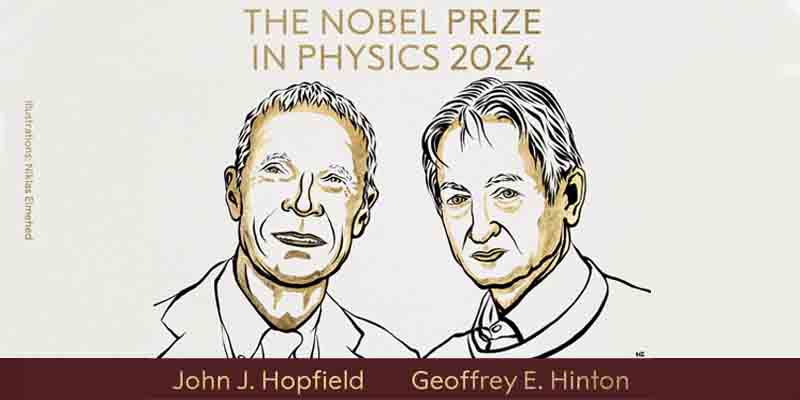

The 2024 Nobel Prize in Physics was awarded to John Hopfield and Geoffrey Hinton "for foundational discoveries and inventions that enable machine learning with artificial neural networks." Their work on Hopfield Networks and Boltzmann Machines brought physics-driven insights to machine learning (ML) long before AI became a mainstream technology. In recognizing their contributions, the Nobel committee highlighted how neural networks built on ideas from statistical physics, such as spin systems and the Boltzmann distribution, laid the groundwork for today’s deep learning and generative AI models. Let’s dive into this Nobel-winning research and see why it has reshaped both physics and AI.

Physics at the Core of Machine Learning

Physics and machine learning may seem like distant cousins, but at their core, they share fundamental similarities. Both disciplines aim to uncover patterns in complex systems—whether it’s predicting particle interactions or recognizing faces in a photograph. Hopfield Networks and Boltzmann Machines represent early attempts to fuse these fields by modeling neural behavior using physical principles.

To understand this, let’s begin with the physics that inspired these AI models: spin systems. Spin is an intrinsic property of particles such as electrons that makes each one behave like a tiny magnet. In simple models of magnetic materials, each spin points either up or down (often written as +1 and −1, or as 1 and 0 in binary terms), and its preferred orientation depends on its interactions with neighboring spins. At low temperature, the spins settle into a configuration that minimizes the system’s total energy, a behavior described by thermodynamics and statistical mechanics.
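To make the idea of spins settling into a minimum-energy configuration concrete, here is a minimal sketch using a toy model chosen for illustration: a short one-dimensional Ising chain, where each spin is +1 or −1 and only neighboring spins interact, with coupling strength J (the `coupling` parameter). It simply enumerates every configuration and reports the lowest-energy ones; the function names are hypothetical.

```python
import itertools

def chain_energy(spins, coupling=1.0):
    """E = -J * sum_i s_i * s_(i+1) for an open 1D chain of +1/-1 spins."""
    return -coupling * sum(a * b for a, b in zip(spins, spins[1:]))

def ground_states(n, coupling=1.0):
    """Brute force: evaluate all 2^n configurations and keep the lowest-energy ones."""
    energies = {c: chain_energy(c, coupling) for c in itertools.product([-1, 1], repeat=n)}
    e_min = min(energies.values())
    return e_min, [c for c, e in energies.items() if e == e_min]

print(ground_states(4))                  # ferromagnetic: neighbors prefer to align
print(ground_states(4, coupling=-1.0))   # antiferromagnetic: neighbors prefer to disagree
```

With J > 0 the ground states are the two fully aligned chains; flipping the sign of J makes alternating patterns win instead. Brute-force enumeration only works for tiny systems, since the number of configurations doubles with every added spin.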

By adapting this energy-minimization principle, Hopfield showed that a neural network could store memories as stable low-energy states and retrieve them, much as spins stabilize into particular configurations; Hinton later extended the idea to probabilistic networks. This concept became essential for understanding how artificial systems could simulate brain-like activity.

Hopfield Networks

John Hopfield’s groundbreaking 1982 paper introduced a type of recurrent neural network that models how neurons in the brain might store memories. Hopfield Networks are energy-based models that use binary states (1s and 0s) to represent whether each neuron is active. Every neuron is connected to the others through weighted connections (synapses), and as the neurons update, the network moves toward lower energy until it comes to rest in a local energy minimum.

Here’s where physics steps in. Just as spins align to minimize a magnet’s total energy, neurons in a Hopfield network flip between states to lower the network’s energy. The energy function that guides the network has the same form as the energy of a spin system:

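$$E = -\frac{1}{2}\sum_{i \neq j} w_{ij} s_i s_j - \sum_i b_i s_i$$

Where:

$$w_{ij} \text{ is the weight (interaction) between neurons } i \text{ and } j\text{; the weights are symmetric, } w_{ij} = w_{ji}\text{, and } w_{ii} = 0$$

$$s_i \text{ is the state of neuron } i \text{ (either 0 or 1)}$$

$$b_i \text{ is the bias of neuron } i$$

The factor of 1/2 ensures that each pair of neurons is counted only once.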
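The energy function is easy to evaluate directly. The sketch below assumes 0/1 states, a symmetric weight matrix with a zero diagonal, and the 1/2 factor so that each pair is counted once. It updates one randomly chosen neuron at a time, turning it on only when its local field Σ_j w_ij s_j + b_i is positive, and records the energy after every update. The helper names and the random network are hypothetical, for illustration only.

```python
import numpy as np

def hopfield_energy(s, w, b):
    """E = -1/2 * sum_{i != j} w_ij s_i s_j - sum_i b_i s_i (w symmetric, zero diagonal)."""
    return -0.5 * s @ w @ s - b @ s

def async_descent(s, w, b, sweeps=5, seed=0):
    """Update one neuron at a time in random order, recording the energy after each update."""
    rng = np.random.default_rng(seed)
    s = np.array(s, dtype=float)
    energies = [hopfield_energy(s, w, b)]
    for _ in range(sweeps):
        for i in rng.permutation(len(s)):
            # Turn the neuron on only if that lowers the energy (positive local field).
            s[i] = 1.0 if w[i] @ s + b[i] > 0 else 0.0
            energies.append(hopfield_energy(s, w, b))
    return s, energies

# Hypothetical random network with six 0/1 neurons.
rng = np.random.default_rng(42)
a = rng.normal(size=(6, 6))
w = (a + a.T) / 2           # make the weights symmetric
np.fill_diagonal(w, 0.0)    # no self-connections
b = rng.normal(size=6)
final_state, energies = async_descent(rng.integers(0, 2, size=6), w, b)
print(final_state, energies[0], energies[-1])
```

Under these assumptions each single-neuron update can only lower the energy or leave it unchanged, which is why the network is guaranteed to settle into a stable state. With asymmetric weights, or with all neurons updated simultaneously, that guarantee no longer holds.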

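When a Hopfield network is trained, its weights (and, optionally, its biases) are set so that the patterns to be stored, such as images or sequences, become low-energy configurations. In Hopfield’s original formulation this is done in a single step with a Hebbian rule: the weight between two neurons grows when they tend to be in the same state across the stored patterns and shrinks when they tend to disagree. Once trained, the network recalls a pattern by starting from a partial or noisy input and flipping neurons one at a time whenever a flip lowers the overall energy. The process continues until no flip can lower the energy, and the network usually settles on the stored memory closest to the input.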
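Storage and recall can be sketched in a few lines of NumPy. This version assumes the ±1 state convention common in textbooks (equivalent to 0/1 via s = 2v − 1), zero biases, and Hebbian weights w_ij = (1/N) Σ p_i p_j summed over the stored patterns; `train_hebbian` and `recall` are hypothetical helper names, not a library API.

```python
import numpy as np

def train_hebbian(patterns):
    """Hebbian storage: w_ij = (1/N) * sum over patterns of p_i * p_j, with no self-connections."""
    patterns = np.asarray(patterns, dtype=float)
    n = patterns.shape[1]
    w = patterns.T @ patterns / n
    np.fill_diagonal(w, 0.0)
    return w

def recall(w, state, max_sweeps=10, seed=0):
    """Asynchronous recall: update neurons one at a time until nothing changes."""
    rng = np.random.default_rng(seed)
    s = np.array(state, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in rng.permutation(len(s)):
            new_value = 1.0 if w[i] @ s >= 0 else -1.0   # align with the local field
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:   # fixed point: a stored (or spurious) memory
            break
    return s

stored = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
])
w = train_hebbian(stored)

noisy = stored[0].copy()
noisy[0] = -noisy[0]      # corrupt one bit of the first memory
print(recall(w, noisy))
```

Here the two stored patterns are orthogonal, so a single corrupted bit is easily repaired; strongly overlapping patterns interfere with one another and make recall less reliable.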

However, Hopfield networks come with limitations:

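Capacity is limited by the size of the network: with Hebbian storage, a network of N neurons can reliably hold only about 0.14N random patterns.

When memories are too similar, they interfere with one another and recall becomes unreliable.

The update rule is deterministic, so the network always falls into the nearest energy minimum, which may be a spurious mixture of memories, and it cannot generate new examples. This makes it rigid for complex real-world tasks.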
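A quick experiment illustrates the first limitation. The sketch below assumes random ±1 patterns, Hebbian weights, and a hypothetical 200-neuron network. It stores an increasing number of patterns and checks what fraction of them remain exact fixed points, meaning that no neuron would change if the stored pattern were loaded into the network.

```python
import numpy as np

def fraction_stable(num_patterns, n_neurons=200, seed=0):
    """Fraction of stored random +/-1 patterns that are exact fixed points of the update rule."""
    rng = np.random.default_rng(seed)
    patterns = rng.choice([-1.0, 1.0], size=(num_patterns, n_neurons))
    w = patterns.T @ patterns / n_neurons        # Hebbian weights
    np.fill_diagonal(w, 0.0)
    fields = patterns @ w                        # row k: local fields with pattern k loaded (w is symmetric)
    updated = np.where(fields >= 0, 1.0, -1.0)   # what every neuron would switch to
    return float(np.mean(np.all(updated == patterns, axis=1)))

for p in (5, 10, 20, 40, 60):
    print(p, fraction_stable(p))
```

As more patterns are stored, crosstalk from the other memories overwhelms each pattern’s own signal and more of them stop being stable. The exact numbers depend on the random seed, and requiring every single bit to be stable is stricter than the approximate-recall criterion behind the usual capacity estimate.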

These challenges helped motivate more flexible models such as the Boltzmann Machine, developed by Geoffrey Hinton and Terrence Sejnowski, whose learning algorithm for it was published in 1985.

Boltzmann Machines

Hopfield networks were powerful, but their deterministic dynamics limited their ability to generalize. The Boltzmann Machine added a crucial ingredient to address this problem: temperature. In statistical physics, a nonzero temperature lets a system explore many configurations instead of always settling into the lowest-energy state. Boltzmann Machines also added hidden units, neurons that are not tied to the data and let the model capture higher-order structure. By making neuron updates probabilistic, Boltzmann Machines became some of the earliest generative models, able to create new data by sampling from a learned probability distribution rather than just memorizing inputs.

In a Boltzmann Machine, the network’s states follow a Boltzmann distribution, in which lower-energy states are exponentially more likely. As a result, the probability that a given neuron becomes active (1) rather than inactive (0) depends on temperature:

$$P(s_i = 1) = \frac{1}{1 + e^{-\Delta E / kT}}$$

Where:

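ΔE is the energy gap E(s_i = 0) − E(s_i = 1), that is, how much the network’s energy drops when neuron i turns on,

T is the temperature, controlling randomness,

k is Boltzmann’s constant; in machine learning it is usually absorbed into T (set to 1).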
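The formula can be checked numerically. This sketch assumes k = 1, as is usual in machine learning, and uses the identity 1/(1 + e^(−x)) = ½(1 + tanh(x/2)) to avoid overflow at very low temperatures; `p_active` is a hypothetical helper name.

```python
import math

def p_active(delta_e, temperature, k=1.0):
    """P(s_i = 1) = 1 / (1 + exp(-delta_e / (k * T))), written with tanh to avoid overflow."""
    x = delta_e / (k * temperature)
    return 0.5 * (1.0 + math.tanh(x / 2.0))

# The same energy gap (delta_e = 1) at three temperatures.
for t in (0.1, 1.0, 10.0):
    print(t, round(p_active(1.0, t), 3))
# 0.1 1.0    (almost deterministic)
# 1.0 0.731
# 10.0 0.525 (close to a coin flip)
```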

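At low temperatures, the network behaves much like a Hopfield network, almost always moving to lower-energy states; in the limit T → 0 the rule becomes the deterministic Hopfield update. At higher temperatures, it also accepts moves that raise the energy, which lets it escape poor local minima and explore a wider range of states, allowing for greater flexibility in problems like pattern recognition or image generation.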
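Updating neurons one at a time with this probability is Gibbs sampling, and run long enough it visits each network state with the frequency that the Boltzmann distribution P(s) ∝ e^(−E(s)/T) predicts. The sketch below checks this on a hypothetical three-neuron network (0/1 states, symmetric weights with a zero diagonal, k = 1) by comparing sample frequencies against exact probabilities obtained by enumerating all eight states. The weights, biases, and function names are illustrative assumptions.

```python
import itertools
import math
import numpy as np

def energy(s, w, b):
    """E(s) = -1/2 * s^T w s - b^T s, for symmetric w with a zero diagonal."""
    return -0.5 * s @ w @ s - b @ s

def exact_distribution(w, b, T=1.0):
    """Boltzmann probabilities exp(-E/T) / Z, found by enumerating every 0/1 state."""
    states = [np.array(c, dtype=float) for c in itertools.product([0, 1], repeat=len(b))]
    unnormalized = np.array([math.exp(-energy(s, w, b) / T) for s in states])
    probs = unnormalized / unnormalized.sum()
    return {tuple(int(x) for x in s): float(p) for s, p in zip(states, probs)}

def gibbs_frequencies(w, b, T=1.0, n_sweeps=50_000, seed=0):
    """Resample each neuron in turn from P(s_i = 1) and count how often each state appears."""
    rng = np.random.default_rng(seed)
    s = rng.integers(0, 2, size=len(b)).astype(float)
    counts = {}
    for _ in range(n_sweeps):   # no burn-in; its effect is negligible over this many sweeps
        for i in range(len(b)):
            delta_e = w[i] @ s + b[i]   # E(s_i = 0) - E(s_i = 1); w[i, i] is 0
            p_on = 0.5 * (1.0 + math.tanh(delta_e / (2.0 * T)))
            s[i] = 1.0 if rng.random() < p_on else 0.0
        key = tuple(int(x) for x in s)
        counts[key] = counts.get(key, 0) + 1
    return {k: v / n_sweeps for k, v in counts.items()}

w = np.array([[0.0, 1.0, -1.0], [1.0, 0.0, 0.5], [-1.0, 0.5, 0.0]])
b = np.array([0.2, -0.3, 0.1])
exact = exact_distribution(w, b)
sampled = gibbs_frequencies(w, b)
print(max(abs(exact[k] - sampled.get(k, 0.0)) for k in exact))
```

Lowering `T` concentrates the samples on the lowest-energy states, while raising it spreads them out, which is the temperature effect described above.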

This probabilistic approach is especially powerful in training. Instead of simply minimizing an energy function, the model learns by maximizing the likelihood of observing the training data—a concept rooted in thermodynamics and statistical inference.
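For a Boltzmann Machine whose units are all visible (no hidden units, a simplification made here), maximizing the likelihood of the data leads to the classic learning rule, where η is a learning rate and the angle brackets denote averages under the data and under the model’s own Boltzmann distribution:

$$\Delta w_{ij} = \eta \left( \langle s_i s_j \rangle_{\text{data}} - \langle s_i s_j \rangle_{\text{model}} \right)$$

The sketch below applies this rule to a hypothetical three-neuron network at T = 1. Because the network is tiny, the model averages are computed exactly by enumerating all 2³ states; real Boltzmann Machines must estimate them by sampling, which is what made them slow to train. The data set and helper names are illustrative.

```python
import itertools
import numpy as np

def model_moments(w, b):
    """Exact <s_i s_j> under P(s) proportional to exp(-E(s)) at T = 1 (diagonal holds <s_i>)."""
    states = np.array(list(itertools.product([0, 1], repeat=len(b))), dtype=float)
    energies = -0.5 * np.einsum("ki,ij,kj->k", states, w, states) - states @ b
    p = np.exp(-(energies - energies.min()))   # shift for numerical stability
    p /= p.sum()
    return states.T @ (states * p[:, None])

def fit_visible_boltzmann(data, lr=1.0, steps=4000):
    """Gradient ascent on the log-likelihood of a fully visible Boltzmann Machine."""
    data = np.asarray(data, dtype=float)
    n = data.shape[1]
    w = np.zeros((n, n))
    b = np.zeros(n)
    data_moments = data.T @ data / len(data)   # <s_i s_j>_data; diagonal holds <s_i>_data
    for _ in range(steps):
        diff = data_moments - model_moments(w, b)
        b += lr * np.diag(diff)                     # bias rule: <s_i>_data - <s_i>_model
        w += lr * (diff - np.diag(np.diag(diff)))   # weight rule, keeping the diagonal at zero
    return w, b

# Hypothetical training set: every 3-bit pattern once, plus extra copies of 110 and 111.
data = [[0, 0, 0], [0, 0, 1], [0, 1, 0], [0, 1, 1],
        [1, 0, 0], [1, 0, 1], [1, 1, 0], [1, 1, 1],
        [1, 1, 0], [1, 1, 0], [1, 1, 1]]
w, b = fit_visible_boltzmann(data)
print(np.round(w, 3))
```

After training, the model reproduces the data’s average activations and pairwise co-activations, which is exactly the condition at which the learning rule stops changing the weights.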

Legacy in Modern AI

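While both Hopfield Networks and Boltzmann Machines are foundational to machine learning, they have largely been replaced in practice by more efficient models like deep neural networks and transformers. Their influence, however, is profound. A simplified variant, the Restricted Boltzmann Machine, was stacked into deep belief networks by Hinton and colleagues in 2006 to pretrain deep networks, one of the developments that sparked the deep learning revival. More broadly, the ideas of energy minimization and probabilistic inference echo through today’s AI systems, especially unsupervised learning and generative models like GPT and DALL·E, even though those models are not themselves trained as energy-based models.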

Several of these models draw on principles connected to Hopfield and Hinton’s work. For example:

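Backpropagation, the core of modern neural network training, was popularized in 1986 by Rumelhart, Hinton, and Williams. It computes the gradients used to adjust weights so that a loss function decreases, which is conceptually similar to a physical system sliding downhill in energy.

Diffusion models draw on ideas from nonequilibrium statistical physics and probabilistic sampling, and Generative Adversarial Networks (GANs) share the broader goal of learning to sample from a data distribution.

The attention mechanism used in transformers has been shown to be mathematically equivalent to the update rule of modern Hopfield networks with continuous states, as described in the 2020 paper "Hopfield Networks is All You Need."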

Even today, Hopfield Networks are seeing a resurgence in new forms, such as modern (dense) associative memories and continuous-state Hopfield networks, along with their connection to the attention layers of large language models (LLMs). These modern variants can store far more patterns than the classic model and handle larger, more complex data, showing the lasting influence of Hopfield and Hinton’s early work.
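The link between modern Hopfield networks and attention can be seen in a few lines. In the continuous version, one update replaces the current state ξ with Xᵀ softmax(β X ξ), where the rows of X are the stored patterns and β is an inverse temperature. This has the same form as softmax attention, with the state acting as the query and the stored patterns acting as both keys and values. The sketch below rests on assumptions chosen for illustration: ±1 patterns taken from rows of an 8×8 Hadamard matrix (so they are mutually orthogonal), β = 2, and hypothetical function names.

```python
import numpy as np

def softmax(z):
    z = z - z.max()   # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def hopfield_attention_update(patterns, query, beta=2.0):
    """One modern-Hopfield update: patterns^T softmax(beta * patterns @ query).

    patterns: (num_patterns, dim) stored memories, used as keys and values
    query:    (dim,) current state, e.g. a noisy probe
    """
    scores = beta * patterns @ query        # similarity of the query to each memory
    weights = softmax(scores)               # attention weights over the memories
    return patterns.T @ weights, weights    # weighted average of the memories

# Three mutually orthogonal +/-1 patterns: rows of a Sylvester Hadamard matrix.
h2 = np.array([[1.0, 1.0], [1.0, -1.0]])
h8 = np.kron(np.kron(h2, h2), h2)
patterns = h8[1:4]

noisy = patterns[0].copy()
noisy[0] = -noisy[0]   # corrupt one entry

retrieved, weights = hopfield_attention_update(patterns, noisy)
print(np.round(weights, 4))
print(np.sign(retrieved))
```

With a larger β the update behaves like a hard lookup of the single closest memory; with a smaller β it returns a blend of several memories, much as attention mixes information from several tokens.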

Why the 2024 Nobel Prize Matters for AI

The decision to award the Nobel Prize to Hopfield and Hinton highlights the deep connections between physics and AI. At first glance, it might seem odd to celebrate neural networks in a field traditionally concerned with the fundamental forces of nature. But physics is, at its heart, the science of uncovering patterns and laws that govern everything—from particles to galaxies. AI, in many ways, is the computational counterpart, discovering patterns and rules in data.

In fact, the very notion of minimizing an energy function—central to both physics and AI—is a universal principle that applies whether we’re simulating magnetic materials or training a neural network to generate images. This Nobel Prize reminds us that science is often multidisciplinary, and that breakthroughs in one field can spark revolutions in another.

The 2024 Nobel Prize is a testament to the power of interdisciplinary thinking. Today’s most pressing challenges—whether they involve climate change, medicine, or technology—require us to blend insights from physics, statistics, neuroscience, and beyond. Hopfield and Hinton’s work shows how seemingly abstract physical principles can lead to practical, world-changing technologies.

The problems of the future will require us to break down silos between disciplines and look at the world holistically. As we enter an age of AI-driven transformation, the methods and principles rooted in nature’s laws will continue to guide us toward understanding and solving the mysteries of intelligence, both natural and artificial.

Physics isn’t just about forces or particles; it’s about understanding systems, recognizing patterns, and crafting solutions that are repeatable and predictable. These are the same goals we pursue in machine learning and AI.

So, whether you’re a physicist, a data scientist, or a software engineer, keep looking for patterns, keep innovating, and most of all, keep blending the best of our scientific traditions to push the boundaries of what’s possible.
