Step-By-Step Guide to Measuring Relevance in Azure AI Search

Farzad Sunavala

Farzad SunavalaTable of contents

- Introduction

- Setting Up The Environment

- Configuring Cohere API and Azure AI Search

- Generating Embeddings with Cohere

- Loading and Preparing Wayfair WANDS Product Dataset

- Creating Vector Indexes in Azure AI Search

- Uploading Product Data and Vectors to Azure AI Search

- Preparing Query Data and Ground Truth

- Performing Vector Search with Azure AI Search

- Conclusion

- Next Steps

- Appendix

Measuring relevance is a crucial part of any retrieval system, whether you're building an eCommerce platform, an enterprise search application, or a Retrieval-Augmented Generation (RAG) solution. Accurately assessing and optimizing search results is key to delivering a high-quality user experience and achieving meaningful outcomes.

In this blog, we dive into evaluating search relevance in Azure AI Search using Cohere embeddings and a real-world eCommerce dataset from Wayfair. We'll compare the performance of Float32 vs. int8 embeddings and explore whether to independently encode product_name and product_description, or store both for cross-field vector searches using Reciprocal Rank Fusion (RRF). Our goal is to identify the best configuration for maximum retrieval accuracy.

Introduction

Overview

Azure AI Search is a robust search-as-a-service solution that empowers developers to integrate powerful search capabilities into their applications. However, ensuring that search results are relevant and meet user expectations is crucial for delivering an optimal user experience. This blog delves into measuring Azure AI Search relevance using Cohere embeddings and Ranx, providing a hands-on, reproducible approach.

What you’ll learn

Environment Setup: Installing necessary libraries and configuring environment variables.

Cohere Embeddings: Generating both int8 and Float32 embeddings.

Azure AI Search Configuration: Creating and managing search indexes.

Azure AI Studio Model Catalog: Optionally, use a Model-as-a-Service on Azure’ AI Studio Model Catalog.

Vector Searches: Performing vector-based searches on different embedding types.

Relevance Evaluation: Using Ranx (a blazing fast python library for ranking evaluation) to assess and compare search relevance.

Decision Making: Determining the best embedding type and indexing strategy based on evaluation metrics.

Prerequisites

Before diving in, ensure you have:

Technical Skills: Basic understanding of Python and Jupyter Notebooks.

Azure Account: An active Azure account with Azure AI Search service set up.

Cohere API Key: Access to Cohere for generating embeddings. (I’ll be using Azure AI Studio Serverless API for this tutorial but you can easily use the Cohere API client.)

Python Libraries: Installed libraries such as

azure-search-documents,cohere,pandas,ranx,python-dotenv, etc.

Setting Up The Environment

Importing Necessary Libraries

First things first, let's import all the libraries we'll need:

!pip install ranx

!pip install azure-search-documents==11.6.0b6

!pip install azure-identity

!pip install python-dotenv

!pip install pandas

!pip install cohere

import os

import pandas as pd

import cohere

from azure.search.documents import SearchClient

from azure.core.credentials import AzureKeyCredential

from collections import defaultdict

from dotenv import load_dotenv

from ranx import Qrels, Run, compare

from azure.identity import DefaultAzureCredential

from azure.search.documents.indexes import SearchIndexClient

from azure.search.documents.indexes.models import (

AIStudioModelCatalogName,

AzureMachineLearningParameters,

AzureMachineLearningVectorizer,

HnswAlgorithmConfiguration,

HnswParameters,

SearchField,

SearchFieldDataType,

SearchIndex,

SearchableField,

SimpleField,

VectorEncodingFormat,

VectorSearch,

VectorSearchAlgorithmKind,

VectorSearchAlgorithmMetric,

VectorSearchProfile

)

from azure.search.documents.models import (

VectorizableTextQuery,

VectorizedQuery

)

Loading Env Variables

Managing sensitive information like API keys securely is crucial. We'll use a .env file to store our environment variables.

load_dotenv()

Configuring Cohere API and Azure AI Search

Setting up Azure AI Studio Model Catalog Cohere API Credentials

Cohere provides state-of-the-art embeddings that are essential for our search relevance evaluation.

# Environment variables

AZURE_AI_STUDIO_COHERE_EMBED_KEY = os.getenv("AZURE_AI_STUDIO_COHERE_EMBED_KEY")

AZURE_AI_STUDIO_COHERE_EMBED_ENDPOINT = os.getenv("AZURE_AI_STUDIO_COHERE_EMBED_ENDPOINT")

# Initialize Cohere client using Azure AI Studio

cohere_azure_client = cohere.ClientV2(

base_url=f"{AZURE_AI_STUDIO_COHERE_EMBED_ENDPOINT}/v1",

api_key=AZURE_AI_STUDIO_COHERE_EMBED_KEY

)

Setting up Azure AI Search Credentials

Azure AI Search is where our search indices will live. Let's set up the credentials and initialize the search clients.

AZURE_SEARCH_SERVICE_ENDPOINT = os.getenv("AZURE_SEARCH_SERVICE_ENDPOINT")

AZURE_SEARCH_ADMIN_KEY = os.getenv("AZURE_SEARCH_ADMIN_KEY")

credential = AzureKeyCredential(AZURE_SEARCH_ADMIN_KEY)

int8_index_name = "wands-cohere-int8-index"

float_index_name = "wands-cohere-float32-index"

int8_search_client = SearchClient(endpoint=AZURE_SEARCH_SERVICE_ENDPOINT, index_name=int8_index_name, credential=credential)

float_search_client = SearchClient(endpoint=AZURE_SEARCH_SERVICE_ENDPOINT, index_name=float_index_name, credential=credential)

Generating Embeddings with Cohere

Understanding Embeddings

Embeddings are numerical representations of text that capture semantic relationships, enabling more accurate and context-aware search results.

Creating the Embedding Function

Define a function to generate embeddings using Cohere. This function includes a retry mechanism to handle transient errors gracefully.

from tenacity import retry, stop_after_attempt, wait_fixed, retry_if_exception_type

from cohere.errors import (

TooManyRequestsError, ServiceUnavailableError, GatewayTimeoutError,

InternalServerError, ClientClosedRequestError

)

# Add tenacity retry mechanism to handle rate limits and other transient errors

@retry(

stop=stop_after_attempt(5), # Retry up to 5 times

wait=wait_fixed(5), # Wait 5 seconds between retries

retry=retry_if_exception_type((

TooManyRequestsError, # Rate limit error (429)

ServiceUnavailableError, # Service unavailable (503)

GatewayTimeoutError, # Gateway timeout (504)

InternalServerError, # Internal server error (500)

ClientClosedRequestError # Client closed request (499)

))

)

def generate_embeddings(texts, input_type="search_document", embedding_type="float"):

model = "embed-english-v3.0"

texts = [texts] if isinstance(texts, str) else texts # Ensure input is a list of strings

response = cohere_azure_client.embed(

texts=texts,

model=model,

input_type=input_type,

embedding_types=[embedding_type],

)

return [embedding for embedding in getattr(response.embeddings, embedding_type)]

Loading and Preparing Wayfair WANDS Product Dataset

Importing Product Data

Load your product dataset, which includes product names, descriptions, and other relevant attributes.

products_df = pd.read_csv("eval/products/product.csv", sep="\t", index_col="product_id", keep_default_na=False)

# Extract first 5000 product names and descriptions

product_names = products_df["product_name"].tolist()[:5000]

product_descriptions = products_df["product_description"].tolist()[:5000]

products_df.head()

Extracting Relevant Fields

Isolate the product_name and product_description for embedding generation. (We’ll take the first 5000 strictly for demo purposes).

product_names = products_df["product_name"].tolist()[:5000]

product_descriptions = products_df["product_description"].tolist()[:5000]

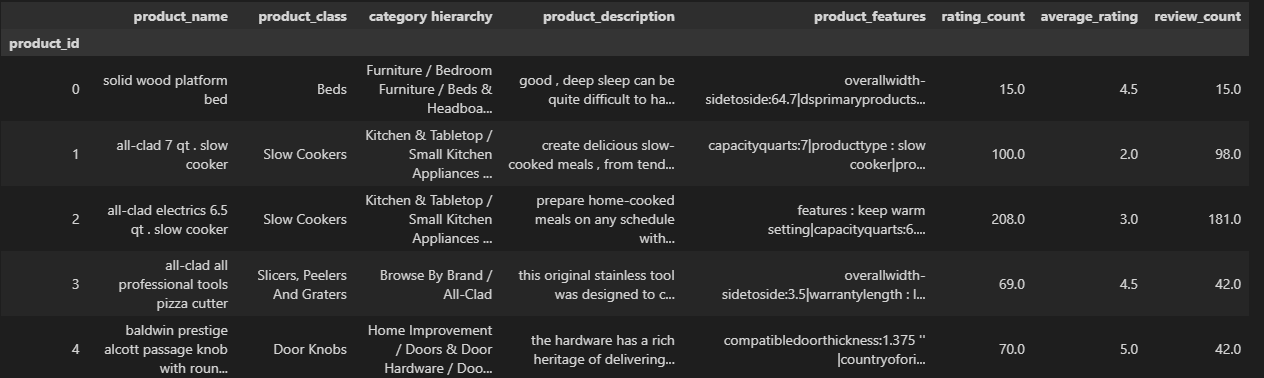

Understanding the WANDS Dataset

The WANDS (Wayfair ANnotation Dataset) is a comprehensive dataset designed for benchmarking and evaluating search engines within an eCommerce context. Here's a breakdown of its components:

product.csv: Contains details of all candidate products available for search, including

product_id,product_name,product_class,category_hierarchy,product_description,product_features,rating_count,average_rating, andreview_count.query.csv: Contains the search queries used to evaluate the search system, including

query_idandquery.label.csv: Provides relevance judgments (aka qrels) for each

(query, product)pair, includingid,query_id,product_id, andlabel(Exact, Partial, Irrelevant).

Dataset Statistics

Total Products: 42,994

Total Queries: 480

Total Relevance Judgments: 233,448

Dataset Creation and Labeling

The WANDS dataset was meticulously curated by Wayfair to facilitate objective benchmarking of search engines in an eCommerce environment. The dataset encompasses a diverse range of products and queries, ensuring comprehensive coverage of potential search scenarios. Each (query, product) pair was evaluated and labeled based on its relevance:

Exact: The product precisely matches the user's query.

Partial: The product partially matches the query, offering some relevant features.

Irrelevant: The product does not match the query and is not useful in the search context.

These annotations enable the assessment of search algorithms in terms of their ability to retrieve relevant products, thereby providing a robust foundation for evaluating and optimizing search relevance.

Generating and Indexing Float and Int8 Embeddings

Generating Int8 Embeddings

Int8 embeddings are optimized for efficient storage and faster computation, making them suitable for large-scale applications.

product_name_int8_embeddings = generate_embeddings(product_names, embedding_type="int8")

product_description_int8_embeddings = generate_embeddings(product_descriptions, embedding_type="int8")

Generating Float32 Embeddings

Float32 embeddings offer higher precision, which can enhance the semantic understanding of the search system.

product_name_float_embeddings = generate_embeddings(product_names, embedding_type="float")

product_description_float_embeddings = generate_embeddings(product_descriptions, embedding_type="float")

Creating Vector Indexes in Azure AI Search

Understanding Vector Search Indexes

A search index is a structured repository that facilitates efficient querying and retrieval of documents based on search criteria.

Defining the Index

Define the schema for your search indexes, including fields for both int8 and Float32 embeddings.

from azure.search.documents.indexes.models import (

SearchIndex, SimpleField, VectorField, SearchableField, SearchFieldDataType

)

def create_or_update_index(

client, index_name, vector_field_type, scoring_uri, authentication_key, model_name

):

# Define the search index fields based on your product schema

fields = [

SimpleField(name="product_id", type=SearchFieldDataType.String, key=True),

SearchField(

name="product_name",

type=SearchFieldDataType.String,

searchable=True,

filterable=True,

),

SearchField(

name="product_description", type=SearchFieldDataType.String, searchable=True

),

SearchField(

name="product_name_vector",

type=vector_field_type,

vector_search_dimensions=1024,

vector_search_profile_name="my-vector-config",

),

SearchField(

name="product_description_vector",

type=vector_field_type,

vector_search_dimensions=1024,

vector_search_profile_name="my-vector-config",

),

]

# Vector search configuration with HNSW algorithm and query vectorizer

vector_search = VectorSearch(

profiles=[

VectorSearchProfile(

name="my-vector-config",

algorithm_configuration_name="my-hnsw",

vectorizer_name="my-vectorizer",

)

],

algorithms=[

HnswAlgorithmConfiguration(

name="my-hnsw",

kind=VectorSearchAlgorithmKind.HNSW,

parameters=HnswParameters(metric=VectorSearchAlgorithmMetric.COSINE),

)

],

vectorizers=[

AzureMachineLearningVectorizer(

name="my-vectorizer",

vectorizer_name="my-vectorizer",

aml_parameters=AzureMachineLearningParameters(

scoring_uri=scoring_uri,

authentication_key=authentication_key,

model_name=model_name,

),

)

],

)

index = SearchIndex(name=index_name, fields=fields, vector_search=vector_search)

client.create_or_update_index(index=index)

generate_embeddings function every time. Great for production scenarios! Super convenient! See: Integrated Vectorization for Azure AI Search now Generally AvailableCreating Int8 and Float32 Vector Indexes

Create search indexes for both embedding types using the defined schema.

# Example usage: Creating indexes for both Int8 and Float32 embeddings with query vectorizer

search_index_client = SearchIndexClient(

endpoint=AZURE_SEARCH_SERVICE_ENDPOINT, credential=credential

)

create_or_update_index(

search_index_client,

index_name=int8_index_name,

vector_field_type="Collection(Edm.SByte)", # Int8 embedding storage format

scoring_uri=AZURE_AI_STUDIO_COHERE_EMBED_ENDPOINT,

authentication_key=AZURE_AI_STUDIO_COHERE_EMBED_KEY,

model_name=AIStudioModelCatalogName.COHERE_EMBED_V3_ENGLISH,

)

create_or_update_index(

search_index_client,

index_name=float_index_name,

vector_field_type="Collection(Edm.Single)", # Float32 embedding storage format

scoring_uri=AZURE_AI_STUDIO_COHERE_EMBED_ENDPOINT,

authentication_key=AZURE_AI_STUDIO_COHERE_EMBED_KEY,

model_name=AIStudioModelCatalogName.COHERE_EMBED_V3_ENGLISH,

)

Uploading Product Data and Vectors to Azure AI Search

Preparing Documents for Upload

from azure.search.documents import SearchIndexingBufferedSender

# Function to upload embeddings using SearchIndexingBufferedSender

def upload_embeddings_to_index(embeddings_name, embeddings_description, search_client, index_name, batch_size=100):

documents = []

# Prepare documents with embeddings

for i, (name_embedding, desc_embedding) in enumerate(zip(embeddings_name, embeddings_description)):

document = {

"product_id": str(products_df.index[i]),

"product_name": products_df["product_name"][i],

"product_description": products_df["product_description"][i],

"product_name_vector": name_embedding,

"product_description_vector": desc_embedding,

}

documents.append(document)

# Initialize SearchIndexingBufferedSender for batch uploads

with SearchIndexingBufferedSender(

endpoint=AZURE_SEARCH_SERVICE_ENDPOINT,

index_name=index_name,

credential=AzureKeyCredential(AZURE_SEARCH_ADMIN_KEY),

auto_flush_interval=60, # Automatically flush every 60 seconds

initial_batch_action_count=batch_size # Batch size for actions

) as batch_client:

# Upload documents in batches

for doc_batch in [documents[i:i + batch_size] for i in range(0, len(documents), batch_size)]:

batch_client.upload_documents(documents=doc_batch)

print(f"Uploaded {len(documents)} documents to the index '{index_name}' using buffered sender.")

Uploading to Int8 and Float32 Indexes

Upload your prepared documents to both int8 and Float32 search indexes.

# Upload embeddings to respective indexes

upload_embeddings_to_index(product_name_int8_embeddings, product_description_int8_embeddings, int8_search_client, int8_index_name)

upload_embeddings_to_index(product_name_float_embeddings, product_description_float_embeddings, float_search_client, float_index_name)

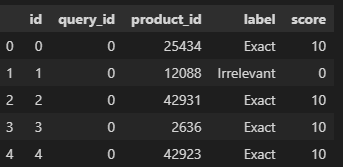

Preparing Query Data and Ground Truth

Loading Query Data

Load your queries and their corresponding ground truth labels to evaluate search relevance.

queries_df = pd.read_csv("eval/products/query.csv", sep="\t", index_col="query_id")

labels_df = pd.read_csv("eval/products/label.csv", sep="\t")

# Map ground truth labels to scores

relevancy_scores = {"Exact": 10, "Partial": 5, "Irrelevant": 0}

labels_df["score"] = labels_df["label"].map(relevancy_scores)

labels_df.head()

Performing Vector Search with Azure AI Search

Understanding Vector Search

Vector search leverages embeddings to find semantically similar documents, offering more nuanced and relevant search results compared to traditional keyword-based search.

Creating the Search Function

Define a function to perform vector searches using the configured embeddings.

# Function to perform vector search using the vectorizer in Azure AI Search

def perform_vector_search(search_client, queries_df, field):

run_dict = defaultdict(dict)

for index, row in queries_df.iterrows():

query_text = row["query"]

# Use the vectorizer already configured in the Azure AI Search index for query embedding generation

vector_query = VectorizableTextQuery(text=query_text, k_nearest_neighbors=3, fields=field)

# Perform vector search using the Azure AI Search client

results = search_client.search(search_text=None, vector_queries=[vector_query], top=3)

query_id = f"{index}" # Ensure query_id matches what's in qrels

for result in results:

# Use the actual product_id from the search results instead of generating a 'doc_' ID

product_id = result['product_id']

score = result['@search.score']

# Populate the run_dict using product_id and score

run_dict[query_id][str(product_id)] = score

return run_dict

Executing Searches on Int8 and Float32 Embeddings

Perform vector searches on both embedding types and for different fields (product_name, product_description, and both as 2 fields via cross-field vector searching and performing RRF on the results).

int8_name_run_dict = perform_vector_search(int8_search_client, queries_df, "product_name_vector")

int8_description_run_dict = perform_vector_search(int8_search_client, queries_df, "product_description_vector")

int8_combined_run_dict = perform_vector_search(int8_search_client, queries_df, "product_name_vector, product_description_vector")

float_name_run_dict = perform_vector_search(float_search_client, queries_df, "product_name_vector")

float_description_run_dict = perform_vector_search(float_search_client, queries_df, "product_description_vector")

float_combined_run_dict = perform_vector_search(float_search_client, queries_df, "product_name_vector, product_description_vector")

Evaluating Search Relevance Using Ranx

Introduction to Ranx

Ranx is a powerful evaluation framework designed to assess the performance of information retrieval systems. It allows you to measure how well your search results align with predefined ground truth relevancy.

Preparing Qrels (Ground Truth)

Convert your labeled data into a format suitable for Ranx evaluation.

# Ensure query_id and product_id columns are of type string (object)

labels_df["query_id"] = labels_df["query_id"].astype(str)

labels_df["product_id"] = labels_df["product_id"].astype(str)

# Create qrels from labels after converting dtypes

qrels = Qrels.from_df(labels_df, q_id_col="query_id", doc_id_col="product_id", score_col="score")

Creating Run Instances

Create run instances for each search scenario (int8 vs. Float32, different fields, and combined).

# Create runs for int8, float, and combined comparisons

int8_name_run = Run(int8_name_run_dict, name="int8_product_name")

int8_description_run = Run(int8_description_run_dict, name="int8_product_description")

float_name_run = Run(float_name_run_dict, name="float_product_name")

float_description_run = Run(float_description_run_dict, name="float_product_description")

int8_combined_run = Run(int8_combined_run_dict, name="int8_combined")

float_combined_run = Run(float_combined_run_dict, name="float_combined")

Comparing Metrics

Evaluate and compare the performance of each embedding type using various metrics such as Precision@3, Recall@3, MRR@3, DCG@3, and nDCG@3.

# Compare search relevance metrics across different models

report = compare(

qrels=qrels,

runs=[

int8_name_run,

int8_description_run,

float_name_run,

float_description_run,

int8_combined_run,

float_combined_run

],

metrics=["precision@3", "recall@3", "mrr@3", "dcg@3", "ndcg@3"],

make_comparable=True # Ensure that qrels and runs have matching query IDs

)

# View results using available methods for the `Report` object

print("Comparison Results:")

# Convert the report to a DataFrame and display it

results_df = report.to_dataframe()

results_df

| model_names | precision@3 | recall@3 | mrr@3 | dcg@3 | ndcg@3 |

| int8_product_name | 0.726389 | 0.013993 | 0.816667 | 10.336328 | 0.568412 |

| int8_product_description | 0.596528 | 0.011205 | 0.704167 | 8.873794 | 0.480235 |

| float_product_name | 0.725000 | 0.013955 | 0.820486 | 10.325911 | 0.568340 |

| float_product_description | 0.596528 | 0.011212 | 0.703819 | 8.877886 | 0.480424 |

| int8_combined | 0.674306 | 0.013293 | 0.787153 | 9.965795 | 0.543094 |

| float_combined | 0.672222 | 0.013170 | 0.785764 | 9.943598 | 0.541845 |

Interpeting the results

Let's break down the key metrics:

Precision@3: Measures the proportion of relevant documents in the top 3 results. Higher values indicate better relevance.

- int8_product_name and float_product_name have the highest precision, both around 0.73, indicating that embeddings based on

product_nameare highly effective.

- int8_product_name and float_product_name have the highest precision, both around 0.73, indicating that embeddings based on

Recall@3: Represents the proportion of relevant documents retrieved out of all relevant documents. The values are relatively low (≈0.014), suggesting that while the top results are relevant, many relevant documents are not being retrieved.

MRR@3 (Mean Reciprocal Rank): Reflects the average rank of the first relevant document. Both int8_product_name and float_product_name scores are above 0.81, showcasing strong performance.

DCG@3 (Discounted Cumulative Gain): Accounts for the position of relevant documents, with higher values indicating better ranking.

- int8_product_name and float_product_name score around 10.3, showing effective ranking of relevant results.

nDCG@3 (Normalized DCG): Normalizes DCG to scale between 0 and 1. Values closer to 1 signify highly relevant search results.

- Both embedding types for

product_nameachieve around 0.568, outperforming other configurations.

- Both embedding types for

Insights:

Embedding Types: Int8 and Float32 embeddings perform similarly, with Float32 slightly edging out in some metrics.

Field Indexing: Indexing

product_nameyields better relevance thanproduct_description. Combining both fields may dilute relevance.

Conclusion

Recap of Steps

We've navigated through setting up Azure AI Search, generating int8 and Float32 embeddings with Cohere, performing vector searches on different indexing strategies, and evaluating their relevance using Ranx. This end-to-end process provides a clear methodology for assessing and optimizing search relevance in your applications.

Key takeaways

Embeddings Matter: Both int8 and Float32 embeddings are effective, with slight advantages to Float32 in specific metrics.

Field Selection: Indexing the

product_namefield yields better search relevance compared toproduct_description.Combined Indexing Trade-offs: While combining multiple fields might seem beneficial, it can lead to decreased relevance and performance metrics.

Evaluation is Crucial: Tools like Ranx are invaluable for objectively measuring and comparing the effectiveness of different search configurations.

Data-Driven Recommendations

Based on the evaluation metrics:

Choose Embedding Type Wisely:

If computational efficiency and storage are important, int8 embeddings are a solid choice, offering comparable performance to Float32.

For scenarios where maximum precision is required, Float32 embeddings provide a slight edge.

Optimize Field Indexing:

Focus on

product_name: Given the higher relevance metrics, prioritizingproduct_namefor embedding generation and indexing is advisable.Selective Use of

product_description: If detailed descriptions are crucial for your application, consider usingproduct_descriptionin a targeted manner rather than performing Reciprocal Rank Fusion using cross-field vector searches withproduct_name.

Avoid Overcomplicating Indexes:

- Combining multiple fields for vector search can dilute relevance. Instead, tailor your indexing strategy based on the most critical fields for your search use case.

Next Steps

Alternative encoder models: Experiment with different embedding models and primitive types to further enhance search relevance (or whatever your objective may be)

Explore Advanced Azure AI Search Features: Dive deeper into features like vector weighting, custom scoring profiles/document boosting, and semantic reranking for further customization of search relevance

Scale and Optimize: Test your search setup with larger datasets and optimize performance based on specific application needs.

Appendix

Complete Python Notebook Code

For a comprehensive walkthrough, refer to the full Jupyter Notebook: azure-ai-search-python-playground/azure-ai-search-eval-ranx.ipynb at main · farzad528/azure-ai-search-python-playground

FAQs

Q: Can I use a different embedding provider instead of Cohere?

A: Absolutely! You can integrate other embedding services like OpenAI or Hugging Face by modifying the embedding generation function accordingly.

Q: How scalable is this setup for larger datasets?

A: Azure AI Search is designed to handle large-scale datasets efficiently. Ensure your Azure plan accommodates the volume and complexity of your data. Additionally, consider optimizing batch sizes and leveraging Azure AI Search’s scalability features. See Announcing cost-effective RAG at scale with Azure AI Search

Q: What are the trade-offs between int8 and Float32 embeddings?

A: Int8 embeddings offer reduced storage and faster computation at the cost of slight precision loss, while Float32 embeddings provide higher precision. See Using Cohere Binary Embeddings in Azure AI Search and Command R Model via Azure AI Studio & Leveraging Cohere Embed V3 Compressed Embeddings with Azure AI Search for more details.

Q: I don’t have a labeled dataset with query-document pairs. What do I do?

A: Consider generating synthetic data by creating sample queries and associating them with relevant product descriptions. Alternatively, you can explore LLM-as-a-judge frameworks like RAGAS, DeepEval, Trulens, or Arize AI.

Credit: This guide utilizes the WANDS - Wayfair Annotation Dataset by Wayfair for benchmarking and evaluation. Please refer to their paper for more details.

@InProceedings{wands,

title = {WANDS: Dataset for Product Search Relevance Assessment},

author = {Chen, Yan and Liu, Shujian and Liu, Zheng and Sun, Weiyi and Baltrunas, Linas and Schroeder, Benjamin},

booktitle = {Proceedings of the 44th European Conference on Information Retrieval},

year = {2022},

numpages = {12}

}

This guide serves as a foundational step towards mastering Azure AI Search relevance evaluation. By understanding the nuances of different embedding types and encoding strategies, you can build search applications that deliver accurate and meaningful results, enhancing user satisfaction and engagement. Happy measuring!

Subscribe to my newsletter

Read articles from Farzad Sunavala directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Farzad Sunavala

Farzad Sunavala

I am a Principal Product Manager at Microsoft, leading RAG and Vector Database capabilities in Azure AI Search. My passion lies in Information Retrieval, Generative AI, and everything in between—from RAG and Embedding Models to LLMs and SLMs. Follow my journey for a deep dive into the coolest AI/ML innovations, where I demystify complex concepts and share the latest breakthroughs. Whether you're here to geek out on technology or find practical AI solutions, you've found your tribe.