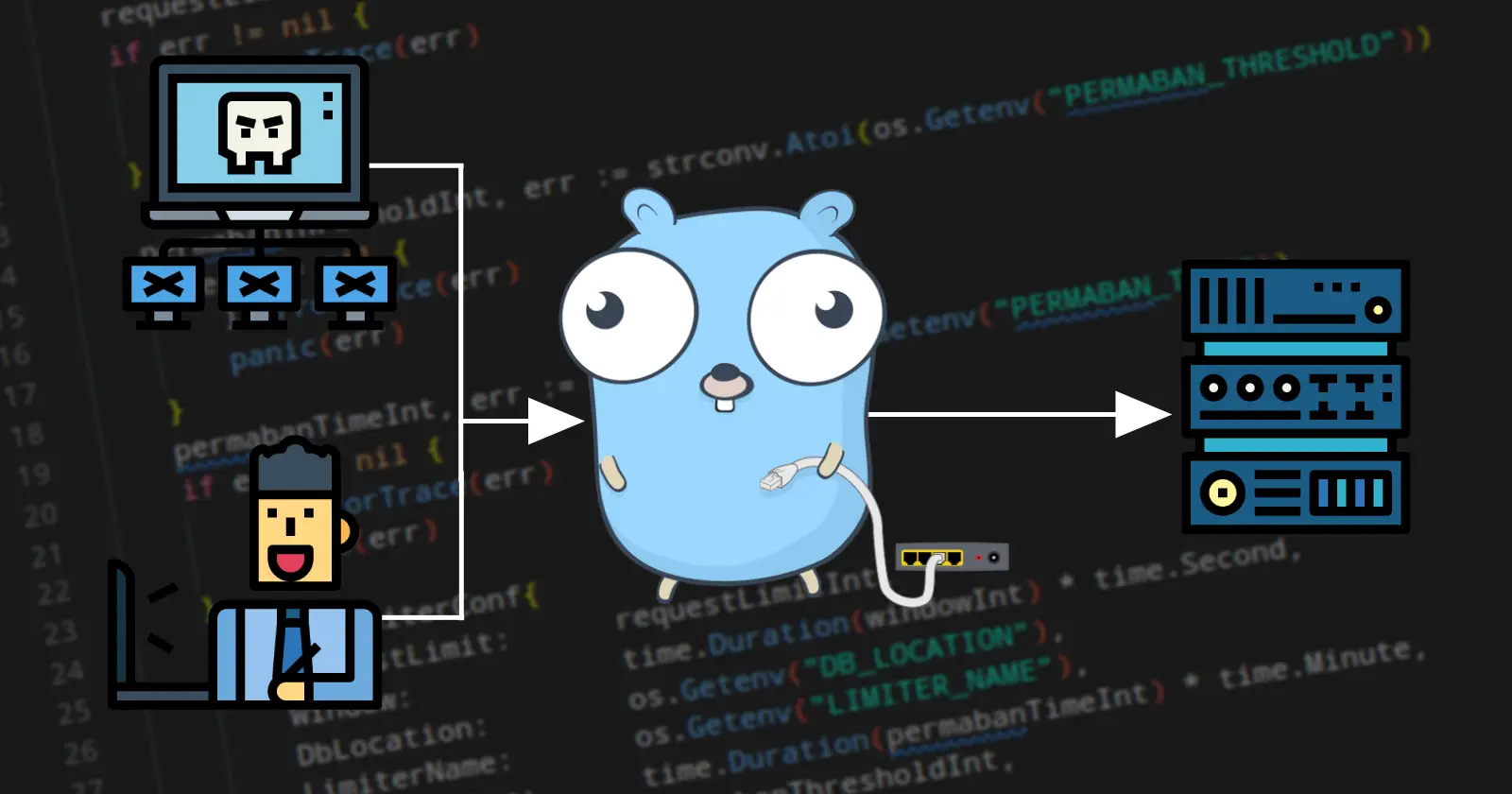

File Server with built-in DDoS protection - NetworkNanny

Dejan Gegić


Origin

Try out NetworkNanny for yourself on GitHub, or keep reading the article.

Long story short

There was a guy whose service was being pounded with 10 million requests/hour, and there was nothing he could do about it. Since Railway charges per GB of traffic, this was getting pricey.

So, I jumped in, and that's how NetworkNanny came to be.

What happened

One night, I noticed that a Discord server I was part of had suddenly gotten pretty noisy. I didn't pay it much attention at first. But over the following days, the same complaint kept popping up, and all the admins were unusually active. Apparently, their file server, where they shared the data that was pretty much the reason the Discord server existed, was under a DDoS attack!

Seeing that, I reached out to the guy responsible for keeping the File Server running. We'll call him D.M. from now on.

D.M. was in over his head and didn't know what had hit him. He isn't a bad coder by any stretch of the imagination, but a Node.js server hosted on Railway can only take so much.

The Cost of this Problem

The day I contacted him, they'd had a pretty "normal" day: 67 million requests, which meant they served 327 GB of data that day alone. Hourly peaks were around 10 million requests, which works out to roughly 50 GB, give or take 20% from hour to hour.

If the service didn't go down, then what's the issue? You see, Railway (where the file server was hosted) charges $0.10 per GB of traffic. That sounds pretty cheap at first, but it sure adds up. I won't include CPU, which costs $20 per core per month, because its usage fluctuates a lot.

Back-of-the-napkin cost calculation 💸

My back-of-the-napkin calculation ran something like this. Keep in mind, this is just a rough estimate.

327GB of data daily x $0.10 per GB = $32.70 per day.

$32.70 x 30 days = $981 per month.

That means this DDoS, even though it didn't bring the service down, was costing him around $1,000 for every month it continued.
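The napkin math above is easy to re-run. Here's a small Go sketch that plugs in the article's figures (67 million requests and 327 GB per day, $0.10 per GB, a 30-day month) and also derives the average response size to sanity-check the "50-ish GB" hourly peak. Treating 1 GB as 10^9 bytes is an assumption about how the traffic was reported.

```go
package main

import "fmt"

// Figures from the article. GB is treated as decimal (1 GB = 1e9 bytes),
// which is an assumption about how the traffic was reported.
const (
	dailyRequests    = 67e6  // requests on a "normal" day
	dailyGB          = 327.0 // GB served that day
	peakHourRequests = 10e6  // approximate hourly peak
	pricePerGB       = 0.10  // USD per GB of traffic on Railway, as quoted
	daysPerMonth     = 30
)

// egressCost returns the traffic cost in USD for gb gigabytes.
func egressCost(gb float64) float64 {
	return gb * pricePerGB
}

func main() {
	// Average response size implied by the daily totals (~4.9 KB).
	bytesPerReq := dailyGB * 1e9 / dailyRequests
	// Sanity check of the "50-ish GB" hourly peak (~48.8 GB).
	peakHourGB := peakHourRequests * bytesPerReq / 1e9

	daily := egressCost(dailyGB)
	monthly := daily * daysPerMonth

	fmt.Printf("avg response: %.0f bytes\n", bytesPerReq)
	fmt.Printf("peak hour:    %.1f GB\n", peakHourGB)
	fmt.Printf("per day:      $%.2f\n", daily)
	fmt.Printf("per month:    $%.2f\n", monthly)
}
```

The derived hourly peak lands just under 50 GB, which matches the observed numbers well enough for a rough estimate.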

Birth of the NetworkNanny 👶

With a long weekend ahead of me, absolutely no plans, and not the best weather, I now had something to occupy me.

So why use NetworkNanny and not some existing project?

Well, it's quite simple, actually: NetworkNanny has several strengths that others can't match.

Requirements ✔️

Cross-platform standalone binary

A single binary that can be pre-compiled for any OS and architecture.

This matters when you need to deploy a File Server anywhere, on any system, without installing dependencies or an interpreter. Production environments can get messy and crowded, and small tools built as niche solutions quickly become a problem when they drag in dependencies just to run.

Fast

Quick response times are mandatory. Need I say more?

Low resource footprint

No one wants to see their File Server chewing through half the available RAM and spiking the CPU.

Simple, yet robust customization

A CLI with 20 flags isn't an option, and changing the source code and recompiling is out of the question. So a config file, or even just a .env file, is the best choice.

Tech used

Go

Because of course it's Go. What else did you expect me to use?

Jokes aside, I do think it's the right tool for this job. Performance is high and the resource footprint is low, especially for a garbage-collected language. It's a joy to write, and I'm more productive in it than in other languages. All good characteristics for a weekend project that has to handle high traffic.

Fiber

I could have chosen plenty of other approaches. The Go community has developed a ton of great web frameworks, and the standard library's net/http is actually one of the best out there. Some will cry out that I should have used Echo or Chi, or maybe even Gin. I hear you, and I see where you're coming from. But Fiber, which is built on top of fasthttp, is still faster and a breeze to work with, so I'm sticking with it.

DB

The database needed TTL (time-to-live) support, and a self-cleaning one at that. A plain expiry column in a SQL table would need a script to sweep through it periodically and do the housekeeping. Beyond that, no relationships are needed here: a key-value store will do, and performance matters more.

Redis/KeyDB

The first idea most people would have is Redis, or its spiritual, multithreaded successor, KeyDB. To be honest, it's not a bad option. But there are two large downsides.

The first is that RAM, although faster, is generally in shorter supply than long-term storage.

The second is spinning up a whole separate process to run the DB, plus the network latency that comes with talking to it. Now, I hear you say, "Just use Docker." And that's exactly what I did: there's a docker-compose file for those who want to go down that route. But it adds unneeded complexity, which I'm not a fan of.

BadgerDB

Badger is an interesting project that serves as the foundation of the Dgraph database. Like Redis, it's a key-value store that lets you set a TTL on keys, but it has several important distinctions.

The first distinction is that Badger writes to disk, not memory. You'd think that makes it slower, since disk is slower than memory, right? Well, that's where the second distinction comes in.

Badger is an embedded Go package, not a standalone service. That means no Docker and no slow network calls: everything is packaged into the binary. Granted, that makes the binary a bit larger, but the compromise is worth it once you factor in the added simplicity and the speed gains. This makes Badger my preferred way of deploying NetworkNanny.

Load tests & Results

Using BadgerDB and running locally, you can expect sub-millisecond response times.

Local tests

The first set of tests was run on my machine using autocannon and pewpew, two open-source stress-testing tools. Micro-benchmarks like these are far from indicative of real-world performance, but they serve as a good measuring stick for comparing different approaches quickly and cheaply.

First and foremost, the response times are excellent: 99.9% of requests completed in under 1 ms. Of course, those numbers will only go up once real-world networking overhead is added. But even locally, I'm thrilled with the results.

| Stat | 2.5% | 50% | 97.5% | 99% | Avg | Stdev | Max |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Latency | 0 ms | 0 ms | 0 ms | 0 ms | 0.01 ms | 0.04 ms | 5 ms |

And these numbers aren't this good just because the traffic was low. On the contrary, averaging over 16K requests per second is nothing to sneeze at: that works out to roughly 60 million requests per hour, almost 6x the 10 million requests per hour we were aiming to withstand. Good enough for now.

| Stat | 1% | 2.5% | 50% | 97.5% | Avg | Stdev | Min |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Req/Sec | 15,743 | 15,743 | 16,687 | 17,247 | 16,622.8 | 364.61 | 15,737 |
| Bytes/Sec | 5.07 MB | 5.07 MB | 5.37 MB | 5.55 MB | 5.35 MB | 118 kB | 5.07 MB |
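To put the average throughput in perspective, this Go snippet converts the measured 16,622.8 requests per second from the table above into requests per hour and compares it with the roughly 10 million requests per hour the attack peaked at.

```go
package main

import "fmt"

// requestsPerHour converts a requests-per-second rate to requests per hour.
func requestsPerHour(rps float64) float64 {
	return rps * 3600
}

func main() {
	const avgRPS = 16622.8           // autocannon average from the table above
	const attackPeakPerHour = 10e6   // hourly peak seen during the attack

	perHour := requestsPerHour(avgRPS)
	fmt.Printf("%.1f million requests/hour\n", perHour/1e6)
	fmt.Printf("%.1fx the attack's hourly peak\n", perHour/attackPeakPerHour)
}
```

The result is just under 60 million requests per hour, close to 6x the attack's peak.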

And here we see that 99.9% of requests are sub-millisecond.

| Percentile (%) | Latency (ms) |
| --- | --- |
| 0.001 | 0 |
| 0.01 | 0 |
| 0.1 | 0 |
| 1 | 0 |
| 2.5 | 0 |
| 10 | 0 |
| 25 | 0 |
| 50 | 0 |
| 75 | 0 |
| 90 | 0 |
| 97.5 | 0 |
| 99 | 0 |
| 99.9 | 0 |
| 99.99 | 1 |
| 99.999 | 5 |

Real DDoS simulation

BlazeMeter

For this, I used BlazeMeter to run a simulated DDoS attack spread across 50 different agents, all in close proximity to the server (in the same city in Germany), which makes the attack sting even harder.

Server Hosting

I hosted NetworkNanny on a Hetzner CPX11 VPS (2 CPU cores, 2 GB RAM), the weakest and smallest they offer. I feel this is the right choice, as it better represents the situations in which it will realistically be deployed.

Test Results

BlazeMeter has a good visualization of the results, and it's clear that in this case the service isn't as fast as when it runs locally. This is to be expected, as network latency plays a role. Still, response times stay under 50 ms, whether or not it's serving the small text file.

Another piece of data for those who are interested: a Btop screenshot taken directly on the server. The spikes are when the file is being served, and the flat line is when the server is rejecting requests. As you can see, the window was set to a short duration, so the server starts serving files again shortly after banning. This configuration would not be used in production, but it's useful for showing how the CPU sits almost idle while rejecting requests.

In other words, apart from the overhead of actually serving files, attackers can hit the service as hard as they like, and it won't do us any real harm.

Conclusion

I did not write this blog post to preach that NetworkNanny is an all-in-one solution for every need. It does one thing, and it does it well.

If you have 5 minutes to set up a file server that needs to be performant, resource-efficient, resistant to DDoS, and easy to configure in a single config file, then this is your project.

If you want to check it out for yourself, here's the link to NetworkNanny.
