Day 36/40 - Kubernetes Logging and Monitoring

Rahul Vadakkiniyil

Kubernetes, the open-source platform for container orchestration, has become a go-to solution for managing complex applications at scale. For a DevOps engineer, logging and monitoring are two critical practices for keeping applications on Kubernetes clusters running smoothly. Proper logging and monitoring help in identifying issues, optimizing performance, and ensuring high availability.

1. Introduction to Logging and Monitoring in Kubernetes

Logging in Kubernetes refers to the practice of capturing and storing the logs generated by your applications, pods, and the Kubernetes components themselves. This helps in troubleshooting, auditing, and understanding the application's behavior.

Monitoring focuses on capturing metrics about the health, performance, and resource utilization of your application and the Kubernetes cluster. It involves real-time tracking of metrics like CPU usage, memory usage, disk I/O, network latency, and more.

Together, logging and monitoring provide a holistic view of your Kubernetes environment, making it easier to diagnose issues and optimize performance.

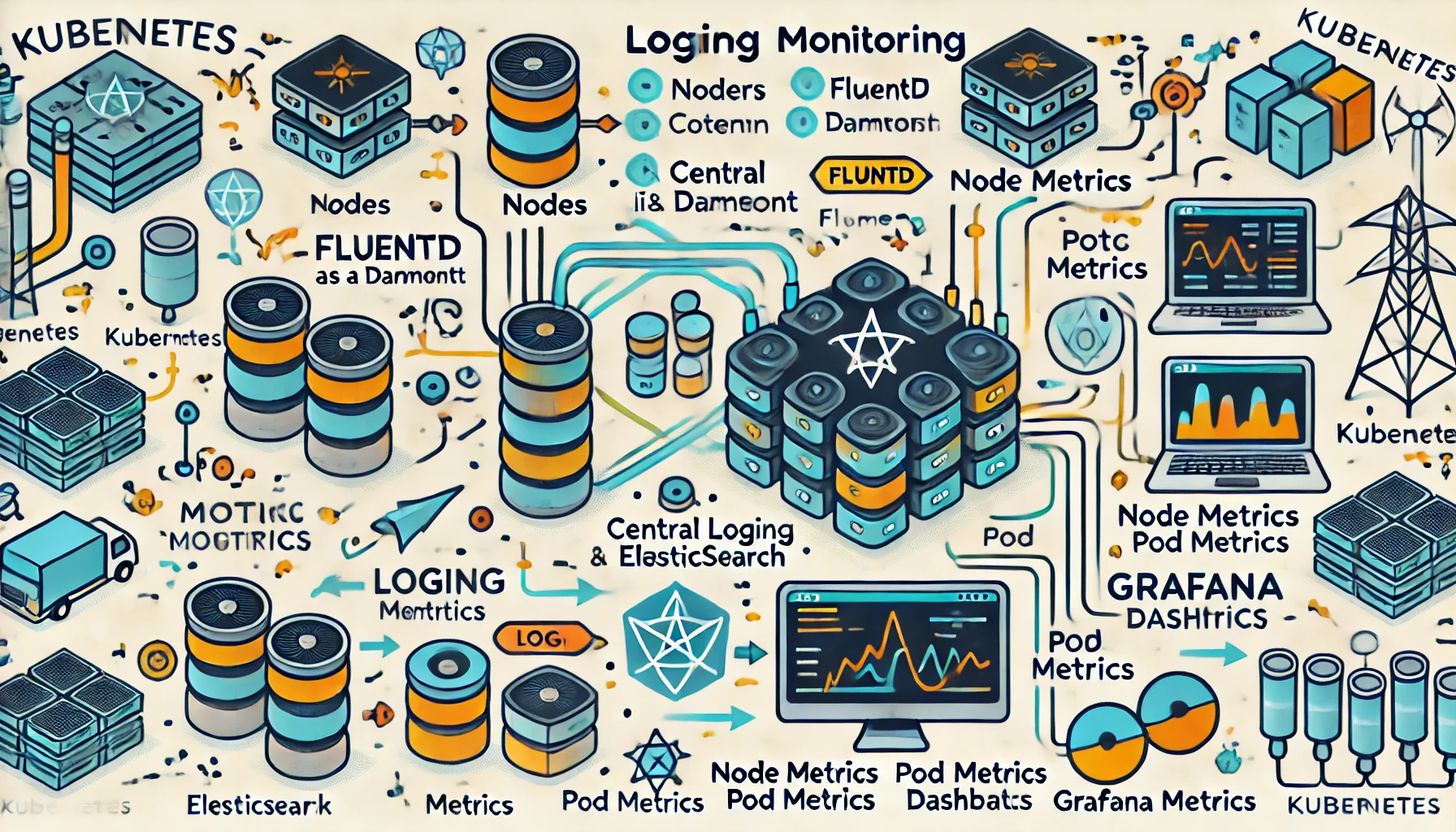

2. Kubernetes Logging Architecture

Kubernetes manages logs at different levels, and understanding the architecture is crucial for proper logging.

a. Node-Level Logging

Logs are generated at the node level by various components such as:

Kubelet: The agent that manages pods and containers on each node. Logs from Kubelet include information about pod creation, termination, and other lifecycle events.

Container Runtime: Container logs written through the container runtime (e.g., containerd or CRI-O, which the kubelet talks to via the Container Runtime Interface, CRI) provide valuable information on application behavior and performance.

System Logs: Logs from the underlying operating system and system services.

b. Pod and Container-Level Logging

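Applications running in containers write logs that can be read with kubectl logs. Kubernetes by itself doesn’t aggregate these logs. They are stored locally on the node where the pod is running. When a container restarts, the kubelet keeps the log of the previous instance (readable with kubectl logs --previous), but the logs are lost once the pod is deleted or evicted from the node, or the node itself is replaced. To avoid this, logs must be centralized using a logging solution.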
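To make the node-local storage concrete: on nodes that use a CRI runtime such as containerd, each line in a container log file typically has the form `<timestamp> <stream> <tag> <message>`. The following is a minimal sketch of parsing that format; the `parse_cri_line` helper and the sample line are hypothetical and only illustrate the layout.

```python
from typing import NamedTuple


class CriLogLine(NamedTuple):
    timestamp: str  # RFC 3339 timestamp written by the runtime
    stream: str     # "stdout" or "stderr"
    tag: str        # "F" = full line, "P" = partial line
    message: str    # the text the application wrote


def parse_cri_line(line: str) -> CriLogLine:
    """Split one CRI-format log line into its four fields."""
    # maxsplit=3 keeps any spaces inside the message intact.
    timestamp, stream, tag, message = line.rstrip("\n").split(" ", 3)
    return CriLogLine(timestamp, stream, tag, message)


# Hypothetical sample line, for illustration only.
sample = "2024-05-01T10:15:30.123456789Z stdout F GET /healthz 200"
entry = parse_cri_line(sample)
print(entry.stream, "|", entry.message)
```

Log collectors do this kind of parsing for you; the sketch only shows what they read from the node.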

c. Centralized Logging

Centralized logging involves collecting logs from all nodes and pods in the cluster and forwarding them to a log aggregation system such as the Elasticsearch, Fluentd, and Kibana (EFK) stack. This approach helps in:

Scalability: Centralized logging can handle logs from multiple nodes in large clusters.

Persistence: Logs are stored even after pods are deleted or nodes are replaced.

Searchability: A centralized log store enables easy searching and querying for troubleshooting.
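Searching a centralized store is usually easier when applications emit structured records to standard output. As a rough sketch (not something the stacks above require), a Python service could log one JSON object per line. The `JsonFormatter` class, the `build_logger` helper, and the field names are assumptions made for this example.

```python
import json
import logging
import sys
from typing import TextIO


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        })


def build_logger(name: str, stream: TextIO = sys.stdout) -> logging.Logger:
    """Return a logger that writes JSON lines to the given stream."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]   # replace handlers so repeated calls do not duplicate output
    logger.setLevel(logging.INFO)
    logger.propagate = False      # keep the root logger from printing the record again
    return logger


log = build_logger("orders")
log.info("order %s accepted", 1234)
```

Because the container runtime captures stdout, a node-level collector can pick these lines up without any application-side shipping code.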

3. Popular Tools for Kubernetes Logging

Several tools can be integrated with Kubernetes for logging purposes. Some of the most popular ones include:

a. Fluentd

Fluentd is a flexible and extensible logging tool that allows you to collect logs from multiple sources and forward them to various destinations. It integrates well with Kubernetes and is often used as part of the EFK stack.

How it works: Fluentd is typically deployed as a DaemonSet, so one collector pod runs on each node, reads the logs of all containers on that node, and forwards them to a centralized location.

Benefits: Fluentd supports various plugins for different log formats and destinations, making it highly customizable.
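A node-level collector needs to know which pod a log file belongs to. One common source of that information is the file name under `/var/log/containers/`, which usually follows the pattern `<pod>_<namespace>_<container>-<container-id>.log`. The sketch below extracts those fields; the `parse_log_filename` helper and the sample name are hypothetical, and the code assumes the node follows this naming convention.

```python
import re
from typing import Dict, Optional

# Assumed layout: <pod>_<namespace>_<container>-<64 hex chars>.log
LOG_NAME = re.compile(
    r"^(?P<pod>[^_]+)_(?P<namespace>[^_]+)_"
    r"(?P<container>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def parse_log_filename(filename: str) -> Optional[Dict[str, str]]:
    """Return pod metadata encoded in a container log file name, or None."""
    match = LOG_NAME.match(filename)
    return match.groupdict() if match else None


# Hypothetical file name, for illustration only.
sample_name = "web-7d4b9c_default_nginx-" + "a" * 64 + ".log"
meta = parse_log_filename(sample_name)
print(meta["namespace"], meta["pod"], meta["container"])
```

In practice a collector plugin would also query the Kubernetes API to add pod labels; this sketch covers only the file-name part.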

b. Elasticsearch, Fluentd, Kibana (EFK) Stack

The EFK stack is a popular choice for centralized logging in Kubernetes.

Elasticsearch: Stores logs and provides a distributed search engine.

Fluentd: Collects logs from Kubernetes and forwards them to Elasticsearch.

Kibana: Visualizes the logs and enables querying, alerting, and dashboards.

c. Loki and Promtail

Loki, developed by Grafana Labs, is a horizontally scalable log aggregation system. It is designed to work closely with Prometheus and focuses on efficiency and scalability by not indexing log content but instead indexing labels (metadata).

Promtail: An agent that runs on each node, tails the container log files, attaches labels, and sends the log lines to Loki.

Grafana: Used for querying, visualizing, and alerting on logs stored in Loki.
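To illustrate the label-based model, the sketch below builds the JSON body that Loki's HTTP push endpoint (`/loki/api/v1/push`) accepts: each stream is a set of labels plus a list of `[timestamp-in-nanoseconds, line]` pairs. The `build_loki_push` helper and the label values are hypothetical, and the example only builds the payload without sending it.

```python
import json
import time
from typing import Dict, List, Optional


def build_loki_push(labels: Dict[str, str], lines: List[str],
                    now_ns: Optional[int] = None) -> str:
    """Build a Loki push request body for one stream."""
    # Loki expects the timestamp as a string of Unix nanoseconds.
    ts = str(now_ns if now_ns is not None else time.time_ns())
    payload = {
        "streams": [
            {
                "stream": labels,                       # indexed metadata
                "values": [[ts, line] for line in lines],  # unindexed log content
            }
        ]
    }
    return json.dumps(payload)


body = build_loki_push(
    {"namespace": "default", "app": "cart"},   # hypothetical labels
    ["checkout started", "checkout finished"],
    now_ns=1700000000000000000,
)
print(body)
```

Only the small `stream` label set is indexed, which is why keeping labels low in cardinality matters for Loki.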

d. Logstash

Logstash, part of the Elastic stack, is another tool for managing and processing logs. It can be used to ingest logs, process them (e.g., filtering, enriching), and forward them to Elasticsearch or another log store.

4. Kubernetes Monitoring Overview

Monitoring a Kubernetes cluster involves tracking the health and performance of the infrastructure and applications running on it. Metrics can include CPU/memory usage, disk I/O, network latency, pod health, and application-level metrics.

Monitoring provides insight into:

Cluster Health: Understanding the resource usage and performance of nodes and pods.

Application Performance: Monitoring the application’s responsiveness, availability, and error rates.

Resource Utilization: Checking that applications and services are neither over- nor under-using the resources they request, so that requests and limits can be tuned.

5. Popular Tools for Kubernetes Monitoring

Several tools can be used for monitoring Kubernetes, and choosing the right one depends on your specific needs. Here are the most popular solutions:

a. Prometheus

Prometheus is an open-source monitoring and alerting toolkit designed specifically for reliability and scalability in dynamic environments like Kubernetes.

Metrics collection: Prometheus scrapes metrics from Kubernetes components and applications.

Alerting: It supports powerful alerting based on metrics thresholds and conditions.

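Service Discovery: Prometheus discovers scrape targets through the Kubernetes API (for example nodes, services, pods, and endpoints), and relabeling rules can filter or enrich those targets based on their labels and annotations.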
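What Prometheus scrapes is plain text served over HTTP, usually at `/metrics`. The sketch below renders a metric in the Prometheus text exposition format by hand to show its shape. The `render_metric` helper and the metric name are hypothetical, and the code skips label-value escaping; a real application would normally use a Prometheus client library instead.

```python
from typing import Dict, List, Tuple

Sample = Tuple[Dict[str, str], float]


def render_metric(name: str, help_text: str, metric_type: str,
                  samples: List[Sample]) -> str:
    """Render one metric family in the Prometheus text exposition format."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        if labels:
            # Sort labels so the output is stable between scrapes.
            pairs = ",".join(f'{key}="{val}"' for key, val in sorted(labels.items()))
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


text = render_metric(
    "http_requests_total",          # hypothetical metric
    "Total HTTP requests.",
    "counter",
    [({"method": "get", "code": "200"}, 1027),
     ({"method": "get", "code": "500"}, 3)],
)
print(text, end="")
```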

b. Grafana

Grafana is often used with Prometheus to create rich dashboards and visualizations. It allows you to build custom dashboards with graphs, gauges, and alerts, providing insights into your Kubernetes environment.

Integration with Prometheus: Grafana natively supports Prometheus as a data source.

Dashboards: Pre-built dashboards for Kubernetes are available, providing insights into cluster health, node performance, and pod metrics.

c. Kubernetes Metrics Server

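The Kubernetes Metrics Server is a lightweight aggregator that collects CPU and memory metrics from the kubelets and exposes them through the Kubernetes Metrics API. These metrics feed kubectl top and autoscalers such as the Horizontal Pod Autoscaler, but the Metrics Server keeps only recent values and doesn’t provide long-term storage, so it is not a replacement for a full monitoring system.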
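The autoscaling use can be illustrated with the replica calculation documented for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric value / target metric value). The sketch below implements only that formula; the `desired_replicas` helper is hypothetical, and it deliberately leaves out the tolerance band, stabilization windows, and min/max bounds that the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float) -> int:
    """Core HPA scaling formula, without tolerance or replica bounds."""
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    return math.ceil(current_replicas * current_value / target_value)


# 4 pods averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(4, 90, 60))
# 3 pods averaging 50% CPU against a 100% target -> scale in.
print(desired_replicas(3, 50, 100))
```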

6. Best Practices for Kubernetes Logging and Monitoring

To ensure that your Kubernetes logging and monitoring setup is effective, consider the following best practices:

a. Centralize Your Logs

Centralized logging is critical in a distributed environment like Kubernetes. Use tools like Fluentd or Promtail to collect logs from all nodes and store them in a centralized system like Elasticsearch or Loki.

b. Set Up Alerts

Proactive alerting helps in catching issues before they affect your production environment. Set up alerts for critical metrics like high CPU/memory usage, pod failures, or application errors.
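A useful alert usually fires only when a condition has held for some time, so that short spikes do not page anyone. The sketch below shows that idea in plain Python: it fires when every sample since some point has been above the threshold and that stretch is at least `for_seconds` long. The `should_fire` helper, the sample data, and the 90% threshold are assumptions for illustration; in a Prometheus setup this logic lives in alerting rules rather than application code.

```python
from typing import List, Tuple


def should_fire(samples: List[Tuple[float, float]], threshold: float,
                for_seconds: float) -> bool:
    """samples are (unix_time, value) pairs sorted by time.

    Fire when the value has stayed above the threshold continuously,
    up to the latest sample, for at least for_seconds.
    """
    breach_start = None
    for timestamp, value in samples:
        if value > threshold:
            if breach_start is None:
                breach_start = timestamp   # start of the current breach
        else:
            breach_start = None            # condition cleared, reset
    if breach_start is None:
        return False
    return samples[-1][0] - breach_start >= for_seconds


# Hypothetical CPU utilization samples taken every 60 seconds.
sustained = [(0, 0.50), (60, 0.95), (120, 0.97), (180, 0.96),
             (240, 0.99), (300, 0.98), (360, 0.97)]
spike = [(0, 0.95), (60, 0.50), (120, 0.95)]

print(should_fire(sustained, threshold=0.90, for_seconds=300))
print(should_fire(spike, threshold=0.90, for_seconds=300))
```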

c. Use Labels and Annotations

Labels and annotations in Kubernetes can be used for better organization and targeting in monitoring and logging tools. They allow you to group logs and metrics by service, namespace, or other identifiers.
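The grouping works through equality matching: a selector such as `app=cart` matches every object whose labels contain that key and value. A minimal sketch of the matching rule follows; the `matches` and `select` helpers and the pod data are hypothetical, and set-based selectors (`in`, `notin`) are not covered.

```python
from typing import Dict, List


def matches(labels: Dict[str, str], selector: Dict[str, str]) -> bool:
    """True if every key/value in the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(pods: List[dict], selector: Dict[str, str]) -> List[str]:
    """Return the names of pods whose labels match the selector."""
    return [pod["name"] for pod in pods if matches(pod["labels"], selector)]


# Hypothetical pods, for illustration only.
pods = [
    {"name": "cart-1", "labels": {"app": "cart", "tier": "backend"}},
    {"name": "cart-2", "labels": {"app": "cart", "tier": "backend"}},
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
]

print(select(pods, {"app": "cart"}))
print(select(pods, {"tier": "backend", "app": "web"}))
```

The same matching idea is what lets a log query or a dashboard panel be scoped to one service or namespace.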

d. Monitor Resource Utilization

Ensure that you’re tracking resource utilization at both the node and pod levels. This helps in identifying bottlenecks and preventing resource exhaustion in your cluster.
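Utilization is most meaningful relative to what a pod requested. Kubernetes expresses resources as quantities such as `250m` (0.25 CPU cores) or `128Mi` (128 × 2^20 bytes). The sketch below parses a common subset of those suffixes and computes usage as a fraction of the request. The `parse_quantity` and `utilization` helpers are hypothetical, the sample values are made up, and suffixes outside the listed subset are not handled.

```python
from decimal import Decimal

# A subset of Kubernetes quantity suffixes; larger units are omitted.
SUFFIXES = {
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"),
    "k": Decimal(10) ** 3, "M": Decimal(10) ** 6, "G": Decimal(10) ** 9,
    "Ki": Decimal(2) ** 10, "Mi": Decimal(2) ** 20, "Gi": Decimal(2) ** 30,
}


def parse_quantity(quantity: str) -> Decimal:
    """Convert a quantity like '250m' or '128Mi' to a base-unit number."""
    # Check two-letter suffixes first so 'Mi' is not mistaken for 'M' or 'm'.
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if quantity.endswith(suffix):
            return Decimal(quantity[: -len(suffix)]) * SUFFIXES[suffix]
    return Decimal(quantity)


def utilization(usage: str, request: str) -> float:
    """Usage as a fraction of the requested amount."""
    return float(parse_quantity(usage) / parse_quantity(request))


# Hypothetical usage and request values for one container.
print(utilization("200m", "250m"))    # CPU
print(utilization("96Mi", "128Mi"))   # memory
```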

e. Retain Logs and Metrics

Determine an appropriate retention policy for your logs and metrics. Short retention periods can result in losing critical information for troubleshooting, while long retention periods can increase storage costs.
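The storage side of that trade-off is simple arithmetic: retained volume ≈ daily volume × retention days × number of stored copies. The sketch below is a rough estimate only; the `retained_gib` helper and the figures are hypothetical, and it ignores compression and index overhead.

```python
def retained_gib(daily_gib: float, retention_days: int, copies: int = 1) -> float:
    """Estimate stored volume in GiB for a given retention policy."""
    return daily_gib * retention_days * copies


# Hypothetical cluster producing 20 GiB of logs per day, stored in 2 copies.
for days in (7, 30, 90):
    print(days, "days ->", retained_gib(20, days, copies=2), "GiB")
```

Running the numbers for a few candidate periods makes it easier to pick a retention window that fits both the troubleshooting needs and the storage budget.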

Conclusion

Logging and monitoring in Kubernetes are essential for managing applications and infrastructure at scale. By setting up a robust logging system (e.g., using the EFK stack or Loki) and a powerful monitoring solution (e.g., Prometheus and Grafana), you can ensure the reliability, performance, and security of your Kubernetes clusters.

For a DevOps engineer, following best practices for centralized logging, resource utilization monitoring, and alerting makes dynamic, containerized environments much easier to manage efficiently.

Reference

https://www.youtube.com/watch?v=cNPyajLASms&list=PLl4APkPHzsUUOkOv3i62UidrLmSB8DcGC&index=37

https://kubernetes.io/docs/tasks/debug/debug-cluster/resource-metrics-pipeline/
