Kubernetes 101: Part 5

Md Shahriyar Al Mustakim Mitul

Check out the concepts of switching, routing, default gateway, DNS server, and CoreDNS.

Network Namespaces

If the host is a house, the namespaces are its rooms.

As the parent, you can see into every room.

Assume a container (the blue square) is kept inside a namespace (the cover around the blue square). If you list the running processes from inside the container, you see only one process: the container’s own.

But if you list the processes from the host (as the parent), you see every process running on the host, including the one inside the container.

The host has its own network interfaces and routing table.

We don’t want the container to know about them, so we keep it inside a network namespace, where it has its own routing table and network interfaces.

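How do we create network namespaces?

We use ip netns add <networking_namespace_name>

To see the network interfaces of the host, use ip link

To see the network interfaces inside a namespace, run the same command in that namespace: ip netns exec <networking_namespace_name> ip link, or the shorter ip -n <networking_namespace_name> link

To see the ARP table of the host, use arp

You can also check the ARP table of a network namespace by running arp inside it with ip netns exec

The commands are the same for the host and for a network namespace; only the namespace prefix differs.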

How do we connect two network namespaces? We use a virtual Ethernet pair, often called a pipe.

First, create the virtual Ethernet pair, with one interface for each end.

Then attach each end to its respective namespace.

Next, assign an IP address to the interface in each namespace and bring the interfaces up.

Now the namespaces can ping each other.

If you check the ARP table in each namespace, you will see that each one has learned its neighbour.

The host’s ARP table, however, has no entry for them.
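The steps above can be sketched as a small Python helper that only prints the `ip` commands rather than running them (running them needs root). The namespace names red and blue follow the article; the addresses 192.168.15.1/24 and 192.168.15.2/24 and the interface naming scheme veth-<namespace> are assumptions made for illustration.

```python
def veth_pair_commands(ns_a, ip_a, ns_b, ip_b):
    """Return the `ip` commands that join two namespaces with a veth pair."""
    if_a, if_b = f"veth-{ns_a}", f"veth-{ns_b}"
    return [
        f"ip netns add {ns_a}",
        f"ip netns add {ns_b}",
        # Create both ends of the virtual cable in one step.
        f"ip link add {if_a} type veth peer name {if_b}",
        # Move each end into its namespace.
        f"ip link set {if_a} netns {ns_a}",
        f"ip link set {if_b} netns {ns_b}",
        # Give each end an address (with a netmask) and bring it up.
        f"ip -n {ns_a} addr add {ip_a} dev {if_a}",
        f"ip -n {ns_b} addr add {ip_b} dev {if_b}",
        f"ip -n {ns_a} link set {if_a} up",
        f"ip -n {ns_b} link set {if_b} up",
        # Check connectivity from the first namespace to the second.
        f"ip netns exec {ns_a} ping -c 1 {ip_b.split('/')[0]}",
    ]


for cmd in veth_pair_commands("red", "192.168.15.1/24", "blue", "192.168.15.2/24"):
    print(cmd)
```

Printing the commands first makes it easy to review them before pasting them into a root shell on a test machine.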

We connected two network namespaces. But what if there are many of them?

In that case we need a virtual network, and for that we need a virtual switch.

But how do we create a virtual switch? Linux bridge and Open vSwitch (OVS) are two options.

Let’s use Linux Bridge

First we add a bridge, which shows up as a new interface on the host.

The host sees it as just another interface.

So it acts as an interface for the host and as a switch for the namespaces. Let’s connect the network namespaces to the switch (v-net-0).

First, delete the old direct connection between the namespaces.

Then create a new virtual Ethernet pair whose other end is meant for the bridge.

Now attach one end to the namespace and the other end to the bridge.

Now the red network namespace is connected to the bridge!

Follow the same steps for the other network namespaces.

Then add IP addresses to the interfaces inside the network namespaces and bring them up.

Follow the same process for the orange and ash network namespaces. Once done,

they can all communicate with each other through the bridge.

Can we reach any namespace from our host? No!

Because the host is not connected to that network. How do we connect it? Since the bridge is a network interface on the host (and a switch for the namespaces), we just need to assign an IP address to it.

Here v-net-0 has been assigned the IP 192.168.15.5. Now the host can reach any of the network namespaces via v-net-0.
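As a rough sketch of the bridge setup described above, the helper below builds the list of `ip` commands without executing them. The bridge name v-net-0 and its address 192.168.15.5 come from the article; the /24 netmask, the namespace addresses, and the veth-<namespace>-br naming of the bridge-side interfaces are assumptions.

```python
def bridge_commands(bridge, bridge_ip, namespaces):
    """Return `ip` commands that attach each namespace to a Linux bridge.

    `namespaces` maps a namespace name to the address of its interface.
    """
    cmds = [
        f"ip link add {bridge} type bridge",
        f"ip link set dev {bridge} up",
    ]
    for ns, addr in namespaces.items():
        inner, outer = f"veth-{ns}", f"veth-{ns}-br"
        cmds += [
            f"ip link add {inner} type veth peer name {outer}",
            f"ip link set {inner} netns {ns}",       # one end into the namespace
            f"ip link set {outer} master {bridge}",  # other end onto the bridge
            f"ip -n {ns} addr add {addr} dev {inner}",
            f"ip -n {ns} link set {inner} up",
            f"ip link set {outer} up",
        ]
    # Giving the bridge an address lets the host itself reach the namespaces.
    cmds.append(f"ip addr add {bridge_ip} dev {bridge}")
    return cmds


plan = bridge_commands(
    "v-net-0",
    "192.168.15.5/24",
    {"red": "192.168.15.1/24", "blue": "192.168.15.2/24"},
)
print("\n".join(plan))
```

The same function covers any number of namespaces, which is the point of using a bridge instead of one veth pair per pair of namespaces.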

What if the blue network namespace wants to ping another host on the LAN (192.168.1.3)?

It can’t: the namespace’s routing table has no route to that network and no gateway (0.0.0.0) to send the packet through.

We need a gateway that connects the bridge network to the outside network.

Once we add a route that connects the bridge network to the LAN through v-net-0,

a ping from the blue network namespace to the outside host (192.168.1.3) goes out, but no response comes back.

Why? Think of a home network that contacts an external device through a router. The home network uses private addresses that the destination does not know, so the destination cannot send a reply back.

To solve this, we need NAT enabled on the host, which acts as the gateway. The host then sends the messages to the LAN on behalf of the network namespace, under its own address, using a rule in its iptables.

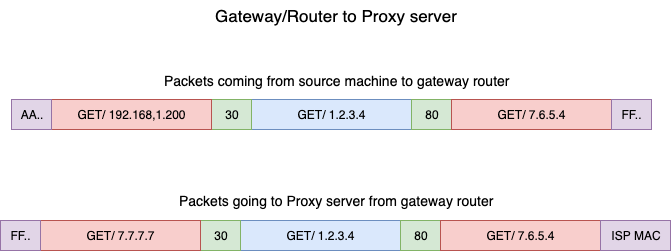

Add a new rule to the POSTROUTING chain of the iptables NAT table that replaces the source address of every packet coming from the source network 192.168.15.0 with the host’s own IP address.

A data packet carries, among other things, a source IP and a destination IP. The NAT gateway rewrites the source to its own IP.

Now anyone outside the host who receives the packet will think it came from the host rather than from the network namespace (it actually travelled from blue to v-net-0 and then through the NAT gateway).

Now, if we ping the outside device (192.168.1.3) from the blue network namespace, we get a response.
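To make the source rewrite concrete, here is a toy simulation in Python. It is not how iptables is implemented; it only mimics the effect of a masquerade rule on one packet. The network 192.168.15.0/24 and the destination 192.168.1.3 come from the article, while the blue namespace address 192.168.15.2 and the host address 192.168.1.2 are assumptions.

```python
import ipaddress
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


def masquerade(packet, private_net, host_ip):
    """Rewrite the source of packets that leave `private_net`.

    Mimics the effect of a rule such as:
    iptables -t nat -A POSTROUTING -s 192.168.15.0/24 -j MASQUERADE
    """
    if ipaddress.ip_address(packet.src) in ipaddress.ip_network(private_net):
        return replace(packet, src=host_ip)
    return packet


outgoing = Packet(src="192.168.15.2", dst="192.168.1.3")
print(masquerade(outgoing, "192.168.15.0/24", "192.168.1.2"))
```

The receiver only ever sees the host address as the source, which is why its reply can find its way back.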

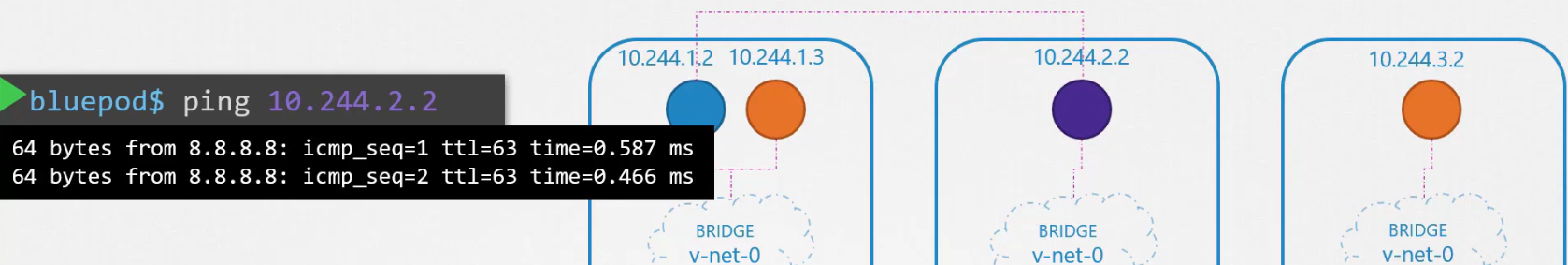

Now assume that the LAN is connected to the internet and we want to reach a server on the internet (8.8.8.8) from a network namespace.

It can’t get there. The namespace’s routing table only has a route to the LAN (192.168.1.0) through v-net-0 (192.168.15.5), and nothing for any other network.

To reach the internet, we need a default gateway: a default route in the namespace that sends everything else to the host.

Now, if we ping from the blue network namespace, we can reach the outside server (8.8.8.8) via the default gateway.

What if an outside server wants to reach the blue network namespace?

It can’t. Why? Again, the outside server or host does not know the private IP of the blue network namespace.

To solve this, we can forward any traffic that arrives on port 80 of the host to port 80 of the blue network namespace (passing the data along without letting the outside source access the private network directly).

Now the outside device can reach the blue network namespace using this technique.

Note: While testing network namespaces, if you can’t ping one namespace from another, make sure you set the netmask when assigning the IP address, e.g. 192.168.1.10/24:

ip -n red addr add 192.168.1.10/24 dev veth-red

Another thing to check is the firewalld/iptables rules. Either add iptables rules that allow traffic from one namespace to another, or disable iptables altogether (only in a learning environment).
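A quick way to see why the netmask matters is Python’s `ipaddress` module. Like `ip addr add`, it treats an address written without a prefix length as a /32, a network that contains only that one address. The neighbour address 192.168.1.11 is an assumption for illustration.

```python
import ipaddress


def same_subnet(local, remote):
    """True if `remote` is on the network of an interface configured as `local`."""
    return ipaddress.ip_address(remote) in ipaddress.ip_interface(local).network


# With the /24 netmask the neighbour is on the same network.
print(same_subnet("192.168.1.10/24", "192.168.1.11"))
# Without a netmask the interface is a /32 and has no neighbours at all.
print(same_subnet("192.168.1.10", "192.168.1.11"))
```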

Docker Networking

Assume we have a host with Docker installed and a network interface (eth0) with the IP 192.168.1.10.

Let’s run a container on the none network. This way, it cannot reach the outside world.

You can also run a container on the host network.

Here the nginx container listens on port 80 of the host, so anyone connecting to port 80 of the host reaches the container.

Another option is the bridge network.

With it, every container gets its own private IP address.

Bridge network in docker

When Docker is installed, a default bridge network is created.

Docker calls this network bridge; on the host it appears as the docker0 interface. The interface is down for now. It is also assigned an IP address on the host.

Whenever a container is created, Docker creates a network namespace for it. You can list the namespaces using ip netns

You can see the namespace associated with each container in the output of docker inspect <container_id>

But how does Docker connect the container (kept inside a network namespace) to the bridge network?

If you list the links, you can see one interface attached to the bridge (vethbb1c….) and its peer inside the container’s network namespace (eth0@if8)

The same procedure is followed every time a new container is created: Docker creates a namespace for the container, creates a pair of interfaces, and attaches one end to the bridge and the other end to the container.

Now assume you run a container that listens on port 80. Only the host can access it.

From outside the host, you can’t reach it.

How do we solve this? With port mapping.

Now anyone connecting to port 8080 on the host is forwarded to port 80 on the container.

This is how Docker connects port 8080 to port 80.

You can check the rule in iptables.
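Port mapping boils down to a destination rewrite: traffic addressed to the host on one port is redirected to a container address and port. The toy lookup below illustrates the idea for a mapping such as the one created by `docker run -p 8080:80 nginx`. The host IP 192.168.1.10 is from the article; the container IP 172.17.0.3 is an assumption.

```python
# (host IP, host port) -> (container IP, container port); values are illustrative.
PORT_MAP = {("192.168.1.10", 8080): ("172.17.0.3", 80)}


def dnat(dst_ip, dst_port, port_map=PORT_MAP):
    """Return the (ip, port) a packet is forwarded to, or the original pair."""
    return port_map.get((dst_ip, dst_port), (dst_ip, dst_port))


print(dnat("192.168.1.10", 8080))  # forwarded to the container
print(dnat("192.168.1.10", 22))    # no mapping, left untouched
```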

Container Network Interface (CNI)

All of the networking problems we have seen so far were solved in almost the same way. So it makes sense to take a single standard approach and put all of these steps into one program, which we call BRIDGE. It is basically a script.

It helps us connect a container to a bridge network.

Assume that you want to attach the container (2e34….) to this network namespace (/var/run/netns/2e34…). BRIDGE takes care of that and connects it.

So the container runtime (CRT) does not have to do it itself.

When rkt or Kubernetes creates a container, it calls the same program to connect the container’s namespace to the network.

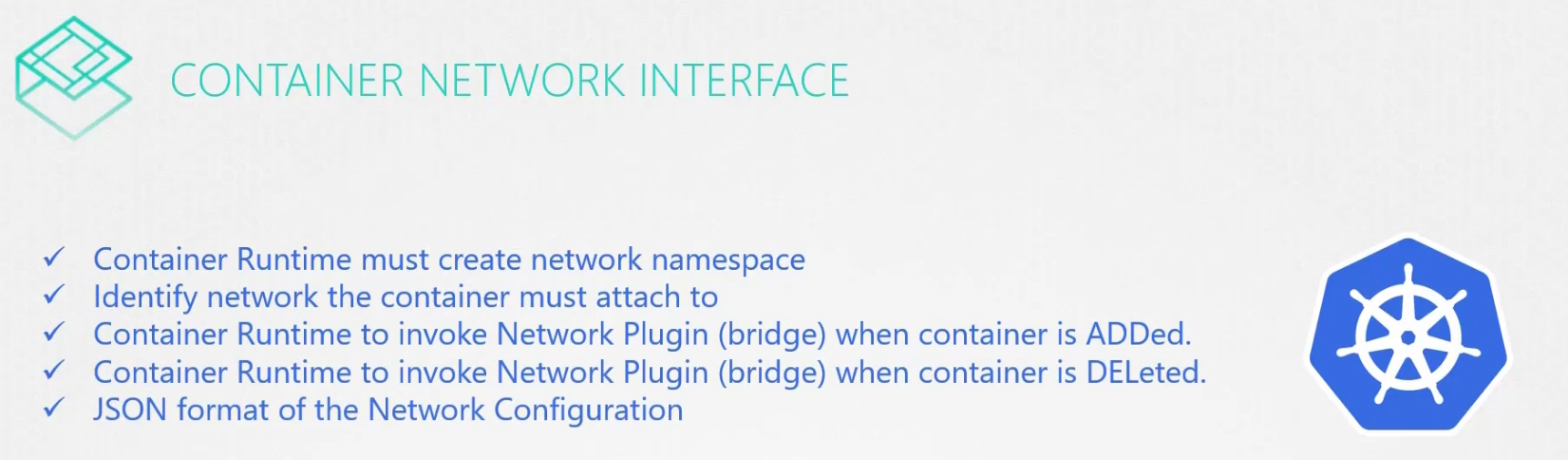

If you want to write your own BRIDGE-like program or plugin, you need to follow a standard called CNI.

These are the rules set by the CNI standard:

CNI comes with supported plugins such as BRIDGE, VLAN, and IPVLAN.

It also comes with IPAM plugins such as DHCP and host-local, and there are third-party plugins such as flannel, cilium, and VMware.

So any container runtime that implements CNI can work with any of these plugins. The exception is Docker.

Docker has its own network model (CNM), so you can’t use CNI plugins with Docker directly.

But there is a workaround: create the container on the none network and then add it to a network using BRIDGE. Since BRIDGE is one of the plugins mentioned above, you end up using CNI with Docker.

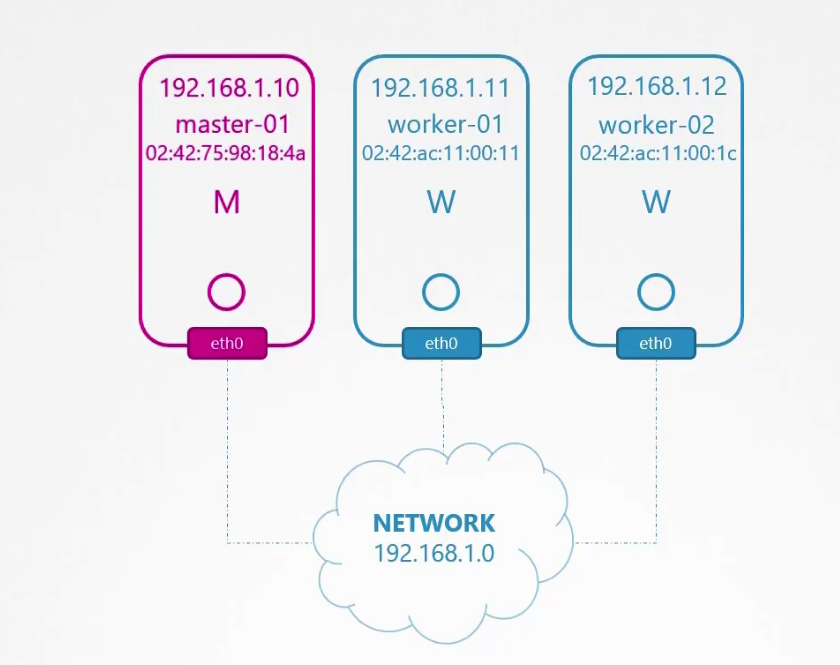

Cluster Networking

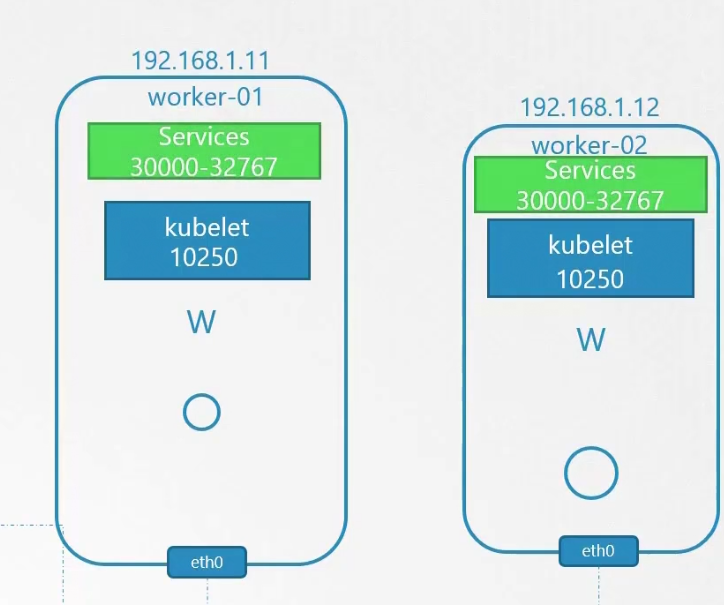

Within a cluster, we have a master node and worker nodes. Each node runs pods.

The whole cluster is connected to a network, which assigns an IP address to each node.

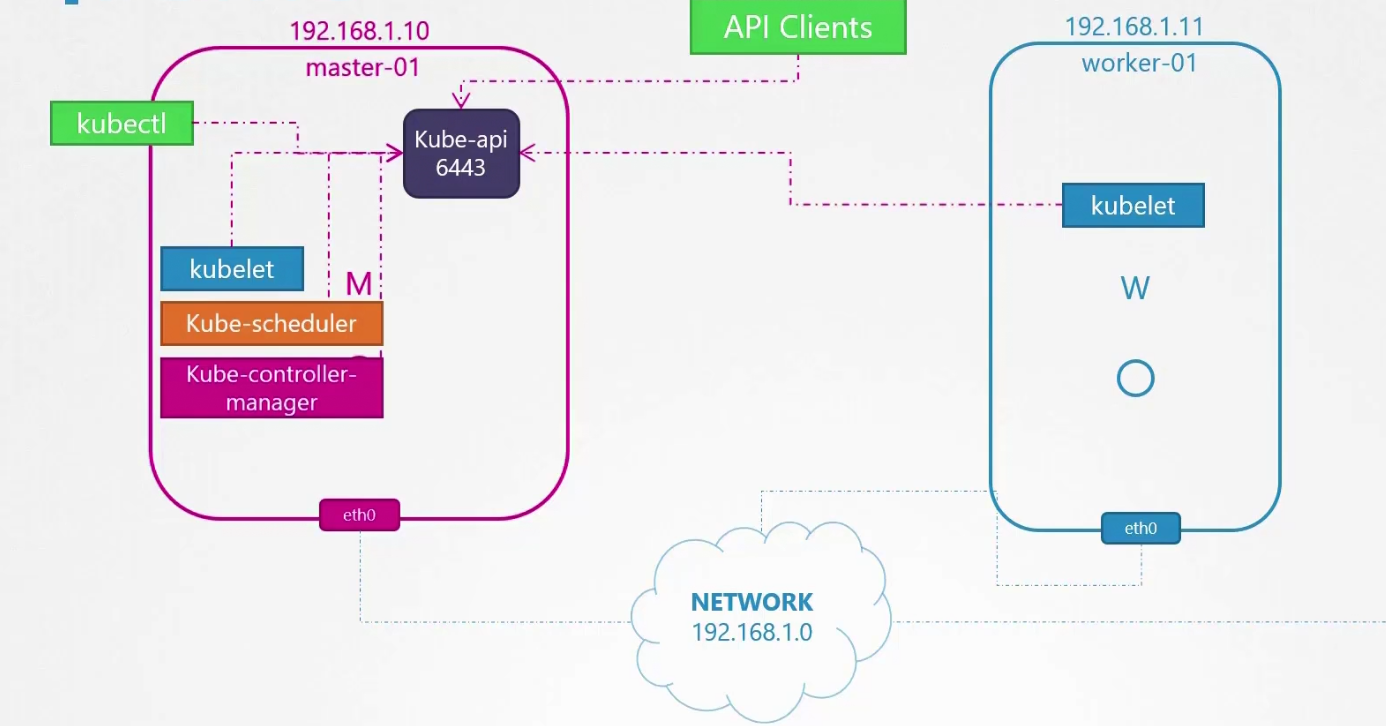

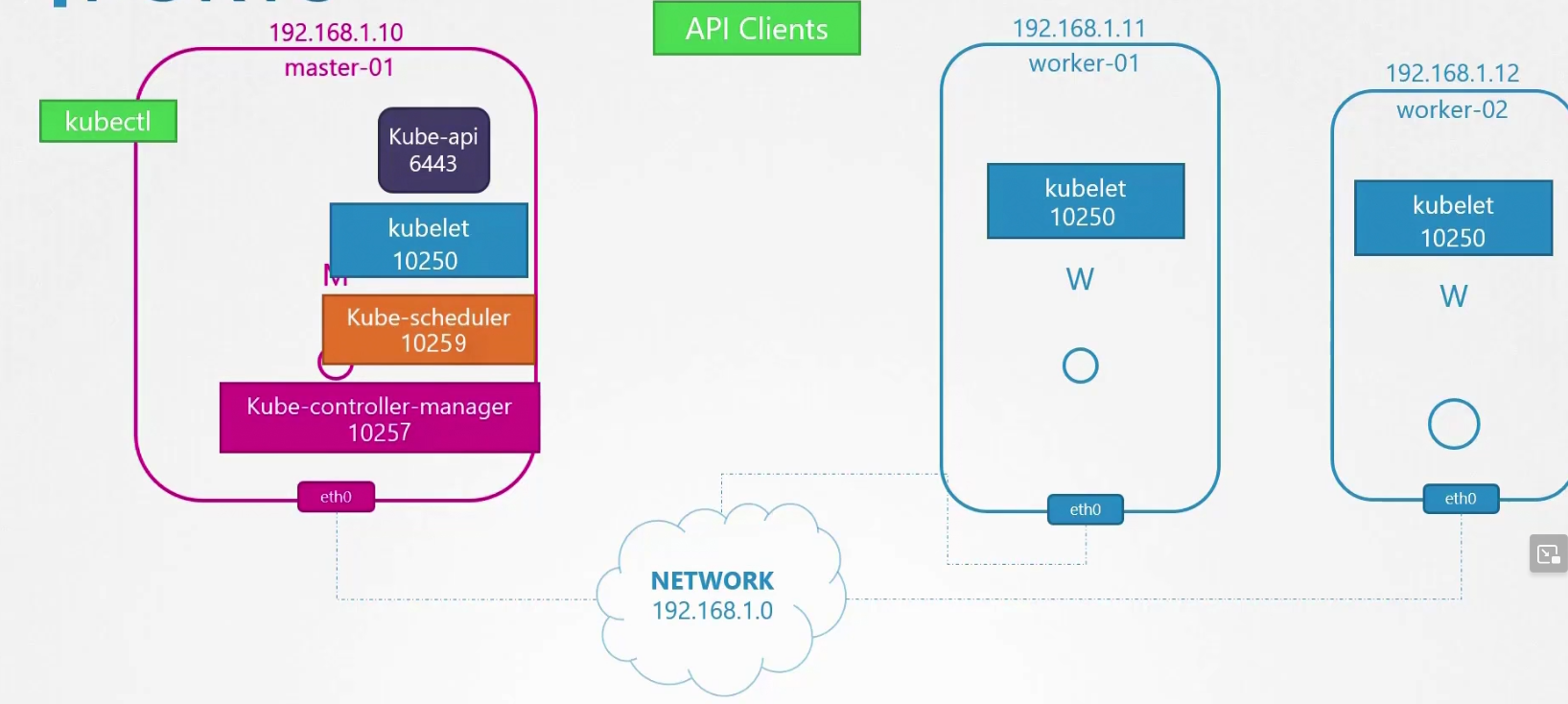

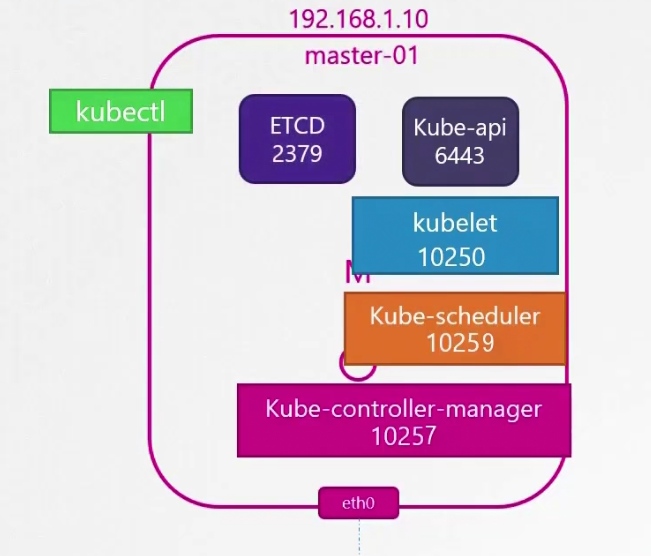

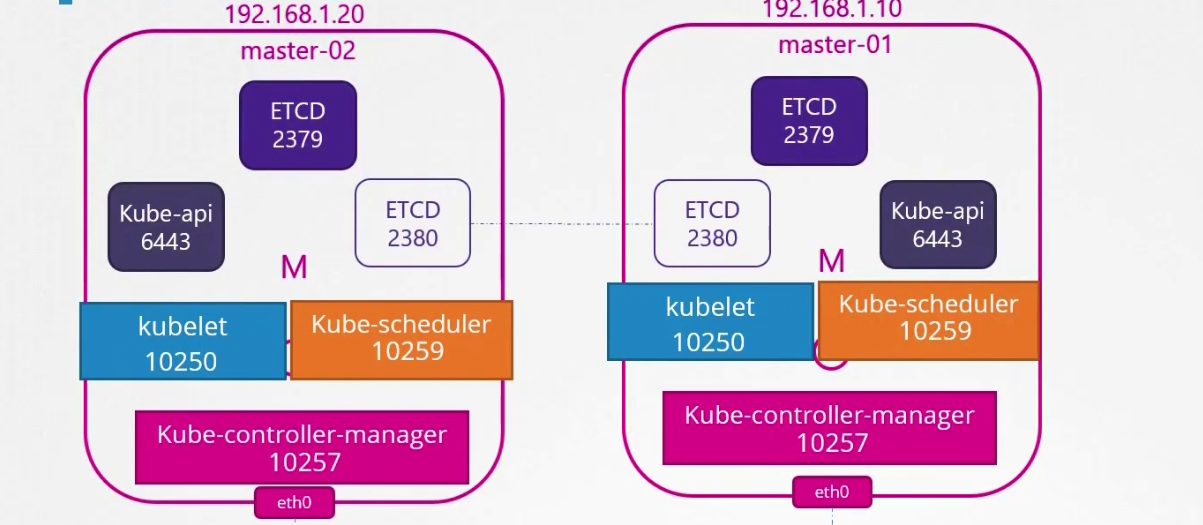

This is typically what the master node and a worker node look like. The master node runs kube-api, kube-scheduler, kube-controller-manager, and possibly kubelet. Other nodes, external users, and agents contact kube-api to get work done.

The port for kube-api is 6443, for kubelet it’s 10250, for kube-scheduler it’s 10259, and for kube-controller-manager it’s 10257.

The worker nodes expose services for external access on ports in the range 30000-32767.

The ETCD server on the master node listens on port 2379.

If we have multiple master nodes, port 2380 must also be open so that the ETCD instances on the masters can communicate with each other.

In the upcoming sections, we will work with network addons, which includes installing a network plugin in the cluster. While we have used weave-net as an example, please bear in mind that you can use any of the plugins described here:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

In the CKA exam, for a question that requires you to deploy a network addon, unless specifically directed, you may use any of the solutions described in the link above.

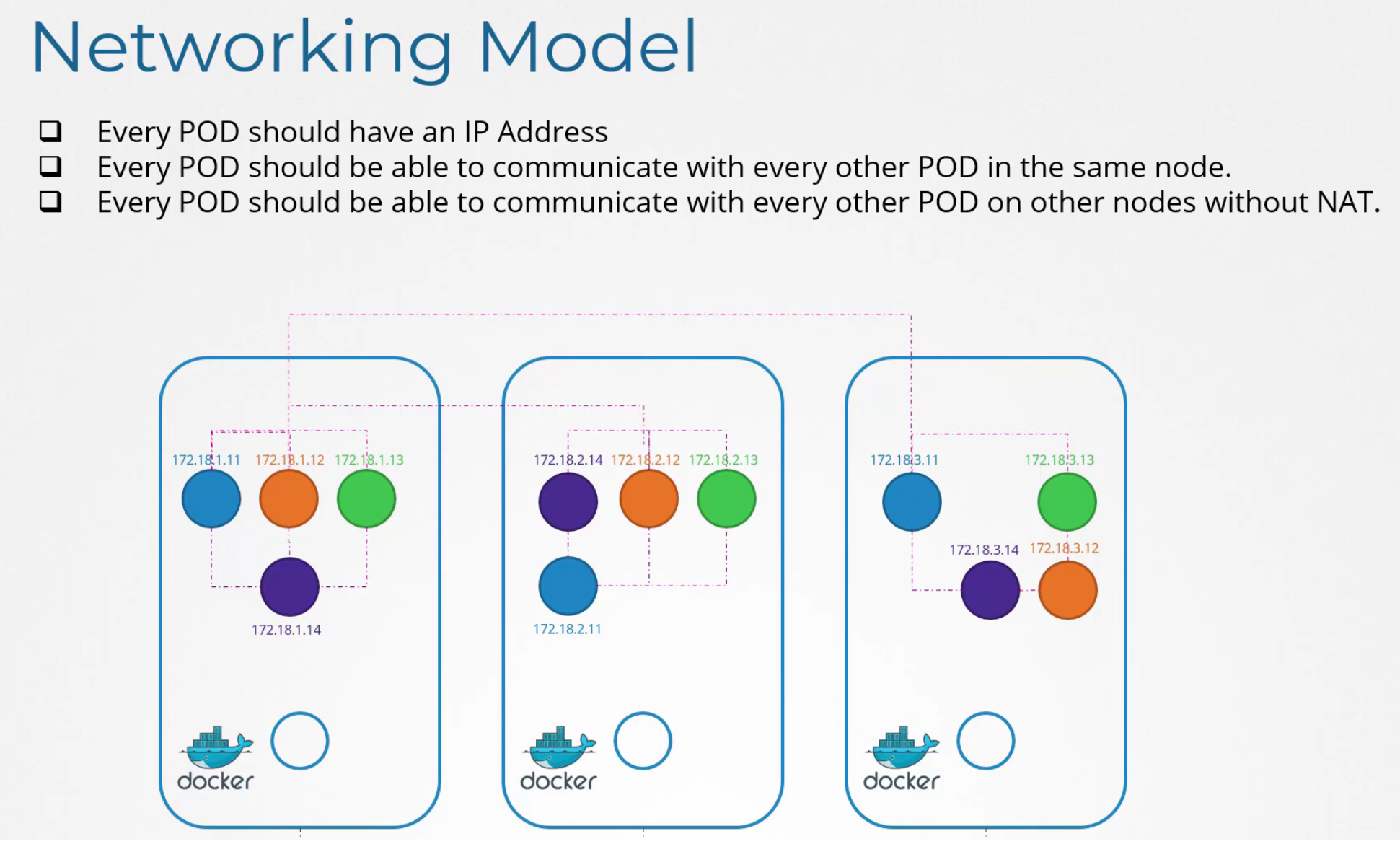

Pod Networking

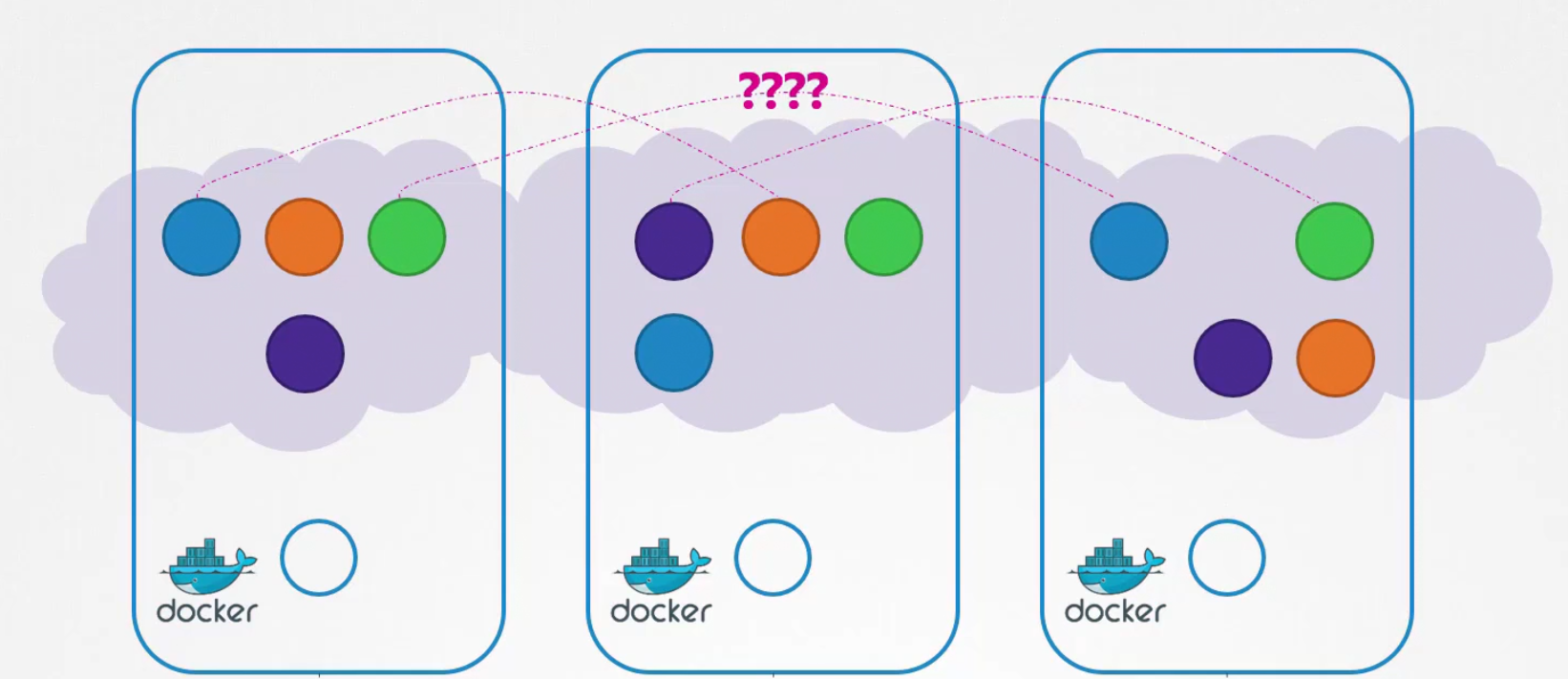

There are pods on every node, and every pod should be able to reach every other pod.

Kubernetes does not solve this by itself; it expects an external networking solution to take care of it.

But the solution must follow these rules:

There are ready-made solutions like these:

But how do they do it? Let’s see

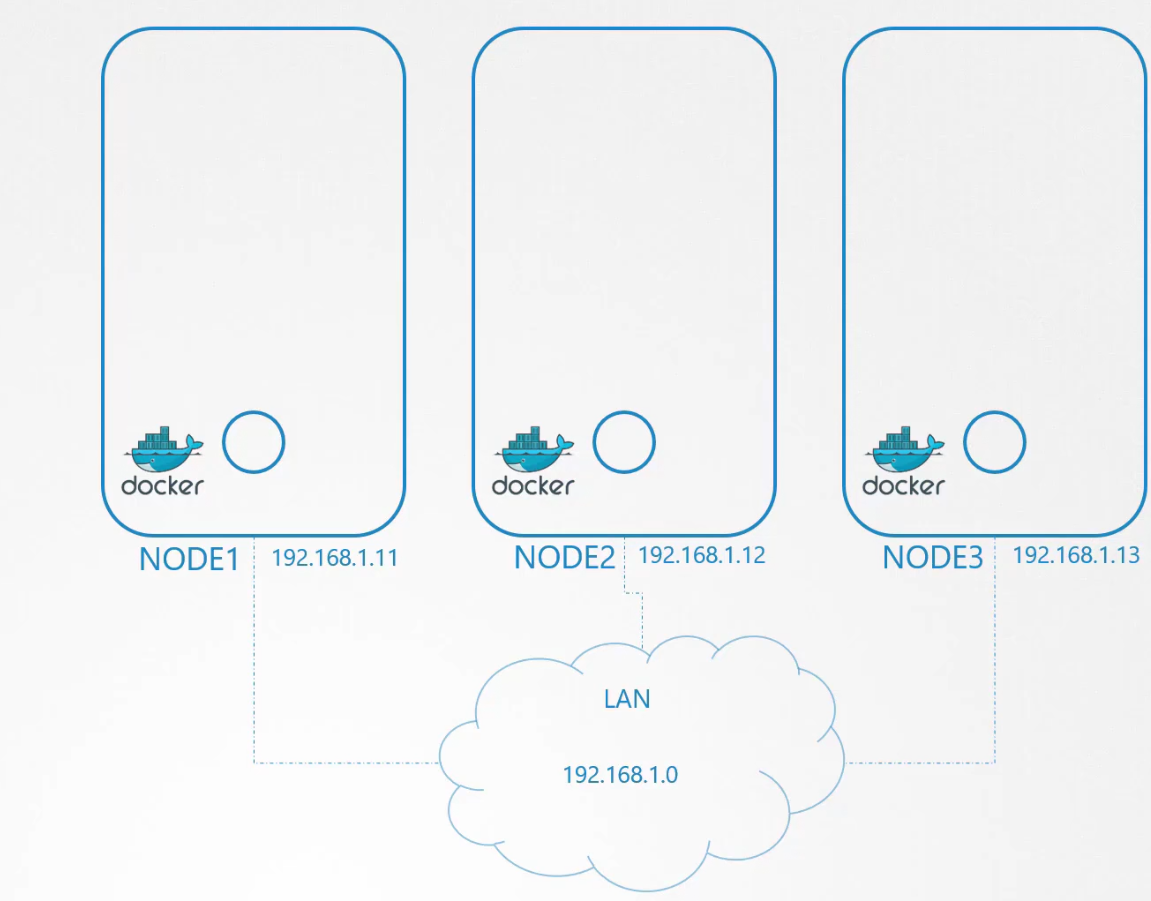

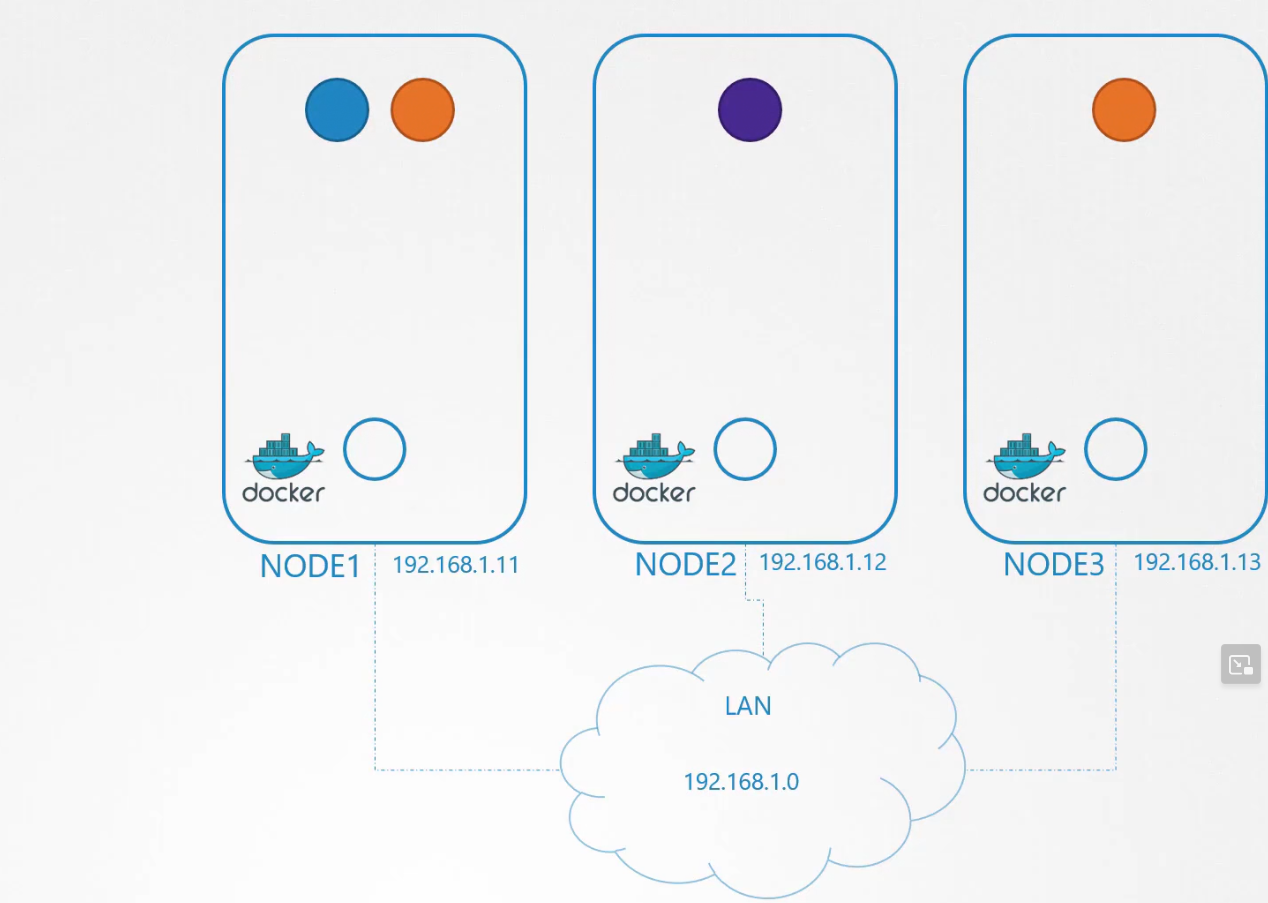

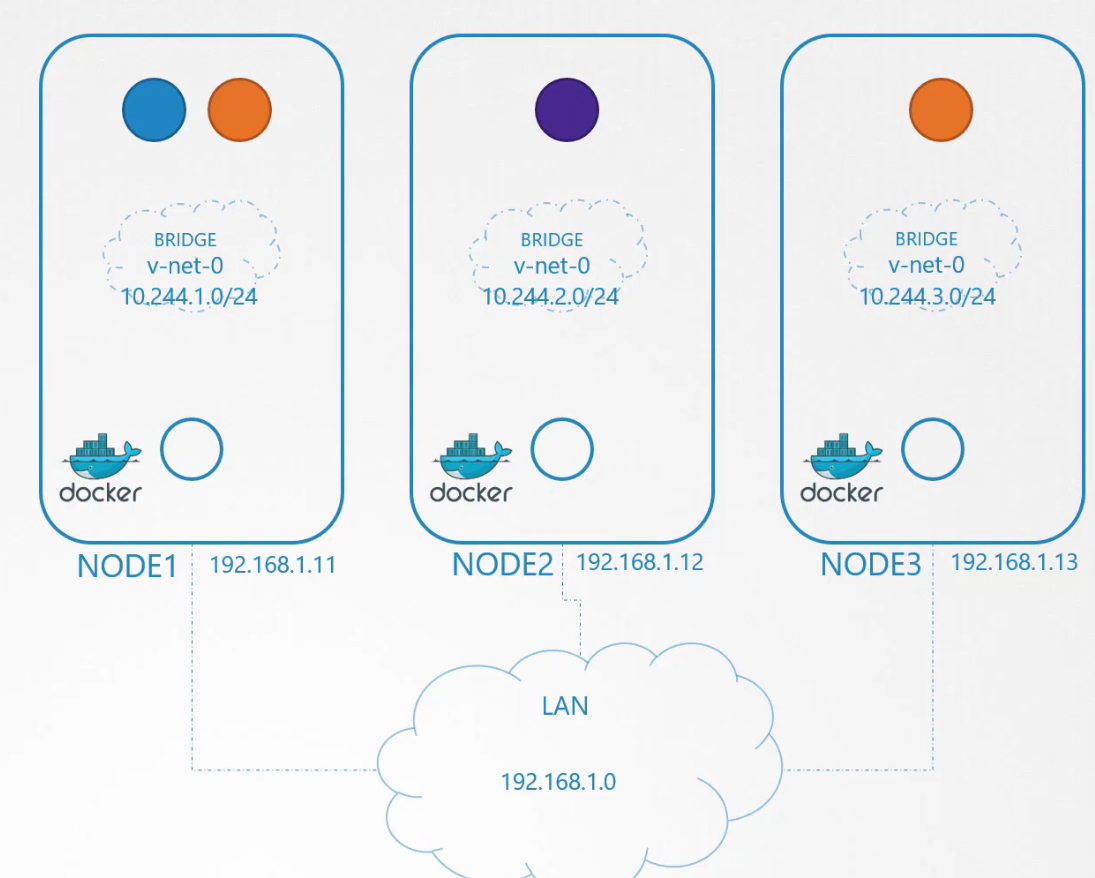

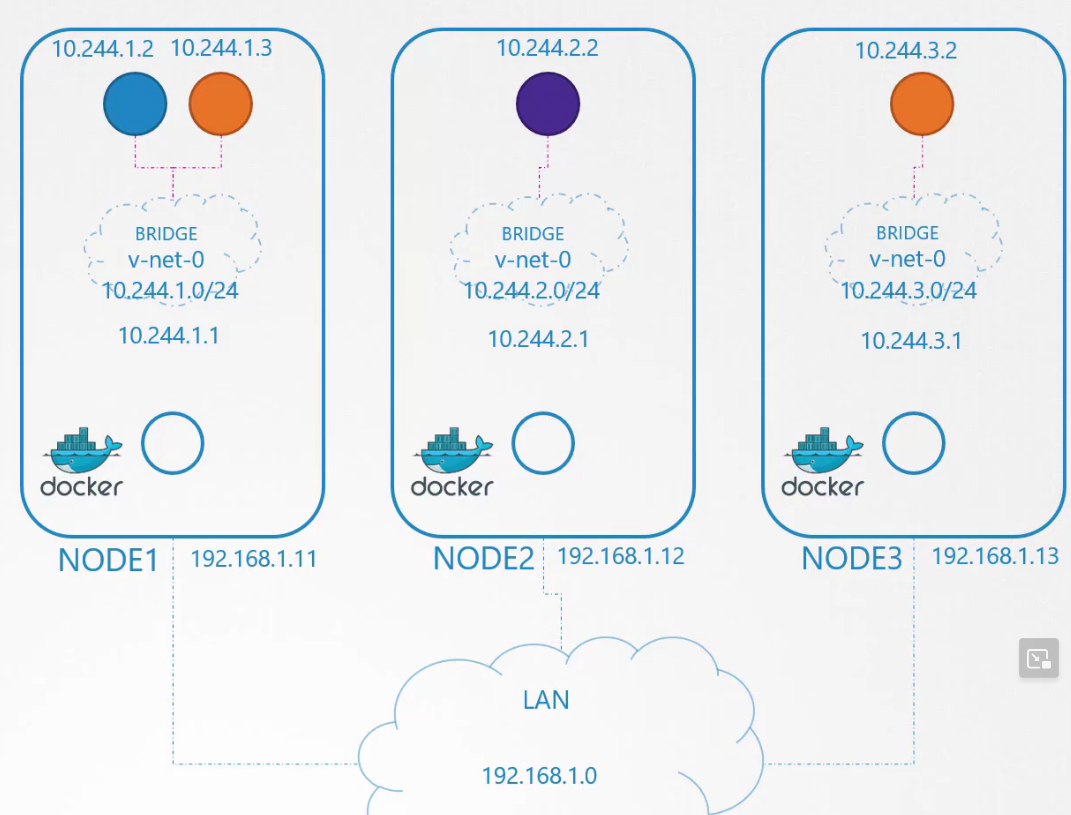

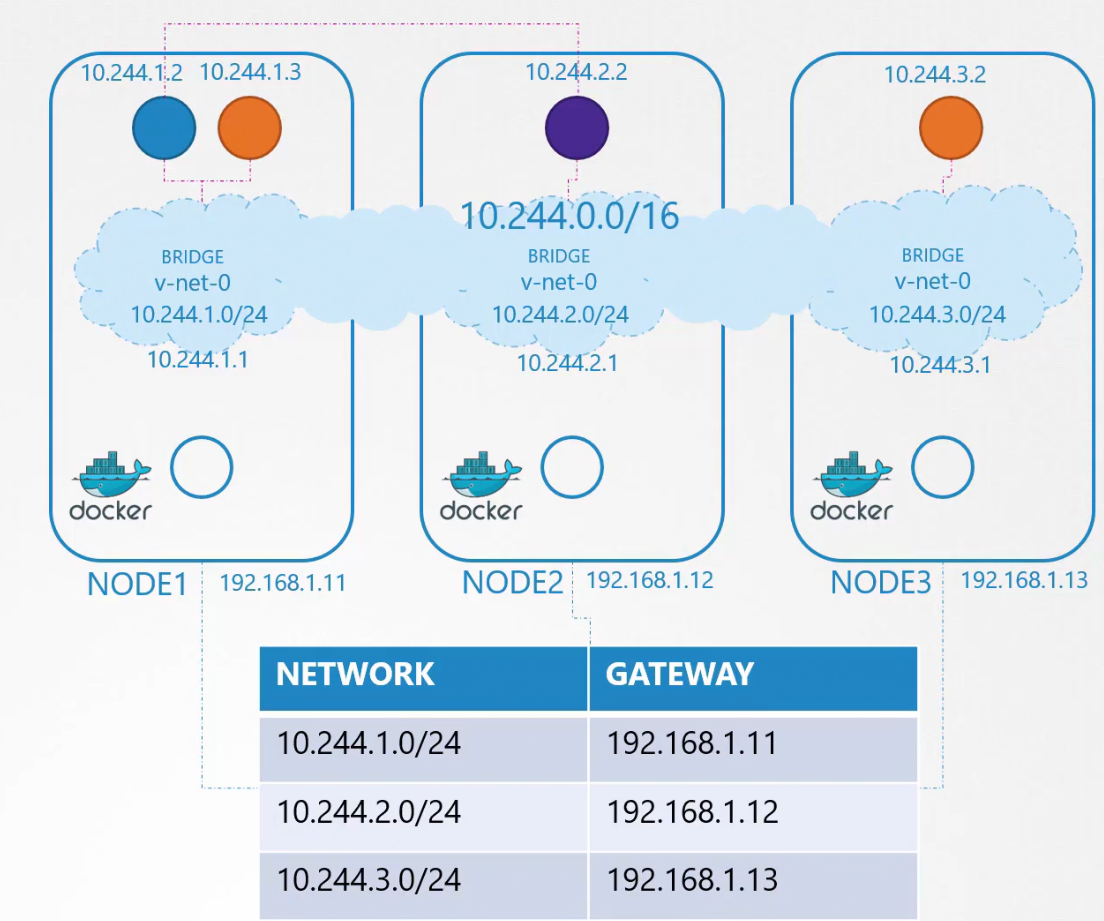

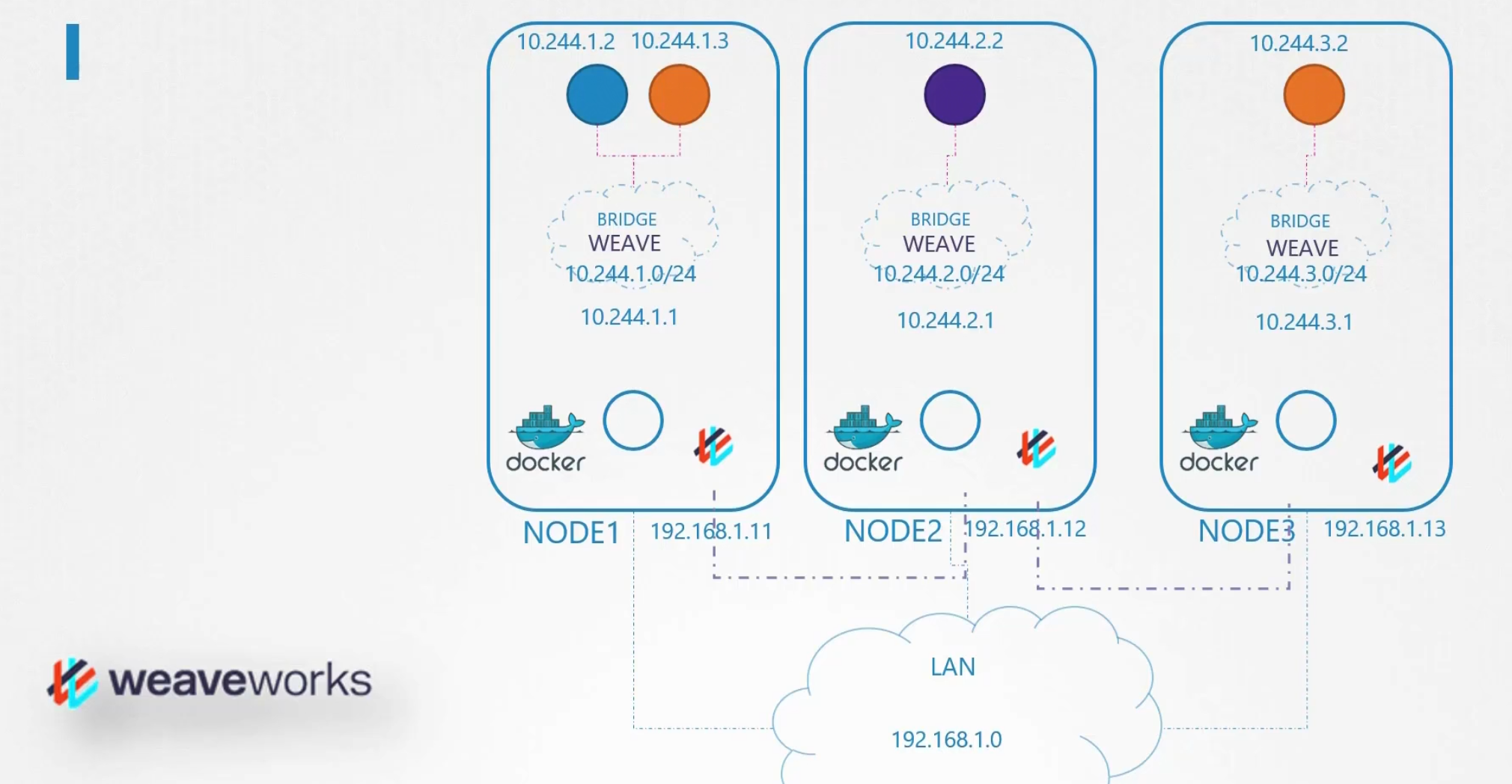

First, each node is assigned an IP address.

Then, when the pods are created, Kubernetes creates a network namespace for each of them.

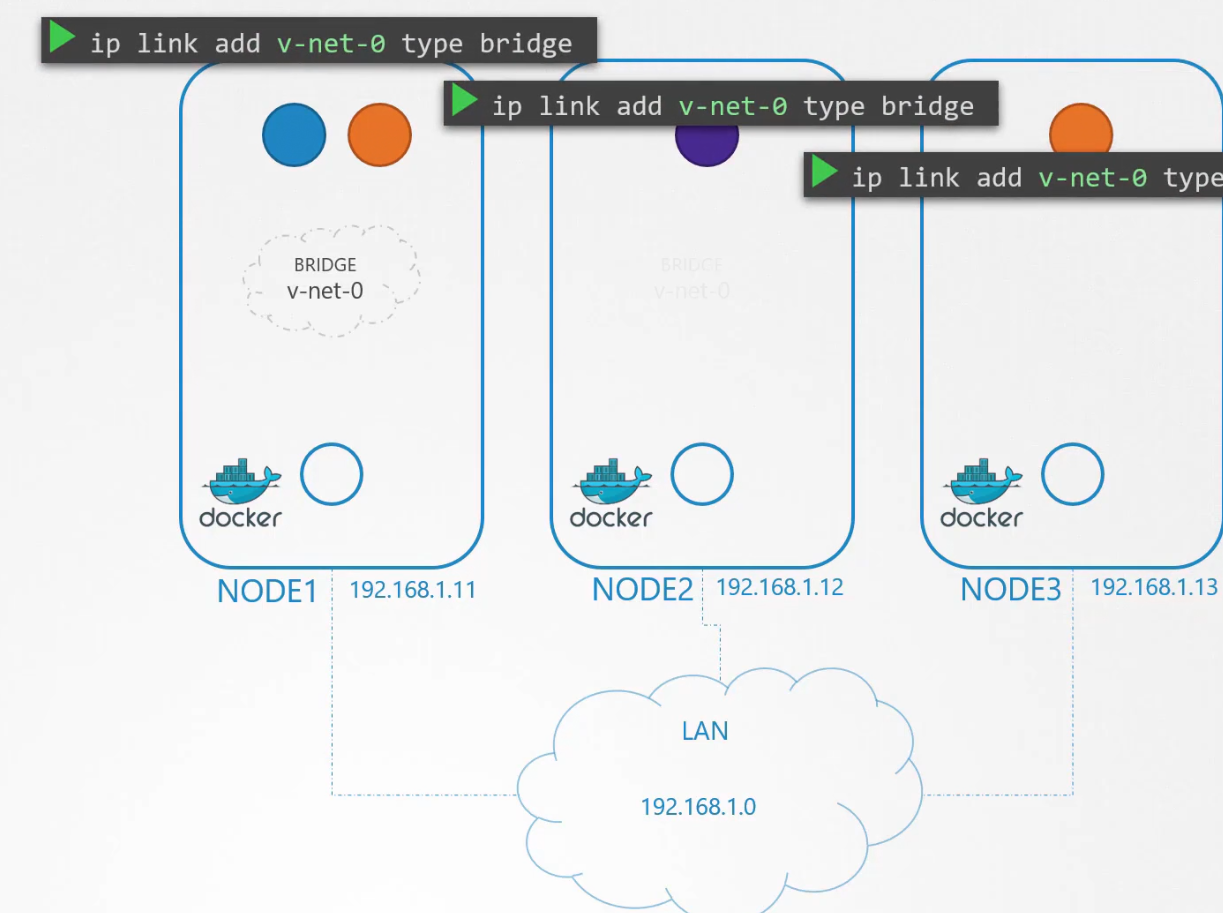

To enable communication between them, we attach these namespaces to a network through a bridge.

We create a bridge network on each node.

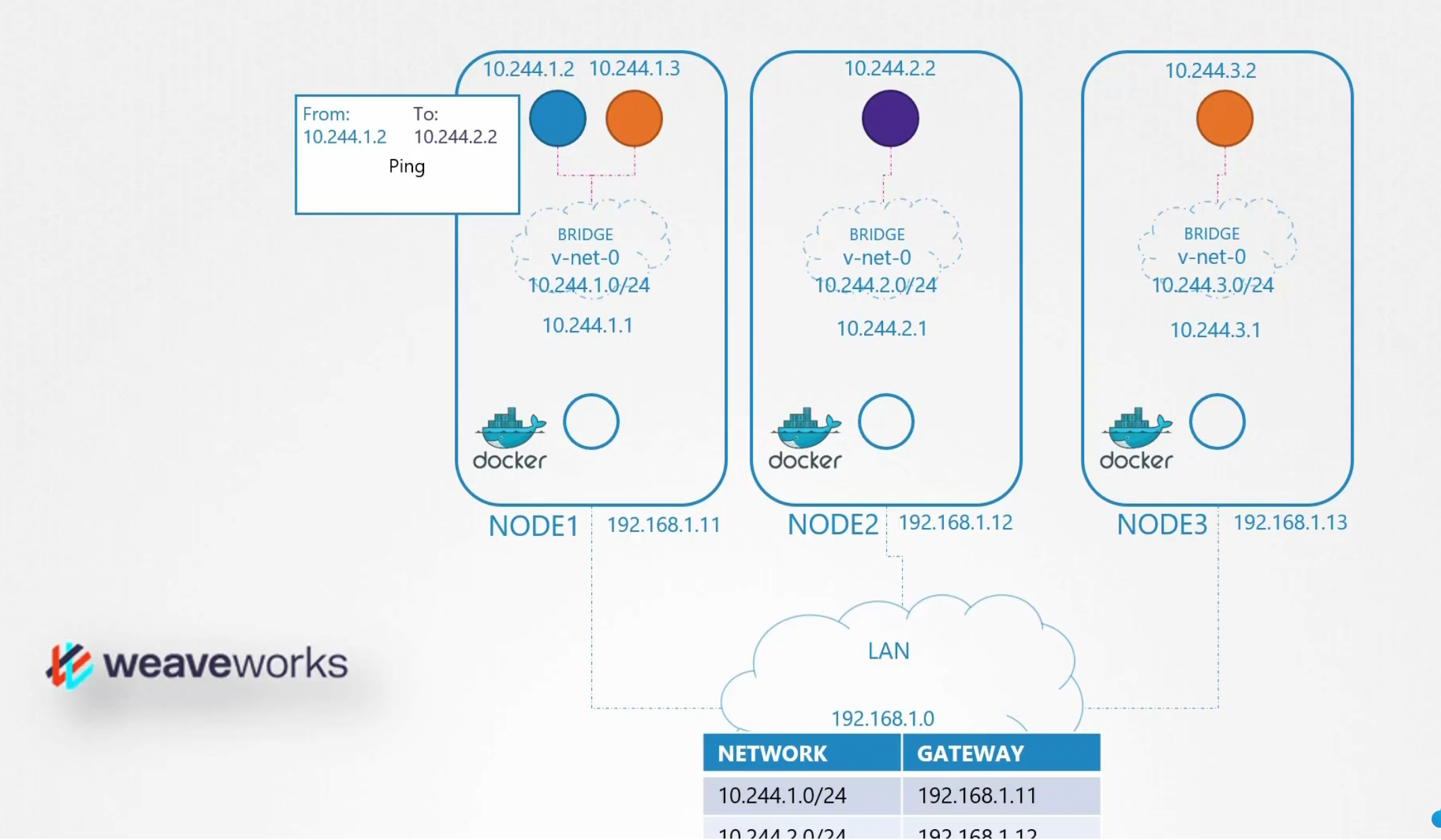

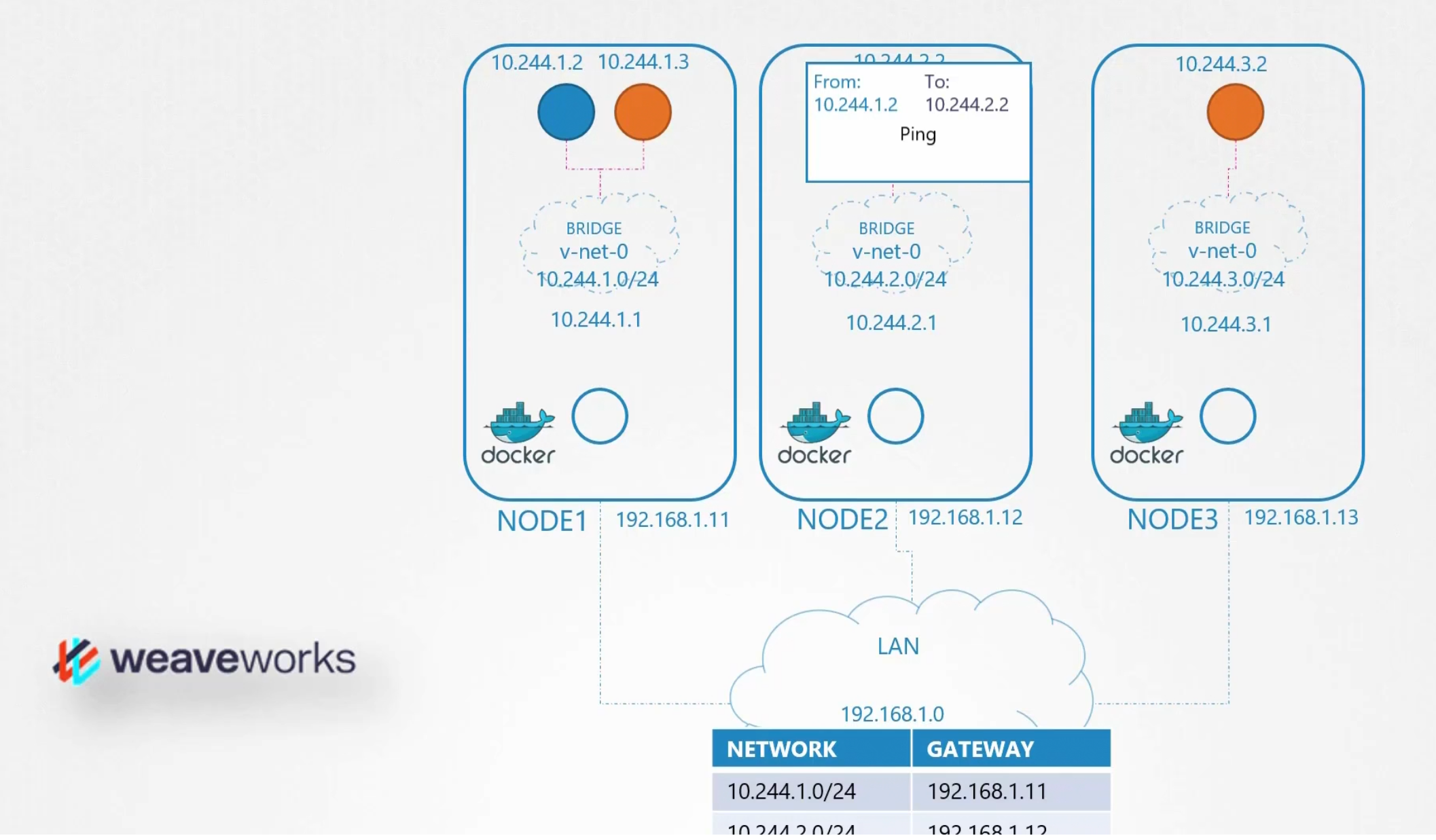

Let’s put each bridge network on its own subnet. Assume they are 10.244.1.0/24, 10.244.2.0/24, and 10.244.3.0/24.

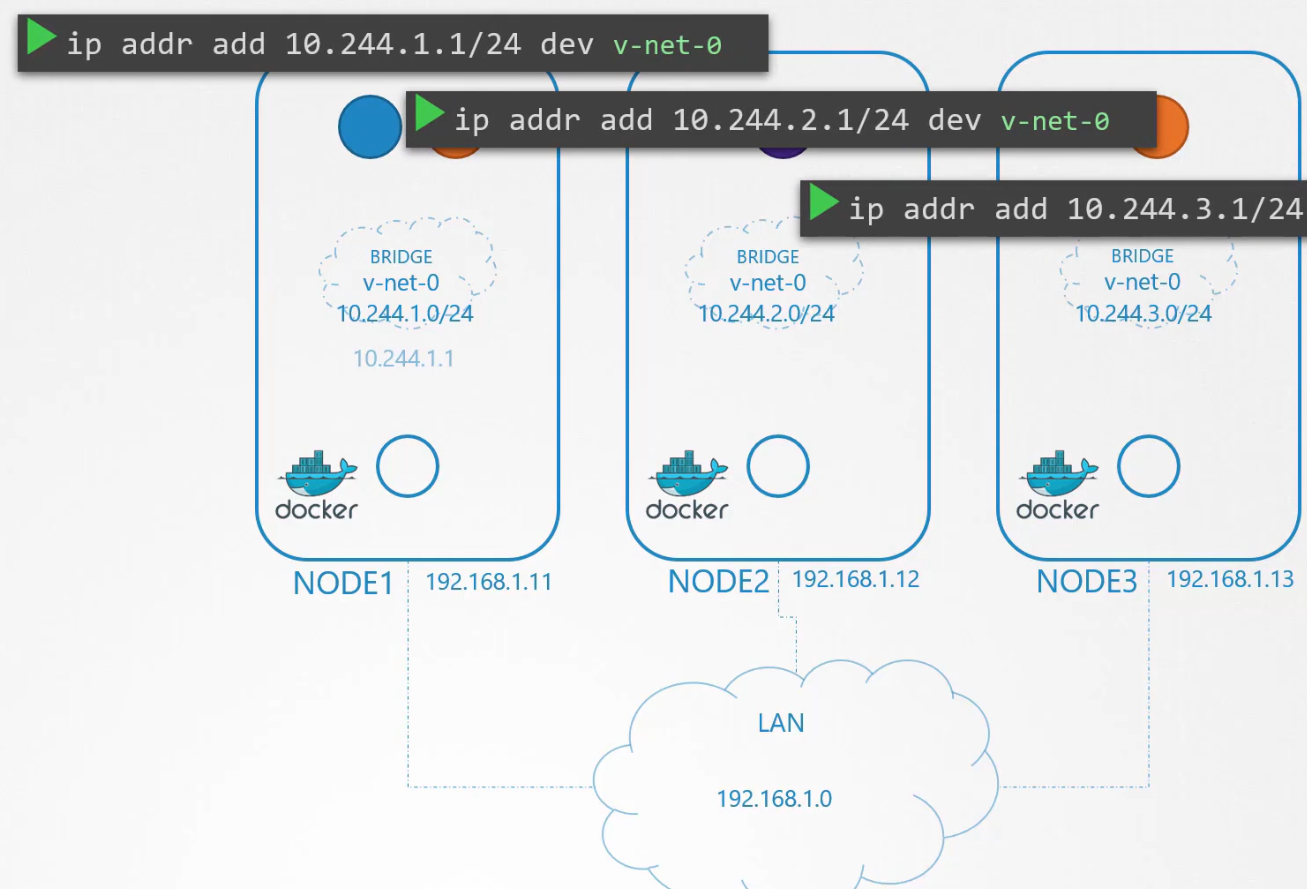

Next, we set the IP address of each bridge interface.

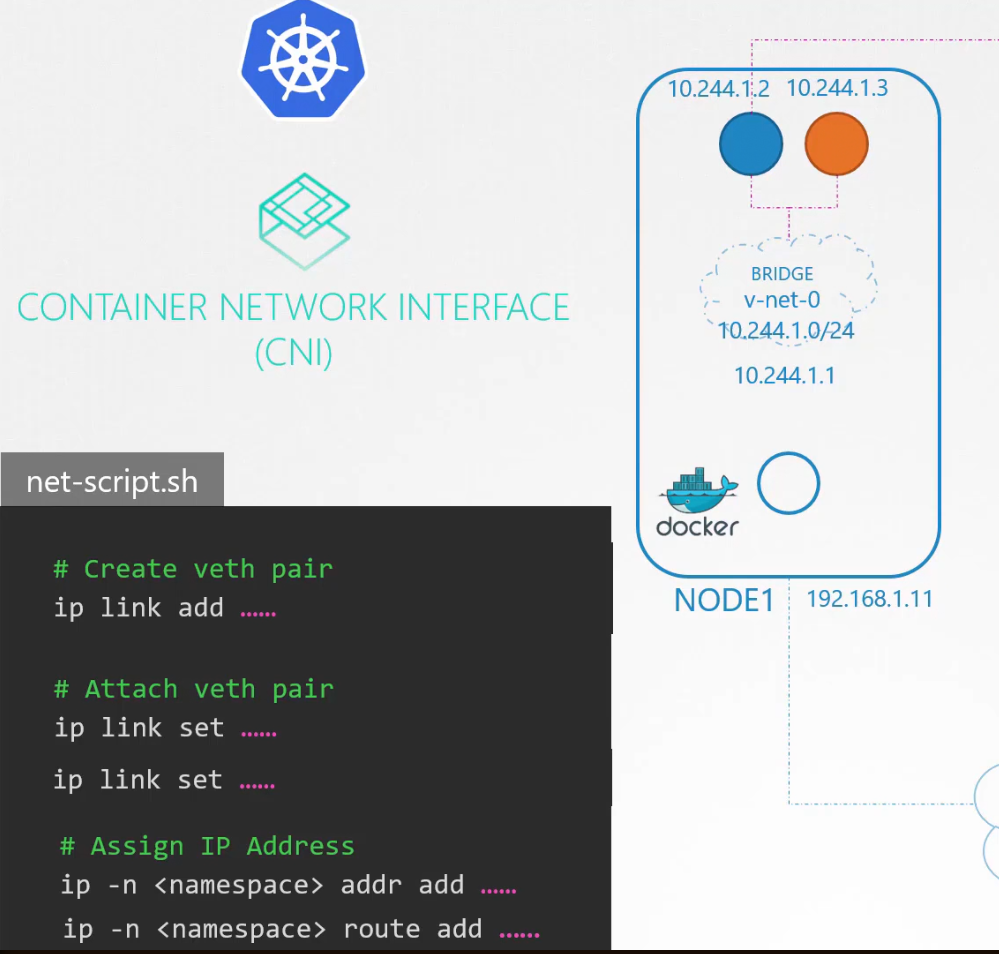

Now the basic tasks are done. The remaining steps have to be performed for each container, every time a container is created. A script will do this for us.

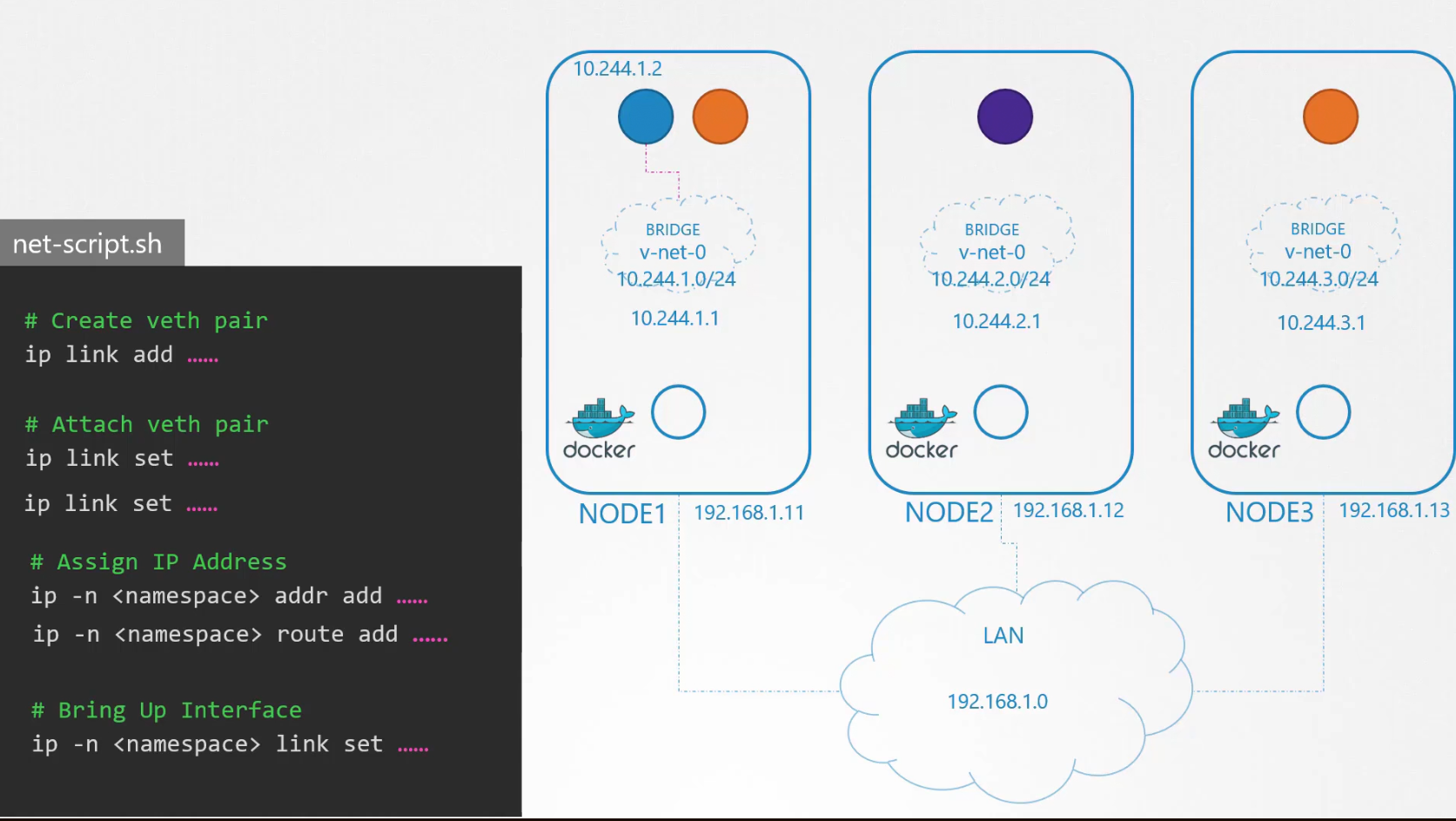

To attach a container to the network, first create a virtual Ethernet pair:

ip link add …

Then attach one end to the container and the other end to the bridge:

ip link set ….

Then we assign an IP address

ip -n <namespace> addr add….

and add a route to the default gateway:

ip -n <namespace> route add ….

But which IP address should we assign? For now, let’s use 10.244.1.2

Then we bring the interface up:

ip -n <namespace> link set ….

So the blue container (leftmost) is now connected to the bridge.

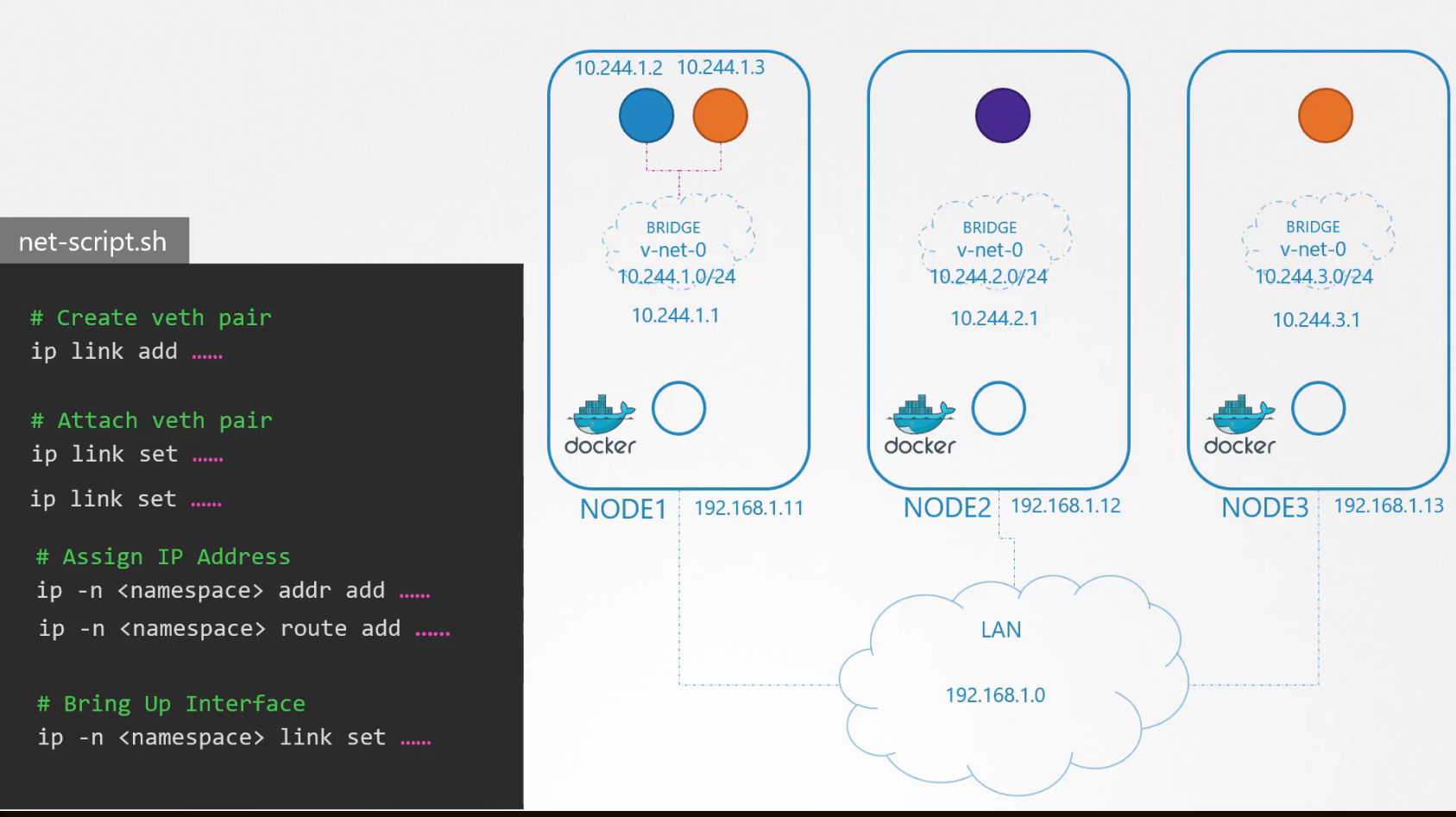

We repeat this script for the other container.

Now the blue and orange containers each have their own IP and can communicate with each other. We can run this script on the other nodes too, so that the containers on each node can talk among themselves.

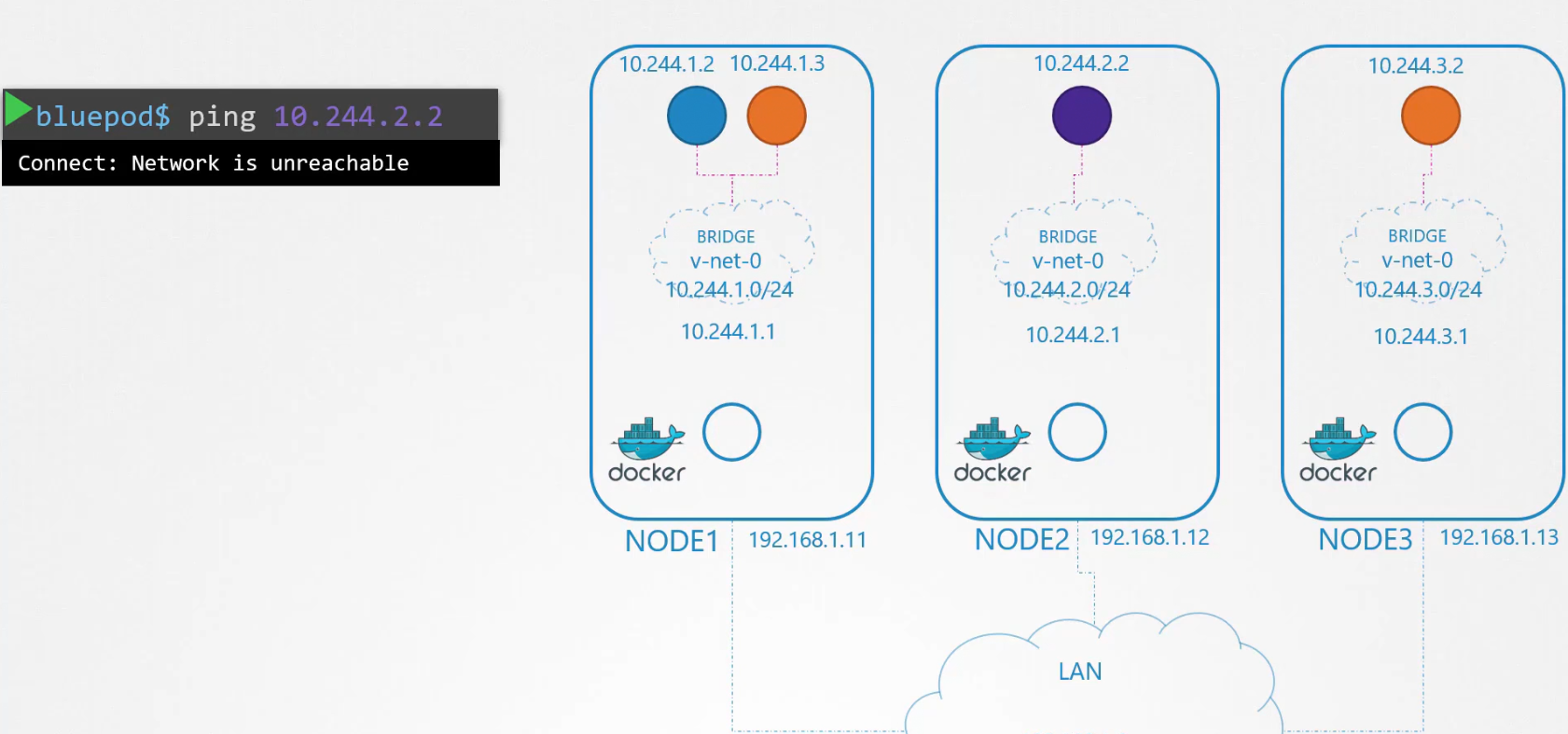

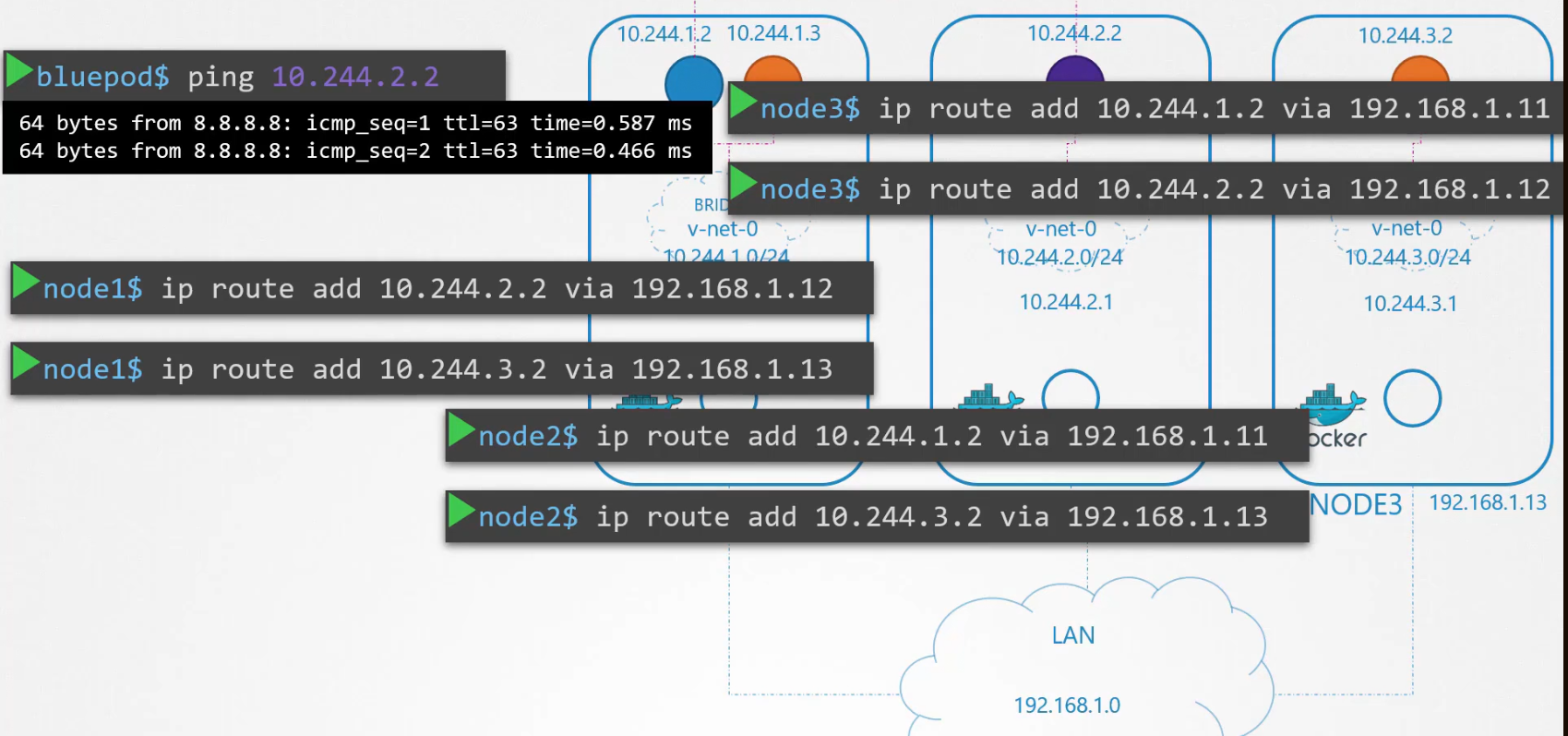

Containers on the same node can communicate, but can they reach containers on a different node? For example, here the blue pod wants to connect to the purple pod.

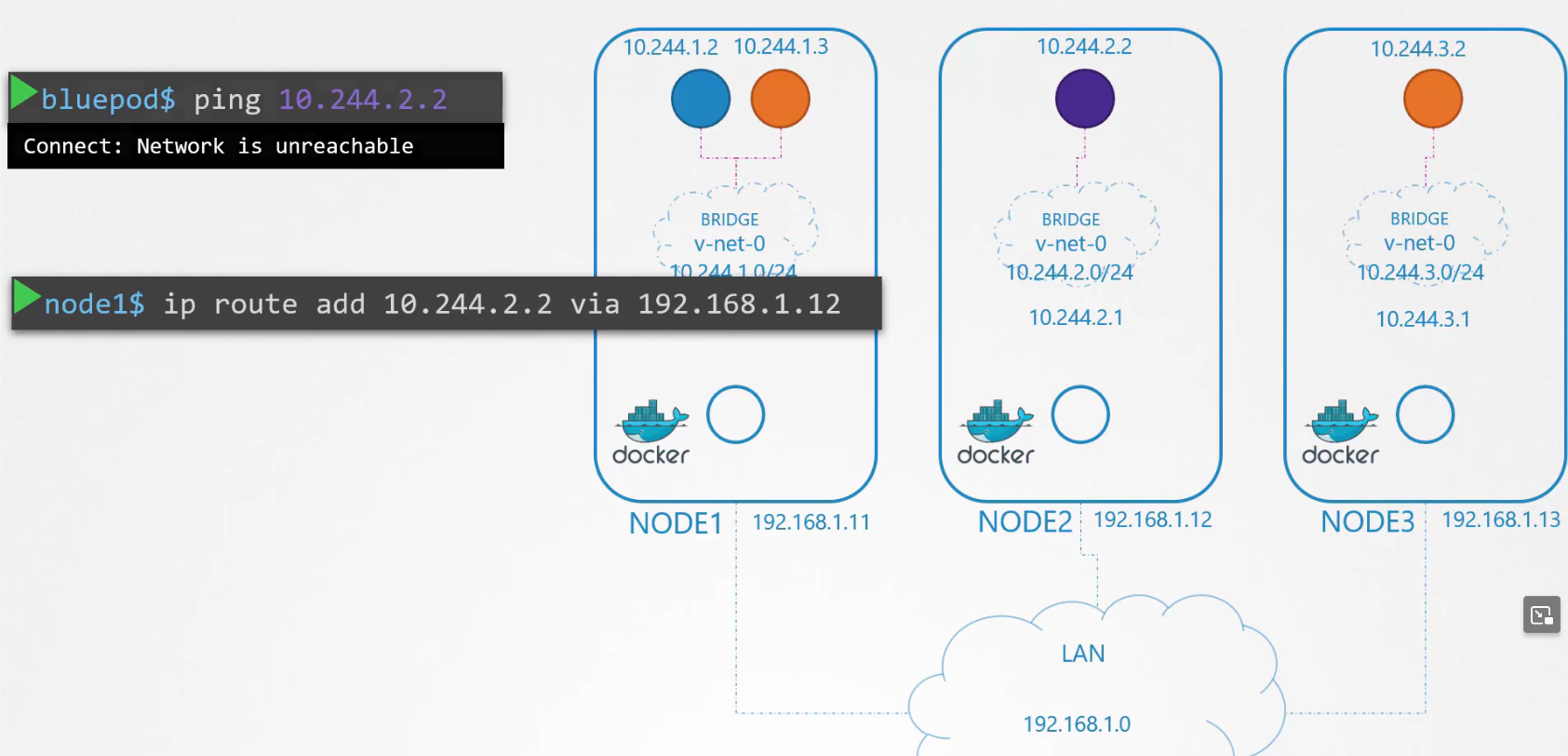

It does not work! To solve this, we add a route on Node 1 that reaches our desired pod (purple; 10.244.2.2) through the IP of Node 2.

The blue pod lives on Node 1, and the nodes can already communicate over the LAN, so now the pods can too.

Now you can see them communicate.

We can then add such a route on every host for every container, pointing at the IP of the container’s node.

Or we could manage all of these routes in a single routing table.

That solves the problem as well.
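A routing table of this kind is just a mapping from pod subnets to node IPs. The sketch below looks up which node a pod IP should be forwarded to, choosing the most specific matching subnet. The three pod subnets and the pod IP 10.244.2.2 follow the article; the node IPs 192.168.1.11 to 192.168.1.13 are assumptions.

```python
import ipaddress

# Pod subnet -> IP of the node that hosts it (node IPs are illustrative).
ROUTES = {
    "10.244.1.0/24": "192.168.1.11",
    "10.244.2.0/24": "192.168.1.12",
    "10.244.3.0/24": "192.168.1.13",
}


def next_hop(pod_ip, routes=ROUTES):
    """Return the node IP for `pod_ip`, preferring the most specific route."""
    addr = ipaddress.ip_address(pod_ip)
    matches = [
        (ipaddress.ip_network(net), gateway)
        for net, gateway in routes.items()
        if addr in ipaddress.ip_network(net)
    ]
    if not matches:
        return None  # no route: the packet cannot be delivered
    return max(matches, key=lambda match: match[0].prefixlen)[1]


print(next_hop("10.244.2.2"))
```

With one entry per node subnet instead of one per pod, the table stays small no matter how many pods each node runs.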

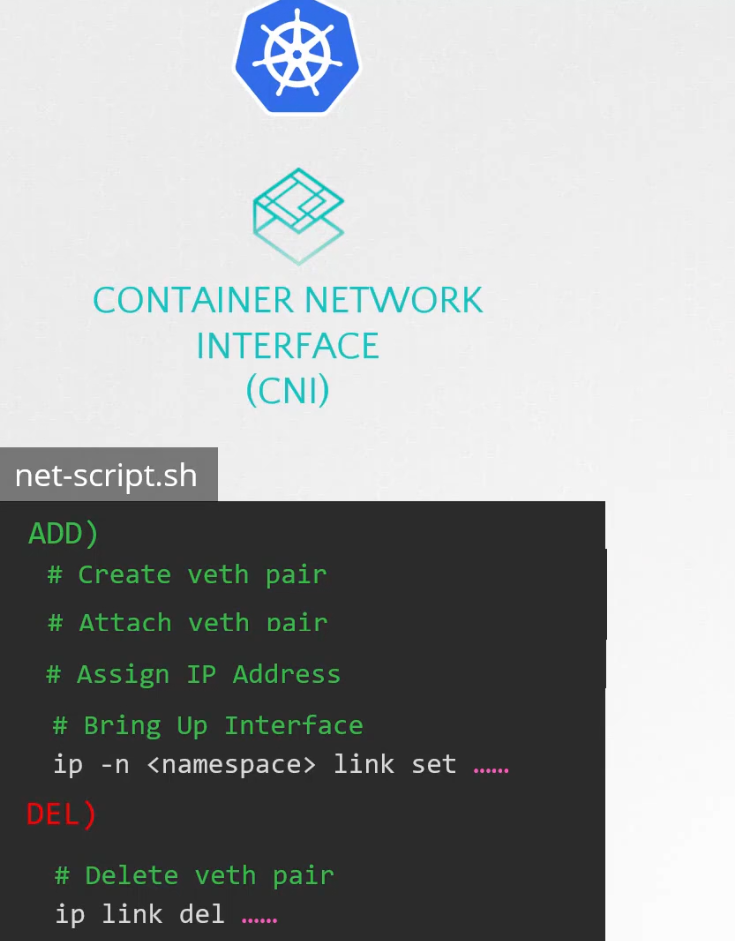

Now, remember the script we used to connect containers to the network?

For Kubernetes to run it, the script has to follow the CNI format.

It needs an ADD section that adds a container to the network and a DEL section that deletes the container’s interface from the network.

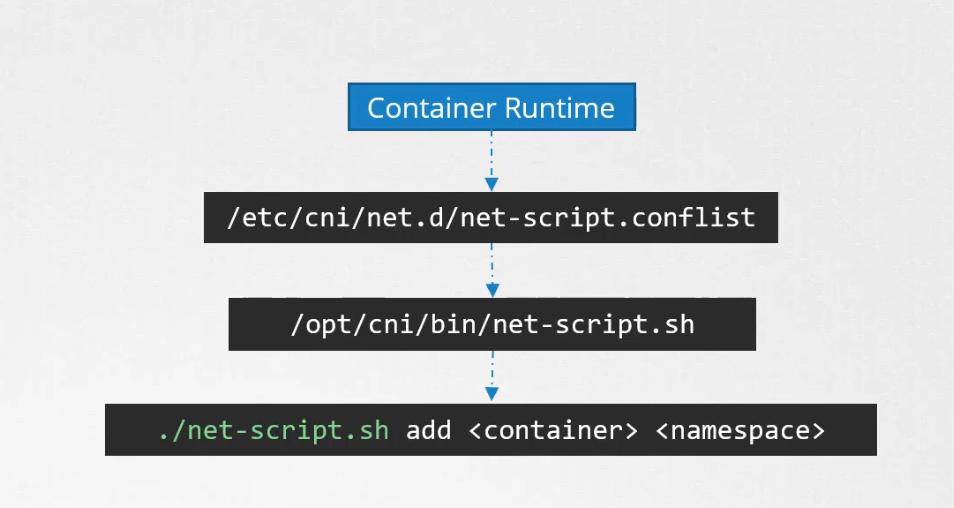

So when a new container is created, the kubelet looks at the CNI configuration location passed to it as a command-line argument when it was started (/etc/cni/net.d/………) and identifies our script’s name. It then finds the script in CNI’s bin folder (/opt/cni/bin/n…)

Then it executes the script with the add command, passing the name and namespace ID of the container.
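The ADD/DEL structure can be sketched as a small dispatcher. According to the CNI specification, a plugin receives its instructions through environment variables such as CNI_COMMAND, CNI_CONTAINERID, and CNI_NETNS. Everything else here is a placeholder: the container ID abc123, the namespace path, and the step descriptions are hypothetical, and a real plugin would run the networking commands instead of returning text.

```python
def handle(env):
    """Dispatch on CNI_COMMAND and describe what the plugin would do."""
    command = env.get("CNI_COMMAND")
    container = env.get("CNI_CONTAINERID", "")
    netns = env.get("CNI_NETNS", "")
    if command == "ADD":
        return [
            "create veth pair",
            f"attach one end to {netns} and the other end to the bridge",
            "assign IP address and default route",
            "bring the interface up",
        ]
    if command == "DEL":
        return [f"delete the veth pair of container {container}"]
    raise ValueError(f"unsupported CNI_COMMAND: {command!r}")


# Hypothetical invocation; a real plugin would read os.environ instead.
example_env = {
    "CNI_COMMAND": "ADD",
    "CNI_CONTAINERID": "abc123",
    "CNI_NETNS": "/var/run/netns/abc123",
}
for step in handle(example_env):
    print(step)
```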

CNI in kubernetes

CNI defines the responsibilities of the container runtime. In our case that side is Kubernetes, which is responsible for creating the container network namespaces, and for identifying and attaching those namespaces to the right network by calling the right network plugin.

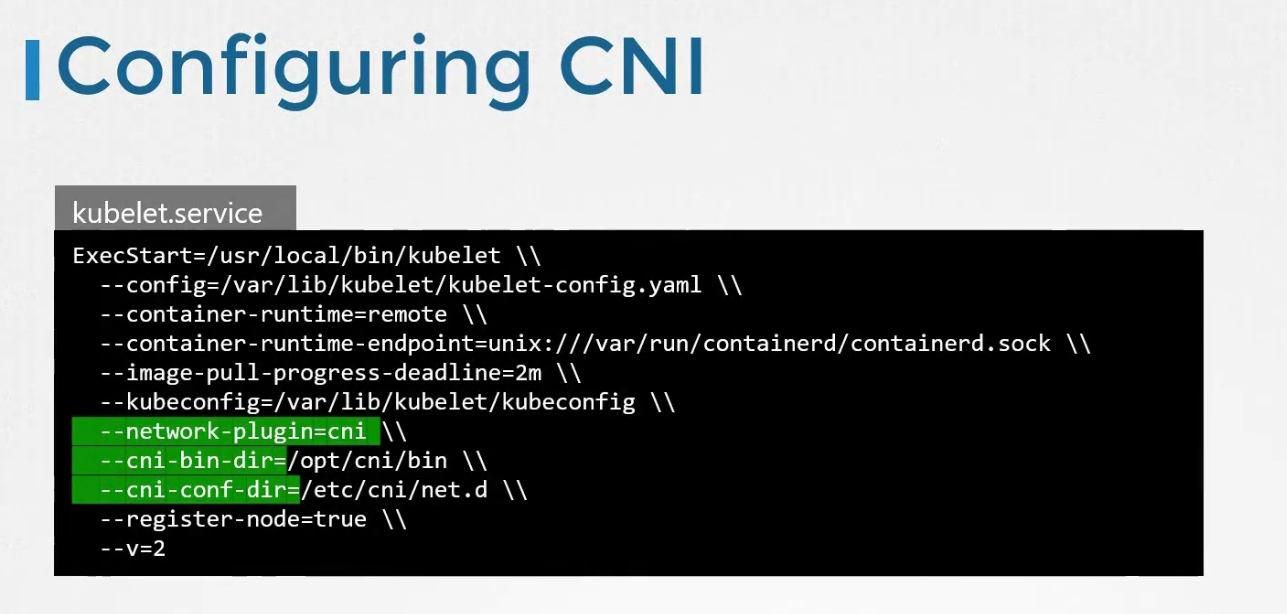

So where do we specify which CNI plugin to use?

It is configured in the kubelet on each node in the cluster.

One of the tools that works as a CNI solution is Weaveworks.

Let’s see how this works in our system. Our blue pod on Node 1 wants to ping the purple pod. The path is defined in the routing table, so the ping is delivered.

That was fine because we were dealing with just 2 containers/pods.

But this is not feasible for big clusters. With many containers on each node and many nodes in the cluster, we can’t keep an entry for every container in a routing table. It would be a mess!

Let’s use another technique.

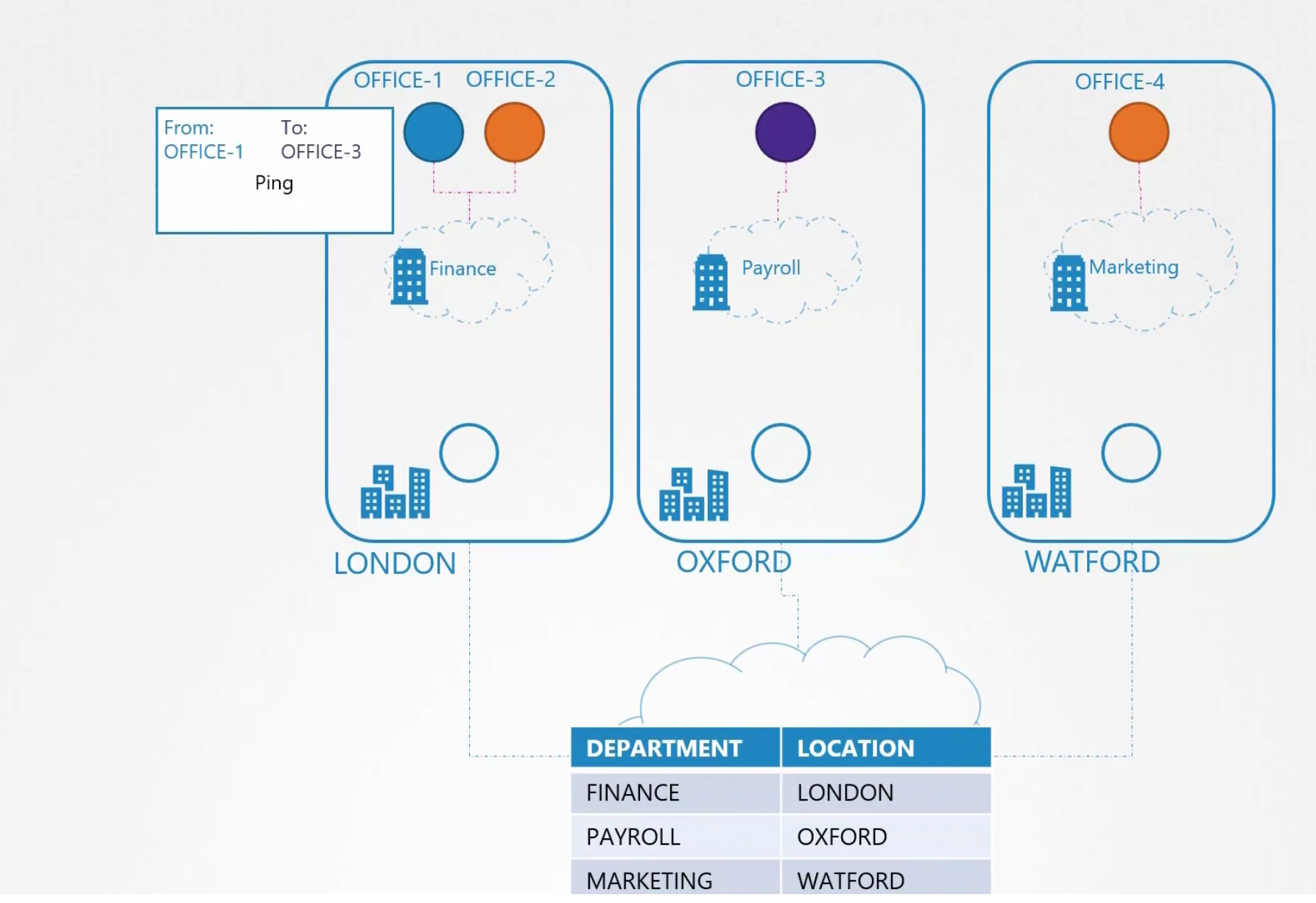

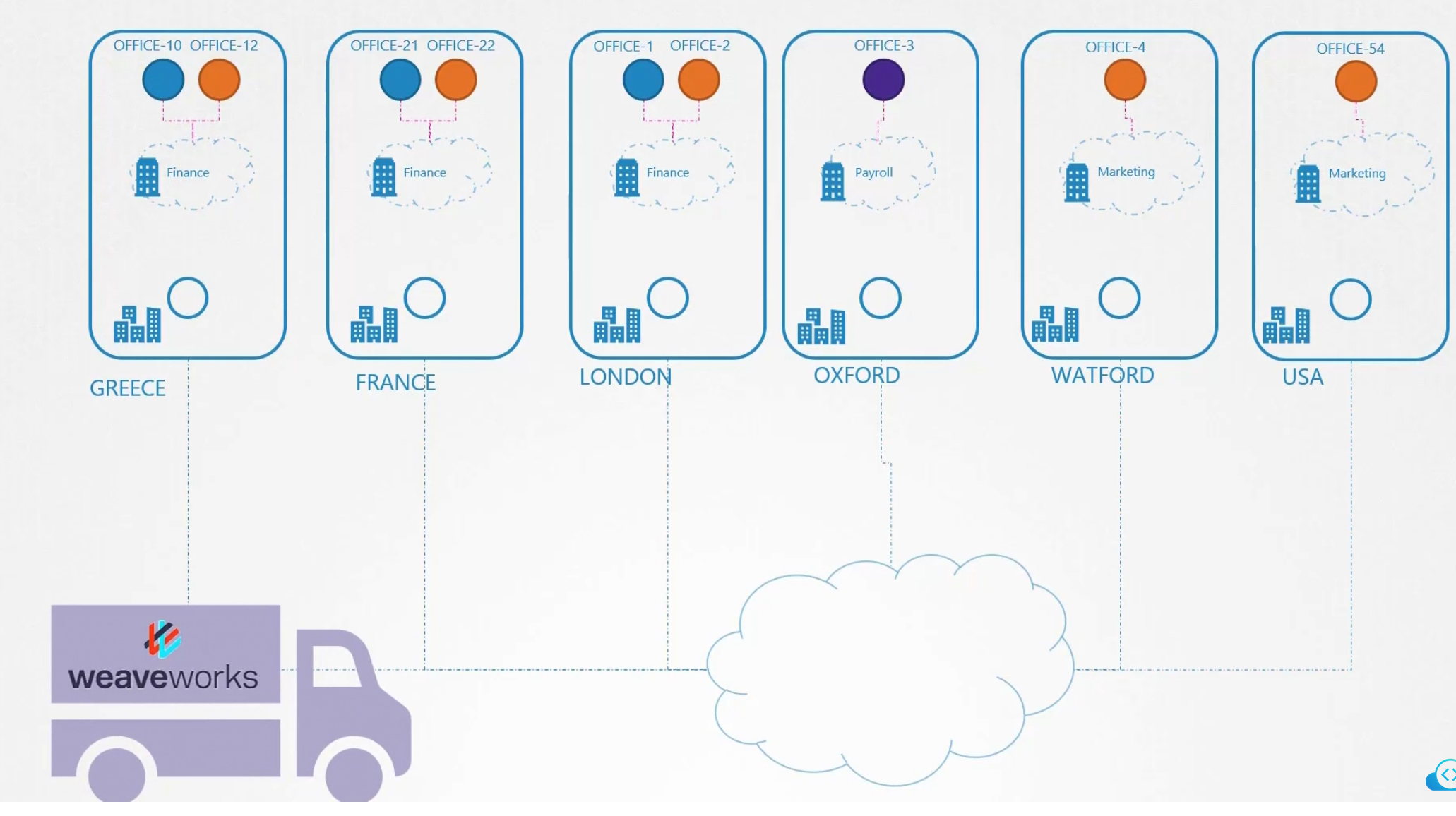

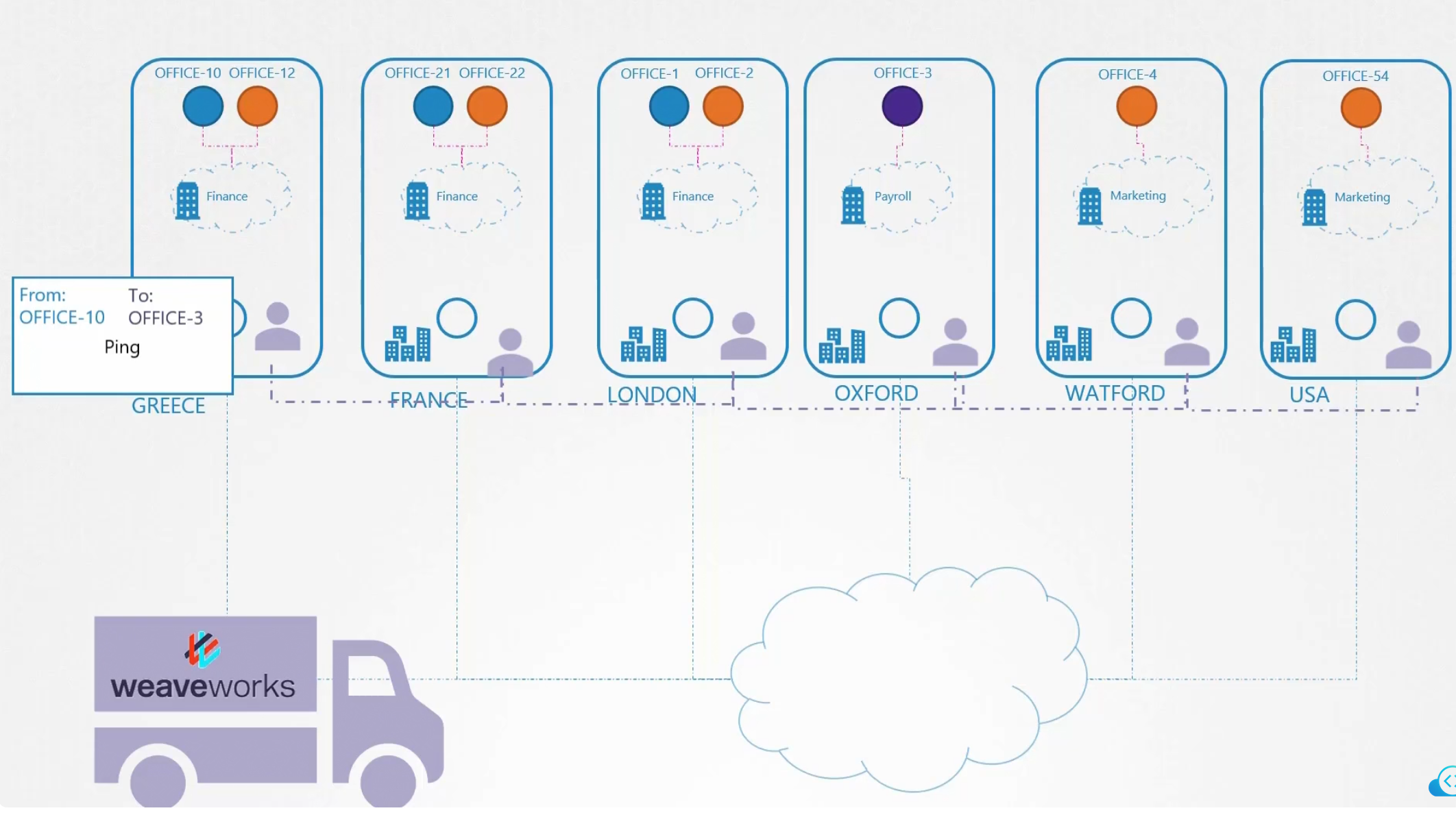

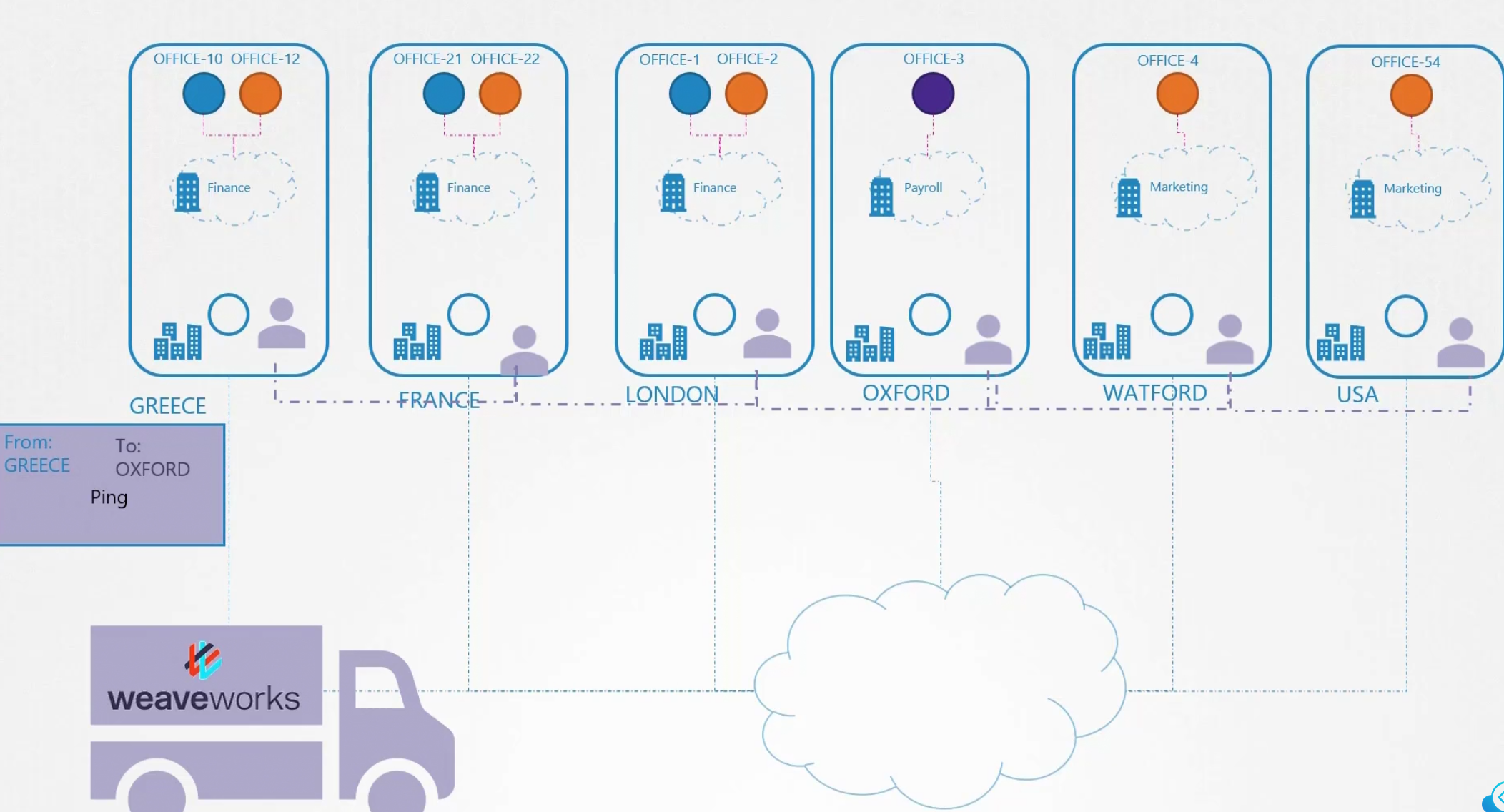

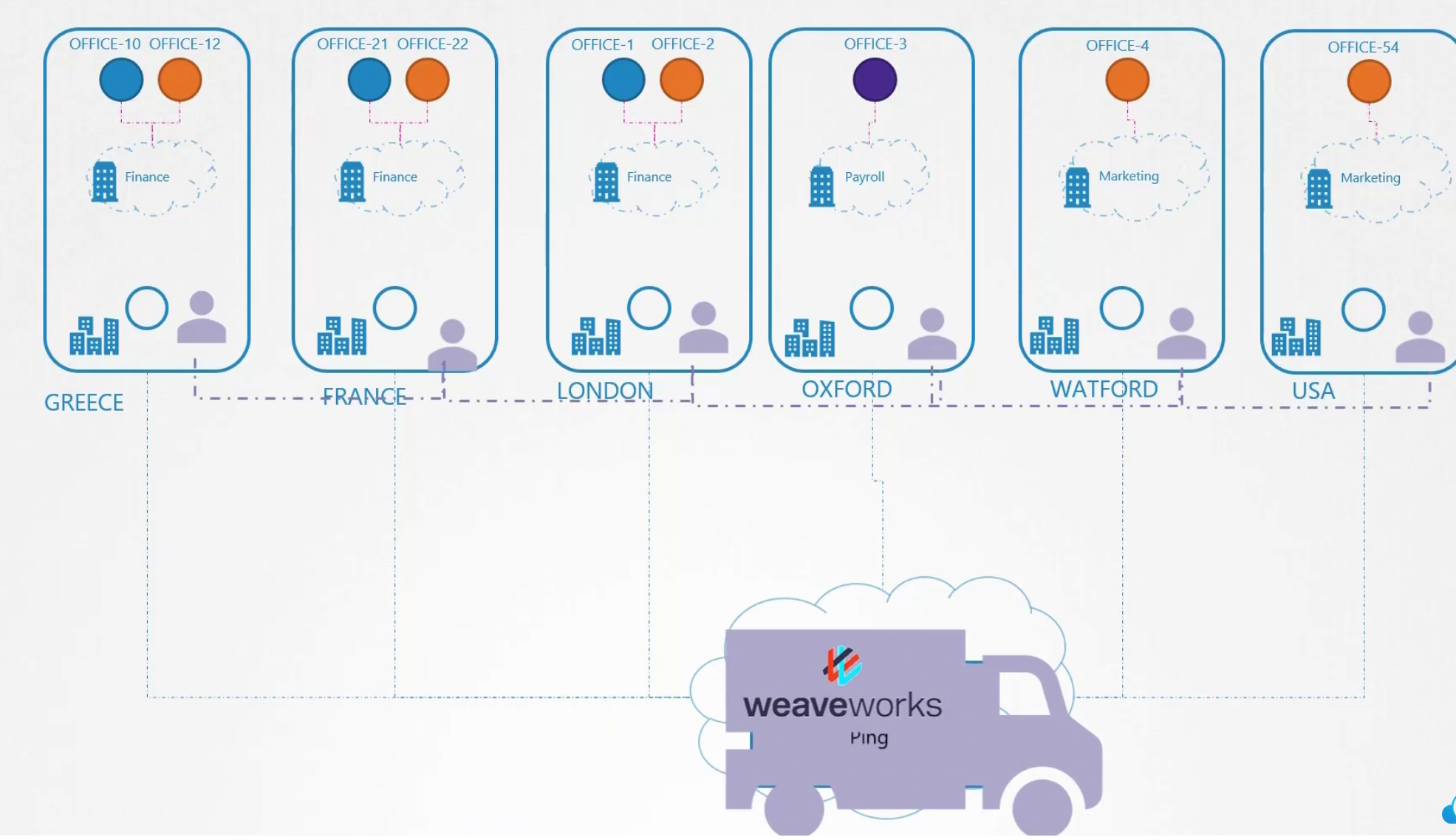

Assume that we have offices in different places, and each office has several departments. Now assume these departments want to send packets to each other.

Someone in office-1 wants to send a packet to office-3.

The packet then travels over the connection to its destination.

We had just 3 nodes, so this was easy to manage. But what if we have far more nodes? Then we should use a third-party solution that manages these tasks for us.

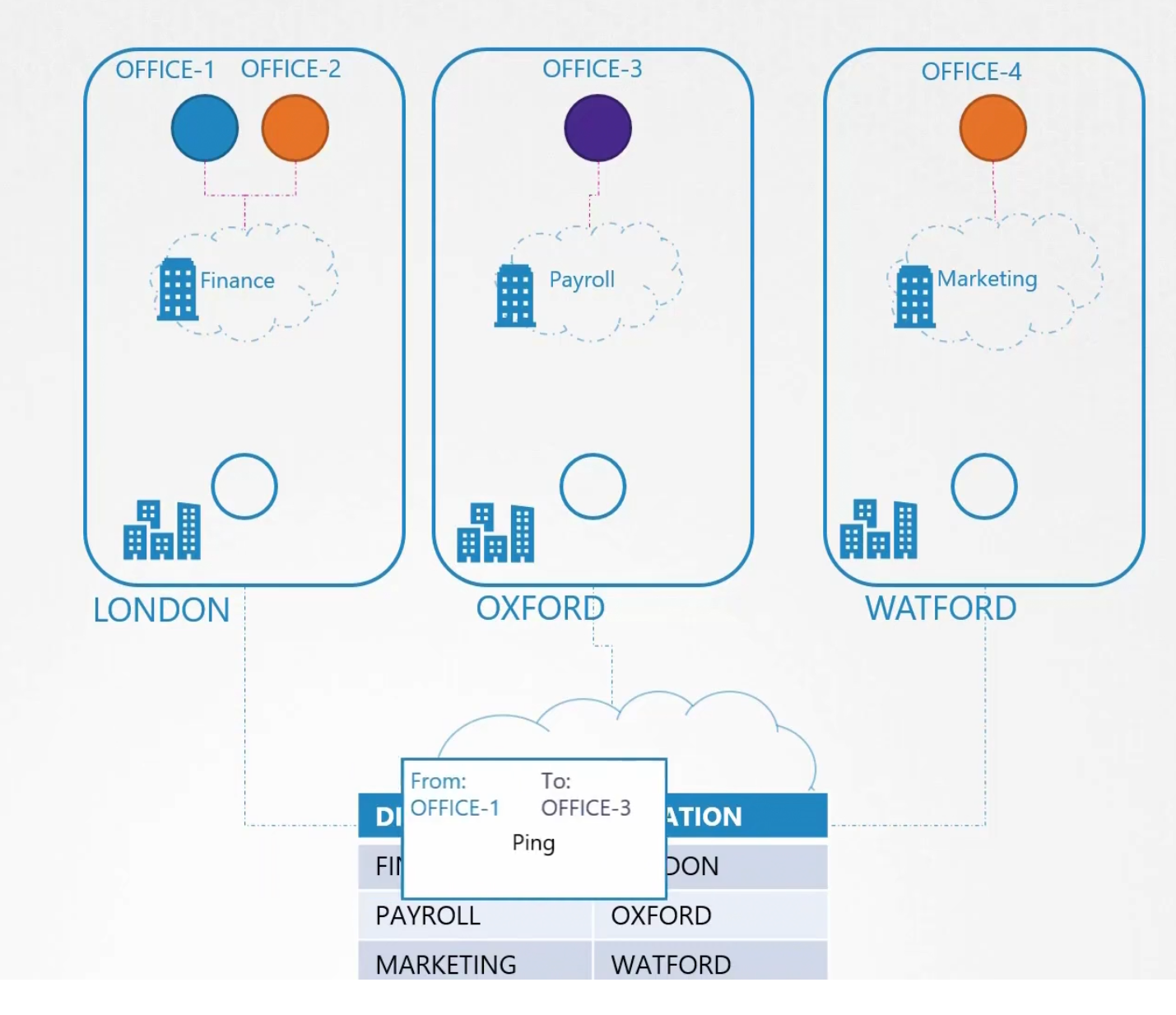

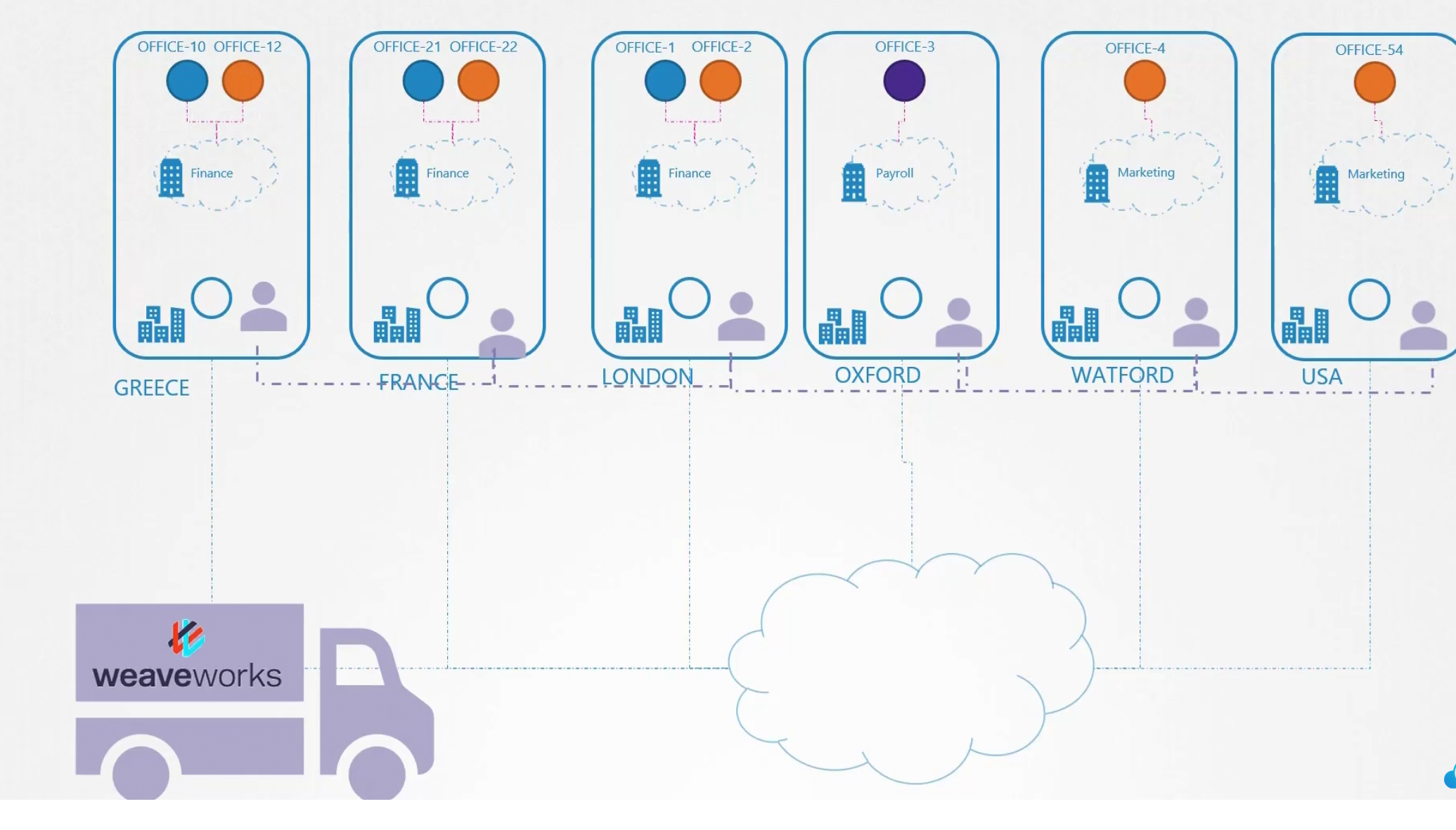

For example, a tool like Weaveworks places an agent on every node.

The agents are connected to each other through their own internal network. So when any office/pod/container wants to send a packet, the agents know where to send it and how to get it there.

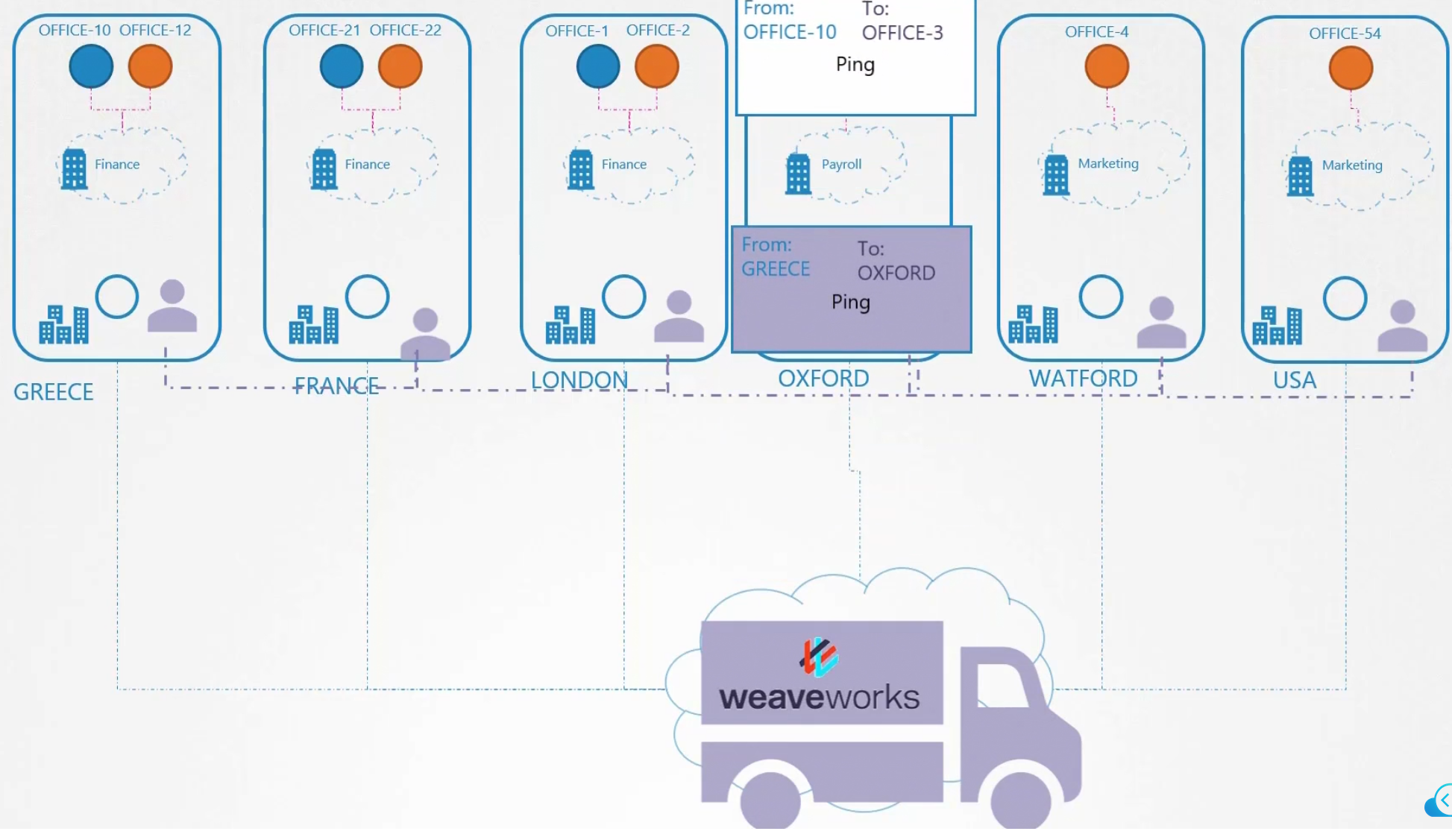

Here, office-10 wants to send a packet to office-3.

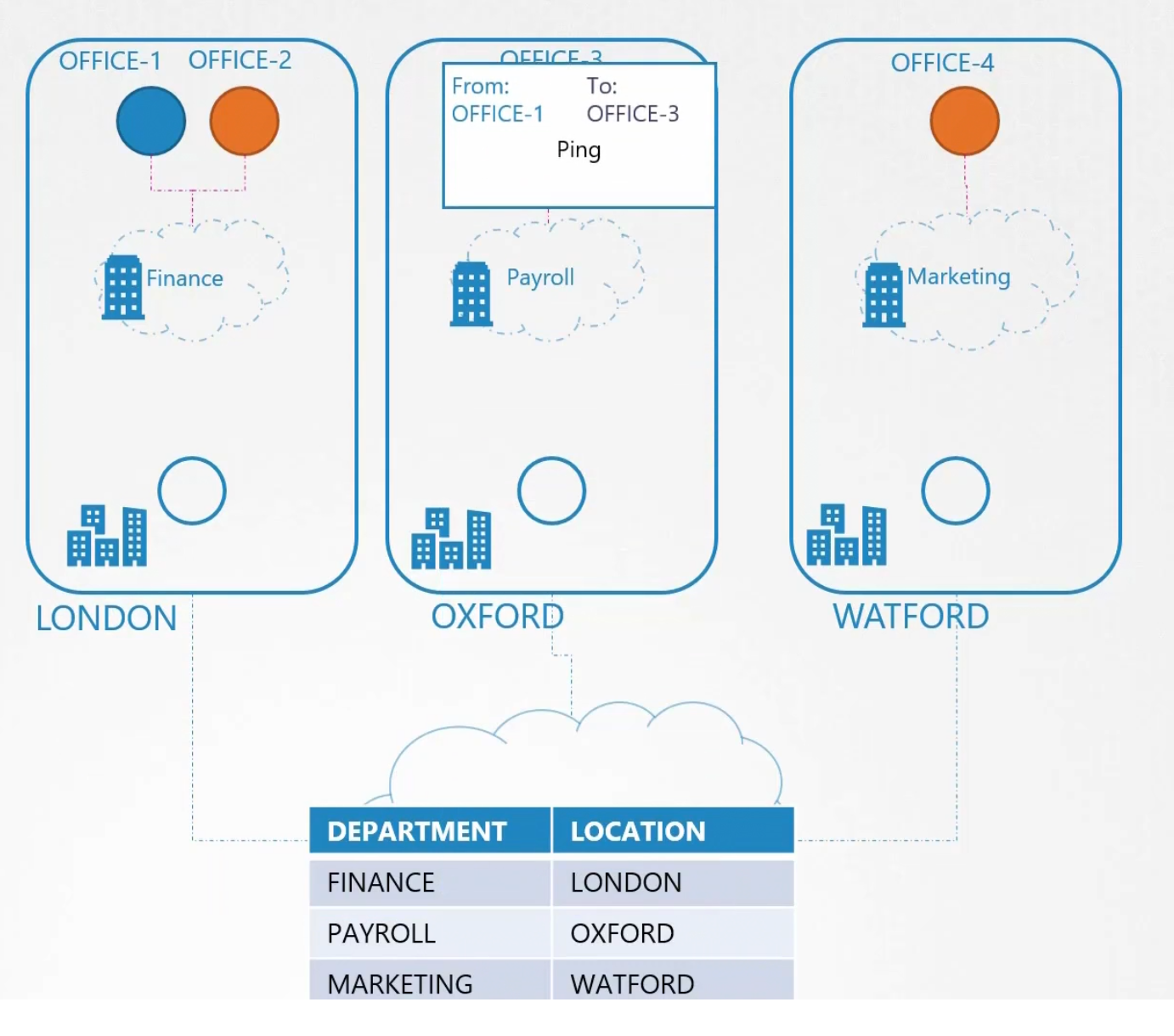

The agent creates a package and puts the packet inside it. The package has its own source and destination set.

Then Weaveworks carries the package across the network.

The package arrives at the agent on the destination node. The agent unpacks it and hands the packet to the office.

So that’s it. A third-party tool like Weaveworks installs its agents on the nodes once it is deployed.

You can also see how the Weaveworks agents are connected to one another over the internal network.

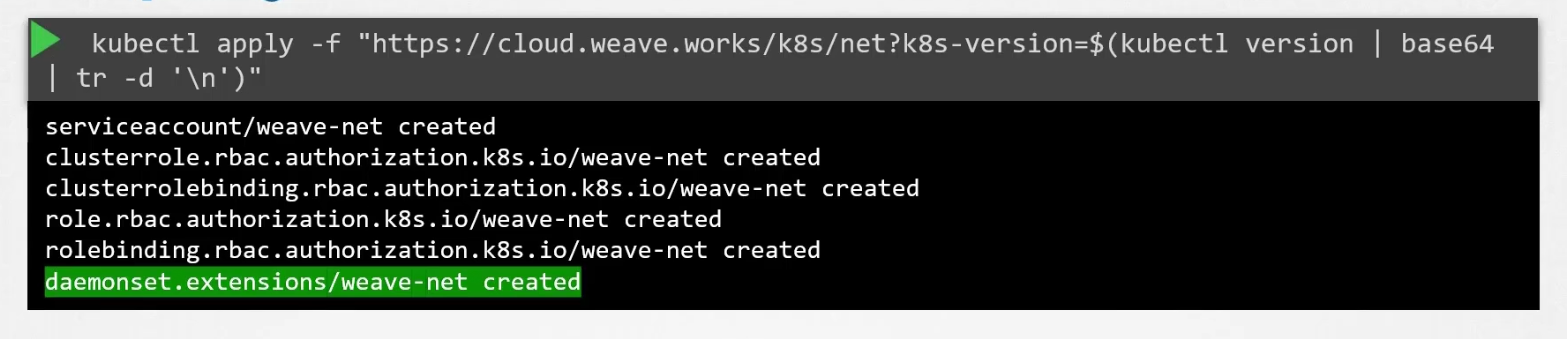

But how do we deploy it on a cluster?

We can use this command to deploy it as a daemon set.

Then one copy of the agent pod is created on every node.

IPAM (IP Address Management)

Who is responsible for assigning IPs? How do they ensure that IPs are not duplicated?

The CNI configuration has a section called IPAM, where we can specify the type of plugin, the subnet, and so on.

Our script can read these details and invoke the appropriate plugin instead of hard-coding it.

Weaveworks solves this by using the IP range 10.32.0.0/12 by default, which gives addresses from 10.32.0.1 to 10.47.255.254.

It then splits this range equally among the nodes, and the pods created on a node get their IPs from that node’s share.
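The idea of splitting one range among the nodes and never handing out the same address twice can be sketched with the `ipaddress` module. This is a simplified illustration, not Weave’s actual allocation algorithm: it assumes power-of-two blocks, and the node names are placeholders.

```python
import ipaddress


def split_range(cidr, nodes):
    """Give each node an equal, non-overlapping block of `cidr`."""
    # Number of extra prefix bits needed for at least len(nodes) blocks.
    bits = max(len(nodes) - 1, 0).bit_length()
    blocks = ipaddress.ip_network(cidr).subnets(prefixlen_diff=bits)
    return dict(zip(nodes, blocks))


class NodeAllocator:
    """Hands out each address of a node's block at most once."""

    def __init__(self, block):
        self._free = block.hosts()  # iterator over the usable addresses

    def allocate(self):
        return str(next(self._free))


blocks = split_range("10.32.0.0/12", ["node1", "node2", "node3"])
for node, block in blocks.items():
    print(node, block)

allocator = NodeAllocator(blocks["node1"])
print(allocator.allocate(), allocator.allocate())
```

Because the blocks do not overlap, each node can assign pod IPs on its own without asking the other nodes.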

Service Networking

Assume that we have 4 pods and 1 service here.

Each pod can reach the service irrespective of the node it runs on.

Learn more from here

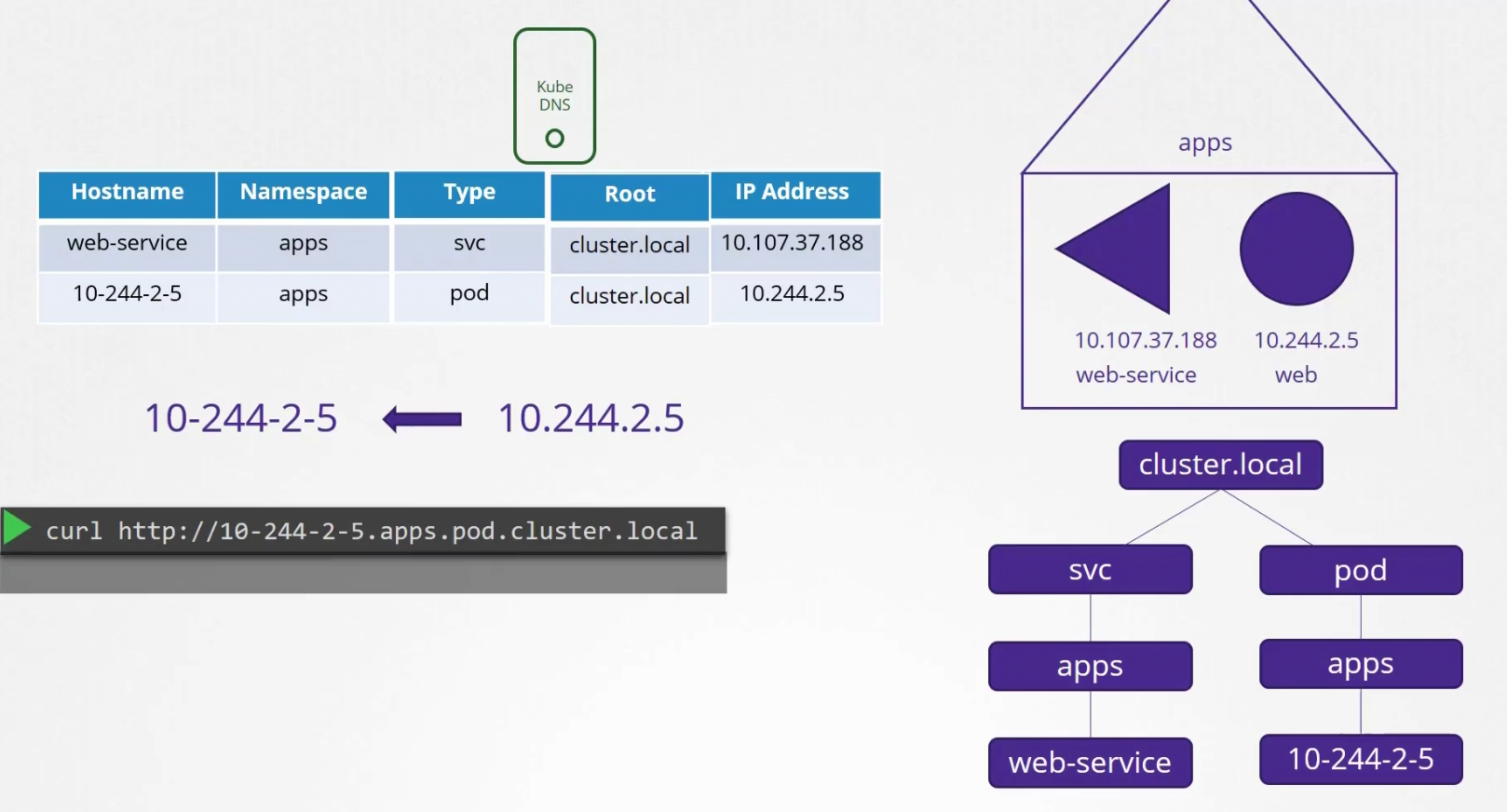

DNS

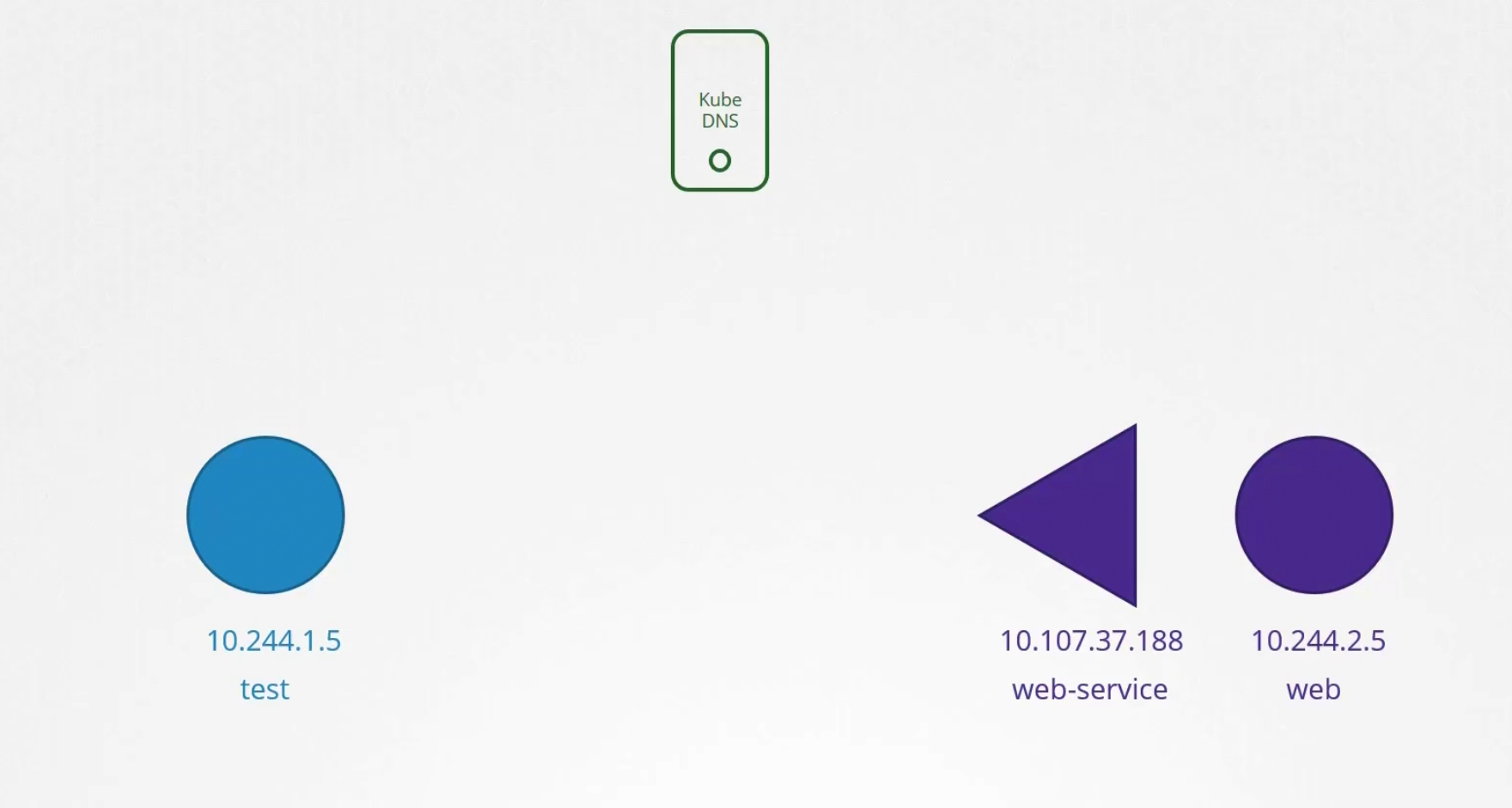

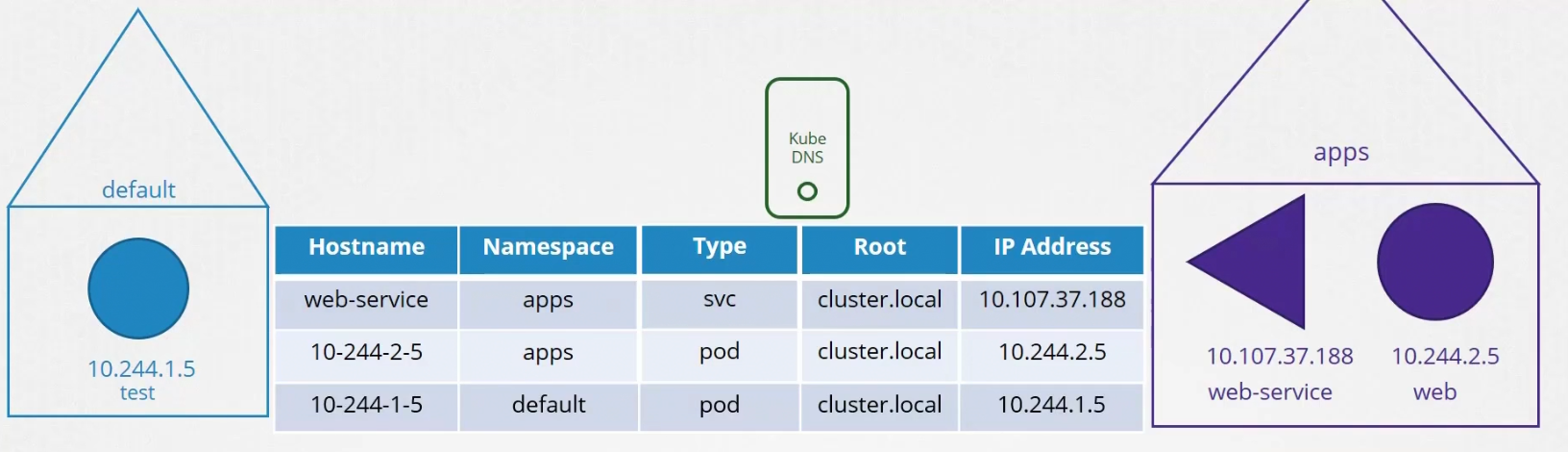

Assume that we have 2 pods and 1 service, each with its own IP (note: they might be on different nodes).

To let the test pod reach the web server, we use web-service. Whenever a service is created, the Kubernetes DNS service creates a record for it that maps the service name to its IP address.

So from any pod/container, we can reach the service by its name (since the DNS server has its name and IP on record).

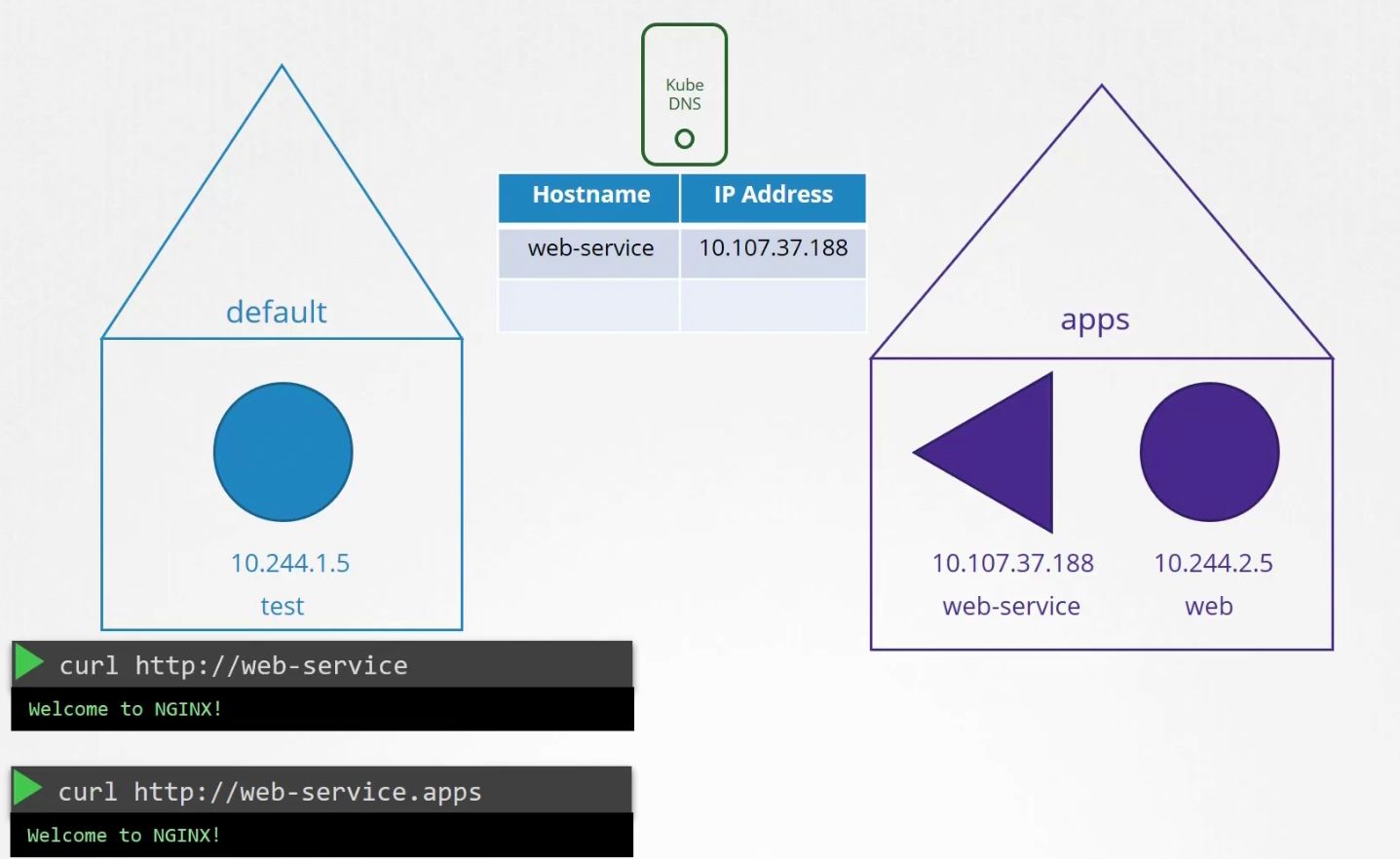

What if they are in two different namespaces? Then you need to append the namespace to the service name: service-name.namespace-name

Here, from the test pod, we have to use web-service.apps to reach web-service in the apps namespace.

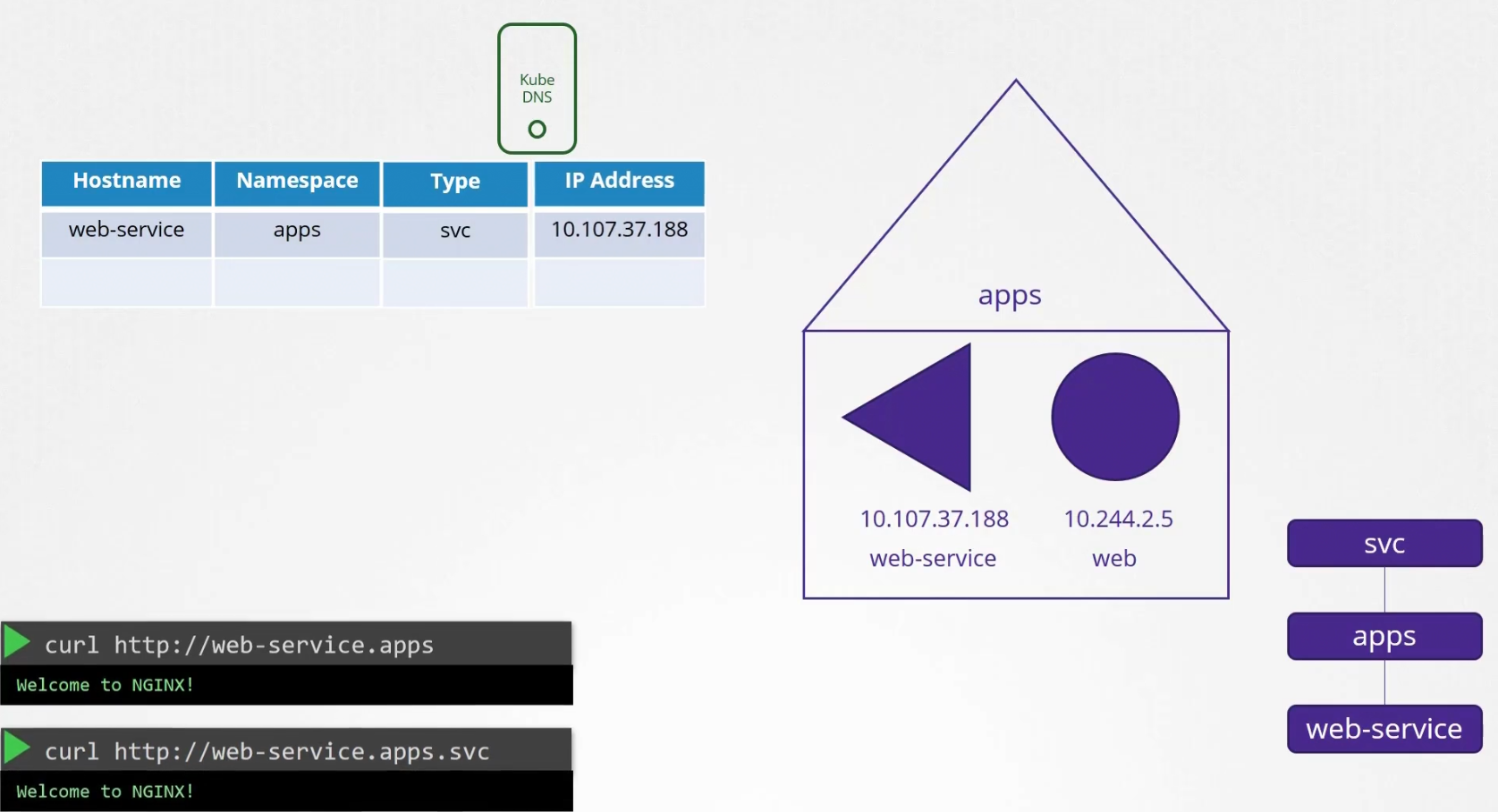

All the services are further grouped together into a subdomain of their own, called svc.

So we can use service-name.namespace-name.svc

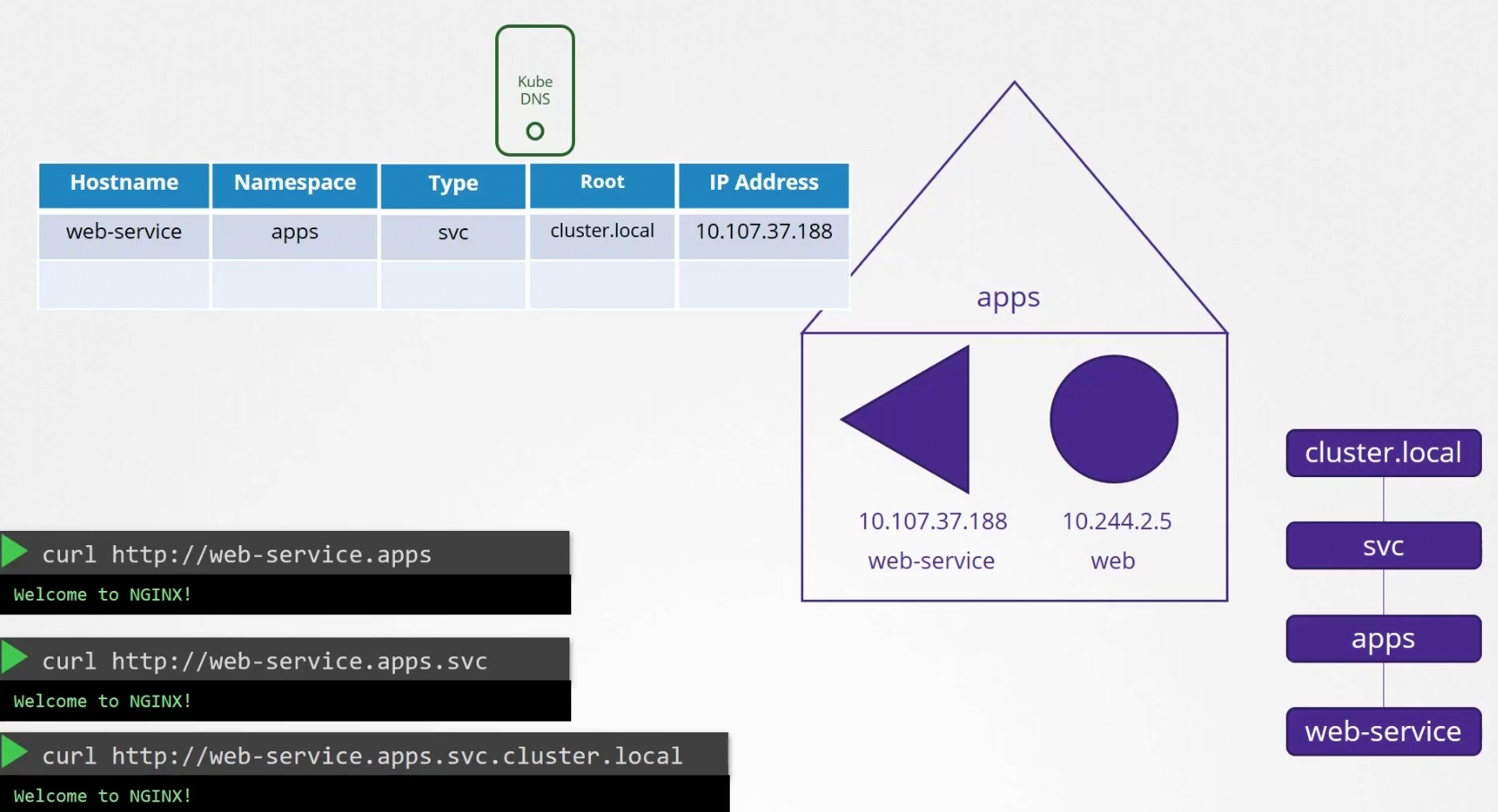

Finally, all the pods and services are grouped together under a root domain for the cluster, cluster.local.

So the fully qualified name of the service is service-name.namespace-name.svc.cluster.local, which here is web-service.apps.svc.cluster.local. That’s it for services.

How do we connect to pods by name, for example using curl?

Although this is not commonly done, Kubernetes can create DNS names for pods. It builds the name from the pod’s IP, replacing the dots with dashes. For example, the web pod’s IP 10.244.2.5 turns into 10-244-2-5

Pods are part of the pod group, which in turn is part of the cluster.local group that holds all pods and services.

This is how the test pod can reach the web pod in the apps namespace.

The same goes for the test pod, if someone wants to reach it.

It is in the default namespace, in the pod group, and under cluster.local, so its name ends in default.pod.cluster.local.
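The naming scheme can be captured in two small helpers. They only build the strings; they do not query any DNS server. The default cluster domain cluster.local and the names web-service and apps come from the article.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Fully qualified DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def pod_fqdn(pod_ip, namespace="default", cluster_domain="cluster.local"):
    """Fully qualified DNS name of a pod, derived from its IP address."""
    dashed_ip = pod_ip.replace(".", "-")
    return f"{dashed_ip}.{namespace}.pod.{cluster_domain}"


print(service_fqdn("web-service", "apps"))
print(pod_fqdn("10.244.2.5", "apps"))
```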

CoreDNS in Kubernetes

We keep the names and IPs on a DNS server and then point the pods to that DNS server by adding an entry to their /etc/resolv.conf

Every time a pod is created, the same thing is done for it.

Kubernetes deploys CoreDNS as its DNS server.

It runs as a pod

It requires a configuration file called “Corefile”, which lists the plugins to use (errors, health, etc.)

If a pod tries to reach www.google.com, the request is forwarded to the nameserver specified in the CoreDNS pod’s /etc/resolv.conf file.

That file is set to use the nameserver from the Kubernetes node. The Corefile is passed into the pod as a ConfigMap object.

Now, whenever a pod or service is created, the CoreDNS server adds a record for it.

But how do pods reach the DNS server?

The CoreDNS deployment also comes with a service, called kube-dns

Once configured properly, you can reach the websites/pods (10-244-1-15, 10-244-2-5) by name.

For pods, use the fully qualified name.

Ingress

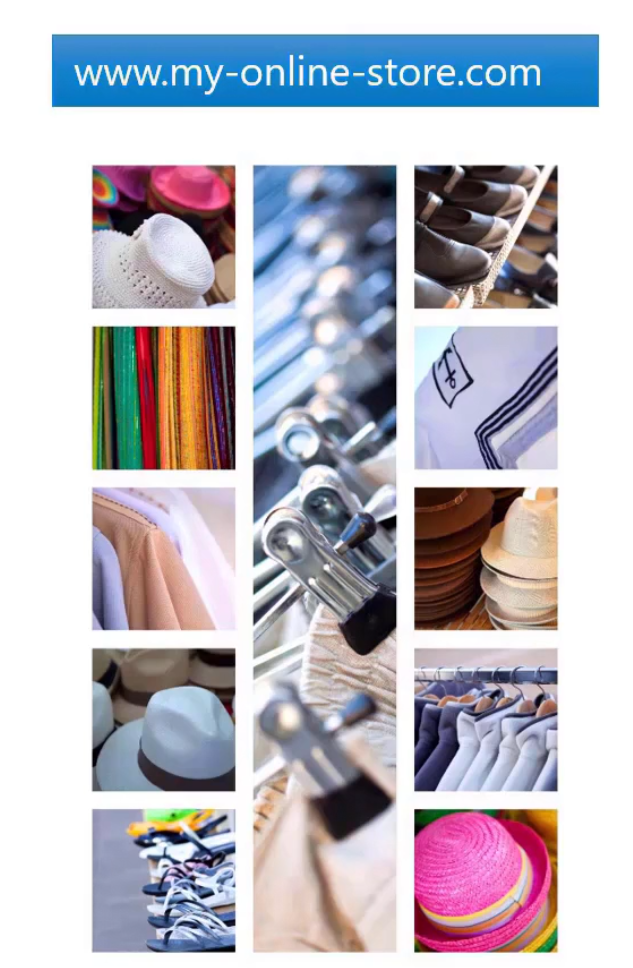

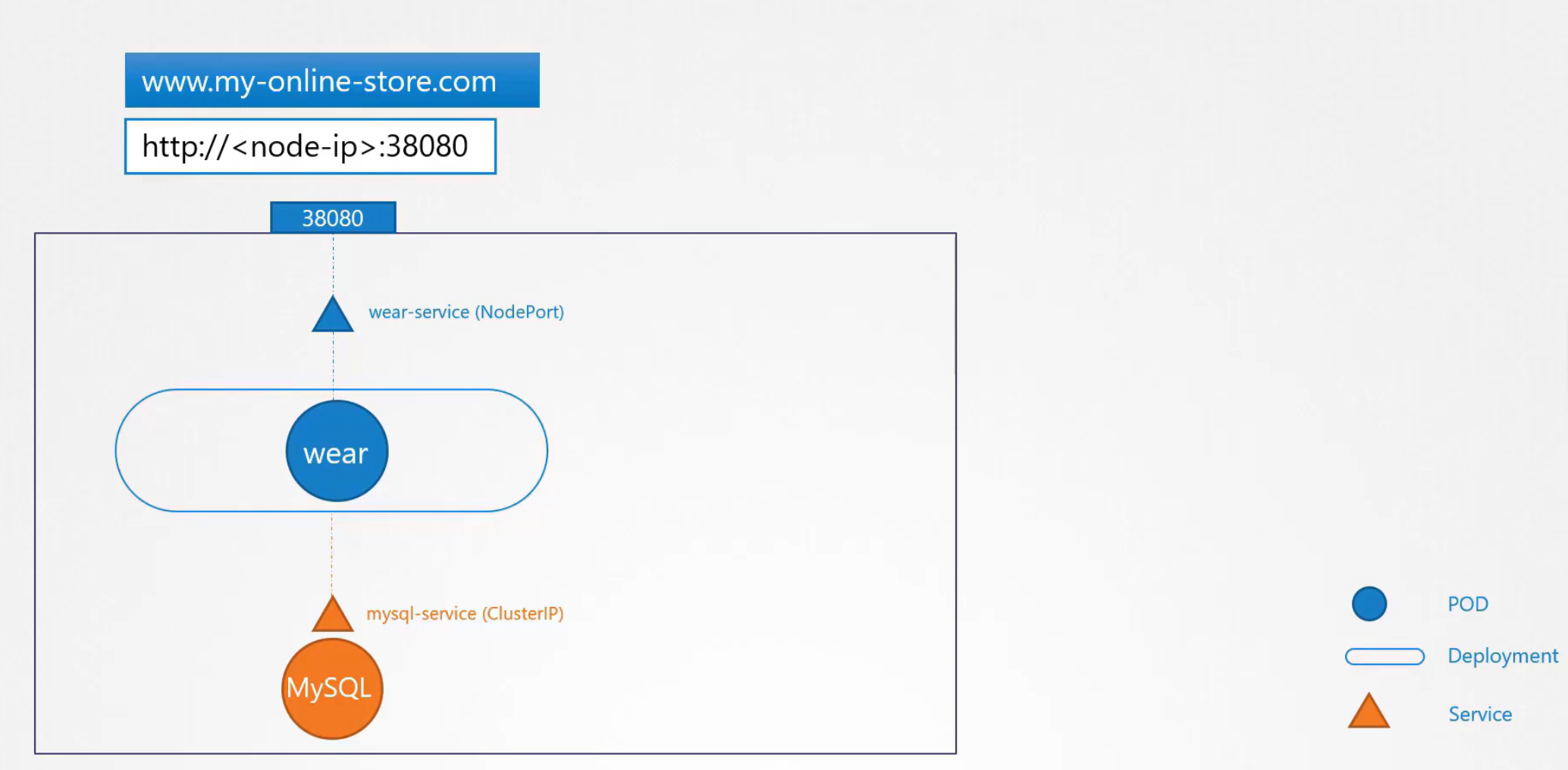

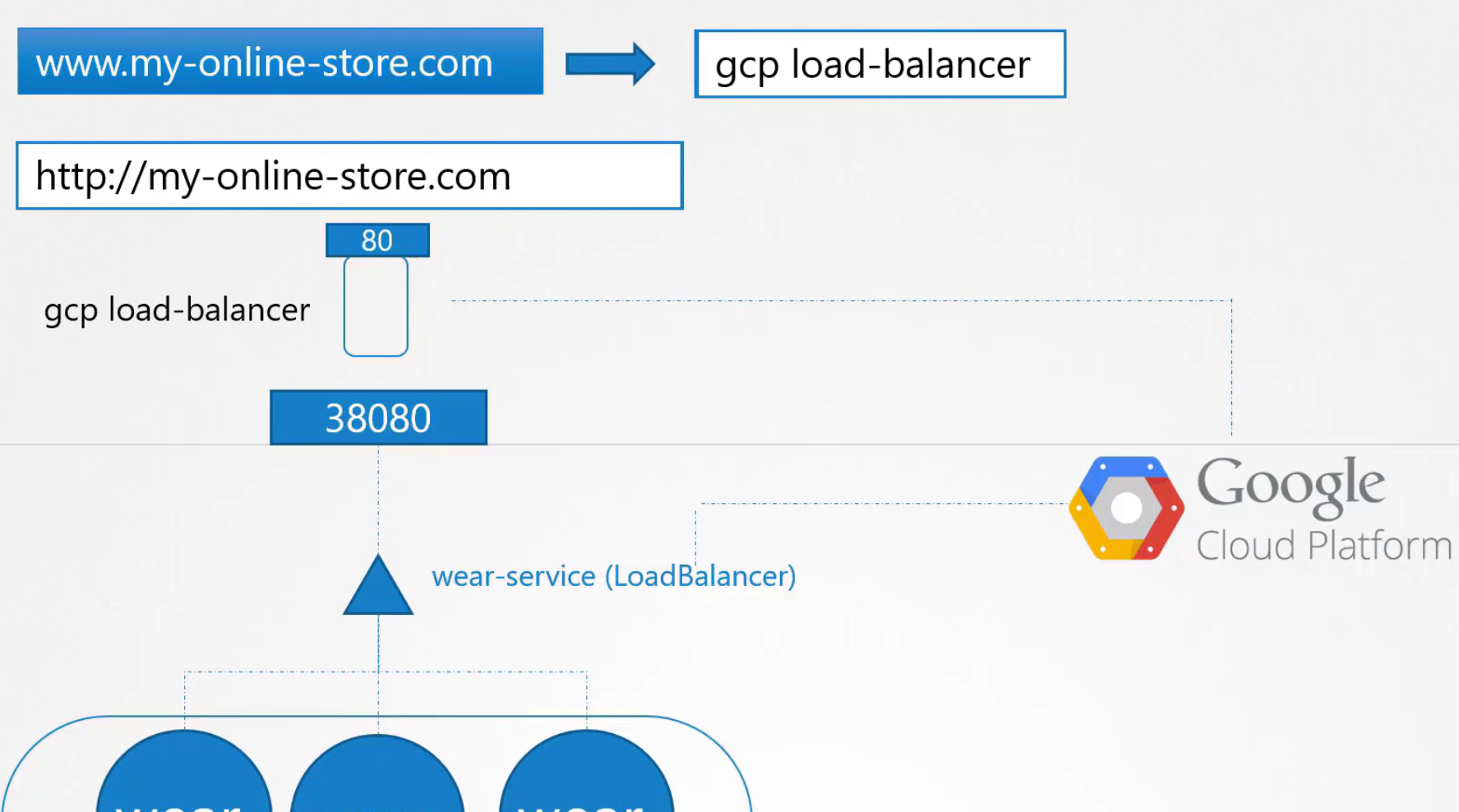

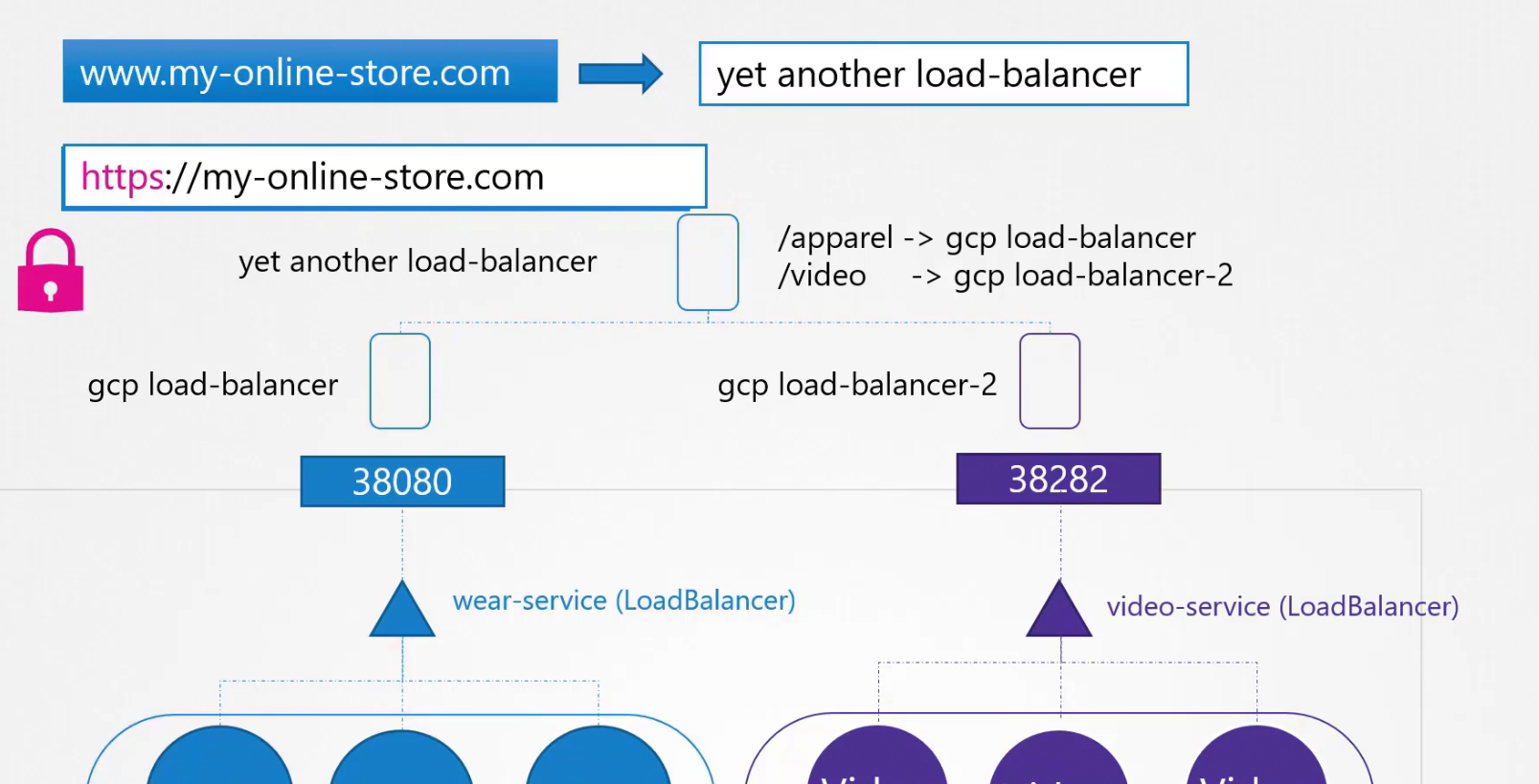

Assume that you have an online store

So you create an application pod (“wear”) that holds all of these images.

Your application needs a database, so you create a MySQL pod. To connect the online store pod (“wear”) to the database pod, a service named “mysql-service” of type ClusterIP is created.

To make the application pod available from outside, you create another service (“wear-service”) of type NodePort and expose it on port 38080. (Note that NodePorts are allocated from the range 30000-32767 by default, so a real cluster would need a port inside that range.)

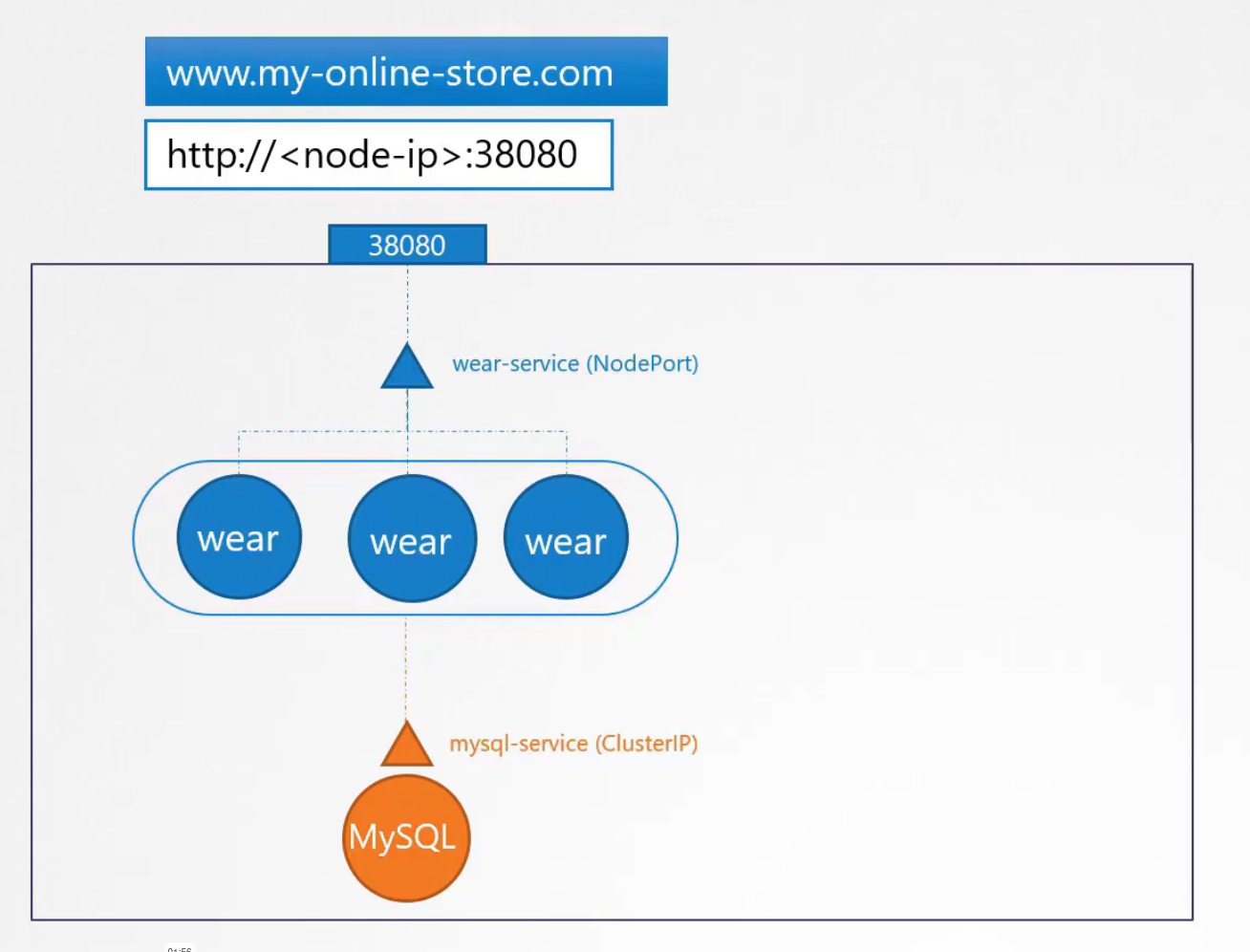

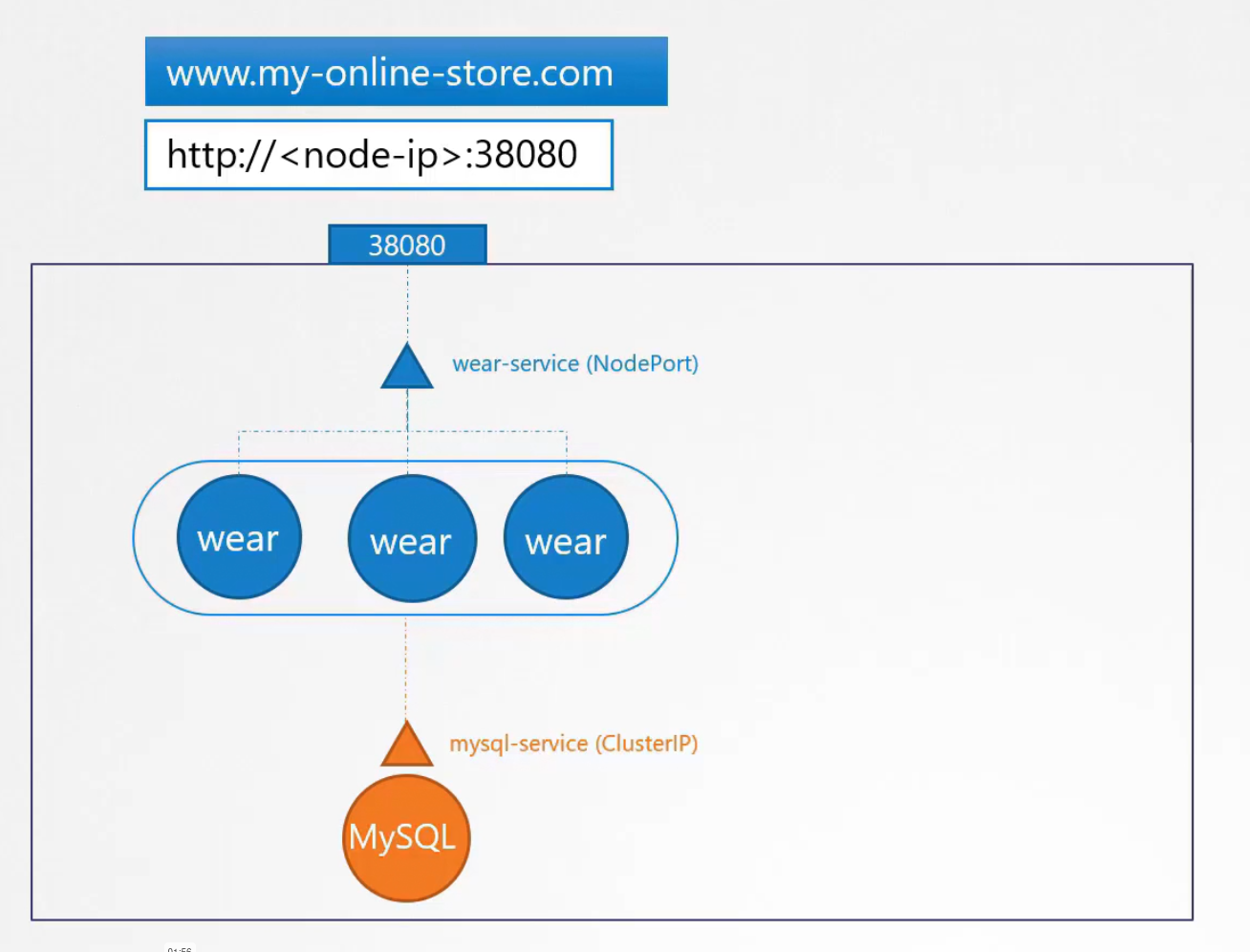

When traffic increases, we increase the number of replicas.

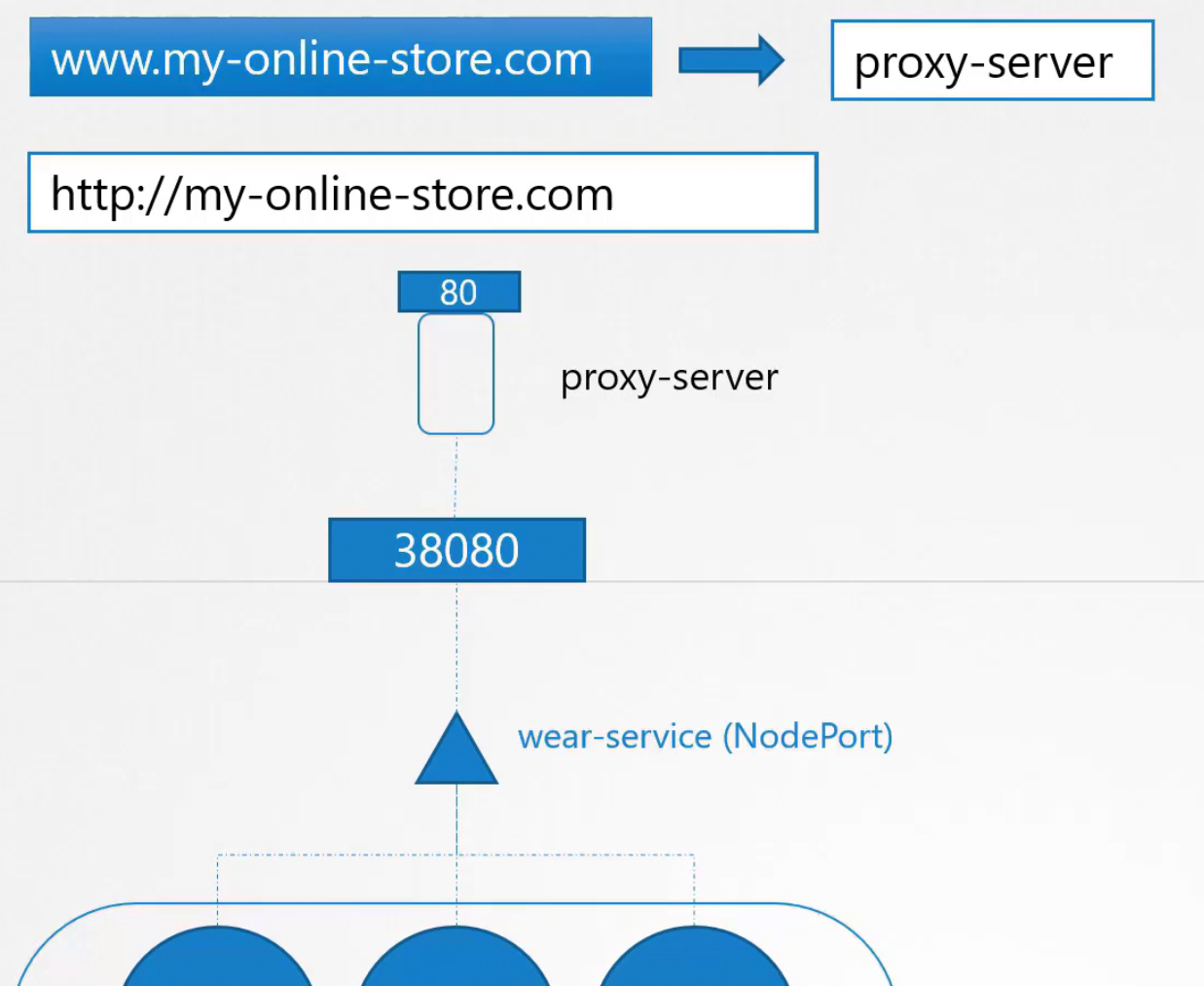

Now, we don’t want users to have to type <node-ip>:38080. Instead, we can point a DNS entry at the node IP, and users can then use my-online-store.com:38080

We also don’t want users to have to remember the NodePort, as it’s a big number. There are 2 ways to solve this.

Way 1: we add a proxy server that listens on port 80 and forwards requests to the NodePort. Then we route the traffic to the proxy server.

Since port 80 is the default port, users no longer need to mention a port. If they go to my-online-store.com, they are sent to the proxy server.

Way 2:

If we use a cloud platform like GCP, a load balancer can be added here instead. It works exactly like the proxy server, and users can reach the website at my-online-store.com

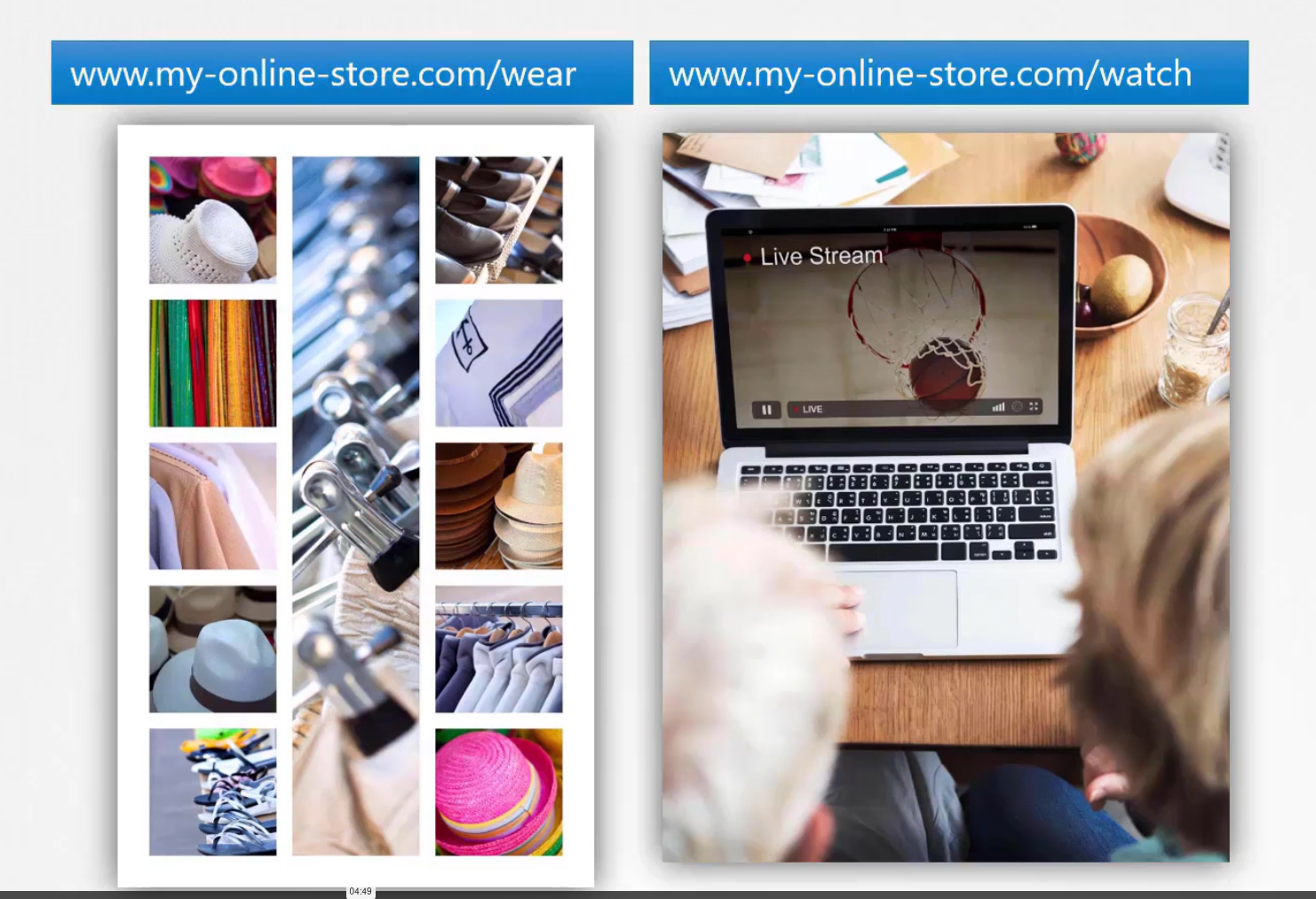

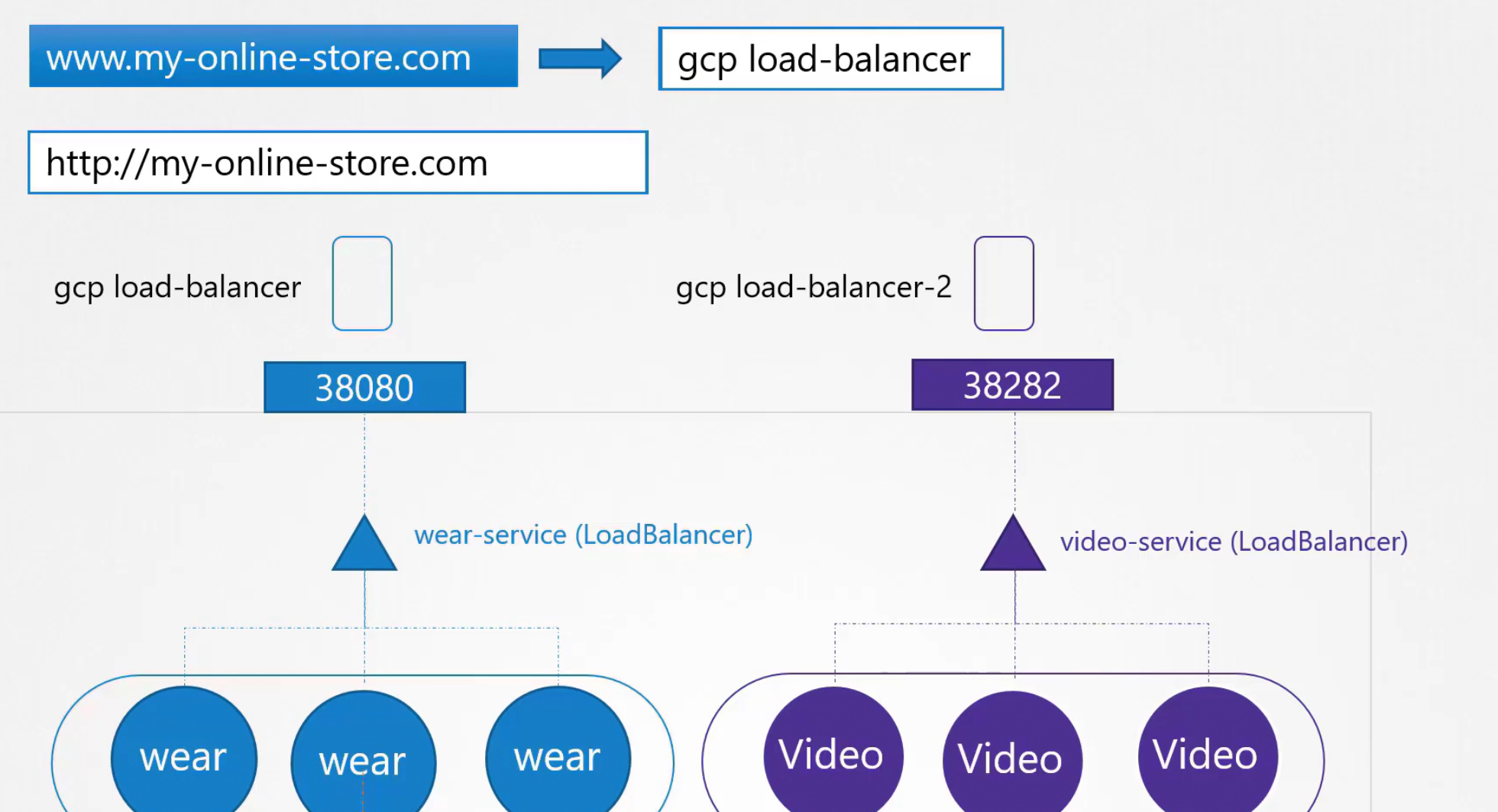

Assume that you want to introduce a new service, such as live video streaming.

How will the architecture change now?

Following way 2, we can add another load balancer in front of the video streaming pods.

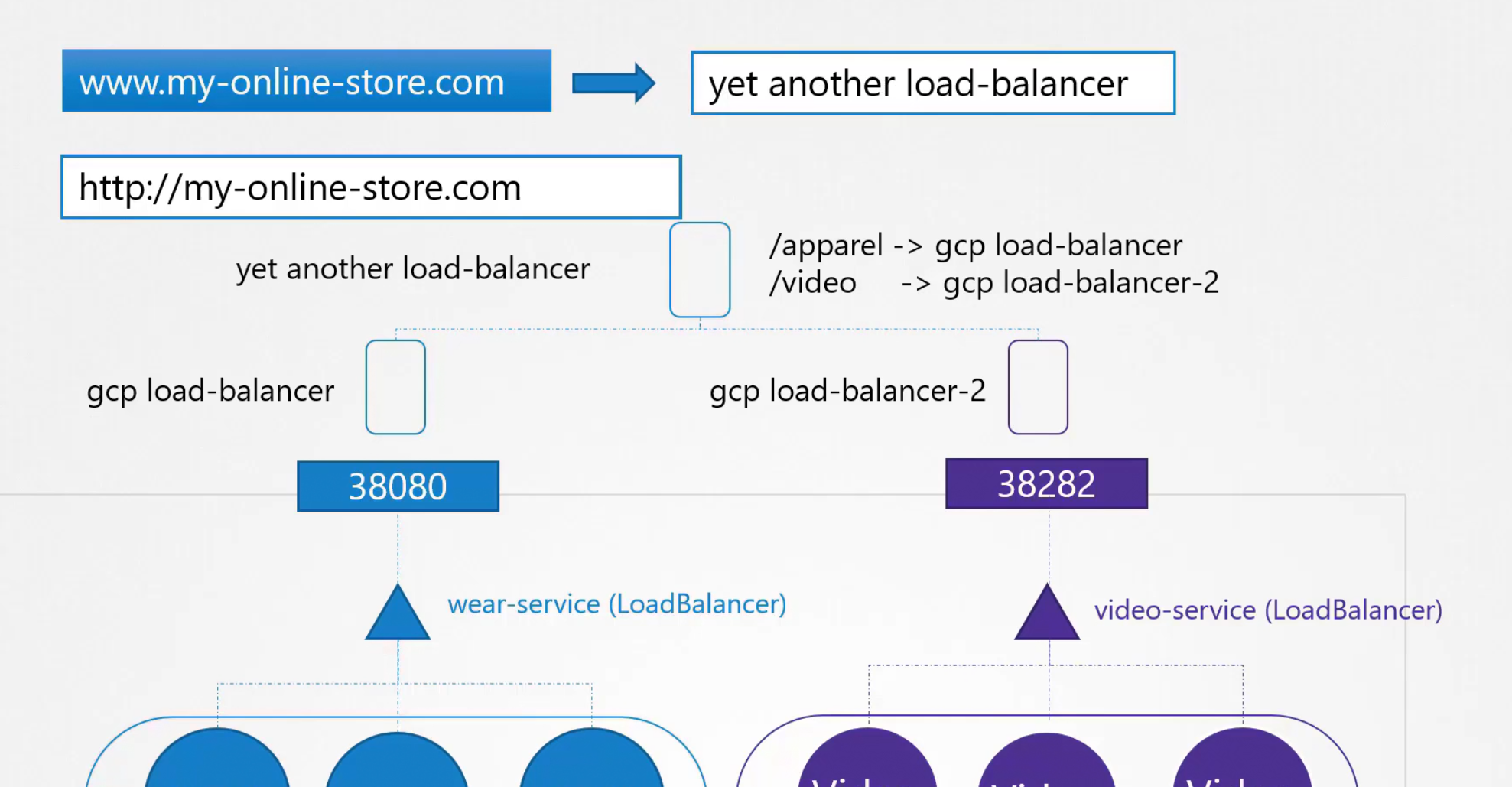

But now we have 2 services, and we need to send traffic to the wear or the video pods depending on what the user asks for. To solve this, yet another load balancer can be added that redirects traffic based on the request.

We then point DNS to this load balancer. We also need to set up an SSL certificate so that my-online-store.com is secure and can be reached over https.

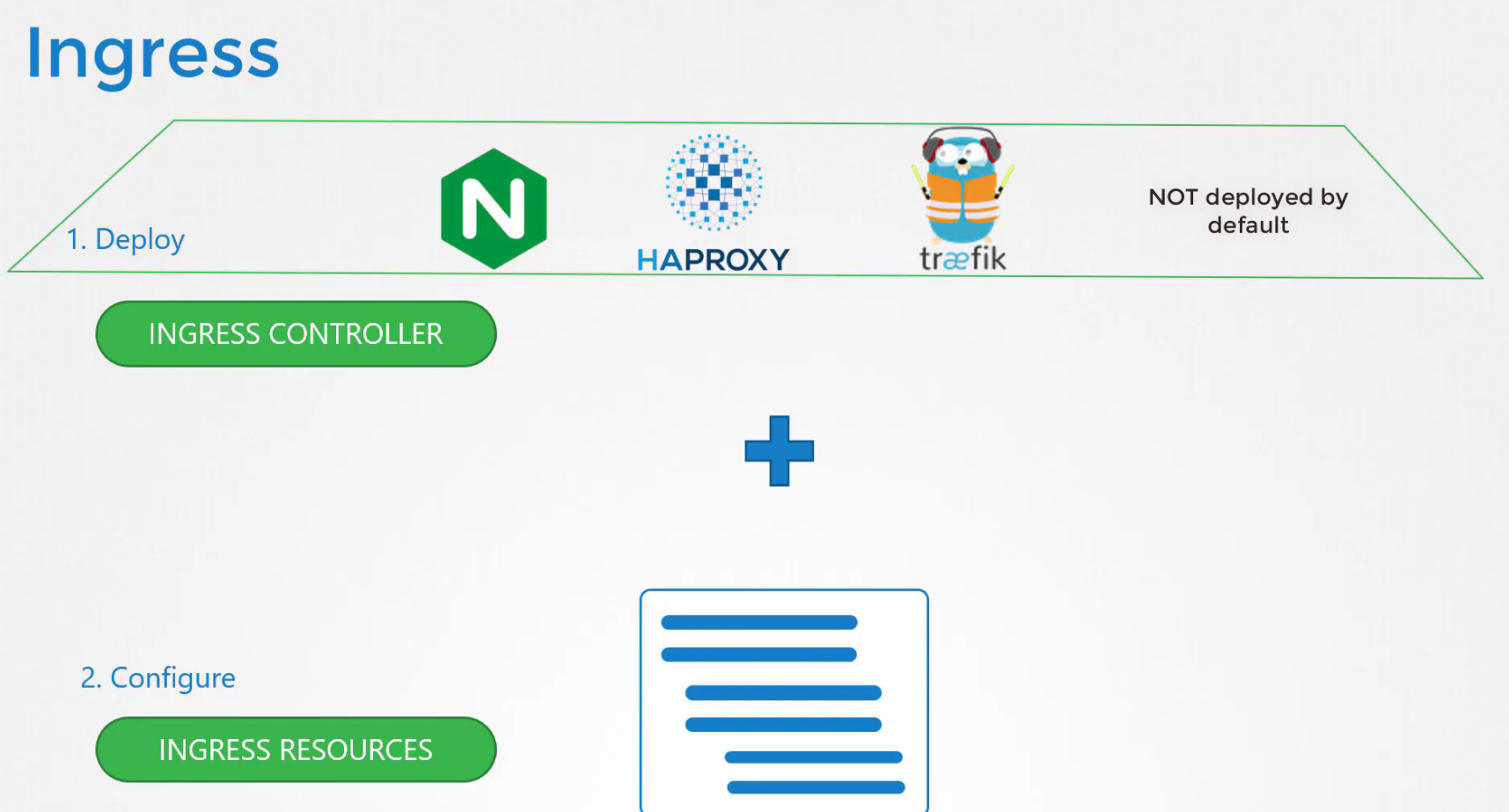

To do all of these things in one place, we can use Ingress.

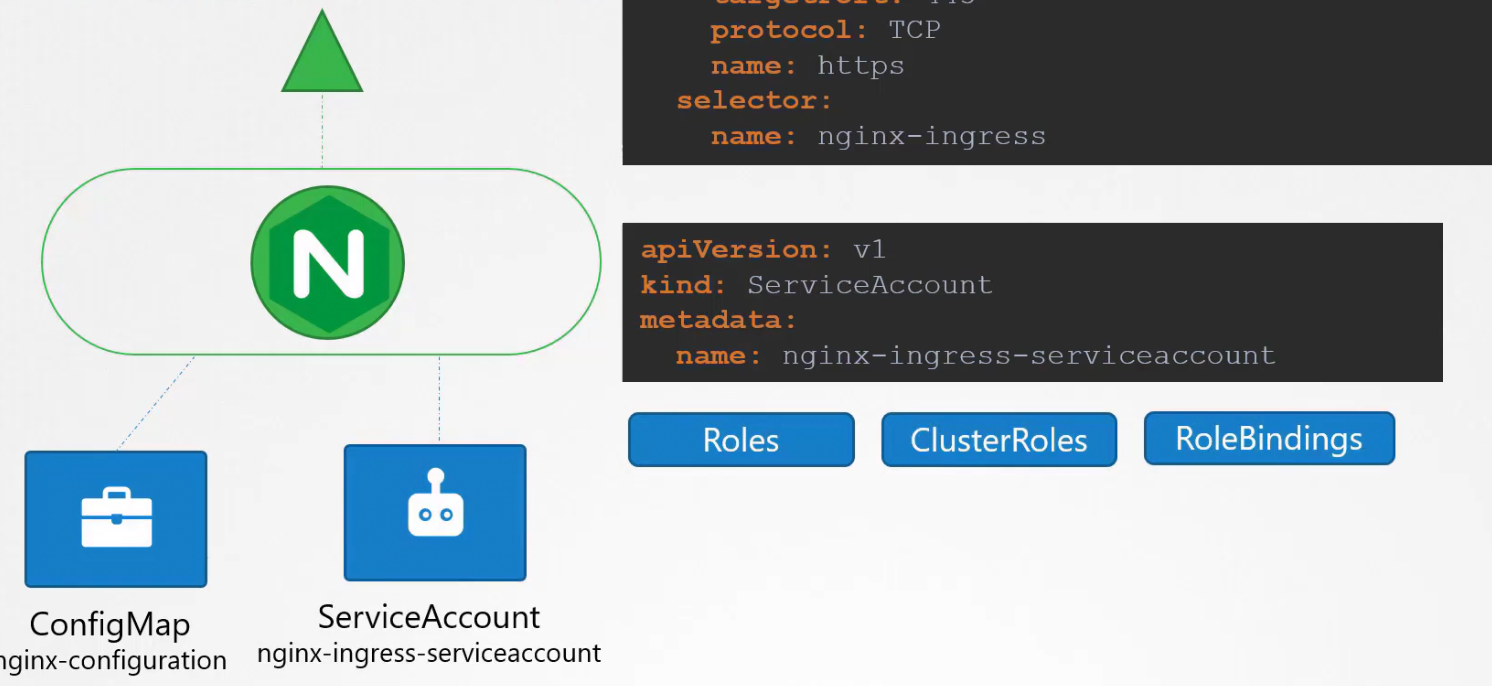

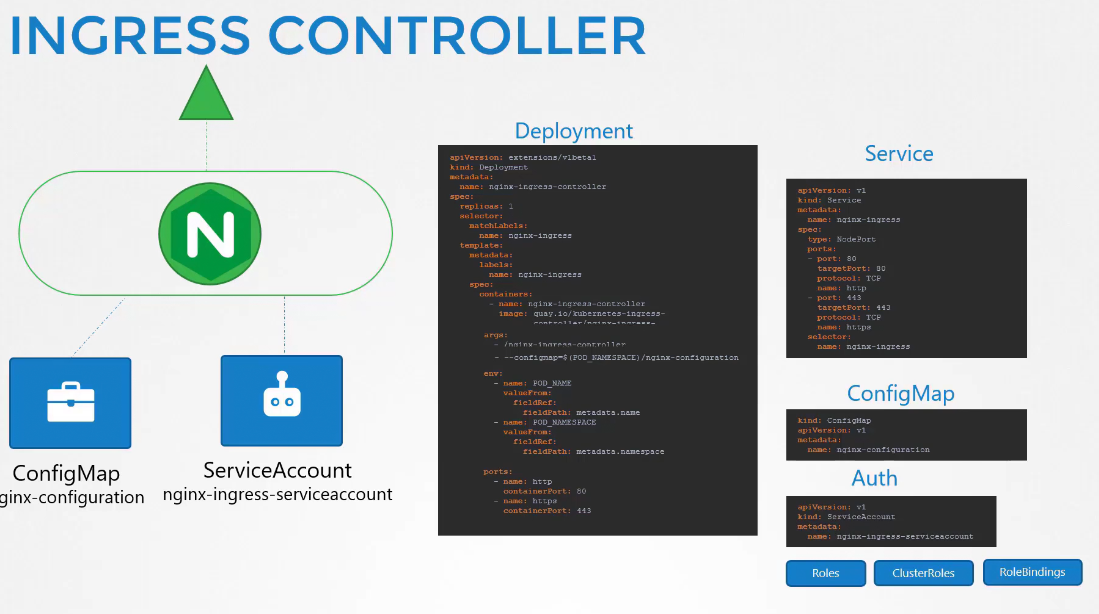

We need to deploy an ingress solution, which is called an ingress controller,

and a set of rules, which are called ingress resources.

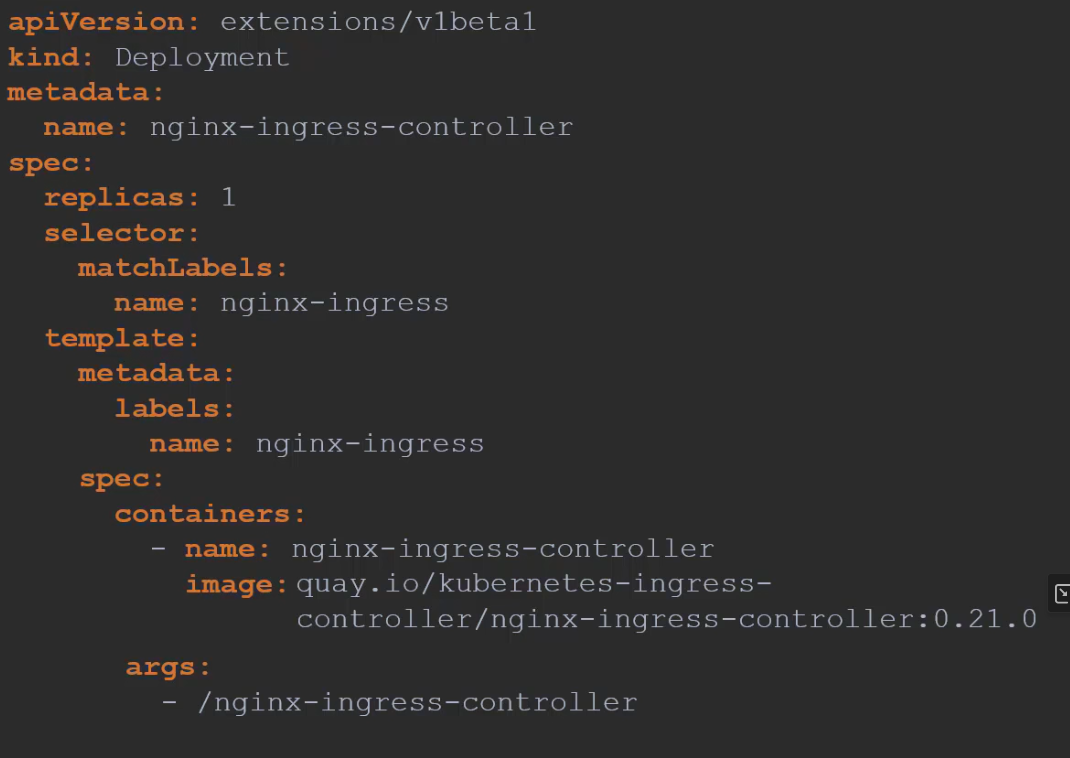

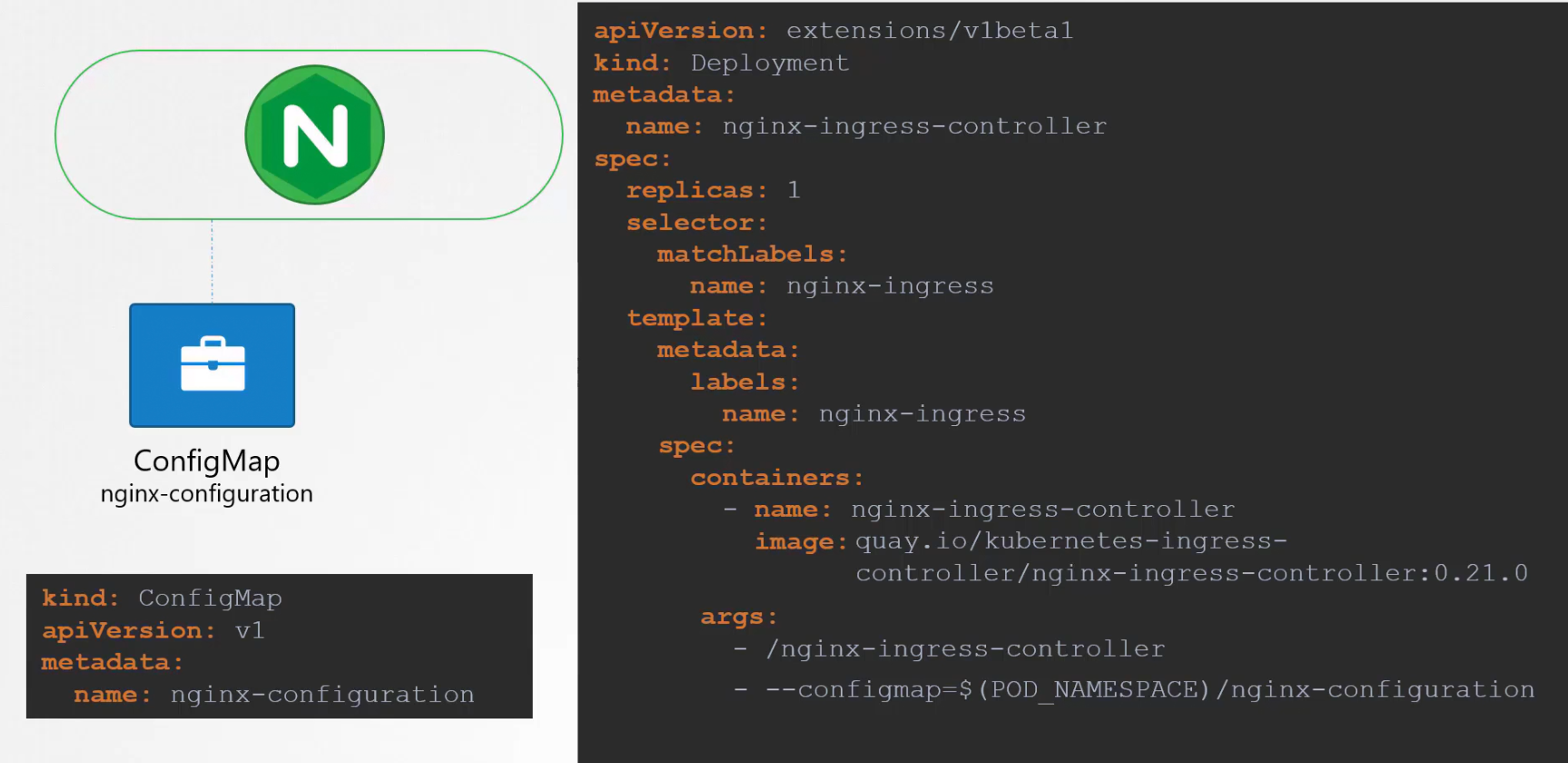

Among these ingress controllers, the GCP load balancer and NGINX are maintained by the Kubernetes project. Let’s use NGINX and deploy it as our ingress controller.

But we are not done yet. NGINX has a set of configuration options such as the path to store the logs, the keep-alive threshold, SSL settings, session timeout, etc.

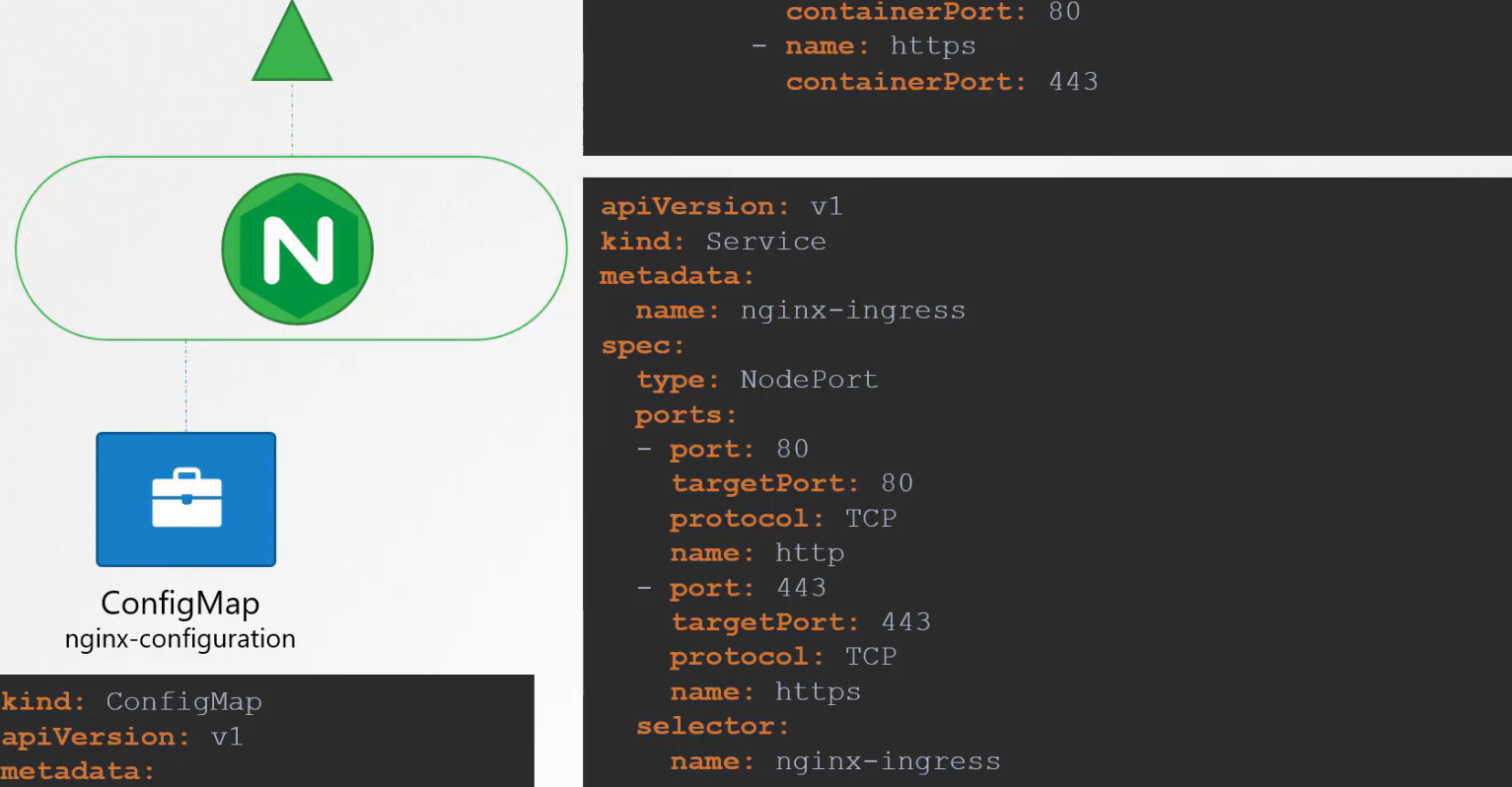

To decouple this configuration data from the NGINX controller image, we have to create a ConfigMap object and pass it in.

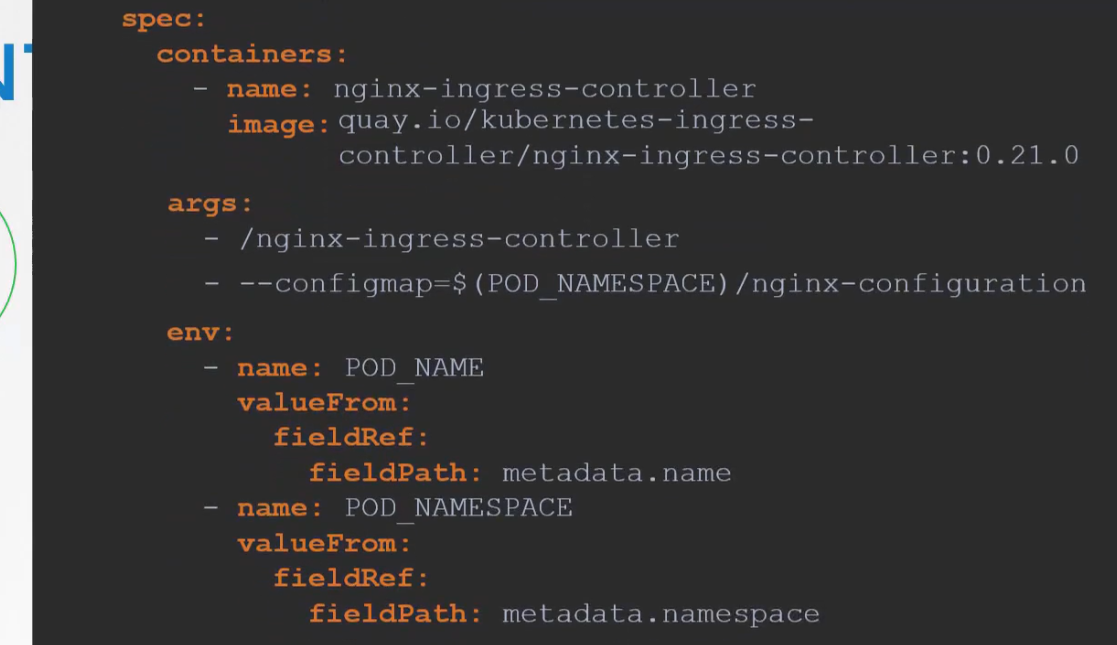

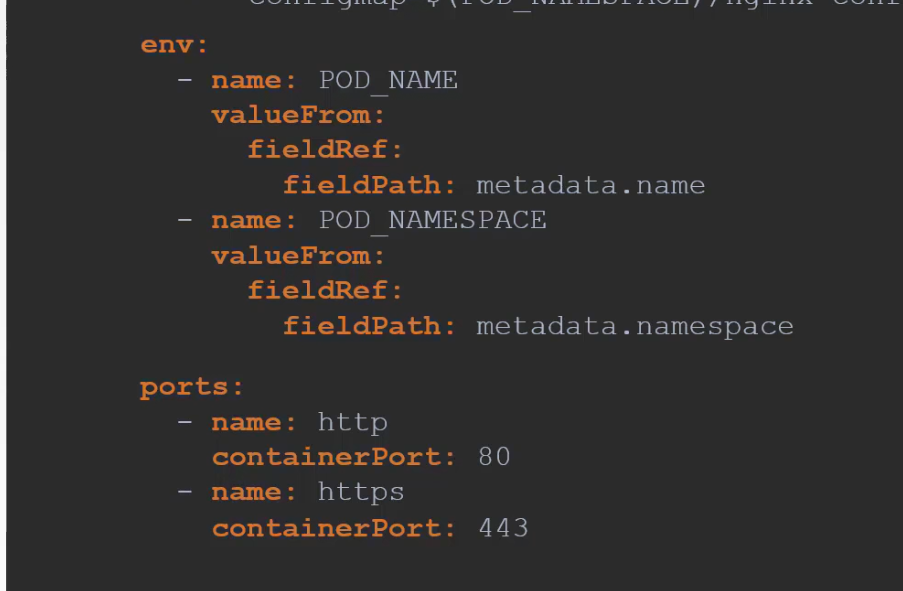

We also need to pass 2 environment variables that carry the pod’s name and the namespace it is deployed to.

The NGINX service needs these 2 values to read its configuration data from within the pod. Then we specify the ports used by the ingress controller.

Then we create a service to expose the NGINX controller to the outside world.

The ingress controller has additional intelligence built in: it monitors the Kubernetes cluster for ingress resources and reconfigures the underlying NGINX server when something changes. To do this, it needs a service account with the right permissions.

Here a service account is created with the correct roles and role bindings.

So overall, this is how we create the ingress controller.

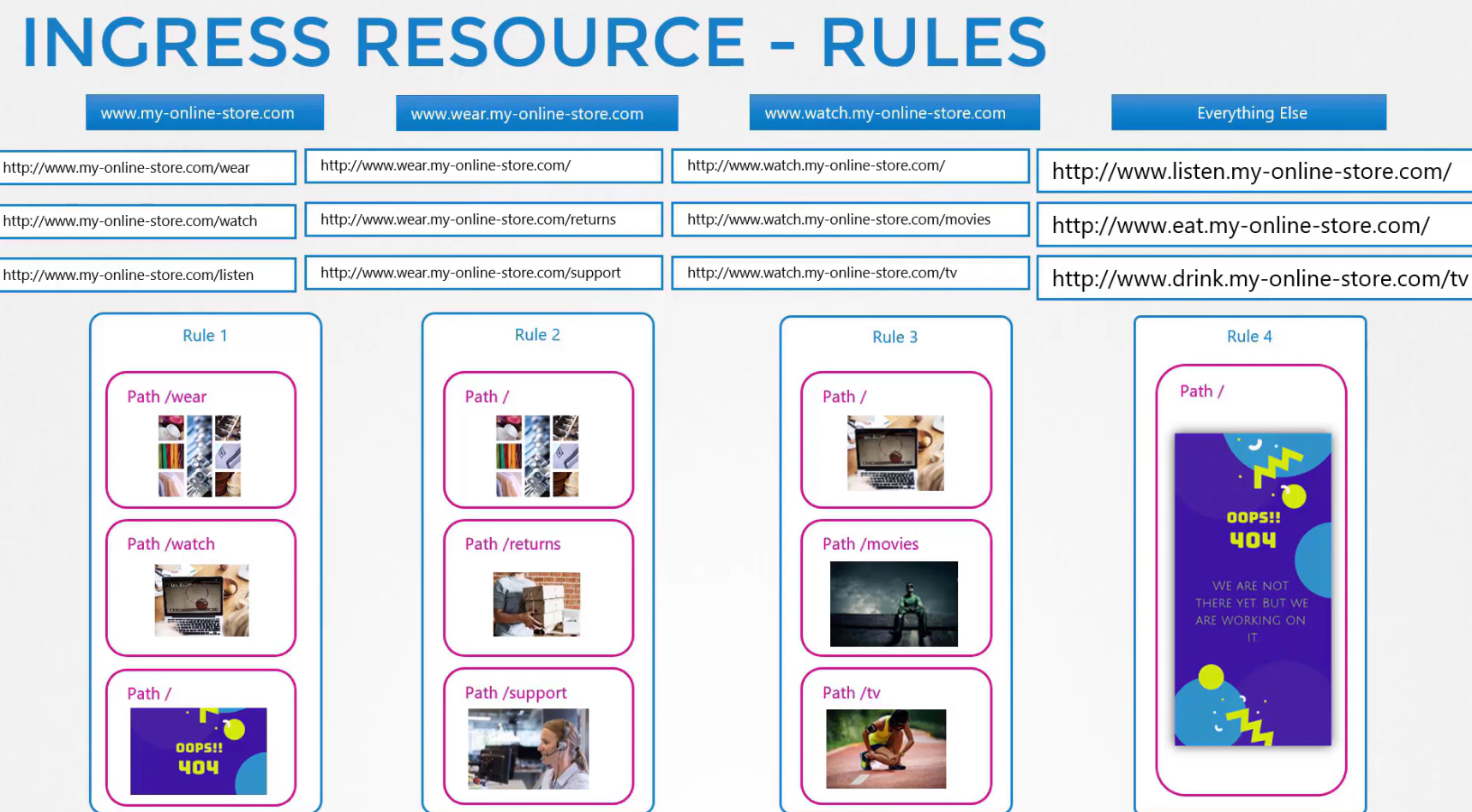

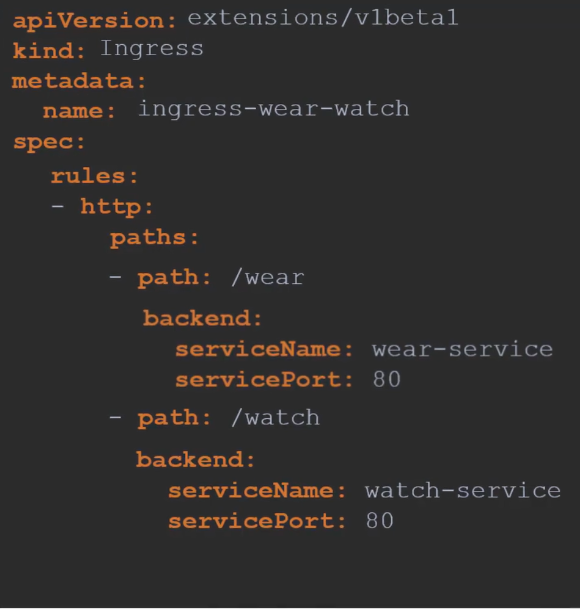

Let’s now create an ingress resource (a set of rules for the ingress controller).

There are different types of rules we can set.

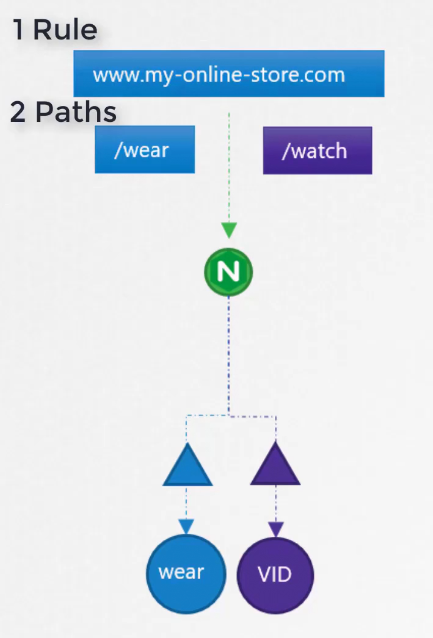

Our goal was to route traffic based on the path (/wear or /watch).

To do that, 1 rule is enough, since we have 1 domain with 2 paths (for wear and watch).

For the wear and watch pods, we can set this rule to route the traffic.

In the image, you can see a service in front of each set of pods. So the YAML file routes traffic based on the path (/wear, /watch) to the corresponding service (wear-service, watch-service).

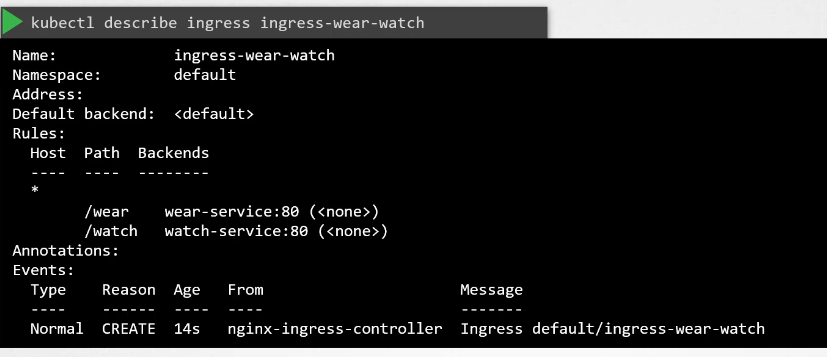

Once we create the resource and check its description,

we can see 2 URLs in the Backends.

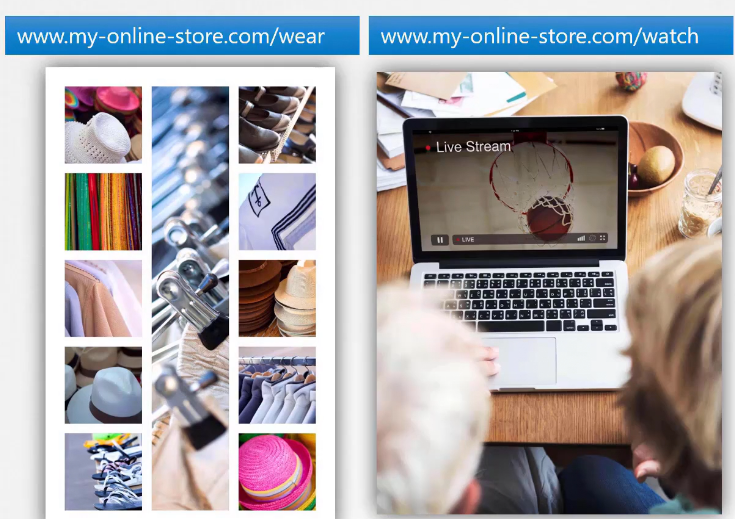

So this is what the user gets when going to the wear or watch path.

But what if the user asks for a path that we don’t have?

We can set up a 404 page and send such requests there.
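The routing decision an ingress rule describes can be sketched as a prefix lookup with a fallback. This is only a model of the behaviour, not the NGINX controller’s code. The paths and the names wear-service and watch-service are from the article; port 80 for watch-service and the backend name default-http-backend are assumptions.

```python
# (path prefix, service, port); the watch-service port is an assumption.
RULES = [
    ("/wear", "wear-service", 80),
    ("/watch", "watch-service", 80),
]
# Hypothetical name for the backend that serves the 404 page.
DEFAULT_BACKEND = ("default-http-backend", 80)


def route(path, rules=RULES):
    """Return the (service, port) for `path`, matching whole path segments."""
    for prefix, service, port in rules:
        if path == prefix or path.startswith(prefix + "/"):
            return (service, port)
    return DEFAULT_BACKEND


print(route("/wear"))
print(route("/watch/live"))
print(route("/eat"))
```

Matching on whole segments means that a path like /wearables does not accidentally land on wear-service.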

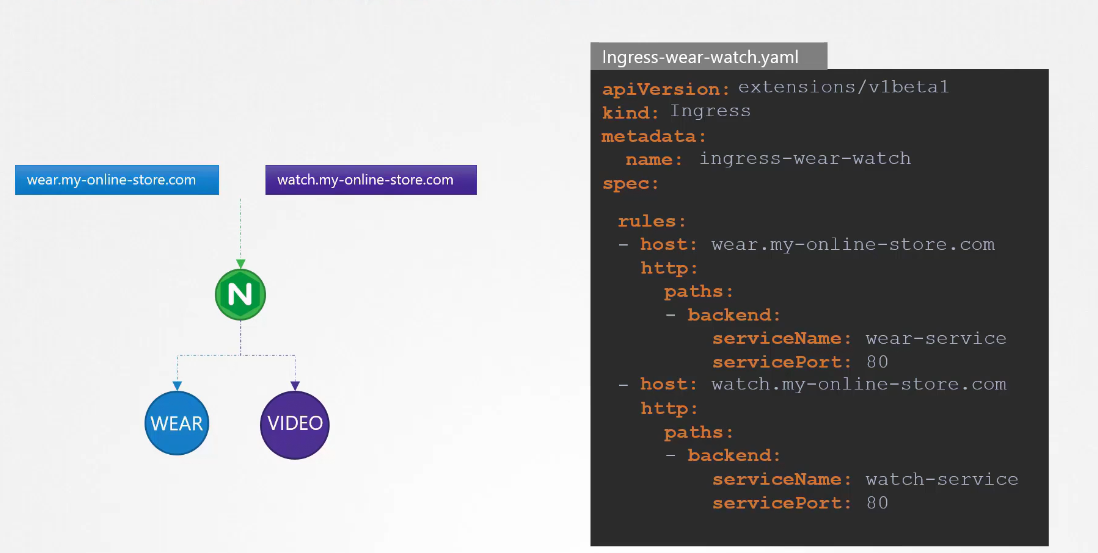

There is also another way to handle the whole scenario. If we had dedicated domain names for the wear and watch pages, we could use them as hosts in the rules and route each one directly to the service responsible for its pods. (Note: this image does not show the services; you can only see the wear and watch pods. But don’t worry, the services are there in front of the pods.)

Now, in k8s version 1.20+, we can create an Ingress resource in the imperative way like this:

Format - kubectl create ingress <ingress-name> --rule="host/path=service:port"

Example -

kubectl create ingress ingress-test --rule="wear.my-online-store.com/wear*=wear-service:80"

Find more information and examples at the reference link below:

https://kubernetes.io/docs/reference/generated/kubectl/kubectl-commands#-em-ingress-em-

References:-

https://kubernetes.io/docs/concepts/services-networking/ingress

https://kubernetes.io/docs/concepts/services-networking/ingress/#path-types
