Kubernetes 101: Part 8

Md Shahriyar Al Mustakim Mitul

Autoscaling

Autoscaling means that services can be scaled up or down automatically, depending on demand.

To achieve true cloud native autoscaling, three things are needed.

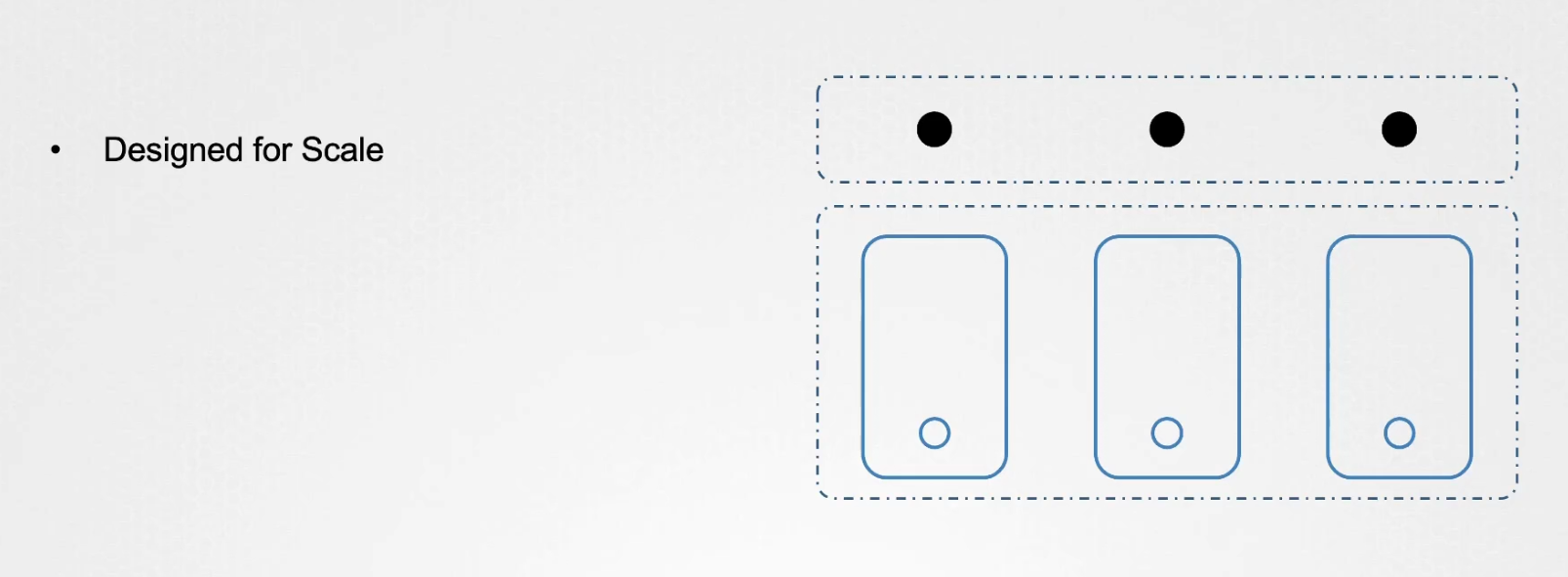

- Both the application and the infrastructure must be designed to scale effectively.
- The system should be able to monitor the workload and allocate additional resources as needed, without manual intervention.
- Scaling should be bidirectional: the system should be able to scale up and down as demand fluctuates.

Kubernetes has three types of autoscaler:

- Horizontal Pod Autoscaler
- Vertical Pod Autoscaler
- Cluster Autoscaler

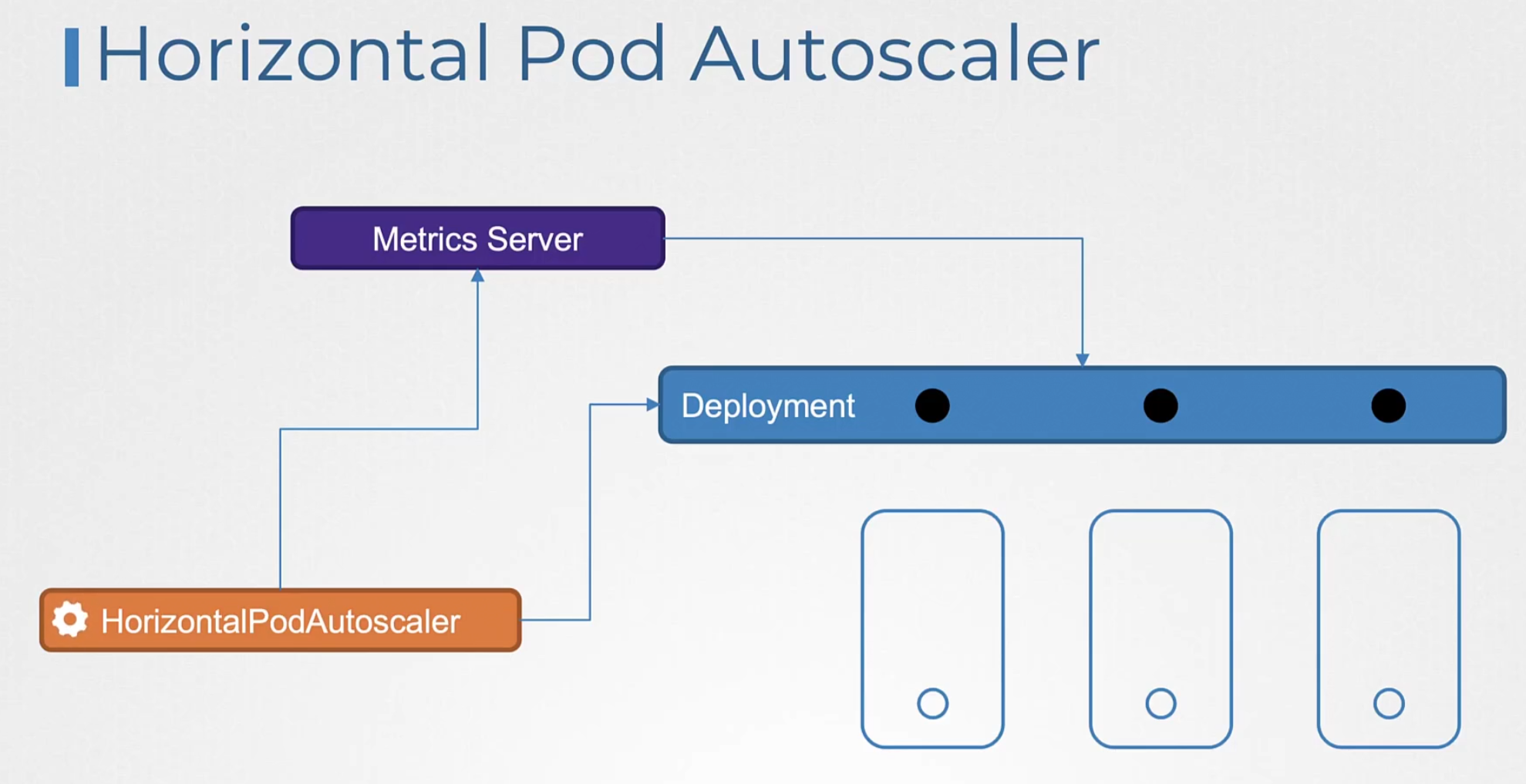

Horizontal Pod Autoscaler (HPA)

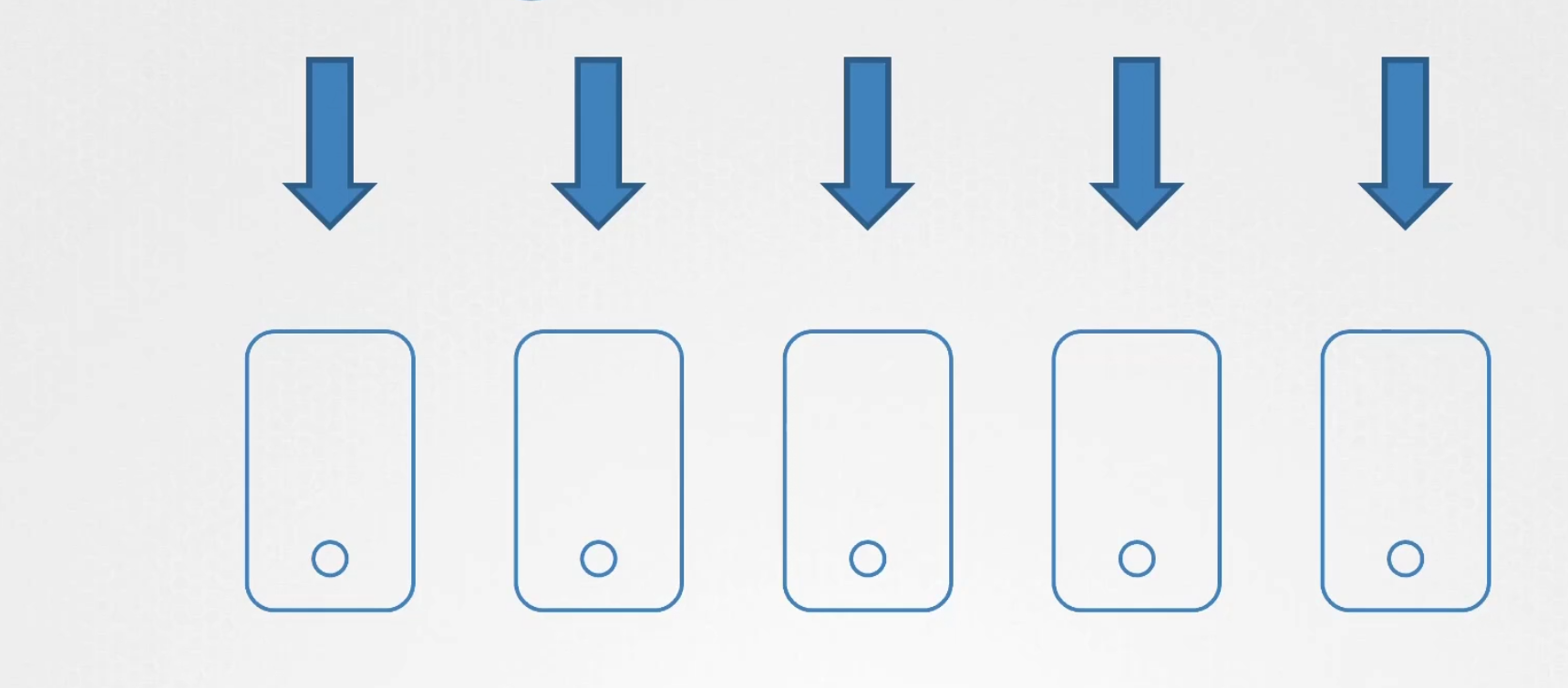

Horizontal autoscaling means changing the number of pods. This autoscaler scales the number of pods up (adds replicas) or down (removes replicas) depending on metrics, such as CPU utilization.

How to create one?

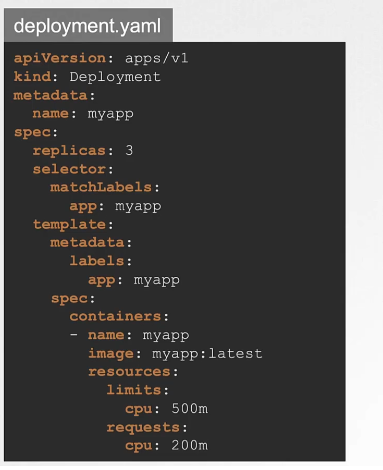

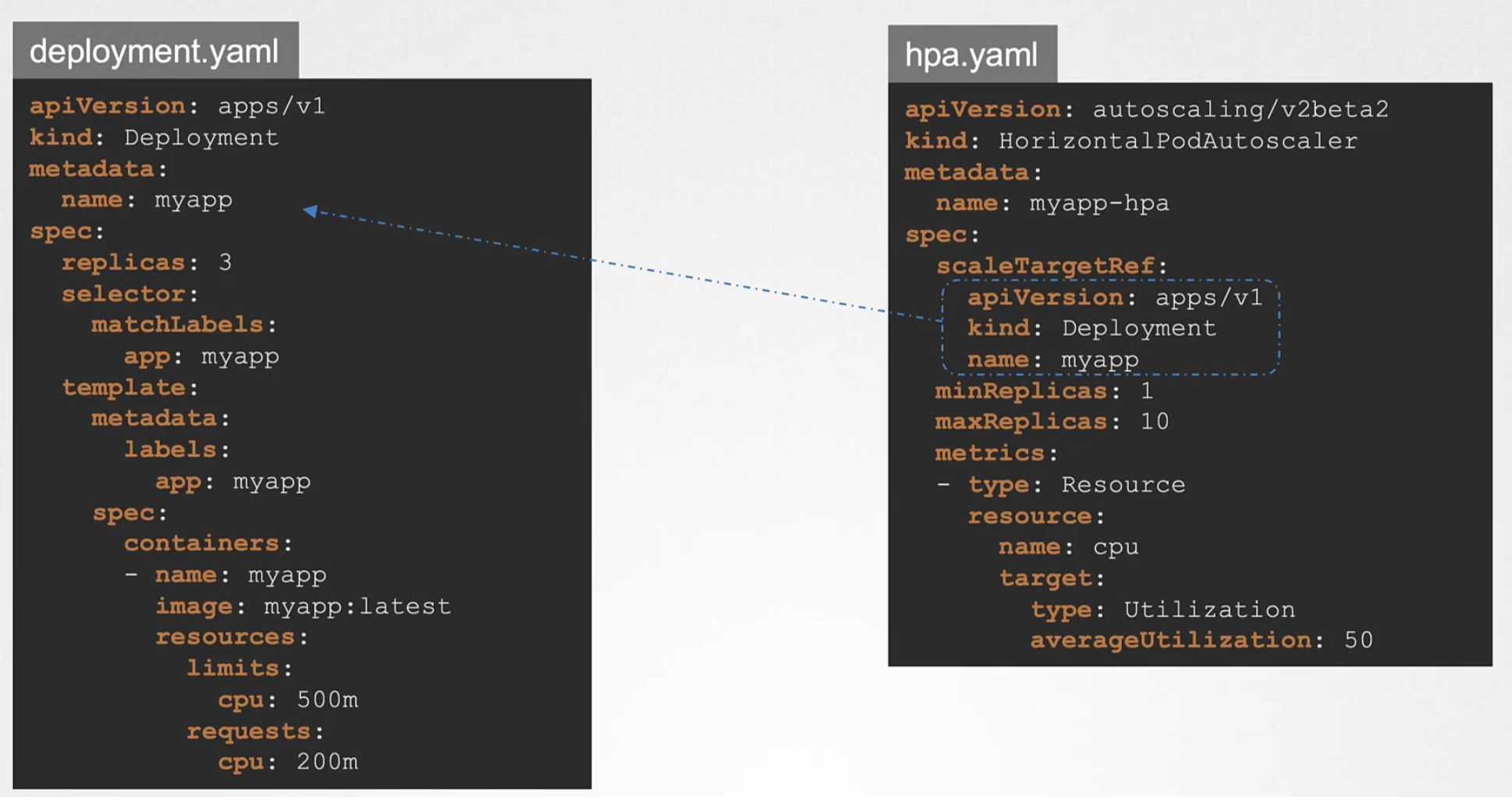

First, create a deployment.

Second, this deployment will be targeted by the Horizontal Pod Autoscaler (HPA).

Here you can see the `hpa.yaml` that targets the myapp deployment.

The metric is CPU utilization, with a target of 50%. If average utilization goes above that, new replicas are created. Here minReplicas is 1 and maxReplicas is 10.

Then apply it using `kubectl create -f hpa.yaml`.

You can also check the HPA details and see that its reference points to the deployment it’s associated with.

We can also delete the HPA.
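Since the manifest itself only appears in the screenshots, here is a minimal sketch of what an `hpa.yaml` matching the description above could look like. It assumes the `autoscaling/v2` API and a Deployment named `myapp`; the HPA name `myapp-hpa` is a hypothetical choice, not taken from the original.

```yaml
# hpa.yaml: a sketch of an HPA for the myapp Deployment (assumed setup).
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-hpa            # hypothetical name
spec:
  scaleTargetRef:            # the Deployment this HPA scales
    apiVersion: apps/v1
    kind: Deployment
    name: myapp
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50   # target average CPU utilization, in percent
# Related commands (assuming the name above):
#   kubectl create -f hpa.yaml
#   kubectl describe hpa myapp-hpa
#   kubectl delete hpa myapp-hpa
```

Note that CPU utilization is measured relative to the CPU requests of the containers, so the Deployment's pod template needs to set CPU requests for this metric to work.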

Vertical Pod Autoscaler (VPA)

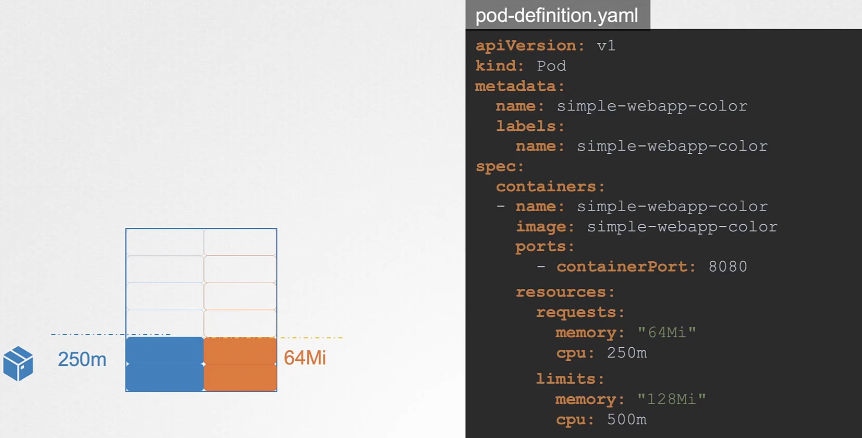

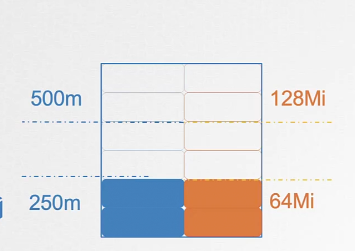

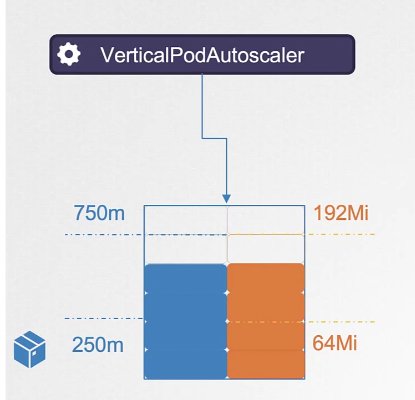

Assume that we have a pod running with 64 MB of memory and 250 millicores (250m) of CPU.

The maximum it can use is 128 MB of memory and 500 millicores of CPU.
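As a rough illustration, those numbers would normally be expressed as resource requests and limits on the container. The pod name, container name, and image below are hypothetical, and the sketch uses `Mi` units where the text says MB.

```yaml
# A sketch of a pod with the requests and limits described above.
apiVersion: v1
kind: Pod
metadata:
  name: demo-app                      # hypothetical name
spec:
  containers:
    - name: app                       # hypothetical container name
      image: example.com/demo-app:1.0 # hypothetical image
      resources:
        requests:                     # what the pod starts with
          memory: "64Mi"
          cpu: "250m"
        limits:                       # the maximum it may use
          memory: "128Mi"
          cpu: "500m"
```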

What if the app gets more traffic and reaches the maximum limit?

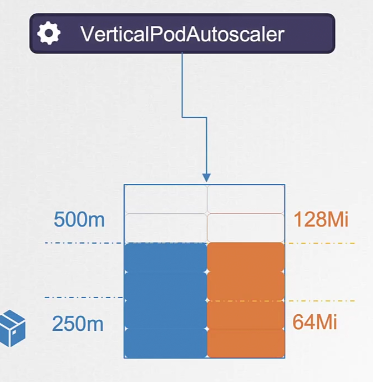

The VPA then comes in and adjusts the amount of memory and CPU so that the pod can keep working properly.

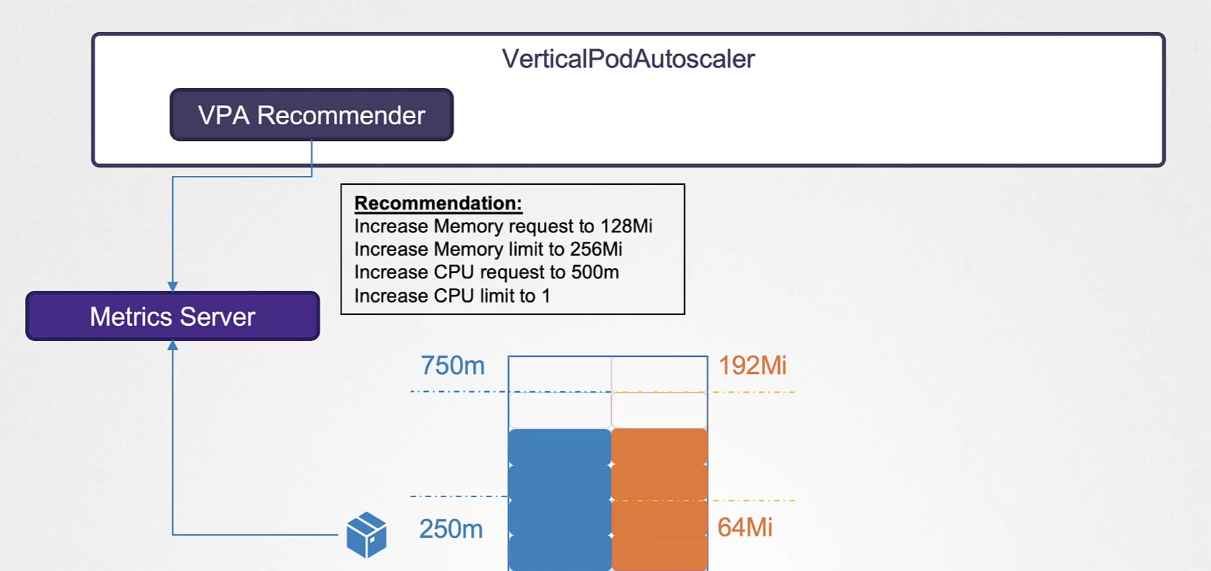

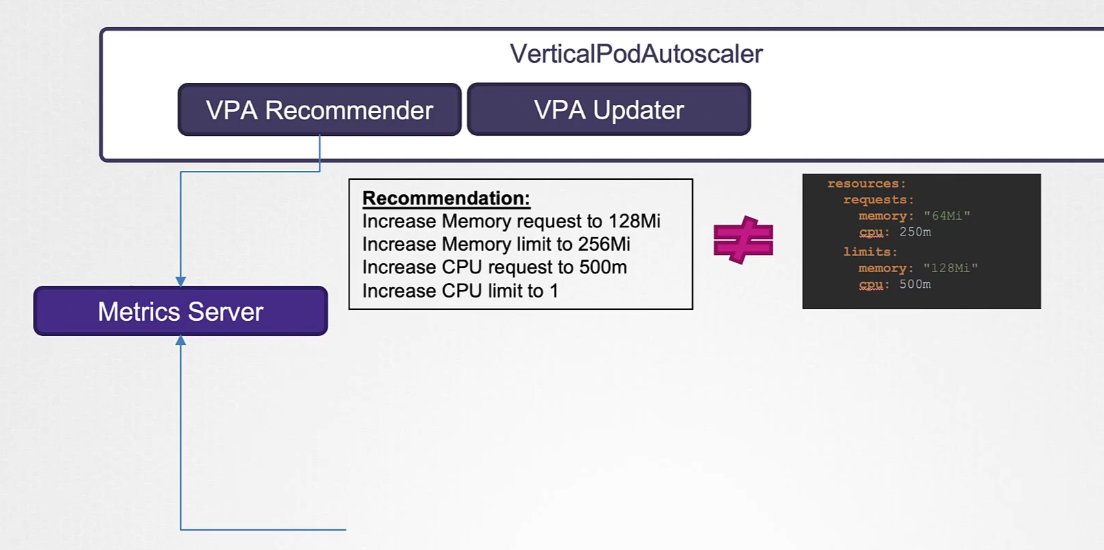

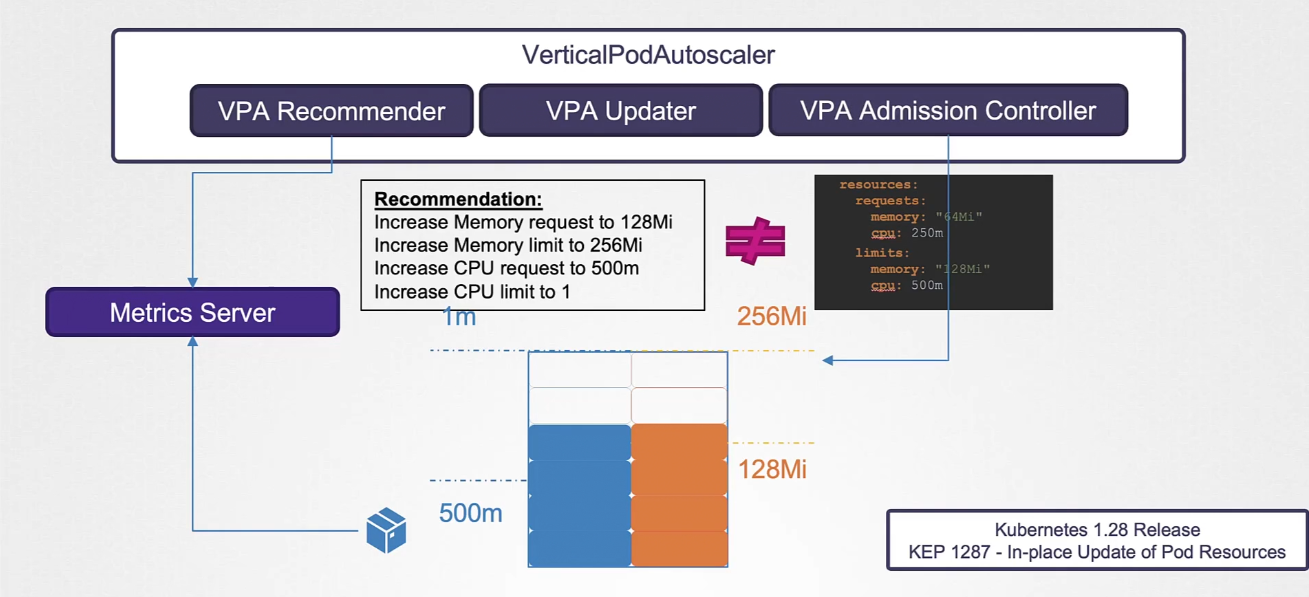

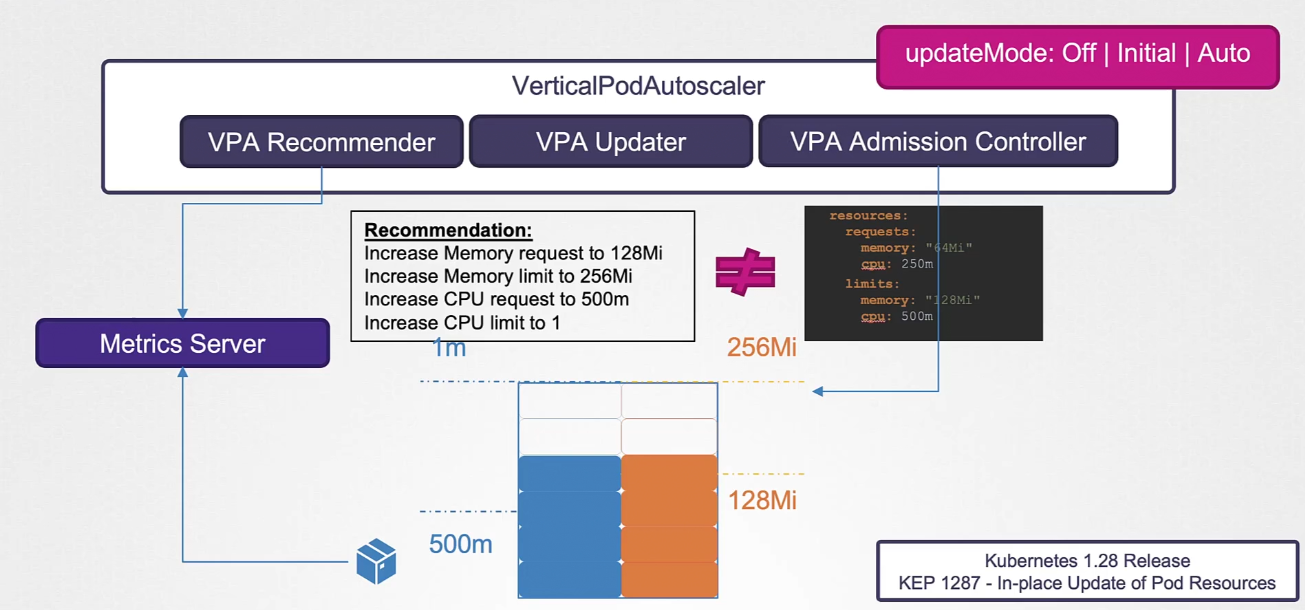

How does VPA work?

First, the VPA Recommender suggests new values based on metrics.

The VPA Updater then shuts down the pod, which triggers the creation of a new pod.

While the new pod is being created, the VPA Admission Controller sets its resource limits to the values suggested by the VPA Recommender.

So now you can see that the memory limit is 256 MB and the CPU limit is 1.

There is also a setting (updateMode) that controls how the resources are updated.

If set to Off, the VPA Recommender will recommend new limits, but they won’t be applied.

If set to Initial, only newly created pods get the recommended limits. Auto changes the limits automatically, as we saw earlier.

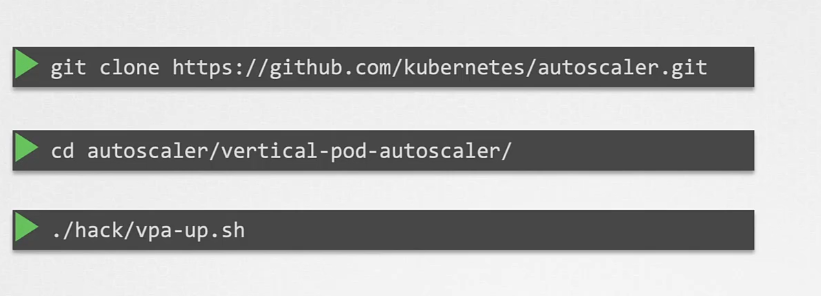

VPA is not included in Kubernetes by default, so we first need to clone the project and install it.

Then how do we deploy a VPA?

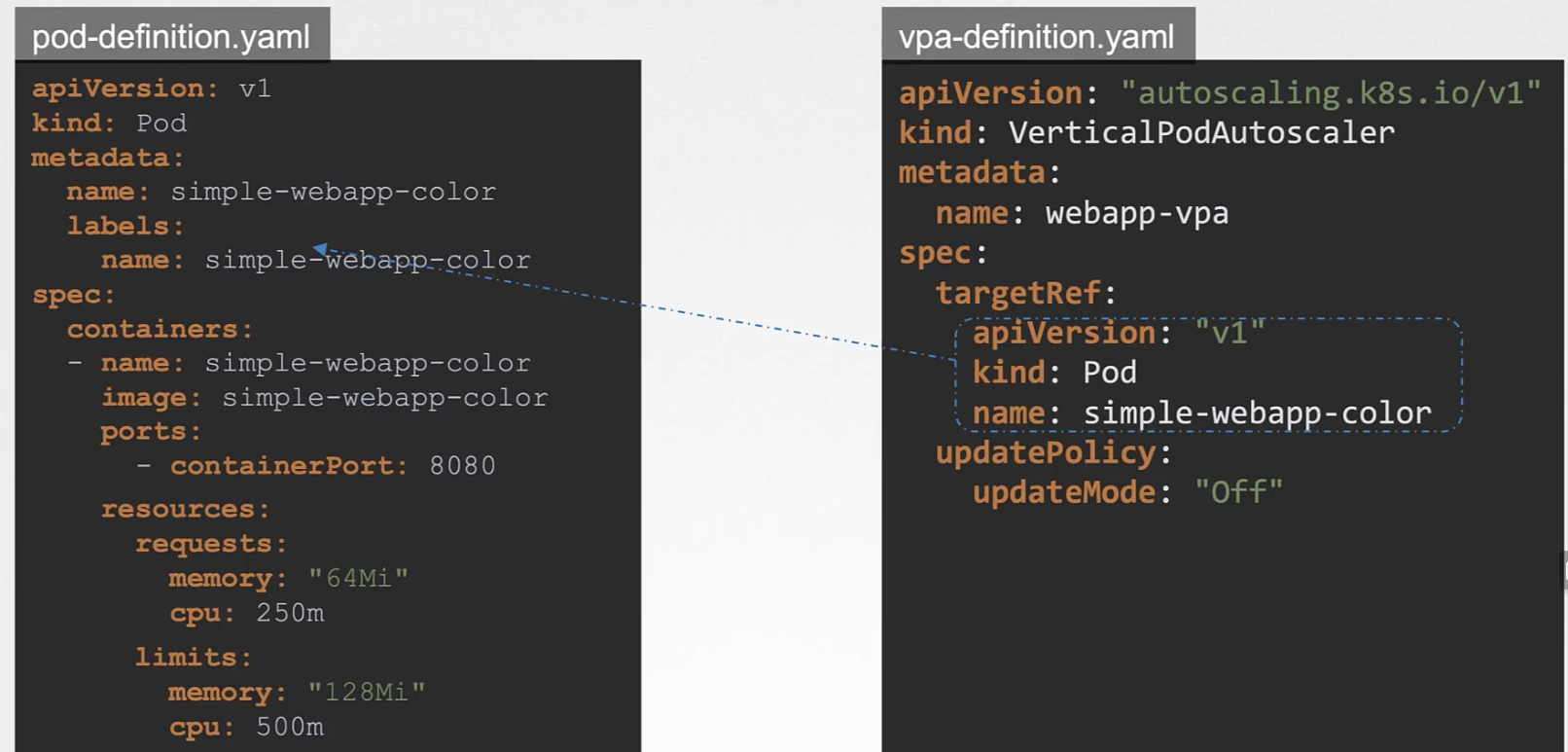

Assume we have a pod named simple-webapp-color. Create a VPA definition file that references it under targetRef, and set updateMode to Off, Initial, or Auto.

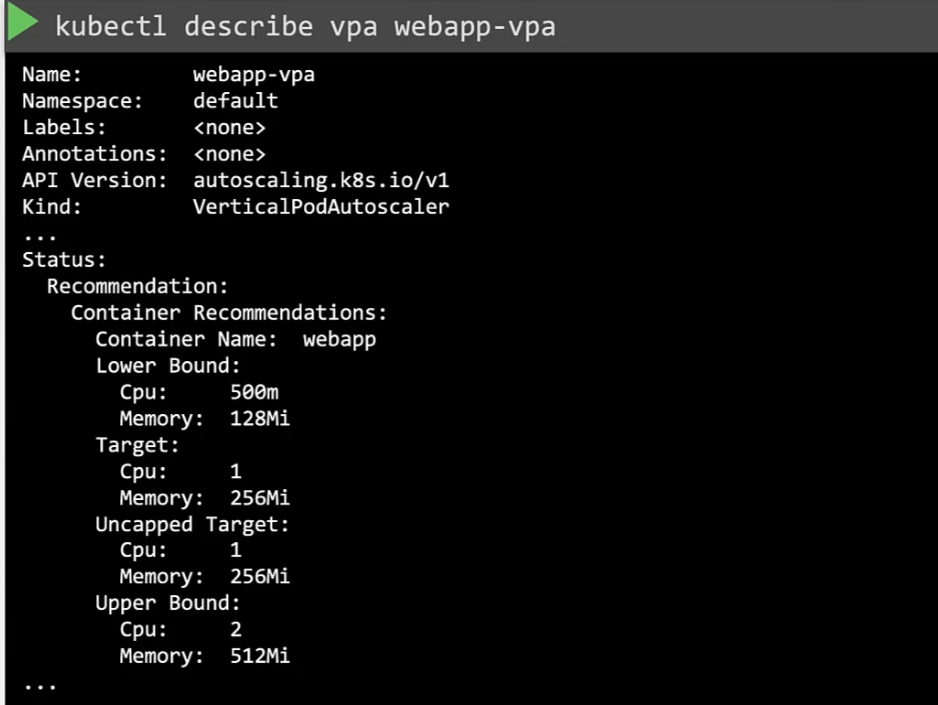

You can then check its description.
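The definition file is only visible in the screenshot, so the following is a sketch under assumptions rather than the exact file. It assumes the `autoscaling.k8s.io/v1` API from the VPA project and assumes that simple-webapp-color is managed by a Deployment of the same name, since targetRef normally points at the controller that owns the pods. The VPA name is hypothetical.

```yaml
# vpa.yaml: a sketch of a VPA for simple-webapp-color (assumed to be a Deployment).
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: simple-webapp-color-vpa   # hypothetical name
spec:
  targetRef:                      # the workload whose pods are adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: simple-webapp-color
  updatePolicy:
    updateMode: "Off"             # or "Initial" / "Auto"
# Related commands (assuming the name above):
#   kubectl create -f vpa.yaml
#   kubectl describe vpa simple-webapp-color-vpa
```

Starting with `"Off"` is a cautious choice: the recommendations show up in the description without any pod being restarted.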

Cluster Autoscaler

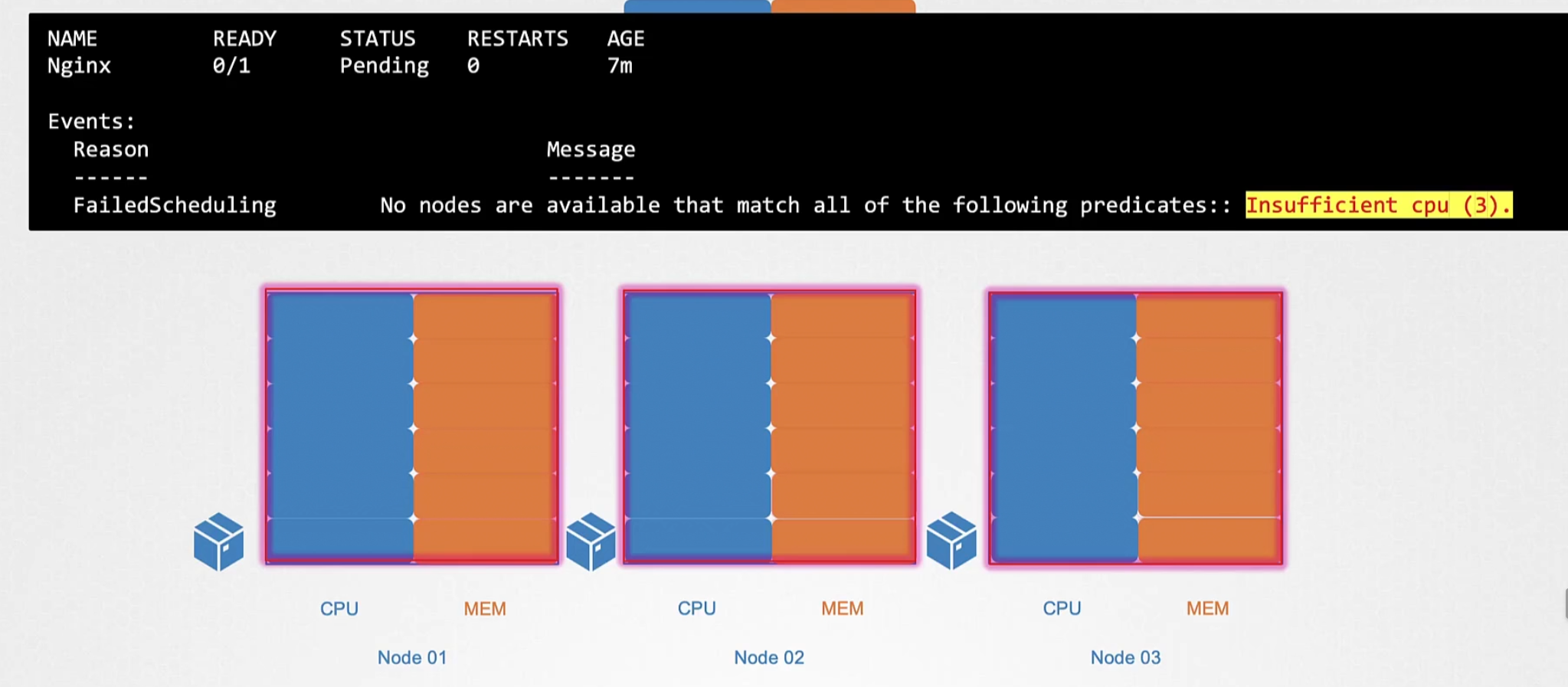

The kube-scheduler places pods on nodes depending on the available CPU, memory, etc.

Once the nodes are full, the kube-scheduler keeps new pods in the Pending state because there is no room for them.

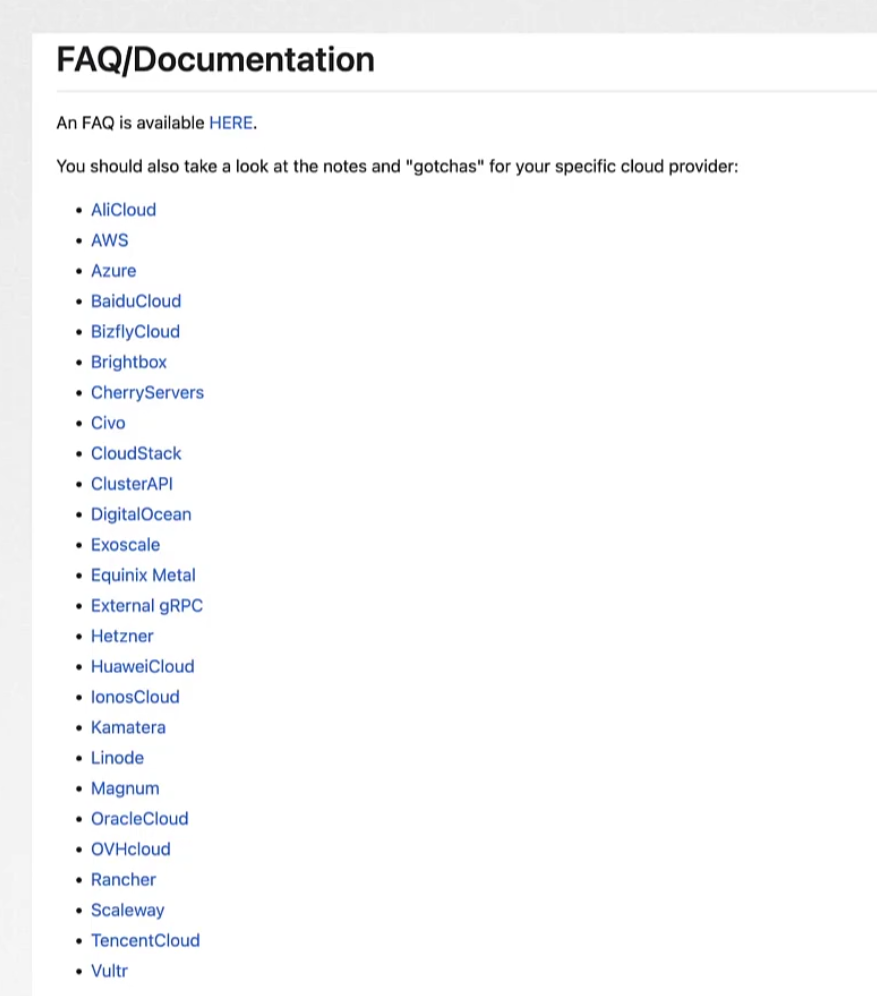

This is where the Cluster Autoscaler comes in and creates a new node in the cluster. Because adding a node means adding CPU and memory at the infrastructure level, it has to go through the provider's API, so the setup depends on the provider. Some of them are:

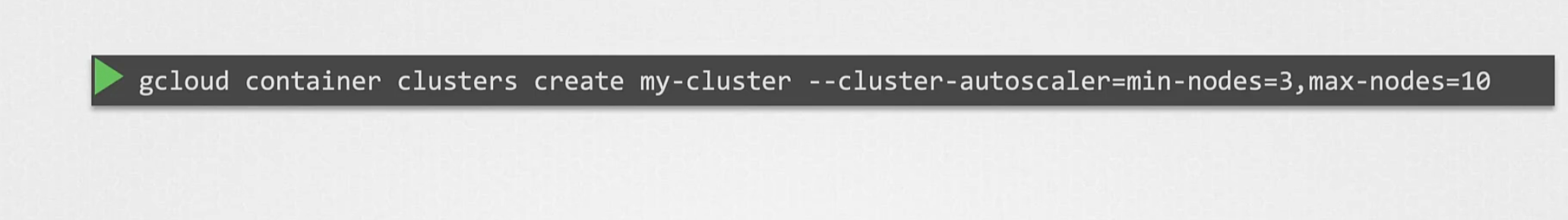

For example, this is the command we use for Google Cloud to use a cluster autoscaler

Serverless

Assume that it’s your birthday and you have hired an event organizer who will clean the house before the party, take care of things during the event, and finally clean up after the party.

In the CS world, there is something called FaaS (Function as a Service).

It works the same way: we don’t need to bother with doing those things on our own.

The benefit of this approach is that you pay only for what you use.

One example is running your container on a cloud server.

Kubernetes serverless platforms such as Knative and OpenFaaS give developers a way to deploy and manage serverless functions (scaling, event triggering, lifecycle management) on top of Kubernetes.
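To give a feel for what this looks like in practice, here is a minimal sketch of a Knative Service. It assumes Knative Serving is already installed in the cluster; the service name and image are hypothetical.

```yaml
# A sketch of a Knative Service (assumes Knative Serving is installed).
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello                              # hypothetical name
spec:
  template:
    spec:
      containers:
        - image: example.com/hello:latest  # hypothetical image
```

With a definition like this, Knative takes care of creating and scaling the pods based on incoming requests, so you describe the container and leave the scaling to the platform.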

Kubernetes Enhancement Proposals (KEP)

Check this page, where developers share enhancement proposals to make Kubernetes better.

Rather than proposing something in an issue, they use a KEP to share ideas.

What does a KEP look like? For example, here is a KEP under sig-auth.

In it you need to describe the goals, motivation, risks, and more for the new enhancement.

SIG members vote on whether to accept the KEP or not.

How does the Kubernetes community work?

At the top there is a steering committee that makes the overall decisions. Below it are the Special Interest Groups (SIGs) and working groups, which work on specific areas and issues.

Here you can see the SIG names, their chairs, and meeting links.

SIG chairs hold meetings monthly and talk about KEPs.

Open Standards

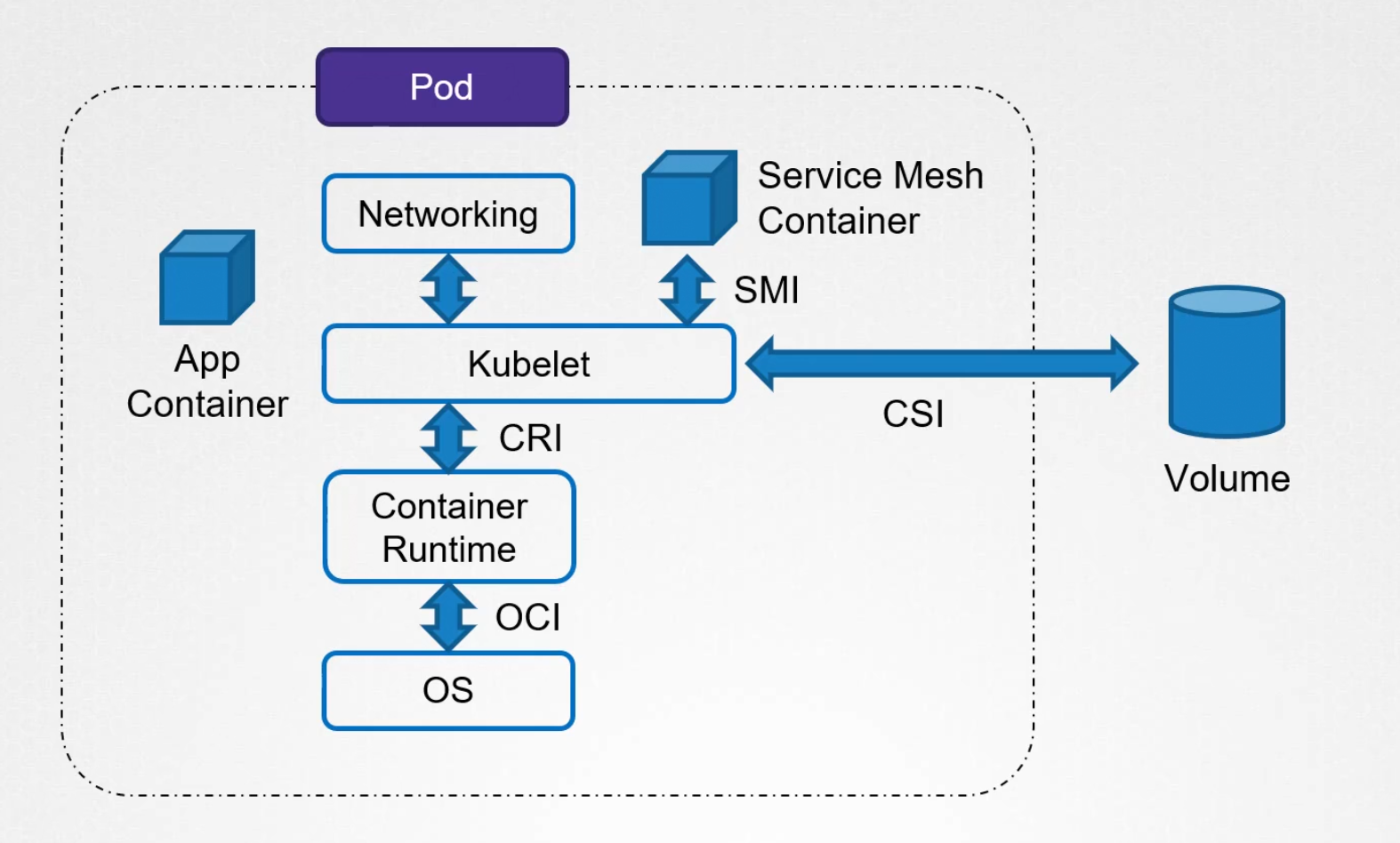

There are some standards to follow while creating solutions. For example, the OCI (Open Container Initiative) is a group focused on creating open standards for container images, runtimes, etc.

The open standards used by Kubernetes include:

CRI (Container Runtime Interface) for container runtimes, CSI (Container Storage Interface), SMI (Service Mesh Interface), and CNI (Container Network Interface). Each of them defines a set of rules that implementations follow.
