Beginner’s Guide to Docker and AWS ECR for MLOps

Pranjal Chaubey


Introduction to Docker

Docker is a platform for containerization: it packages an application and its dependencies into lightweight, portable containers that run consistently across computing environments, from development to production. As a loose analogy, containerization is the underlying technology and Docker is the platform built around it, much as Git is the technology behind GitHub.

What is Containerization and Why is it Useful?

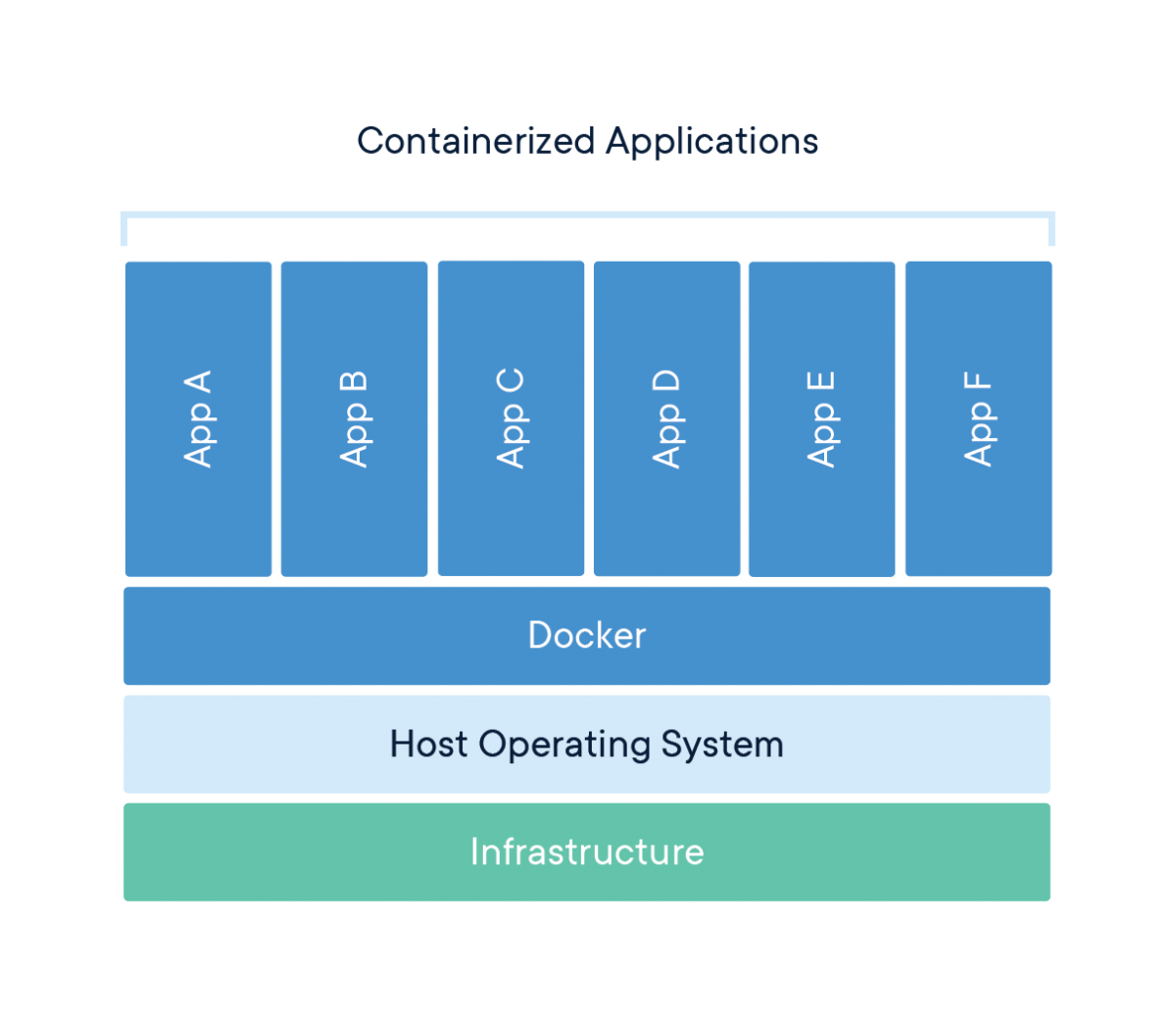

Containerization is the process of packaging an application and its dependencies into a container, which is an isolated unit that runs on the host operating system. Unlike Virtual Machines (VMs), which include an entire operating system, containers share the host's OS kernel while isolating the app's processes. This makes containers more lightweight, allowing for faster startup times and better resource efficiency.

Why is this useful?

Portability: Containers can be moved across different environments without worrying about the underlying system.

Efficiency: Because containers share the host OS, they consume fewer resources (e.g., memory and CPU) compared to VMs.

Consistency: Whether in development, testing, or production, containers ensure that your application behaves the same in every environment.

Why Docker Compared to VMs?

Both Docker containers and VMs isolate applications, but containers are lighter because Docker virtualizes at the operating-system level rather than the hardware level. Each VM boots a complete guest operating system, which costs memory, CPU, and startup time. Containers share the host's OS kernel instead, so they use fewer resources and start much faster.

Docker Architecture

1. Docker Engine

Docker Engine is the core service that powers Docker. It handles the entire lifecycle of a container, from creating, starting, and stopping containers to managing networks and volumes. Similar to how a hypervisor manages VMs, Docker Engine manages containers on the host system.

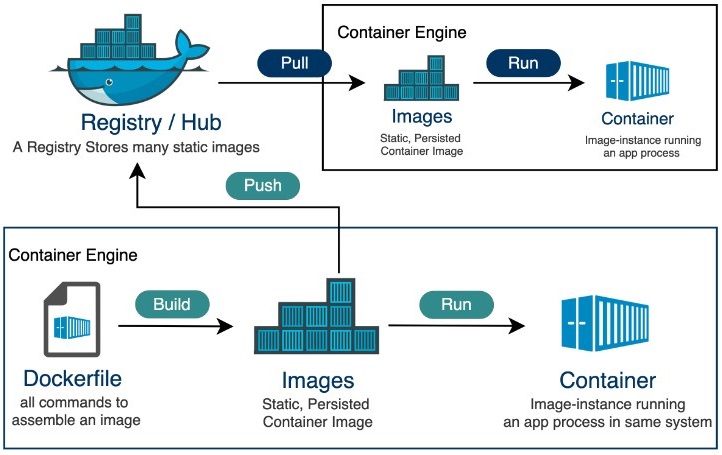

2. Docker Images

Images are read-only templates that contain everything needed to run an application, including code, libraries, dependencies, and configurations. These are the blueprints from which containers are created.

3. Docker Containers

Containers are the running instances of Docker images. When you start a container, it runs as an isolated process on the host machine, with its own environment and file system, but shares the OS kernel with other containers.

4. Docker Registry

Docker Registry is a storage and distribution system for Docker images, much like GitHub is for code. You can pull images from Docker Hub (the default public registry) or push your own images to a private registry, like AWS ECR (Elastic Container Registry).

Basic Docker Workflow: From Dockerfile to Running a Container

Here's a simple example to illustrate how to go from defining a container to running it.

Create a Dockerfile: A Dockerfile is a script that defines how to build your image. Let’s create a simple Dockerfile for a Python app:

```dockerfile
# Use an official Python runtime as a parent image
FROM python:3.8-slim

# Set the working directory in the container
WORKDIR /app

# Copy the current directory contents into the container at /app
COPY . /app

# Install any needed packages
RUN pip install --no-cache-dir -r requirements.txt

# Make port 80 available to the world outside this container
EXPOSE 80

# Run app.py when the container launches
CMD ["python", "app.py"]
```

Build the Image: Run the following command to build your Docker image from the Dockerfile:

```bash
docker build -t my-python-app .
```

Run the Container: Once the image is built, you can run a container based on that image:

```bash
docker run -d -p 4000:80 my-python-app
```

This command will start a container and map port 4000 on your machine to port 80 in the container, allowing you to access your app at http://localhost:4000.
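The Dockerfile above runs `app.py`, but the article does not show that file. As a minimal sketch of what it could contain, the block below serves a plain-text response using only the Python standard library. The names `HelloHandler` and `make_server` and the response text are assumptions made for illustration, not part of the original example.

```python
# app.py: a hypothetical minimal app for the Dockerfile above (standard library only).
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answer every GET request with a short plain-text message."""

    def do_GET(self):
        body = b"Hello from inside the container!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Suppress the default per-request log lines to keep container output quiet.
        pass


def make_server(host="0.0.0.0", port=80):
    """Create the server; 0.0.0.0 listens on all interfaces inside the container,
    which is needed for a port published with -p to reach it."""
    return HTTPServer((host, port), HelloHandler)
```

Adding `make_server().serve_forever()` as the last line of `app.py` would start the server on port 80, matching `EXPOSE 80` and the `-p 4000:80` mapping. Because this sketch uses only the standard library, `requirements.txt` has nothing to list, but the file still has to exist for the `RUN pip install` step to find it.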

Basic Docker Commands

docker run <image_name>: Creates and starts a container from an image. If the image is not available locally, Docker pulls it from the registry (Docker Hub by default).

docker ps: Shows all running containers.

docker ps -a: Shows all containers, including stopped ones.

docker build: Builds an image from a Dockerfile.

docker push <repository>: Pushes an image to a remote registry, like AWS ECR.
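In an automated pipeline these commands are often run from a script rather than typed by hand. The following is a rough sketch of how that might look in Python, assuming the `docker` CLI is installed and on the PATH. The helper names (`build_args`, `run_args`, `ps_args`, `run`) are hypothetical. They only assemble the same command lines shown in this article and pass them to `subprocess`.

```python
import subprocess


def build_args(tag, context="."):
    """Arguments for: docker build -t <tag> <context>"""
    return ["docker", "build", "-t", tag, context]


def run_args(image, host_port, container_port, detach=True):
    """Arguments for: docker run [-d] -p <host_port>:<container_port> <image>"""
    args = ["docker", "run"]
    if detach:
        args.append("-d")  # run the container in the background
    args += ["-p", f"{host_port}:{container_port}", image]
    return args


def ps_args(include_stopped=False):
    """Arguments for: docker ps (or docker ps -a to include stopped containers)"""
    args = ["docker", "ps"]
    if include_stopped:
        args.append("-a")
    return args


def run(args):
    """Execute a command; raises CalledProcessError if it exits with a non-zero code."""
    return subprocess.run(args, check=True)
```

For example, `run(build_args("my-python-app"))` followed by `run(run_args("my-python-app", 4000, 80))` would reproduce the build and run steps from the workflow section. Passing the arguments as a list, rather than one shell string, avoids quoting problems with image names or paths.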

Docker in MLOps

In MLOps, Docker ensures reproducibility and consistency across various stages—development, training, and deployment. With Docker, machine learning models and their dependencies are packaged into containers, which can be easily deployed across different environments.

Docker also plays a central role in Continuous Integration/Continuous Deployment (CI/CD) pipelines: the pipeline builds an image once, and that same image can be tested and then deployed, which makes releasing ML models faster and more predictable.

Why Use AWS ECR with Docker in MLOps?

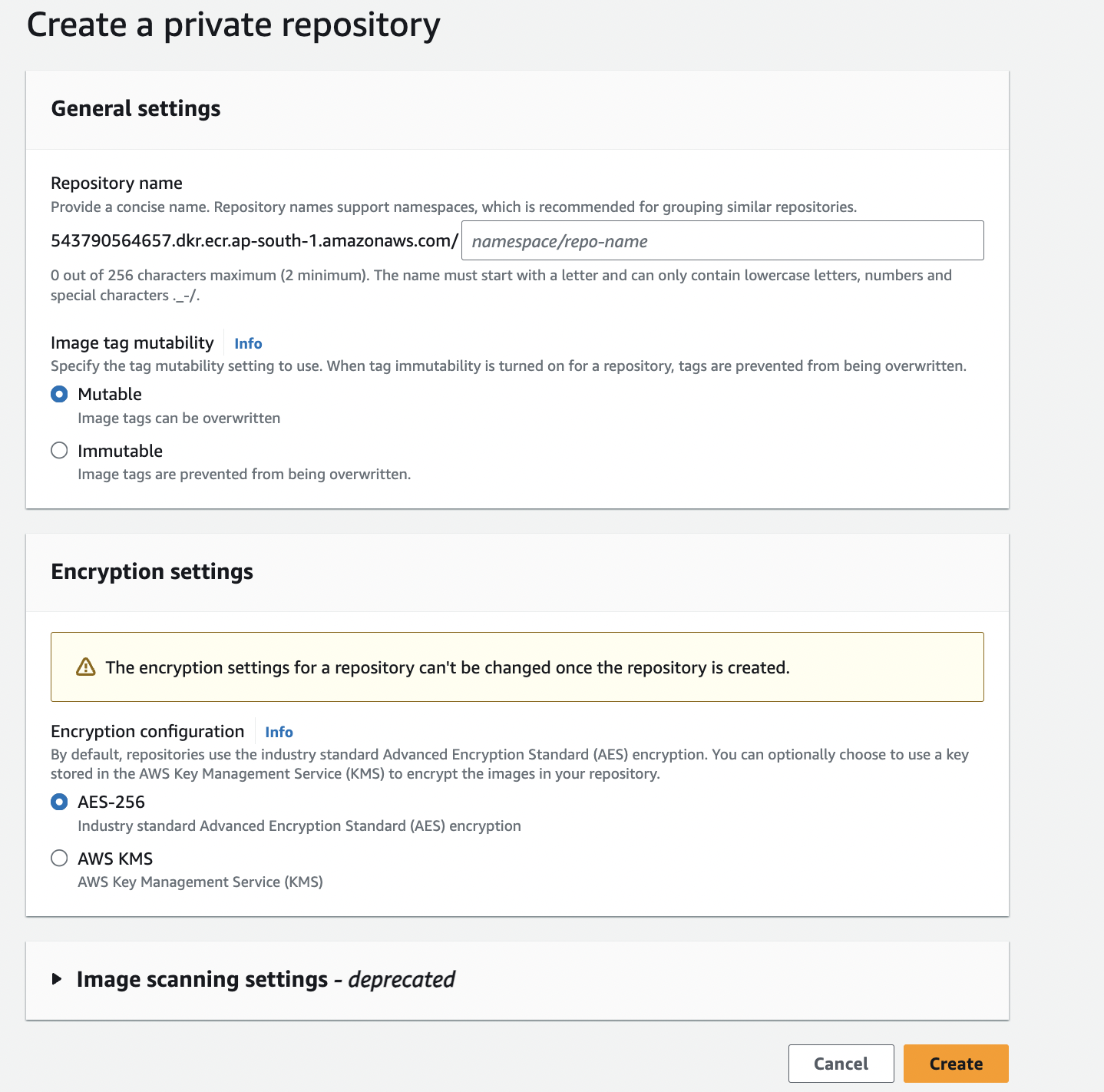

AWS ECR (Elastic Container Registry) is a fully managed container registry that integrates with Docker and other AWS services, making it ideal for storing and managing Docker images in the cloud. Using AWS ECR, you can:

Store and manage your ML model Docker images.

Automate deployment pipelines with AWS tools like CodePipeline.

Scale your applications effortlessly using Amazon ECS (Elastic Container Service) or EKS (Elastic Kubernetes Service).
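To make the first point concrete, here is a hedged sketch of pushing a locally built image to ECR from Python. It assumes the AWS CLI and Docker are installed, AWS credentials are already configured, and the ECR repository already exists. The function names are hypothetical, and the account ID in the usage note is a placeholder. The commands it wraps (`aws ecr get-login-password`, `docker login`, `docker tag`, `docker push`) are the usual login, tag, and push sequence for ECR.

```python
import subprocess


def ecr_registry(account_id, region):
    """Host name of the private ECR registry for an AWS account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id, region, repository, tag="latest"):
    """Full image reference in the form <registry>/<repository>:<tag>."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


def push_to_ecr(local_image, account_id, region, repository, tag="latest"):
    """Log in to ECR, tag the local image with its ECR URI, and push it."""
    registry = ecr_registry(account_id, region)
    remote = ecr_image_uri(account_id, region, repository, tag)

    # Ask the AWS CLI for a temporary registry password.
    password = subprocess.run(
        ["aws", "ecr", "get-login-password", "--region", region],
        check=True, capture_output=True, text=True,
    ).stdout

    # Pass the password to docker login on stdin so it never appears in the process list.
    subprocess.run(
        ["docker", "login", "--username", "AWS", "--password-stdin", registry],
        input=password, text=True, check=True,
    )

    subprocess.run(["docker", "tag", local_image, remote], check=True)
    subprocess.run(["docker", "push", remote], check=True)
    return remote
```

With a placeholder account ID, `push_to_ecr("my-python-app", "123456789012", "us-east-1", "my-python-app")` would push the image as `123456789012.dkr.ecr.us-east-1.amazonaws.com/my-python-app:latest`, which services such as ECS or EKS can then pull.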

Conclusion

Docker is an essential tool for developers and data scientists alike, ensuring consistency, scalability, and portability across environments. When combined with AWS ECR, it provides a robust platform for managing containerized applications and ML models in a cloud-native environment, making it a cornerstone in modern MLOps workflows.


Written by

Pranjal Chaubey


Hey! I'm Pranjal and I am currently doing my bachelors in CSE with Artificial Intelligence and Machine Learning. The purpose of these blogs is to help me share my machine-learning journey. I write articles about new things I learn and the projects I do.