Decoding Llama 3 vs 3.1: Which One Is Right for You?

NovitaAI

Key Highlights

Generative AI Advancements: Meta’s Llama 3.1 model introduces significant improvements over Llama 3, especially in problem-solving capabilities, context length, and multilingual support.

Model Recommendations: Llama 3.1 70B is ideal for long-form content and complex document analysis, while Llama 3 70B is better for real-time interactions.

LLM API Flexibility: The LLM API allows developers to seamlessly switch between models, facilitating direct comparisons and maximizing each model’s strengths.

Getting Started: A step-by-step guide is provided for integrating Llama models through the Novita AI LLM API, including signing up for access and testing features.

Exploration Opportunities: Users can experiment with newer Llama models in the Novita AI LLM Playground ahead of the official Llama 3 API release.

Introduction

Generative AI keeps advancing, and Meta’s Llama family shows how far open models have come. Llama 3.1 improves on Llama 3 with notable upgrades for many types of problem-solving tasks. In this blog, we explain the main differences between Llama 3 and Llama 3.1 to help you choose the best option for your AI needs.

Exploring the Evolution from Meta Llama 3 to Llama 3.1

The launch of Llama 3 was an important step for open-source generative AI. Still, Meta saw room for improvements, especially in context length, multilingual support, and safety. These areas were key in creating Llama 3.1.

With Llama 3.1, Meta addresses these gaps and gives developers and researchers better tools to work with. The upgrade is a substantial jump in capability, making Llama 3.1 a strong alternative to leading proprietary models.

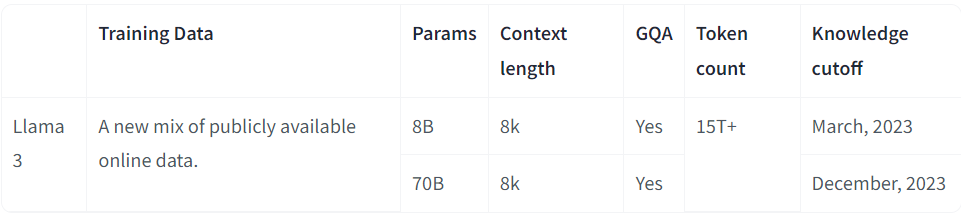

What is Llama 3?

Meta has developed and released the Meta Llama 3 family of large language models (LLMs), a collection of pretrained and instruction-tuned generative text models available in 8 billion and 70 billion parameter sizes. The Llama 3 instruction-tuned models are optimized for dialogue applications and, according to Meta, outperform many existing open-source chat models on common industry benchmarks. Meta also states that it prioritized helpfulness and safety during the development of these models.

What is Llama 3.1?

The Meta Llama 3.1 collection features multilingual large language models (LLMs) that include pretrained and instruction-tuned generative models in sizes of 8 billion, 70 billion, and 405 billion parameters (text in/text out). The Llama 3.1 instruction-tuned text-only models (8B, 70B, and 405B) are specifically optimized for multilingual dialogue applications and consistently outperform many available open-source and proprietary chat models on common industry benchmarks.

Key Differences Between Llama 3 and Llama 3.1

While Llama 3 and Llama 3.1 use the same dense transformer design, there are several important differences between them. One of the biggest differences is their context length. Llama 3.1 has a much larger context window. This lets it handle more text at once. Because of this, it performs better with long documents or complex conversations than Llama 3.
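
To make the context-length difference concrete, here is a minimal sketch that checks whether a prompt is likely to fit in each model's window. It assumes Llama 3's 8,192-token window and the 128K-token (131,072) window Meta documents for Llama 3.1. The model labels, function names, and the four-characters-per-token heuristic are illustrative assumptions, not the real Llama tokenizer.

```python
# Context window sizes in tokens. 8,192 is Llama 3's window; 131,072 (128K)
# is the window Meta documents for Llama 3.1. The dictionary keys are
# illustrative labels, not official API model ids.
CONTEXT_WINDOWS = {
    "llama-3-70b": 8_192,
    "llama-3.1-70b": 131_072,
}

# Assumption: roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer will give different counts."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, model: str, reserved_for_output: int = 512) -> bool:
    """Return True if the estimated prompt plus reserved output tokens fit."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOWS[model]


if __name__ == "__main__":
    long_document = "word " * 20_000  # 100,000 characters of filler text
    for model in CONTEXT_WINDOWS:
        print(model, fits_in_context(long_document, model))
```

With this heuristic, a 100,000-character document comes to about 25,000 tokens, which overflows Llama 3's window but fits comfortably in Llama 3.1's.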

Llama 3.1 also has many important updates:

Improved Text Generation: The training of Llama 3.1 has been refined, so it produces text that is clearer, more relevant, and more natural-sounding.

Multilingual Skills: Llama 3.1 can work with more languages compared to Llama 3. This makes it useful for a wider range of tasks.

Strong Safety Features: Llama 3.1 includes better safety measures. These help to reduce risks linked to troublesome outputs that could arise from the longer context windows.

These updates show that Llama 3.1 is a more flexible and powerful tool for developers who need advanced text generation and processing abilities.

The Llama family has since been updated to Llama 3.2. If you want to learn more about the differences between Meta Llama 3.2, Llama 3.1, and Llama 3, the video below gives a detailed explanation.

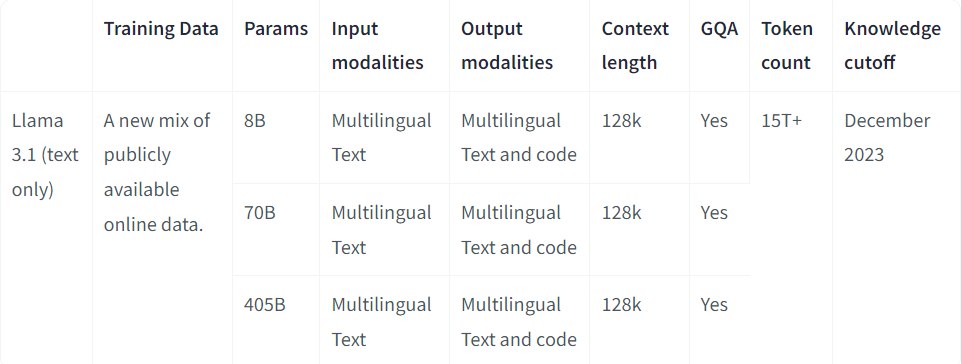

Having explored the key differences between Llama 3 and Llama 3.1, it’s important to turn our attention to a specific comparison: Llama 3 70B versus Llama 3.1 70B. This analysis will showcase their unique features, performance metrics, and practical applications, enabling developers to make informed choices tailored to their needs in dialogue and text generation.

Llama 3 70B vs Llama 3.1 70B

Choosing between Llama 3 70B and Llama 3.1 70B depends on what your project needs. If you need to handle a lot of context, create long content, or solve complex problems, Llama 3.1 70B is the better option. If speed and efficiency matter more, Llama 3 70B is still a strong choice, since it works well for quick responses and real-time tasks.

Basic Comparison

Here’s a fundamental comparison between the two models.

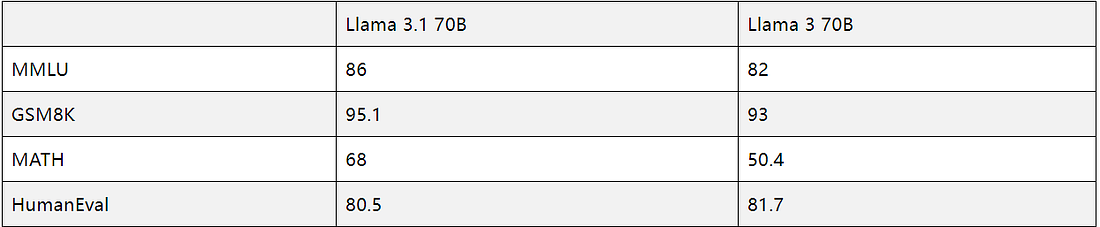

Benchmark Comparison

Llama 3.1 70B outperforms its predecessor on most benchmarks, with one small regression in coding. The reported score changes are:

MMLU (+4 points): This benchmark evaluates performance across 57 subjects in STEM, humanities, social sciences, and more, with questions ranging from elementary to advanced professional levels. It assesses both general knowledge and problem-solving skills.

MATH (+17.6 points): MATH is a dataset of 12,500 challenging competition mathematics problems.

GSM8K (+2.1 points): GSM8K features 8,500 high-quality, linguistically diverse math word problems for grade school students, created by human writers. The dataset is divided into 7,500 training problems and 1,000 test problems.

HumanEval (-1.2 points): This indicates a slight decrease in coding performance. The dataset includes 164 original programming problems that assess language comprehension, algorithms, and basic mathematics, some of which resemble typical software interview questions.

Overall, Llama 3.1 70B shows superior performance, especially in mathematical reasoning tasks, while maintaining comparable coding abilities.

Speed Comparison

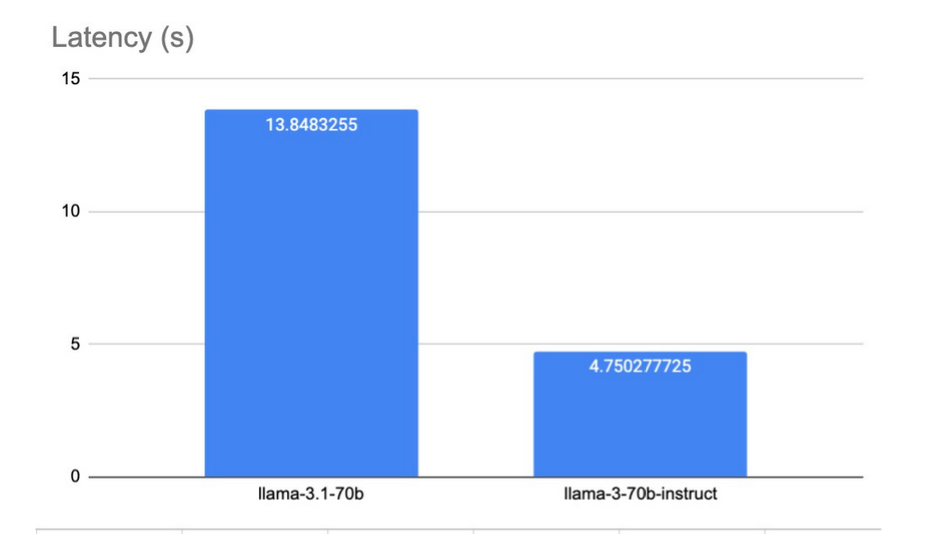

The speed figures below come from tests run in Keywords AI’s model playground, comparing Llama 3 70B and Llama 3.1 70B.

Latency

The tests, consisting of hundreds of requests for each model, revealed a significant difference in latency. Llama 3 70B demonstrated superior speed with an average latency of 4.75 seconds, while Llama 3.1 70B averaged 13.85 seconds. This nearly threefold difference in response time highlights Llama 3 70B’s advantage in scenarios requiring quick real-time responses, potentially making it a more suitable choice for time-sensitive applications, despite the improvements seen in Llama 3.1 70B in other areas.

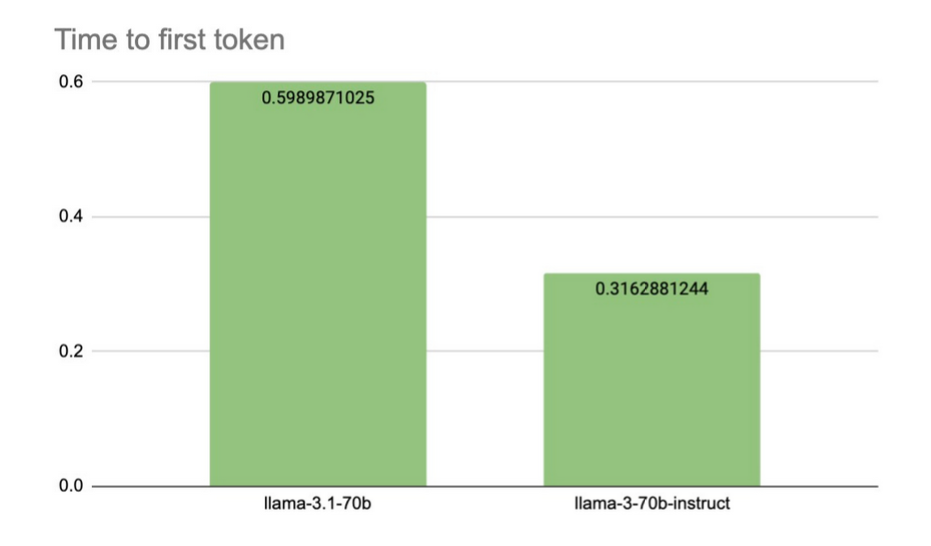

TTFT (Time to First Token)

The tests reveal a significant difference in TTFT performance. Llama 3 70B excels with a TTFT of 0.32 seconds, while Llama 3.1 70B lags behind at 0.60 seconds. This nearly twofold speed advantage for Llama 3 70B could be crucial for applications requiring rapid response initiation, such as voice AI systems, where minimizing perceived delay is essential for user experience.

Throughput (Tokens per Second)

Llama 3 70B demonstrates significantly higher throughput, processing 114 tokens per second compared to Llama 3.1 70B’s 50 tokens per second. This substantial difference in processing speed — more than double — highlights Llama 3 70B’s superior performance in generating text quickly, making it potentially more suitable for applications that require rapid content generation or real-time interactions.
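
The three speed measurements can be combined into ratios and a rough per-response estimate. The sketch below uses only the figures quoted in this section. The linear model (time to first token plus output tokens divided by throughput) is a simplifying assumption, and the class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeedProfile:
    avg_latency_s: float      # average latency per request, in seconds
    ttft_s: float             # time to first token, in seconds
    tokens_per_second: float  # generation throughput


# Figures quoted in this section.
LLAMA_3_70B = SpeedProfile(avg_latency_s=4.75, ttft_s=0.32, tokens_per_second=114)
LLAMA_3_1_70B = SpeedProfile(avg_latency_s=13.85, ttft_s=0.60, tokens_per_second=50)


def estimated_response_time(profile: SpeedProfile, output_tokens: int) -> float:
    """Assumed model: time to first token plus steady-state generation time."""
    return profile.ttft_s + output_tokens / profile.tokens_per_second


if __name__ == "__main__":
    old, new = LLAMA_3_70B, LLAMA_3_1_70B
    print(f"latency ratio:    {new.avg_latency_s / old.avg_latency_s:.2f}x")
    print(f"TTFT ratio:       {new.ttft_s / old.ttft_s:.2f}x")
    print(f"throughput ratio: {old.tokens_per_second / new.tokens_per_second:.2f}x")
    for name, profile in (("Llama 3 70B", old), ("Llama 3.1 70B", new)):
        print(f"{name}: ~{estimated_response_time(profile, 500):.1f} s for 500 tokens")
```

Under this assumed model, a 500-token answer takes roughly 4.7 seconds on Llama 3 70B and about 10.6 seconds on Llama 3.1 70B, which is consistent with the gap seen in the average latency figures.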

Model Recommendations

Both Llama 3 70B and Llama 3.1 70B offer useful features for AI. It is important to know their strengths when choosing the best model for you.

Llama 3.1 70B

Best for: Long-form content generation, complex document analysis, tasks that require extensive context understanding, advanced logical reasoning, and applications that benefit from larger context windows and output capacities.

Not suitable for: Time-sensitive applications requiring rapid responses, real-time interactions where low latency is critical, or projects with limited computational resources that cannot accommodate the model’s increased demands.

Llama 3 70B

Best for: Applications that require quick response times, real-time interactions, efficient coding tasks, processing shorter documents, and projects where computational efficiency is a priority.

Not suitable for: Tasks involving very long documents or complex contextual understanding that exceeds its 8K context window, advanced logical reasoning problems, or applications requiring the processing of extensive contextual information.

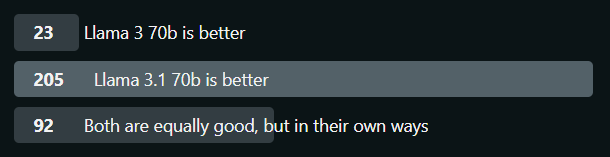

The image above illustrates the general sentiment on Reddit regarding Llama 3 70B vs. Llama 3.1 70B.

Llama 3 offers faster response times, while Llama 3.1 excels in tasks needing deeper contextual understanding. The LLM API’s flexibility allows developers to easily switch between the two models without complex configurations, enabling direct comparisons of their performance and features. This helps developers leverage each model’s strengths and make informed decisions, unlocking their potential across various use cases.
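
Because switching models typically comes down to changing one identifier in the request, the recommendations above can be encoded as a small selection helper. This is a sketch under stated assumptions: the rules simply mirror this article's guidance, and the model identifier strings are hypothetical and should be checked against your provider's model list.

```python
LLAMA_3_CONTEXT_TOKENS = 8_192  # Llama 3's context window

# Assumed model identifiers; verify them against your provider's model list.
LLAMA_3_70B = "meta-llama/llama-3-70b-instruct"
LLAMA_3_1_70B = "meta-llama/llama-3.1-70b-instruct"


def choose_model(prompt_tokens: int, expected_output_tokens: int,
                 latency_sensitive: bool) -> str:
    """Pick a model id following the recommendations in this article."""
    needed = prompt_tokens + expected_output_tokens
    if needed > LLAMA_3_CONTEXT_TOKENS:
        # The request does not fit in Llama 3's window, so use Llama 3.1.
        return LLAMA_3_1_70B
    if latency_sensitive:
        # Short, real-time interactions favor the faster Llama 3 70B.
        return LLAMA_3_70B
    # Otherwise prefer Llama 3.1 for its stronger reasoning benchmarks.
    return LLAMA_3_1_70B


if __name__ == "__main__":
    print(choose_model(2_000, 300, latency_sensitive=True))
    print(choose_model(40_000, 1_000, latency_sensitive=True))
```

The context check comes first on purpose: a request that cannot fit in the window has to go to Llama 3.1 no matter how latency-sensitive the application is.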

Getting Started with Llama Models in Novita AI’s LLM API

Follow these steps to build a language processing application using the Llama model API on Novita AI.

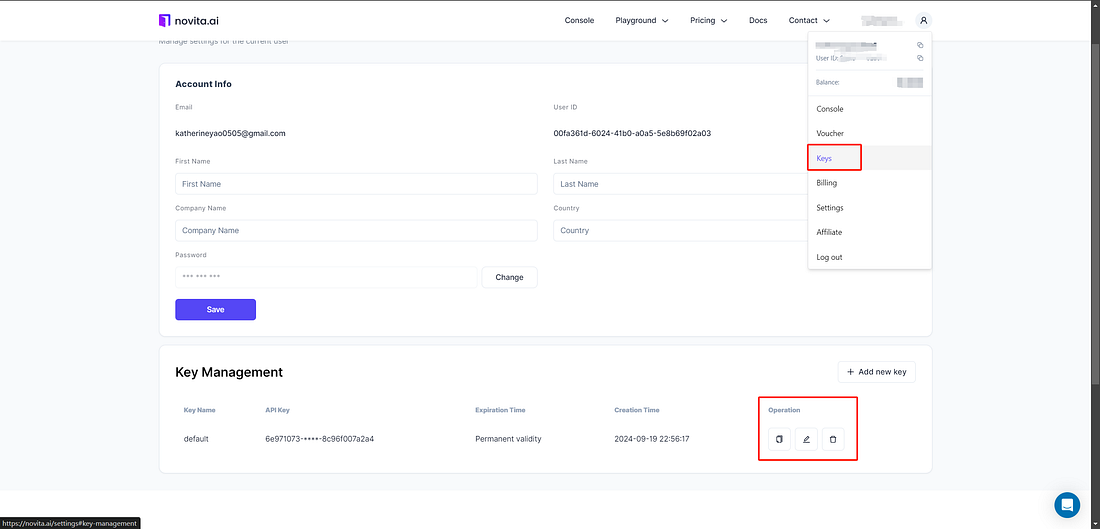

Step 1: Sign Up for API Access: Visit the official Novita AI website and create an account. Then, navigate to the API key management section to generate your API key.

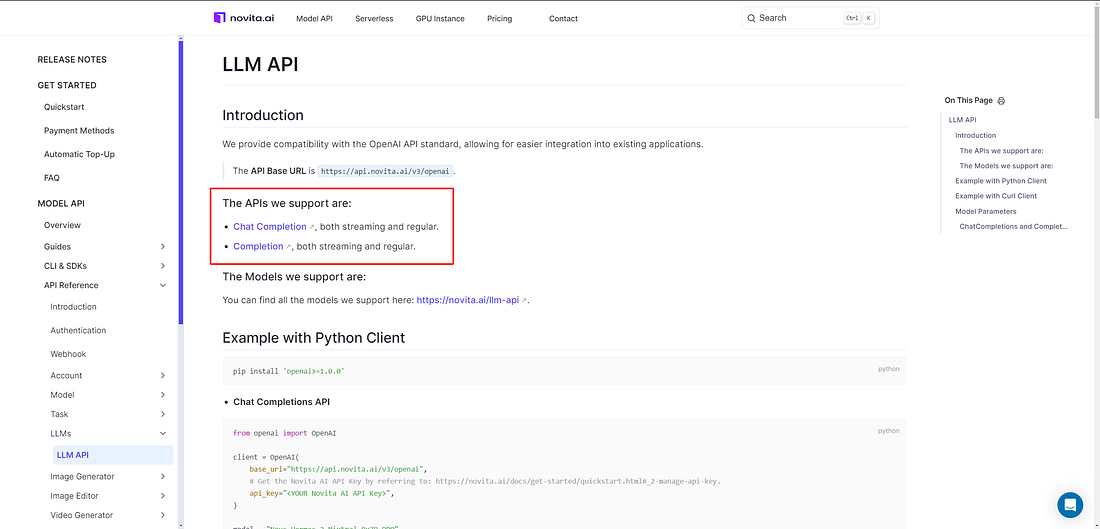

Step 2: Review the Documentation: Carefully go through the Novita AI API documentation.

Step 3: Integrate the Novita LLM API: Use your API key to authenticate requests to Novita AI’s LLM API and start generating output, such as concise summaries.

Step 4: Test and Add Optional Features: Process the API response and display it in a user-friendly format. Consider adding features like topic extraction or keyword highlighting.
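
The steps above can be sketched with Python's standard library. This is a minimal sketch, not Novita AI's official client. It assumes an OpenAI-compatible chat completions endpoint, and the URL, model identifier, and `NOVITA_API_KEY` environment variable name are assumptions to confirm against the API documentation from Step 2.

```python
import json
import os
import urllib.request

# Assumptions: the endpoint URL and model id below are illustrative and must
# be confirmed against the provider's API documentation.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"
MODEL = "meta-llama/llama-3.1-70b-instruct"


def build_request(api_key: str, text: str, model: str = MODEL) -> urllib.request.Request:
    """Build an authenticated summarization request without sending it."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Summarize the user's text concisely."},
            {"role": "user", "content": text},
        ],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def summarize(text: str) -> str:
    """Send the request and return the generated summary text."""
    api_key = os.environ["NOVITA_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(api_key, text), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style responses place the text under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Calling `summarize("some long text")` with the key exported in the environment would return the summary as a string. Passing a different `model` value to `build_request` is all it takes to compare Llama 3 against Llama 3.1.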

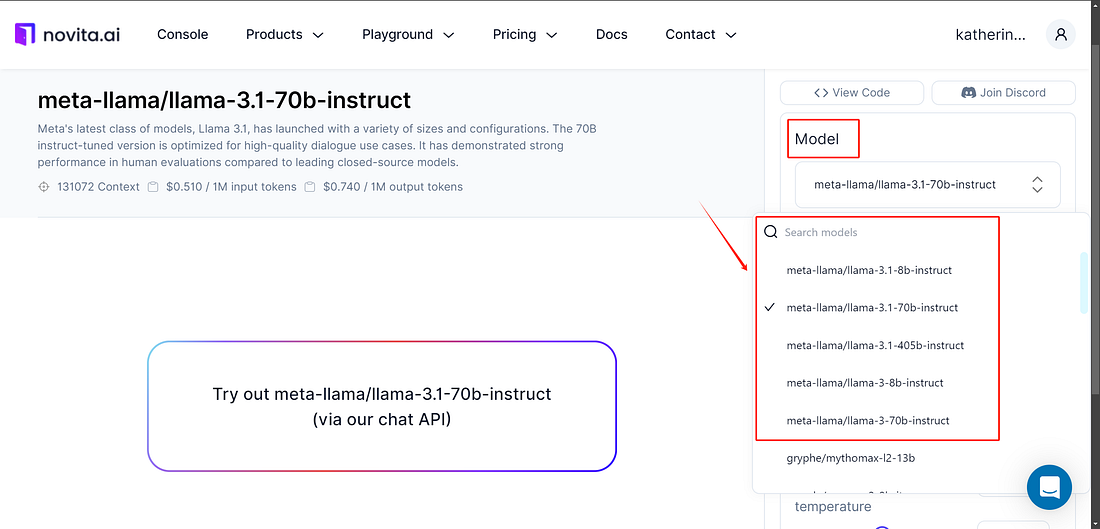

Exploring Llama Models in the LLM Playground on Novita AI

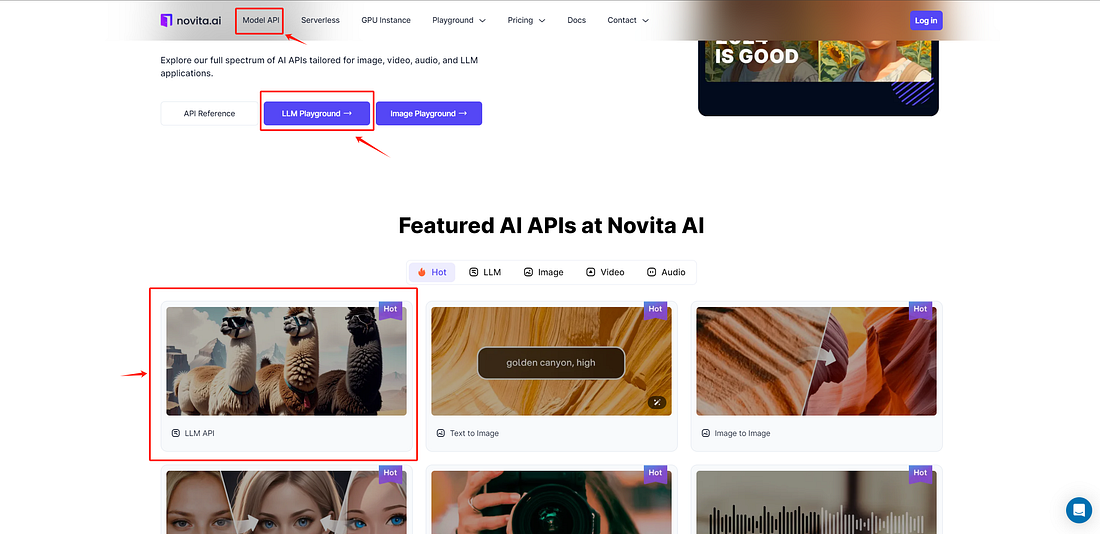

You can also experiment with Llama’s newer models in the Novita AI LLM Playground before the Llama 3 API is officially released.

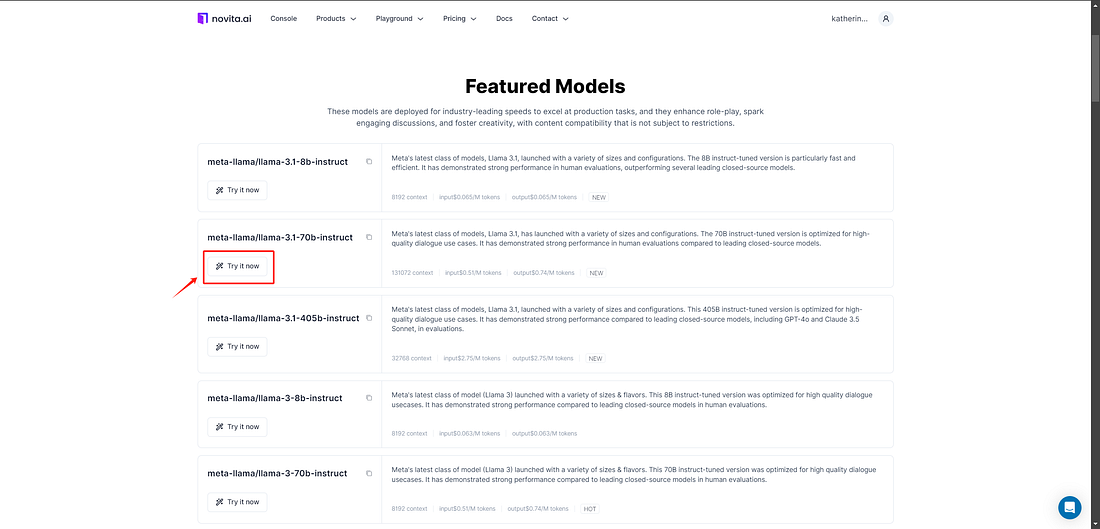

Step 1: Access the Playground: Navigate to the “Model API” tab and select “LLM Playground” to start experimenting with the Llama models.

Step 2: Select a Model: Choose from the various models in the Llama family within the playground.

Step 3: Enter Your Prompt and Generate: Type your desired prompt into the input field provided. This is where you can enter the text or question you want the model to respond to.

Conclusion

In summary, knowing the differences between Llama 3 and Llama 3.1 helps you pick the right model for your needs. Llama 3 still has its perks, mainly speed, while Llama 3.1 brings a longer context window, stronger reasoning, and broader multilingual support. Whether you care most about speed, accuracy, or integration with Novita AI’s LLM API, compare the benchmarks and weigh them against your use case to see which version works best for you.

Frequently Asked Questions

How to access Llama 3?

Llama 3 is an openly available model for the AI community. You can call it through an LLM API such as Novita AI’s or run it locally. Keep in mind that it has a limited context window of 8,192 tokens, which may pose challenges for tasks requiring extensive text data.

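Is Llama 3.1 better than GPT-4?

It depends on the task. Meta reports that the Llama 3.1 instruction-tuned models outperform many open-source and proprietary chat models on common industry benchmarks. Within the Llama family, if you prioritize accuracy and efficiency in coding tasks, Llama 3 might be the better choice.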

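Is Llama 3.1 restricted?

Llama 3.1 is released under Meta’s community license, which attaches some conditions to its use. For example, users who distribute the model or products built on it must prominently display “Built with Llama” on related websites, interfaces, or documentation.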

Can Llama 3 run locally?

To simplify running Llama 3 on your local machine, use Ollama, an open-source tool. It allows users to run large language models locally and deploy them in Docker containers for easy access.
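
As a rough illustration, once Ollama is installed and a Llama 3 model has been pulled, its local REST API can be called from Python. This sketch assumes Ollama's default local address (port 11434) and the `llama3` model tag; adjust both if your setup differs. The helper names are illustrative.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=120) as resp:
        # With streaming disabled, the reply is a single JSON object whose
        # "response" field holds the generated text.
        return json.load(resp)["response"]
```

Calling `generate("Why is the sky blue?")` only works while the Ollama server is running and the model has been downloaded; otherwise the request fails with a connection or HTTP error.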

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
