Project: Provisioning Resources on AWS Using Ansible

Akash Sutar

Introduction

Besides configuration management, Ansible can also provision resources on cloud platforms such as AWS, GCP, and Azure. In this series, we will use an Ansible playbook to provision AWS EC2 instances.

Setting Up the Environment

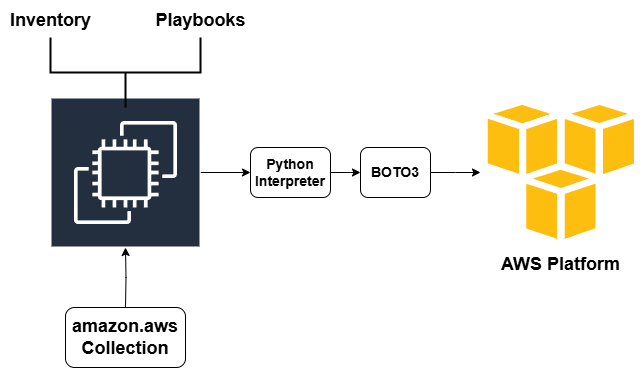

Understanding the architecture

Previously, we connected to already existing AWS resources over SSH and performed the required tasks, such as installation and configuration management. That architecture is shown in the diagram.

In this task, we create the AWS resources themselves using Ansible, so Ansible acts as an Infrastructure as Code (IaC) tool. The Ansible control node connects to the AWS platform directly through API requests, authenticating as an AWS IAM user.

Prerequisites to access the AWS platform with Ansible

boto3

What is boto3?

Boto3 is the official AWS software development kit (SDK) for Python. It lets us interact programmatically with AWS services such as EC2, S3, and Lambda.

Ansible requires boto3 because its AWS modules are built on top of boto3 (and its lower-level dependency, botocore) to make AWS API calls.

Installing boto3 on the Ansible control node (AWS EC2)

Command to install boto3

pip3 install boto3 (since we have python3 installed, we use pip3 instead of pip)

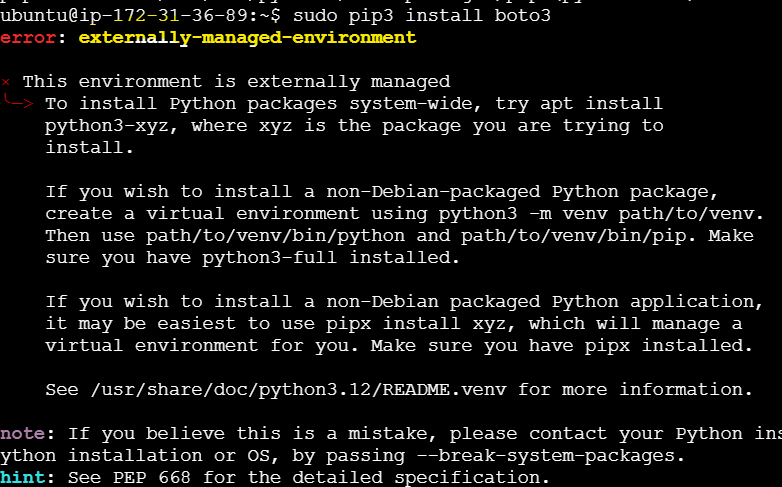

Installing boto3 on the server failed with an error stating that the environment is externally managed.

Troubleshooting the issue

This error occurred because our system uses an externally managed Python environment (as defined in PEP 668). To avoid conflicts with packages managed by the OS, it is recommended not to install Python packages globally using pip. Instead, we can use a virtual environment for managing Python packages.

To determine whether our AWS EC2 instance (which hosts the Ansible control node) is running an externally managed environment or a virtual environment, we can check the following:

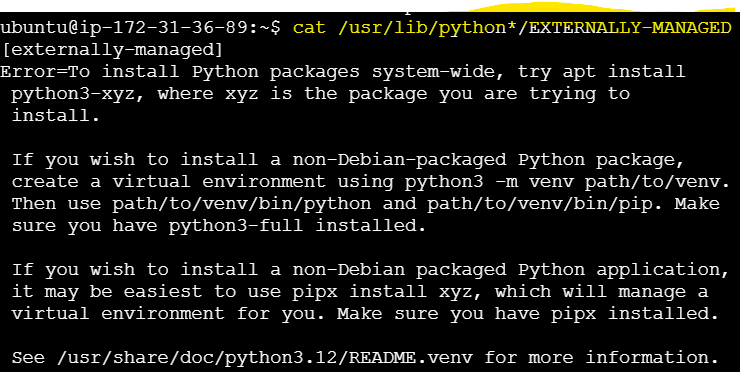

1. Check PEP 668 Environment:

cat /usr/lib/python*/EXTERNALLY-MANAGED

If the file exists, the environment is externally managed. In our case, the command returned the file's content, confirming that our environment is externally managed.

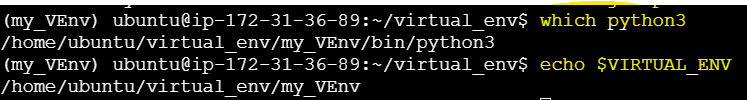

2. Check Virtual Environment:

echo $VIRTUAL_ENV

This command prints the path of the active virtual environment. If the output is a path (like /path/to/your/venv), we are inside a virtual environment. If the output is empty, we are not inside a virtual environment.

We can also check by running:

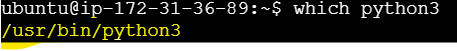

which python3

If this returns a path like /home/ubuntu/myenv/bin/python3, we are using a virtual environment's Python. If it returns something like /usr/bin/python3, we are using the system-wide Python, which means we are not in a virtual environment.

In summary:

Externally managed environment: the OS package manager controls the system Python, and we are restricted from using pip to install packages globally.

Virtual environment: a self-contained directory where we can install Python packages without affecting the system.

Hence, we first need to set up a virtual environment, inside which we can install boto3 with pip.

Virtual Environment Creation

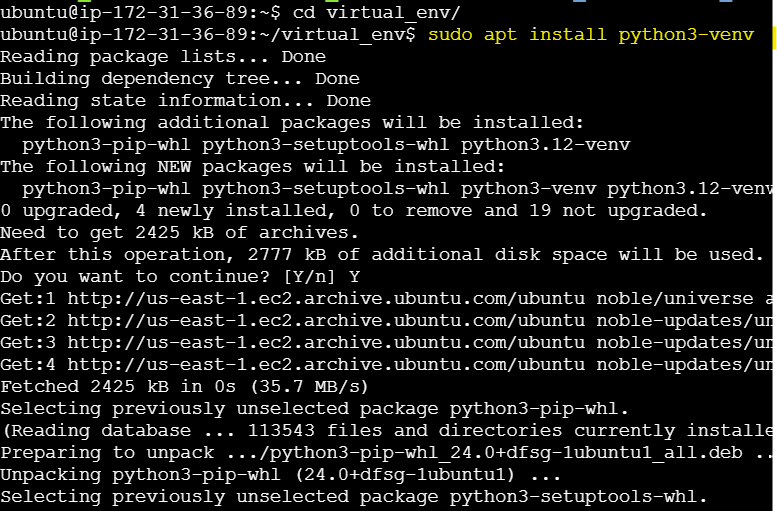

Install a venv package

sudo apt install python3-venv

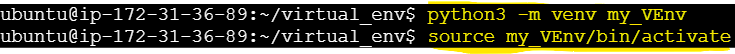

Create a Virtual Environment

python3 -m venv my_VEnv

Activate the Virtual Environment

source my_VEnv/bin/activate

The newly created virtual environment can then be verified:

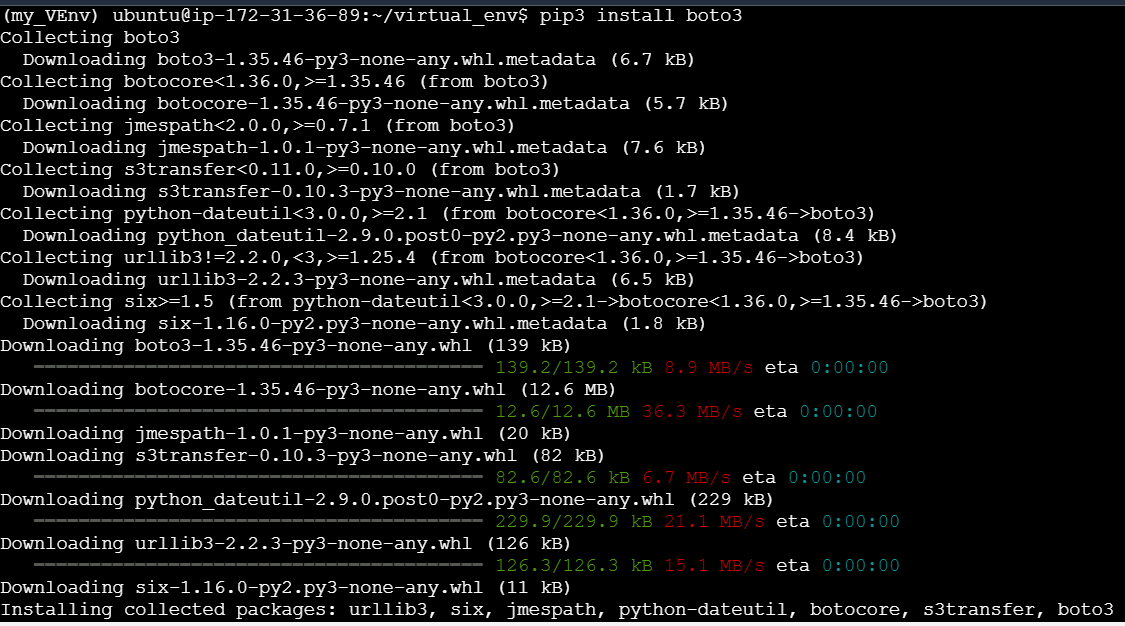

Install boto3 in this virtual environment (a prerequisite for connecting to AWS):

pip3 install boto3
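The manual steps above can also be expressed as an Ansible task. This is a minimal sketch, not the setup used in this article: it assumes the virtual environment lives at /home/ubuntu/my_VEnv (a hypothetical path; use wherever my_VEnv was actually created) and that python3-venv is already installed. The ansible.builtin.pip module creates the environment with virtualenv_command if it does not exist yet and then installs the packages into it, so nothing touches the externally managed system Python.

```yaml
# Hypothetical playbook: bootstrap a virtual environment with boto3 on the control node.
- name: Prepare a Python virtual environment for the AWS modules
  hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: Create my_VEnv if it is missing and install boto3 into it
      ansible.builtin.pip:
        name:
          - boto3
          - botocore
        # Assumed location of the virtual environment; adjust as needed.
        virtualenv: /home/ubuntu/my_VEnv
        # Create the environment with the standard library venv module
        # (requires the python3-venv package installed earlier).
        virtualenv_command: python3 -m venv
```

Running it a second time should report no changes, since the environment and packages already exist.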

amazon.aws collection

What are Ansible Collections?

Ansible Collections are a standardized way to package and distribute content such as modules, plugins, roles, and playbooks.

amazon.awsis one such collection that Ansible uses to interact with the AWS Platform for provisioning resources.Ansible uses specialized modules like

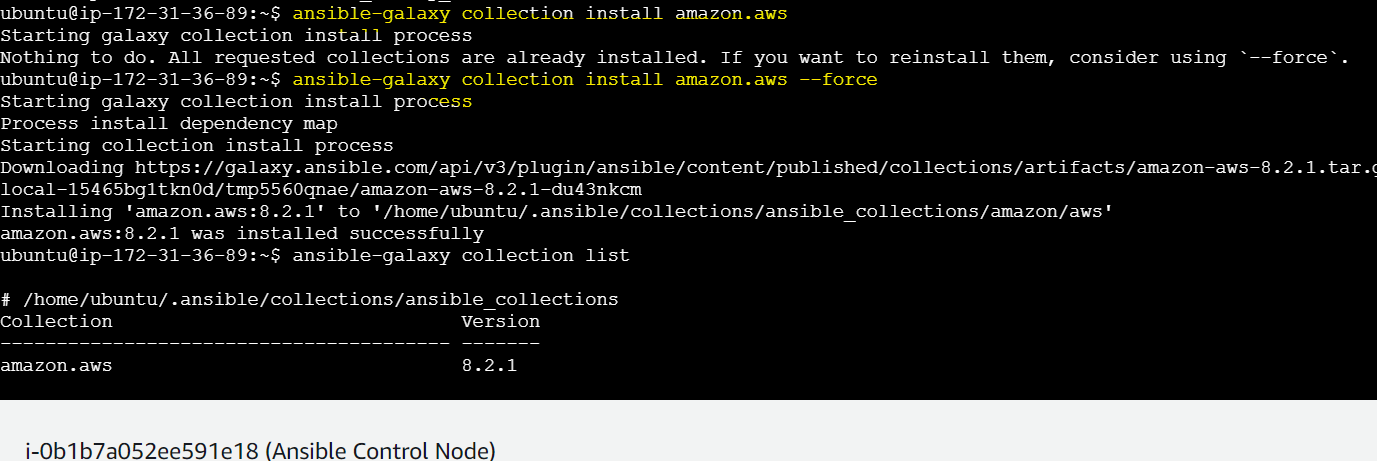

amazon.aws.ec2_instance,amazon.aws.ec2_key, etc. These modules are part of theamazon.awscollection available via Ansible Galaxy (a hub for Ansible roles and collections).Installing

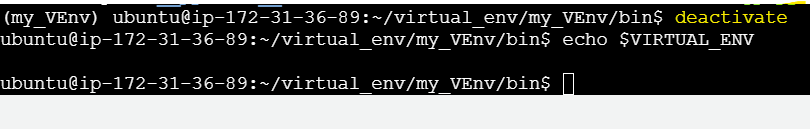

amazon.awscollection on Ansible Control Nodeamazon.awscollection is an Ansible-specific package that includes Ansible modules and plugins to manage AWS resources. This collection is managed by Ansible itself, not by Python virtual environments. Hence, required to be installed on the Ansible Control Node itself and not on the Virtual Environment.Hence, exiting the Virtual Environment that we created earlier for installing

boto3.Command:

deactivate

ansible-galaxy collection install amazon.aws
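To make this dependency reproducible on another control node, the collection can also be listed in a requirements file. A minimal sketch, assuming a file named requirements.yml kept next to the playbooks (the file name is our choice); it would be installed with ansible-galaxy collection install -r requirements.yml.

```yaml
# requirements.yml (hypothetical file): Ansible collections this project depends on.
collections:
  - name: amazon.aws
```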

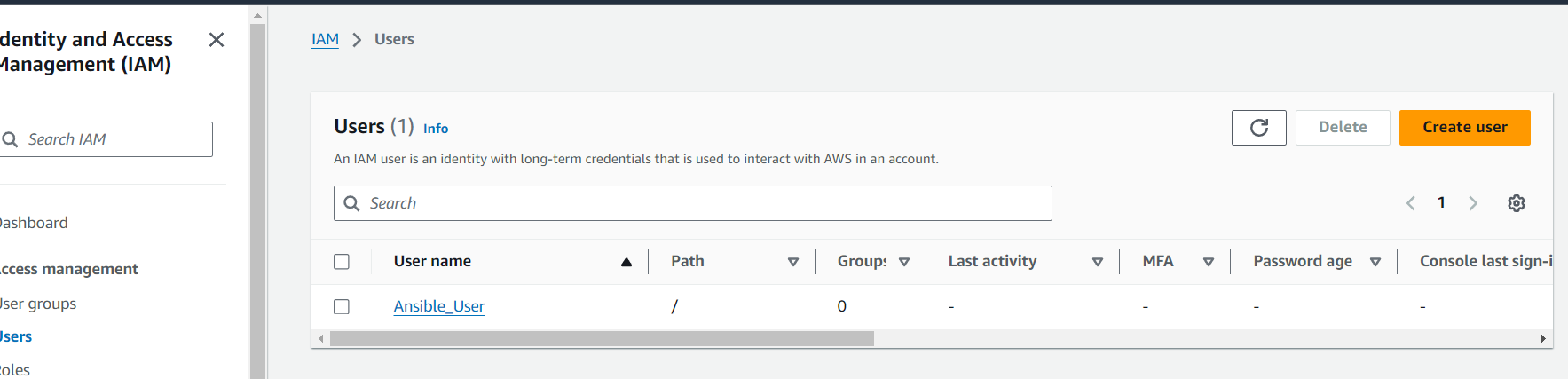

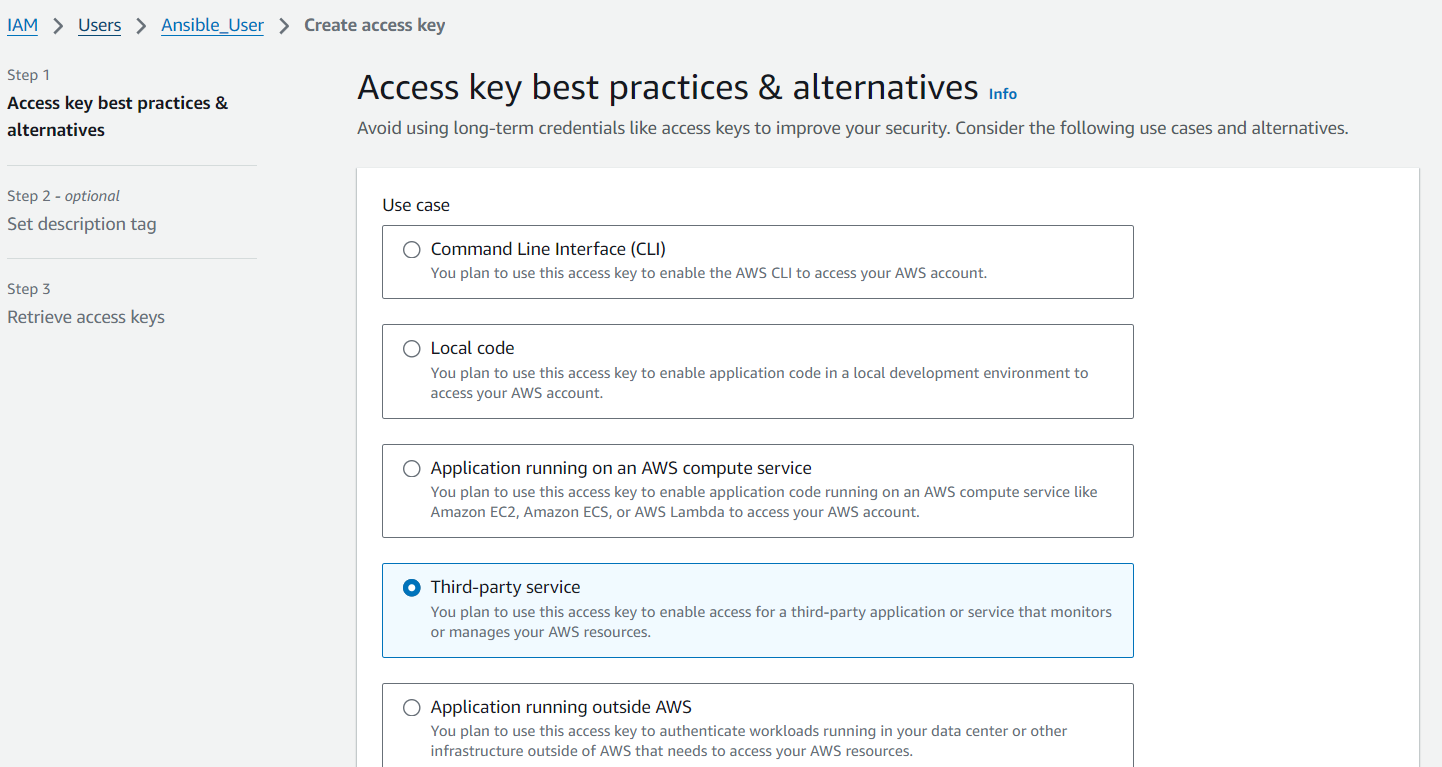

IAM user with EC2 access permissions

Configuring AWS Credentials and Access

We will use Ansible's built-in Ansible Vault to store the access keys of the AWS IAM user.

Creating and Fetching the access keys

These access keys must be stored safely and securely on the control node. We should not plug the key values directly into playbooks; instead, we store them in a separate file protected by a vault password.

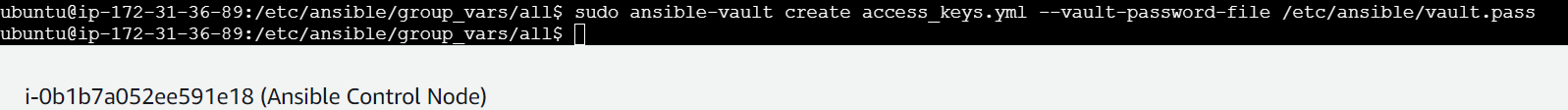

Next, we need to set up a vault.

First, we create a random password and save it in a file, vault.pass. It will serve as the password for the vault file access_keys.yml, which contains the access keys.

sudo openssl rand -base64 2048 > vault.pass

If the above fails, the following command can also be used.

sudo openssl rand -base64 2048 | sudo tee /etc/ansible/vault.pass > /dev/null

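Piping the output into sudo tee ensures that the random password is written to vault.pass even in directories that require root permissions. In the first command, the > redirection is performed by our unprivileged shell rather than by sudo, so it can fail in such directories.

Either command creates a random password and stores it in vault.pass.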

Creating the access keys vault file

ansible-vault create group_vars/all/access_keys.yml --vault-password-file /path/to/vault.pass

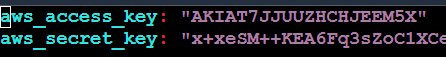

We have to provide the access_key and secret_key values inside the file.
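Inside the editor that ansible-vault create opens, the file is ordinary YAML; it is only encrypted when saved. A minimal sketch of the decrypted contents, assuming the variables are named access_key and secret_key (names chosen for illustration); the values are placeholders, never real credentials.

```yaml
# group_vars/all/access_keys.yml (decrypted view; placeholder values only)
access_key: "REPLACE_WITH_YOUR_ACCESS_KEY_ID"
secret_key: "REPLACE_WITH_YOUR_SECRET_ACCESS_KEY"
```

Because the file sits under group_vars/all, these variables are available to every host, including localhost, once Ansible is given the vault password.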

If this vault file needs to be edited, use the following edit command:

sudo ansible-vault edit access_keys.yml --vault-password-file /etc/ansible/vault.pass

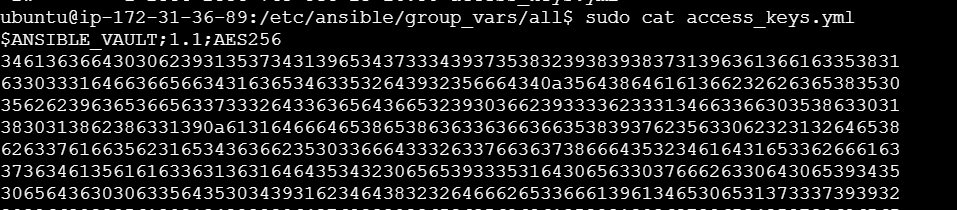

Opening this vault file with cat or vi shows only encrypted content, which keeps the access keys secure.

Provisioning AWS Resources with Ansible

Ansible and AWS Integration

Now that we have completed the prerequisites, we can write a playbook to provision EC2 instances on AWS.

Writing Ansible Playbooks for AWS Provisioning

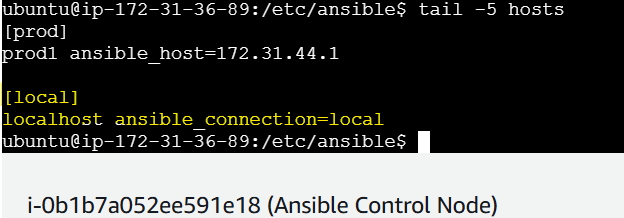

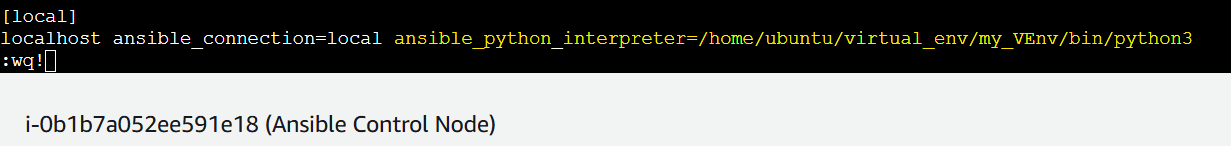

We update the inventory with a local connection for localhost, because the playbook will be executed on the control node itself.

This configuration tells Ansible that localhost is a local connection, allowing it to run commands on the control node without needing SSH or other connection types.
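The inventory change appears only as a screenshot. A minimal equivalent in Ansible's YAML inventory format is sketched below, assuming a hypothetical file /etc/ansible/hosts.yml; the same setting can equally be written in INI format.

```yaml
# Hypothetical YAML inventory: run tasks for localhost directly on the control node.
all:
  hosts:
    localhost:
      # Execute modules locally instead of connecting over SSH.
      ansible_connection: local
```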

Using Ansible Roles for AWS Resource Management

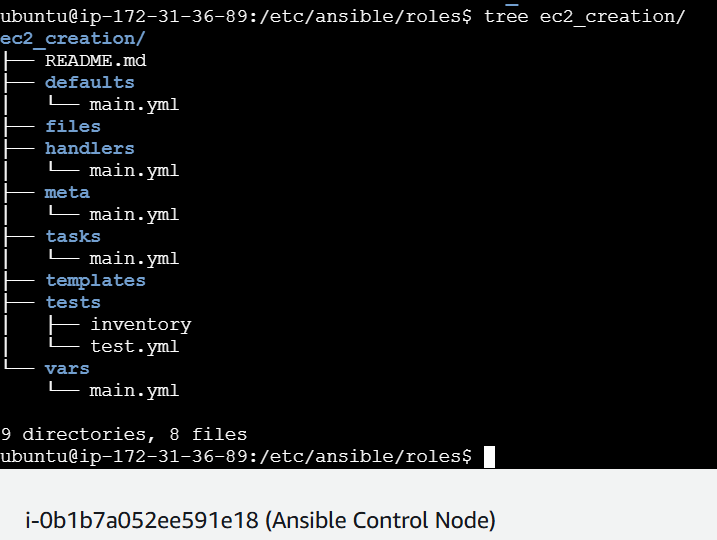

Instead of writing all the tasks directly in the playbook, we will organize them in a role:

sudo ansible-galaxy role init ec2_creation

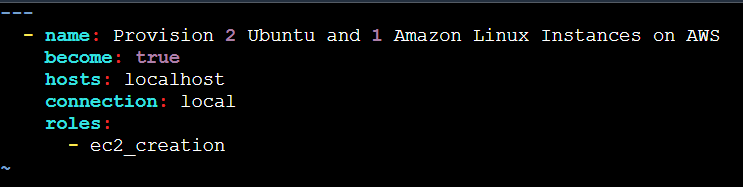

Writing a Playbook to Launch EC2 Instances

Create an ec2_create.yml playbook file in /etc/ansible/playbooks.
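A minimal sketch of what such a playbook can contain. It assumes the ec2_creation role is on Ansible's role search path (for example /etc/ansible/roles, or a roles/ directory next to the playbook); the play name is illustrative.

```yaml
# /etc/ansible/playbooks/ec2_create.yml (sketch): apply the ec2_creation role.
- name: Provision EC2 instances from the control node
  hosts: localhost
  connection: local
  # Facts about the control node are not needed to call the AWS API.
  gather_facts: false
  roles:
    - ec2_creation
```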

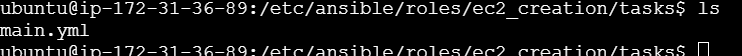

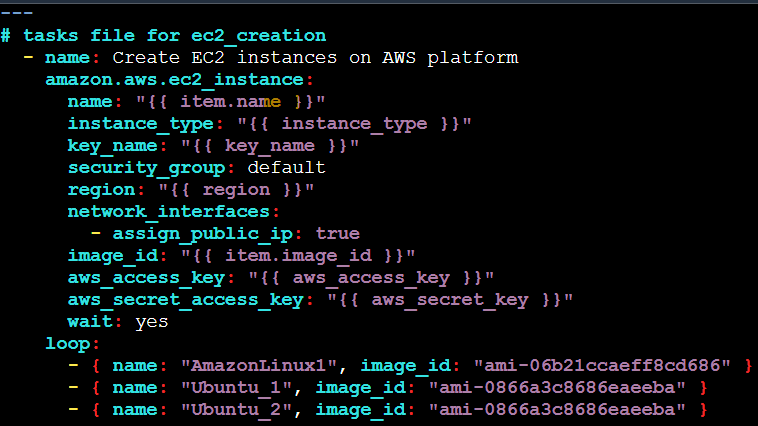

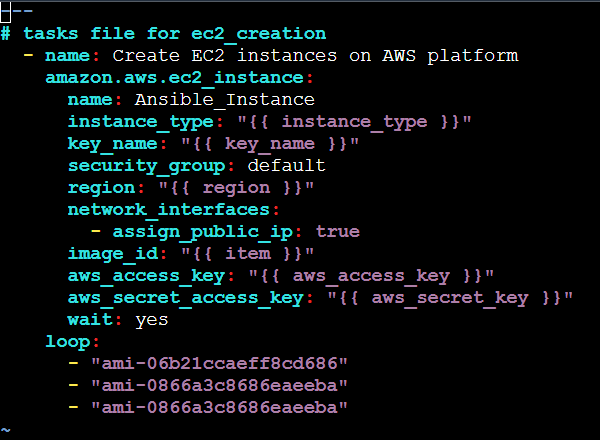

Navigate to the ec2_creation role directory and then to its tasks subdirectory. There, we write the task that creates the instances in main.yml.

In this main.yml task file, we have written the main task, which creates two Ubuntu instances and one Amazon Linux instance. The task uses variables that are defined in a separate YAML file inside the vars subdirectory.
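Since the task file itself is shown only as a screenshot, here is a hedged sketch of such a task using amazon.aws.ec2_instance with a loop. The variable names (instance_type, key_name, region, ubuntu_ami_id, amazon_linux_ami_id, access_key, secret_key) and the instance names are hypothetical and must match whatever the vars file and the vault file actually define.

```yaml
# roles/ec2_creation/tasks/main.yml (sketch; variable and instance names are hypothetical)
- name: Launch two Ubuntu instances and one Amazon Linux instance
  amazon.aws.ec2_instance:
    # A distinct name per loop item, so the two Ubuntu items do not match each other.
    name: "{{ item.name }}"
    image_id: "{{ item.image_id }}"
    instance_type: "{{ instance_type }}"
    key_name: "{{ key_name }}"
    region: "{{ region }}"
    # Credentials read from the vault-encrypted group_vars/all/access_keys.yml.
    access_key: "{{ access_key }}"
    secret_key: "{{ secret_key }}"
    state: running
    # Wait until each instance reaches the running state before moving on.
    wait: true
  loop:
    - { name: "ubuntu-server-1", image_id: "{{ ubuntu_ami_id }}" }
    - { name: "ubuntu-server-2", image_id: "{{ ubuntu_ami_id }}" }
    - { name: "amazon-linux-server", image_id: "{{ amazon_linux_ami_id }}" }
```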

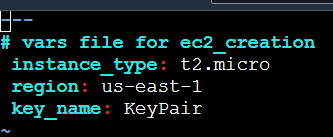

The following variables are defined in the vars directory:

The key pair named in this file already exists in the AWS console; it will later be used for passwordless SSH access to the instances.
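A sketch of such a vars file, with every value a placeholder: the region, instance type, key pair name, and AMI IDs below are assumptions to be replaced with the values for your own AWS account and region (AMI IDs differ per region).

```yaml
# roles/ec2_creation/vars/main.yml (sketch; all values are placeholders)
region: us-east-1
instance_type: t2.micro
# Name of a key pair that already exists in this region of the AWS account.
key_name: my-key-pair
# Look up the current AMI IDs for your region in the EC2 console.
ubuntu_ami_id: ami-REPLACE_WITH_UBUNTU_AMI_ID
amazon_linux_ami_id: ami-REPLACE_WITH_AMAZON_LINUX_AMI_ID
```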

Running the Playbook

Let’s first check whether the playbook runs properly, using check mode:

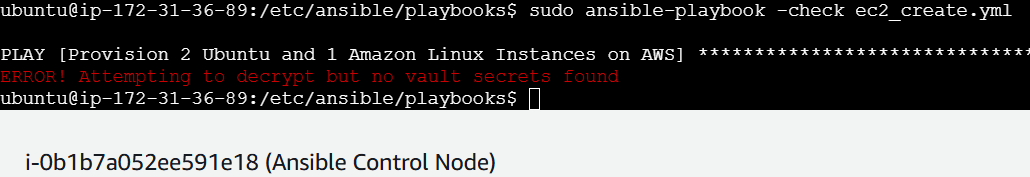

sudo ansible-playbook --check ec2_create.yml

The run failed with the error: ‘ERROR! Attempting to decrypt but no vault secrets found’

Troubleshooting:

This error occurs because access_keys.yml is encrypted with a vault password, and Ansible was not given that password, so it cannot read the data in the file.

Solution: pass the password file vault.pass, which is the key to the encrypted access_keys.yml file, using the --vault-password-file option.

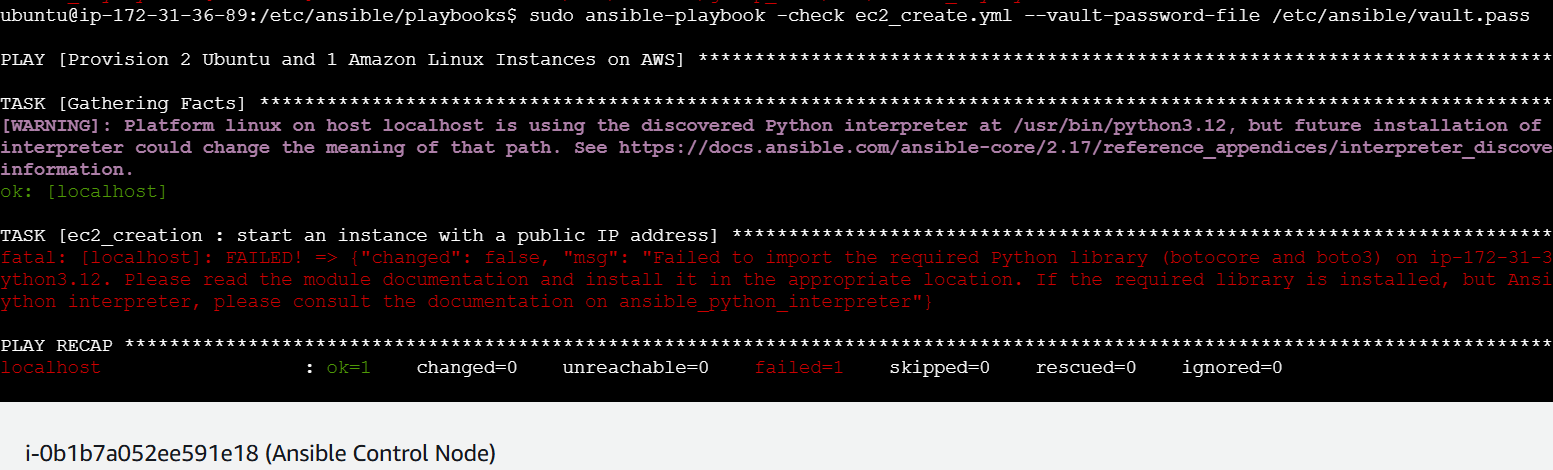

That resolved the vault error, but we then encountered another error:

Troubleshooting:

The error: “fatal: [localhost]: FAILED! => {"changed": false, "msg": "Failed to import the required Python library (botocore and boto3) on ip-172-31-36-89's Python /usr/bin/python3.12.”

This error means that Ansible cannot find the boto3 and botocore libraries in the Python interpreter it uses on our control node (in this case, /usr/bin/python3.12). Since boto3 is installed only in our virtual environment (my_VEnv), Ansible must be explicitly told to use the Python interpreter inside that virtual environment.

Solution:

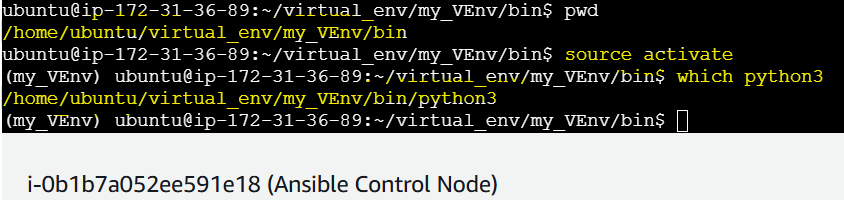

Navigate to the virtual environment directory and activate the environment.

Run which python3 to find the environment's Python interpreter; it returns the interpreter's path.

Set this Python interpreter in the inventory using the ansible_python_interpreter variable.
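A sketch of the resulting inventory entry in YAML format. The interpreter path is an assumption: replace it with the exact output of which python3 inside the activated my_VEnv.

```yaml
# Hypothetical YAML inventory for the control node.
all:
  hosts:
    localhost:
      ansible_connection: local
      # Use the virtual environment's Python, which has boto3 and botocore installed.
      # Assumed path: substitute the output of `which python3` inside my_VEnv.
      ansible_python_interpreter: /home/ubuntu/my_VEnv/bin/python3
```

With this variable set, the AWS modules run under the virtual environment's Python, while Ansible itself keeps using the system installation.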

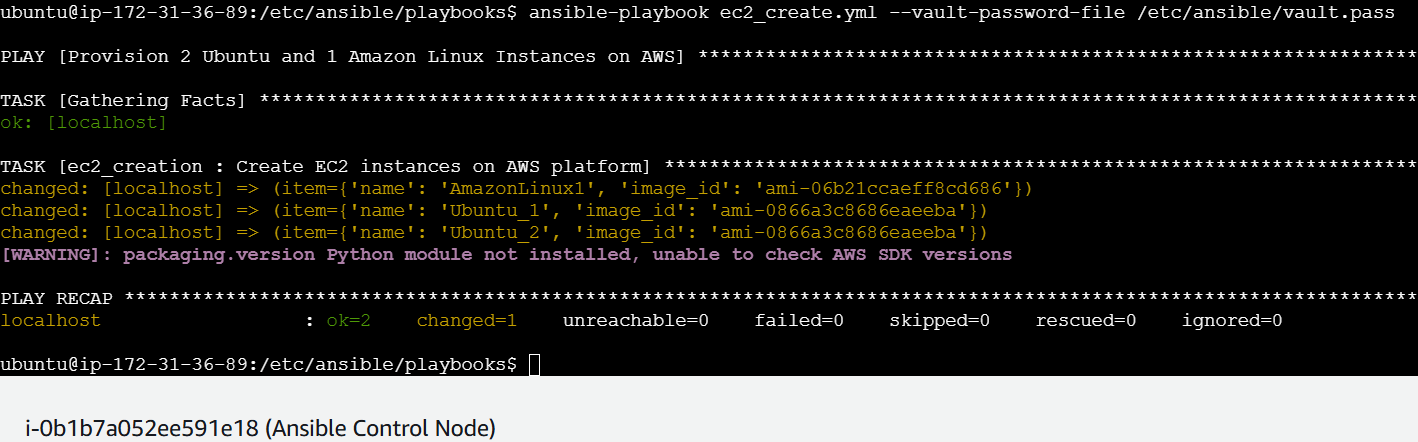

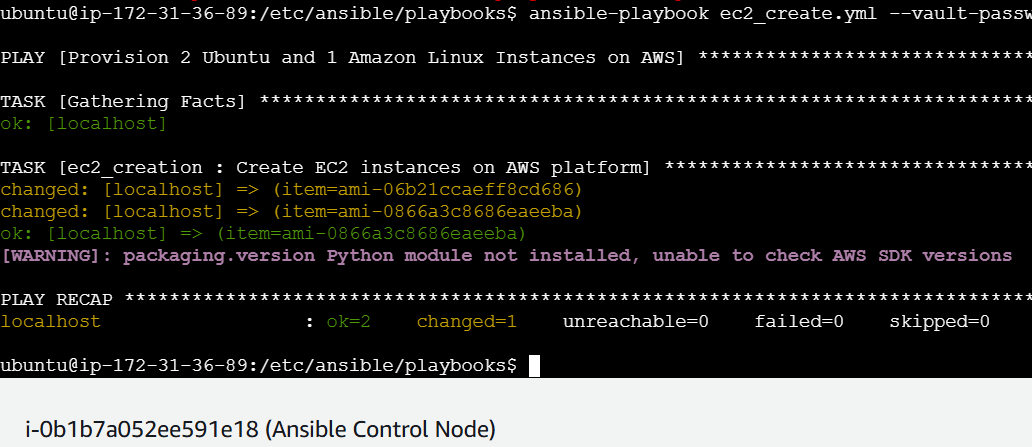

Rerunning the playbook

ansible-playbook ec2_create.yml --vault-password-file /etc/ansible/vault.pass

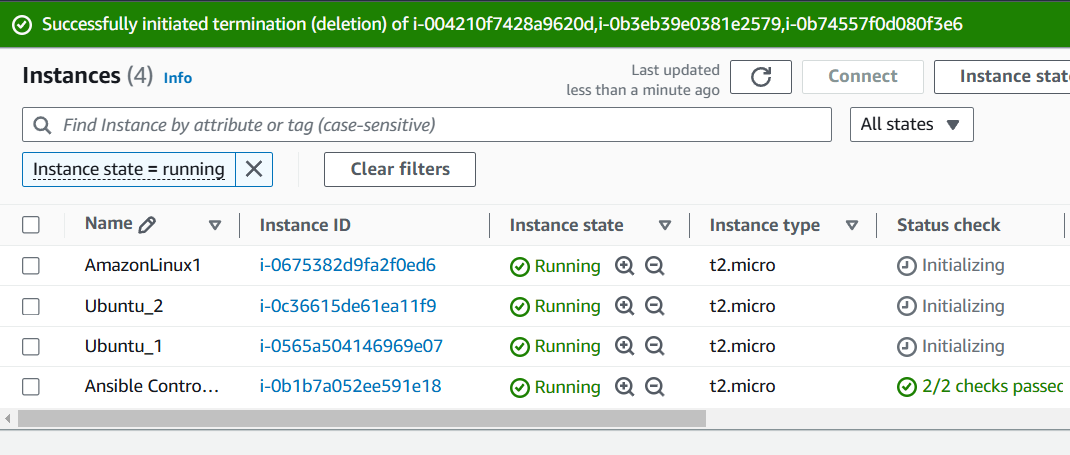

The playbook executed successfully!

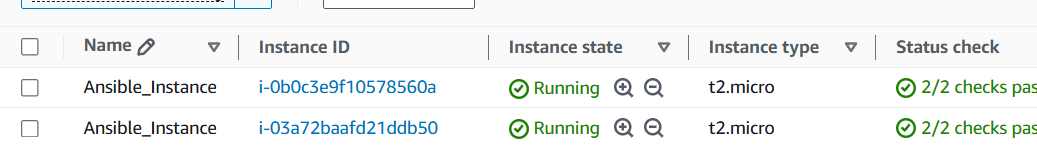

The AWS console also shows the two Ubuntu instances and the one Amazon Linux instance that were created.
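Besides the console, the result can be checked from the control node with the amazon.aws.ec2_instance_info module. This is a hedged sketch: it assumes the playbook sits next to ec2_create.yml so that the same group_vars/all vault file (with variables assumed to be named access_key and secret_key) is loaded, and that region matches the one used by the role. It would be run with the same --vault-password-file option.

```yaml
# Hypothetical verification playbook: list running instances in the region.
- name: List running EC2 instances
  hosts: localhost
  connection: local
  gather_facts: false
  vars:
    # Assumed region; must match the region used in the ec2_creation role.
    region: us-east-1
  tasks:
    - name: Query running instances
      amazon.aws.ec2_instance_info:
        region: "{{ region }}"
        access_key: "{{ access_key }}"
        secret_key: "{{ secret_key }}"
        filters:
          instance-state-name: running
      register: ec2_info

    - name: Show the Name tag, AMI ID and instance ID of each instance
      ansible.builtin.debug:
        msg: "{{ item.tags.Name | default('(no name)') }} {{ item.image_id }} {{ item.instance_id }}"
      loop: "{{ ec2_info.instances }}"
```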

Idempotency Principle

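We used a loop because we need to create multiple instances. Since the two Ubuntu instances have the same configuration, each one's name must also be specified explicitly in the loop. If the name is omitted, only one Ubuntu instance is created because of Ansible's idempotency principle: before launching anything, the amazon.aws.ec2_instance module looks for existing instances that match the request (by default, it matches on the Name tag, the AMI ID, the instance state, and the subnet). Without a distinct name, the second Ubuntu item matches the instance launched by the first, so no new instance is created.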

Let’s understand this by updating the playbook as follows:

Here, the name is removed from the loop and only image_id is specified; the same image_id appears twice, once for each Ubuntu instance.
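Since the modified task is shown only as a screenshot, a hedged sketch of the change described above follows. Variable names are hypothetical and should match the role's vars and vault files. With no name, the module's default matching relies on the AMI ID, instance state, and subnet, so the second Ubuntu item finds the instance created by the first.

```yaml
# Sketch of the modified task: no name, only image_id in the loop.
- name: Launch instances identified only by their AMI
  amazon.aws.ec2_instance:
    image_id: "{{ item }}"
    instance_type: "{{ instance_type }}"
    key_name: "{{ key_name }}"
    region: "{{ region }}"
    access_key: "{{ access_key }}"
    secret_key: "{{ secret_key }}"
    state: running
    wait: true
  loop:
    # The same Ubuntu AMI twice: the second item matches the instance launched
    # by the first (same AMI, state and subnet, no Name filter), so it changes nothing.
    - "{{ ubuntu_ami_id }}"
    - "{{ ubuntu_ami_id }}"
    - "{{ amazon_linux_ami_id }}"
```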

Only one Ubuntu instance and one Amazon Linux instance are created. Because of idempotency, one Ubuntu instance is created instead of two.

Conclusion

Recap of Key Learnings

We have successfully provisioned AWS EC2 instances using Ansible. We first completed the prerequisites (a virtual environment with boto3, the amazon.aws collection, vault-protected access keys, and the Python interpreter setting in the inventory), and then wrote the playbook and role that provision the resources.

References

List of Resources and Documentation

amazon.aws collection: https://docs.ansible.com/ansible/latest/collections/amazon/aws/ec2_instance_module.html#ansible-collections-amazon-aws-ec2-instance-module
