Building a Data Quality Testing Framework Using Snowflake and SQL

Vipin

Vipin

In the era of data-driven decision-making, maintaining high data quality is crucial. Poor data quality can lead to incorrect insights, impacting business decisions. This blog post will guide you through building a robust data quality testing framework using Snowflake and SQL.

Prerequisites

Before diving in, ensure you have the following:

A Snowflake account.

Basic knowledge of SQL.

Familiarity with Snowflake's architecture and features.

Designing the Data Quality Framework

The framework consists of several components:

Data Validation Rules: Define the criteria for data quality.

Error Logging: Capture and store test results and errors.

Reporting: Generate reports to visualize data quality.

Key Data Quality Tests

Null Check: Ensure critical columns do not contain null values.

Range Check: Verify numerical values fall within a specified range.

Format Check: Validate data against a specific format (e.g., email addresses).

Data Quality Tests on customers Table

Step 1: Set Up the customers Table

Assume we have a customers table with the following structure:

CREATE OR REPLACE TABLE customers (

customer_id INT,

name STRING,

email STRING,

age INT,

signup_date DATE

);

INSERT INTO customers (customer_id, name, email, age, signup_date) VALUES

(1, 'John Doe', 'john.doe@example.com', 28, '2023-01-15'),

(2, 'Jane Smith', NULL, 22, '2023-02-20'),

(3, 'Alice Johnson', 'alice.johnson@example', 35, '2023-03-10'),

(4, 'Bob Brown', 'bob.brown@example.com', -5, '2023-04-05'),

(5, 'Carol White', 'carol.white@example.com', 42, '2023-05-12');

Implementing Data Quality Tests

Step 1: Create a Data Quality Rules Table

First, create a table to store your data quality rules. This table can include columns for the rule name, SQL query, and any additional metadata you might need.

CREATE OR REPLACE TABLE data_quality_rules (

rule_id INT AUTOINCREMENT,

rule_name STRING,

sql_query STRING,

error_message STRING

);

Step 2: Insert Data Quality Rules

Insert your data quality rules into the data_quality_rules table. You can store the SQL queries as strings:

INSERT INTO data_quality_rules (rule_name, sql_query, error_message) VALUES

('Null Check',

'SELECT COUNT(*) AS error_count FROM customers WHERE email IS NULL',

'Null values found in email column'),

('Range Check',

'SELECT COUNT(*) AS error_count FROM customers WHERE age NOT BETWEEN 0 AND 120',

'Out of range values in age column'),

('Format Check',

'SELECT COUNT(*) AS error_count FROM customers WHERE NOT REGEXP_LIKE(email, ''^[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}$'')',

'Invalid email format');

Step 3: Create a Table for Results

Create a results table to store the output of the data quality checks:

CREATE OR REPLACE TABLE data_quality_results (

rule_name STRING,

error_count NUMBER,

error_message STRING,

test_timestamp TIMESTAMP

);

Step 4: Execute Data Quality Rules

You can write a SQL script or use a stored procedure to iterate over the rules in the data_quality_rules table and execute them dynamically. Here’s an example of how to do this using a loop in a stored procedure:

CREATE OR REPLACE PROCEDURE run_data_quality_checks()

RETURNS STRING

LANGUAGE JAVASCRIPT

AS

$$

var sql_command;

var stmt;

var results;

// Clear previous results

sql_command = "TRUNCATE TABLE data_quality_results;";

stmt = snowflake.createStatement({sqlText: sql_command});

stmt.execute();

// Retrieve rules

sql_command = "SELECT rule_name, sql_query, error_message FROM data_quality_rules;";

stmt = snowflake.createStatement({sqlText: sql_command});

results = stmt.execute();

while (results.next()) {

var rule_name = results.getColumnValue(1);

var query = results.getColumnValue(2);

var error_message = results.getColumnValue(3);

// Execute the query

stmt = snowflake.createStatement({sqlText: query});

var query_results = stmt.execute();

if (query_results.next()) {

var error_count = query_results.getColumnValue(1);

// Insert results into the results table

sql_command = `INSERT INTO data_quality_results (rule_name, error_count, error_message, test_timestamp)

VALUES ('${rule_name}', ${error_count}, '${error_message}', CURRENT_TIMESTAMP);`;

stmt = snowflake.createStatement({sqlText: sql_command});

stmt.execute();

}

}

return "Data quality checks completed.";

$$;

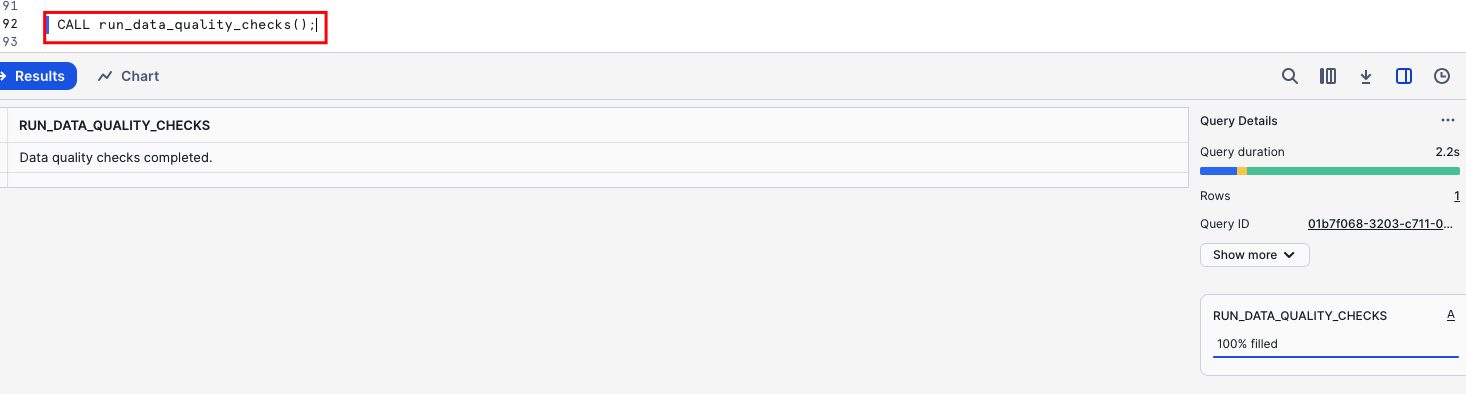

Step 5: Run the Stored Procedure

You can run the stored procedure whenever you want to execute all the data quality checks:

CALL run_data_quality_checks();

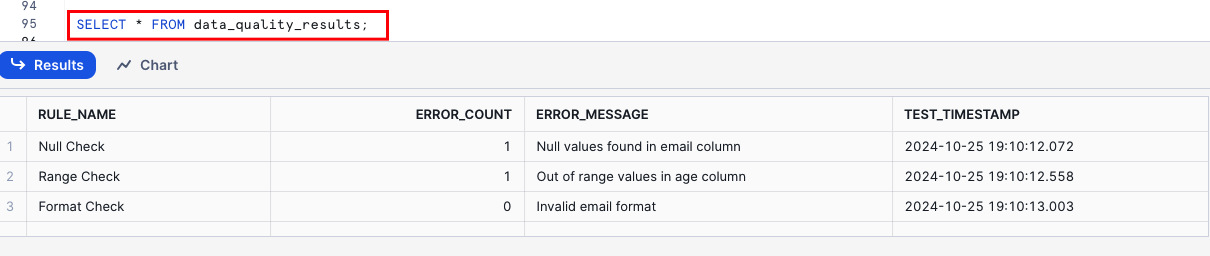

Step 6: View the Results

After running the checks, you can view the results:

SELECT * FROM data_quality_results;

Future Enhancement:

The framework can be enhanced to include additional data quality check scenarios, such as the following:

1. Uniqueness Checks

Ensure that specific columns that should have unique values (e.g., primary keys, email addresses) do not contain duplicates.

SELECT

customer_id, COUNT(*)

FROM

customers

GROUP BY

customer_id

HAVING

COUNT(*) > 1;

2. Consistency Checks

Ensure that related fields have consistent values. For example, the state and zip code should match within the same geographical area.

SELECT

COUNT(*)

FROM

customers

WHERE

(state = 'CA' AND NOT zip_code LIKE '9%')

OR (state = 'NY' AND NOT zip_code LIKE '1%');

3. Referential Integrity Checks

Verify that foreign keys in the table refer to existing records in related tables.

SELECT

c.customer_id

FROM

customers c

LEFT JOIN

orders o ON c.customer_id = o.customer_id

WHERE

o.customer_id IS NULL;

4. Pattern Checks

Ensure that the values in certain columns follow a specific pattern or format, such as email addresses or phone numbers.

SELECT

email

FROM

customers

WHERE

email NOT LIKE '%_@__%.__%';

5. Length Checks

Ensure that string data does not exceed the expected length.

SELECT

customer_id, first_name, last_name

FROM

customers

WHERE

LENGTH(first_name) > 50

OR LENGTH(last_name) > 50;

6. Statistical Distribution Checks

Compare the distribution of data to expected distributions. For example, ensuring that the distribution of ages in the customers table follows a reasonable pattern.

SELECT

AVG(age), MIN(age), MAX(age)

FROM

customers;

7. Anomaly Detection

Identify outliers or anomalies in your data that deviate significantly from the norm.

WITH stats AS (

SELECT

AVG(order_amount) AS avg_amount,

STDDEV(order_amount) AS stddev_amount

FROM

orders

)

SELECT

order_id, order_amount

FROM

orders, stats

WHERE

ABS(order_amount - stats.avg_amount) > 3 * stats.stddev_amount;

8. Completeness Checks

Ensure that mandatory fields are not null.

SELECT

customer_id

FROM

customers

WHERE

first_name IS NULL

OR last_name IS NULL;

9. Timeliness Checks

Verify that dates and times are within expected ranges and that there are no future dates in historical records.

SELECT

order_id, order_date

FROM

orders

WHERE

order_date > CURRENT_DATE;

10. Cross-Field Validation

Ensure that the values in one field are logically consistent with the values in another field.

SELECT

customer_id, start_date, end_date

FROM

subscriptions

WHERE

end_date < start_date;

11. Dependency Checks

Ensure that the presence of one field's value is dependent on another field's value.

SELECT

customer_id, email, email_opt_in

FROM

customers

WHERE

email_opt_in = 'Y' AND email IS NULL;

By incorporating these additional checks, you can significantly enhance your data quality framework and ensure that your data remains accurate, consistent, and reliable. Make sure to regularly review and update these checks based on your evolving data quality requirements and business rules.

Best Practices

Regular Updates: Review and update data quality rules periodically.

Monitor Trends: Keep an eye on data quality trends and address issues promptly.

Scale: Adapt the framework to handle increasing data volumes.

Conclusion

Ensuring data quality is a continuous process. Using Snowflake and SQL, you can build a flexible and powerful data quality testing framework to maintain the integrity of your data. Implement this framework, customize it to your needs, and ensure your data remains reliable and accurate.

Additional Resources

Subscribe to my newsletter

Read articles from Vipin directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Vipin

Vipin

Highly skilled Data Test Automation professional with over 10 years of experience in data quality assurance and software testing. Proven ability to design, execute, and automate testing across the entire SDLC (Software Development Life Cycle) utilizing Agile and Waterfall methodologies. Expertise in End-to-End DWBI project testing and experience working in GCP, AWS, and Azure cloud environments. Proficient in SQL and Python scripting for data test automation.