Kubernetes path-based routing using Ingress

Aniket Kharpatil


Kubernetes has become the go-to platform for deploying, managing, and scaling containerized applications. One of its powerful features is Ingress, which helps manage external access to the services within your Kubernetes cluster.

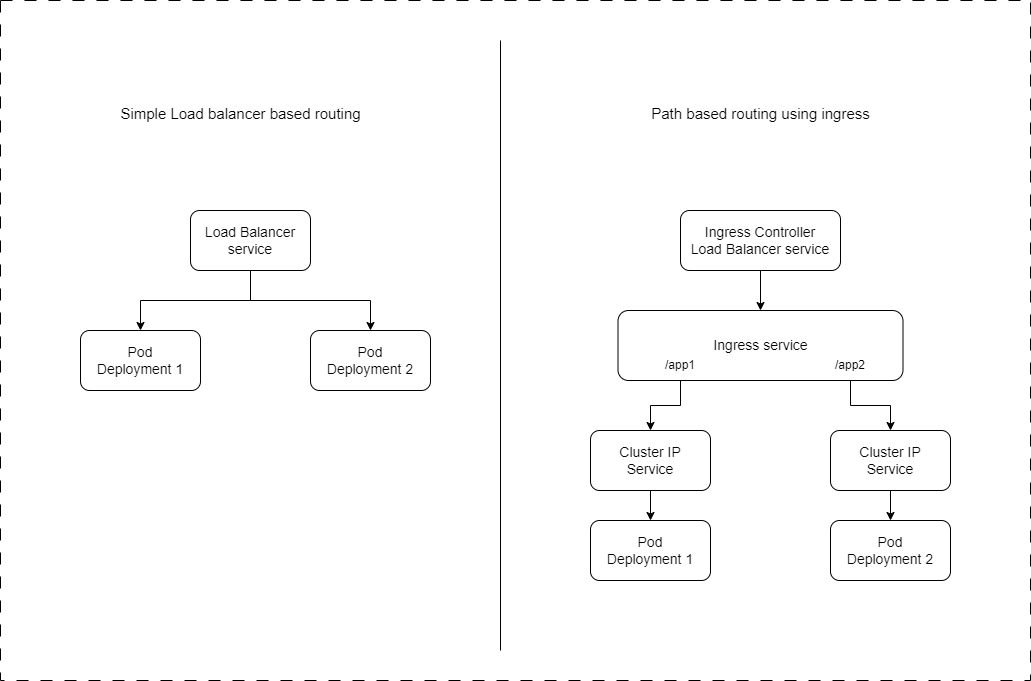

In our previous blog, we exposed two different Kubernetes deployments using a LoadBalancer service. However, we ran into a routing issue: traffic for both deployments went through the same service, causing confusion and inefficiency. In this blog, we'll explore how to resolve this problem using Kubernetes Ingress. Let's dive in! 🌟

What is path-based routing?

Path-based routing is a technique used in web applications to direct traffic to different services or resources based on the URL path after the hostname. This method allows multiple resources or functions within a single application or website to be accessible through distinct paths while sharing the same domain.

Let's look at an example of path-based routing using an e-commerce website like "www.onlineshop.com":

- Product Pages (URL: www.onlineshop.com/products): this route takes users to a page displaying all available products.
- Shopping Cart (URL: www.onlineshop.com/cart): this route directs users to their shopping cart, where they can view items they have added for purchase.
- Order Tracking (URL: www.onlineshop.com/orders): this route allows users to track their order history and current order status.
- Customer Support (URL: www.onlineshop.com/support): this route takes users to the customer support page.

Here all pages share the hostname www.onlineshop.com, but the path (like /products, /cart, etc.) determines which specific resource is accessed. Path-based routing ensures that requests are directed to the appropriate part of the application based on the URL path, enhancing user experience by keeping everything under one hostname. This approach also helps organize the website structure, making it easier for users to navigate.
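Expressed as a Kubernetes Ingress, the shop example above could look roughly like the sketch below. It is illustrative only: the Ingress name onlineshop-ingress and the Services products-service, cart-service, orders-service and support-service (each assumed to listen on port 80) are hypothetical and not part of this project.

```yaml
# Sketch only: the Ingress name and all Service names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: onlineshop-ingress
spec:
  rules:
    - host: www.onlineshop.com     # one hostname shared by every route
      http:
        paths:
          - path: /products        # product listing
            pathType: Prefix
            backend:
              service:
                name: products-service
                port:
                  number: 80
          - path: /cart            # shopping cart
            pathType: Prefix
            backend:
              service:
                name: cart-service
                port:
                  number: 80
          - path: /orders          # order tracking
            pathType: Prefix
            backend:
              service:
                name: orders-service
                port:
                  number: 80
          - path: /support         # customer support
            pathType: Prefix
            backend:
              service:
                name: support-service
                port:
                  number: 80
```

With pathType: Prefix, Kubernetes matches path elements split on /, so /cart also matches /cart/items but not /cartoon.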

The Problem: Routing with LoadBalancer Service⚖️

When we exposed our deployments with a LoadBalancer service, all incoming traffic went through that single service. Because the service selected the pods of both deployments, it had no way to send a request to a specific deployment: requests were spread across both, so the same URL could return either page.

The Solution: Kubernetes Ingress🚦

Ingress provides a more flexible and powerful way to manage external access to your Kubernetes services. Let's look at how it works.

What is Kubernetes Ingress? 🤔

Ingress is a Kubernetes API object that manages external access to services within a cluster, typically over HTTP and HTTPS. Ingress can:

- Route traffic based on host or path.
- Provide SSL/TLS termination.
- Load balance traffic across the pods behind each service.

In simple terms, Ingress acts as an entry point that controls how external users access services running inside your Kubernetes cluster.
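For comparison with path-based routing, routing on the host name looks like the sketch below. The hosts shop.example.com and blog.example.com and the Services shop-service and blog-service are hypothetical names used only for illustration.

```yaml
# Sketch only: hosts and Service names are hypothetical.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: host-based-example
spec:
  rules:
    - host: shop.example.com       # requests whose Host header is shop.example.com...
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: shop-service # ...are sent to this Service
                port:
                  number: 80
    - host: blog.example.com       # a second host routed to a different Service
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: blog-service
                port:
                  number: 80
```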

Why Use Ingress? 🌐

Ingress provides several benefits:

- Advanced Routing: Route requests to different services based on the URL host or path.
- SSL Termination: Terminate SSL/TLS connections at the Ingress point.
- Load Balancing: Distribute traffic across the pods of your backend services.
- Centralized Access Control: Manage access rules in one place.

How Ingress Works 🔄

Ingress Resource: Defines the rules for routing traffic.

Ingress Controller: Watches Ingress resources and processes the routing rules. It’s responsible for fulfilling the Ingress rules defined by the Ingress resources.

Without an Ingress controller, an Ingress resource has no effect. The controller is the engine that interprets and executes the rules.
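In current Kubernetes versions, the link between the two is an IngressClass resource: an Ingress names a class, and the class names the controller that should fulfil it. The NGINX manifest installed in Step 1 should create one along these lines. The name nginx and the controller value k8s.io/ingress-nginx are the ingress-nginx defaults as far as I know, so treat them as assumptions and confirm with kubectl get ingressclass on your cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                        # an Ingress selects this class via spec.ingressClassName
spec:
  controller: k8s.io/ingress-nginx   # identifies the controller that handles this class
```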

Setting Up Ingress in Kubernetes 🛠️

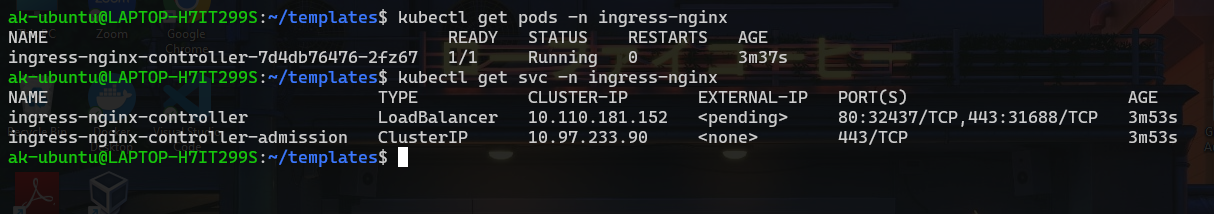

Step 1: Deploying the NGINX Ingress Controller

NGINX is a popular Ingress controller due to its flexibility and ease of use. Here’s how to set it up:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/cloud/deploy.yaml

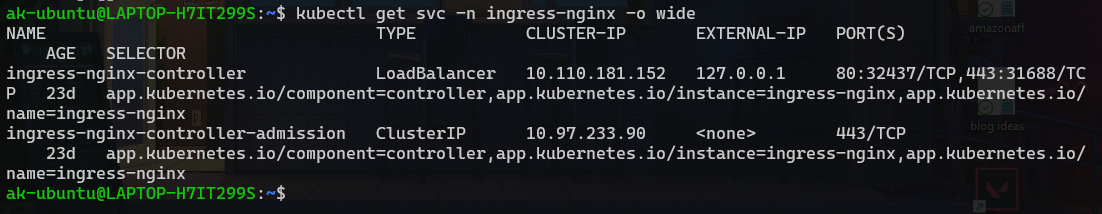

Verify the installation:

kubectl get pods -n ingress-nginx

kubectl get svc -n ingress-nginx

You should see the controller pod and the ingress-nginx-controller service in the ingress-nginx namespace.

The EXTERNAL-IP of the ingress-nginx-controller service shows pending because our local cluster has no cloud load balancer to assign one. I'll show you how to expose this service externally so an IP appears there; that IP will be the access point of our application.

Step 2: Update the deployments

In our previous project, we deployed two applications serving different HTML pages via ConfigMaps. Here we will update those same deployments and create Services plus an Ingress resource so that we get path-based routing to the two web pages.

No need to worry if you are new 🙂 I've explained the YAML below. You can also refer to the code here 👉 https://github.com/AniketKharpatil/DevOps-Journey/tree/main/k8s/k8s-deployment-example

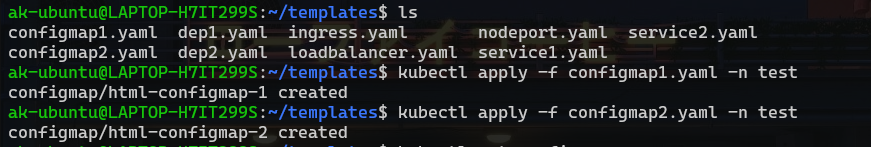

First, make sure the ConfigMaps are created. I am using a namespace called test.

To create the namespace:

kubectl create namespace test
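If you prefer to keep everything declarative, the same namespace can be described in YAML and applied with kubectl apply -f. The filename namespace.yaml is just an assumption.

```yaml
# namespace.yaml (hypothetical filename)
apiVersion: v1
kind: Namespace
metadata:
  name: test   # the namespace passed with -n test throughout this guide
```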

Configmap1 (configmap1.yaml):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: html-configmap-1
data:
  index.html: |
    <html>
    <h1>Hello World!</h1>
    <br>
    <h1>Deployment One</h1>
    </html>
```

Configmap2 (configmap2.yaml):

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: html-configmap-2
data:
  index.html: |
    <html>
    <h1>Hello World!</h1>
    <br>
    <h1>Deployment Two</h1>
    </html>
```

To create the configmaps, use this command:

kubectl apply -f configmap1.yaml -n test

kubectl apply -f configmap2.yaml -n test

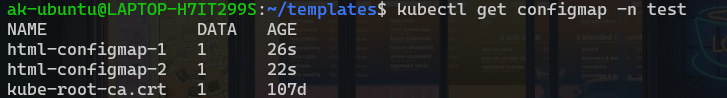

# To check the configmaps

kubectl get configmap -n test


Now we need to make the following changes in those deployments:

Here we have updated Deployment 1 with the label app: nginx1, and similarly Deployment 2 with app: nginx2. Distinct labels let each Service in the next step select the pods of exactly one deployment.

- Deployment 1:

```yaml
# File 1 (dep1.yaml)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx1
spec:
  selector:
    matchLabels:
      app: nginx1
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx1
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: index-file-1
              mountPath: /usr/share/nginx/html
      volumes:
        - name: index-file-1
          configMap:
            name: html-configmap-1
            items:
              - key: index.html
                path: index.html
```

- Deployment 2:

```yaml
# File 2 (dep2.yaml)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx2
spec:
  selector:
    matchLabels:
      app: nginx2
  replicas: 1
  template:
    metadata:
      labels:
        app: nginx2
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          volumeMounts:
            - name: index-file-2
              mountPath: /usr/share/nginx/html
      volumes:
        - name: index-file-2
          configMap:
            name: html-configmap-2
            items:
              - key: index.html
                path: index.html
```

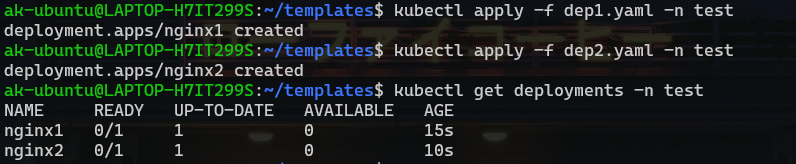

Let’s apply these on k8s

Run the following commands:

kubectl apply -f dep1.yaml -n test

kubectl apply -f dep2.yaml -n test

#To check the deployments

kubectl get deployments -n test

We’re halfway there, let’s keep going.

Step 3: Create the ClusterIP services

```yaml
# service1.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-1
spec:
  type: ClusterIP
  selector:
    app: nginx1
  ports:
    - port: 80
      targetPort: 80
```

This is a ClusterIP service that the Ingress will use as its backend to route traffic from our ingress path to specific pods.

Its selector is app: nginx1, which means it forwards traffic to the pods of Deployment 1.

A similar service for Deployment 2 selects the label app: nginx2:

```yaml
# service2.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service-2
spec:
  type: ClusterIP
  selector:
    app: nginx2
  ports:
    - port: 80
      targetPort: 80
```

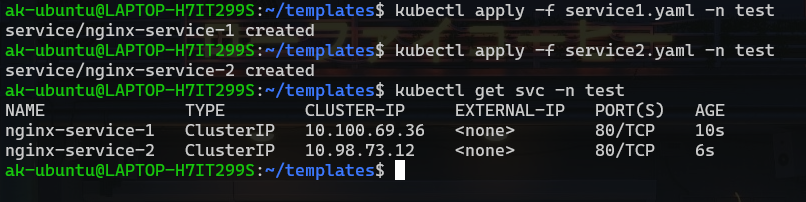

Create the services on k8s:

kubectl apply -f service1.yaml -n test

kubectl apply -f service2.yaml -n test

#To check the services:

kubectl get svc -n test

Note: A ClusterIP service does not get an external IP; it is reachable only from inside the cluster, which is why we put an Ingress in front of it.

Now let’s move forward to create the ingress resource and define our paths↘️

Step 4: Create the Ingress Resource:

Here we create an Ingress resource that routes traffic to our two ClusterIP services. I've set the host name to myapp.test.com; this is where we will access our application. You can use any name, since we are only running it locally for testing.

```yaml
# ingress 1 (ingress.yaml)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-service
  annotations:
    # kubernetes.io/ingress.class: "nginx"
    # nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: myapp.test.com
      http:
        paths:
          - path: /one
            pathType: Prefix
            backend:
              service:
                name: nginx-service-1
                port:
                  number: 80
          - path: /two
            pathType: Prefix
            backend:
              service:
                name: nginx-service-2
                port:
                  number: 80
```

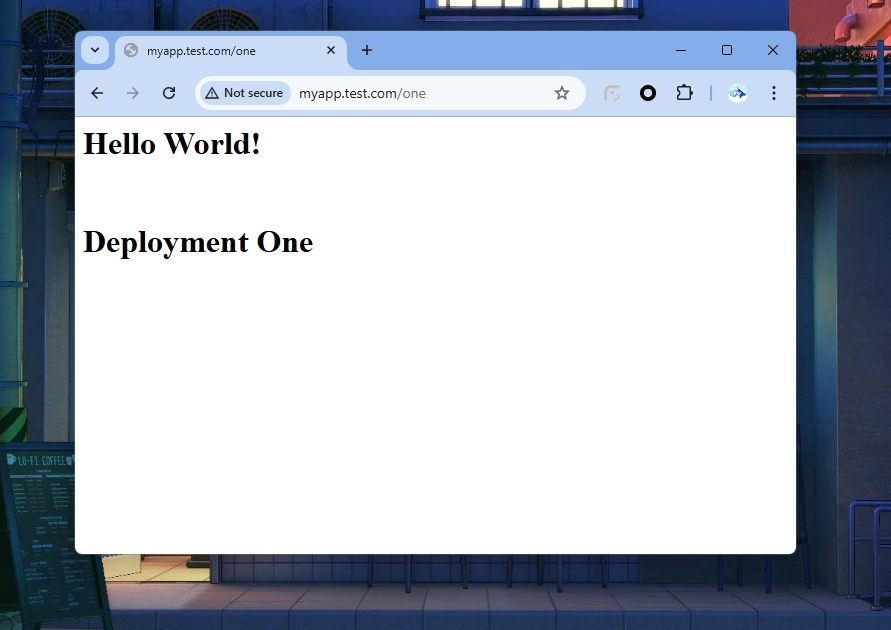

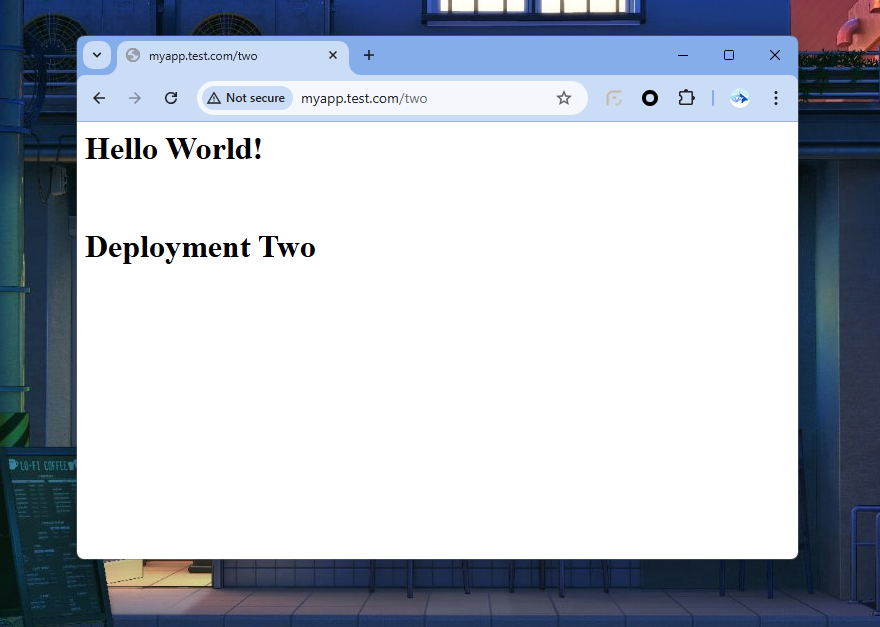

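With this configuration, any request to http://myapp.test.com/one is routed to the web page of Deployment 1, and any request to http://myapp.test.com/two is routed to the web page of Deployment 2. The rewrite-target: / annotation makes the NGINX pods receive these requests as /, so they serve their index.html; without it they would look for a file named one or two and return 404.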
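Because rewrite-target: / replaces the whole matched path with /, a deeper path such as /one/style.css would also reach the pod as /. The ingress-nginx documentation handles this with regex capture groups. Below is a sketch of that variant for our two paths; it also swaps the deprecated kubernetes.io/ingress.class annotation for the spec.ingressClassName field, assuming an IngressClass named nginx exists (the ingress-nginx manifest normally creates one).

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-service
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 is the second capture group: whatever follows /one/ or /two/
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx   # assumption: an IngressClass named "nginx" exists
  rules:
    - host: myapp.test.com
      http:
        paths:
          - path: /one(/|$)(.*)
            pathType: ImplementationSpecific   # regex paths need this pathType
            backend:
              service:
                name: nginx-service-1
                port:
                  number: 80
          - path: /two(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: nginx-service-2
                port:
                  number: 80
```

With this version, /one and /one/ both reach Deployment 1 as /, while /one/style.css reaches it as /style.css.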

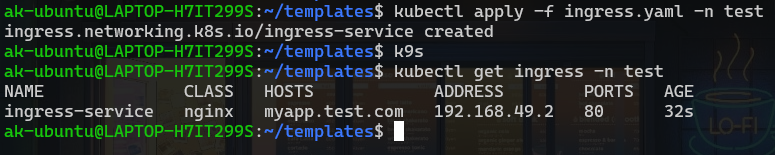

Apply the changes to k8s

kubectl apply -f ingress.yaml -n test

#To check the ingress resource

kubectl get ingress -n test

Testing our App 🖥️

Open a new WSL terminal and run minikube tunnel. It creates a network route on your machine to the cluster's LoadBalancer services, so we can reach the application from our PC via localhost (127.0.0.1).

minikube tunnel

If prompted for a password, enter your WSL user password.

Note: Keep this terminal running. DO NOT close it, or the tunnel will be disconnected ❌

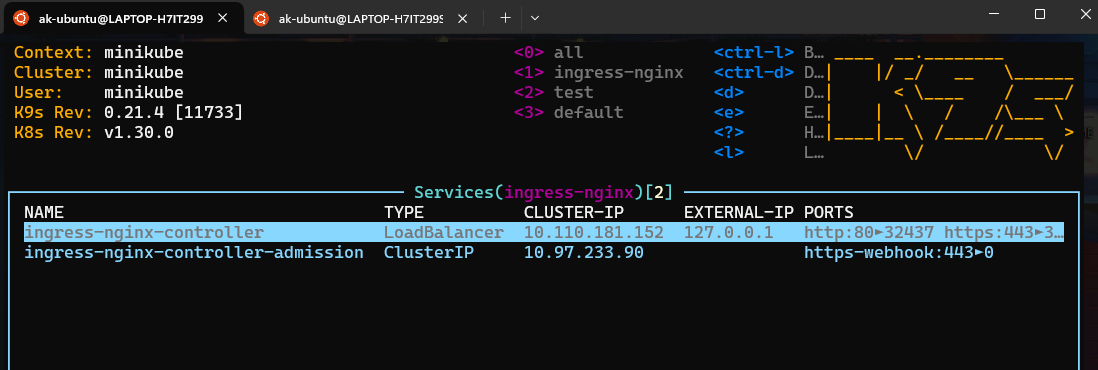

Now go back to the previous terminal and check the ingress-nginx-controller service created at the start; it should now show an external IP instead of pending. Here’s how it looks in my k9s.

You can run the following command to check the same:

kubectl get svc -n ingress-nginx -o wide

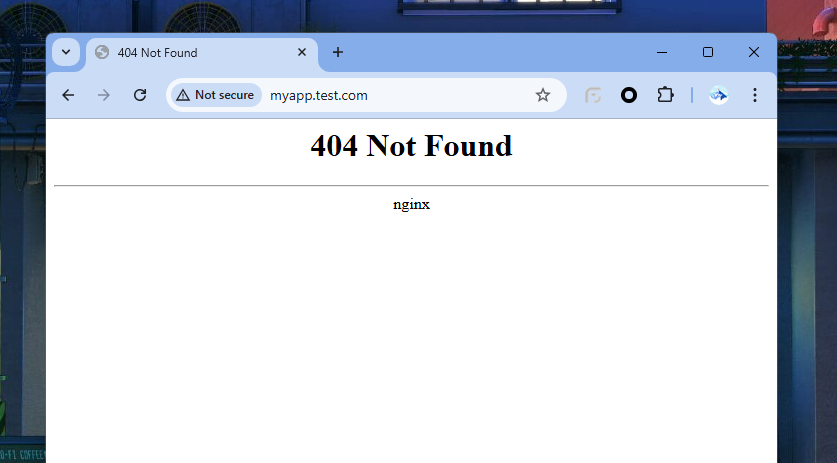

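Now open your favourite browser and go to our host, myapp.test.com. Since myapp.test.com has no public DNS record pointing at your cluster, you may need to map it to your machine by adding the line 127.0.0.1 myapp.test.com to your hosts file (/etc/hosts on Linux, C:\Windows\System32\drivers\etc\hosts on Windows).

You should get a 404, because no rule in our Ingress matches the “/” path.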
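If you would rather serve a page at / than a 404, one option is an extra catch-all entry in the paths list of ingress.yaml. In this sketch, sending / to nginx-service-1 is an assumption, not part of the original setup. Kubernetes gives precedence to the longest matching path, so /one and /two should still be handled by their own rules.

```yaml
        paths:
          # ...the existing /one and /two entries stay as they are...
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service-1   # assumption: reuse Deployment 1 as the default page
                port:
                  number: 80
```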

Now go to the /one path: myapp.test.com/one

You should see the page from Deployment 1 (Hello World! Deployment One).

Go to /two and you will see the page from the second deployment.

If you’ve made it this far, congratulations!! You’ve achieved the goal of our project.

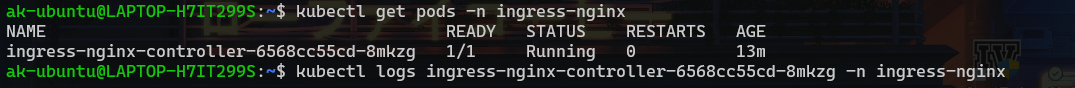

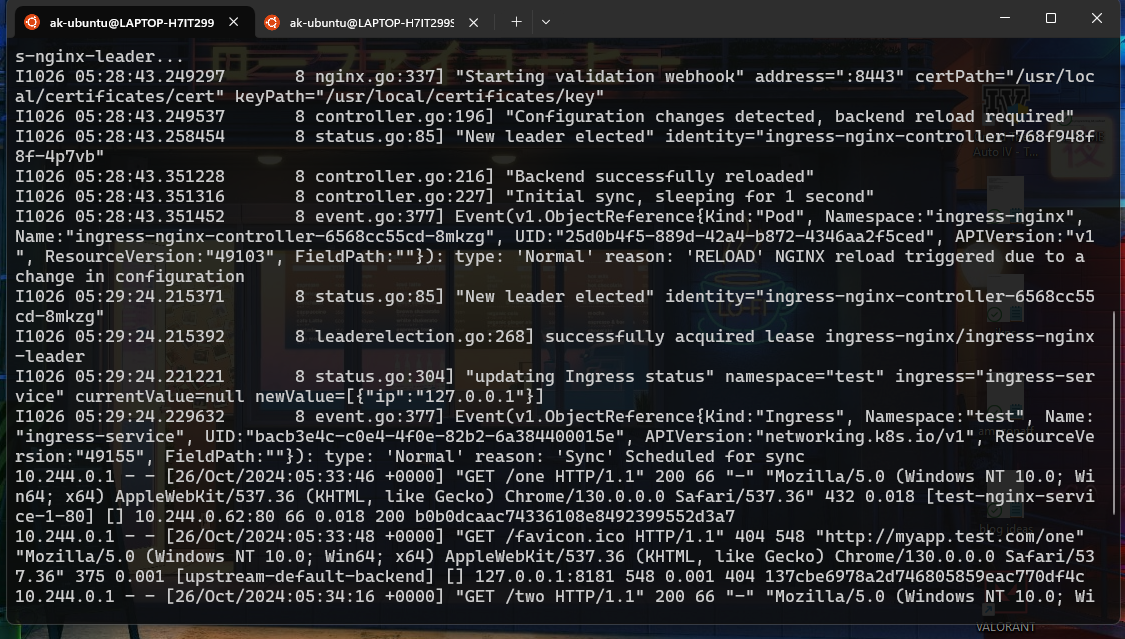

To check the logs of the ingress controller, first get the controller pod's name:

kubectl get pods -n ingress-nginx

Then fetch that pod's logs, replacing PODNAME with the name from the previous output:

kubectl logs PODNAME -n ingress-nginx

If you run into an error, these logs are the place to start debugging.

Advanced Features of NGINX Ingress 🌟

SSL/TLS Termination

To secure your application, you can use SSL/TLS termination. Here’s how to set it up:

- Create a TLS Secret in the same namespace as the Ingress that will reference it (add -n with your namespace if needed):

kubectl create secret tls example-tls --key /path/to/tls.key --cert /path/to/tls.crt

- Modify the Ingress Resource:

Note: this is just an example; it is not part of our project's YAML.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  tls:
    - hosts:
        - example.com
      secretName: example-tls
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 80
```
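The TLS Secret from the first step can also be written as a manifest. This is a sketch: the data values must be the base64-encoded contents of your certificate and key, shown here as placeholders rather than real values.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: example-tls   # must match spec.tls[].secretName in the Ingress
  # must live in the same namespace as the Ingress that references it
type: kubernetes.io/tls
data:
  tls.crt: <base64-encoded certificate>   # placeholder
  tls.key: <base64-encoded private key>   # placeholder
```

Running kubectl create secret tls example-tls --key /path/to/tls.key --cert /path/to/tls.crt --dry-run=client -o yaml prints a manifest of this shape with the values already encoded.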

Rate Limiting

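NGINX Ingress also supports rate limiting to prevent abuse. The annotations below allow each client IP at most 20 concurrent connections and 60 requests per minute:

```yaml
metadata:
  name: example-ingress
  annotations:
    nginx.ingress.kubernetes.io/limit-connections: "20"
    nginx.ingress.kubernetes.io/limit-rpm: "60"
```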
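Limits can also be expressed per second. The sketch below uses two other ingress-nginx annotations, limit-rps and limit-burst-multiplier; the values are arbitrary examples, and requests over the limit are rejected (with HTTP 503 by default, as far as I know).

```yaml
metadata:
  name: example-ingress
  annotations:
    # at most 5 requests per second from each client IP...
    nginx.ingress.kubernetes.io/limit-rps: "5"
    # ...with bursts of up to 5 x 3 = 15 requests before rejecting
    nginx.ingress.kubernetes.io/limit-burst-multiplier: "3"
```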

Troubleshooting Common Issues 🔧

- Check Logs: Use kubectl logs to check the logs of the Ingress controller.
- DNS Configuration: In a production environment, ensure your DNS is correctly set up to point to the Ingress controller’s IP.
- Annotations: Double-check annotations for correct syntax and values.

The most common issues are:

1. The minikube tunnel has been disconnected; restart it and keep its terminal open.

2. The ingress-nginx-controller service is not of type LoadBalancer or has no external IP.

3. The labels on the deployments do not match the selectors on the services.

4. Resources were created or checked in the wrong namespace.

Conclusion 🎯

Ingress is a powerful tool for managing external access to your Kubernetes services. By using an Ingress controller like NGINX, you can easily set up advanced routing, SSL termination, and load balancing. This makes your Kubernetes applications more robust, secure, and easier to manage.

Feel free to reach out with any questions or feedback! Happy Kubernetes-ing! 🚀


Written by

Aniket Kharpatil


Dynamic DevOps Engineer with expertise in Kubernetes, AWS Cloud, CICD pipelines, and GitOps, specializing in automation, monitoring, and scalable solutions. Skilled in optimizing workflows, managing production releases, and driving efficiency in cloud-native environments. As a lifelong learner and problem-solver, I thrive on tackling complex business challenges by leveraging the latest DevOps tools, best practices, and innovation.