Why Reinforcement Learning via Chain-of-Thought Misses the Point: Misguided, Optimisation-Driven AI Research

Gerard Sans

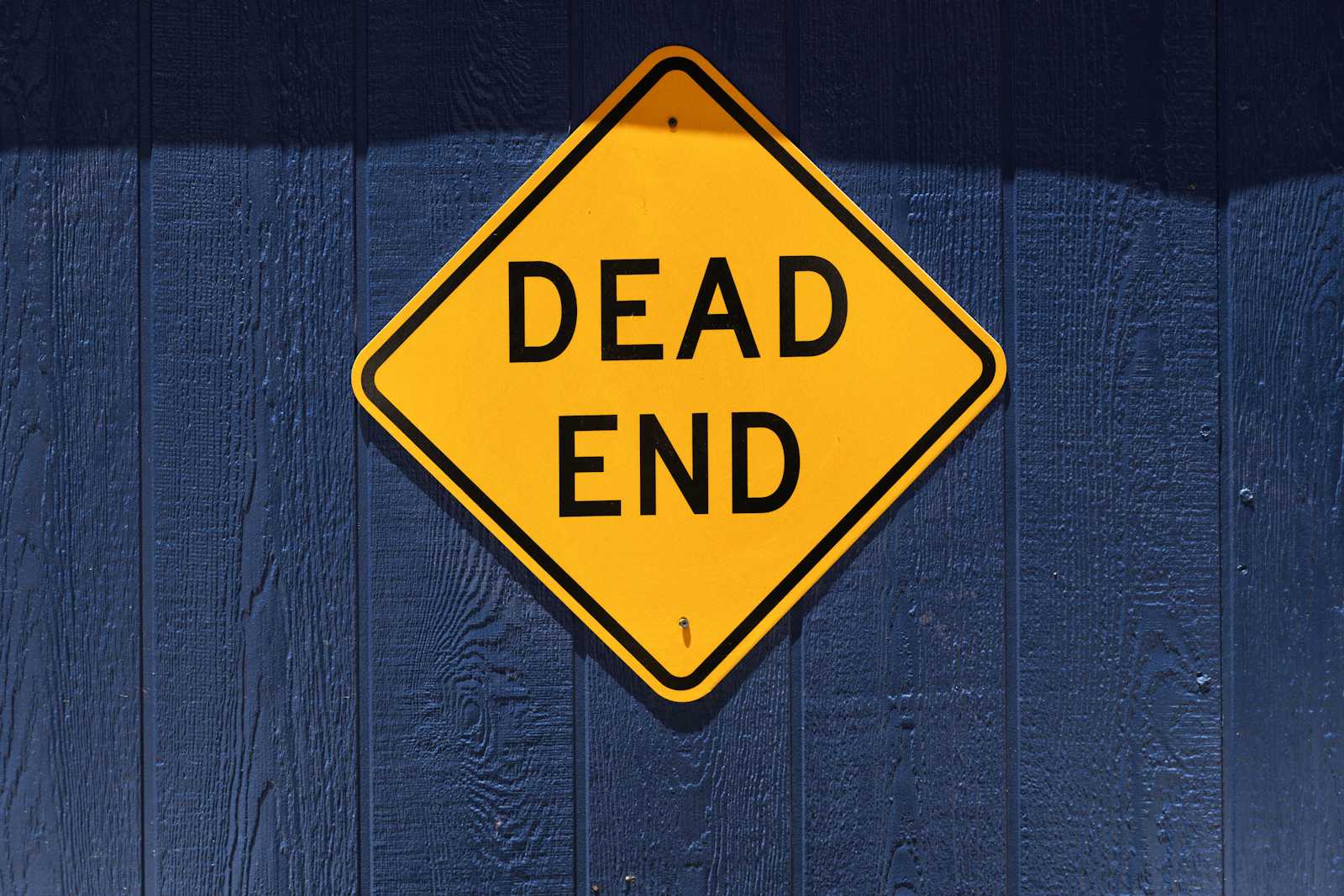

While artificial intelligence continues to make headlines with impressive benchmark scores, a troubling practice has taken root in AI research. Imagine a teacher who, instead of helping students understand the subject matter, simply hands them copies of upcoming exam questions. This is essentially what's happening with a popular AI training technique that uses something called 'Chain-of-Thought Reinforcement Learning.'

This approach might produce impressive scores on standardized AI tests, but it represents a fundamental betrayal of machine learning principles. It's not teaching AI to be smarter or more capable—it's teaching it to recognize and reproduce patterns specific to the tests it will face.

To put this in technical terms: researchers are applying Reinforcement Learning (RL) with Chain-of-Thought (CoT) prompting to standardized benchmarks, a practice that reflects a critical misunderstanding, namely the belief that prompt strategies can fundamentally alter a model's capabilities. Just as memorizing test answers doesn't expand a student's knowledge, any perceived gains from this approach are strictly limited to the model's latent space (the AI equivalent of its working knowledge), which remains bounded by its original training data.

The consequences are severe on both practical and technical levels. In educational terms, it's academic dishonesty masked as innovation. In AI terms, RL via CoT not only fails to improve true model performance but actively distorts the output space, creating a cascade of unintended consequences that ripple through the model's behavior. This isn't just cutting corners—it's compromising the integrity of AI development while hiding behind artificially inflated metrics.

Just as teaching to the test undermines genuine education in our schools, this approach threatens to create a generation of AI models that excel at benchmarks while failing at their fundamental purpose: developing true intelligence and understanding.

The Boundaries of Latent Space: Why Input Prompts Can't Expand AI Capabilities

Latent Space as the Core of AI Understanding

At the heart of any AI model's performance lies its latent space, a structured representation of the training data. This latent space determines the boundaries of what the model can understand and produce. No prompt strategy or input manipulation can push a model beyond these limits. For true advancement, improvements need to stem from high-quality training data that broadens this latent space, not from prompting tricks that merely shuffle its existing knowledge.
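
To make the idea of a bounded latent space concrete, here is a deliberately simplified sketch. It assumes a purely linear toy "decoder" with two hypothetical learned directions (`W_COLUMNS`); real models are nonlinear and vastly larger, so this is an analogy rather than a proof. The input `z` plays the role of the prompt: however it is chosen, the output can only be a combination of the directions the model has already learned.

```python
# Toy analogy only: a linear "decoder" with two hypothetical learned directions.
# Real models are nonlinear and far larger; this just illustrates the bounding idea.
from typing import List

Vector = List[float]

# Hypothetical learned weights: the two directions the model "knows".
W_COLUMNS: List[Vector] = [
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 1.0],
]

# Normal to the plane spanned by W_COLUMNS (their cross product).
NORMAL: Vector = [-1.0, -1.0, 1.0]


def decode(z: Vector) -> Vector:
    """Map a 2-D latent code z to a 3-D output as a weighted sum of W_COLUMNS."""
    out = [0.0, 0.0, 0.0]
    for coeff, column in zip(z, W_COLUMNS):
        for i in range(3):
            out[i] += coeff * column[i]
    return out


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def reachable(target: Vector, tol: float = 1e-9) -> bool:
    """A target is reachable only if it lies in the plane, i.e. has no NORMAL component."""
    return abs(dot(target, NORMAL)) < tol


if __name__ == "__main__":
    # Vary the input ("prompt") as much as we like: the output never leaves the plane.
    for z in ([1.0, 2.0], [-3.5, 0.25], [100.0, -42.0]):
        y = decode(z)
        print(z, "->", y, "| off-plane component:", dot(y, NORMAL))
    print("Can any input produce [0, 0, 1]?", reachable([0.0, 0.0, 1.0]))
```

Every printed output has a zero off-plane component, and a target such as `[0, 0, 1]` is unreachable no matter which `z` is supplied. Only changing `W_COLUMNS`, the analogue of training on new data, would change what the toy model can produce.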

Gradient Descent and the Reinforcement of Patterns

A model learns through optimization algorithms such as gradient descent, which adjust the model's parameters to capture correlations between inputs and outputs in the training data. For these patterns to be meaningful, the data must be broad and representative. Yet patterns also interact, sometimes competing for prominence in the model's response. Because models operate on reinforcement, not negation, efforts to "direct" them with prompts (e.g., "don't think of X") often backfire: mentioning X at all reinforces the very concept the prompt aims to suppress.
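
As a minimal illustration of learning correlations between inputs and outputs, the sketch below fits a two-parameter linear model with plain gradient descent on mean squared error. The data set (`TRAINING_DATA`, generated from `y = 2x + 1`) and the hyperparameters are hypothetical; the point is only that the learned parameters reflect whatever correlation the training data contain, and nothing more.

```python
# Minimal gradient descent on a linear model y ≈ w*x + b (toy example).
from typing import List, Tuple


def train(data: List[Tuple[float, float]], lr: float = 0.05, steps: int = 2000) -> Tuple[float, float]:
    """Fit w and b by minimising mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training data encoding the correlation y = 2x + 1.
TRAINING_DATA = [(x, 2 * x + 1) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]

if __name__ == "__main__":
    w, b = train(TRAINING_DATA)
    print(f"learned w={w:.3f}, b={b:.3f}")
```

Running it should print values very close to `w=2` and `b=1`: the parameters converge to the pattern present in the data, and the model has no way to represent a relationship the data never showed it.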

RL via CoT: A Shortcut that Misunderstands AI's Core

Hardcoding Prompts: The Missteps and Unintended Consequences

Using RL via CoT to guide models on benchmarks is, in effect, hardcoding a prompt strategy into the model's latent space. This is not only unnecessary but an affront to the AI principles of generality and adaptability. By embedding specific prompt strategies, researchers distort regions of the output space that have no natural association with the test data, introducing a cascade of unintended consequences. These distortions can affect unrelated activations within the model, causing an exponential ripple of effects across the output space, effects that are difficult to predict and control.
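
The sketch below is a toy model of that dynamic under loudly stated assumptions: a context-free softmax "policy" choosing between two hypothetical reasoning strategies, made-up benchmark rewards (`BENCHMARK_REWARD`), and the exact expected-reward gradient in place of the sampled RL updates used on real language models. It shows how optimising only for benchmark reward drives the policy to commit to one strategy, which it then applies regardless of the task.

```python
# Toy sketch: optimising a context-free policy for benchmark reward alone.
import math
from typing import Dict, List

# Hypothetical strategies and made-up average benchmark rewards.
STRATEGIES: List[str] = ["benchmark_style_cot", "free_form"]
BENCHMARK_REWARD: Dict[str, float] = {"benchmark_style_cot": 0.9, "free_form": 0.6}


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(steps: int = 500, lr: float = 1.0) -> List[float]:
    """Gradient ascent on expected benchmark reward; returns final strategy probabilities."""
    logits = [0.0 for _ in STRATEGIES]
    rewards = [BENCHMARK_REWARD[s] for s in STRATEGIES]
    for _ in range(steps):
        probs = softmax(logits)
        baseline = sum(p * r for p, r in zip(probs, rewards))  # expected reward
        # Exact gradient of expected reward w.r.t. each logit: p_k * (r_k - baseline).
        logits = [l + lr * p * (r - baseline) for l, p, r in zip(logits, probs, rewards)]
    return softmax(logits)


def choose_strategy(probs: List[float], task: str) -> str:
    # The policy ignores `task` entirely: whatever won on the benchmark is used everywhere.
    return STRATEGIES[max(range(len(probs)), key=lambda i: probs[i])]


if __name__ == "__main__":
    probs = train_policy()
    print(dict(zip(STRATEGIES, (round(p, 4) for p in probs))))
    for task in ["grade-school maths", "write a poem", "summarise an email"]:
        print(task, "->", choose_strategy(probs, task))
```

Because nothing in the reward refers to the task, the only thing the optimisation can learn is "use the strategy that scores on the benchmark", which is the toy analogue of a hardcoded prompt strategy.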

Artificial Performance Gains and Metric Manipulation

The primary motivation behind RL via CoT on standardized tests is to show metric improvement. But this improvement is largely illusory, as it reflects performance gains specific to the benchmark rather than a true expansion of model capabilities. This approach results in overfitting, where models are tailored to perform well on specific tasks without broader applicability. Essentially, it's an exercise in metric manipulation: adjusting the model's responses to pass specific tests without addressing its actual performance limitations.
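
A toy contrast makes the distinction between benchmark gains and real capability concrete. The example below is hypothetical and deliberately extreme: one "model" simply stores the answers to a small addition benchmark, while the other applies the underlying rule. Both score perfectly on the benchmark; only a held-out set tells them apart.

```python
# Toy contrast: memorising a benchmark vs. learning the underlying rule.
from typing import Callable, Dict, List, Tuple

Question = Tuple[int, int]
Dataset = List[Tuple[Question, int]]
Model = Callable[[Question], int]

# Hypothetical benchmark: small addition questions with known answers.
BENCHMARK: Dataset = [((a, b), a + b) for a in range(5) for b in range(5)]
# Held-out questions that never appear in the benchmark.
HELD_OUT: Dataset = [((a, b), a + b) for a in range(10, 15) for b in range(20, 25)]


def memorising_model(benchmark: Dataset) -> Model:
    """Return a 'model' that has only stored the benchmark's question-answer pairs."""
    table: Dict[Question, int] = {q: ans for q, ans in benchmark}

    def predict(q: Question) -> int:
        return table.get(q, 0)  # falls back to a fixed guess outside the benchmark

    return predict


def general_model(q: Question) -> int:
    """A model that has actually captured the underlying rule."""
    a, b = q
    return a + b


def accuracy(model: Model, dataset: Dataset) -> float:
    correct = sum(1 for q, ans in dataset if model(q) == ans)
    return correct / len(dataset)


if __name__ == "__main__":
    memo = memorising_model(BENCHMARK)
    for name, model in [("memorising", memo), ("general", general_model)]:
        print(f"{name}: benchmark={accuracy(model, BENCHMARK):.2f}, "
              f"held-out={accuracy(model, HELD_OUT):.2f}")
```

Both models reach 1.00 on the benchmark, but the memorising model scores 0.00 on the held-out questions. A benchmark score alone cannot distinguish the two, which is why held-out evaluation matters.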

The Ripple Effect: Distortions in the Output Space

The Exponential Explosion of Unintended Activations

The activations influenced by RL via CoT affect the model's output space in unpredictable ways, creating a cascading impact that extends far beyond the intended task. This exponential increase in unintended activations can produce misaligned responses in unrelated areas of the model's output. By overfitting to specific benchmarks, researchers introduce artifacts that distort the model's natural response structure, degrading performance on tasks that were previously unaffected.
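
The sketch below illustrates the underlying mechanism in the simplest possible setting, under stated assumptions: a hypothetical three-weight linear model, made-up inputs, and plain gradient descent on a single "benchmark" example. Because every input is scored with the same shared weights, tuning for one example also moves the outputs of other inputs that share its features. It demonstrates interference through shared parameters in miniature; it does not attempt to model how such effects scale in a large network.

```python
# Toy sketch: fine-tuning shared weights on one input shifts outputs for other inputs.
from typing import Dict, List

Vector = List[float]


def predict(w: Vector, x: Vector) -> float:
    """Linear score: every input is evaluated with the same shared weights."""
    return sum(wi * xi for wi, xi in zip(w, x))


def fine_tune(w: Vector, x: Vector, target: float, lr: float = 0.1, steps: int = 100) -> Vector:
    """Gradient descent on squared error for a single (x, target) pair."""
    w = list(w)  # copy so the original weights stay untouched
    for _ in range(steps):
        err = predict(w, x) - target
        # d/dw (err^2) = 2 * err * x
        w = [wi - lr * 2 * err * xi for wi, xi in zip(w, x)]
    return w


def output_shifts(w_before: Vector, w_after: Vector, inputs: Dict[str, Vector]) -> Dict[str, float]:
    """How much each input's output moved after fine-tuning."""
    return {name: predict(w_after, x) - predict(w_before, x) for name, x in inputs.items()}


# Hypothetical "pretrained" weights and inputs (made-up numbers).
W0: Vector = [0.5, -0.2, 0.3]
BENCHMARK_INPUT: Vector = [1.0, 1.0, 0.0]
OTHER_INPUTS: Dict[str, Vector] = {
    "task_a": [0.0, 1.0, 1.0],  # shares the second feature with the benchmark input
    "task_b": [1.0, 0.0, 1.0],  # shares the first feature
    "task_c": [0.0, 0.0, 1.0],  # shares no features
}

if __name__ == "__main__":
    W1 = fine_tune(W0, BENCHMARK_INPUT, target=2.0)
    print("benchmark output:", round(predict(W1, BENCHMARK_INPUT), 3))
    for name, shift in output_shifts(W0, W1, OTHER_INPUTS).items():
        print(f"{name}: output shifted by {shift:+.3f}")
```

In this setup the two inputs that overlap with the benchmark input both shift by about 0.85, even though neither was part of the fine-tuning, while the input with no overlapping features is left exactly where it was.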

Sacrificing Core AI Principles for Arbitrary Metrics

RL via CoT is ultimately a distraction from core AI principles. It focuses on short-term metrics, sacrificing the model's integrity and adaptability. When researchers rely on prompt strategies to create artificial gains, they dismiss the importance of a strong, generalizable latent space—a foundation built on quality training data and balanced patterns. Instead of genuine improvements, we're left with a patchwork of tweaks that degrade model performance and introduce noise into the AI's response space.

Conclusion: Reclaiming AI's Core for True Progress

The path to meaningful AI advancements lies in respecting foundational principles, not in shortcut strategies like RL via CoT that artificially inflate metrics. AI's potential is bound by the quality of its training data, not by superficial input adjustments. Researchers must prioritize data quality, representation, and the fundamental balance of reinforcement in model training to achieve real progress. True breakthroughs will come from honoring these principles rather than from pursuing metrics through methods that distort, rather than expand, the model's understanding.

Written by

Gerard Sans

I help developers succeed in Artificial Intelligence and Web3; Former AWS Amplify Developer Advocate. I am very excited about the future of the Web and JavaScript. Always happy Computer Science Engineer and humble Google Developer Expert. I love sharing my knowledge by speaking, training and writing about cool technologies. I love running communities and meetups such as Web3 London, GraphQL London, GraphQL San Francisco, mentoring students and giving back to the community.