An Overview into Generative Adversarial Networks

Arya M. Pathak

Arya M. Pathak

Welcome to the fascinating world of Generative Adversarial Networks (GANs)! As engineering students, we often come across amazing technologies that leave us in awe, and GANs are one of them. Imagine building a model that can generate images of realistic objects, create original music, or even draw landscapes. Through this post, we’ll get into the fundamentals of how GANs work, how they differ from other machine learning models, and the detailed inner workings that make GANs one of the most innovative AI models of our time.

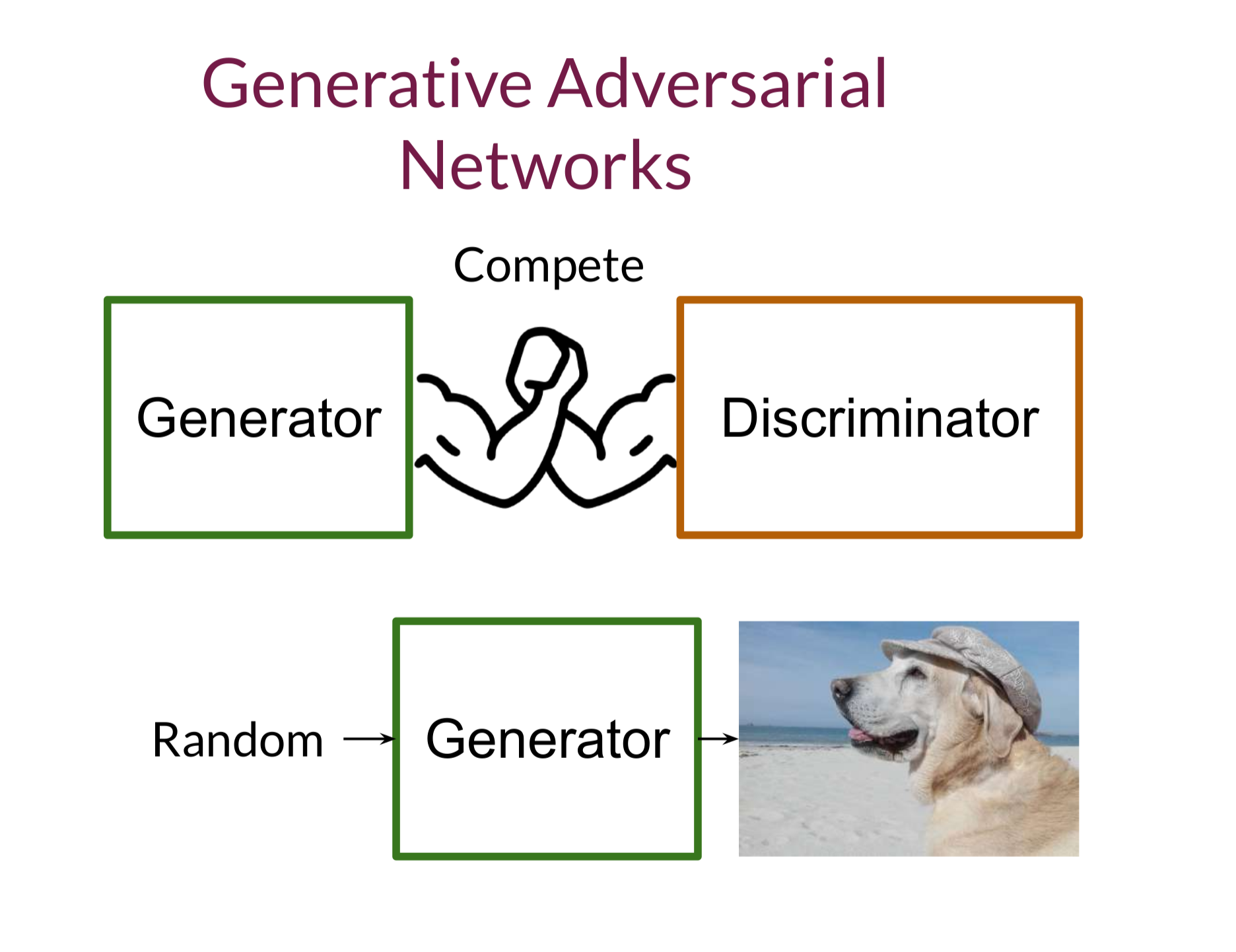

In a GAN setup, two networks compete and learn from each other: the Generator, which tries to produce realistic outputs, and the Discriminator, which learns to identify if an output is real or fake. This adversarial process is where the "adversarial" in GANs comes from. As we go deeper, we’ll explore various types of generative models and GAN’s close relative, Variational Autoencoders (VAEs), giving you a complete view of how these models operate. By the end of this post, you’ll have a clear understanding of GANs and be ready to build a model that can generate handwritten digits.

This blog is built on insights and resources from deeplearning.ai.

The Basics: Generative Models vs. Discriminative Models

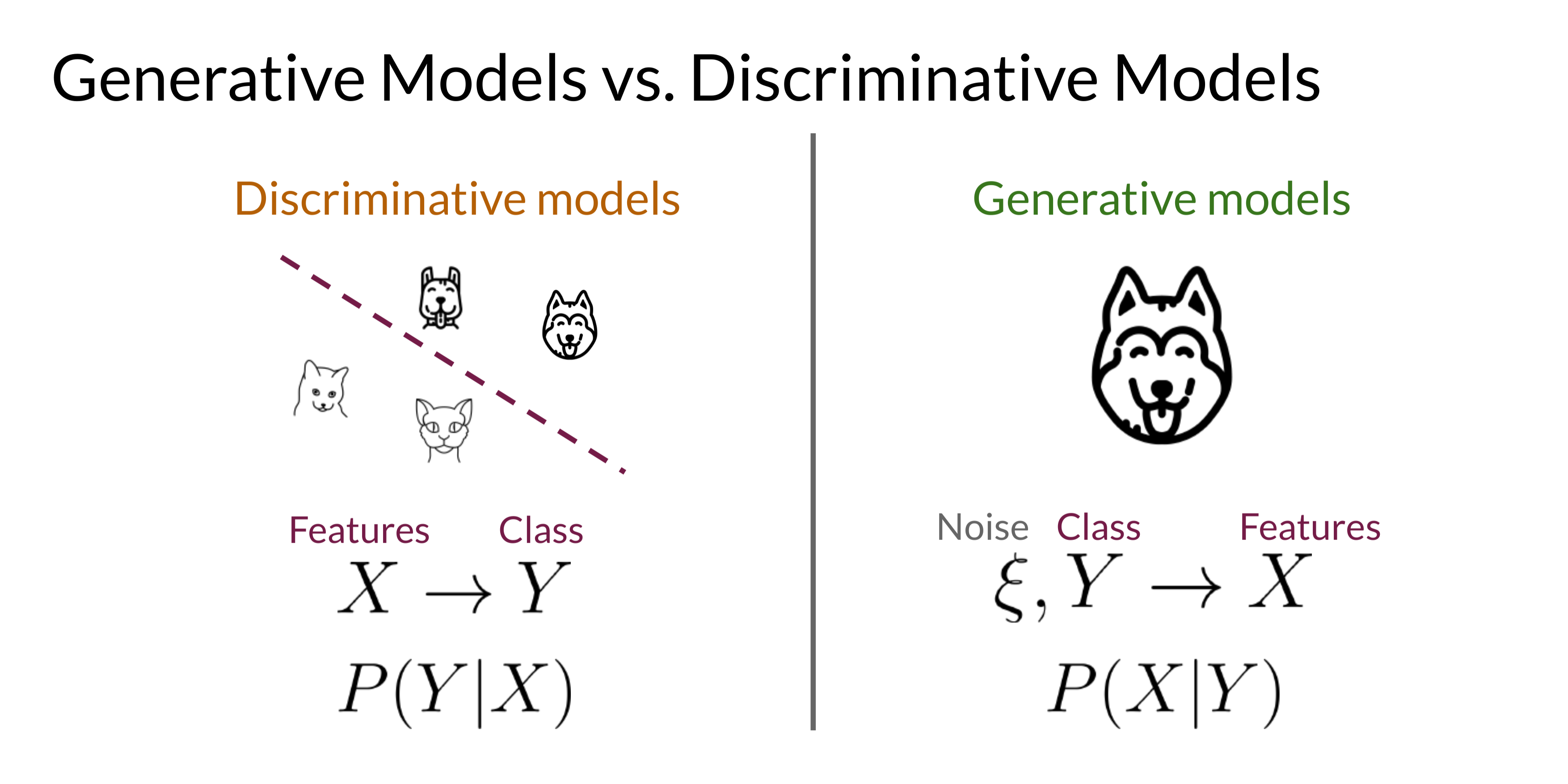

In machine learning, you might already be familiar with discriminative models. Discriminative models are typically used for classification tasks, learning to distinguish between classes, such as identifying images of cats versus dogs. They work by finding the probability of a class given certain features (e.g., if it has a wet nose and purrs, it’s likely a cat).

Generative models are a bit different. Instead of classifying or distinguishing between objects, generative models learn to create data that resembles real-world data. In other words, they generate new instances of data by learning the patterns in the data they’re trained on. This is achieved by modeling the probability distribution of features in the data.

Consider a generative model trained on images of dogs. Given a random noise input, the model would try to generate a new image of a dog with realistic features, like a wet nose or floppy ears. By generating new, diverse images based on this probability distribution, the model can produce images of various dog breeds without needing real input images.

Introduction to Variational Autoencoders (VAEs)

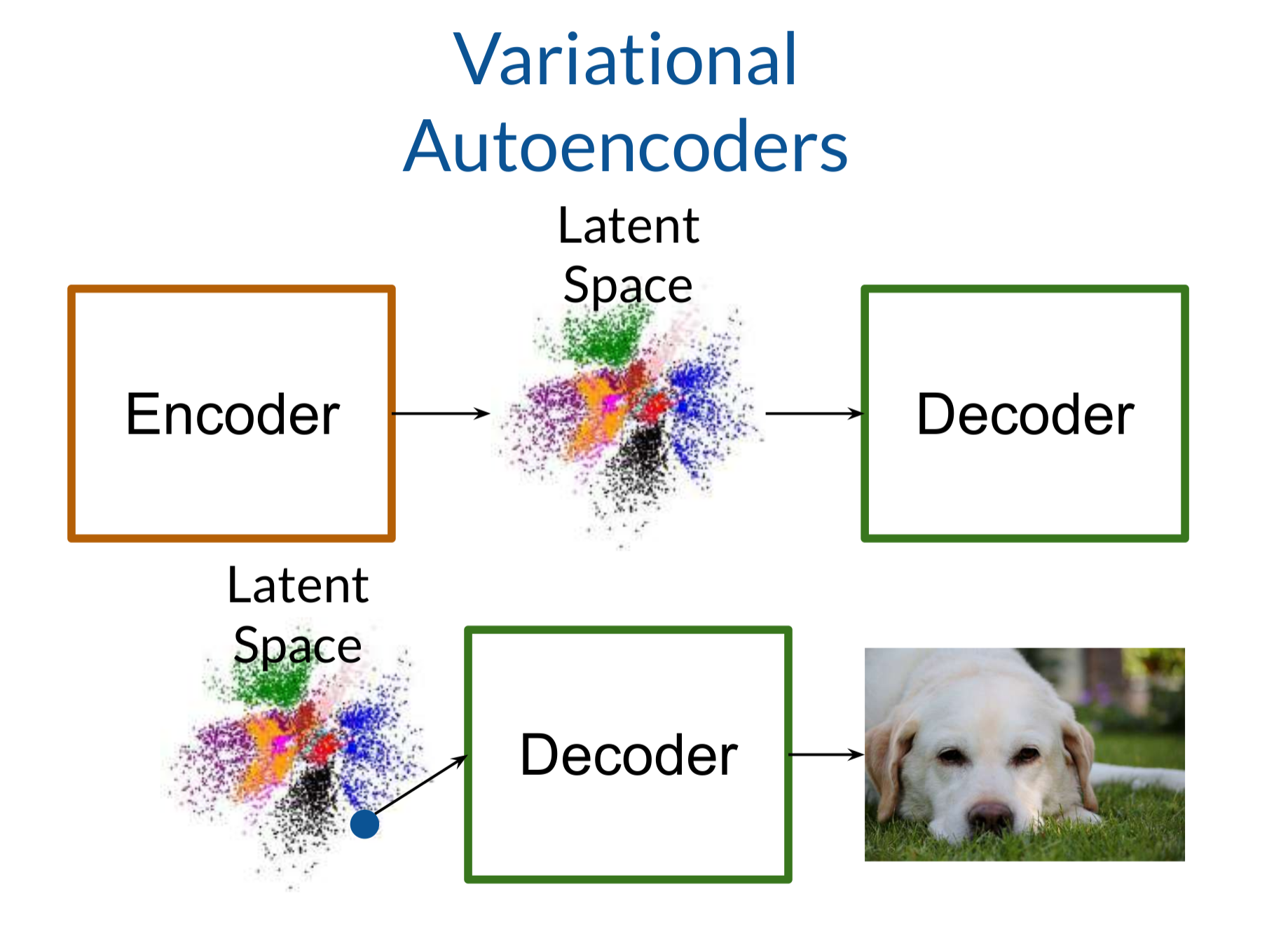

Before we dive into GANs, let’s look at one of their cousins: Variational Autoencoders (VAEs). VAEs are also generative models, and they work using two neural networks: an encoder and a decoder. The encoder’s job is to take in a real image (say, of a dog) and map it into a "latent space," which is a compressed representation of that image. In this space, the image data is represented by a vector of numbers, such as [6.2, -3, 21]. The decoder then takes this vector and tries to reconstruct the original image of the dog.

What makes VAEs unique is that they introduce a bit of randomness into this latent space. Instead of mapping each image to a fixed point, the encoder maps it to a probability distribution. During decoding, the model samples from this distribution, allowing it to generate diverse versions of the image. This noise injection makes the VAE produce different variations, such as dogs with varied fur lengths, colors, or poses, from the same latent point.

Getting to Know GANs: How GANs Work

GANs were introduced by Ian Goodfellow in 2014 and have since transformed the field of generative models. GANs use two primary components that learn together in a kind of competitive setup:

Generator: The generator’s goal is to create realistic images that can "fool" the discriminator.

Discriminator: The discriminator's role is to identify whether an image is real (from the training set) or fake (produced by the generator).

The generator and discriminator are two neural networks competing in a zero-sum game where the generator wants to create convincing images, while the discriminator tries to catch the fakes. Over multiple training cycles, this back-and-forth improves the capabilities of both models.

Detailed Training Process of GANs: The Adversarial Game

The training of GANs can be understood through the following steps:

Initial Training of the Discriminator: At the beginning, we provide the discriminator with real images and some random "fake" images from the generator. Since the generator hasn’t been trained, these fakes are usually very poor in quality – think of them as beginner’s scribbles. The discriminator learns to distinguish real images from these obvious fakes, scoring each image as real or fake.

Training the Generator: The generator takes random noise (a vector of random numbers, like

[-1.3, 2.5, 0.8]) and converts it into an image. At first, these images look nothing like real data, but after each round of feedback, the generator learns how to make its output look more realistic.Back-and-Forth Training: The generator and discriminator are trained in turns. The discriminator scores the generator’s outputs, labeling them real or fake. Based on the feedback, the generator improves by learning which aspects of its output look more real, gradually refining its images.

Reaching a Stalemate: The competition continues until the generator produces images that are so convincing that even the discriminator has difficulty identifying them as fake. At this point, the generator has become quite skilled at creating realistic images, and we consider the GAN trained.

Types of GANs and Their Applications

GANs have many variations, each suited for different applications. Here are a few popular ones:

CycleGAN: This GAN type is used for image translation. For instance, CycleGAN can take a sketch of a landscape and transform it into a photorealistic version. Artists and designers often use CycleGANs to enhance their work by generating realistic images from simple sketches.

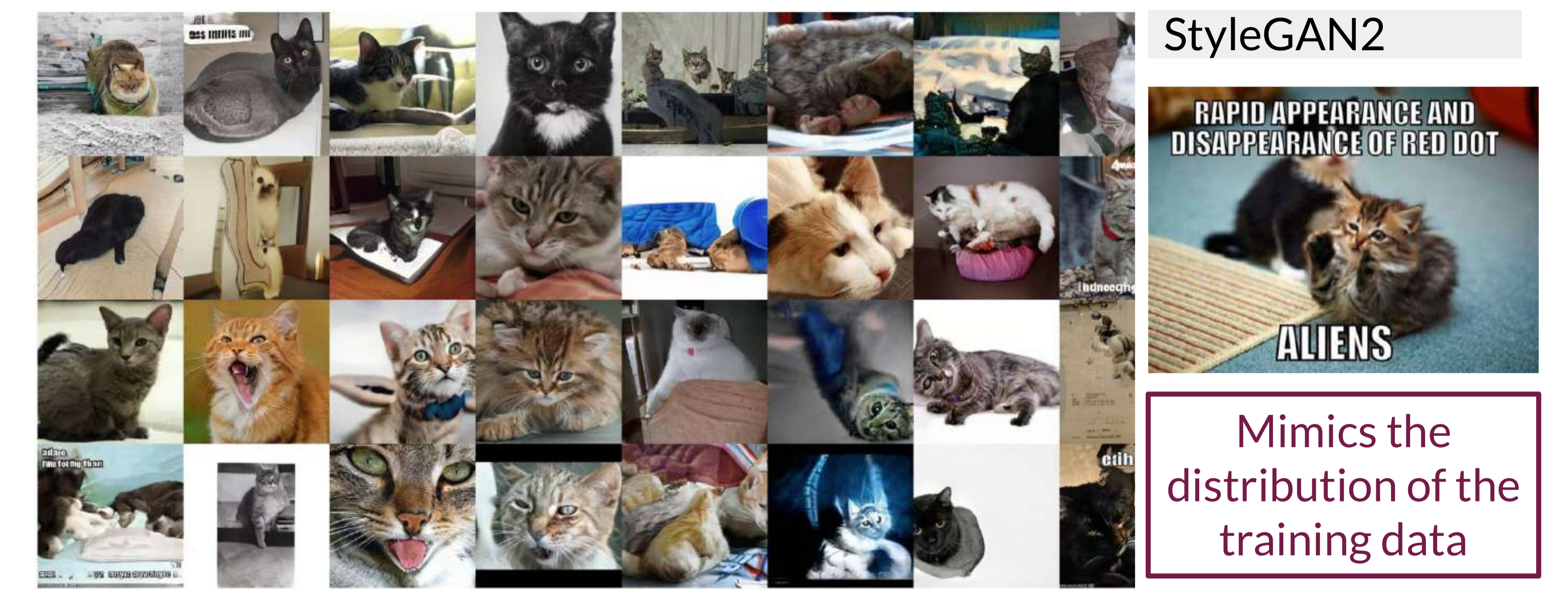

StyleGAN: StyleGANs, developed by Nvidia, are among the most advanced types of GANs for face generation. They can produce hyper-realistic images of human faces that don’t exist. This GAN type is used to generate faces for simulations, avatars, and visual effects in movies.

3D-GAN: This GAN variation generates 3D models. It’s useful in fields like game development and virtual reality, where developers need realistic 3D objects. 3D-GANs are also popular in architecture and product design, creating realistic 3D visualizations.

How GANs Differ from VAEs: A Quick Comparison

While both GANs and VAEs are generative models, their approaches to creating images are different:

Variational Autoencoders (VAEs): VAEs use an encoder-decoder structure. The encoder compresses real data into a latent space, and the decoder reconstructs it. VAEs add noise, so each generated image is slightly unique but still recognizable as part of the target class.

Generative Adversarial Networks (GANs): GANs use two competing networks. The generator doesn’t rely on any fixed latent space representation but instead learns to mimic real data by "fooling" the discriminator.

Real-Life Applications of GANs

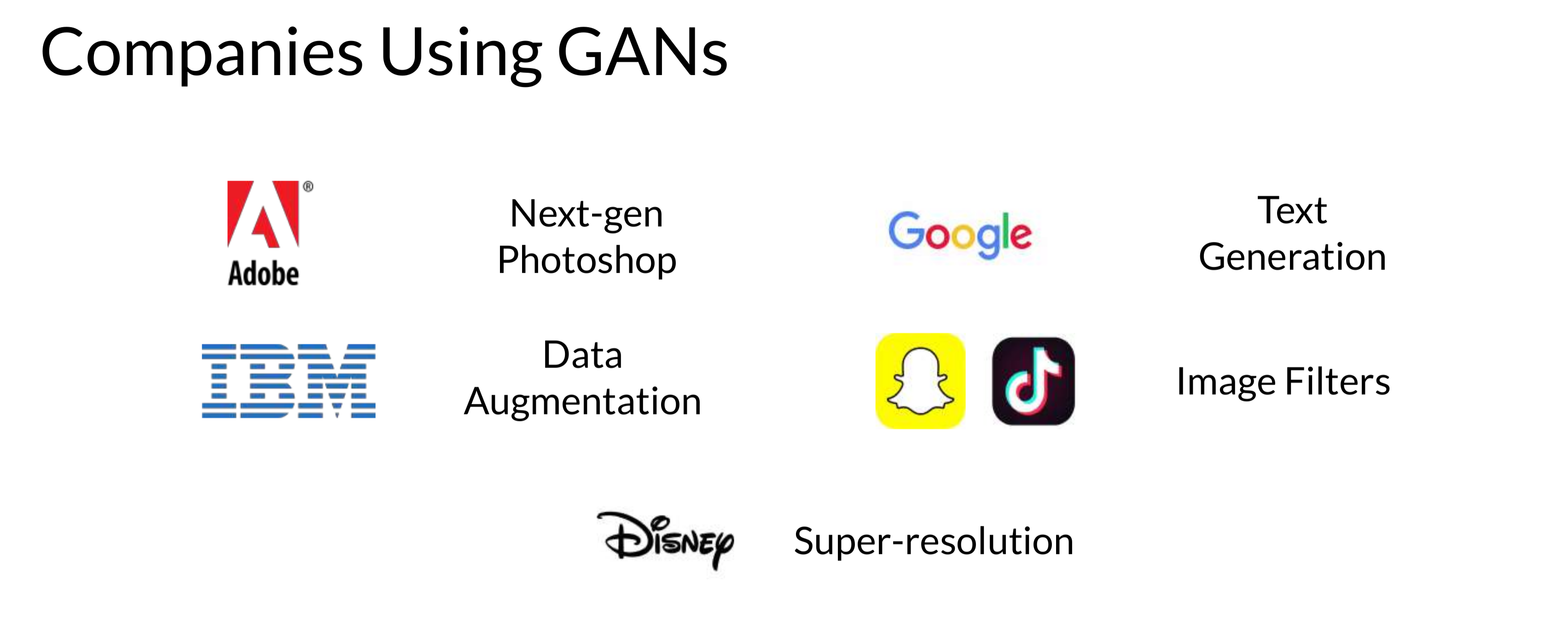

GANs have broad applications across different fields. Here’s a breakdown of some areas where GANs are making a significant impact:

Art and Design: GANs can create art that looks like human-made artwork. Artists use GANs to produce paintings in various styles, while companies use GANs for logo design and branding.

Healthcare: GANs are used to generate synthetic medical images, which are essential for training medical AI models without compromising patient privacy.

Data Augmentation: GANs generate additional data for training machine learning models. This is useful in fields with limited data, like certain medical and environmental research areas, where GANs can generate realistic images or sensor readings.

Text and Image Super-Resolution: GANs can upscale images, making them clearer and more detailed. This technology is widely used in apps like Photoshop and in media for remastering old films or enhancing satellite images.

Conclusion

GANs represent one of the most exciting developments in AI, allowing machines to generate realistic images, text, music, and much more. With GANs, we’re teaching computers to be creative in ways that previously seemed impossible. From the generator and discriminator’s competitive training to the broad applications of GANs in industries ranging from entertainment to medicine, these networks have already started to transform our world.

In the future, GANs will continue to evolve, making AI-generated content more prevalent and realistic. For those passionate about machine learning and AI, GANs offer a gateway into cutting-edge research and practical applications. This post just scratches the surface, so if you’re inspired to learn more, dive deeper into the exciting possibilities of GANs and generative AI!

For further reading and resources, check out deeplearning.ai.

Subscribe to my newsletter

Read articles from Arya M. Pathak directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Arya M. Pathak

Arya M. Pathak

I am a Computer Engineering undergraduate at Vishwakarma Institute of Technology, Pune . With hands-on experience in software development and cloud-native applications, I specialize in Python, Go, C#, and full-stack web development using MERN, ASP.NET Core, and Angular. I have interned at Alemeno and CodingKraft, where I developed AI-driven compliance systems, Python execution engines, and secure web solutions. My projects include scalable microservices, machine learning pipelines, and secure banking APIs deployed on cloud platforms like Azure with Kubernetes and CI/CD automation. Adept in tools like Docker, Redis, RabbitMQ, and GitHub Actions, I am certified in Deep Learning and DevOps. I have a strong foundation in algorithms, having solved over 350 coding problems across platforms like Leetcode and Codeforces. Additionally, I actively contribute to open-source projects, mentoring initiatives, and hackathons.