How To Run Static Analysis On Your CI/CD Pipelines Using AI

Atulpriya Sharma

“An inadvertent misconfiguration during a setup left a data field blank, which then triggered the system to automatically delete the account.” That was Google's explanation for accidentally deleting a pension fund's entire account.

Incidents like these highlight the importance of configuration accuracy in modern software systems. A simple misconfiguration can have devastating consequences, particularly in CI/CD pipelines.

Ensuring configuration accuracy and managing the complexity of code reviews can be daunting for DevOps engineers. Teams often prioritize feature development, leaving configuration reviews as an afterthought. This can lead to unnoticed misconfigurations, causing production issues and downtime.

CodeRabbit helps solve this by automating code reviews with AI-driven analysis and real-time feedback. Unlike other tools requiring complex setups, CodeRabbit integrates seamlessly into your pipeline, ensuring that static checks on configuration files are accurate and efficient.

In this blog post, we will look at CodeRabbit and how it helps with static checking in CI/CD Pipelines, ensuring configuration quality and improving efficiency throughout the end-to-end deployment process.

Why Static Checking is Crucial in CI/CD Pipelines

Configuration files are the backbone of CI/CD pipelines that control the deployment of infrastructure and applications. Errors in these files can lead to costly outages and business disruptions, making early validation essential. Static checking is vital in mitigating security vulnerabilities, code quality issues, and operational disruptions.

Below is an example of a CircleCI configuration file that sets up a virtual environment, installs the required dependencies, and runs linting commands.

    jobs:
      lint:
        docker:
          - image: circleci/python:3.9
        steps:
          - checkout
          - run:
              name: Install Dependencies
              command: |
                python -m venv venv
                . venv/bin/activate
                pip install flake8
          - run:
              name: Run Linting
              command: |
                . venv/bin/activate
                flake8 .

Without the static checks in the above configuration, syntax errors and style violations in the Python code could slip through and fail the build at a later stage. Similarly, missing dependencies or improperly formatted code could lead to runtime errors that break deployment pipelines or introduce hard-to-trace bugs in production.

Overall, static checking helps with:

- Early error detection: A static check identifies syntax errors and misconfigurations in code before execution, reducing the likelihood of runtime failures.

- Enforcing coding standards: Static checks keep code quality consistent by enforcing style guidelines and best practices across code and configuration files, making changes easier to maintain and review.

- Enhancing code quality: Static checks help enforce criteria such as passing tests or a minimum percentage of code coverage, which must be met before any deployment, thus improving overall quality.
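The last point, a quality gate that must pass before deployment, can be sketched as an extra job in the CircleCI configuration shown earlier. This is a minimal sketch under stated assumptions, not a recommended setup: the package name `app` and the 80% threshold are hypothetical, and it assumes the project's tests run with pytest and the pytest-cov plugin.

```yaml
version: 2.1

jobs:
  test:
    docker:
      - image: circleci/python:3.9
    steps:
      - checkout
      - run:
          name: Install test dependencies
          command: |
            python -m venv venv
            . venv/bin/activate
            pip install pytest pytest-cov
      - run:
          name: Run tests with a coverage gate
          command: |
            . venv/bin/activate
            # "app" is a hypothetical package name; 80 is an assumed threshold.
            # The step fails if any test fails or coverage drops below 80%.
            pytest --cov=app --cov-fail-under=80

workflows:
  main:
    jobs:
      - test
```

Because the gate is an ordinary step, a failure stops the pipeline before any later deployment job runs.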

Using CodeRabbit For Static Checking

CodeRabbit gives an edge by integrating with your CI/CD workflows and identifying common misconfigurations. This capability is crucial for maintaining the integrity of the deployment process and preventing disruptions that could affect end-users.

In addition, CodeRabbit runs static analysis and linting automatically, out of the box, with no additional configuration. It integrates with your CI/CD pipelines without causing any disruption, so DevOps teams can concentrate on development rather than complicated settings.

It supports popular platforms such as GitHub, GitLab, and CircleCI, and runs checks with tools such as actionlint, yamllint, and ShellCheck, along with checks on CircleCI pipeline configurations. This simplifies setup and provides quick results without additional manual effort.

For tools like Jenkins and GitHub Actions, CodeRabbit continuously runs static analysis on every build or commit, catching misconfigurations early and improving workflow reliability.

Let us look at CodeRabbit in action in the following section.

Detecting Misconfiguration with CodeRabbit and GitHub Actions Actionlint

To demonstrate the functionality of CodeRabbit, let us look at how we integrate a GitHub Actions workflow into a project to automate the CI/CD pipeline. The repository has a configuration file with potential errors, which will be flagged and reported by CodeRabbit.

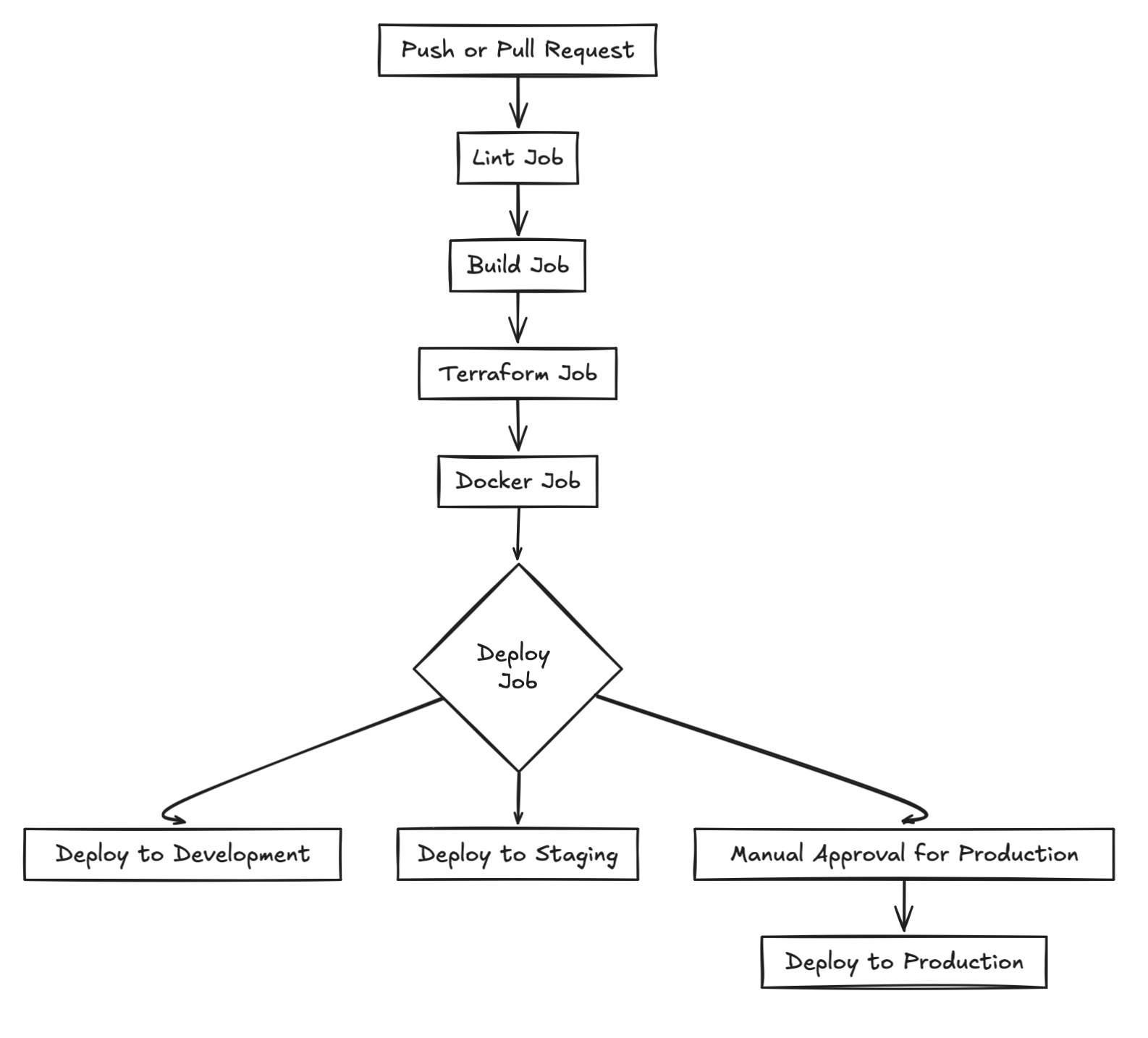

Below is a diagram of the sequence of tasks in the workflow file we created.

Once the repository was prepared, we integrated it with CodeRabbit to set up automated code reviews. Submitting a pull request then allowed CodeRabbit to review the file and detect potential misconfigurations automatically. The result is a comprehensive, structured report consisting of the following key sections.

- Summary – A concise overview of the key changes detected in your code or configuration. This helps you quickly understand the main areas that need attention.

- Walkthrough – A detailed, step-by-step analysis of the reviewed files, guiding you through specific issues, configurations, and recommendations.

- Table of Changes – A table listing all changes in each file and a change summary. This helps you quickly assess and prioritize necessary actions.

You can customize these sections by tweaking the configuration file or using the CodeRabbit dashboard. Refer to our CodeRabbit configuration guide to learn more.
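As a rough illustration, a repository-level configuration file might look like the sketch below. It assumes the file is named `.coderabbit.yaml` and sits at the repository root, and the key names reflect our understanding of the configuration schema at the time of writing; treat them as assumptions and verify them against the configuration guide before use.

```yaml
# .coderabbit.yaml (sketch; key names are assumptions, check the configuration guide)
language: "en-US"
reviews:
  # Review tone; "chill" is assumed to be the less strict profile.
  profile: "chill"
  # Include the high-level summary section in the review.
  high_level_summary: true
  # Turn off the poem if the team prefers a more compact report.
  poem: false
  tools:
    # Linters relevant to CI/CD configuration files.
    actionlint:
      enabled: true
    yamllint:
      enabled: true
    shellcheck:
      enabled: true
```

Keeping this file in version control means changes to the review setup go through pull requests like any other configuration change.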

Here is the sample workflow.yaml configuration file that CodeRabbit reviewed to produce its detailed insights and recommendations.

    name: development task

    on:
      push:
        branches:
          - main
          - develop
          - staging
      pull_request:
        branches:
          - main
          - develop
          - staging

    jobs:
      lint:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v3
          - name: Lint workflow YAML files
            uses: rhysd/actionlint@v1
          - name: Setup Node.js
            uses: actions/setup-node@v3
            with:
              node-version: '18'
          - name: Install dependencies
            run: npm install
          - name: Lint JavaScript code
            run: npm run lint

      build:
        runs-on: ubuntu-latest
        needs: lint
        steps:
          - name: Checkout code
            uses: actions/checkout@v3
          - name: Setup Node.js
            uses: actions/setup-node@v3
            with:
              node-version: '18'
          - name: Install dependencies and cache
            uses: actions/cache@v3
            with:
              path: ~/.npm
              key: ${{ runner.os }}-node-${{ hashFiles('package-lock.json') }}
              restore-keys: |
                ${{ runner.os }}-node-
            run: npm install
          - name: Run tests
            run: npm test
          - name: Check for vulnerabilities
            run: npm audit --production

      terraform:
        runs-on: ubuntu-latest
        needs: build
        steps:
          - name: Checkout code
            uses: actions/checkout@v3
          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v2
            with:
              terraform_version: 1.5.0
          - name: Terraform init
            run: terraform init
            working-directory: infrastructure/
          - name: Terraform plan
            run: terraform plan
            working-directory: infrastructure/
          - name: Terraform apply (development)
            if: github.ref == 'refs/heads/develop'
            run: terraform apply -auto-approve
            working-directory: infrastructure/
            env:
              AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
              AWS_SECRET_ACCES_KEY: ${{ secrets.AWS_SECRET_ACCES_KEY }}

      docker:
        runs-on: ubuntu-latest
        needs: terraform
        steps:
          - name: Checkout code
            uses: actions/checkout@v3
          - name: Login to AWS ECR
            id: login-ecr
            uses: aws-actions/amazon-ecr-login@v1
            with:
              region: us-east-1
          - name: Build and tag Docker image
            run: |
              IMAGE_TAG=${{ github.sha }}
              docker build -t ${{ secrets.ECR_REGISTRY }}/my-app:latest .
              echo "IMAGE_TAG=$IMAGE_TAG" >> $GITHUB_ENV
          - name: Push Docker image to AWS ECR
            run: |
              IMAGE_TAG=${{ env.IMAGE_TAG }}
              docker push ${{ secrets.ECR_REGISTRY }}/my-app:$IMAGE_TAG

      deploy:
        runs-on: ubuntu-latest
        needs: docker
        environment: production
        steps:
          - name: Deploy to Development
            if: github.ref == 'refs/heads/develop'
            run: |
              echo "Deploying to development environment"
              # Your deployment script here
          - name: Deploy to Staging
            if: github.ref == 'refs/heads/staging'
            run: |
              echo "Deploying to staging environment"
              # Your deployment script here
          - name: Manual Approval for Production
            if: github.ref == 'refs/head/main'
            uses: hmarr/auto-approve-action@v2
            with:
              github-token: ${{ secrets.GITHUB_TOKEN }}
          - name: Deploy to Production
            if: github.ref == 'refs/heads/main'
            run: |
              echo "Deploying to production environment"
              # Your deployment script here

Before getting into the code review, here’s a high-level overview of what the workflow file accomplishes:

- Triggers the CI/CD pipeline on pushes and pull requests to the main, develop, and staging branches, ensuring continuous integration.

- Runs a lint job that checks the workflow YAML files, installs the application's dependencies, and lints the JavaScript code.

- Executes tests to validate the functionality of the application and checks for vulnerabilities, ensuring the code is secure and stable.

- Sets up Terraform to manage and provision the cloud infrastructure needed for the application.

- Builds and tags a Docker image for the application, preparing it for deployment.

- Pushes the Docker image to AWS Elastic Container Registry (ECR), enabling easy access for deployment.

- Deploys the application to different environments (development, staging, and production) based on the branch, including a manual approval step for production deployments to ensure control and oversight.

Having examined the workflow.yaml configuration file and its components, we now look at the individual sections of the CodeRabbit report, starting with the summary.

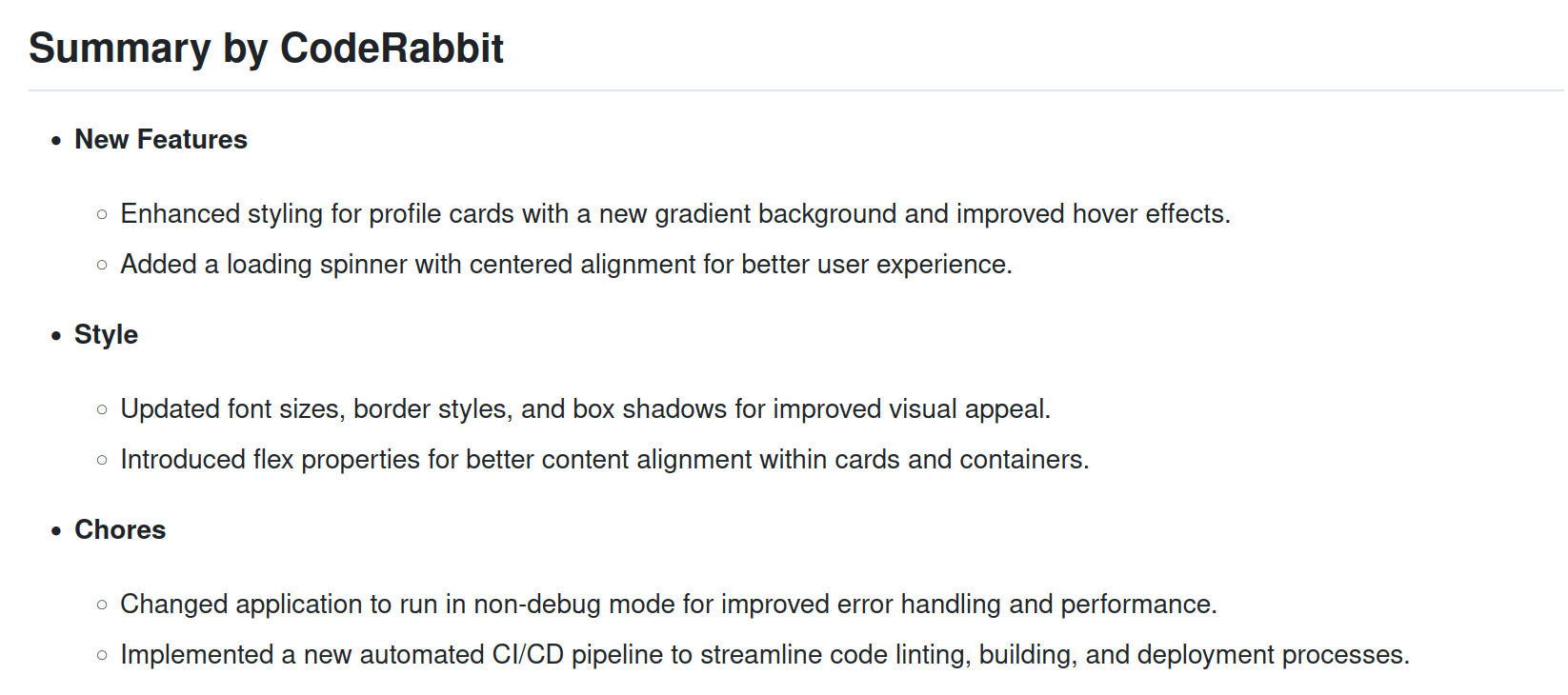

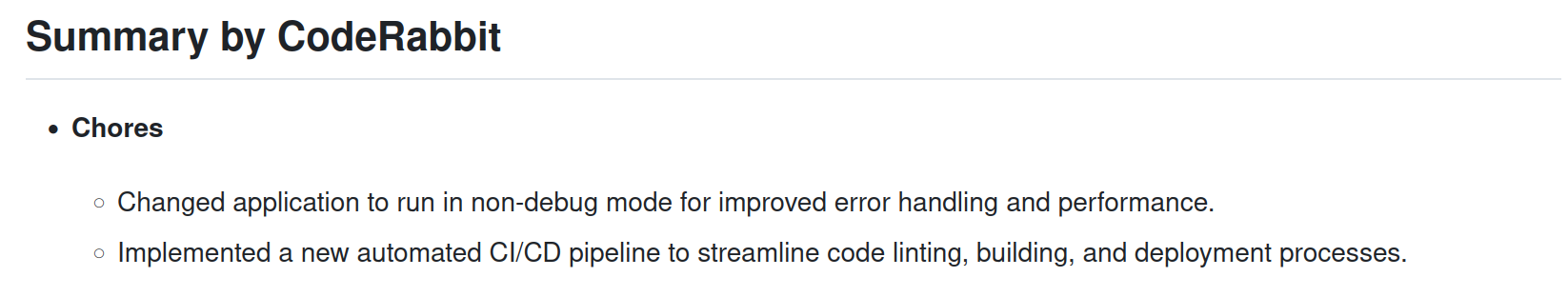

Summary

This summary serves as an essential first step in the review process, offering a clear and concise overview of the changes introduced in the latest commit. It provides a quick understanding of the key aspects covered, including new features, styling adjustments, configuration changes, and other relevant modifications raised in the pull request.

The summary shown above highlights important maintenance tasks, including running the application in non-debug mode for improved performance and implementing an automated CI/CD pipeline to streamline code linting, building, and deployment.

After reviewing the key changes in the Summary, we can explore the Walkthrough section of the report, which provides a detailed breakdown of specific modifications.

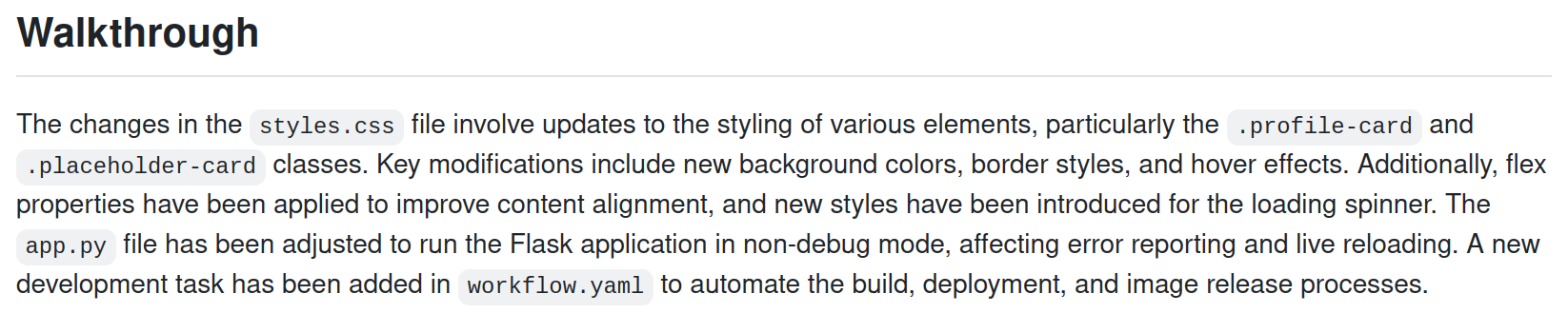

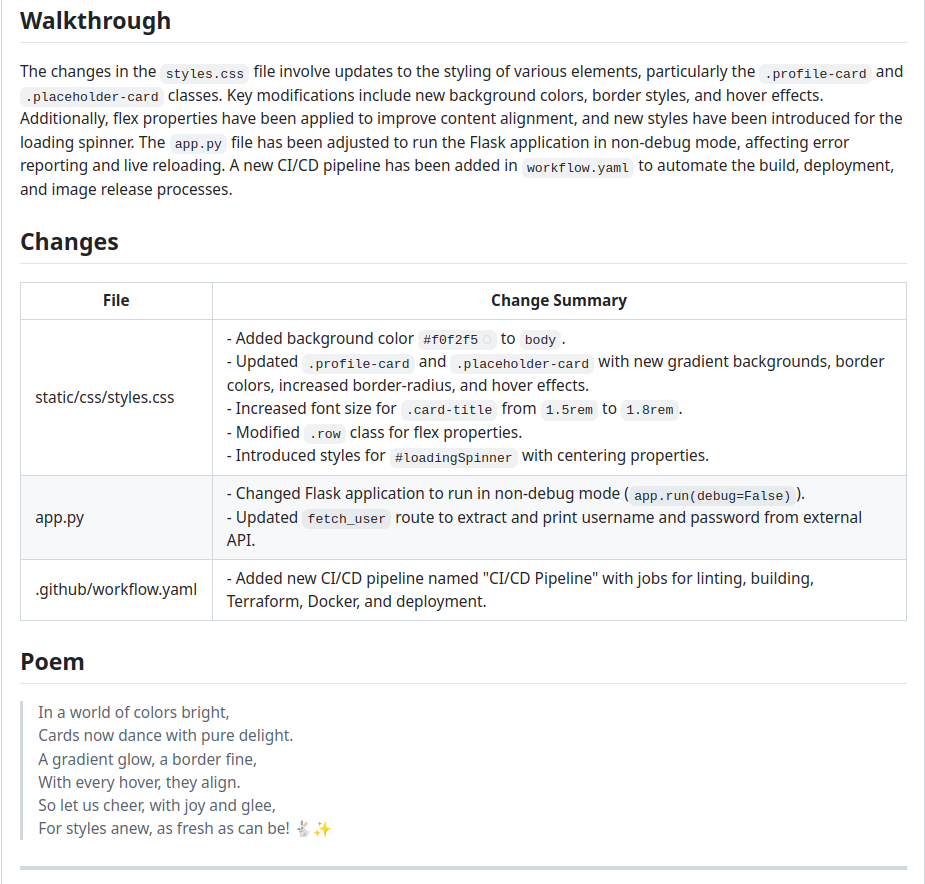

Walkthrough

This section provides a comprehensive overview of the specific modifications made across different files in the latest commit. Each file is assessed for its unique contributions to the project, ensuring clarity on how these changes enhance the overall functionality and user experience.

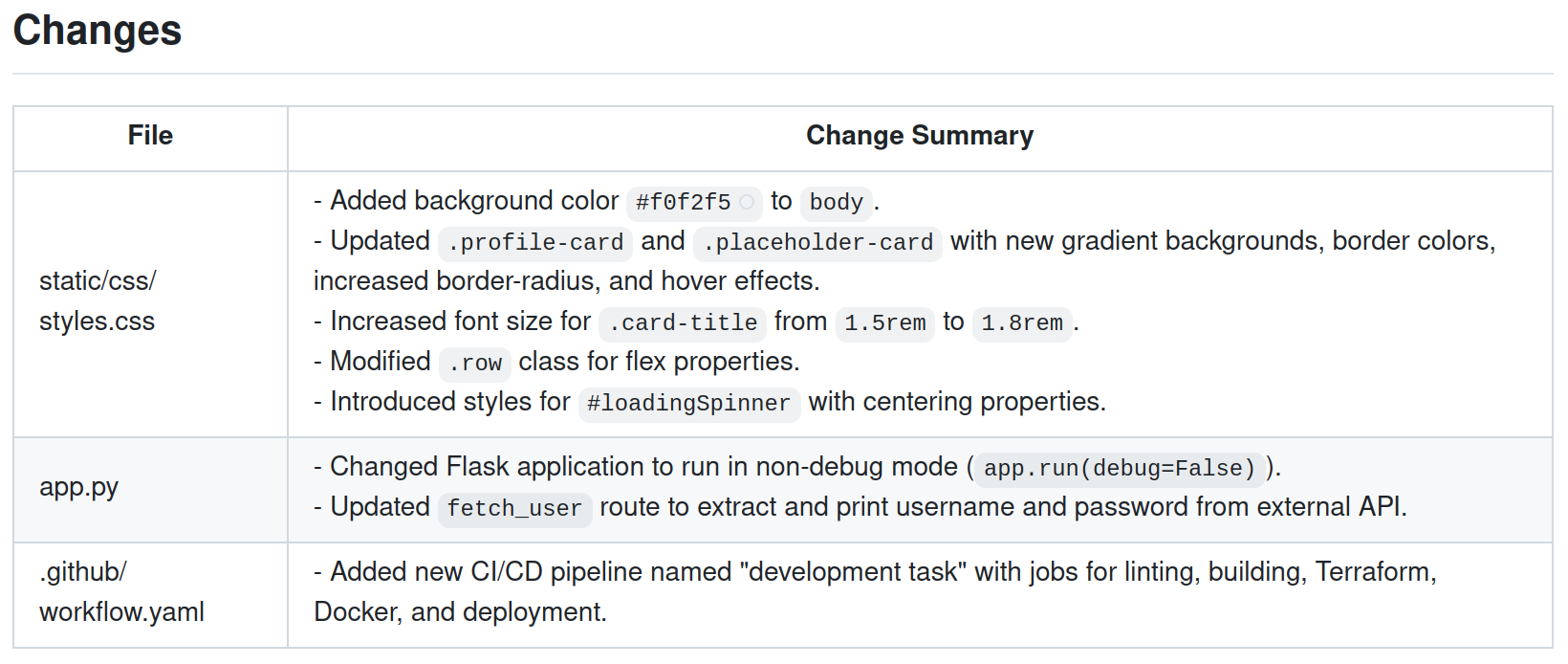

The Changes Table serves as a concise summary of specific modifications made across various files in the latest commit, allowing developers to quickly identify where changes have occurred within the codebase.

Each row indicates an altered file, accompanied by a detailed description of the modifications in the Change Summary column. This includes updates related to styling in CSS files, application logic functionality adjustments, and CI/CD pipeline configuration enhancements.

By presenting this information in a structured format, the table offers clarity and organization, making it easier for developers to digest and understand the impact of each change on the project.

Overall, it acts as an essential reference for collaboration, enabling team members to focus on the areas that may require further discussion or review while tracking the evolution of the codebase. For a touch of fun, CodeRabbit also generates a short poem about your changes.

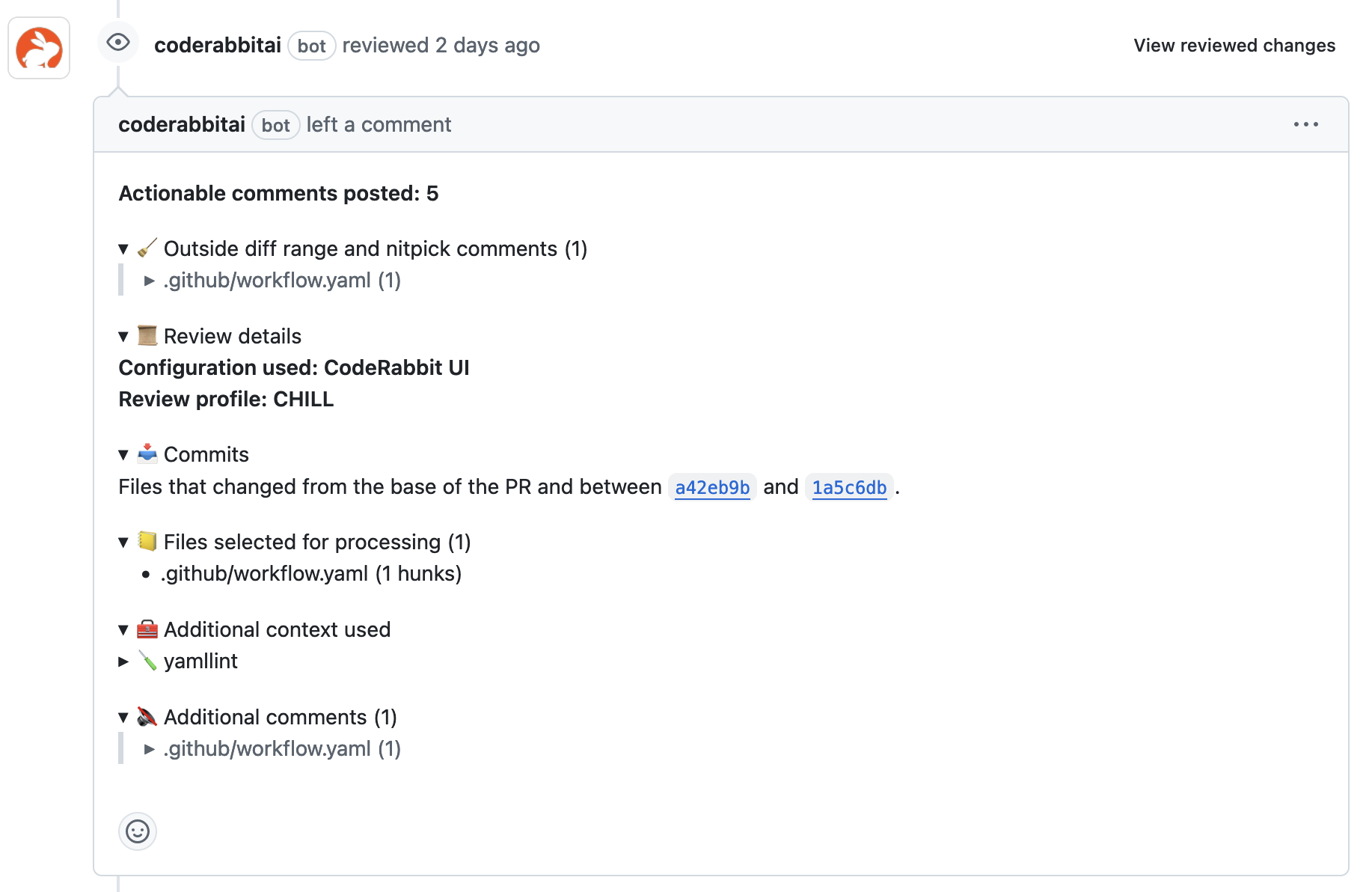

Code Review

The following section thoroughly examines the configuration file and identifies areas for improvement. From better caching strategies to optimized deployment processes, these recommendations are tailored to your code and aim to improve the overall efficiency and robustness of your GitHub Actions workflow.

The review opens with an overview of the actionable comments, along with the review details: the configuration used, the review profile, the files selected for processing, and the additional context used. The comments can also include one-click commit suggestions.

Let us look at the various review comments that CodeRabbit suggested for various sections of the workflow file. It automatically detects that the configuration file is a GitHub Actions workflow and uses actionlint to analyze it thoroughly. During the review, CodeRabbit provides valuable insights and suggestions for optimizing performance.

In our lint job, it identified an opportunity to cache npm dependencies using actions/cache@v3. Adding a caching step before the linting process reduces execution time for subsequent runs. This proactive feedback streamlines workflows without requiring manual intervention, ensuring a more efficient and optimized CI/CD pipeline.

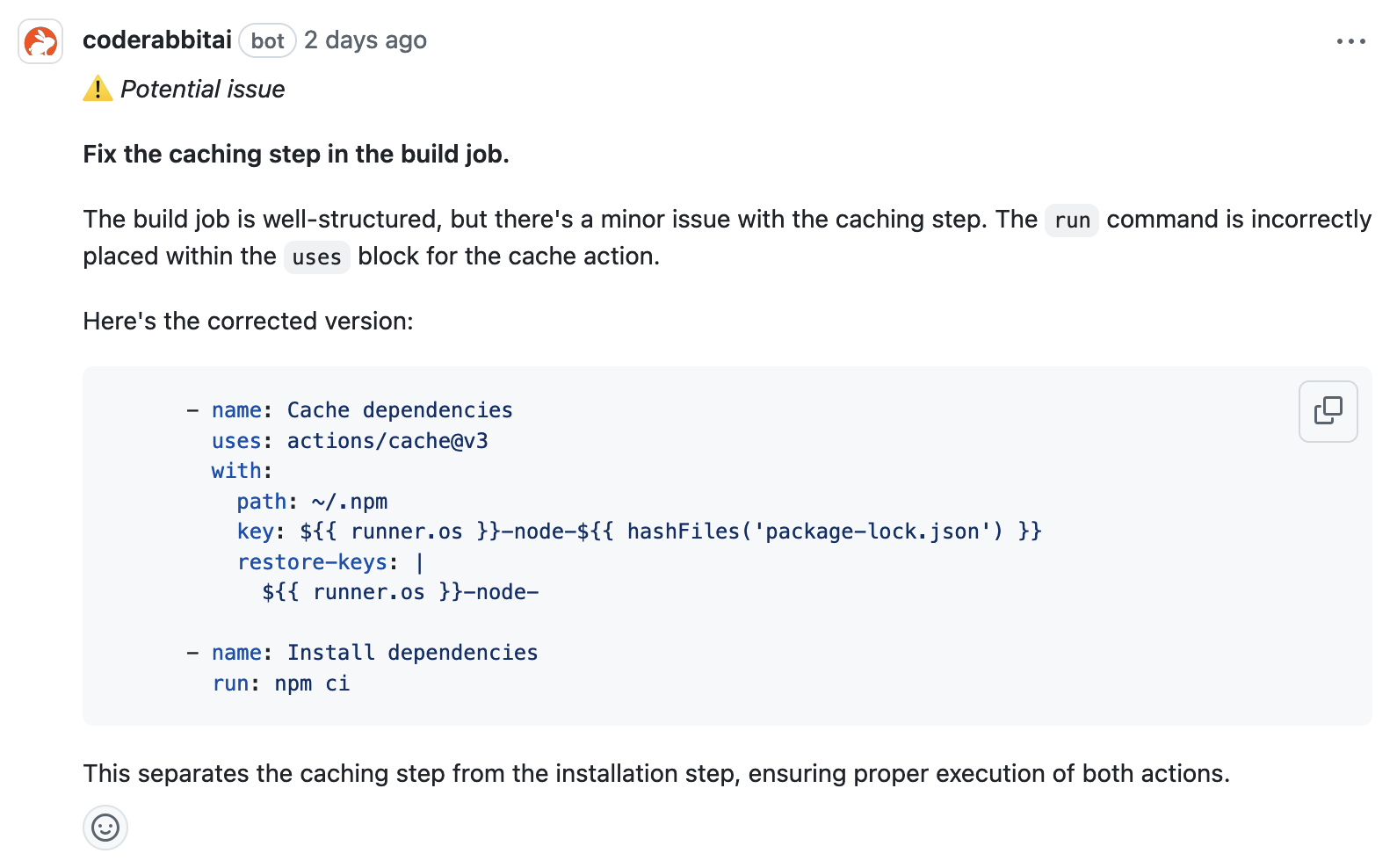

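As it points out, the caching step in the build job is incorrectly structured. The run command (npm install) is placed in the same step as the uses entry for the cache action. A GitHub Actions step can either use an action or run a command, not both, so the step is invalid as written.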

To resolve this, it suggests separating the caching and installation steps. The corrected version moves the cache logic into its own block and ensures the npm install command is executed independently in the next step, using npm ci for a cleaner, faster installation of dependencies.
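A minimal sketch of what the separated steps could look like is shown below. It mirrors the cache settings from the original step and is our own reconstruction of the idea, not the exact suggestion CodeRabbit generated.

```yaml
steps:
  # Step 1: restore (and later save) the npm download cache.
  - name: Cache npm dependencies
    uses: actions/cache@v3
    with:
      path: ~/.npm
      key: ${{ runner.os }}-node-${{ hashFiles('package-lock.json') }}
      restore-keys: |
        ${{ runner.os }}-node-

  # Step 2: install from the lockfile in its own step.
  # npm ci removes node_modules and installs exactly what package-lock.json lists.
  - name: Install dependencies
    run: npm ci
```

Since each step now has either `uses` or `run` but never both, the workflow passes validation, and the cache key still changes whenever package-lock.json changes.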

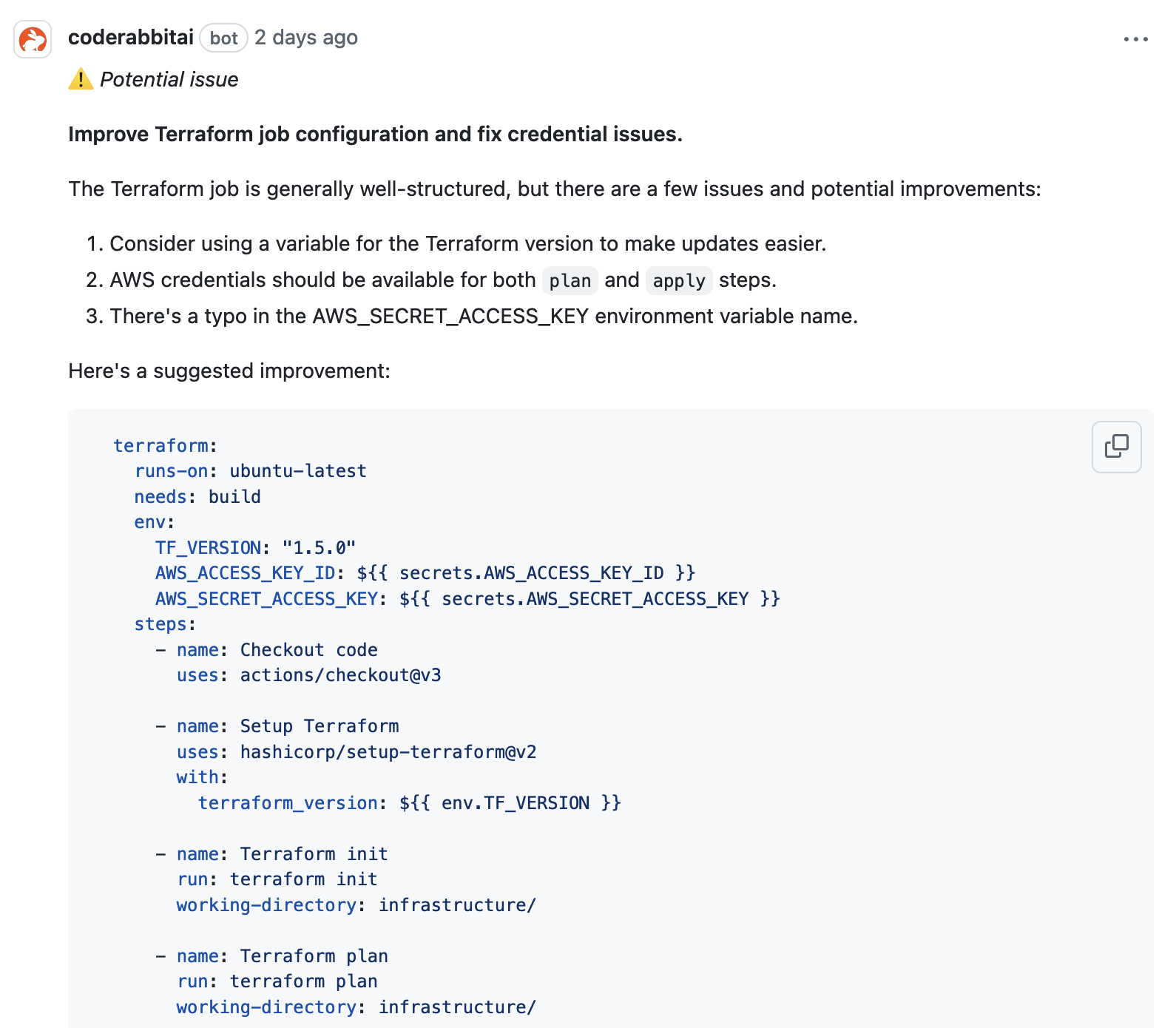

In the Terraform section of the configuration, it flags the hardcoded Terraform version and recommends using a variable instead. It also notes that the AWS credentials are not available to every Terraform command, which would cause failures, especially in the plan and apply steps. Lastly, it detects the typo in the AWS_SECRET_ACCESS_KEY variable, which the workflow spells AWS_SECRET_ACCES_KEY; even minor mistakes like this can cause failures in your pipeline's execution.

It suggests configuration changes that fix the typo, make the Terraform version easy to update, and ensure AWS credentials are available for all Terraform commands.
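One way to apply these three changes is sketched below. This is an illustration under assumptions rather than CodeRabbit's exact output: `TF_VERSION` is a variable name chosen for this sketch, and it assumes the repository secret is stored under the correctly spelled name `AWS_SECRET_ACCESS_KEY`.

```yaml
jobs:
  terraform:
    runs-on: ubuntu-latest
    needs: build
    env:
      # Single place to bump the Terraform version (name chosen for this sketch).
      TF_VERSION: "1.5.0"
      # Job-level credentials are visible to init, plan, and apply alike.
      AWS_ACCESS_KEY_ID: ${{ secrets.AWS_ACCESS_KEY_ID }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
    steps:
      - name: Checkout code
        uses: actions/checkout@v3
      - name: Setup Terraform
        uses: hashicorp/setup-terraform@v2
        with:
          terraform_version: ${{ env.TF_VERSION }}
      - name: Terraform init
        run: terraform init
        working-directory: infrastructure/
      - name: Terraform plan
        run: terraform plan
        working-directory: infrastructure/
      - name: Terraform apply (development)
        if: github.ref == 'refs/heads/develop'
        run: terraform apply -auto-approve
        working-directory: infrastructure/
```

Moving `env` from the apply step to the job level is what makes the credentials available to every step.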

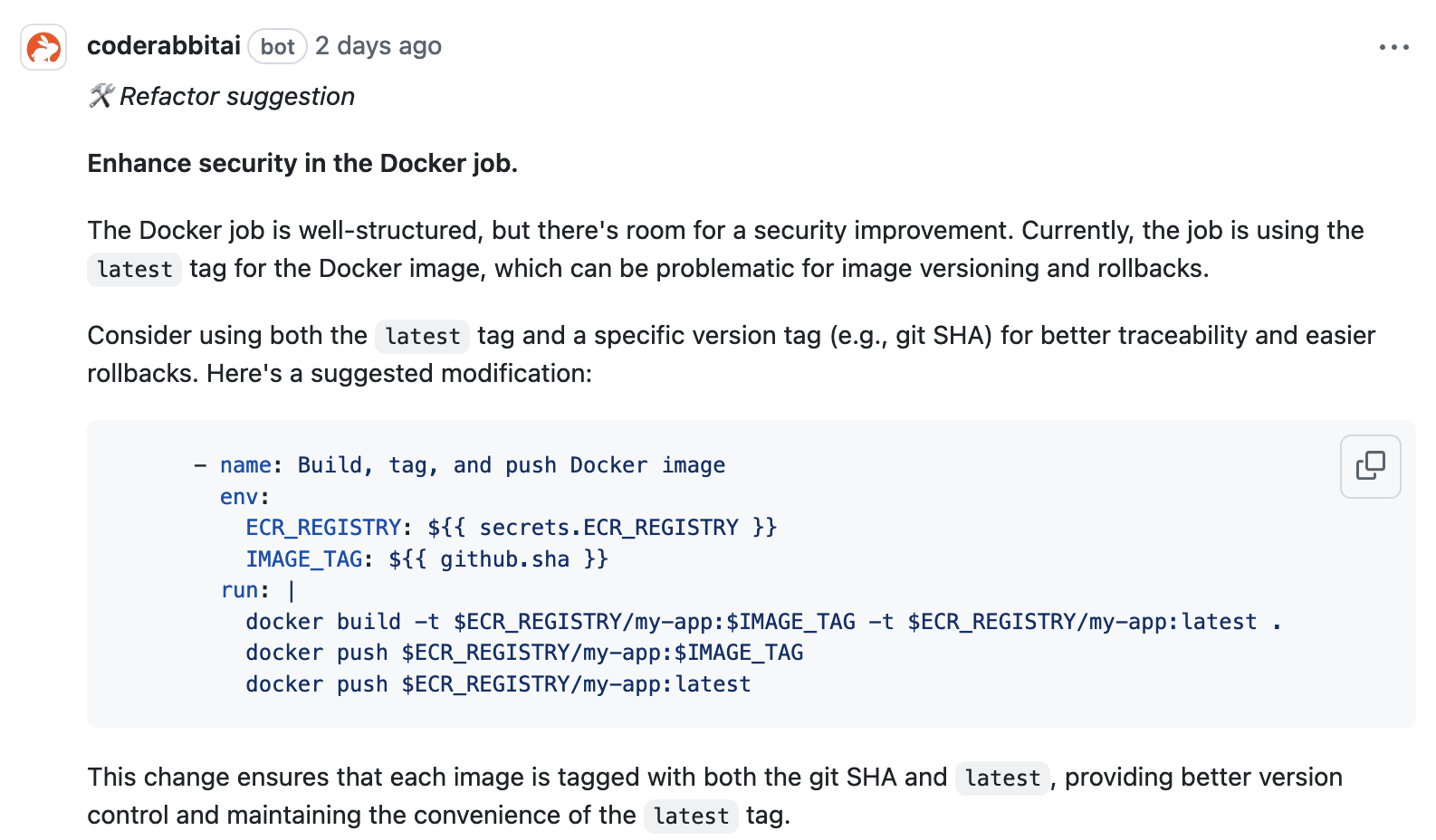

In the docker job, it flags the use of the latest tag for the Docker image, which is problematic for image versioning and rollbacks. It suggests configuration changes that apply both the latest tag and a specific version tag (e.g., the git SHA) for better traceability and easier rollbacks.
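The dual-tagging idea can be sketched as follows. This is a hedged illustration, not the literal suggestion from the review; the step-level `IMAGE` variable is introduced here only to avoid repeating the registry path.

```yaml
steps:
  - name: Build and tag Docker image
    env:
      IMAGE: ${{ secrets.ECR_REGISTRY }}/my-app
    run: |
      # Tag the same build twice: an immutable SHA tag and a moving "latest" tag.
      docker build -t "$IMAGE:${{ github.sha }}" -t "$IMAGE:latest" .

  - name: Push Docker image to AWS ECR
    env:
      IMAGE: ${{ secrets.ECR_REGISTRY }}/my-app
    run: |
      # Push both tags so deployments can pin the SHA and roll back by SHA.
      docker push "$IMAGE:${{ github.sha }}"
      docker push "$IMAGE:latest"
```

With this layout, the tag that gets pushed is always a tag that was actually built, and a rollback only needs the SHA of an earlier commit.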

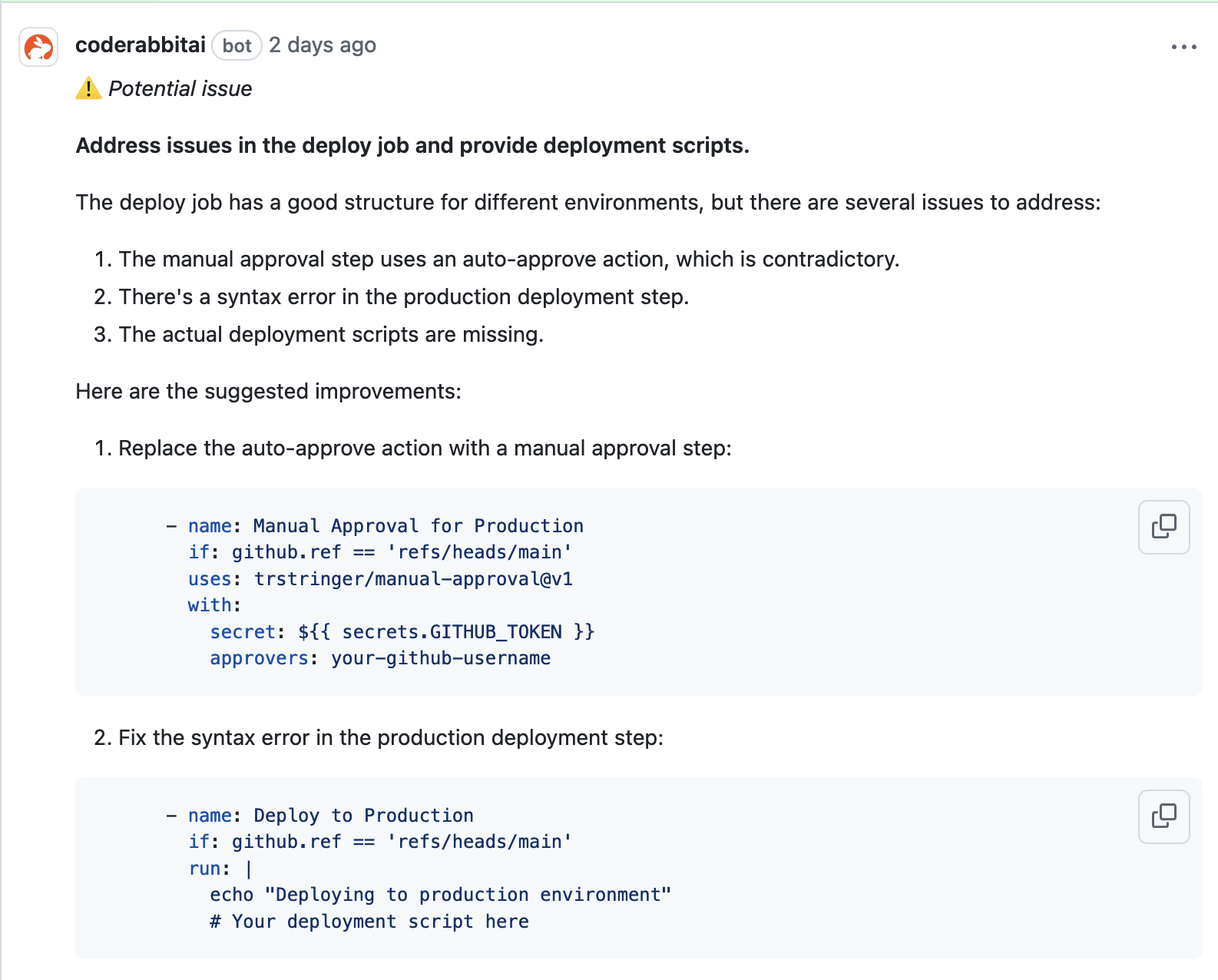

It also detected several issues in the deploy job that need attention. The manual approval step uses an auto-approve action, which defeats its purpose. Further, it finds an error in the production deployment steps (one branch condition references refs/head/main rather than refs/heads/main), and the actual deployment scripts are missing, making the process incomplete. It suggests a fix for each of these issues.
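A possible shape for the production part of the deploy job is sketched below. It relies on assumptions that are not part of the original workflow: the `production` environment is assumed to have required reviewers configured in the repository settings, which is how GitHub pauses a job for manual approval, and `./scripts/deploy.sh` is a hypothetical script standing in for the missing deployment logic.

```yaml
jobs:
  deploy-production:
    runs-on: ubuntu-latest
    needs: docker
    # Run only for the main branch; note "refs/heads/", not "refs/head/".
    if: github.ref == 'refs/heads/main'
    # Assumption: required reviewers are configured on this environment,
    # so the job waits for a human approval before its steps start.
    environment: production
    steps:
      - name: Checkout code
        uses: actions/checkout@v3
      - name: Deploy to Production
        # Hypothetical script; replace with the real deployment command.
        run: ./scripts/deploy.sh production
```

Splitting production into its own job also keeps the production environment, and its approval gate, from applying to development and staging deployments.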

This walkthrough showed how CodeRabbit’s AI-powered analysis quickly identified and highlighted issues in the configuration file and suggested changes to fix them.

Benefits of Using CodeRabbit in CI/CD Pipelines

By automating code reviews and providing precise feedback, CodeRabbit improves code quality and ensures that potential issues and vulnerabilities in your CI/CD pipeline are caught early, leading to smoother deployments and fewer errors.

Let us look at some of the larger benefits of using CodeRabbit in CI/CD pipelines:

Enhanced Developer Productivity

By performing automated static checking workflows, CodeRabbit reduces the need for manual reviews and enables DevOps engineers to focus on strategic tasks like optimizing infrastructure and deployment processes rather than fixing configuration issues. Its instant feedback loop ensures issues are detected quickly and addressed after every commit, allowing you to maintain a rapid development pace.

Improved Code Quality

CodeRabbit enforces consistent configuration standards by automatically validating configuration files against best practices and catching configuration errors early. The platform learns from previous reviews and intelligently suppresses repetitive alerts, allowing you to focus on the most critical issues and making code reviews more efficient without compromising on thoroughness. CodeRabbit can also provide one-click suggestions that you can quickly integrate into your configuration files.

Security

CodeRabbit helps DevOps engineers catch security vulnerabilities early, such as misconfigured access controls or insecure settings, reducing the chance of breaches. By integrating static checks into the CI/CD process, CodeRabbit prevents configuration errors from causing deployment failures, ensuring a more stable and reliable software delivery pipeline.

Conclusion

We saw in this post how misconfigurations can lead to delays, security vulnerabilities, or even broken deployments, making it essential to test configuration files just as rigorously as application code.

Unlike traditional approaches that overlook the criticality of testing configuration files, CodeRabbit helps review CI/CD pipelines by automating code/config reviews, catching critical errors, and enhancing overall code quality. It significantly reduces manual review time, allowing DevOps teams to focus on strategic tasks and accelerate deployment cycles.

Experience the impact of AI code review on your workflows – start your free CodeRabbit trial today.
