The Powerhouses Behind the Pixels: A Fun Guide to GPUs

SHEKHAR SAXENA

Table of contents

- Do You Really Need a GPU? Let’s Break It Down!

- GPUs vs. CPUs: The Smart Chef vs. the Speedy Kitchen Crew

- Architecture of GPUs: Building Blocks of the Graphics Galaxy

- A Brief History of GPUs: From Pixels to Powerhouses

- Big Markets in GPUs: From Gaming to… What not actually?

- Cost of GPUs: When Your Wallet Starts to Scream!

- NVIDIA’s Hype: The Green Giant of GPUs

- GPUs vs. AI Chips: Different Tools for Different Jobs

- Conclusion

Imagine a world where you’re stuck playing Minesweeper forever, the best video edit you can make for your wedding is a cheesy rotating photo effect, and Google can’t bombard you with ads for things you just whispered about. Welcome to a world without GPUs!

Now you get the picture: GPUs are the real MVPs of the tech world! Think of a GPU as the superhero of computing, designed to handle huge amounts of visual data super-fast. GPUs started out just making your screen look great, but now they’re multitasking champions, powering everything from AI to cryptocurrency mining!

Do You Really Need a GPU? Let’s Break It Down!

Ah, the age-old debate! Here’s a fun guide to help you decide.

You Probably Need a GPU If …

You’re a gamer who wants to spot every blade of grass and catch the tiniest enemy movement in PUBG before they catch you!

You’re editing the next Marvel movie (or just trying to remove that person you don’t like from a video).

You’re training an AI model to predict the next meme trend (or figuring out what your dog’s thinking).

You’re designing 3D models of futuristic superheroes (or rearranging your dream living room that’s a bit out of your budget).

You Probably Don’t Need a GPU If …

Your idea of gaming involves Solitaire or Minesweeper marathons.

The most intense rendering you do is in Word or Docs when you finally get that “12-point Times New Roman” just right.

Your trickiest calculation involves splitting the dinner bill — should it be 50/50 or do you let them pay for dessert?

You think, “A GPU will make me browse faster!” Nope, that’s like using a sports car to deliver groceries.

You think, “My spreadsheets will calculate faster!” Sorry, Excel wizards, that’s CPU territory.

You think, “I need a GPU to watch YouTube.” Unless you’re watching in 8K while simultaneously editing another video, probably not.

In short, a GPU is powerful, but it’s not a magic wand! Use it wisely.

GPUs vs. CPUs: The Smart Chef vs. the Speedy Kitchen Crew

So, what’s the difference between GPUs (Graphics Processing Units) and their cousin, the CPU (Central Processing Unit)?

Imagine a CPU as a master chef in a fancy restaurant: a culinary genius, quick on their feet, who can nail even the most complicated dish. But a CPU has only a handful of these chefs (its cores), so when a flood of customers shows up, things get messy, and suddenly you’re waiting an hour for your soup!

Now, picture a GPU as an entire kitchen army, where every cook has their own job. They may not be experts in every dish, but together they can handle thousands of orders at once! Each “cook” (or core) in the GPU handles a small, simple part of the job, so when there’s a mountain of similar work, the crew gets through it far faster than the master chef could.

CPU: Great at tackling one complex task, like perfecting that cake.

GPU: Fantastic at juggling lots of smaller tasks all at once, like rendering every pixel in an image — without breaking a sweat!
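To make the kitchen analogy a little more concrete, here is a minimal Python sketch of the kind of work GPUs love: brightening every pixel of an image, where each pixel’s result depends only on that pixel. The function names and the four-pixel “image” are made up for illustration, and this runs on the CPU. In CPython, threads won’t actually speed up this kind of work (the global interpreter lock gets in the way); the point is the shape of the work. On a real GPU, each pixel would get its own hardware thread.

```python
from concurrent.futures import ThreadPoolExecutor

def brighten(pixel: int, amount: int = 40) -> int:
    """One cook's job: brighten a single 8-bit grayscale pixel, capped at 255."""
    return min(pixel + amount, 255)

def brighten_serial(image: list[int]) -> list[int]:
    # The lone master chef: visit every pixel one after another.
    return [brighten(p) for p in image]

def brighten_parallel(image: list[int], workers: int = 8) -> list[int]:
    # The kitchen crew: hand pixels out to many workers at once.
    # No pixel depends on any other, so processing order doesn't matter;
    # pool.map still returns the results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, image))

image = [0, 100, 200, 250]
print(brighten_serial(image))    # [40, 140, 240, 255]
print(brighten_parallel(image))  # [40, 140, 240, 255]
```

Both versions give the same answer because the pixels are independent. That independence is exactly what lets a GPU throw thousands of cores at the problem.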

Architecture of GPUs: Building Blocks of the Graphics Galaxy

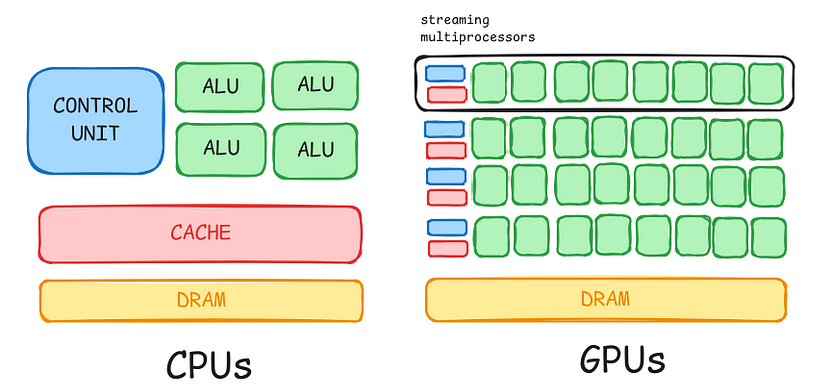

Basic Architecture of GPUs and CPUs

Let’s break down the GPU like it’s a LEGO set, shall we? Don’t worry, there are no tiny pieces to step on here:

Cores (or Stream Processors): Think of these as the workers in a big factory assembly line. They’re simple but numerous, working in parallel to process data. Imagine a thousand tiny minions, each painting one pixel really fast!

Memory: This is where the GPU stores textures, models, and other data. It’s like a huge whiteboard where all the minions can see and share information. Types include GDDR6, HBM2, etc. (Don’t worry, there’s no quiz on these acronyms!)

Cache: A small, super-fast memory that helps keep frequently used data close at hand. It’s like having a mini-fridge next to your work desk. Snacks, anyone?

Render Output Units (ROPs): These finalize the pixel data before it’s sent to your display. Think of them as the quality control team, making sure every pixel looks just right.
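To illustrate the mini-fridge idea, here is a toy least-recently-used (LRU) cache in Python. Real GPU caches are hardware, organized into fixed-size lines and sets with their own replacement policies. The class name `TinyCache`, its capacity, and the texture names are all hypothetical, chosen only to show why repeated reads of the same data get cheaper.

```python
from collections import OrderedDict

class TinyCache:
    """A toy LRU cache sitting in front of slow 'GPU memory' (the whiteboard)."""

    def __init__(self, memory, capacity=2):
        self.memory = memory        # the big whiteboard: large but slow to reach
        self.capacity = capacity    # how many snacks fit in the mini-fridge
        self.lines = OrderedDict()  # cached entries, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self.lines:
            self.hits += 1
            self.lines.move_to_end(key)     # mark as most recently used
            return self.lines[key]
        self.misses += 1
        value = self.memory[key]            # slow trip to main GPU memory
        self.lines[key] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return value

memory = {"grass": "green texture", "sky": "blue texture", "rock": "grey texture"}
cache = TinyCache(memory, capacity=2)
for name in ["grass", "sky", "grass", "grass", "sky"]:
    cache.read(name)
print(cache.hits, cache.misses)  # 3 2
```

Only the first read of each texture goes all the way to memory; every repeat is served from the nearby cache.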

How It All Works Together:

Imagine a massive water balloon fight (stay with me here). The cores are the people filling and throwing balloons, the memory is the water supply, the cache is buckets placed strategically around the field, and the ROPs are the referees making sure everyone gets properly soaked.

When you play a game or render a 3D scene, your GPU:

Loads data from main memory into GPU memory

Processes this data through thousands of cores simultaneously

Uses cache to quickly access frequently needed info

Finalizes the image through ROPs

Sends the completed frame to your display

And it does this hundreds of times per second! No wonder your GPU needs a fan; that’s a lot of work!
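Here is a deliberately simplified Python sketch of those steps for a single frame. The stage functions (`load_to_gpu_memory`, `shade`, `finalize_rop`) and the “scene” of brightness values are hypothetical stand-ins: real GPUs run these stages in dedicated hardware across thousands of cores, and caching happens automatically, so it doesn’t appear as a step here. The last helper shows why “hundreds of times per second” is demanding.

```python
def load_to_gpu_memory(scene):
    # Step 1: copy scene data (here, per-pixel brightness values) into "GPU memory".
    return list(scene)

def shade(pixels):
    # Step 2: every "core" works on its own pixel; here, a simple lighting boost.
    return [p * 1.5 for p in pixels]

def finalize_rop(shaded):
    # Step 4: ROP-style finishing: round and clamp to the displayable 0-255 range.
    return [min(255, max(0, round(p))) for p in shaded]

def render_frame(scene):
    gpu_memory = load_to_gpu_memory(scene)
    shaded = shade(gpu_memory)
    return finalize_rop(shaded)  # Step 5: this finished frame would go to the display

def frame_budget_ms(fps):
    # Time available to produce one frame at a given frame rate.
    return 1000 / fps

print(render_frame([10, 100, 200]))      # [15, 150, 255]
print(f"{frame_budget_ms(144):.2f} ms")  # 6.94 ms
```

At 144 frames per second, the entire pipeline gets under 7 milliseconds per frame, which is why all that parallel hardware (and that fan) is needed.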

A Brief History of GPUs: From Pixels to Powerhouses

Buckle up as we take a thrilling ride through the history of computer graphics with some key milestones!

1970s: Welcome to the Stone Age of computer graphics! Pong bounces onto screens, captivating a generation with its cutting-edge… well, lines!

1981: The IBM PC introduces the Color Graphics Adapter (CGA). A whopping 16 colors, though only 4 at once in its main graphics mode! The future is looking bright (and a bit funky)!

1999: NVIDIA releases the GeForce 256 and markets it as the world’s first “GPU,” popularizing the term. Now we’re talking modern graphics!

2001: The epic rivalry between ATI (bought by AMD in 2006) and NVIDIA heats up as cards like the GeForce 3 bring programmable shaders to consumers, launching us into the modern era of graphics cards. Let the games begin!

2006: NVIDIA introduces CUDA, allowing GPUs to do more than just graphics. Who knew they could handle general computing tasks too?

2013: AMD and NVIDIA push GPUs into 4K resolution gaming. Why settle for less when you can have ultra-sharp graphics?

2017: A cryptocurrency boom sends miners scrambling for GPUs, and demand skyrockets. Suddenly, everyone wants a piece of that digital gold!

2018: Real-time ray tracing becomes a reality in consumer GPUs. Shadows and reflections look so real, you might double-check your own reflection!

2020s: Today, GPUs aren’t just about graphics — they power AI, machine learning, and even simulations of everything from weather patterns to the human brain. Talk about multitasking!

From humble beginnings to pixel-packed powerhouses, GPUs have come a long way — and they’re still going strong!

Did you know that the GPU in your smartphone is more powerful than the graphics hardware used to create the dinosaurs in the original Jurassic Park? Talk about evolution!

Big Markets in GPUs: From Gaming to… What not actually?

Gaming: The original superstar! GPUs create stunning worlds, allowing gamers to explore sharper, faster, and way more immersive realms — who needs reality anyway?

AI and Machine Learning: Data scientists love GPUs for crunching huge datasets all at once, making AI smarter and faster — because who doesn’t want a robot quicker than your Wi-Fi?

Cryptocurrency Mining: Cryptominers have used GPUs to solve puzzles and dig up coins, most famously Ethereum until it stopped mining in 2022 (Bitcoin miners moved to specialized chips years ago). True pirates of the virtual world!

Content Creation: Video editors and designers depend on GPUs to turn high-definition dreams into reality — making every frame look so good, it could win an Oscar!

Silly Future GPU Uses (We Hope):

Toast Browning Optimization Algorithms: Finally, perfectly golden toast every time!

Real-time Beard Physics Simulations: Because every beard deserves realistic physics!

Quantum Entanglement of Pizza Toppings: Making sure your pepperoni is always in the right place — across the universe!

Who knows? With how fast GPUs are advancing, these might not be so silly in a few years!

Cost of GPUs: When Your Wallet Starts to Scream!

Ah, the cost of GPUs — a topic that can make even the toughest tech enthusiast shed a few silicon tears! Let’s dive into why these pixel-pushing powerhouses can cost more than a second-hand car (and sometimes even a brand-new one if you’re eyeing that Alto).

Factors Contributing to High GPU Costs:

Cutting-Edge Technology: GPUs sit at the forefront of computing innovation. It’s like buying a ticket to the future, and the future doesn’t come cheap!

Complex Manufacturing Process: Modern GPUs pack billions of transistors. Picture building a city where each house is smaller than a virus!

Research and Development: Companies put a lot of money into creating new GPU architectures. It’s an arms race — but instead of missiles, they’re using transistors!

Supply and Demand: With gamers, crypto miners, and AI researchers all competing for GPUs, demand often outstrips supply, and prices shoot to the moon!

NVIDIA’s Hype: The Green Giant of GPUs

NVIDIA — the company that made GPUs a household name!

The Secret Sauce of NVIDIA’s Success:

They’re always pushing the limits with features like real-time ray tracing. It’s like they have a time machine, but just for graphics!

With CUDA, they opened up GPUs for general-purpose computing. Suddenly, scientists could use gaming hardware for serious simulations. Talk about a major upgrade!

Their collaborations with game developers ensure titles are “optimized for NVIDIA.” It’s like having a VIP pass to the best club in town — just for pixels!

And let’s not forget CEO Jensen Huang and his iconic leather jacket. Name a more dynamic duo! Each launch feels like a blockbuster movie premiere (but with more graphs).

GPUs vs. AI Chips: Different Tools for Different Jobs

Both GPUs and AI chips are powerful, but they excel in different ways. GPUs are fantastic at juggling lots of small tasks at once, while AI chips, like Google’s Tensor Processing Units (TPUs), are purpose-built for deep learning. Think of a GPU as a Swiss Army knife that handles graphics, simulations, and AI alike, and an AI chip as a specialized power tool built to do one job, the massive matrix math behind neural networks, extremely efficiently. Each has its own strengths, and both are important for tech progress.

And if you’re confused about what a TPU is, no worries! Just reach out, and I’ll put together another fun guide to explain it. I promise it’ll be entertaining — just let me know if you’re actually reading this!

Conclusion

That was a quick look at the amazing world of GPUs! We’ve explored how these incredible machines have evolved and all the ways they power our digital lives. Whether you’re a gamer, an AI fan, or just someone who loves technology, there’s a place for you in the GPU world. So go ahead, tech wizard, and let your dreams take flight with the power of GPUs!
