Automate Your Backups to Amazon S3 with Python

Shivam Soni

As data continues to be a critical asset in the digital world, creating reliable backups is more important than ever. Automating backups can help prevent data loss, streamline restoration, and reduce manual effort, especially for crucial files and directories. In this article, we’ll explore how to automate creating backups of a directory, storing them as compressed files, and uploading them to an Amazon S3 bucket using Python.

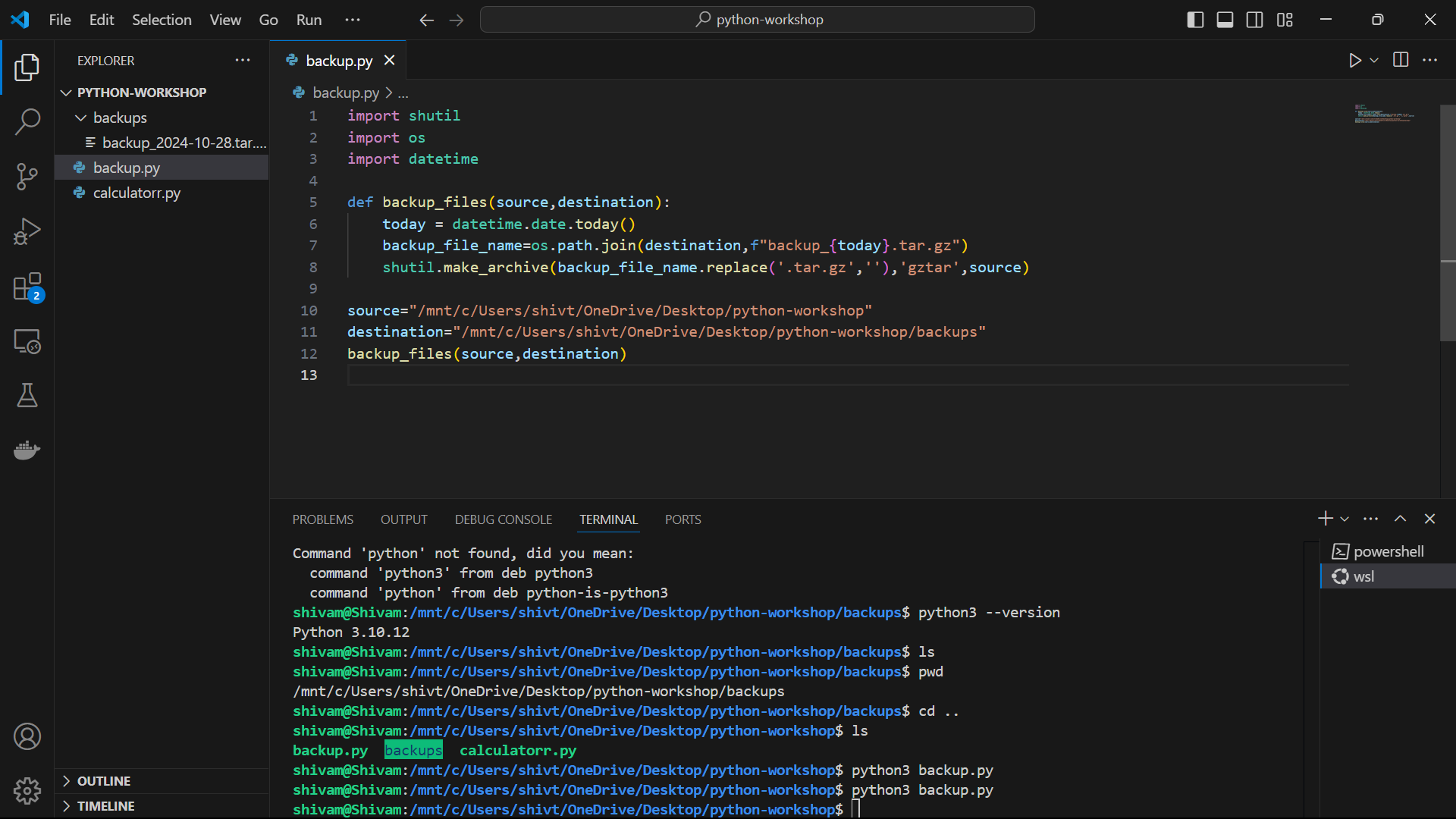

Step 1: Creating a Backup as a Compressed File

The first step in creating a backup of a directory involves packaging its contents in a compressed format. Compressing a directory as a .tar.gz file is a common approach for storing data efficiently, as it reduces the storage space needed for the backup. This format is especially useful for archiving data, as it retains the original file and directory structure, which helps when restoring files. Python's built-in libraries offer straightforward methods to compress entire directories into .tar.gz files, making it a great choice for automating backup workflows.
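The compression step can be sketched with the standard library's tarfile module. This is a minimal sketch, not necessarily the exact script used here: the function name create_backup and its two parameters are assumptions made for illustration, and the archive name follows the backup_YYYY-MM-DD.tar.gz pattern.

```python
import tarfile
from datetime import date
from pathlib import Path


def create_backup(source_dir, dest_dir):
    """Compress source_dir into dest_dir/backup_YYYY-MM-DD.tar.gz and return the archive path.

    The function name and parameters are illustrative assumptions.
    """
    source = Path(source_dir).resolve()
    if not source.is_dir():
        raise NotADirectoryError(f"Source is not a directory: {source}")

    # Create the destination directory if it does not exist yet.
    destination = Path(dest_dir)
    destination.mkdir(parents=True, exist_ok=True)

    archive_path = destination / f"backup_{date.today().isoformat()}.tar.gz"

    # "w:gz" writes a gzip-compressed tar archive; arcname keeps the
    # top-level directory name so the structure survives restoration.
    with tarfile.open(str(archive_path), "w:gz") as archive:
        archive.add(str(source), arcname=source.name)

    return archive_path
```

Saved as backup.py with a call such as create_backup("/path/to/source", "/path/to/backups") at the bottom (both paths are placeholders), the script produces one dated archive per run.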

When I run the command “python3 backup.py”, a backup of the source path is created and saved in the destination path, for example as “backup_2024-10-28.tar.gz”.

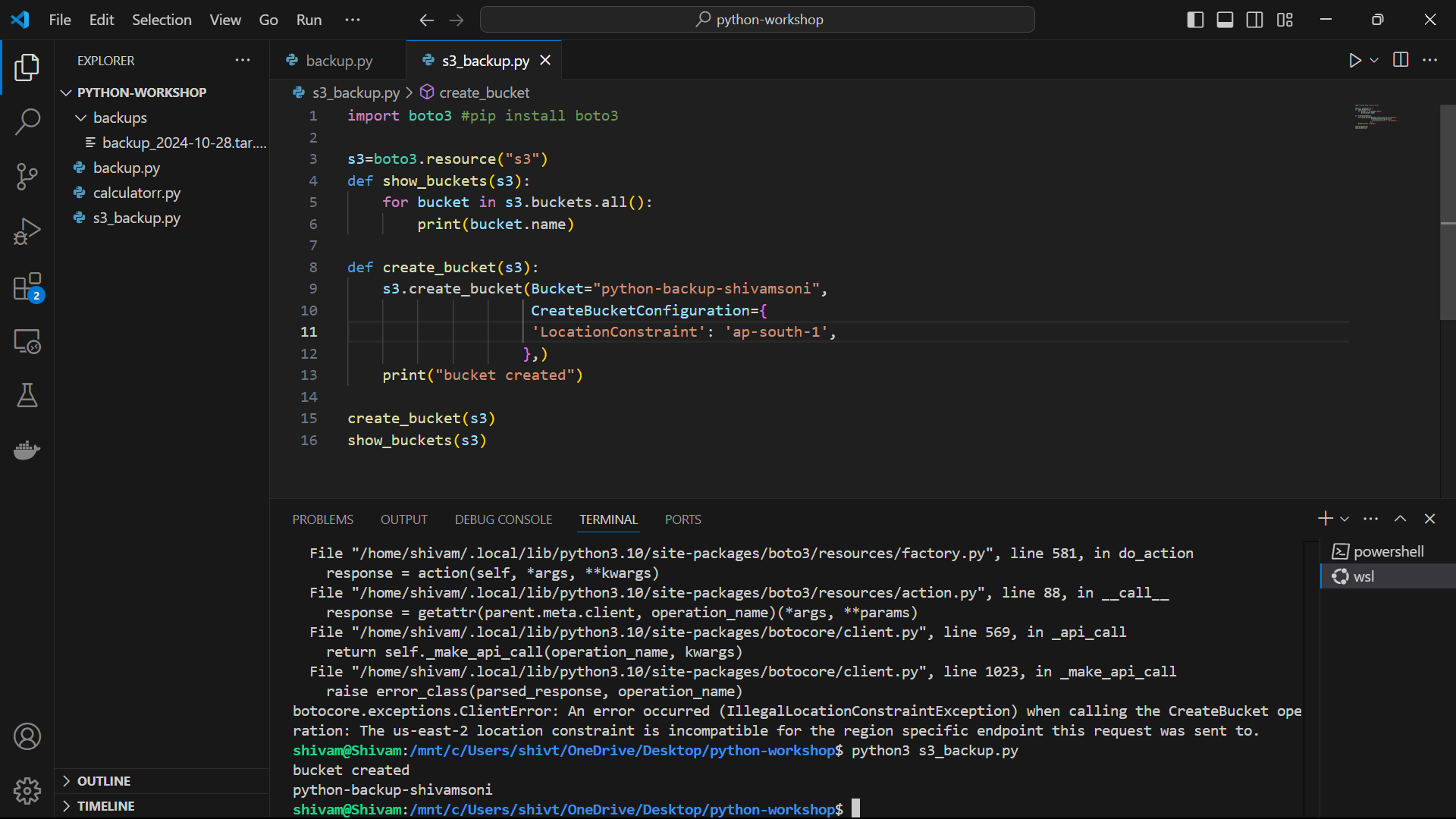

Step 2: Setting Up an Amazon S3 Bucket

Amazon S3 (Simple Storage Service) is one of the most widely used cloud storage solutions, known for its durability, availability, and cost-effectiveness. To store our backup files securely in the cloud, we'll set up an S3 bucket, which is essentially a container for our data. With the help of the boto3 library in Python, creating an S3 bucket is straightforward, and we can control its permissions, storage class, and region, making it highly customizable based on our requirements. S3 is ideal for long-term storage of backups due to its scalability and the various lifecycle management options it offers.

Install and import boto3

Run the command “pip install boto3” to install the library.

Create an s3_backup.py file with the bucket-creation code and run the command “python3 s3_backup.py”.
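For readers who prefer text to screenshots, here is a minimal sketch of what the bucket-creation part of s3_backup.py might look like. It assumes AWS credentials are already configured (for example through environment variables or the AWS CLI); the bucket name and region are placeholders, and the helper name create_bucket is an assumption. The client is passed in as a parameter so the helper can be tried with a stand-in object before touching a real account.

```python
def create_bucket(s3_client, bucket_name, region):
    """Create bucket_name in region using an existing boto3 S3 client.

    The function name and parameters are illustrative assumptions.
    """
    if region == "us-east-1":
        # us-east-1 is the default location; S3 rejects it as an
        # explicit LocationConstraint, so the configuration is omitted.
        s3_client.create_bucket(Bucket=bucket_name)
    else:
        s3_client.create_bucket(
            Bucket=bucket_name,
            CreateBucketConfiguration={"LocationConstraint": region},
        )


def main():
    # Imported here so create_bucket can be exercised without boto3 installed.
    import boto3

    region = "ap-south-1"  # placeholder region
    bucket_name = "my-backup-bucket-example"  # placeholder; bucket names must be globally unique
    s3 = boto3.client("s3", region_name=region)
    create_bucket(s3, bucket_name, region)
    print(f"Created bucket {bucket_name} in {region}")
```

Calling main() at the bottom of the file runs the creation against your account. If the bucket name is already taken, boto3 raises a ClientError, so picking a unique name matters.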

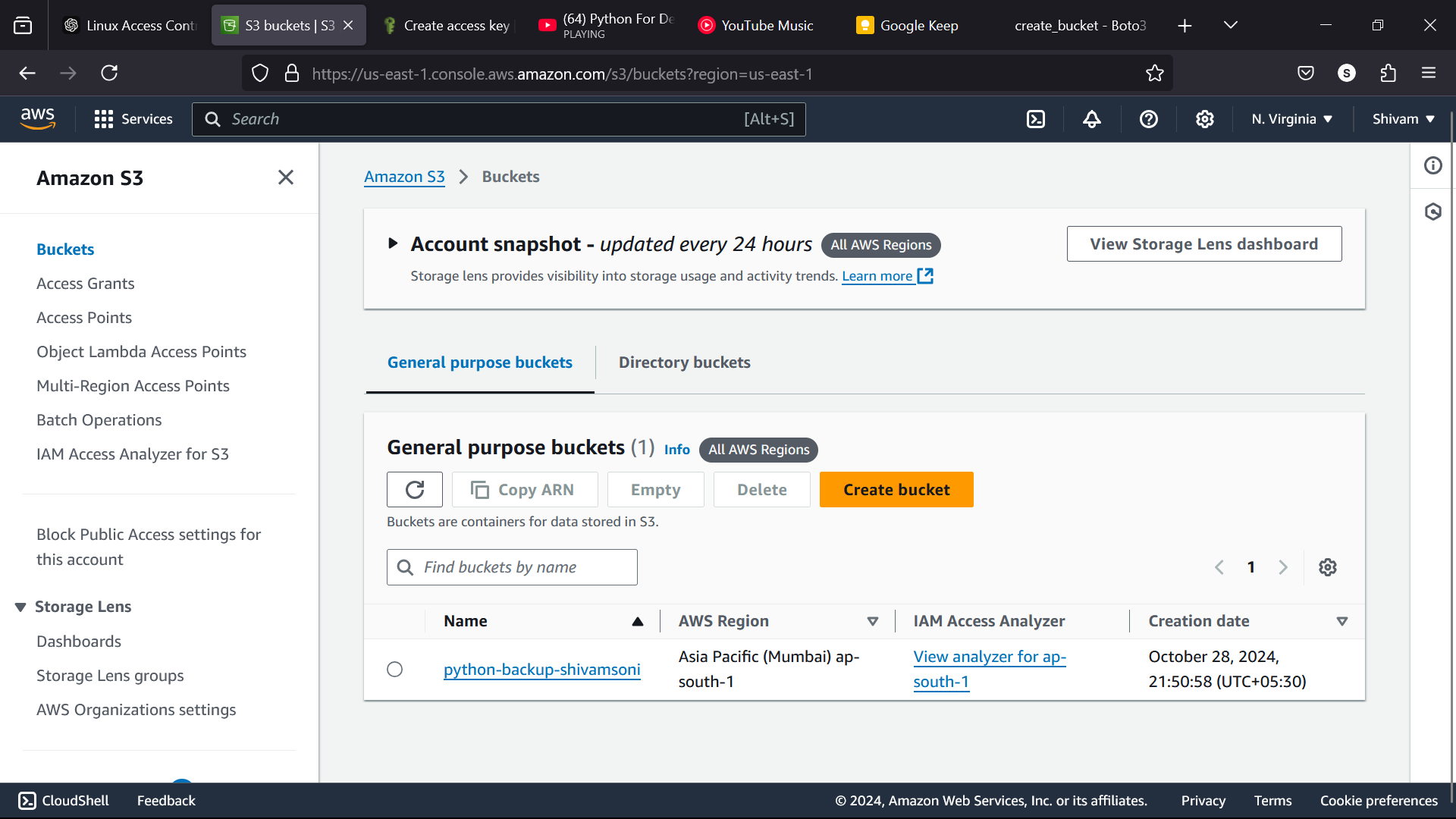

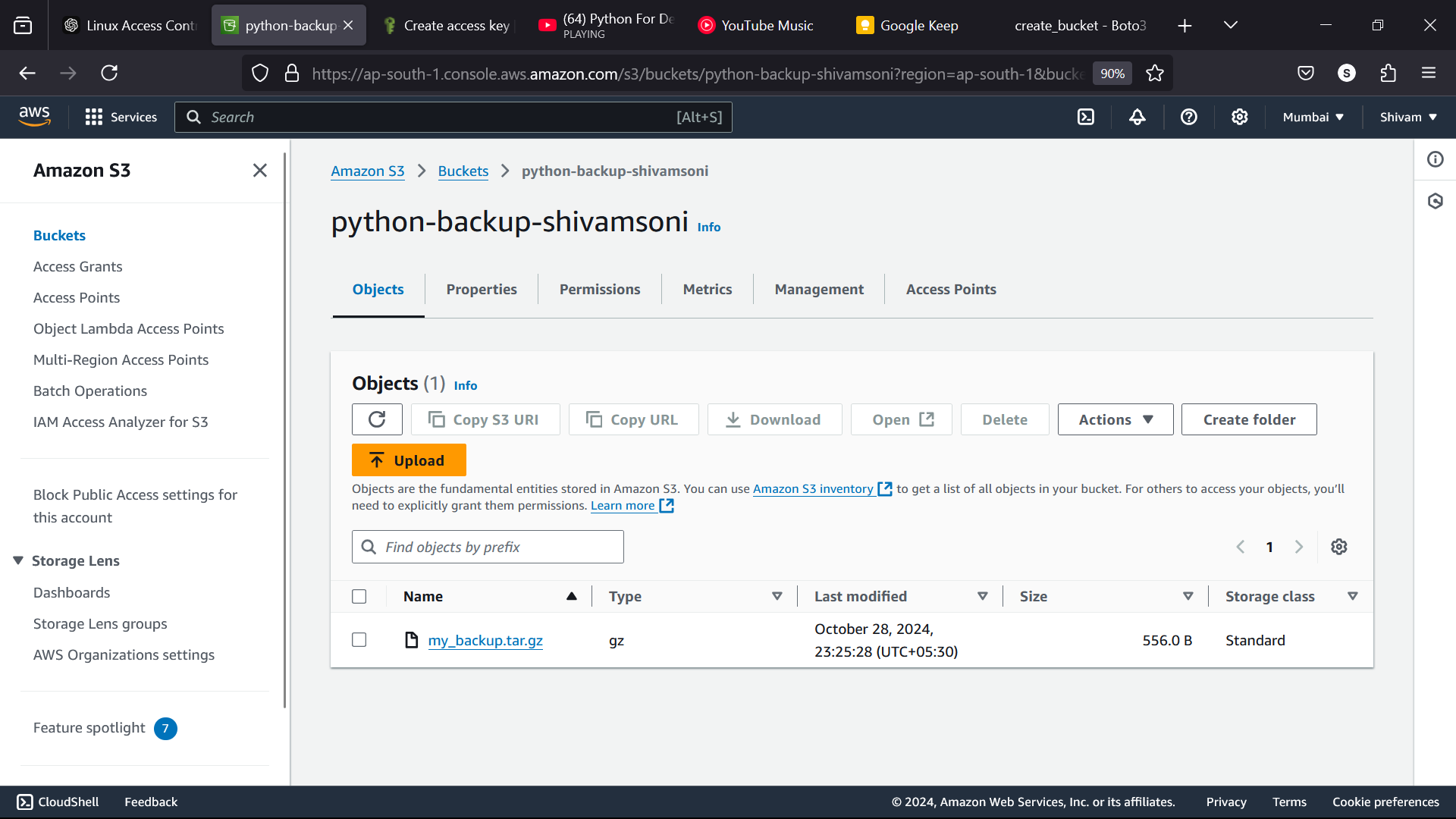

This creates an S3 bucket in your AWS account, as shown below:

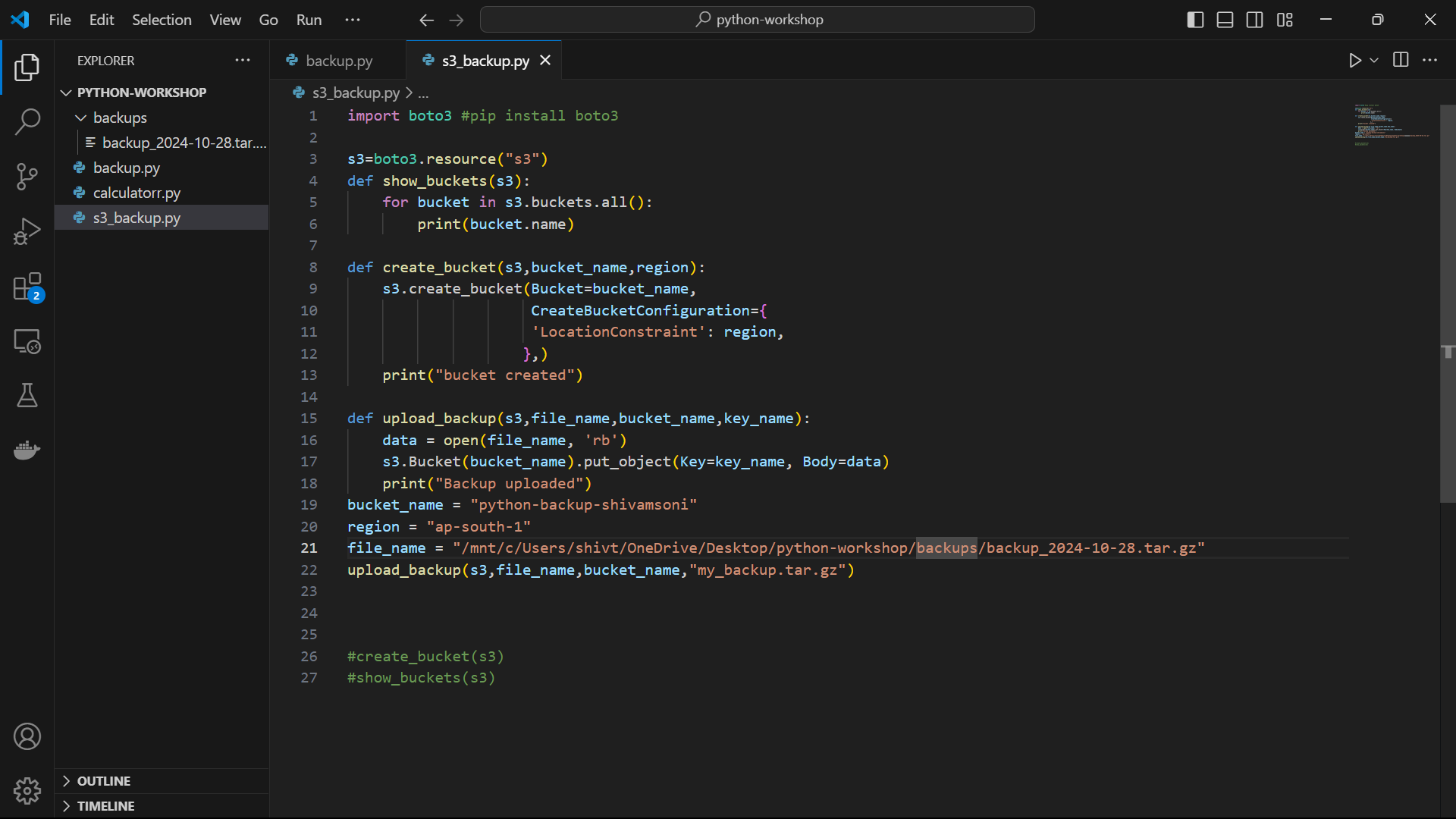

Step 3: Uploading the Backup to Amazon S3

With our backup file ready and our S3 bucket set up, the next step is to upload the .tar.gz file to the S3 bucket. Using boto3, Python makes it easy to securely transfer files to S3. This library not only enables file uploads but also provides options to configure permissions, storage class, and encryption for the uploaded file. By storing backups in Amazon S3, you gain the advantage of redundancy, access control, and ease of retrieval from anywhere with the right credentials. This approach ensures that your data is both protected and accessible, even in disaster recovery scenarios.

Create an upload_backup() function that opens the backup file given by file_name in binary mode and uploads it as an object to the S3 bucket.

Set bucket_name, region, and file_name (the path to the backup file).

Run the command “python3 s3_backup.py”, and the backup .tar.gz file will be uploaded to the S3 bucket.
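The upload step described above could be sketched as follows. The name upload_backup comes from this article, but its exact signature is not shown in the text, so the parameters here (including the optional object_key and extra_args) are assumptions. The sketch uses boto3's upload_fileobj client method; the file path, bucket name, and region in main() are placeholders.

```python
from pathlib import Path


def upload_backup(s3_client, file_name, bucket_name, object_key=None, extra_args=None):
    """Upload the backup at file_name to bucket_name and return the object key used.

    object_key and extra_args are assumed, optional parameters.
    """
    path = Path(file_name)
    # Default to the archive's file name as the S3 object key.
    key = object_key or path.name

    # Open the archive in binary mode and hand the file object to S3.
    with path.open("rb") as backup_file:
        s3_client.upload_fileobj(backup_file, bucket_name, key, ExtraArgs=extra_args)

    return key


def main():
    # Imported here so upload_backup can be exercised without boto3 installed.
    import boto3

    s3 = boto3.client("s3", region_name="ap-south-1")  # placeholder region
    key = upload_backup(
        s3,
        "/path/to/backups/backup_2024-10-28.tar.gz",  # placeholder path
        "my-backup-bucket-example",  # placeholder bucket name
        # Optional: request server-side encryption for the stored object.
        extra_args={"ServerSideEncryption": "AES256"},
    )
    print(f"Uploaded backup as {key}")
```

Passing extra_args is one way to set encryption or a storage class per upload; leaving it out uses the bucket defaults.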

Why Automate This Process?

Automating the entire process – from compressing files to uploading them to S3 – ensures that backups are done consistently and without requiring manual intervention. It saves time, reduces human error, and ensures regular data backups. By implementing this process in Python, you create a lightweight, reusable solution that can be scheduled to run periodically, ensuring you always have an up-to-date backup in case of system failure or accidental data loss.

In Summary

By combining Python’s powerful file handling capabilities with Amazon S3’s secure cloud storage, you can build a simple yet robust backup solution. Here's a quick recap:

1. Compress the directory into a .tar.gz file for efficient storage and easy restoration.
2. Set up an Amazon S3 bucket using Python’s boto3 library, giving you a cloud-based storage option with various management capabilities.
3. Upload the compressed backup file to S3, taking advantage of Amazon’s reliable and secure storage.

Automating backups with Python and Amazon S3 enhances data resilience, providing peace of mind and ensuring that your data is safe, accessible, and easily recoverable.

#Devops #AmazonS3 #Python #Backup

