AI Chatbots: The Perpetual Fountain of Fortune Cookie Insights

Gerard Sans

In the workplace, AI chatbots shine brilliantly. They're the perfect digital assistants - drafting emails, condensing lengthy meetings into digestible summaries, and even churning out code to automate those mind-numbing repetitive tasks. It's a productivity paradise!

The Fortune Teller Syndrome

But step outside the office, and these same chatbots often transform into something more akin to digital fortune tellers. People huddle around their screens, asking about job prospects, relationship outcomes, and even election predictions. Here's the uncomfortable truth: these AI systems, despite their impressive capabilities, aren't oracles. They can't tell you if you'll get that dream job any more than a Magic 8-Ball can.

The Statistical Illusion

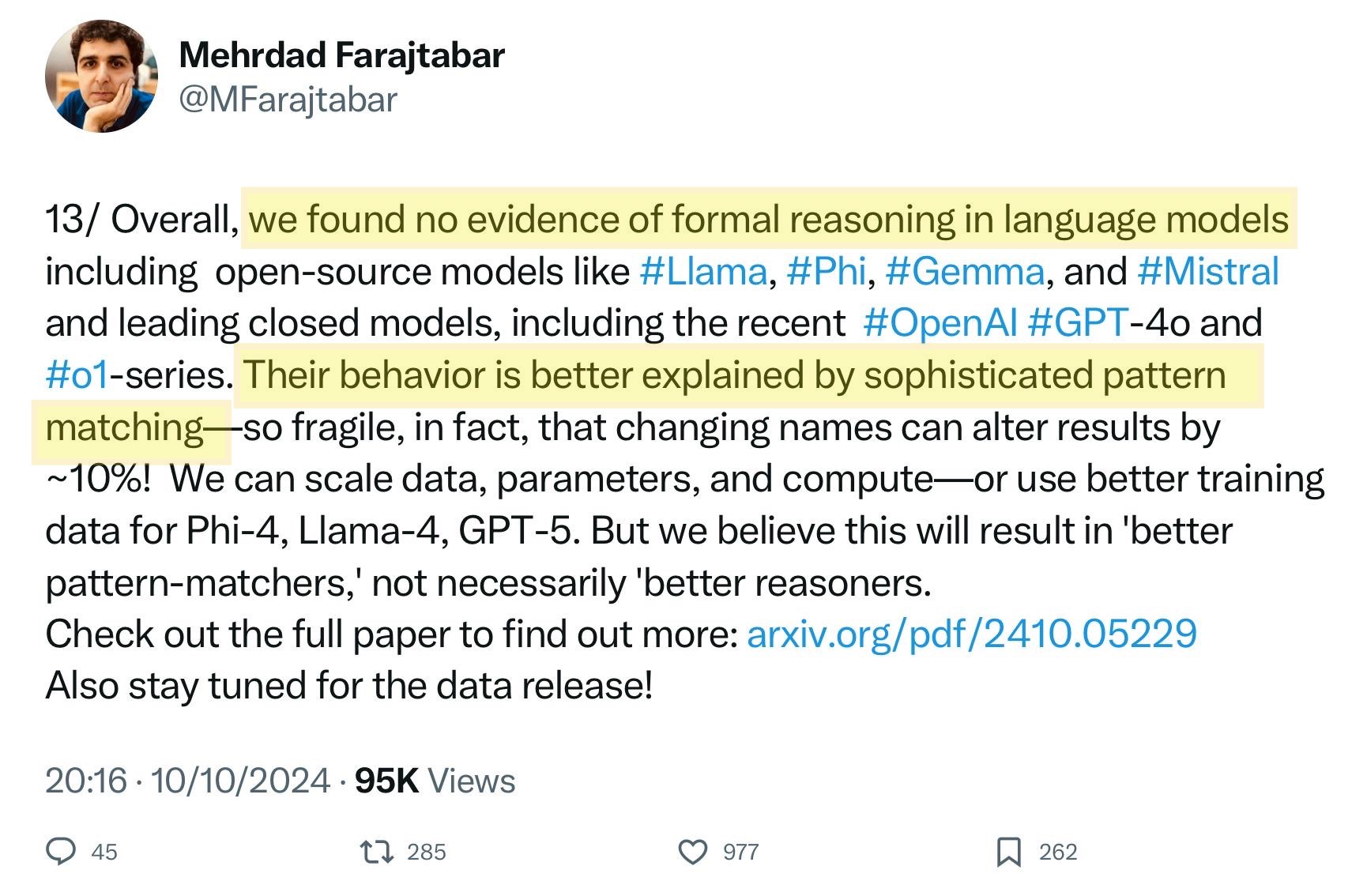

Modern AI isn't the sage wizard many imagine it to be - it's more like a sophisticated pattern-matching machine. When you ask it about fruits, it's not philosophically pondering its preference for apples over oranges. It's simply reflecting statistical patterns in its training data. If apples appear more frequently in its dataset, guess what fruit will dominate its responses?
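To make the fruit example concrete, here is a minimal sketch of frequency-driven output. It is a toy illustration, not how any real chatbot is implemented: the tiny `corpus` and the helper names `next_word_distribution` and `sample_word` are made up for this example, and real models learn far richer patterns from vastly more text. The point is only that when one word dominates the data, it dominates the answers too.

```python
import random
from collections import Counter

# Hypothetical toy "training data" (an assumption for illustration only).
corpus = "apple apple apple orange apple banana orange apple".split()

def next_word_distribution(words):
    """Turn raw word counts into probabilities."""
    counts = Counter(words)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}

def sample_word(distribution, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(distribution)
    weights = [distribution[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

distribution = next_word_distribution(corpus)

# Ask the "model" for its favourite fruit 1000 times.
rng = random.Random(0)  # fixed seed so the run is repeatable
answers = Counter(sample_word(distribution, rng) for _ in range(1000))

print(distribution)            # apple has the largest share of the data
print(answers.most_common())   # so apple also dominates the answers
```

Nothing in this sketch "prefers" apples. The answers simply mirror how often each word appeared in the data it was given.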

A recent paper published by Apple argued that chatbots, including the latest generation of o1 models, do not possess genuine reasoning capabilities.

The True Believers' Club

Yet, no matter how often we explain these limitations, some users remain steadfastly convinced of their chatbot's near-mystical intelligence. "But it wrote this amazing poem!" they'll exclaim, or "It knew this obscure historical fact!" While these achievements are impressive, they're still just sophisticated pattern recognition at work, not genuine understanding or creativity.

Why It Matters

The real danger isn't in using chatbots - it's in misunderstanding their capabilities. Becoming emotionally dependent on AI companionship, treating chatbot outputs as gospel truth, or, worse, delegating critical decisions to these systems can lead down a perilous path.

The High-Stakes Game

Would you flip a coin to make life-altering medical decisions? Of course not. Yet some people effectively do just that by blindly trusting AI outputs in critical situations. Without domain expertise to verify the AI's suggestions, users risk making decisions based on potentially flawed or incomplete information.

Where to Draw the Line

Some areas should remain strictly in human hands - or at least under expert supervision. Healthcare decisions, financial planning, legal advice, mental health support, and anything involving potentially dangerous activities should never be left solely to AI judgment.

The Way Forward

Does this mean we should abandon chatbots? Absolutely not. They're invaluable tools when used appropriately - as assistants rather than authorities, as helpers rather than decision-makers. The key lies in understanding their limitations and using them responsibly, always maintaining a healthy skepticism and relying on human expertise for critical decisions.

Remember: AI chatbots are powerful tools, but they're not all-knowing oracles. Use them wisely, and they'll serve you well. Trust them blindly, and you might as well be consulting a crystal ball.

Written by

Gerard Sans

I help developers succeed in Artificial Intelligence and Web3; Former AWS Amplify Developer Advocate. I am very excited about the future of the Web and JavaScript. Always happy Computer Science Engineer and humble Google Developer Expert. I love sharing my knowledge by speaking, training and writing about cool technologies. I love running communities and meetups such as Web3 London, GraphQL London, GraphQL San Francisco, mentoring students and giving back to the community.