A Brief Guide to Neural Networks: From Structure to Activation Functions

Ezaan Amin

One of the key elements in Artificial Intelligence (AI) is the neural network. But what exactly is a neural network? In my blog “From Turing to ChatGPT 4: A Historical Odyssey of AI,” I delved into the history of AI, including the development of neural networks. For the purposes of this blog, however, I’ll provide a brief overview of how neural networks were invented.

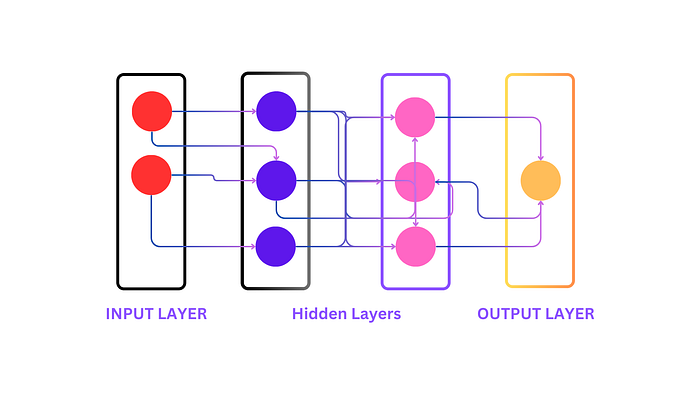

Google defines a neural network as a method in artificial intelligence that teaches computers to process data in a way inspired by the human brain. But how does the human brain work? There are approximately 86 billion neurons inside the human brain, and all of these neurons are interconnected to process and transmit information through electrical and chemical signals. This mechanism inspired the development of artificial neural networks. The diagram below illustrates a very simple neural network.

Basic Structure of a Neural Network

The diagram above shows the basic structure of a neural network. Overall, a neural network consists of three components:

Input Layers

Hidden Layers

Output Layers

Let’s look at each of these layers in detail.

1. Input Layers

The input layer is the first layer in the neural network. As the name suggests, this layer receives the input to the network. A common notation for a node is a1[1], where a is the name given to the layer's values, the 1 after a identifies the first node inside the layer, and [1] denotes the first layer of the network. In some research papers, [1] instead refers to the first hidden layer, because they do not count the input layer as a layer.

Activation Function

Before going on to the Hidden Layer, we need to discuss what Activation Functions are.

An activation function decides whether a neuron should be activated or not. In other words, it uses a simple mathematical operation to decide whether the neuron’s input is important to the prediction.

There are many types of activation function. The most common are:

Linear Activation Function

Sigmoid Activation Function

Tanh Activation Function

ReLU Activation Function

Let’s take a look at each of these activation functions in detail.

1. Linear Activation Function

The linear activation function, also known as "no activation", produces an output that is proportional to the input. In its simplest form (the identity function), the output is equal to the input. There are very few use cases for a linear activation function. In regression problems, it is appropriate for the output layer to predict continuous values, on the condition that the hidden layers use a non-linear activation function such as Tanh or ReLU. Without that non-linearity, a stack of linear layers collapses into a single linear transformation, no matter how many layers it has.

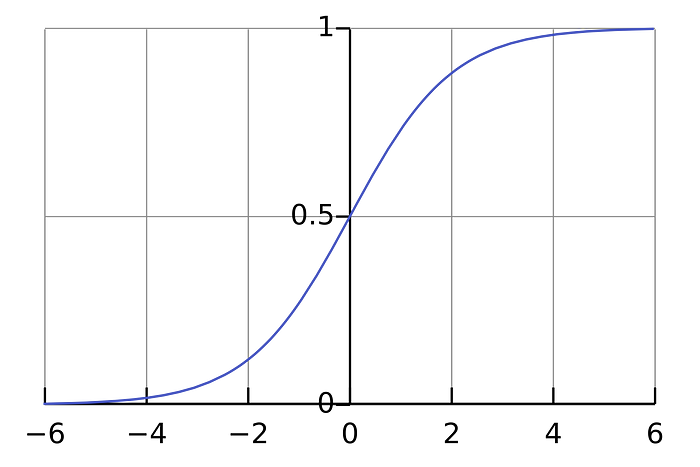

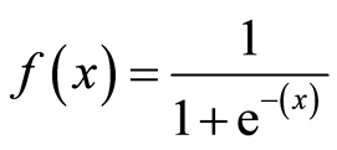
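To see why the hidden layers need a non-linear activation when the output layer is linear, here is a minimal sketch in plain Python. The helper names (`linear`, `dense_1d`) and the weights are made up for illustration; the point is only that two stacked linear layers behave exactly like one.

```python
def linear(x, slope=1.0):
    """Linear activation: the output is proportional to the input."""
    return slope * x


def dense_1d(x, weight, bias, activation):
    """A one-input, one-neuron layer: activation(weight * x + bias)."""
    return activation(weight * x + bias)


# Illustrative weights and biases (assumed values, not from the article).
w1, b1 = 2.0, 1.0
w2, b2 = -3.0, 0.5


def two_linear_layers(x):
    """Hidden layer followed by output layer, both with linear activation."""
    hidden = dense_1d(x, w1, b1, linear)
    return dense_1d(hidden, w2, b2, linear)


# Algebraically, w2 * (w1 * x + b1) + b2 == (w2 * w1) * x + (w2 * b1 + b2).
w_combined = w2 * w1
b_combined = w2 * b1 + b2


def one_linear_layer(x):
    """A single linear layer that computes the same mapping."""
    return dense_1d(x, w_combined, b_combined, linear)


for x in (-2.0, 0.0, 1.5):
    print(x, two_linear_layers(x), one_linear_layer(x))
```

Both functions print the same value for every input, so the extra linear layer adds no modelling power.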

2. Sigmoid Activation Function

The formula for the Sigmoid activation function is sigmoid(x) = 1 / (1 + e^(-x)), which maps any input to a value between 0 and 1.

In practice, the Sigmoid function is not used much in hidden layers, because other activation functions, such as the Tanh function described next, usually work better there. It is mostly used for binary classification problems, where the output is either 1 or 0.

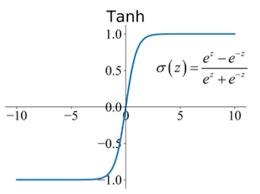
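As a rough sketch, the Sigmoid function can be written in a few lines of Python using the standard definition 1 / (1 + e^(-x)). The `predict_class` helper and its 0.5 threshold are assumptions added here to show how the output can be turned into a 1 or 0 prediction.

```python
import math


def sigmoid(x):
    """Sigmoid activation: squashes any real number into the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use an equivalent form so math.exp never overflows.
    z = math.exp(x)
    return z / (1.0 + z)


def predict_class(x, threshold=0.5):
    """Turn the sigmoid output into a binary label (threshold is an assumption)."""
    return 1 if sigmoid(x) >= threshold else 0


for x in (-4.0, 0.0, 4.0):
    print(x, round(sigmoid(x), 4), predict_class(x))
```

Large negative inputs land close to 0, large positive inputs land close to 1, and an input of 0 gives exactly 0.5.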

3. Tanh Activation Function

Unlike the Sigmoid function, which maps input values to a range between 0 and 1, Tanh maps inputs to a range between -1 and 1. This means that Tanh is zero-centered: its outputs are balanced around zero, which keeps the inputs to the next layer centered and often leads to faster convergence during training. Tanh also has a larger gradient near zero than Sigmoid (a maximum slope of 1 compared with 0.25). Note, however, that Tanh still flattens out for large positive or negative inputs, so it reduces the vanishing gradient problem rather than removing it.

However, it’s important to note that the choice of activation function can depend on the specific characteristics of the problem and the architecture of the neural network. For classification problems, the Sigmoid function is commonly used in the output layer to produce probabilities. For other types of problems, such as regression or complex feature representations, Tanh or other activation functions might be more suitable.

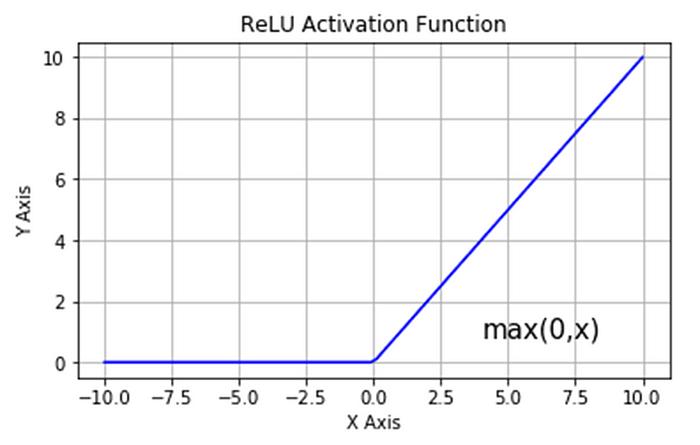
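The difference between the two functions is easy to check numerically. The following is a small sketch, with sample inputs chosen arbitrarily, that compares the average output and the gradient of Tanh and Sigmoid.

```python
import math


def sigmoid(x):
    """Sigmoid activation, range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of sigmoid: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


# Inputs that are symmetric around zero (arbitrary sample values).
inputs = [-3.0, -1.0, 0.0, 1.0, 3.0]

tanh_outputs = [math.tanh(x) for x in inputs]
sigmoid_outputs = [sigmoid(x) for x in inputs]

mean_tanh = sum(tanh_outputs) / len(tanh_outputs)
mean_sigmoid = sum(sigmoid_outputs) / len(sigmoid_outputs)

print("mean tanh output:   ", mean_tanh)      # centered around 0
print("mean sigmoid output:", mean_sigmoid)   # centered around 0.5
print("gradients at 0:", tanh_grad(0.0), sigmoid_grad(0.0))
print("gradients at 5:", tanh_grad(5.0), sigmoid_grad(5.0))
```

For symmetric inputs the Tanh outputs average out to zero while the Sigmoid outputs average out to 0.5. Both gradients shrink toward zero at an input of 5, which is why neither function fully avoids vanishing gradients.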

4. ReLU Activation Function

The ReLU (Rectified Linear Unit) activation function is popular in neural networks because it helps mitigate the vanishing gradient problem and is computationally efficient. It outputs the input value directly for positive inputs and zero for negative inputs, promoting faster convergence and sparse activations.
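A minimal sketch of ReLU and its gradient in Python is shown here. The sample pre-activation values are invented for illustration, and the gradient at exactly 0 is taken to be 0, which is a common convention rather than the only choice.

```python
def relu(x):
    """ReLU activation: pass positive inputs through, clamp the rest to zero."""
    return max(0.0, x)


def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise (0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


# Made-up pre-activation values for five neurons.
pre_activations = [-2.0, -0.5, 0.0, 0.5, 2.0]
activations = [relu(x) for x in pre_activations]

# Fraction of neurons whose output is exactly zero ("sparse activations").
sparsity = activations.count(0.0) / len(activations)

print(activations)
print("fraction of inactive neurons:", sparsity)
```

The gradient stays at 1 for any positive input, however large, which is the reason ReLU helps with vanishing gradients. The neurons that output zero are what "sparse activations" refers to.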

Hidden Layers

The hidden layers in a neural network are where the magic of learning happens. These layers lie between the input and output layers and are responsible for transforming the input data into something the network can use to make predictions. Each neuron in the hidden layers processes input from the previous layer, applies an activation function, and passes the result to the next layer. The complexity and number of hidden layers can significantly impact the network’s ability to model intricate patterns and relationships in the data.
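What each hidden neuron does can be sketched as a weighted sum followed by an activation function. The example below is an illustration only: the `dense_layer` helper, the weights, and the biases are all made-up values, and ReLU is picked as the activation simply as one common choice.

```python
def relu(z):
    """ReLU activation, used here as an example hidden-layer activation."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """Compute one layer's outputs.

    weights[j] holds the weights of neuron j (one weight per input),
    and biases[j] is that neuron's bias.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the previous layer's outputs, plus the bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


# Two input values feeding a hidden layer with three neurons (assumed numbers).
inputs = [1.0, 2.0]
hidden_weights = [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]]
hidden_biases = [0.0, -1.0, 0.5]

hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
print(hidden)
```

The list `hidden` is what would be passed on as the input to the next layer.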

Output Layers

The output layer is the final layer in a neural network, where the actual predictions or classifications are made. Its structure and activation function depend on the specific task at hand — whether it’s regression, classification, or another type of prediction. For classification tasks, the output layer often uses the Softmax or Sigmoid activation functions to produce probabilities for each class, while for regression tasks, a linear activation function might be used to predict continuous values.
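For a multi-class output layer, Softmax turns the raw scores of the final layer into probabilities that sum to 1. Here is a short sketch; the scores are invented, and subtracting the maximum score before exponentiating is a standard trick to avoid overflow, not something specific to this article.

```python
import math


def softmax(scores):
    """Convert a list of raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up raw scores for three classes.
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)

# The predicted class is the one with the highest probability.
predicted_class = probs.index(max(probs))

print([round(p, 3) for p in probs])
print("predicted class:", predicted_class)
```

For a regression task, the output layer would skip this step and return the weighted sum directly, which is the linear activation described earlier.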

Conclusion:

Neural networks are powerful tools in AI, inspired by the human brain’s intricate network of neurons. Understanding the roles of input, hidden, and output layers, along with activation functions, is crucial for designing effective neural networks. As these networks evolve and become more complex, they continue to push the boundaries of what AI can achieve, making it an exciting field to explore.
