A Brief Guide to Neural Networks: From Structure to Activation Functions

Ezaan Amin

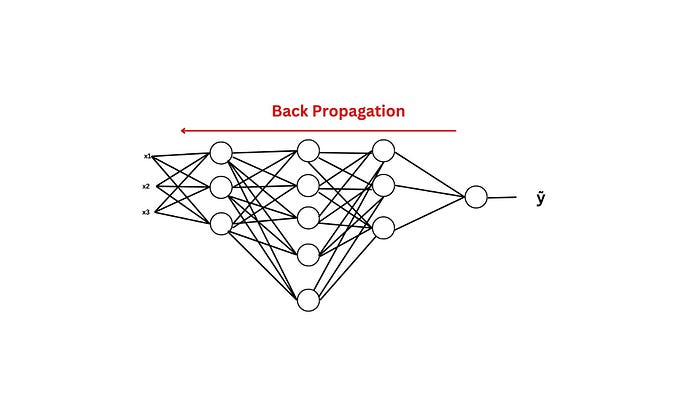

Neural networks are essential for many modern AI applications, from image recognition to natural language processing. Two fundamental processes that enable these networks to learn from data are forward propagation and backpropagation. Let’s explore these processes in detail, starting with the structure of a neural network.

Neural Network Structure: Layers and Notations

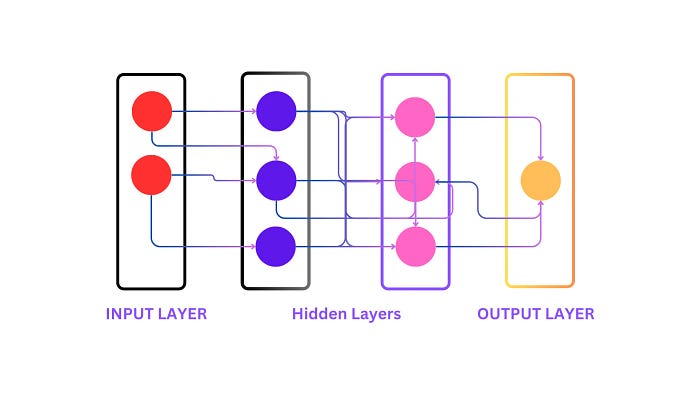

A neural network typically consists of three types of layers:

- Input Layer: Receives the input data, where each node corresponds to a feature.

- Hidden Layer: Processes inputs from the input layer. The term "hidden" signifies that the values in these layers are not directly observed in the inputs or the outputs.

- Output Layer: Provides the final predictions based on the hidden layers' computations.

Nodes in the input layer are denoted as $a^{[0]}$, and nodes in the hidden layer are denoted as $a_i^{[1]}$, where the bracketed superscript is the layer index and $i$ refers to the specific node.

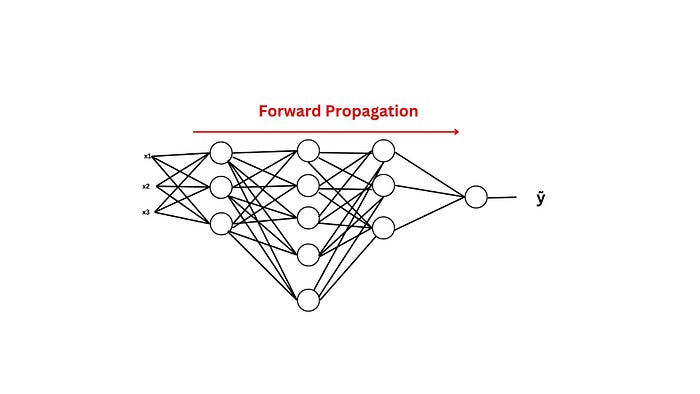

Forward Propagation: Data Flow

Forward propagation involves moving data from the input layer through the hidden layers to the output layer:

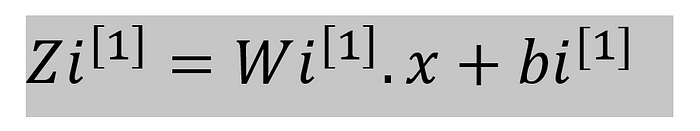

- Input to Hidden Layer: The output from the input layer is passed to the hidden layer nodes. Each hidden node computes the weighted sum of its inputs and adds a bias term:

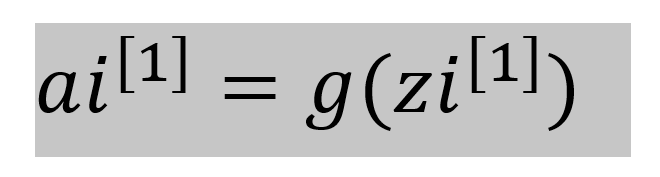

- Activation Function: The weighted sum $z_i^{[1]}$ is passed through an activation function $g(z)$ to introduce non-linearity into the model: $a_i^{[1]} = g(z_i^{[1]})$.

Common activation functions include Sigmoid, Tanh, ReLU (Rectified Linear Unit), and Leaky ReLU.

- Hidden Layer to Output Layer: The same two steps (weighted sum plus bias, then activation) are repeated for each subsequent hidden layer until the output layer is reached, where the final predictions are made.
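The steps above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: one hidden layer with a tanh activation, a single sigmoid output node, and inputs stored as column vectors. The names `forward`, `W1`, `b1`, `W2`, and `b2` are hypothetical and chosen for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, W1, b1, W2, b2):
    """Forward pass: one tanh hidden layer, one sigmoid output (assumed setup)."""
    z1 = W1 @ x + b1    # weighted sum plus bias for every hidden node
    a1 = np.tanh(z1)    # hidden activations a^[1] = g(z^[1])
    z2 = W2 @ a1 + b2   # the same step repeated for the output layer
    a2 = sigmoid(z2)    # final prediction, a value in (0, 1)
    return z1, a1, z2, a2

rng = np.random.default_rng(0)
x = np.array([[0.5], [-1.2], [3.0]])    # 3 input features as a column vector
W1 = rng.normal(size=(4, 3)) * 0.01     # 4 hidden nodes, 3 inputs each
b1 = np.zeros((4, 1))
W2 = rng.normal(size=(1, 4)) * 0.01     # 1 output node, 4 hidden inputs
b2 = np.zeros((1, 1))

z1, a1, z2, a2 = forward(x, W1, b1, W2, b2)
print(a1.shape, a2.shape)
```

Each row of `W1` holds the weights of one hidden node, so `W1 @ x` computes all the weighted sums of the layer in one product.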

Backpropagation: Error Correction

Backpropagation enables the network to learn by adjusting weights and biases based on prediction errors:

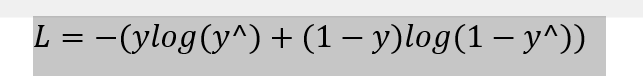

- Calculate the Loss: Compute the loss to measure how far the predictions are from the true labels. For example, in logistic regression, the loss function might be:

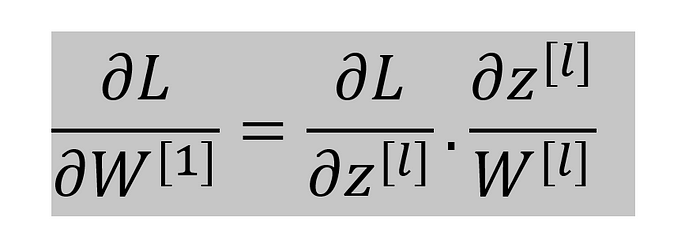

- Compute Gradients: Calculate gradients of the loss function with respect to weights and biases to determine how to adjust them:

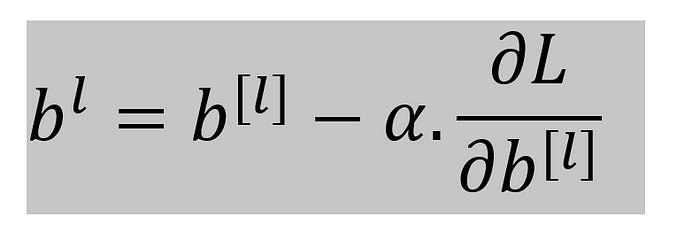

- Update Weights and Biases: Adjust weights and biases by stepping against their gradients, scaled by a learning rate α:

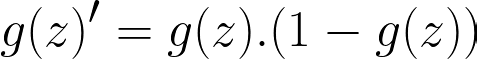

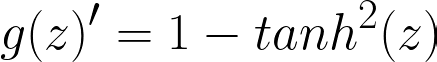
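As a rough sketch, the three steps (loss, gradients, update) might look like this in NumPy. The setup is an assumption made for illustration, not necessarily the one in the figures: a tanh hidden layer, a sigmoid output, the binary cross-entropy loss used in logistic regression, and one training example per column of `X`. The function name `train_step` and the toy data are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_step(X, Y, params, alpha=0.1):
    """One forward pass, backward pass, and gradient descent update.

    X has shape (n_features, m); Y has shape (1, m).
    """
    W1, b1, W2, b2 = params
    m = X.shape[1]

    # Forward pass (assumed: tanh hidden layer, sigmoid output)
    A1 = np.tanh(W1 @ X + b1)
    A2 = sigmoid(W2 @ A1 + b2)

    # Binary cross-entropy loss, averaged over the m examples
    loss = -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))

    # Backward pass: chain rule, starting from the output layer
    dZ2 = A2 - Y
    dW2 = dZ2 @ A1.T / m
    db2 = np.sum(dZ2, axis=1, keepdims=True) / m
    dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)   # tanh'(z) = 1 - tanh(z)^2
    dW1 = dZ1 @ X.T / m
    db1 = np.sum(dZ1, axis=1, keepdims=True) / m

    # Update: move each parameter against its gradient, scaled by alpha
    new_params = (W1 - alpha * dW1, b1 - alpha * db1,
                  W2 - alpha * dW2, b2 - alpha * db2)
    return new_params, loss

# Toy data (hypothetical): learn logical OR of two binary inputs
rng = np.random.default_rng(0)
X = np.array([[0.0, 0.0, 1.0, 1.0],
              [0.0, 1.0, 0.0, 1.0]])
Y = np.array([[0.0, 1.0, 1.0, 1.0]])
params = (rng.normal(size=(3, 2)) * 0.1, np.zeros((3, 1)),
          rng.normal(size=(1, 3)) * 0.1, np.zeros((1, 1)))

losses = []
for _ in range(200):
    params, loss = train_step(X, Y, params, alpha=0.5)
    losses.append(loss)
print(losses[0], losses[-1])
```

Repeating the step should drive the loss down on this toy problem; the learning rate of 0.5 is an arbitrary choice for the example, and real tasks usually need tuning.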

Activation Functions and Derivatives

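Activation functions determine the non-linearity a network can model, and their derivatives are what backpropagation uses to compute gradients:

- Sigmoid Function: Maps input to a range between 0 and 1: $\sigma(z) = \frac{1}{1 + e^{-z}}$, with derivative $\sigma'(z) = \sigma(z)\,(1 - \sigma(z))$.

- Tanh Function: Maps input to a range between -1 and 1: $\tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$, with derivative $1 - \tanh^2(z)$.

- ReLU and Leaky ReLU: ReLU outputs zero for negative inputs and the input itself for positive values, $g(z) = \max(0, z)$. Leaky ReLU allows a small gradient for negative inputs, for example $g(z) = \max(0.01z, z)$.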
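The functions above and their derivatives are short enough to write out directly. The following is a minimal sketch in NumPy; the function names and the leaky slope of 0.01 are assumptions for this example, and the derivative of ReLU at exactly zero (where it is undefined) is set to 0 by convention.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_grad(z):
    return 1.0 - np.tanh(z) ** 2

def relu(z):
    return np.maximum(0.0, z)

def relu_grad(z):
    # Undefined at z = 0; this sketch returns 0 there
    return np.where(z > 0, 1.0, 0.0)

def leaky_relu(z, slope=0.01):
    return np.where(z > 0, z, slope * z)

def leaky_relu_grad(z, slope=0.01):
    return np.where(z > 0, 1.0, slope)

z = np.array([-2.0, 0.0, 3.0])
print(sigmoid(z))
print(np.tanh(z))
print(relu(z))
print(leaky_relu(z))
```

All of these operate element-wise, so the same functions work on a single number, a vector, or a whole matrix of weighted sums.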

Vectorization: Efficient Computation

Vectorization replaces explicit loops over nodes and training examples with matrix operations:

- Weight Matrix Multiplication: Multiply the input matrix X by the weight matrix W to compute the weighted sums for all nodes and all examples at once.

- Activation Functions: Apply activation functions element-wise to the resulting matrices.
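To make the idea concrete, the sketch below computes the same weighted sums twice: once with explicit loops and once with a single matrix product. The layout is an assumption for this example (one training example per column of `X`, one hidden node per row of `W`), and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n_x, n_h = 5, 3, 4              # examples, input features, hidden nodes
X = rng.normal(size=(n_x, m))      # one column per training example
W = rng.normal(size=(n_h, n_x))    # one row per hidden node
b = np.zeros((n_h, 1))

# Loop version: one node and one example at a time
Z_loop = np.zeros((n_h, m))
for i in range(n_h):
    for j in range(m):
        Z_loop[i, j] = np.dot(W[i], X[:, j]) + b[i, 0]

# Vectorized version: a single matrix product; b broadcasts across columns
Z_vec = W @ X + b

# Activation (ReLU here) applied element-wise to the whole matrix
A = np.maximum(0.0, Z_vec)

print(np.allclose(Z_loop, Z_vec))  # True
```

Both versions give the same result, but the vectorized form hands the work to optimized linear algebra routines instead of running a Python loop per entry.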

Conclusion

Forward propagation and backpropagation are essential for neural network training. Forward propagation moves data through the network to produce predictions, while backpropagation adjusts weights and biases based on the resulting errors. Understanding these processes is crucial for working with neural networks and applying them to various AI tasks.

