How to Deploy a Three-Tier Application on GKE: A Step-by-Step Guide

Amit Maurya

Hello everyone, today we will deploy a three-tier application on GKE using Jenkins and explore the concept of three-tier architecture. In this article, you will learn how to create a CI/CD pipeline (Jenkinsfile) and deploy it to GKE through Jenkins.

Understanding Three-Tier Architecture in Kubernetes

Alright! We'll deploy it using Jenkins on GKE, but first, what exactly is a three-tier application? Let's dive into that.

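A three-tier application is a software architecture pattern that separates an application into a Presentation Layer, an Application Layer, and a Data Layer. This separation improves maintainability, scalability, and flexibility, because each tier can be developed, deployed, and scaled on its own.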

1) Presentation Layer: When we open a web browser and visit a website, we interact with an interface that takes input from the client side. Simply put, the frontend, or GUI (Graphical User Interface), is the presentation layer.

2) Application Layer: We have heard the term business logic in the tech industry a lot. The application layer contains the logic part, i.e., the backend. When we give any input on the frontend, this layer processes some logic against that input and provides the result.

3) Data Layer: As the name suggests, this layer holds the application's information, which is stored in a database. The Application Layer reads and writes this data on behalf of the Presentation Layer.

Prerequisites

1) GCP (Google Cloud Platform) Account (https://shorturl.at/B6eBv)

2) Docker

3) Jenkins

4) Kubernetes (GKE Cluster)

Setting Up Your Google Kubernetes Engine (GKE) Environment

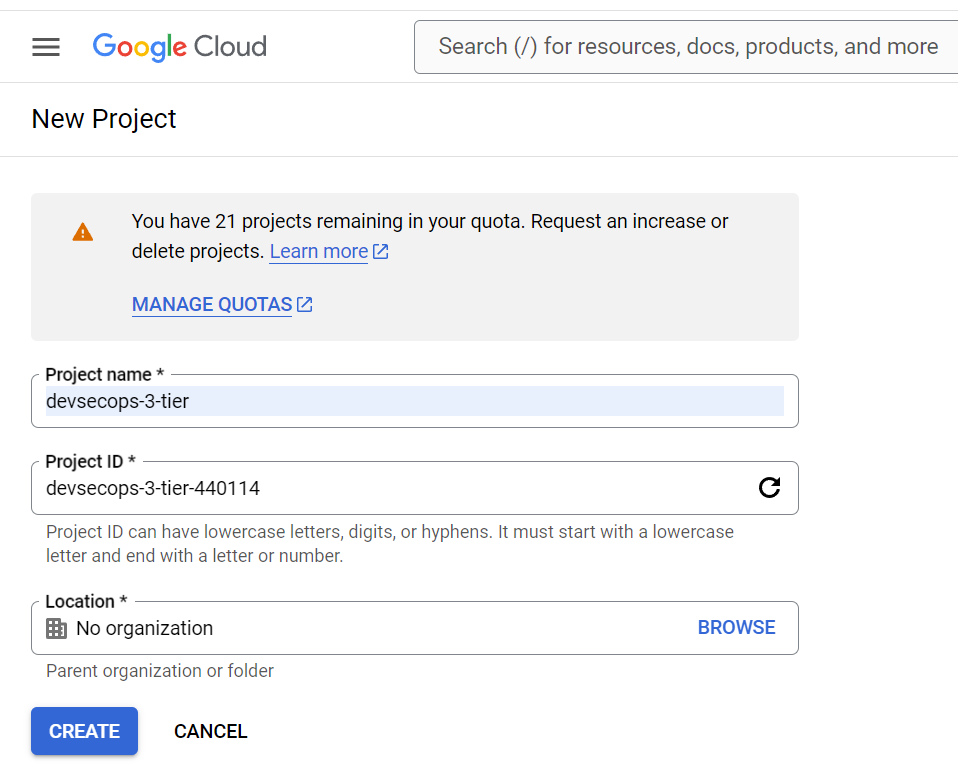

Create a GCP Project: Set up a new GCP project and enable billing.

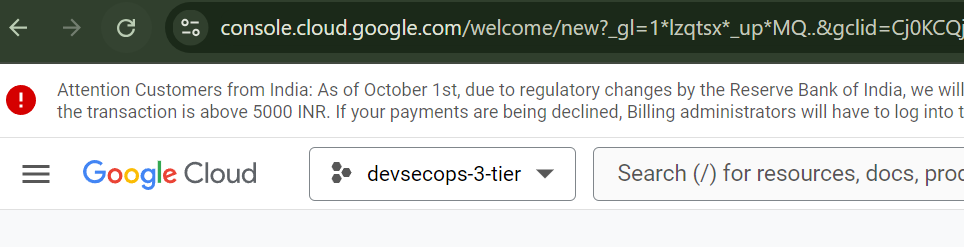

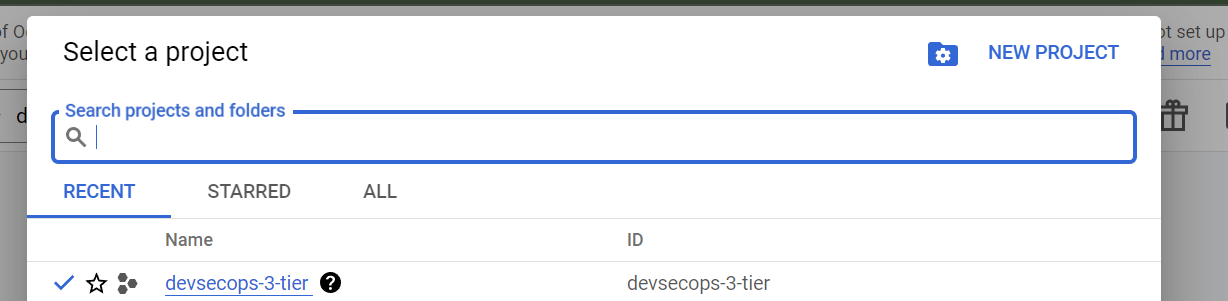

1) Click the project selector at the top of the console (it shows “devsecops-3-tier” in my case) to see the projects created in your account.

2) In the dialog box that appears, click “New Project” and name it “devsecops-3-tier”. As you can see, I have already created it.

3) Go to Billing and open Budgets & alerts, then configure email alerts at the 50%, 75%, and 90% thresholds. This is a recommended practice for avoiding surprise charges.

Enable Required APIs:

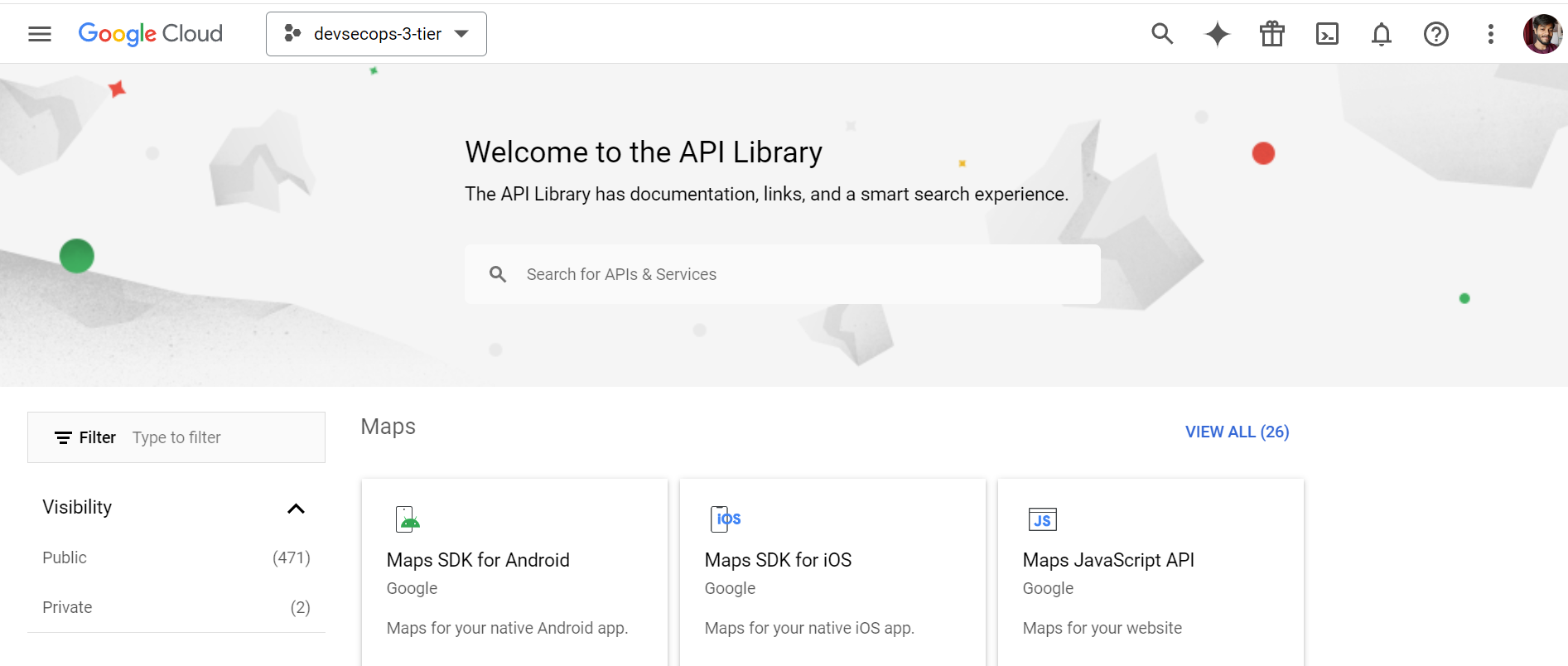

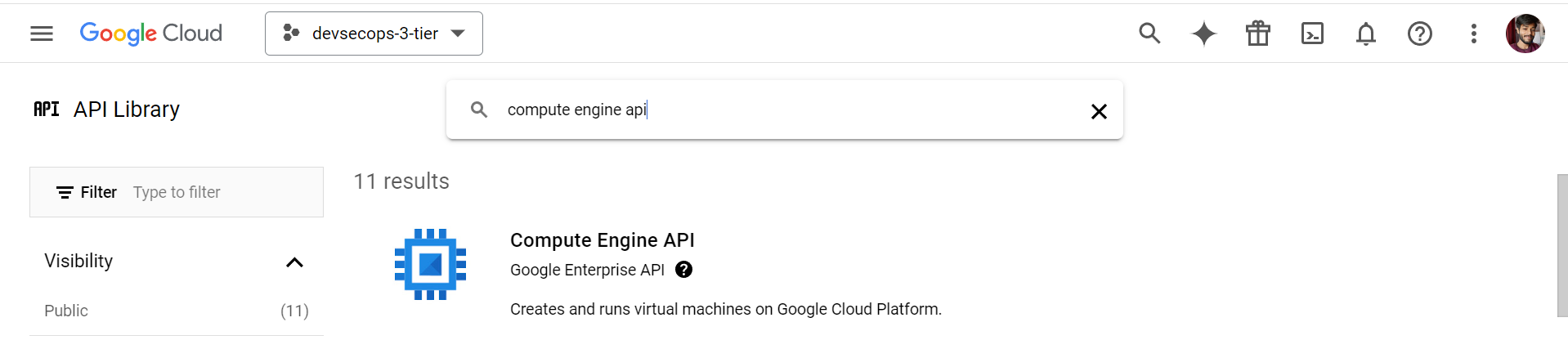

Now enable Compute Engine, Container File System, Kubernetes Engine, and Cloud Logging and Monitoring APIs.

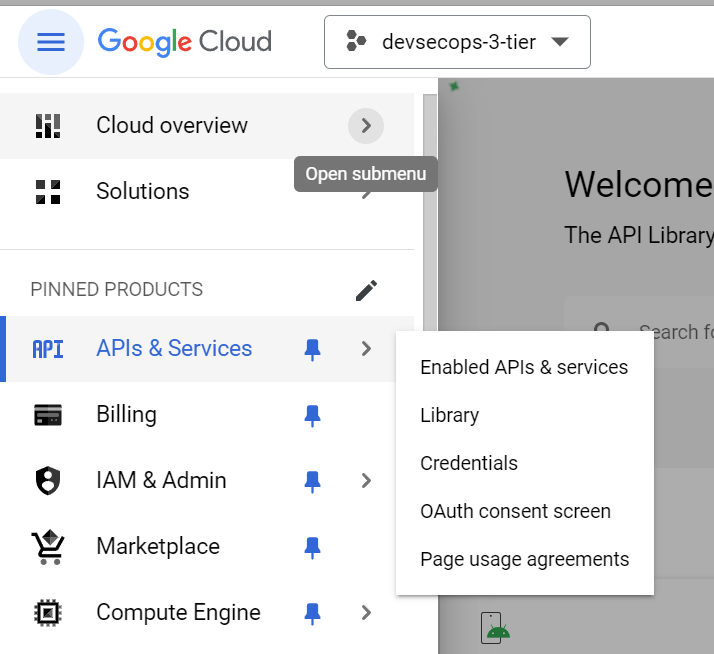

To enable the APIs, open the Navigation Menu (the three lines at the top left), select APIs & Services, and then go to Library.

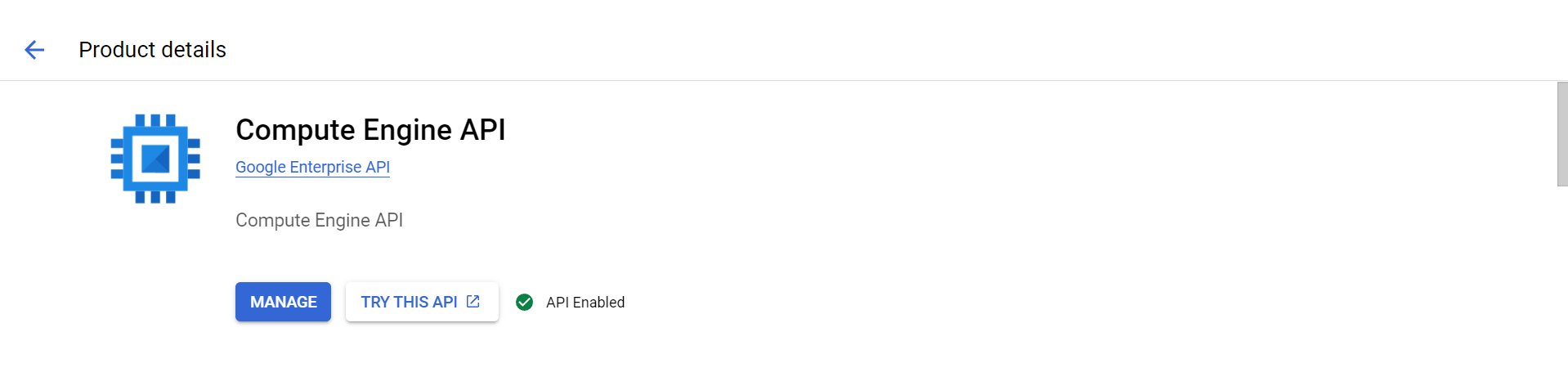

In GCP, we need to enable a service's API before we can use that service. For example, for the Compute Engine API, click on the API and enable it. I had already enabled the Compute Engine API in the project “devsecops-3-tier”, so it shows API Enabled.
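The same APIs can also be enabled from a terminal with the gcloud CLI. The following is a minimal sketch, not the method used in the screenshots. The PROJECT_ID and DRY_RUN variables and the enable_apis function are illustrative names, and the script only prints the command unless DRY_RUN is set to 0. The Container File System API is left to the console here because the article does not show its service name.

```shell
#!/bin/sh
# Sketch: enable the required APIs with gcloud instead of the console.
# PROJECT_ID, DRY_RUN and enable_apis are illustrative names (assumptions).
PROJECT_ID="${PROJECT_ID:-devsecops-3-tier}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the command, 0 = actually run it

# Service names for Compute Engine, Kubernetes Engine,
# Cloud Logging and Cloud Monitoring.
APIS="compute.googleapis.com container.googleapis.com logging.googleapis.com monitoring.googleapis.com"

enable_apis() {
  # $APIS is intentionally unquoted so each service is its own argument.
  if [ "$DRY_RUN" = "1" ]; then
    echo gcloud services enable $APIS --project "$PROJECT_ID"
  else
    gcloud services enable $APIS --project "$PROJECT_ID"
  fi
}

enable_apis
```

Run it with DRY_RUN=0 once the printed command looks right for your project.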

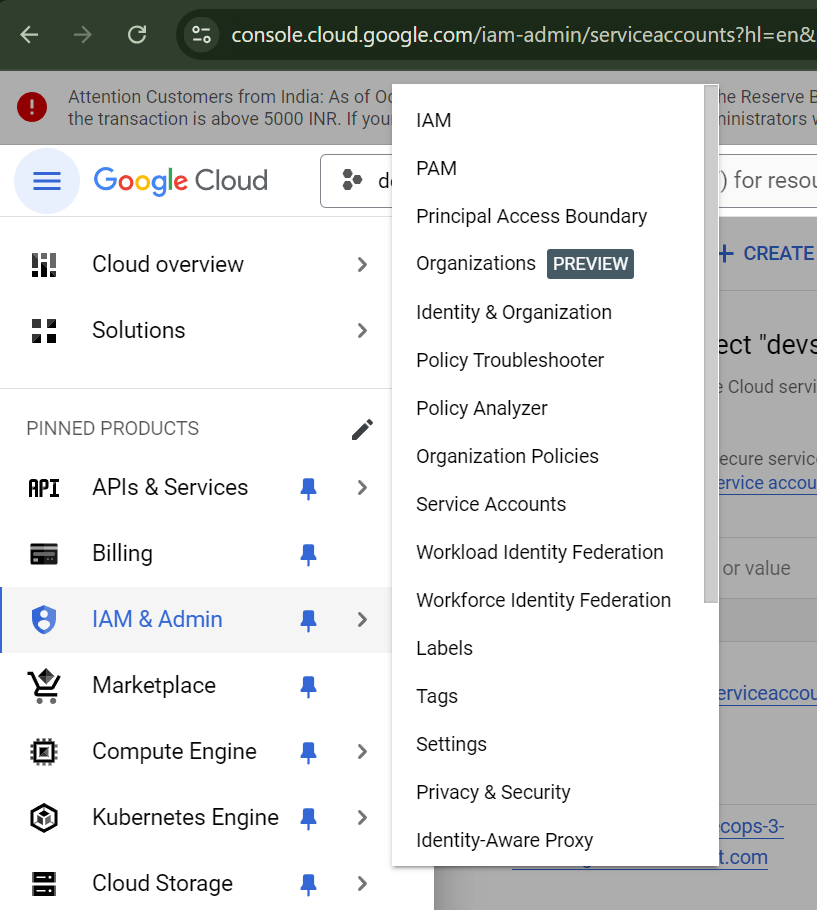

Setup IAM Roles:

As in AWS, GCP uses IAM to grant least-privilege access. We assign roles (e.g., Viewer, Editor) to users and service accounts to ensure proper access control for our team and services.

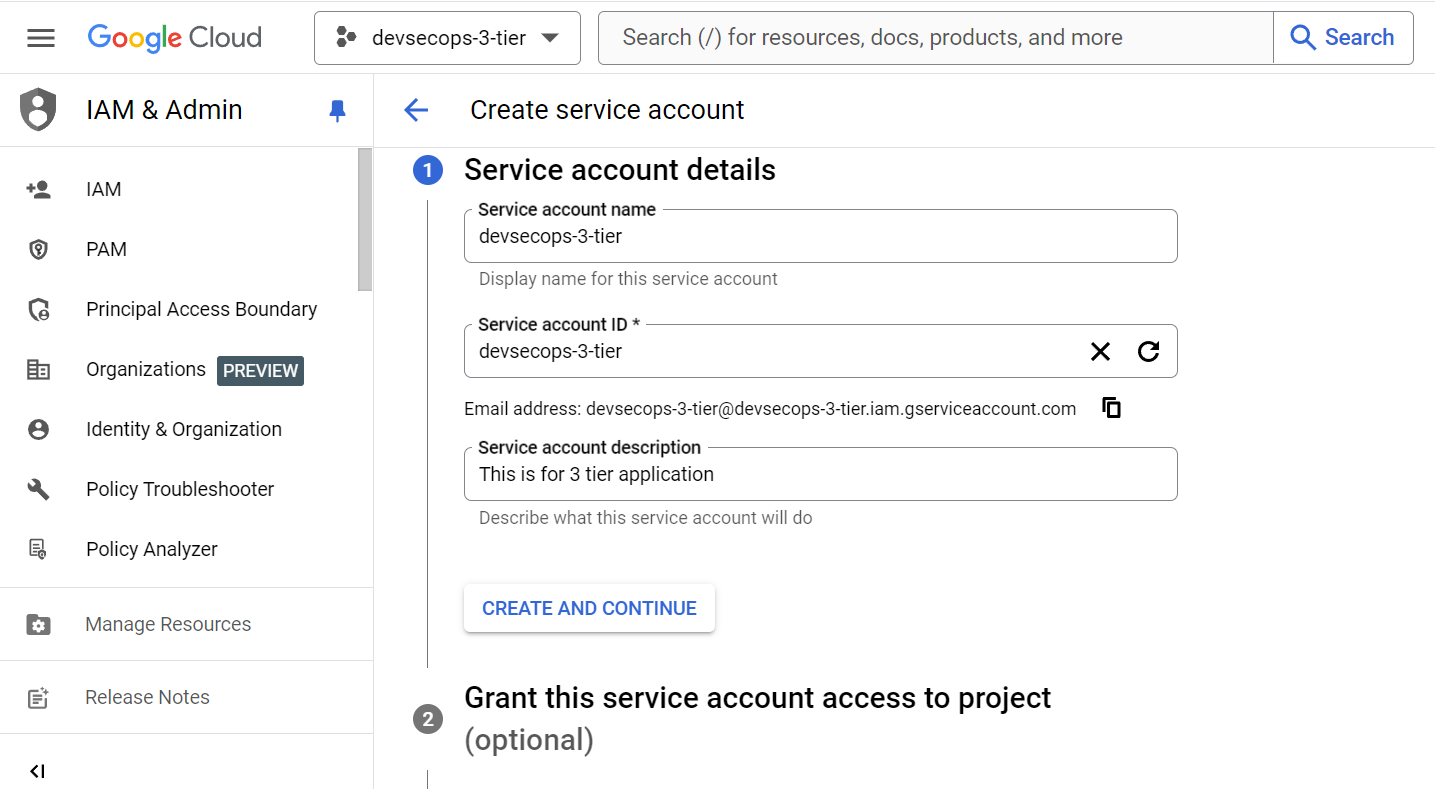

1) Go to Service Accounts in the IAM & Admin section.

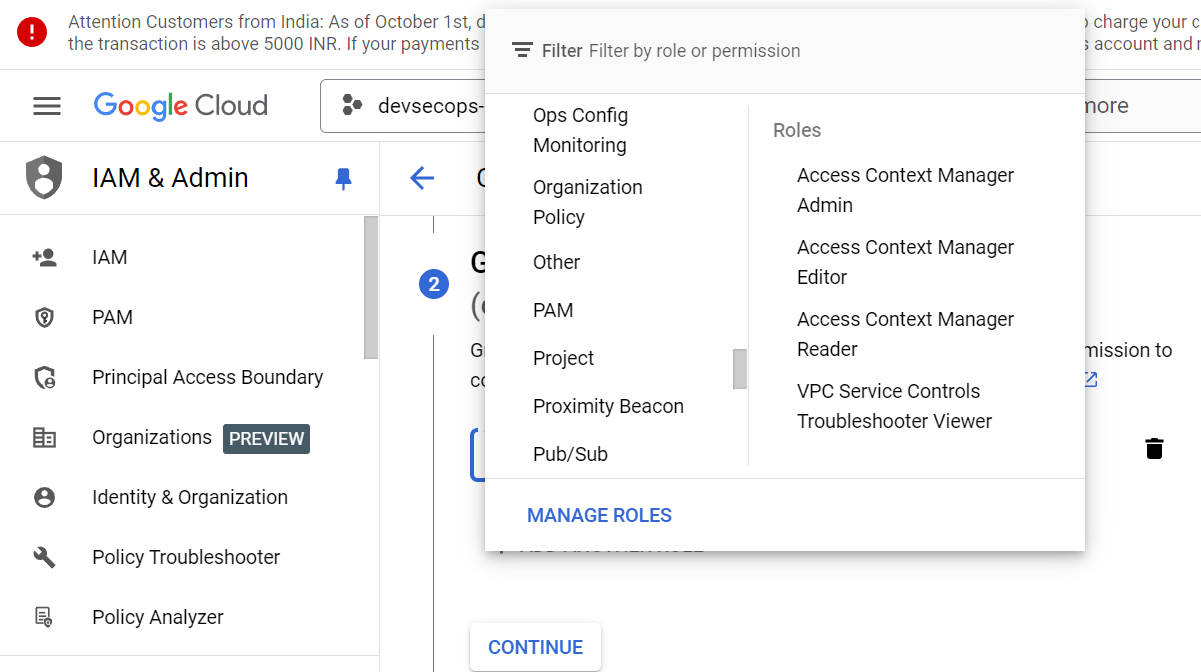

2) Now create the Service Account with name “devsecops-3-tier“ and grant the roles of Project Viewer, Admin, and Kubernetes Engine Service Agent.
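For reference, the service account step could look roughly like this with the gcloud CLI. This is a sketch under assumptions: SA_NAME, DRY_RUN, run, and sa_email are illustrative names, and only the Viewer and Kubernetes Engine Service Agent roles are bound, because the article does not say which “Admin” role is meant. By default the script only prints the commands.

```shell
#!/bin/sh
# Sketch: create the service account and bind roles from the CLI.
# SA_NAME, DRY_RUN, run and sa_email are illustrative names (assumptions).
PROJECT_ID="${PROJECT_ID:-devsecops-3-tier}"
SA_NAME="${SA_NAME:-devsecops-3-tier}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = run them

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$@"; else "$@"; fi
}

# Service account e-mail addresses follow NAME@PROJECT_ID.iam.gserviceaccount.com
sa_email() {
  printf '%s@%s.iam.gserviceaccount.com\n' "$SA_NAME" "$PROJECT_ID"
}

run gcloud iam service-accounts create "$SA_NAME" \
  --project "$PROJECT_ID" --display-name "$SA_NAME"

# roles/viewer = Project Viewer
# roles/container.serviceAgent = Kubernetes Engine Service Agent
for role in roles/viewer roles/container.serviceAgent; do
  run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
    --member "serviceAccount:$(sa_email)" --role "$role"
done
```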

Jenkins Setup

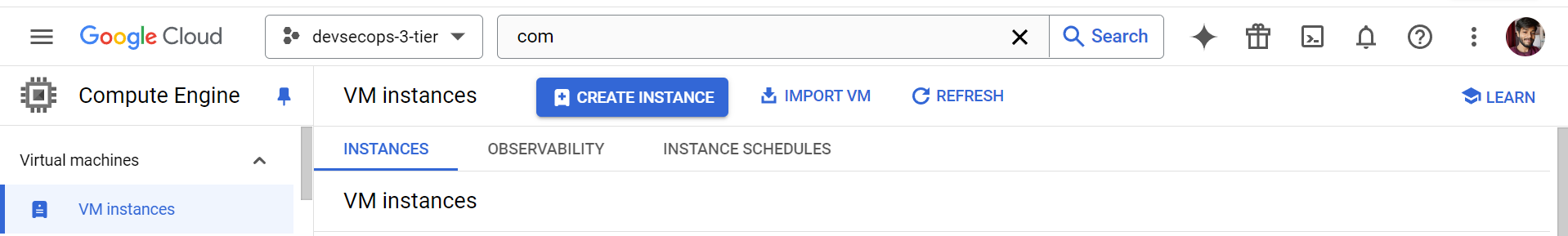

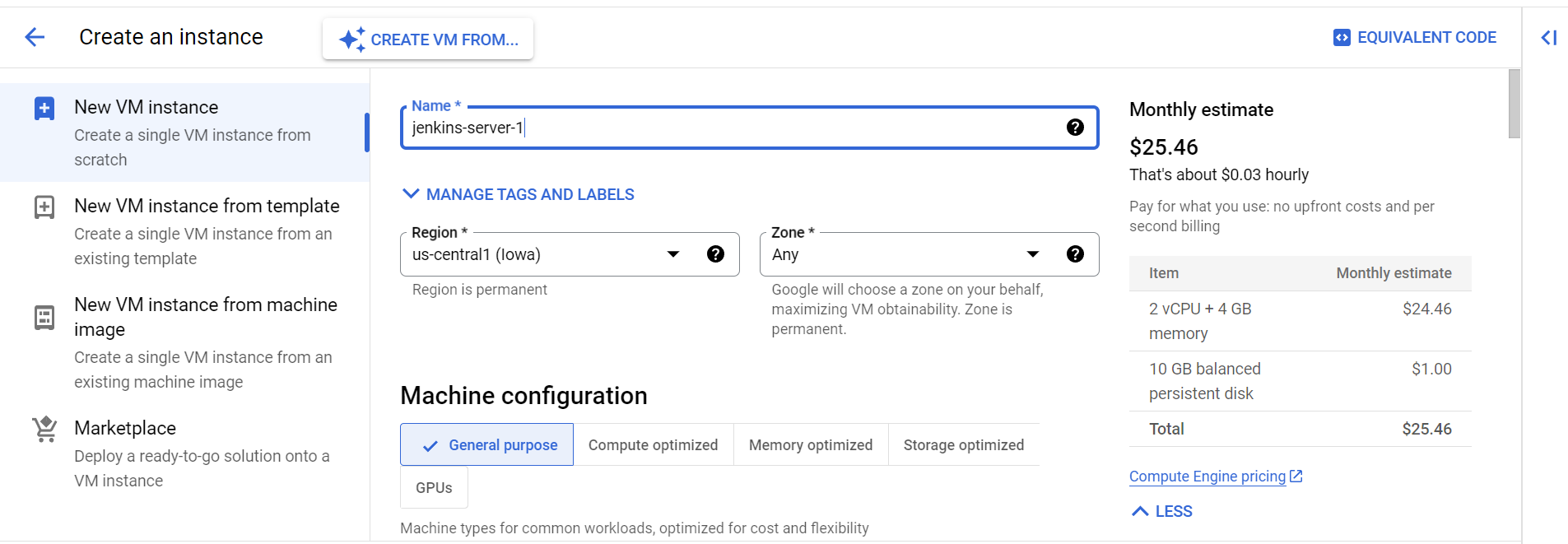

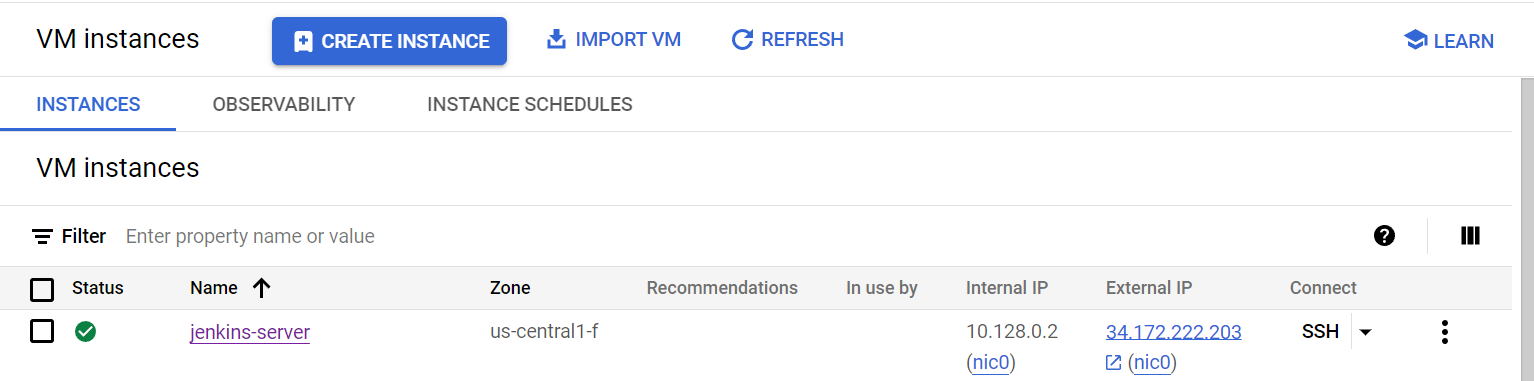

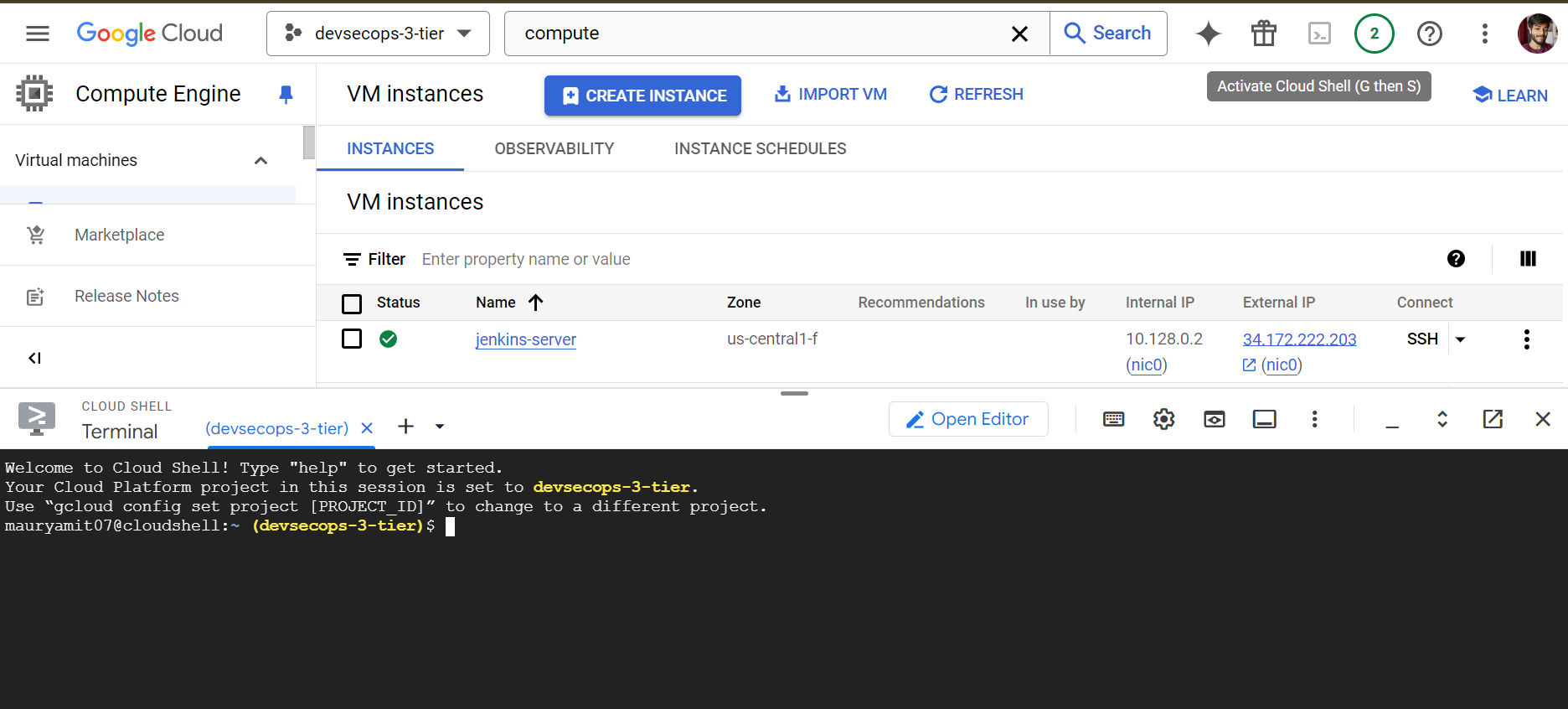

1) Navigate to Compute Engine and click on Create Instance.

2) Now, name the instance “jenkins-server” and choose the machine configuration and region.

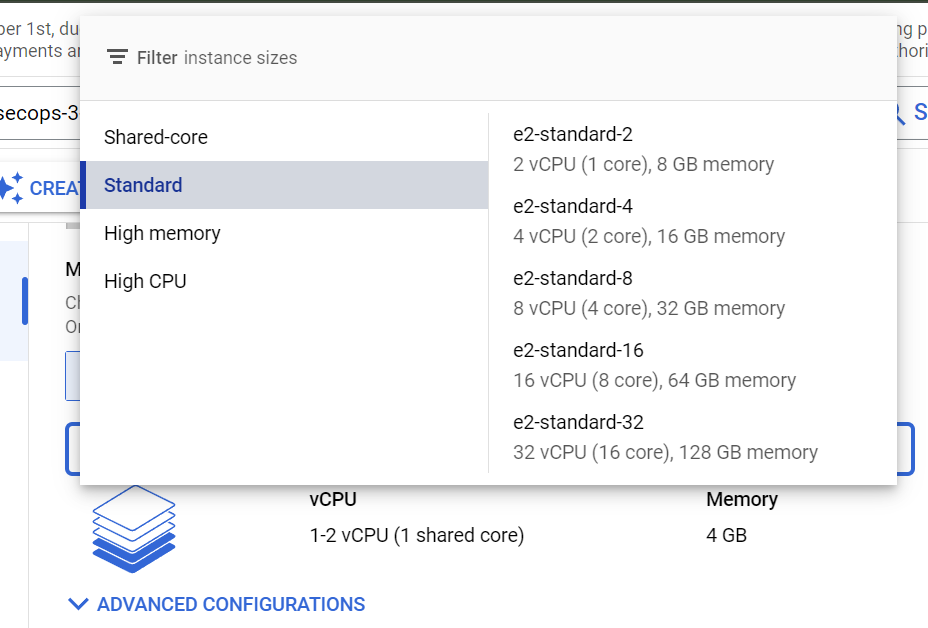

3) Scroll down and select the Standard machine type (e2-standard-2), which has 2 vCPUs and 8 GB of memory.

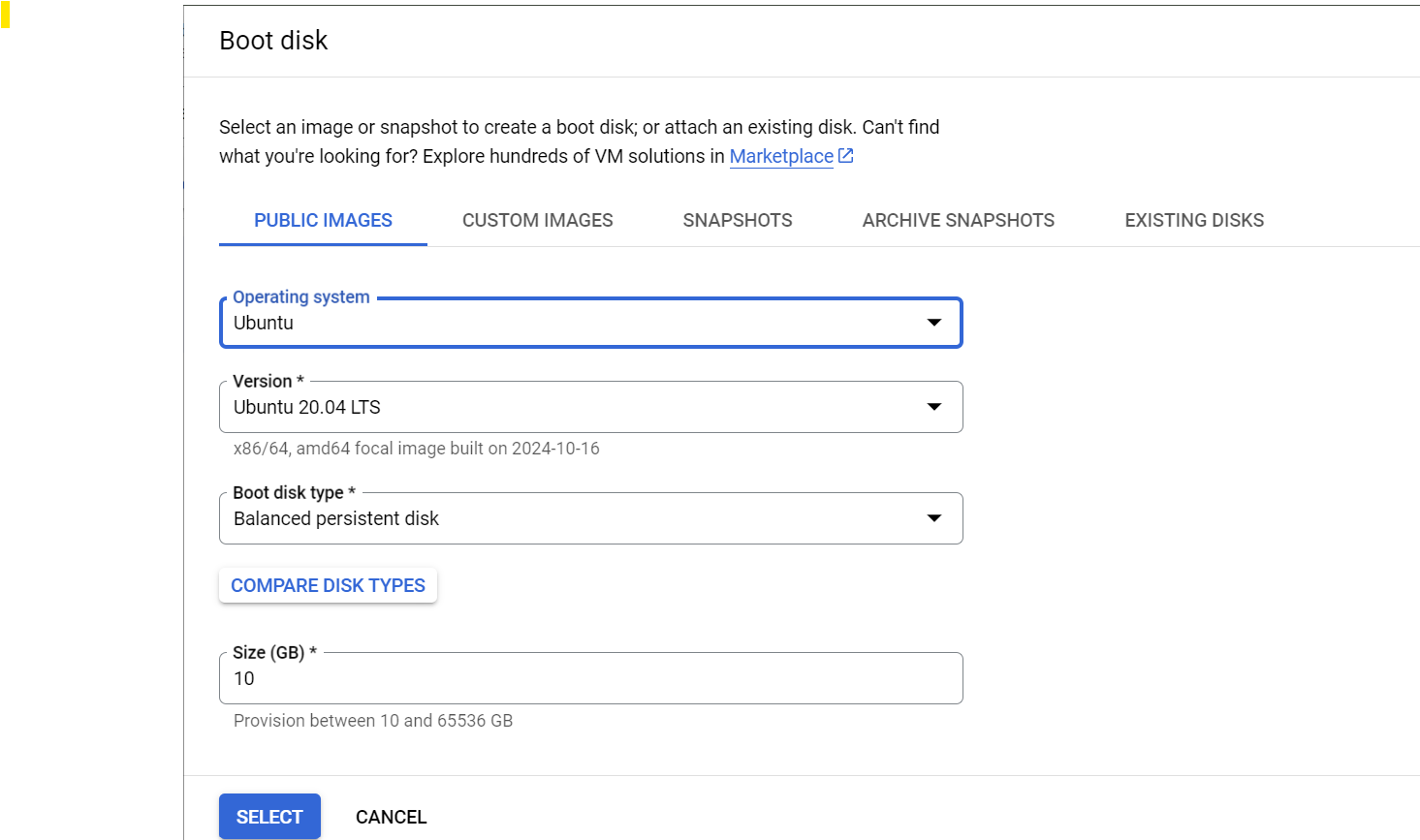

4) After this, scroll down and select the boot disk to choose the operating system and configure the storage. Change to Debian or your preferred Linux distribution.

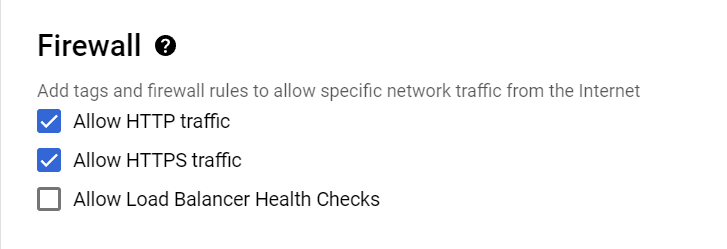

5) Now go to Firewall and check both boxes to allow HTTP and HTTPS traffic to the instance.

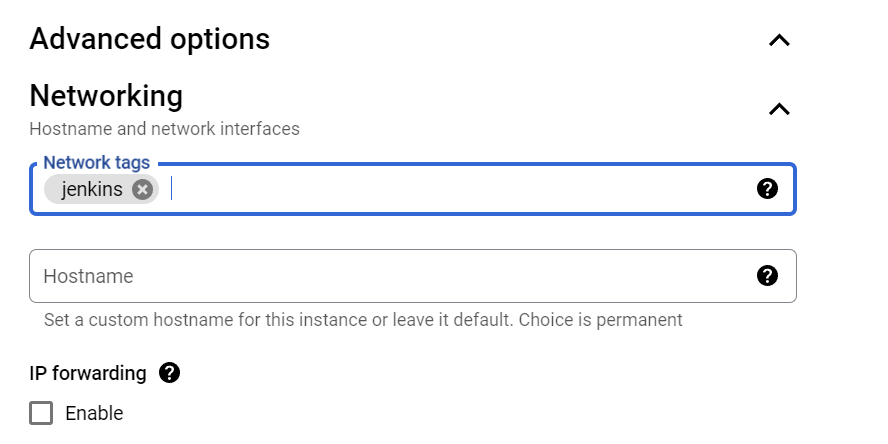

6) Finally, navigate to the Advanced section and open Networking to assign the network tag “jenkins”. When we create the firewall rule later, it will target this tag, so the rule applies only to instances that carry it. Checking the HTTP and HTTPS boxes in the previous step adds only the “http-server” and “https-server” tags, which allow traffic on ports 80 and 443.

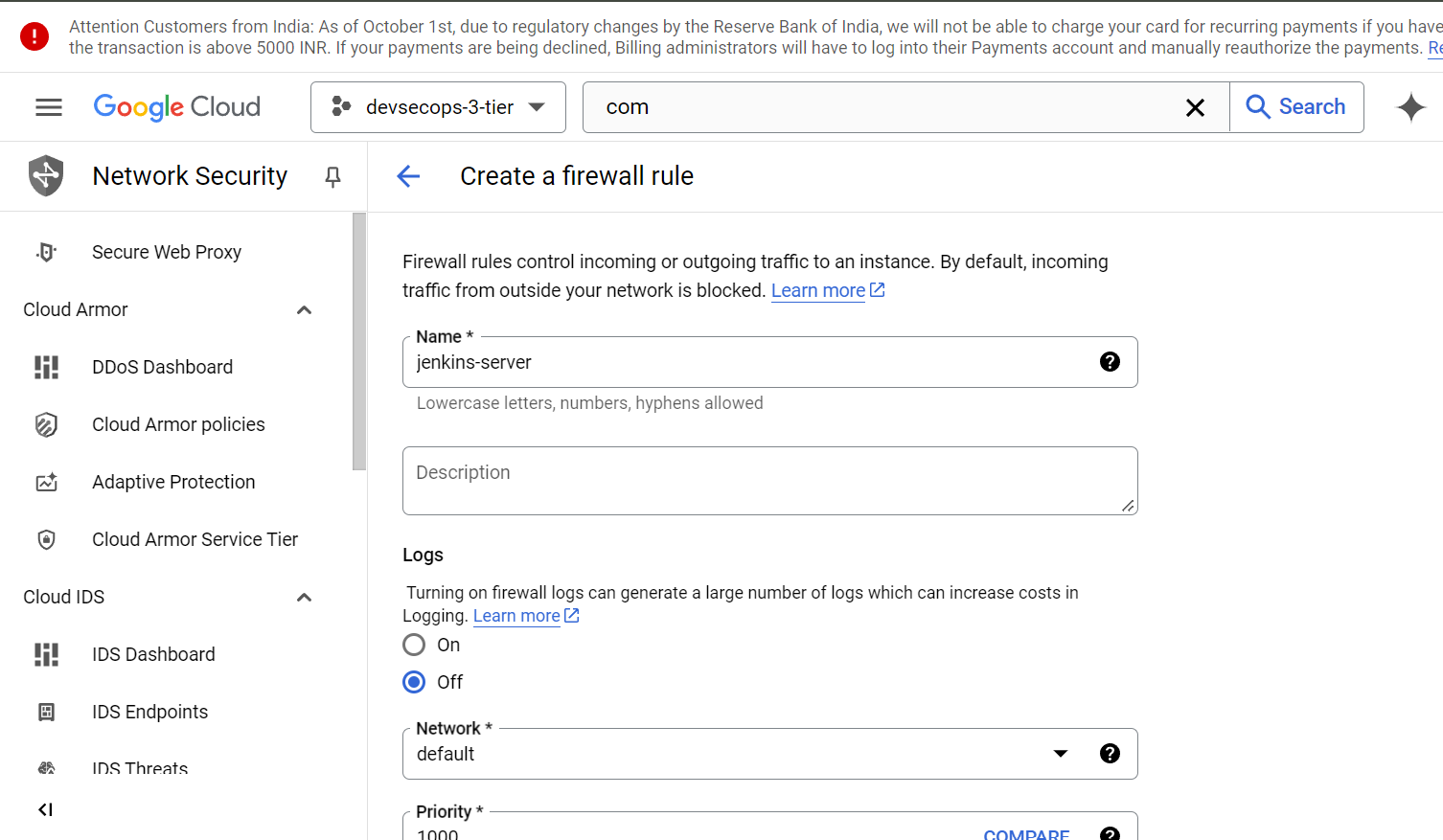

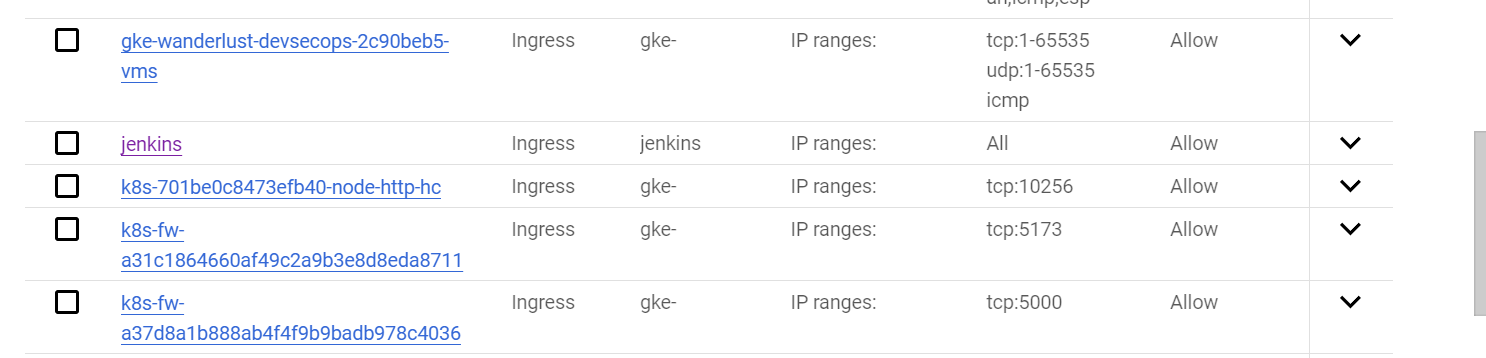

7) Navigate to VPC Network and click Firewall to create a firewall rule that allows port 8080, which Jenkins listens on. Scroll down to Network, which is “default”, set the Target tags to “jenkins” (the tag we attached to the instance), set the source IPv4 range (I used “0.0.0.0/0”, which allows any address), set the port to 8080, and create the rule.
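The firewall rule from this step can also be sketched with the gcloud CLI. The rule name allow-jenkins-8080 and the DRY_RUN, SOURCE_RANGE, run, and create_jenkins_rule names are assumptions made for illustration; the network, tag, and port come from the step above. The script prints the command by default.

```shell
#!/bin/sh
# Sketch: allow TCP 8080 to instances tagged "jenkins" on the default network.
# RULE_NAME, SOURCE_RANGE, DRY_RUN and the function names are illustrative.
DRY_RUN="${DRY_RUN:-1}"                         # 1 = print only, 0 = run
RULE_NAME="${RULE_NAME:-allow-jenkins-8080}"    # hypothetical rule name
SOURCE_RANGE="${SOURCE_RANGE:-0.0.0.0/0}"       # same range as in the article

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$@"; else "$@"; fi
}

create_jenkins_rule() {
  run gcloud compute firewall-rules create "$RULE_NAME" \
    --network default \
    --direction INGRESS \
    --allow tcp:8080 \
    --target-tags jenkins \
    --source-ranges "$SOURCE_RANGE"
}

create_jenkins_rule
```

Since 0.0.0.0/0 exposes Jenkins to the whole internet, it may be worth setting SOURCE_RANGE to your own address instead, for example SOURCE_RANGE=203.0.113.10/32 (a placeholder address).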

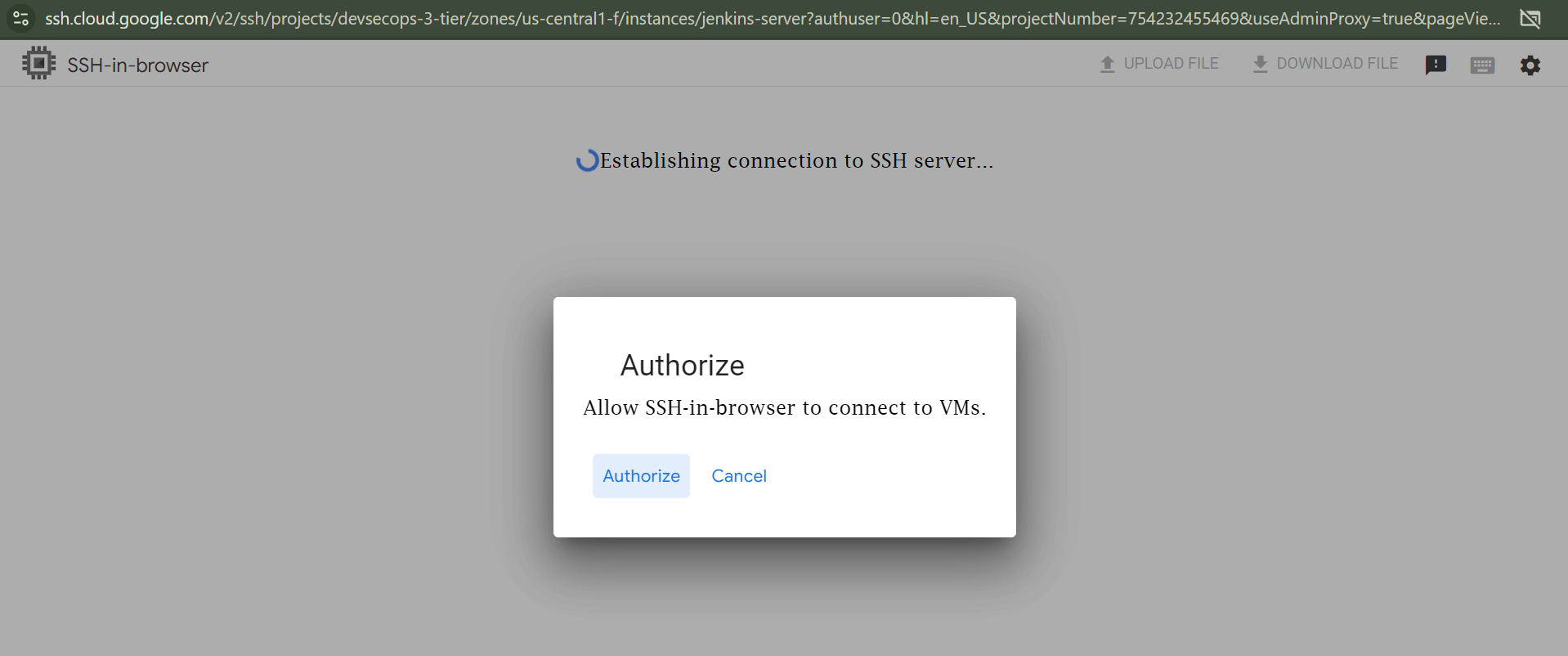

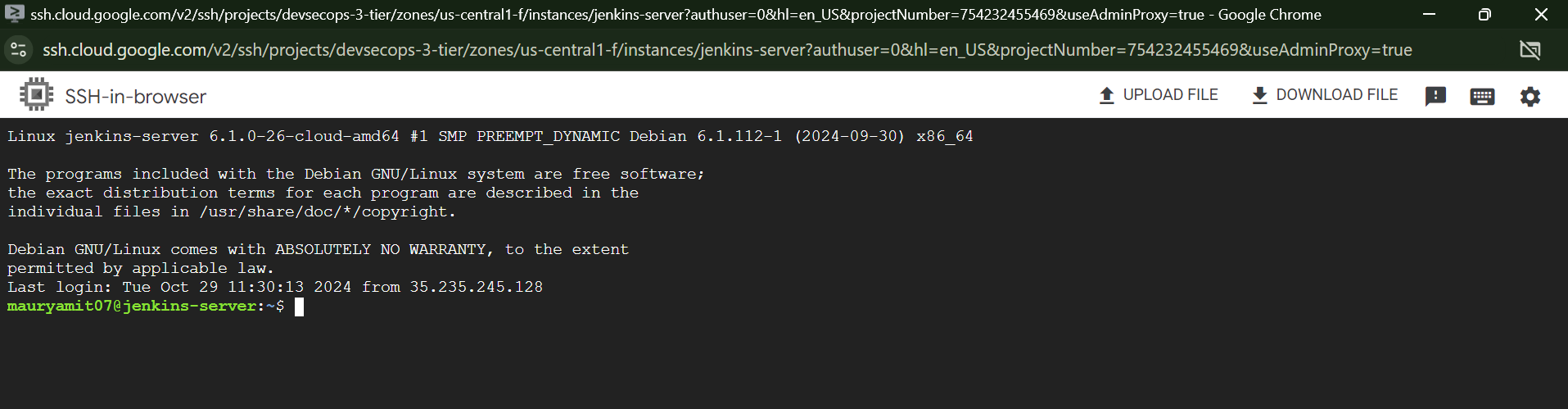

8) Now navigate to Compute Engine and SSH into the instance (jenkins-server): click SSH, which opens a new window, and then authorize the connection.

9) After connecting over SSH, execute the following commands to install Jenkins.

sudo apt-get update

sudo apt-get install -y default-jre   # Installs a Java runtime (JRE), which Jenkins requires

sudo wget -O /usr/share/keyrings/jenkins-keyring.asc https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key

echo "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/" | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt-get update

sudo apt-get install jenkins

Now enable the Jenkins service so that it starts automatically when the instance boots, and then start it.

sudo systemctl enable jenkins

sudo systemctl start jenkins

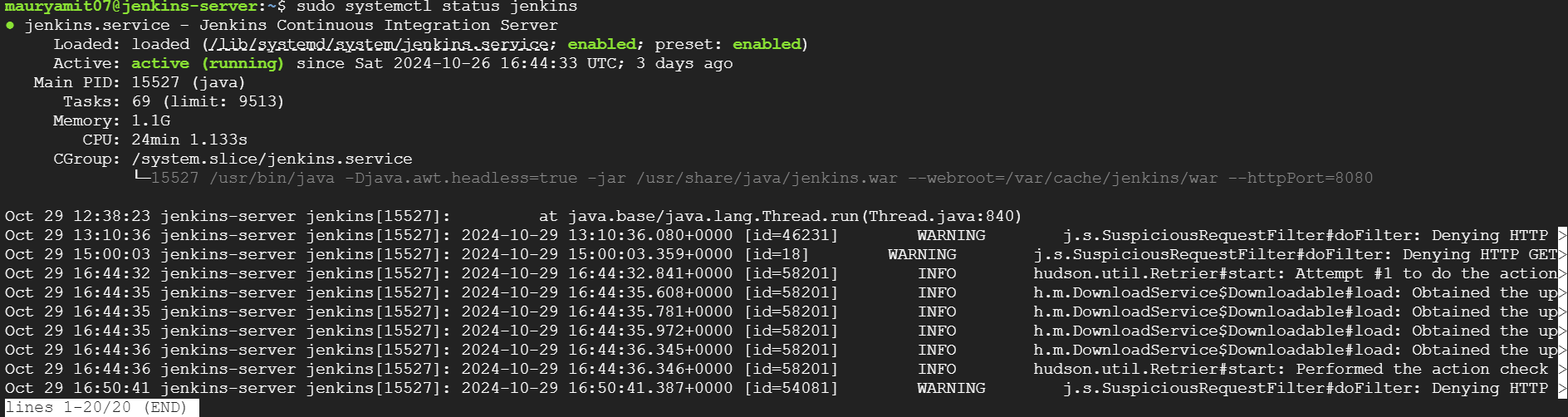

To check the status of Jenkins:

sudo systemctl status jenkins

After this, read the initial admin password from /var/lib/jenkins/secrets/initialAdminPassword (the file is readable only by root and the jenkins user, hence sudo). Then set up your username and password.

sudo cat /var/lib/jenkins/secrets/initialAdminPassword

CI/CD Pipeline (Jenkins Parametrized Pipeline)

Before creating the Jenkins job, install the required plugins by navigating to Manage Jenkins > Plugins > Available Plugins.

1) Kubernetes CLI Plugin

2) Pipeline Plugin

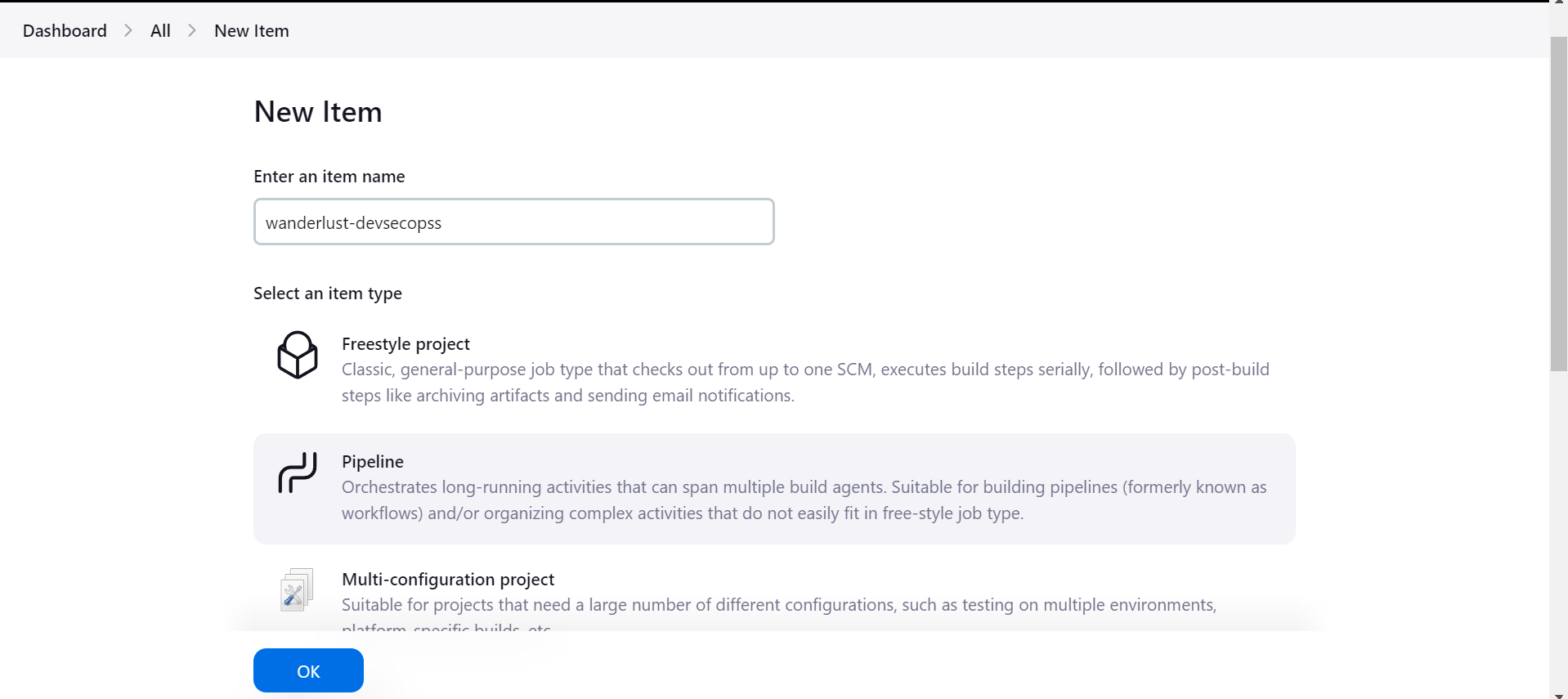

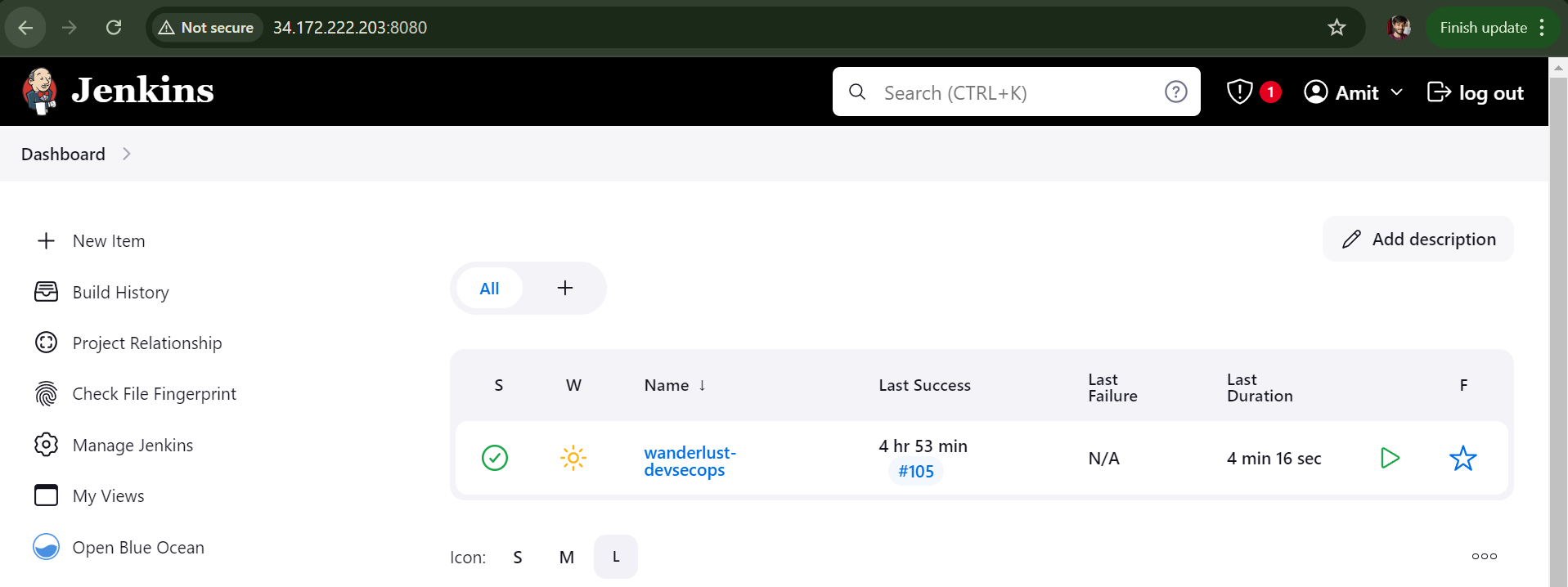

1) After the setup is done, the Jenkins home page will look like this. I have already created my first job, “wanderlust devsecops”. You can create a new job by going to New Item and selecting Pipeline.

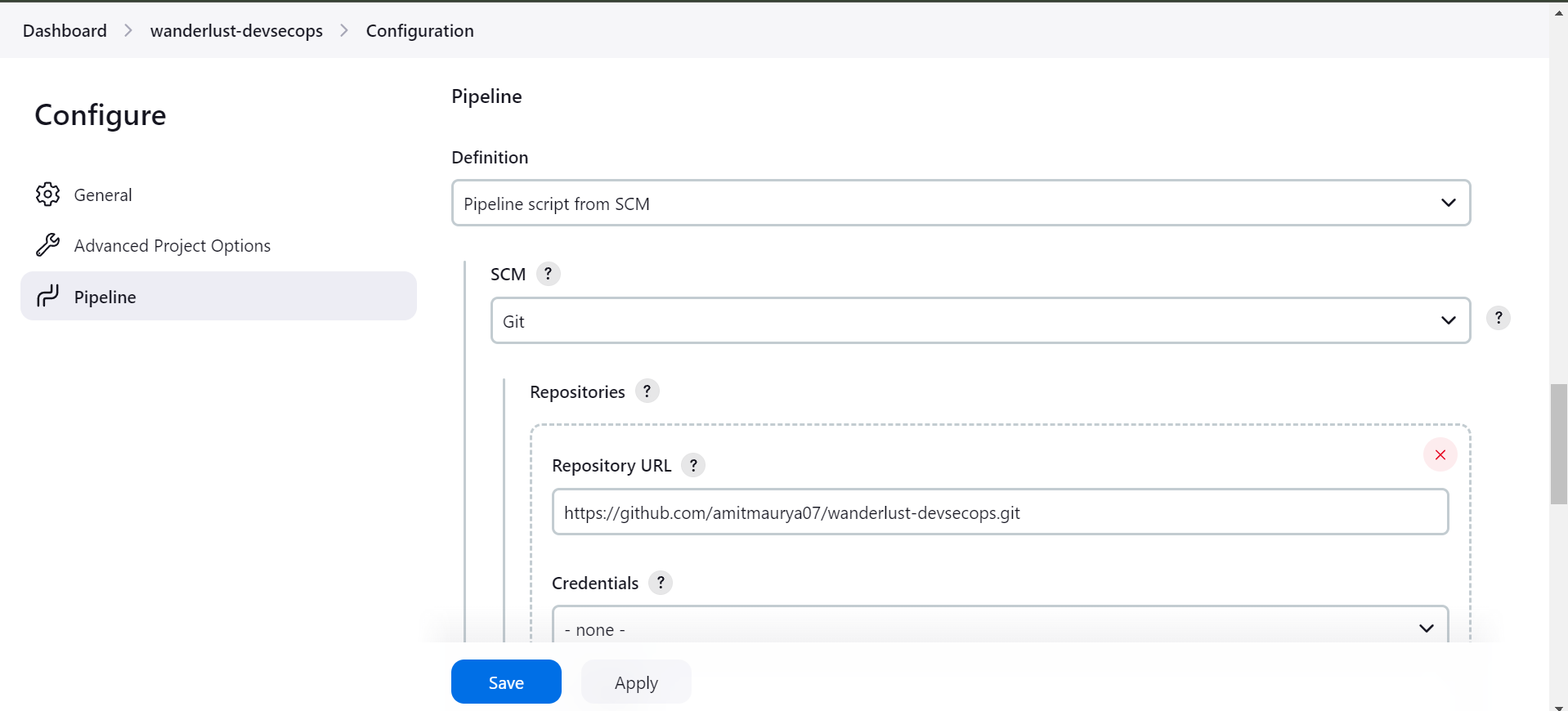

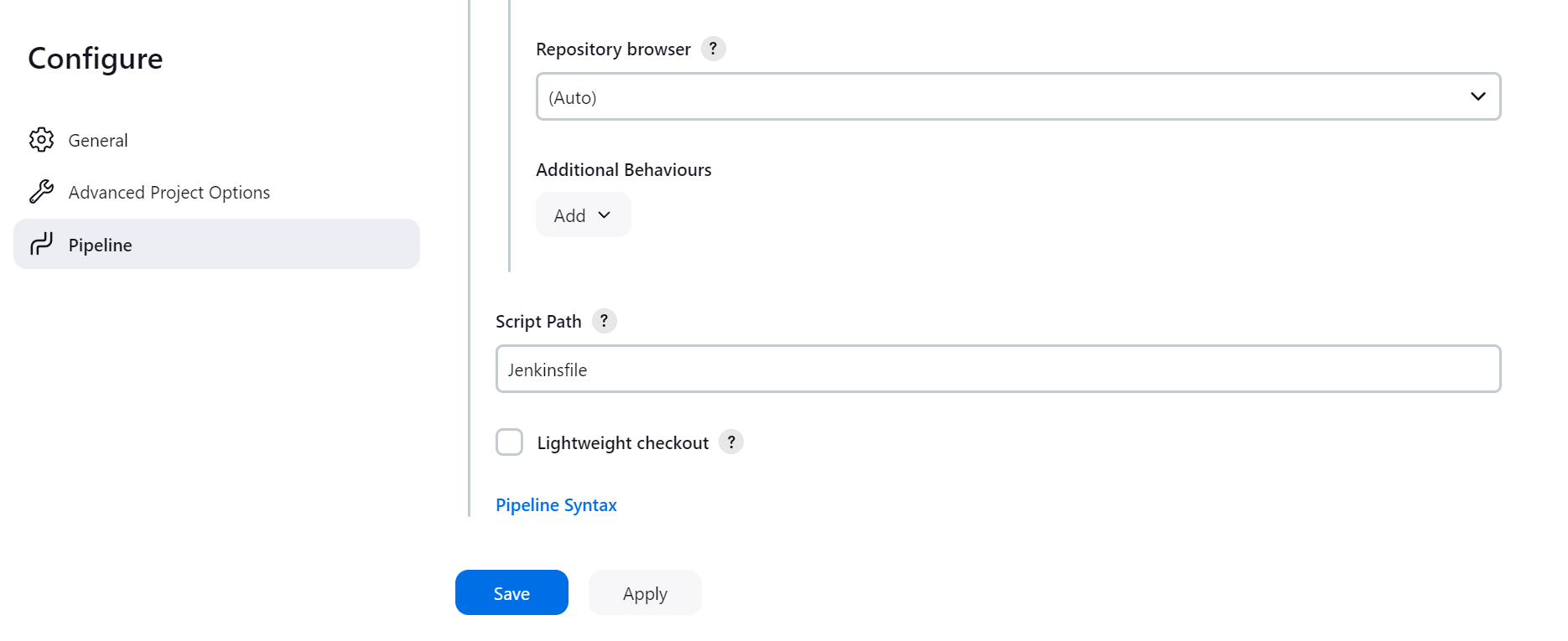

2) Next, configure the Jenkins job and add the GitHub repository (https://github.com/amitmaurya07/wanderlust-devsecops.git)

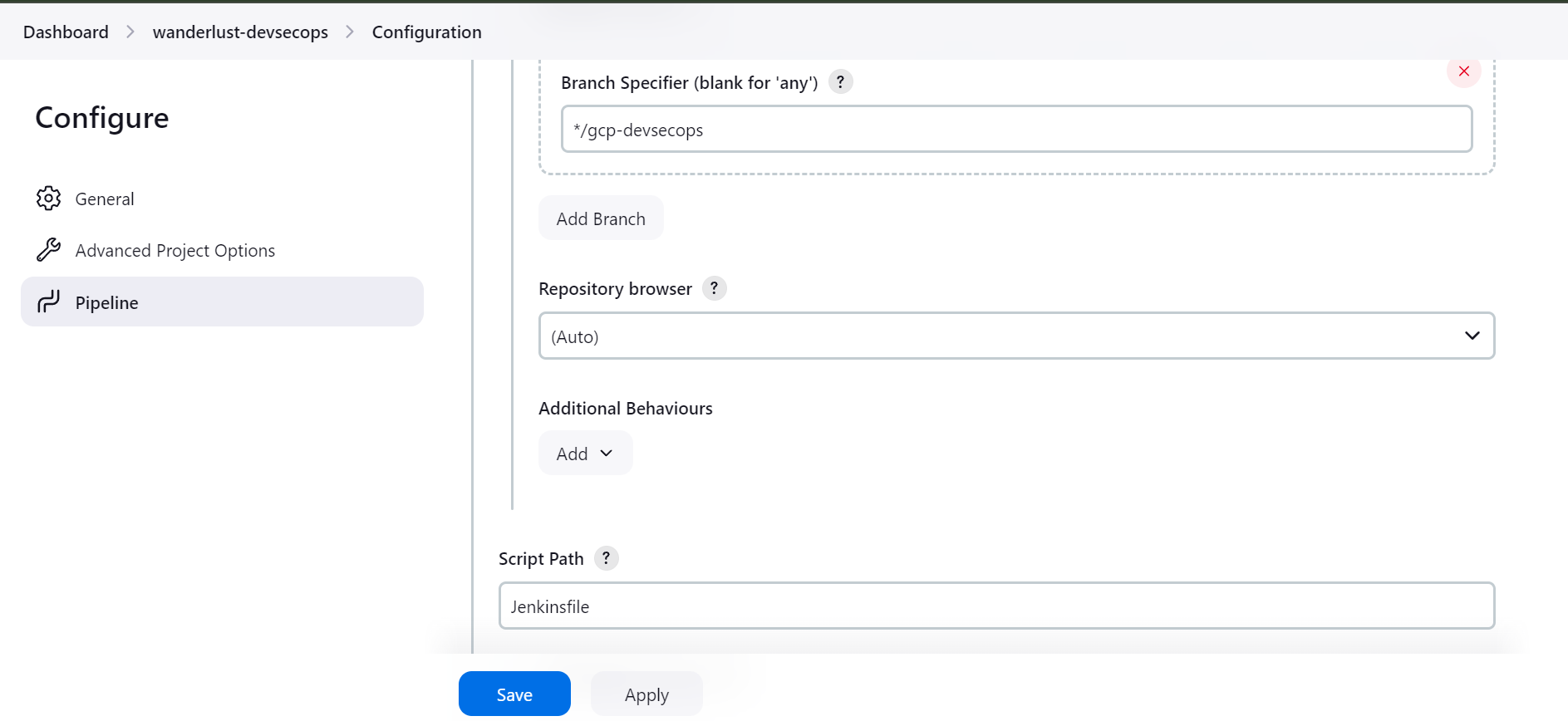

Set the branch specifier to the branch that contains the Jenkinsfile (gcp-devsecops in this project).

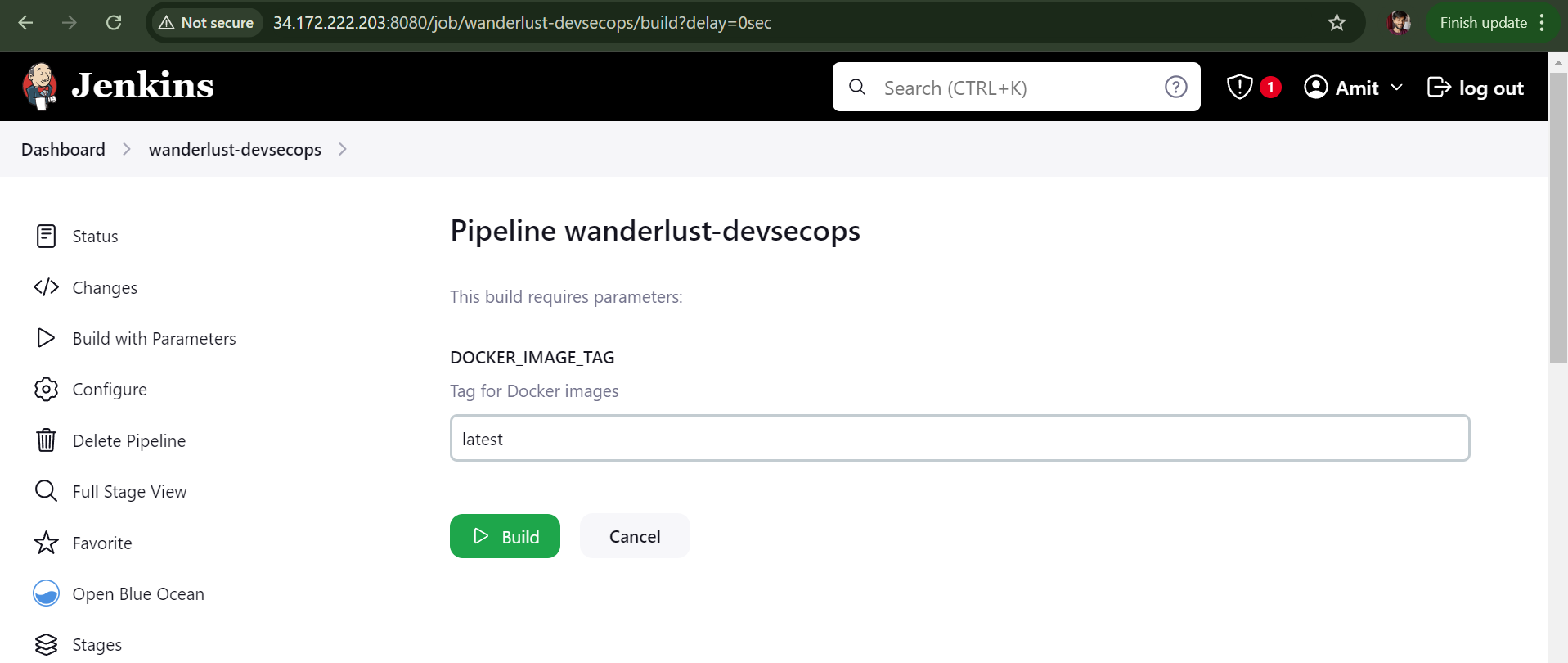

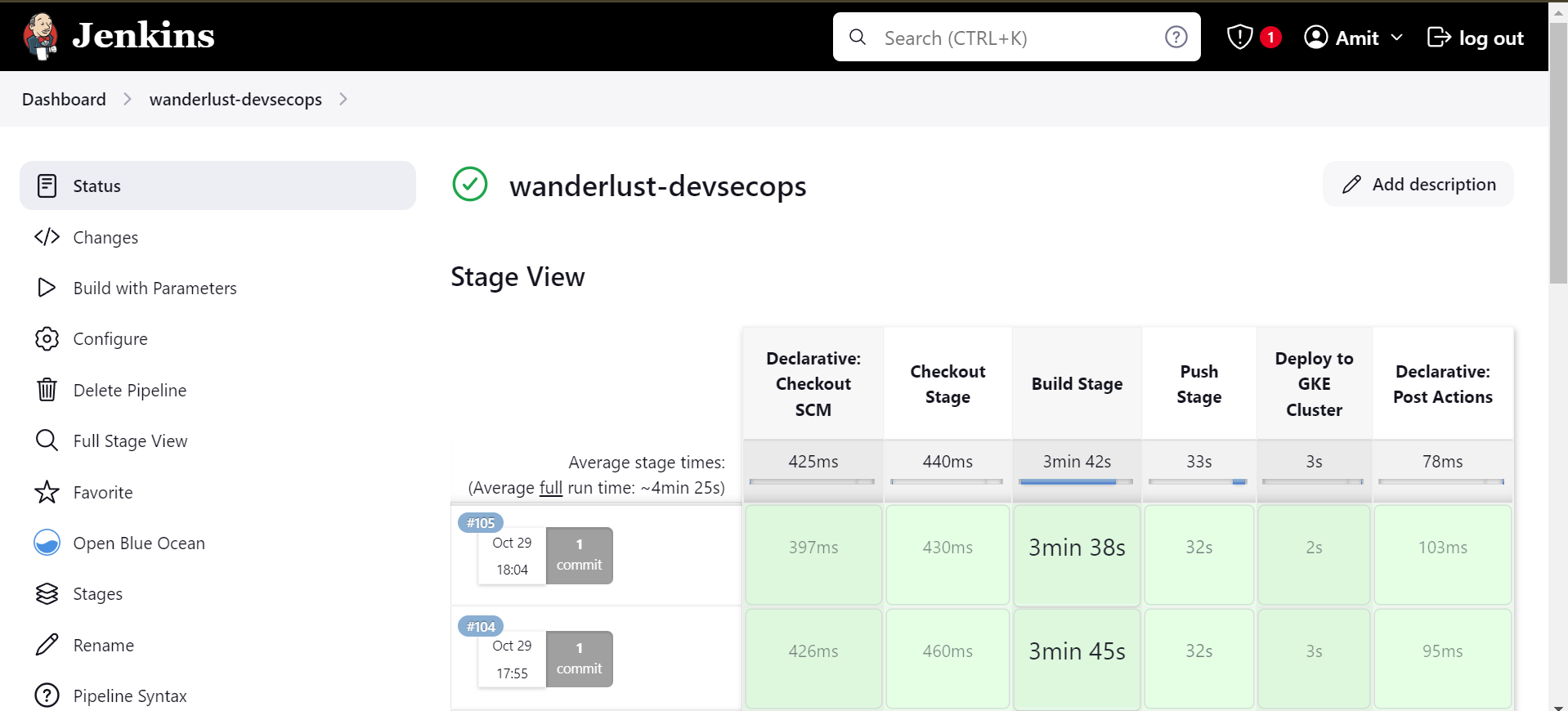

Now, let's create the Jenkinsfile, in which we will define four stages and add a parameter for the image build tag.

Jenkinsfile - https://github.com/amitmaurya07/wanderlust-devsecops/blob/gcp-devsecops/Jenkinsfile

1) Stage 1 - Checkout Source Code

2) Stage 2 - Build Docker Image

3) Stage 3 - Push Docker Image

4) Stage 4 - Deploy to GKE (Google Kubernetes Engine)

Here is the Jenkinsfile, which should be added to the GitHub repository so that Jenkins can pull it and run the stages.

    pipeline {
        agent any

        parameters {
            // Image tag supplied by the user when the build starts
            string(name: 'DOCKER_IMAGE_TAG', defaultValue: 'latest', description: 'Tag for Docker images')
        }

        stages {
            // Stage 1: pull the source code from GitHub
            stage("Checkout Stage") {
                steps {
                    script {
                        git branch: 'gcp-devsecops', url: 'https://github.com/amitmaurya07/wanderlust-devsecops.git'
                    }
                }
            }

            // Stage 2: build the frontend and backend images
            stage("Build Stage") {
                steps {
                    withCredentials([usernamePassword(credentialsId: 'dockerhub', passwordVariable: 'DOCKER_PASSWORD', usernameVariable: 'DOCKER_USERNAME')]) {
                        script {
                            def frontendImage = "${DOCKER_USERNAME}/wanderlust_frontend:${params.DOCKER_IMAGE_TAG}"
                            sh "docker build -f ./frontend/Dockerfile -t ${frontendImage} ."
                        }
                        script {
                            def backendImage = "${DOCKER_USERNAME}/wanderlust_backend:${params.DOCKER_IMAGE_TAG}"
                            sh "docker build -f ./backend/Dockerfile -t ${backendImage} ."
                        }
                    }
                }
            }

            // Stage 3: log in to DockerHub and push both images
            stage("Push Stage") {
                steps {
                    withCredentials([usernamePassword(credentialsId: 'dockerhub', passwordVariable: 'DOCKER_PASSWORD', usernameVariable: 'DOCKER_USERNAME')]) {
                        script {
                            sh "echo \$DOCKER_PASSWORD | docker login -u \$DOCKER_USERNAME --password-stdin"
                            sh "docker push ${DOCKER_USERNAME}/wanderlust_frontend:${params.DOCKER_IMAGE_TAG}"
                            sh "docker push ${DOCKER_USERNAME}/wanderlust_backend:${params.DOCKER_IMAGE_TAG}"
                        }
                    }
                }
            }

            // Stage 4: apply the Kubernetes manifests to the GKE cluster
            stage("Deploy to GKE Cluster") {
                steps {
                    withKubeConfig(caCertificate: '', clusterName: 'gke_devsecops-3-tier_us-central1_wanderlust-devsecops', contextName: '', credentialsId: 'k8s-secret', namespace: 'devsecops', restrictKubeConfigAccess: false, serverUrl: 'https://34.56.143.43') {
                        script {
                            sh "kubectl apply -f ./kubernetes -n devsecops"
                            sh "kubectl get pods -n devsecops"
                            sh "kubectl get services -n devsecops"
                        }
                    }
                }
            }
        }

        post {
            success {
                echo "======== Pipeline executed successfully ========"
            }
            failure {
                echo "======== Pipeline execution failed ========"
            }
            always {
                echo "======== Cleaning up resources ========"
            }
        }
    }

Let's break down the Jenkinsfile to understand how to write one and how to add parameters.

1) Checkout Stage

    pipeline {
        agent any

        parameters {
            string(name: 'DOCKER_IMAGE_TAG', defaultValue: 'latest', description: 'Tag for Docker images')
        }

        stages {
            stage("Checkout Stage") {
                steps {
                    script {
                        git branch: 'gcp-devsecops', url: 'https://github.com/amitmaurya07/wanderlust-devsecops.git'
                    }
                }
            }

The pipeline begins with the pipeline block and agent “any”. We could instead name a specific agent so that the job runs on another server and does not load, or bring down, the Jenkins master (controller). For the time being, we are running the job on the Jenkins master node.

Next, we add parameters to the pipeline to receive input from the user, so that the build proceeds with the specified value. We use a “string” parameter that takes the build tag for the image.

Next, we specify the stages. The first is the “Checkout Stage”, whose script block pulls the contents of the GitHub repository.

2) Build Stage

    stage("Build Stage") {
        steps {
            withCredentials([usernamePassword(credentialsId: 'dockerhub', passwordVariable: 'DOCKER_PASSWORD', usernameVariable: 'DOCKER_USERNAME')]) {
                script {
                    def frontendImage = "${DOCKER_USERNAME}/wanderlust_frontend:${params.DOCKER_IMAGE_TAG}"
                    sh "docker build -f ./frontend/Dockerfile -t ${frontendImage} ."
                }
                script {
                    def backendImage = "${DOCKER_USERNAME}/wanderlust_backend:${params.DOCKER_IMAGE_TAG}"
                    sh "docker build -f ./backend/Dockerfile -t ${backendImage} ."
                }
            }
        }
    }

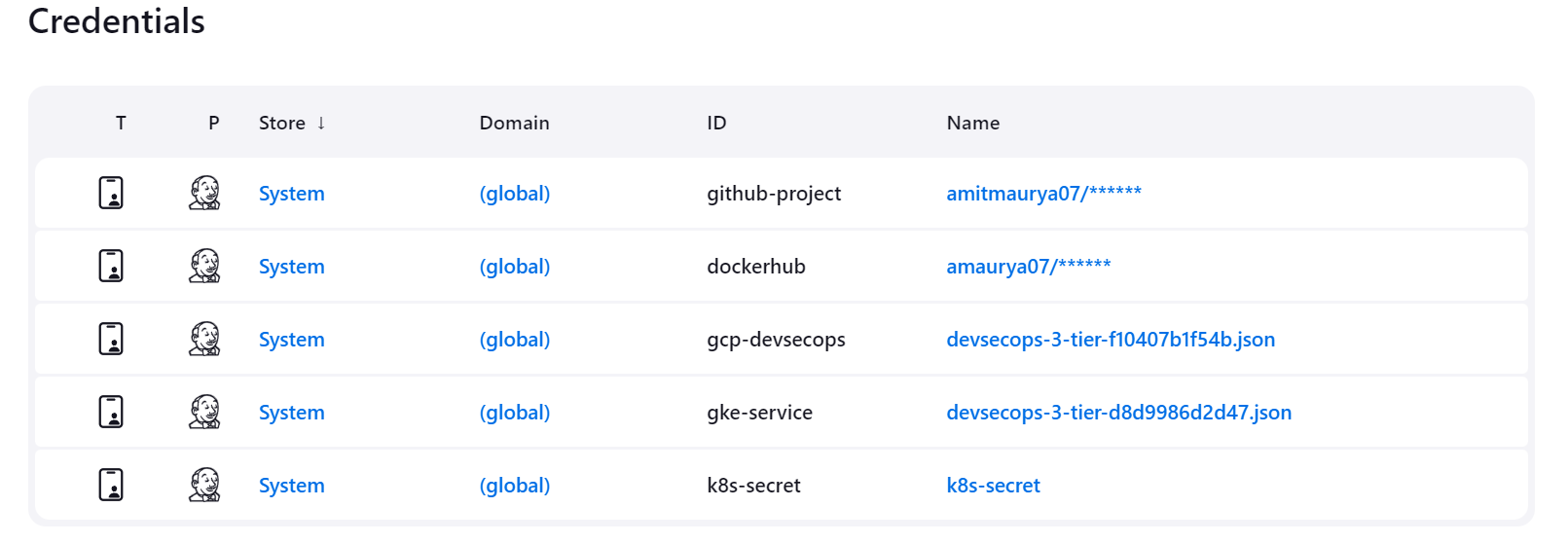

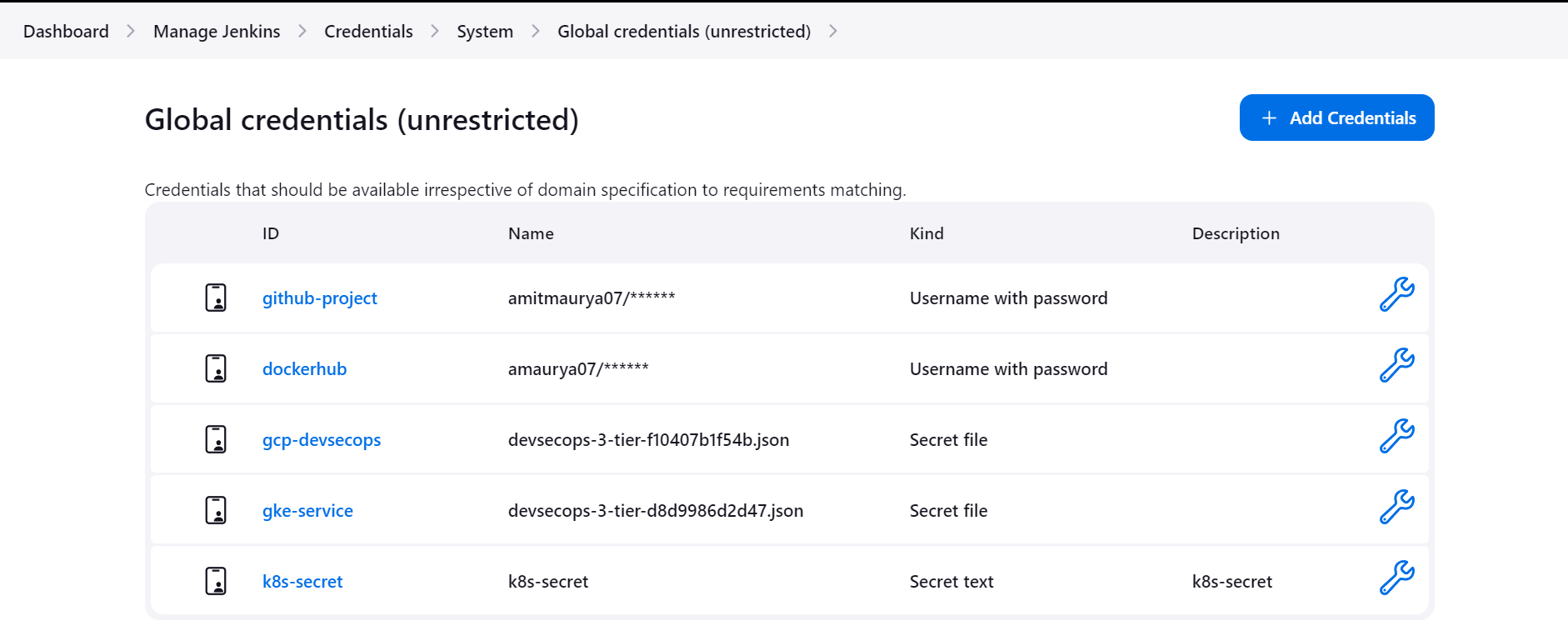

We have now moved to the next stage, the Build Stage, which builds the Docker images from the frontend and backend Dockerfiles. First, add your DockerHub credentials in Jenkins under Manage Jenkins > Credentials.

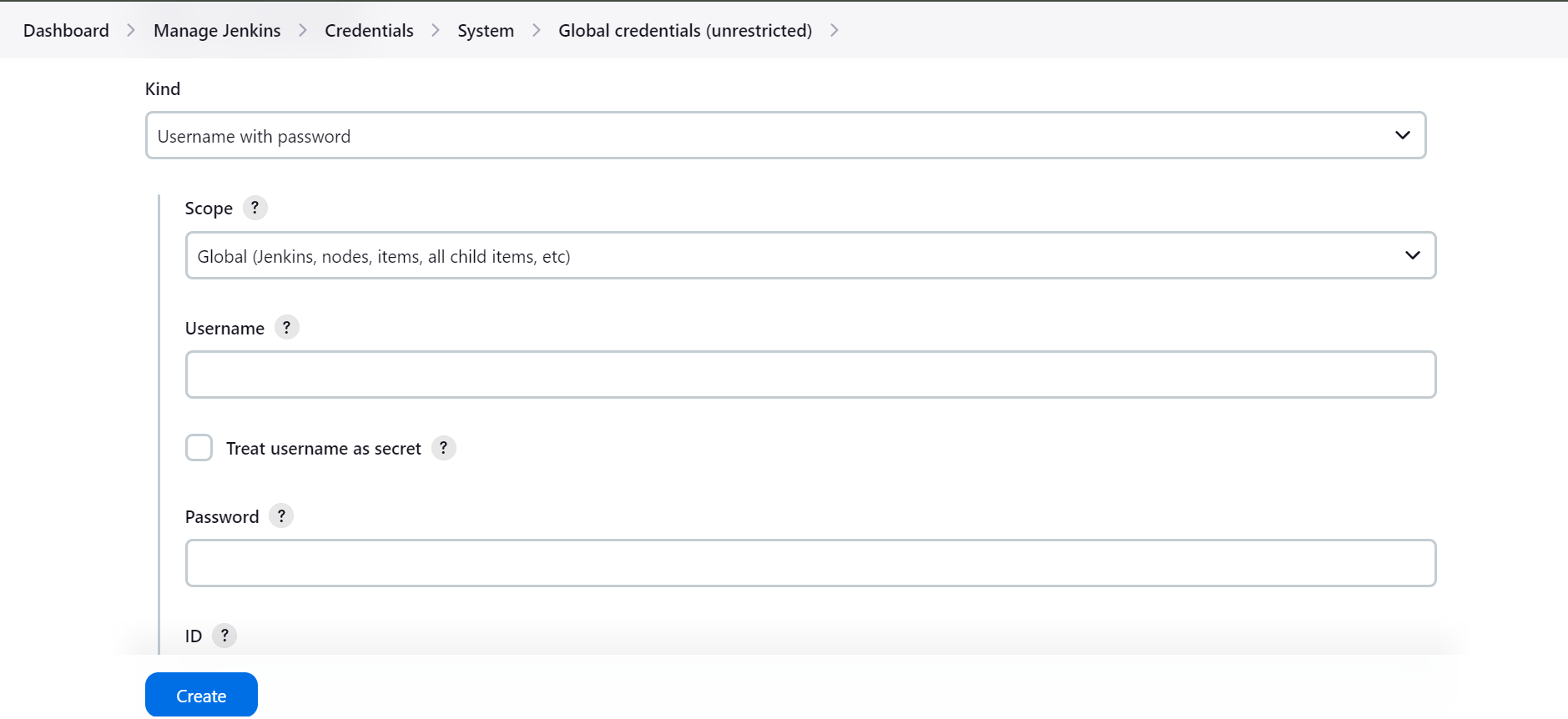

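As you can see, I have already created some credentials to use in the Jenkins pipeline. To create credentials, go to System, then Global Credentials, and add credentials of the Username and Password kind. Give them the ID “dockerhub”, which is the credentialsId the Jenkinsfile refers to.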

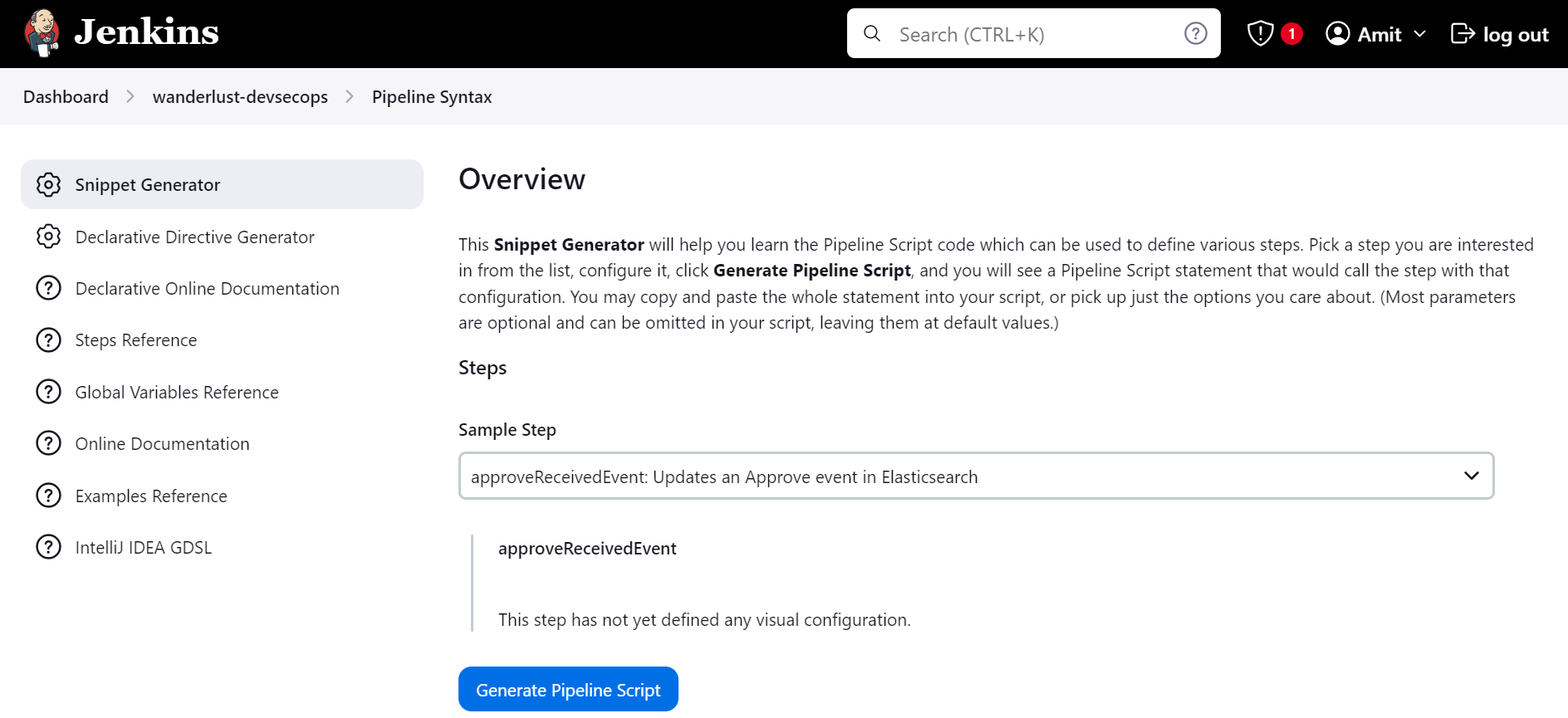

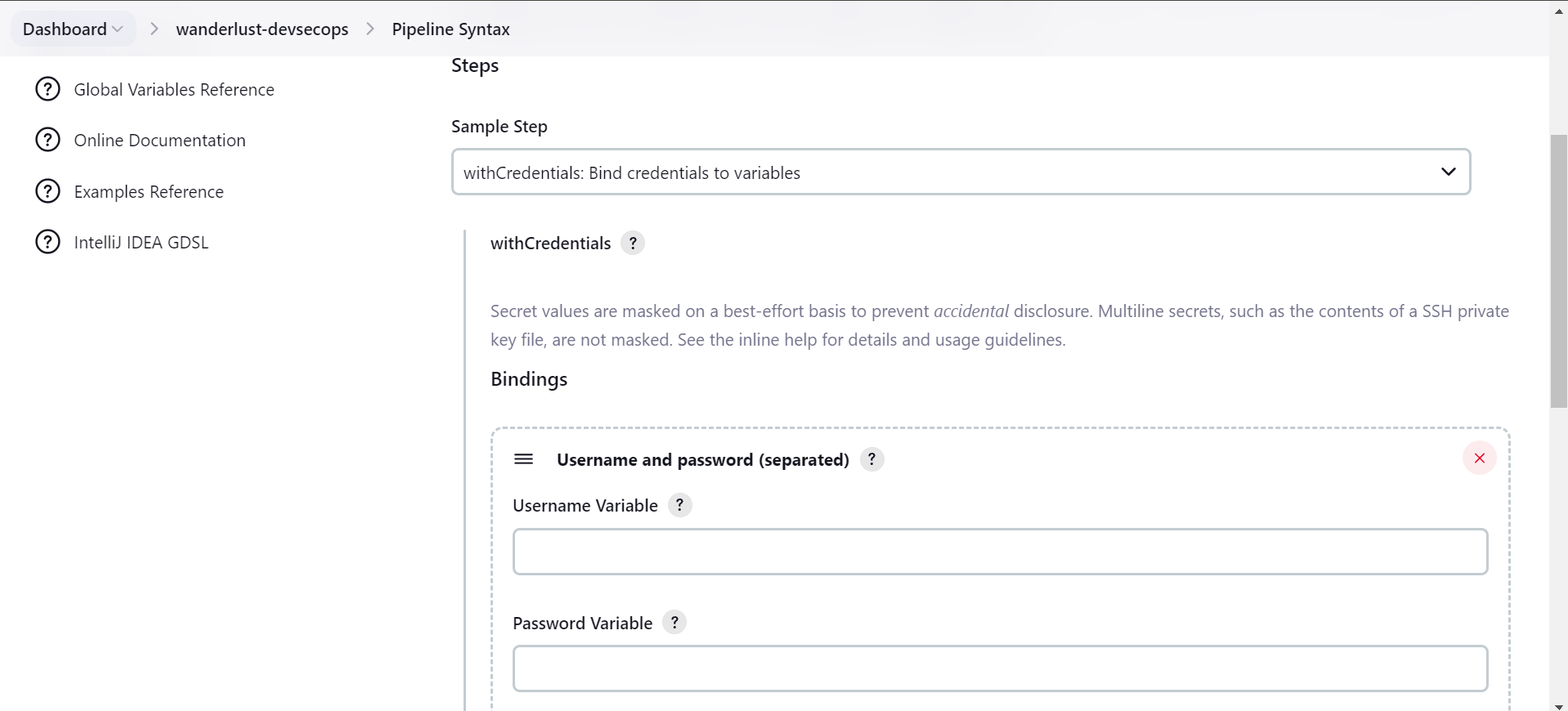

After adding the DockerHub credentials, return to writing the Jenkinsfile. To generate the withCredentials step, open the Pipeline Syntax page: go to the job's Configure page, scroll down, and you will find the Pipeline Syntax link.

Search for “withCredentials”, add a username and password binding with its two variable names, and select the dockerhub credentials that you created.

Inside the “withCredentials” block, the script blocks are written in Groovy, the language Jenkins pipelines use. There I define the variables frontendImage and backendImage, which hold the full image name together with its tag.

params.DOCKER_IMAGE_TAG

This expression reads the DOCKER_IMAGE_TAG parameter that the user supplies when starting the build.
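To make the image naming concrete, here is a small shell sketch of how the build stage assembles an image reference from the DockerHub username, the image name, and the tag parameter. The function names is_valid_tag and image_ref and the username exampleuser are hypothetical. The tag check reflects Docker's tag rules (letters, digits, underscores, dots, and dashes, not starting with a dot or dash, at most 128 characters); the Jenkinsfile itself does not perform this check.

```shell
#!/bin/sh
# Sketch: compose "<user>/<image>:<tag>" the way the Build Stage does.
# is_valid_tag, image_ref and "exampleuser" are illustrative (assumptions).

is_valid_tag() {
  tag="$1"
  [ -n "$tag" ] || return 1              # tag must not be empty
  [ "${#tag}" -le 128 ] || return 1      # at most 128 characters
  case "$tag" in
    [!A-Za-z0-9_]*)    return 1 ;;       # must start with letter, digit or _
    *[!A-Za-z0-9_.-]*) return 1 ;;       # only letters, digits, _ . - allowed
  esac
  return 0
}

image_ref() {
  # $1 = DockerHub username, $2 = image name, $3 = tag
  is_valid_tag "$3" || { echo "invalid tag: $3" >&2; return 1; }
  printf '%s/%s:%s\n' "$1" "$2" "$3"
}

image_ref exampleuser wanderlust_frontend latest
```

With the default parameter value, this prints exampleuser/wanderlust_frontend:latest, which has the same shape as the value stored in frontendImage.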

3) Push Stage

    stage("Push Stage") {
        steps {
            withCredentials([usernamePassword(credentialsId: 'dockerhub', passwordVariable: 'DOCKER_PASSWORD', usernameVariable: 'DOCKER_USERNAME')]) {
                script {
                    sh "echo \$DOCKER_PASSWORD | docker login -u \$DOCKER_USERNAME --password-stdin"
                    sh "docker push ${DOCKER_USERNAME}/wanderlust_frontend:${params.DOCKER_IMAGE_TAG}"
                    sh "docker push ${DOCKER_USERNAME}/wanderlust_backend:${params.DOCKER_IMAGE_TAG}"
                }
            }
        }
    }

Now comes the Push Stage, where we again use the “withCredentials” step to log in to DockerHub and then push both images.
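Outside Jenkins, the same login-and-push sequence can be tried from a shell. This is a sketch under assumptions: login_and_push, run, DRY_RUN, and exampleuser are illustrative names, and the password is expected in the DOCKER_PASSWORD environment variable, as it would be inside withCredentials. It uses printf rather than echo so the password is passed to docker login exactly as stored. By default it only prints what it would do.

```shell
#!/bin/sh
# Sketch: log in to DockerHub via stdin and push both images.
# login_and_push, run, DRY_RUN and "exampleuser" are illustrative names.
DRY_RUN="${DRY_RUN:-1}"   # 1 = print only, 0 = run docker for real

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$@"; else "$@"; fi
}

login_and_push() {
  user="$1"
  tag="$2"
  if [ "$DRY_RUN" = "1" ]; then
    echo "docker login -u $user --password-stdin"
  else
    # The password is read from stdin, so it never appears in the process list.
    printf '%s' "$DOCKER_PASSWORD" | docker login -u "$user" --password-stdin || return 1
  fi
  for image in wanderlust_frontend wanderlust_backend; do
    run docker push "$user/$image:$tag" || return 1
  done
}

login_and_push "${DOCKER_USERNAME:-exampleuser}" "${DOCKER_IMAGE_TAG:-latest}"
```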

4) Deploy to GKE (Google Kubernetes Engine)

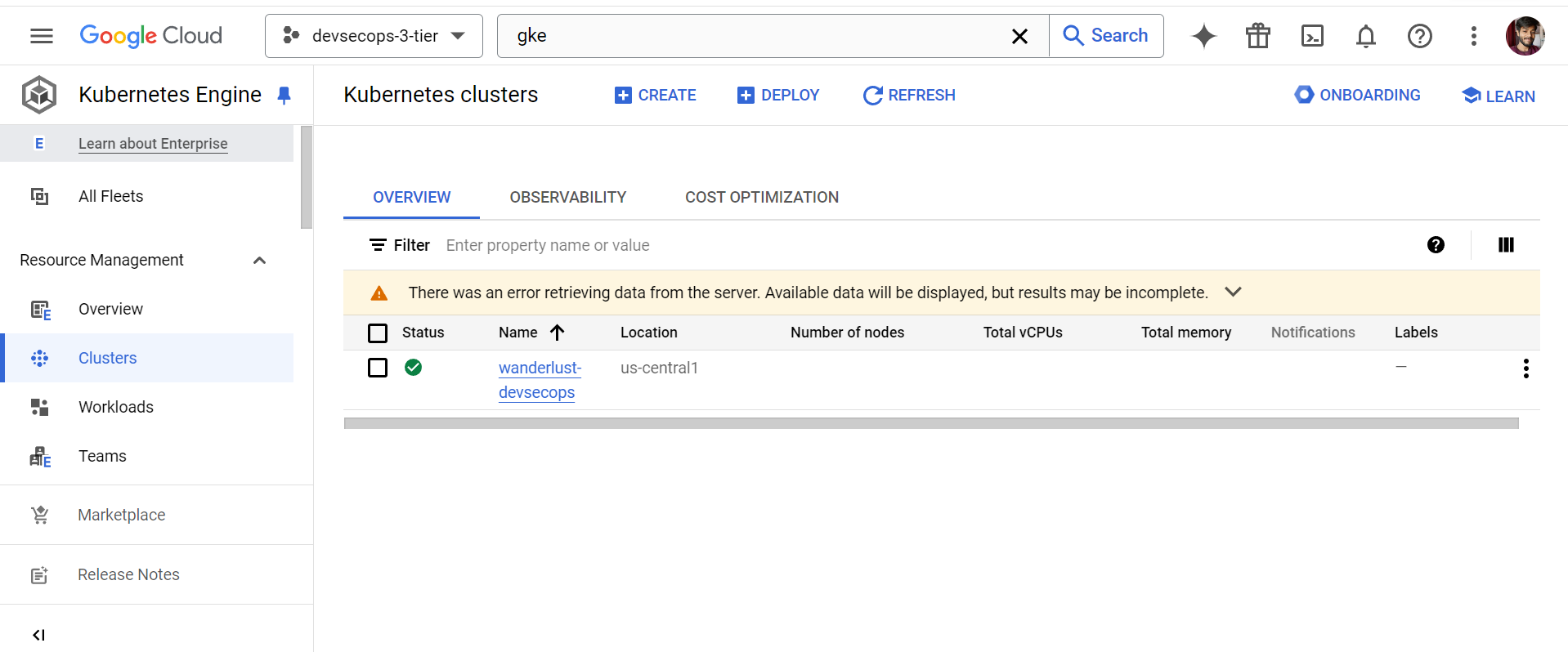

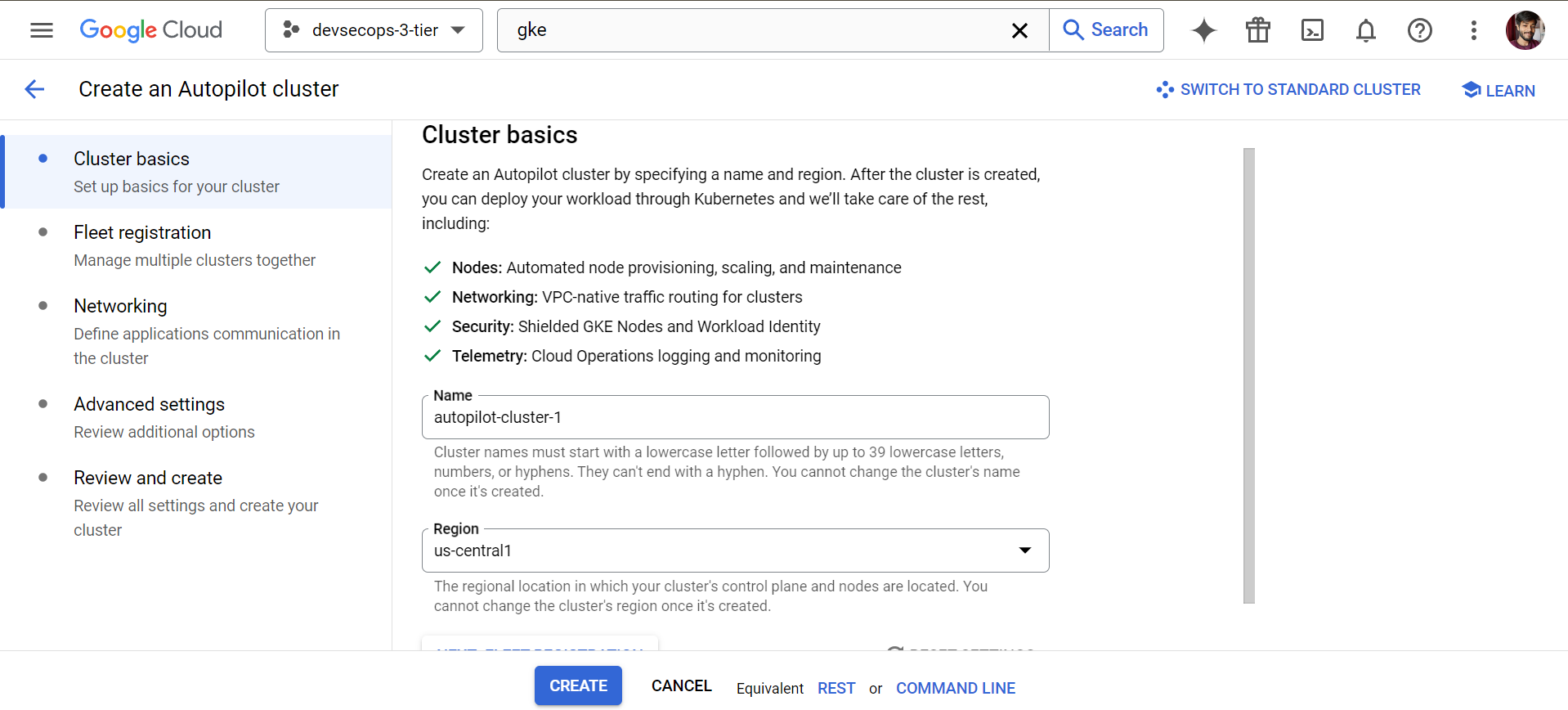

Navigate to the Google Cloud Console and search for GKE (Google Kubernetes Engine). I have already created the cluster named wanderlust-devsecops, which is an Autopilot Mode Cluster. These clusters are fully managed, so there is no need for us to manage the resources and cluster nodes manually.

After the cluster is created, extract the kubeconfig to access it. Before that, we need to install the gcloud CLI to authenticate with Google Cloud. I am using a Windows system, so I execute these commands in PowerShell.

(New-Object Net.WebClient).DownloadFile("https://dl.google.com/dl/cloudsdk/channels/rapid/GoogleCloudSDKInstaller.exe", "$env:Temp\GoogleCloudSDKInstaller.exe")

& $env:Temp\GoogleCloudSDKInstaller.exe

This opens the installer. After the installation is done, open a terminal in VS Code or your favorite IDE and run the gcloud auth command to authenticate, and then extract the kubeconfig.

gcloud auth login

After you sign in to GCP in the browser with your Google account, it will show the page “You are authenticated”.

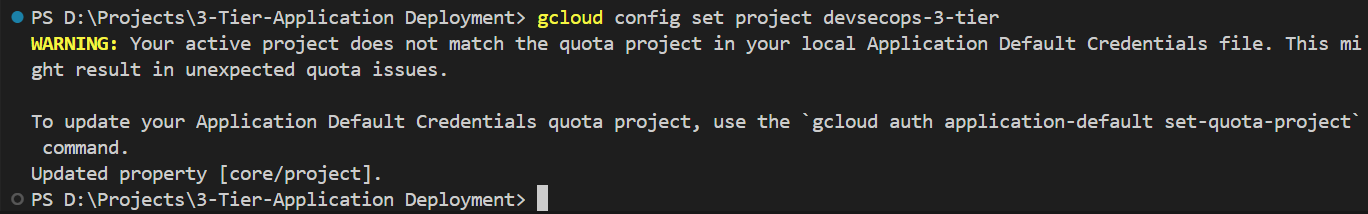

gcloud config set project devsecops-3-tier

This command sets the active project for the gcloud commands that follow.

Now, we have to extract the kubeconfig from the created cluster.

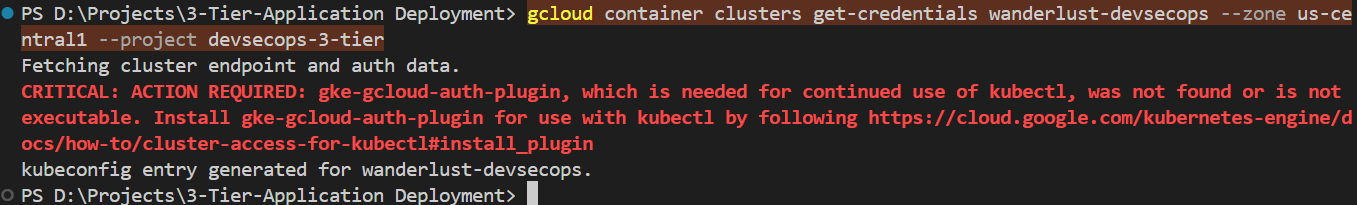

gcloud container clusters get-credentials wanderlust-devsecops --zone us-central1 --project devsecops-3-tier

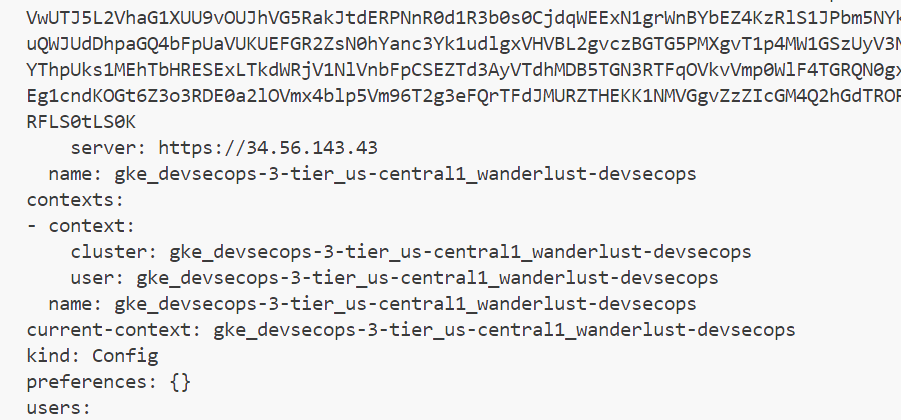

As we can see, the kubeconfig is generated. To use it, kubectl needs its context set to this cluster, which get-credentials does when it writes the kubeconfig entry. This is where Kubernetes knowledge comes in. Alternatively, we can do all of this from Google Cloud Shell in the Google Cloud console and then open its editor.

The authentication commands above are for a local machine. Cloud Shell is already authenticated, so there you only need to run the gcloud container clusters get-credentials command to get the kubeconfig.

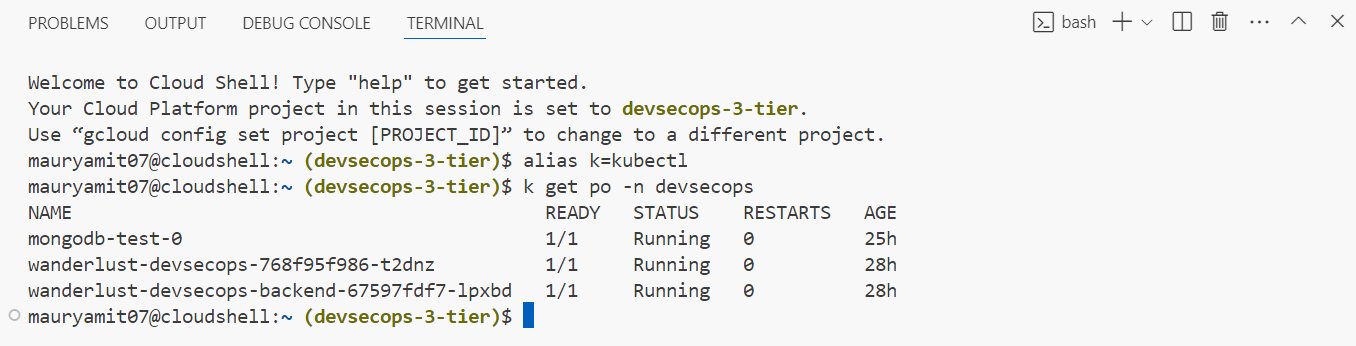

Now create the Kubernetes manifest files:

1) Namespace - Create the namespace “devsecops” to separate and isolate these resources from others.

kubectl create namespace devsecops

1) Service Account - To authenticate with Jenkins.

kubectl create serviceaccount jenkins-sa -n devsecops

2) Role - After service account creation, create the role which you want to give permissions to view in the cluster. For now, I am giving almost full access to the jenkins-sa.

3) Role-Binding - After role creation, bind the role or assign the permissions to jenkins-sa.

4) Cluster Role - Create cluster role for the resources PV (Persistent Volume) as it not namespaced resource.

5) Cluster Role Binding - Bind the cluster role with the jenkins-sa by creating cluster role binding.

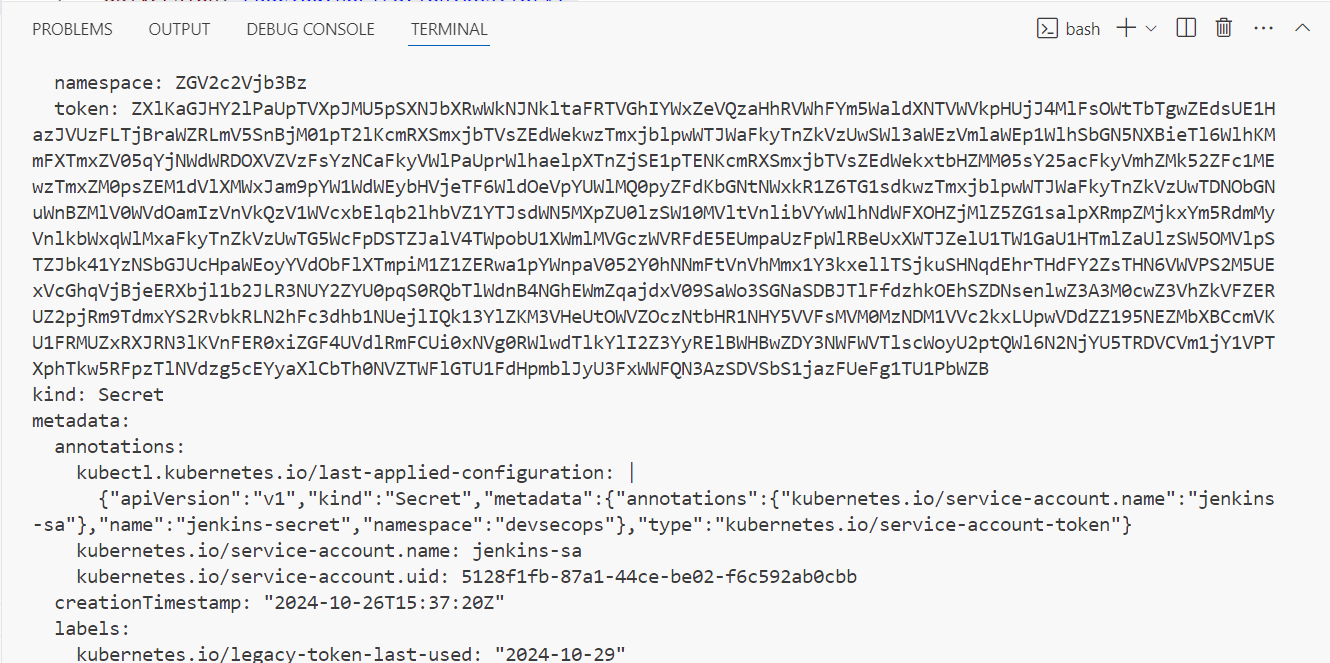

6) Secret - Create secret for the jenkins-sa to authenticate with service account token. After creation of secret copy the token by executing the command

kubectl get secret jenkins-secret -oyaml -n devsecops
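The Role, Role Binding, Cluster Role, Cluster Role Binding, and Secret steps above can be sketched with kubectl as follows. The names jenkins-role, jenkins-rolebinding, jenkins-pv-role, and jenkins-pv-binding, the verb and resource lists, and the run, DRY_RUN, and token_secret_manifest helpers are assumptions made for illustration; the manifests in the repository may differ. By default the script only prints the commands and the Secret manifest.

```shell
#!/bin/sh
# Sketch: RBAC and a token Secret for the jenkins-sa service account.
# Object names, verbs/resources and helper names are illustrative.
NS="${NS:-devsecops}"
SA="${SA:-jenkins-sa}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print only, 0 = run kubectl for real

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$@"; else "$@"; fi
}

# Namespaced permissions (an illustrative subset of "almost full access").
run kubectl create role jenkins-role -n "$NS" \
  --verb=get,list,watch,create,update,patch,delete \
  --resource=pods,services,configmaps,secrets,persistentvolumeclaims,deployments.apps
run kubectl create rolebinding jenkins-rolebinding -n "$NS" \
  --role=jenkins-role --serviceaccount="$NS:$SA"

# PersistentVolumes are cluster-scoped, so they need a ClusterRole.
run kubectl create clusterrole jenkins-pv-role \
  --verb=get,list,watch,create,delete --resource=persistentvolumes
run kubectl create clusterrolebinding jenkins-pv-binding \
  --clusterrole=jenkins-pv-role --serviceaccount="$NS:$SA"

# Secret of type service-account-token; Kubernetes fills in the token
# for the service account named in the annotation.
token_secret_manifest() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: jenkins-secret
  namespace: $NS
  annotations:
    kubernetes.io/service-account.name: $SA
type: kubernetes.io/service-account-token
EOF
}

if [ "$DRY_RUN" = "1" ]; then
  token_secret_manifest
else
  token_secret_manifest | kubectl apply -f -
  # Print the decoded token, ready to paste into a Jenkins credential.
  kubectl get secret jenkins-secret -n "$NS" -o jsonpath='{.data.token}' | base64 --decode
fi
```

The last command is the main practical difference from reading the YAML output: it prints the token already decoded.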

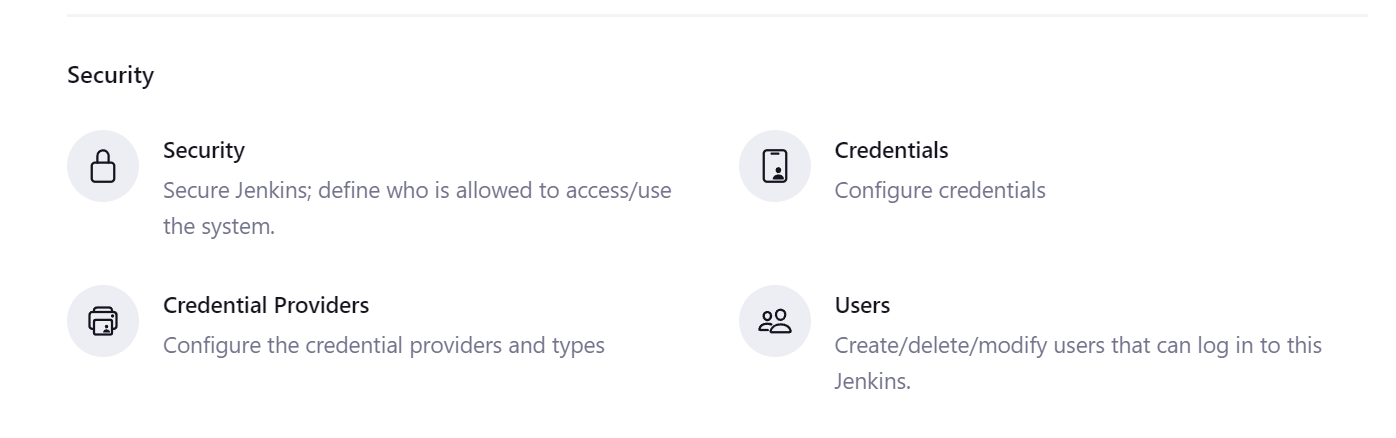

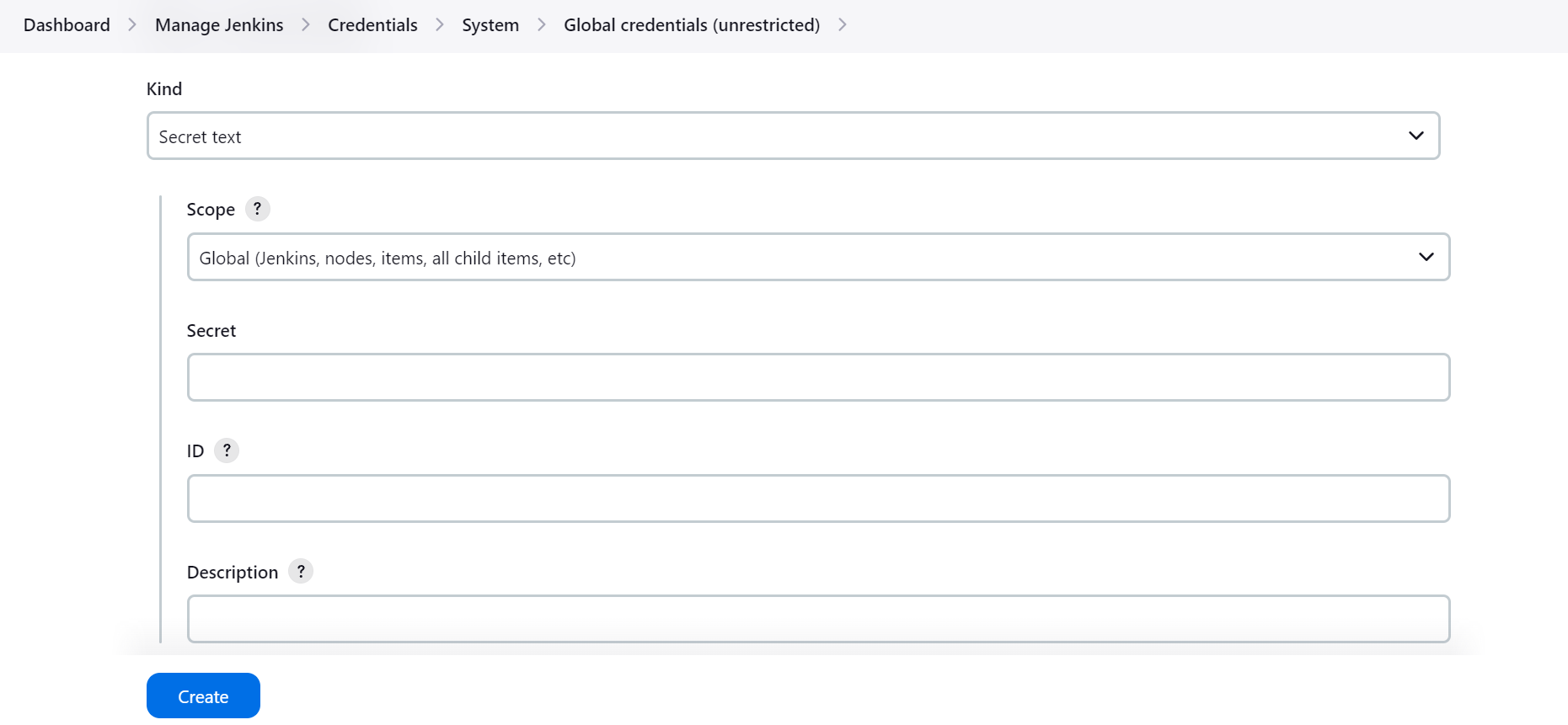

Now go to Jenkins and add the token as a credential of the Secret Text kind under Manage Jenkins > Credentials > System > Global Credentials.

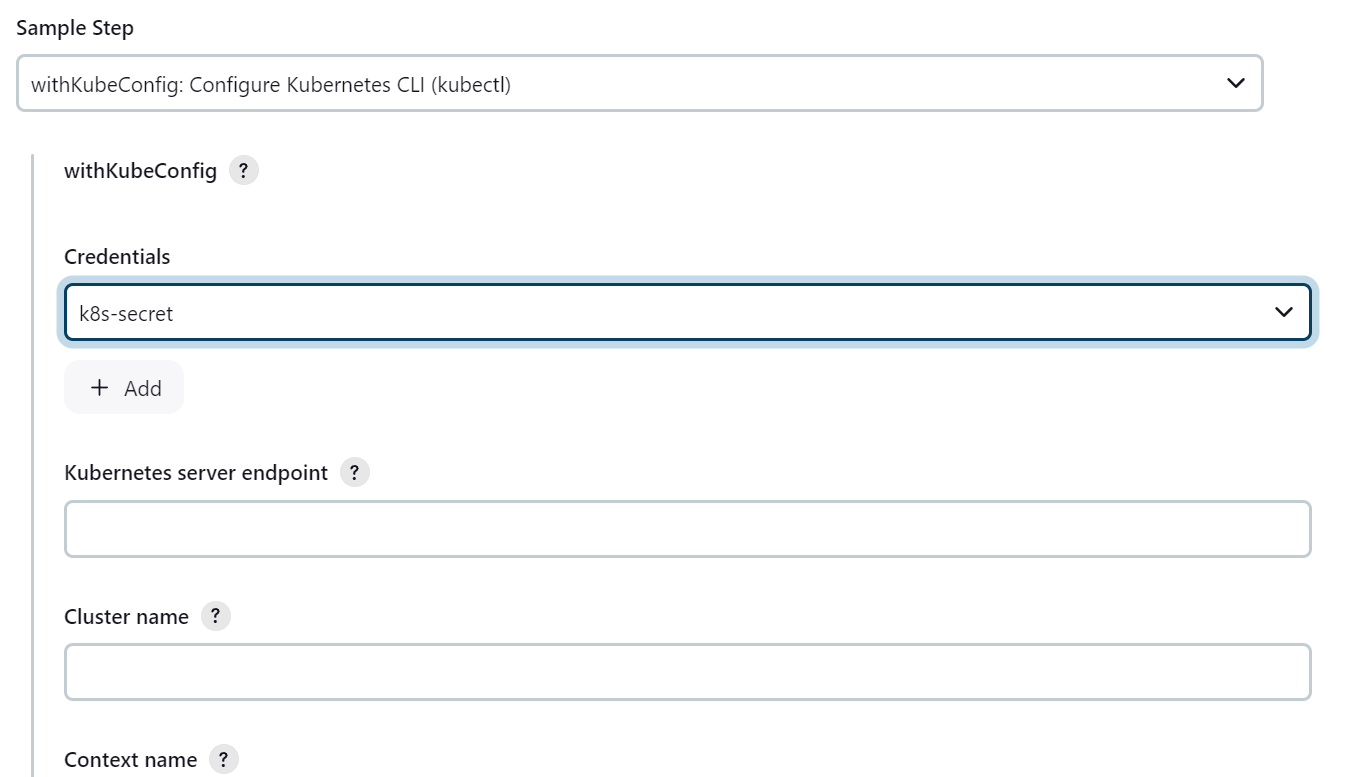

After adding the secret to the credentials, go to Pipeline Syntax, search for “withKubeConfig”, select the “k8s-secret” credentials, and fill in the required details: the Kubernetes server endpoint, the cluster name, and the namespace.

You will get all the details from the kubeconfig, namespace would be devsecops.

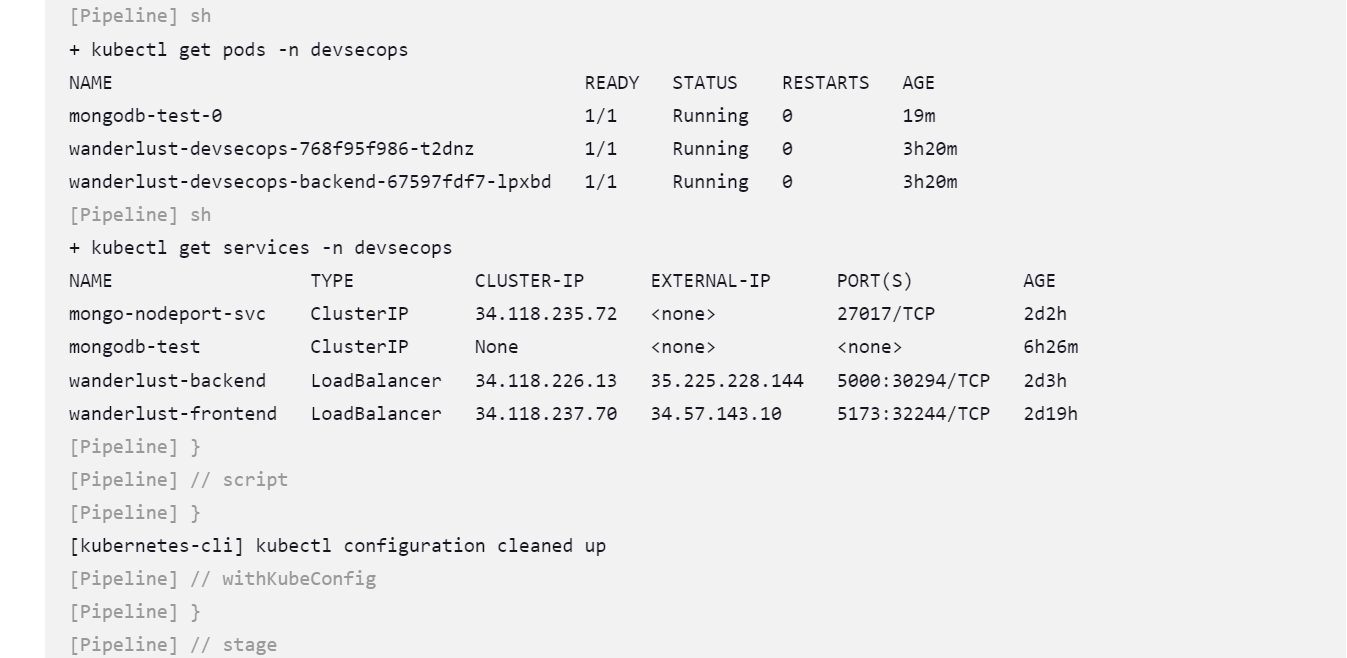

With all the details in place, head to the fourth and last stage of the Jenkinsfile, which applies all the Kubernetes manifest files.

            stage("Deploy to GKE Cluster") {
                steps {
                    withKubeConfig(caCertificate: '', clusterName: 'gke_devsecops-3-tier_us-central1_wanderlust-devsecops', contextName: '', credentialsId: 'k8s-secret', namespace: 'devsecops', restrictKubeConfigAccess: false, serverUrl: 'https://34.56.143.43') {
                        script {
                            sh "kubectl apply -f ./kubernetes -n devsecops"
                            sh "kubectl get pods -n devsecops"
                            sh "kubectl get services -n devsecops"
                        }
                    }
                }
            }
        }

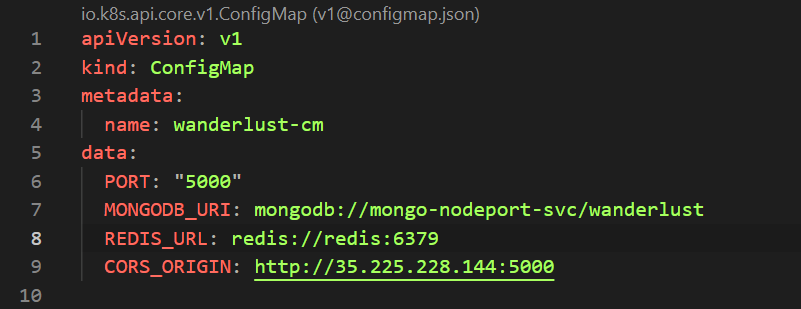

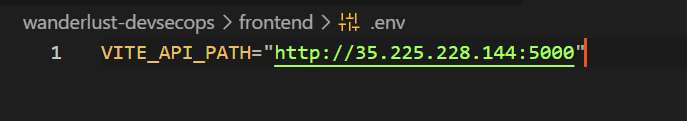

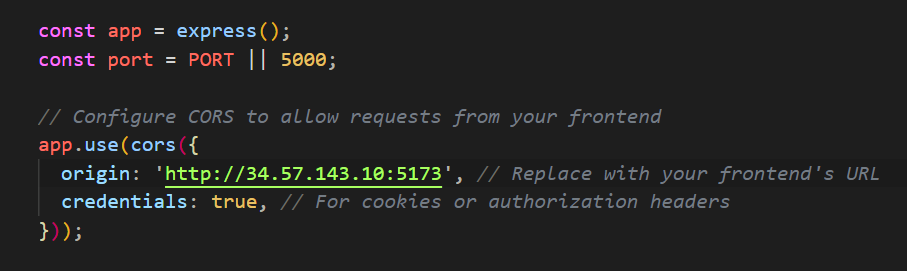

Before running this stage, change the URL in the CORS_ORIGIN section of backend-configmap, and update the frontend env with the deployed backend IP and port.

Then update the backend code in server.js with the frontend URL to resolve any CORS errors.

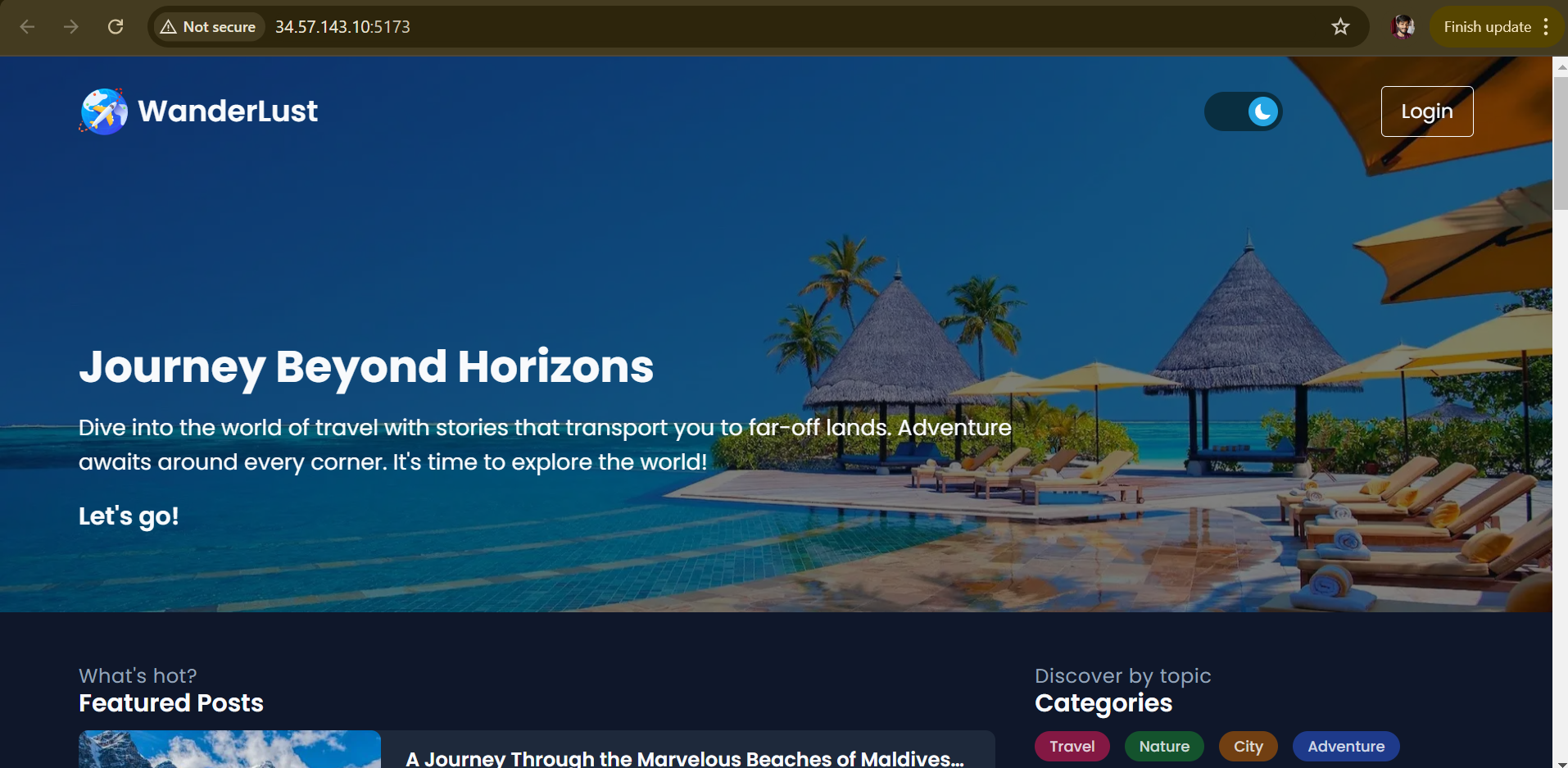

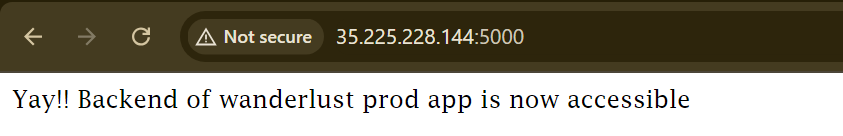

With this, the frontend, the backend, and the MongoDB database are deployed.
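A quick way to confirm the rollout from a terminal is sketched below. It assumes the workloads are Deployments in the devsecops namespace (a database running as a StatefulSet would need its own check), and the verify_rollout, run, and DRY_RUN names and the 180-second timeout are illustrative. By default the script only prints the commands.

```shell
#!/bin/sh
# Sketch: wait for all Deployments to become Available, then list resources.
# verify_rollout, run, DRY_RUN and the timeout are illustrative choices.
NS="${NS:-devsecops}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = print only, 0 = run kubectl for real

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$@"; else "$@"; fi
}

verify_rollout() {
  # Blocks until every Deployment in the namespace reports Available,
  # or fails after the timeout.
  run kubectl wait --for=condition=Available deployment --all -n "$NS" --timeout=180s || return 1
  # Show pods and services, including the external IPs of the services.
  run kubectl get pods,services -n "$NS" -o wide
}

verify_rollout
```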

If any issue arises, contact me on Twitter or LinkedIn. This project does not end here, though: I will implement a DevSecOps approach and share it with you.

And yes! My next blog will be on deploying this Wanderlust project on AWS, where I am using AWS ECS, AWS ECR, and AWS CI/CD.

Stay Tuned for the next blog !!

Conclusion

In review, this project showcases a DevOps setup that combines a three-tier architecture, a Jenkins CI/CD pipeline, Docker, and Kubernetes. The step-by-step process covered the GCP project setup, the Jenkins installation, the parameterized pipeline, and the deployment to GKE.

GitHub Code : https://github.com/amitmaurya07/wanderlust-devsecops/tree/gcp-devsecops

Twitter : https://x.com/amitmau07

LinkedIn : www.linkedin.com/in/amit-maurya07
