🚀 My Docker & DevOps Learning Journey!

Mohd Salman

Day 10: Digging Into ENV Variables & Multi-Stage Builds in Docker 🚀

Today’s Docker journey took me deeper into the concepts of ENV variables and multi-stage builds. These tools are essential for making applications secure, efficient, and easier to manage—especially as projects grow more complex. Let’s dive in!

🧠 What I Learned Today

ENV Variables

In software development, especially on the backend, we often use ENV (environment) variables to hold configuration and sensitive values such as database URLs, API keys, and other settings. The application reads them at runtime, so the values never have to be hard-coded or committed to the codebase, which makes the project safer and easier to configure.

Why ENV Variables Matter

In a large project, repeatedly updating URLs or database credentials directly in code can be cumbersome. ENV variables simplify this by allowing all configurations to be managed in one file, ensuring that these critical settings are easy to find and change.
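As a rough sketch of what "one place for configuration" can look like inside a Node.js app, the snippet below gathers every environment lookup into a single function. The function name loadConfig, the PORT variable, and the default port are assumptions made for illustration; MONGO_URI is the variable used in the Quick Example further down.

```javascript
// config.js: a hypothetical single place where the app reads its settings.
// PORT and its default of 3000 are illustrative assumptions.
function loadConfig(env = process.env) {
  // Fail early if a required variable was not passed to the container.
  const missing = ["MONGO_URI"].filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }
  return {
    mongoUri: env.MONGO_URI,
    port: Number(env.PORT || 3000),
  };
}

// Example with an explicit object instead of the real environment:
const config = loadConfig({ MONGO_URI: "mongodb://localhost:27017/dev", PORT: "4000" });
console.log(config.port); // 4000
```

With a helper like this, the rest of the code asks for config.mongoUri instead of touching process.env directly, so a renamed or newly required variable only has to be handled in one spot.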

Quick Example

To connect a container to a MongoDB database using ENV variables:

docker run -p 3000:3000 -e MONGO_URI=mongodb://username:password@hostname:port <image_tag>

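Here, MONGO_URI is set as an environment variable holding the MongoDB connection string, and -p 3000:3000 publishes the container's port 3000 on the host. Switching between configurations (like testing vs. production databases) only requires passing a different value, and the credentials stay out of the image and the codebase. Keep in mind that values passed with -e can still be read by anyone who can run docker inspect on the container, so this keeps secrets out of the code rather than providing full secret management.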
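On the application side, the value passed with -e shows up in process.env. The sketch below is one possible way to read it and to avoid printing the password when logging the connection target. The helper name redactCredentials and the local fallback URI are assumptions for illustration, not part of any library.

```javascript
// Hypothetical helper: hide the password before a connection string reaches the logs.
function redactCredentials(uri) {
  return uri.replace(/\/\/([^:@\/]+):([^@\/]+)@/, "//$1:****@");
}

// MONGO_URI is whatever was passed with `docker run -e MONGO_URI=...`.
// The fallback is only an illustrative local default.
const mongoUri = process.env.MONGO_URI || "mongodb://localhost:27017/dev";
console.log(`Connecting to ${redactCredentials(mongoUri)}`);

// The placeholder URI from the docker run example, with a concrete port:
console.log(redactCredentials("mongodb://username:password@hostname:27017"));
// mongodb://username:****@hostname:27017
```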

🚀 Multi-Stage Builds

Multi-stage builds let a single Dockerfile define several named stages, each starting with its own FROM instruction. One common use, and the one explored here, is giving each environment (such as development or production) its own stage on top of a shared base.

Why Use Multi-Stage Builds?

When developing, we often want tools like hot-reloading to instantly reflect code changes without restarting the server. However, in production, stability is essential, so disabling such features makes sense. Multi-stage builds allow us to create a development setup with hot-reloading and a separate, production-ready setup, all from the same Dockerfile.

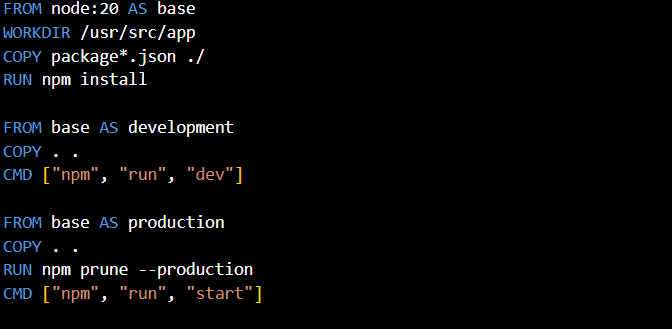

Example Dockerfile with Multi-Stage Builds

Let’s walk through a Dockerfile that sets up development and production stages for a Node.js application. This setup enables quick reloading during development and optimized performance in production:

# Base Stage: Shared configuration for all stages

FROM node:20 AS base

WORKDIR /usr/src/app

# Copies all files from the project to the container's working directory

COPY . .

# Installs dependencies

RUN npm install

# Development Stage: Includes hot-reloading for an active development environment

FROM base AS development

COPY . .

CMD ["npm", "run", "dev"]

# Production Stage: Optimized for performance

FROM base AS production

# Removes unnecessary development dependencies

RUN npm prune --production

CMD ["npm", "run", "start"]

Breakdown of Each Command:

Base Stage:

FROM node:20 AS base: Defines a base image with Node.js version 20.

WORKDIR /usr/src/app: Sets the working directory where the application will run.

COPY . .: Copies project files from the local directory to the container.

RUN npm install: Installs all necessary dependencies.

Development Stage:

FROM base AS development: Reuses the base image and adds development-specific commands.

CMD ["npm", "run", "dev"]: Starts the application in development mode, enabling hot-reloading.

Production Stage:

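FROM base AS production: Reuses the base image but with production-only optimizations.

RUN npm prune --production: Removes development dependencies from node_modules so they are absent from the production container's filesystem. Because they were installed in an earlier layer, this may not shrink the image by much. Newer npm versions spell this flag --omit=dev.

CMD ["npm", "run", "start"]: Launches the application in production mode.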

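Each stage builds on the shared base, which keeps the environments consistent and avoids repeating setup steps. To build one of them, pass the stage name to docker build with the --target flag (for example, --target development or --target production).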

🔥 Takeaway

ENV variables and multi-stage builds are essential tools that every developer working with Docker should know. ENV variables keep configurations clean and secure, while multi-stage builds allow developers to tailor the environment for specific needs without duplicating Dockerfiles.

What’s Next?

In my next update, I’ll dive into orchestration—how to handle multiple containers for larger applications using tools like Docker Compose. Stay tuned as I continue exploring Docker’s potential!

🔗 Follow My Docker Journey! I’m posting daily updates on what I’m learning, the challenges I encounter, and practical examples. Follow along with #SalmanDockerDevOpsJourney to see my progress!

Feel free to connect with me on LinkedIn or follow along with the hashtag!
