Kubernetes - The Ultimate Orchestration Tool

Anurag Hatole

Table of contents

- Why Kubernetes?

- Kubernetes Architecture

- Core Concepts of Kubernetes

- Networking in Kubernetes

- Traffic Management

- Persistent Storage in Kubernetes

- Configuration and Secrets Management

- Service Mesh: Microservice Communication

- Kubernetes Security

- Observability in Kubernetes

- Best Practices and Security Guidelines

- Common kubectl Commands and Troubleshooting Tips

- Troubleshooting Tips

- Conclusion

Kubernetes is a powerful tool for automating application deployment, scaling, and management in containerized environments. As an industry standard, it provides solutions to run and scale complex applications efficiently. This guide is crafted to take you from the basics of Kubernetes to advanced concepts, so by the end, you'll understand why each feature is important and how it fits into the big picture of cloud-native application management.

Why Kubernetes?

Containers revolutionized application deployment by providing isolated, consistent environments, but they presented new challenges in managing thousands of containers at scale. Kubernetes, also known as K8s, automates container management, helping developers deploy, scale, and operate applications efficiently. By abstracting infrastructure complexities, Kubernetes enables teams to focus on building applications rather than managing infrastructure.

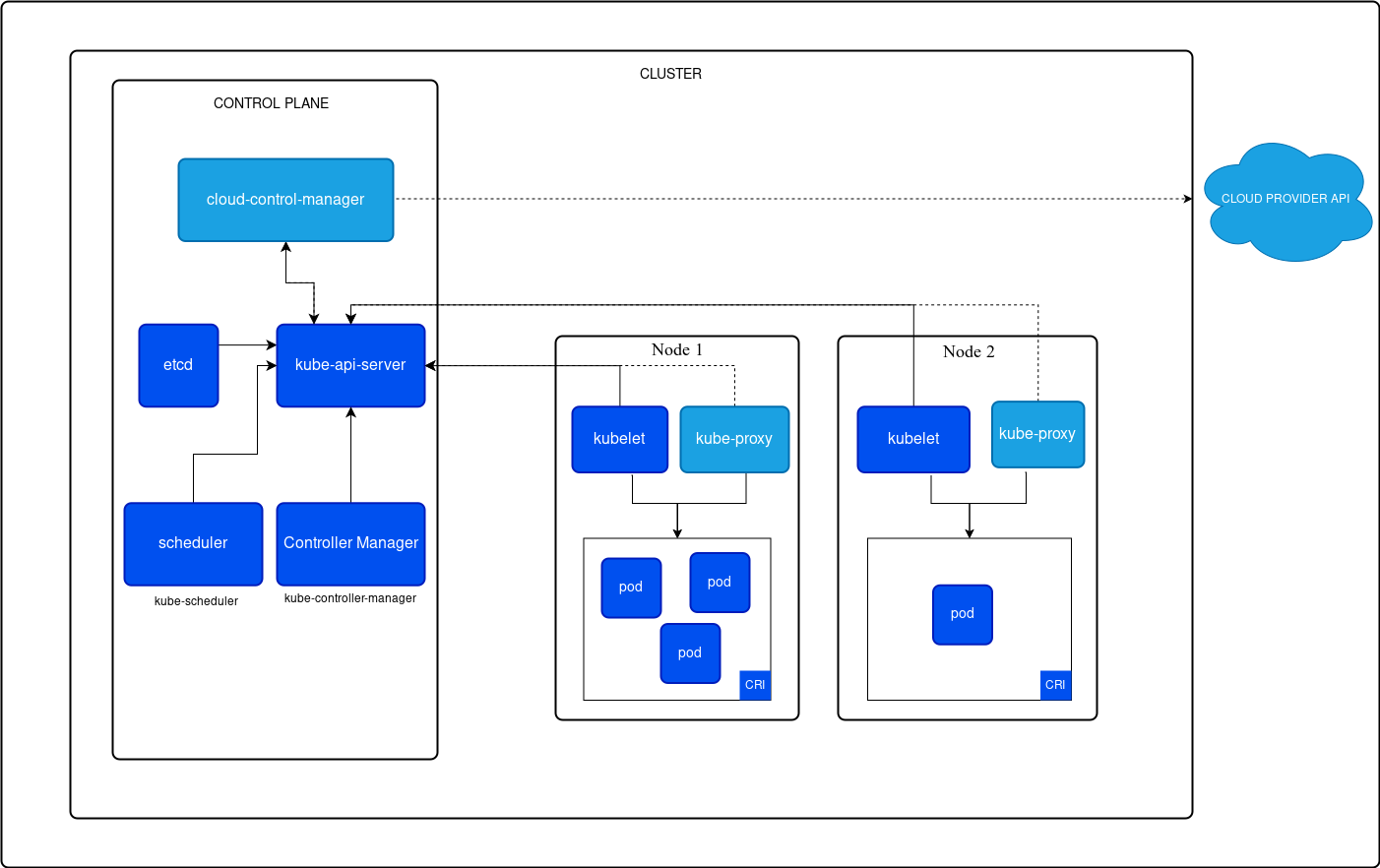

Kubernetes Architecture

1. Control Plane: The brain of Kubernetes, which manages the desired state of the cluster. Its components are:

API Server: The API Server is the interface between Kubernetes and its users (both human and components like kubelet). It’s responsible for processing RESTful requests (e.g., creating, reading, updating, and deleting Kubernetes objects), exposing the Kubernetes API, and validating and executing API requests.

Etcd: Etcd is a distributed, reliable key-value store that holds all cluster data in a strongly consistent way. It’s often considered the single source of truth in Kubernetes, as it stores configuration data, secrets, service discovery information, and cluster state.

Scheduler: The Scheduler allocates Pods to Nodes based on resource availability and defined constraints. It continuously monitors Pods without assigned nodes and places them where resources best meet their requirements.

Controller Manager: The Controller Manager is responsible for ensuring that the cluster matches the desired state defined in configuration files. It continuously reconciles the actual cluster state with the desired state, handling tasks such as replication, node monitoring, and endpoint management.

Cloud-Controller Manager: This component manages cloud-specific control logic and integrates the cluster with cloud infrastructure. It handles interactions with cloud providers to orchestrate resources such as load balancers and storage.

2. Node Plane: While the Control Plane manages the cluster's overall state, the node components run and manage containers on each node in the cluster. They are:

Kubelet: The Kubelet is the Kubernetes agent on each node, responsible for ensuring containers run in Pods. It communicates with the API Server and manages pod execution based on received instructions.

Kube-proxy: The kube-proxy component runs on every node and maintains the network rules that implement Services, so traffic sent to a Service's virtual IP is forwarded to one of the Pods behind it. Direct Pod-to-Pod connectivity across nodes is provided by the cluster's network plugin.

Pod: A Pod is the smallest deployable unit, representing a single instance of a running application. It can contain one or more containers that share the same network namespace, so they can reach each other over localhost, and that can share storage volumes.

Container Runtime: The container runtime executes the containers within Pods. The kubelet talks to it through the Container Runtime Interface (CRI), which supports containerd, CRI-O, and other compliant runtimes. Since Kubernetes 1.24, Docker Engine requires the cri-dockerd adapter.

Core Concepts of Kubernetes

- Pods: A Pod is the smallest deployable unit in Kubernetes. It typically runs a single container but can also group multiple containers that share storage and networking. Pods are ephemeral by nature, meaning they can be replaced as needed by Kubernetes to ensure high availability.

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-app
    spec:
      containers:
        - name: app-container
          image: myapp:latest
          ports:
            - containerPort: 80
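To illustrate the multi-container case mentioned above, here is a minimal sketch of a Pod whose two containers share a volume. The names `app-with-sidecar`, `shared-logs`, and `log-tailer`, the `busybox` image, and the assumption that the application writes to `/var/log/app/app.log` are all hypothetical and not part of the original example.

```yaml
# Illustrative only: names, images, and paths are placeholders.
apiVersion: v1
kind: Pod
metadata:
  name: app-with-sidecar
spec:
  volumes:
    - name: shared-logs
      emptyDir: {}            # scratch volume that lives as long as the Pod
  containers:
    - name: app-container
      image: myapp:latest
      volumeMounts:
        - name: shared-logs
          mountPath: /var/log/app   # assumed log directory of the app
    - name: log-tailer
      image: busybox:1.36
      # Create the file if it is missing, then stream it to stdout
      command: ["sh", "-c", "touch /logs/app.log && tail -f /logs/app.log"]
      volumeMounts:
        - name: shared-logs
          mountPath: /logs          # same volume, different mount path
```

Both containers also share the Pod's network namespace, so the sidecar could reach the application on `localhost:80` without a Service.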

- Labels and Selectors: Labels are key-value pairs assigned to Kubernetes objects like Pods and Services. They allow for flexible selection and grouping of resources, making it easier to perform bulk operations. Selectors use these labels to identify resources, allowing tools like Services and ReplicaSets to target specific Pods.

    metadata:
      labels:
        app: my-app
        environment: production

- Namespaces: Namespaces provide a way to segment resources within a cluster, allowing multiple teams or projects to share the same Kubernetes environment without conflicts. For instance, you could have development, staging, and production namespaces, each isolated from the others.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: development

- Annotations: Annotations attach non-identifying metadata to Kubernetes objects. Unlike labels, they cannot be used by selectors to pick objects; they hold information for people and tools, such as descriptions, build details, or controller-specific settings.

    metadata:
      annotations:
        description: "This is my production deployment of the app."

- ReplicaSet: A ReplicaSet ensures a specific number of identical Pods are running at all times. If a Pod fails, the ReplicaSet automatically replaces it.

    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: my-replicaset
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
            - name: my-app
              image: myapp:latest

- Deployments: Deployments build on ReplicaSets by automating updates, rollbacks, and scaling. A Deployment manages the Pods in a ReplicaSet and ensures they are running according to a desired state.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: my-app
      labels:
        app: my-app
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-app
      template:
        metadata:
          labels:
            app: my-app
        spec:
          containers:
            - name: my-container
              image: my-app-image:latest
              ports:
                - containerPort: 80
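How a Deployment rolls out an update is controlled by its `strategy` field. The fragment below is a sketch of a rolling update configuration that could be merged into the Deployment spec above; the `maxSurge` and `maxUnavailable` values are illustrative assumptions, not recommendations from the original article.

```yaml
# Fragment of a Deployment spec; values are illustrative.
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most one extra Pod above the desired count during an update
      maxUnavailable: 0   # never drop below the desired count while updating
```

With settings like these, Kubernetes replaces Pods one at a time. If a new version misbehaves, `kubectl rollout undo deployment/my-app` returns the Deployment to its previous revision.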

Networking in Kubernetes

1. Services: Services provide stable endpoints for accessing Pods, which are ephemeral. Kubernetes offers several types of services:

ClusterIP: Accessible only within the cluster.

NodePort: Exposes the service on a static port on each node.

LoadBalancer: Integrates with cloud providers to offer an external load balancer.

    apiVersion: v1
    kind: Service
    metadata:
      name: my-service
    spec:
      type: ClusterIP
      selector:
        app: my-app
      ports:
        - port: 80
          targetPort: 80
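For comparison with the ClusterIP example, a NodePort Service differs only in its `type` and an optional `nodePort`. This is a sketch: the name `my-service-nodeport` and the port number `30080` are assumptions chosen for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service-nodeport
spec:
  type: NodePort
  selector:
    app: my-app
  ports:
    - port: 80          # port exposed inside the cluster
      targetPort: 80    # port the container listens on
      nodePort: 30080   # must fall in the NodePort range (30000-32767 by default)
```

If `nodePort` is omitted, Kubernetes picks a free port from the range automatically.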

2. Ingress: An Ingress provides a way to route HTTP and HTTPS traffic to services based on hostnames and paths. It’s a powerful way to manage access to your applications externally.

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
    spec:
      rules:
        - host: myapp.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: my-service
                    port:
                      number: 80

Traffic Management

1. Ingress Controllers: Ingress resources require an Ingress Controller (e.g., NGINX or Traefik) to enforce rules. The Ingress Controller translates Ingress definitions into load balancing, SSL, and routing configurations, managing traffic in a way that’s both scalable and secure.

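2. Gateway API: The Gateway API is the successor to Ingress for traffic management in Kubernetes. It takes a more flexible, role-oriented approach: infrastructure providers define GatewayClasses, cluster operators define Gateways, and application developers attach routes such as HTTPRoute. This enables advanced routing features, such as header-based matching and weighted traffic splitting, that Ingress supports only through controller-specific annotations.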
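As a rough Gateway API counterpart to the Ingress example shown earlier, the sketch below routes the same host and path to `my-service`. It assumes the Gateway API CRDs are installed in the cluster and that a Gateway named `my-gateway` already exists; both the Gateway name and the route name `my-route` are hypothetical.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: my-route
spec:
  parentRefs:
    - name: my-gateway        # assumed, pre-existing Gateway
  hostnames:
    - "myapp.com"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: my-service
          port: 80
```

The route is owned by the application team, while the Gateway it attaches to can be managed separately by cluster operators.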

Persistent Storage in Kubernetes

In Kubernetes, storage is crucial for stateful applications. Here are the main components:

Volumes: Storage attached to a Pod. Ephemeral volume types such as emptyDir are tied to the Pod's lifecycle, so their data disappears when the Pod is deleted.

Persistent Volumes (PVs): Storage resources that exist independently of Pods.

Persistent Volume Claims (PVCs): Requests for storage resources by Pods, linked to PVs.

    # Persistent Volume
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv-data
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteOnce
      hostPath:
        path: "/mnt/data"
    ---
    # Persistent Volume Claim
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc-data
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
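A claim only becomes useful once a Pod mounts it. The sketch below shows one way a Pod could consume `pvc-data`; the Pod name `pvc-demo`, the volume name `data`, and the mount path `/data` are assumptions for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo
spec:
  containers:
    - name: demo-app
      image: myapp:latest
      volumeMounts:
        - name: data
          mountPath: /data          # where the storage appears inside the container
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: pvc-data         # the claim defined above
```

Because the data lives in the PersistentVolume rather than in the Pod, it survives the Pod being deleted and recreated.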

Configuration and Secrets Management

Managing application configurations and sensitive data securely is crucial in Kubernetes. Here’s how Kubernetes handles configuration and secrets:

1. ConfigMaps: ConfigMaps allow you to decouple configuration details from your container images, making applications more flexible and portable. ConfigMaps store non-sensitive data, like environment-specific variables or application settings, and can be injected into Pods as environment variables or mounted as files.

Example ConfigMap:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: app-config
    data:
      database_url: "mongodb://db-service:27017"
      log_level: "debug"

You can then reference this ConfigMap in a pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: config-demo
    spec:
      containers:
        - name: demo-app
          image: myapp:latest
          envFrom:
            - configMapRef:
                name: app-config
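The same ConfigMap can instead be mounted as files, the second injection method mentioned above. In this sketch, the Pod name `config-volume-demo`, the volume name `config`, and the mount path `/etc/app-config` are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: config-volume-demo
spec:
  containers:
    - name: demo-app
      image: myapp:latest
      volumeMounts:
        - name: config
          mountPath: /etc/app-config
          readOnly: true
  volumes:
    - name: config
      configMap:
        name: app-config     # each key becomes a file under the mount path
```

With this setup, the container would see `/etc/app-config/database_url` and `/etc/app-config/log_level`, each containing the corresponding value.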

2. Secrets: Secrets in Kubernetes are used to store sensitive data, like passwords, API keys, and SSH credentials. Secrets are similar to ConfigMaps, but their values are Base64-encoded. Base64 is an encoding, not encryption: anyone who can read the Secret can decode it. By default, Secrets are stored unencrypted in etcd, so enabling encryption at rest and restricting access with RBAC is recommended for high-security setups.

Example Secret:

    apiVersion: v1
    kind: Secret
    metadata:
      name: db-secret
    type: Opaque
    data:
      db_password: dGVzdHBhc3M= # 'testpass' encoded in Base64

Using Secrets in a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: secret-demo
    spec:
      containers:
        - name: demo-app
          image: myapp:latest
          env:
            - name: DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: db-secret
                  key: db_password
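Encryption at rest for Secrets is configured on the API server rather than on the Secret itself. The following is a minimal sketch of an encryption configuration file, assuming a self-managed control plane where you can pass it to kube-apiserver with the `--encryption-provider-config` flag. The key name `key1` is a placeholder, and the key material is deliberately left unfilled.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: <base64-encoded 32-byte key>   # placeholder, generate your own
      - identity: {}    # fallback so existing unencrypted Secrets can still be read
```

On managed Kubernetes services the control plane is usually not directly configurable, and encryption at rest is typically enabled through the provider's own settings instead.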

Service Mesh: Microservice Communication

In large, distributed applications with many microservices, handling traffic between services can be challenging. A Service Mesh provides a dedicated infrastructure layer for managing service-to-service communication, making it easy to implement advanced networking features without altering application code.

Benefits of Service Mesh:

Traffic Management: Control request routing, load balancing, and retries.

Observability: Track requests and monitor latency, errors, and traffic.

Security: Enforce policies for authentication, authorization, and encryption.

Istio and Linkerd are popular Service Mesh solutions for Kubernetes. These tools use sidecar proxies, which are injected into each Pod to manage traffic in and out of the container.

Example of Istio VirtualService for Traffic Routing:

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: my-service
    spec:
      hosts:
        - my-app-service
      http:
        - route:
            - destination:
                host: my-app-service
                subset: v1
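The `subset: v1` referenced in the VirtualService has to be defined somewhere, and in Istio that is done with a DestinationRule. The sketch below assumes the Pods behind `my-app-service` carry a `version: v1` label; that label and the rule's name are assumptions not present in the original example.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: my-app-service
spec:
  host: my-app-service
  subsets:
    - name: v1
      labels:
        version: v1     # assumed Pod label that identifies this subset
```

Adding a second subset (for example `v2`) and weighting the routes in the VirtualService is the usual basis for canary releases.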

Kubernetes Security

Security is fundamental in Kubernetes, especially in production environments. Here are core security practices:

1. Role-Based Access Control (RBAC)

RBAC restricts access to cluster resources based on user roles, ensuring users only access what they need. This is achieved through:

Roles: Define permissions within a namespace.

ClusterRoles: Define permissions across the entire cluster.

RoleBindings: Bind a Role to a user or group.

Example Role and RoleBinding:

    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      namespace: default
      name: pod-reader
    rules:
      - apiGroups: [""]
        resources: ["pods"]
        verbs: ["get", "watch", "list"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: read-pods
      namespace: default
    subjects:
      - kind: User
        name: "example-user"
    roleRef:
      kind: Role
      name: pod-reader
      apiGroup: rbac.authorization.k8s.io
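The example above is namespace-scoped. For cluster-wide resources such as nodes, the equivalent pair is a ClusterRole and a ClusterRoleBinding. This is a sketch: the names `node-reader` and `read-nodes` and the group `example-ops-group` are hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-reader            # no namespace: ClusterRoles are cluster-scoped
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "watch", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: read-nodes
subjects:
  - kind: Group
    name: "example-ops-group"
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: node-reader
  apiGroup: rbac.authorization.k8s.io
```

Access can be checked with `kubectl auth can-i list nodes --as=example-user --as-group=example-ops-group`, which impersonates a member of the bound group.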

2. Network Policies

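Network Policies define how Pods can communicate with each other and with other network endpoints. They act as a firewall for controlling traffic at the Pod level. They are enforced by the cluster's network plugin, so they only take effect if the CNI plugin in use supports them.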

Example Network Policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-web
      namespace: default
    spec:
      podSelector:
        matchLabels:
          role: web
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  role: frontend
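Allow rules like the one above are often paired with a default-deny policy, so that any traffic not explicitly allowed is blocked. A minimal sketch, with the policy name `default-deny-ingress` chosen for illustration:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: default
spec:
  podSelector: {}      # empty selector: applies to every Pod in the namespace
  policyTypes:
    - Ingress          # no ingress rules listed, so all inbound traffic is denied
```

Network policies are additive, so with both policies applied, Pods labeled `role: web` still accept traffic from `role: frontend` Pods while everything else in the namespace is closed to inbound connections.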

Observability in Kubernetes

Observability in Kubernetes refers to the ability to understand the internal states and behaviors of the system by collecting and analyzing various types of data. It encompasses three primary components: Monitoring, Logging, and Tracing. Together, they provide insights into system performance, troubleshooting, and debugging.

1. Monitoring: Monitoring focuses on gathering and analyzing metrics over time to understand the health and performance of Kubernetes clusters and applications. Metrics can track resource utilization (like CPU, memory), network traffic, and application-specific data. Popular tools for Kubernetes monitoring include Prometheus and Grafana.

Prometheus: An open-source monitoring and alerting toolkit designed specifically for monitoring dynamic containerized environments. Prometheus collects real-time metrics by scraping HTTP endpoints and stores them as time-series data.

Grafana: A data visualization tool that integrates with Prometheus to create interactive dashboards for visualizing metrics and setting up alerts.

Prometheus Configuration Example:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: prometheus-config
      namespace: monitoring
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
        scrape_configs:
          - job_name: 'kubernetes-pods'
            kubernetes_sd_configs:
              - role: pod
            relabel_configs:
              # Keep only Pods that carry a non-empty "app" label
              - source_labels: [__meta_kubernetes_pod_label_app]
                action: keep
                regex: .+

Once configured, Grafana can display Prometheus data in customized dashboards, showing metrics like CPU usage, memory, and request latency.

2. Logging: Logging is essential for capturing events and messages generated by applications and Kubernetes components. Logs provide a historical record of what happened in the system and are invaluable for debugging and troubleshooting issues. Logs in Kubernetes can come from various sources, including:

Container logs: Application-specific logs from individual containers.

Kubernetes component logs: Logs from the Kubernetes API server, scheduler, and controller manager.

To efficiently manage and search logs, Kubernetes clusters often use the EFK Stack (Elasticsearch, Fluentd, and Kibana):

Elasticsearch: Stores log data and allows for fast retrieval.

Fluentd: A log collector and forwarder, which captures logs from Kubernetes nodes and sends them to Elasticsearch.

Kibana: A visualization tool for exploring and analyzing logs stored in Elasticsearch.

Example Fluentd DaemonSet for Log Collection:

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd
      namespace: kube-logging
    spec:
      selector:
        matchLabels:
          app: fluentd
      template:
        metadata:
          labels:
            app: fluentd
        spec:
          containers:
            - name: fluentd
              image: fluentd/fluentd-kubernetes-daemonset:v1.11
              env:
                - name: FLUENT_ELASTICSEARCH_HOST
                  value: "elasticsearch.kube-logging"
              volumeMounts:
                - name: varlog
                  mountPath: /var/log
          volumes:
            - name: varlog
              hostPath:
                path: /var/log

With this setup, you can use Kibana to visualize logs, search for specific events, and troubleshoot errors.

3. Tracing: Tracing helps understand the flow of requests as they pass through various services in an application, particularly in microservices architectures. It provides insight into latency and identifies performance bottlenecks. Distributed tracing is especially useful in Kubernetes environments, where requests may travel through multiple microservices.

Jaeger and OpenTelemetry are popular tools for distributed tracing in Kubernetes.

Jaeger: An end-to-end distributed tracing system, initially developed by Uber. Jaeger captures spans (individual units of work in a trace) and visualizes the full request path through multiple services.

OpenTelemetry: A unified framework for collecting traces, metrics, and logs. OpenTelemetry is becoming the standard for observability and integrates well with both Prometheus and Jaeger.

Example Jaeger Deployment in Kubernetes:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: jaeger-config
      namespace: tracing
    data:
      jaeger-agent-config.yml: |
        collector:
          zipkin:
            http:
              enabled: true
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: jaeger
      namespace: tracing
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: jaeger
      template:
        metadata:
          labels:
            app: jaeger
        spec:
          containers:
            - name: jaeger
              image: jaegertracing/all-in-one:1.20
              ports:
                - containerPort: 16686   # Jaeger UI (HTTP)
                - containerPort: 6831    # agent port for spans (compact Thrift)
                  protocol: UDP

When tracing is enabled, you can follow the request's path from the initial request through each service and back to the response, providing valuable insights into latency and potential areas for optimization.

Best Practices and Security Guidelines

Ensuring security and following best practices is essential when deploying applications on Kubernetes. Here are some key guidelines:

Namespaces and RBAC: Use namespaces to organize resources and enforce isolation. Set up Role-Based Access Control (RBAC) to limit access to only those who need it.

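Pod Security: Enforce policies that prevent privileged containers and restrict access to resources like the host network and file systems. PodSecurityPolicy was removed in Kubernetes 1.25; its built-in replacement is Pod Security Admission, which applies the Pod Security Standards per namespace.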

Network Policies: Control communication between pods with network policies. This can be helpful to isolate sensitive services (e.g., databases) from public traffic.

Image Security: Use trusted images and scan them for vulnerabilities regularly. Limit the use of root users in images to reduce risks.

Secrets Management: Avoid hard-coding sensitive information in configurations. Use Kubernetes Secrets to manage sensitive data like API keys, credentials, and tokens.

Resource Limits: Define resource requests and limits for CPU and memory to prevent pods from overloading the cluster and affecting other applications.

Implementing these security measures strengthens your Kubernetes environment against threats and ensures the stability of your applications.
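Several of these guidelines can be expressed directly in manifests. The sketch below combines a namespace that enforces the restricted Pod Security Standard through Pod Security Admission (the built-in mechanism since PodSecurityPolicy was removed in Kubernetes 1.25) with a Pod that sets a hardened security context and resource requests and limits. The Pod name `hardened-demo` and all resource values are illustrative assumptions, and the example presumes the `myapp:latest` image is built to run as a non-root user.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: production
  labels:
    pod-security.kubernetes.io/enforce: restricted   # reject Pods that violate the restricted profile
---
apiVersion: v1
kind: Pod
metadata:
  name: hardened-demo
  namespace: production
spec:
  securityContext:
    runAsNonRoot: true            # refuse to start containers running as UID 0
    seccompProfile:
      type: RuntimeDefault
  containers:
    - name: demo-app
      image: myapp:latest
      securityContext:
        allowPrivilegeEscalation: false
        capabilities:
          drop: ["ALL"]
      resources:
        requests:                 # what the scheduler reserves for the container
          cpu: 100m
          memory: 128Mi
        limits:                   # hard ceiling enforced at runtime
          cpu: 500m
          memory: 256Mi
```

Requests drive scheduling decisions, while limits cap what a container can consume, so one misbehaving Pod cannot starve its neighbours.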

Common kubectl Commands and Troubleshooting Tips

kubectl is Kubernetes’ command-line tool, and mastering it is essential for effective Kubernetes management. Here are some frequently used commands:

View All Pods in a Namespace:

    kubectl get pods -n <namespace>

Describe a Pod to Check Events and Details:

    kubectl describe pod <pod-name>

Check Logs of a Pod:

    kubectl logs <pod-name>

Scale a Deployment:

    kubectl scale deployment <deployment-name> --replicas=<number>

Delete a Resource:

    kubectl delete <resource-type> <resource-name>

Troubleshooting Tips

CrashLoopBackOff Errors: Run kubectl describe pod <pod-name> to get details on why a pod is crashing.

Connectivity Issues: Verify service endpoints and ensure that network policies aren’t blocking communication.

High Resource Usage: Check resource allocation with kubectl top pod and adjust resources if necessary.

These commands help in navigating, managing, and troubleshooting common Kubernetes issues, making daily operations smoother.

Conclusion

Kubernetes has revolutionized the way modern applications are deployed, scaled, and managed in cloud environments. By providing an automated, self-healing, and scalable platform, it empowers teams to focus on building resilient applications that meet business demands. This article covered foundational Kubernetes concepts, networking, storage, configuration, security best practices, observability, and common commands, all essential for mastering Kubernetes.

Let Kubernetes handle the orchestration so you can focus on creating value with your applications.
