Generative AI - Nvidia Course Notes

Jayachandran Ramadoss

I have written this article as a summary, from my own perspective, of what I learned in the Nvidia crash course “Generative AI”.

Central Idea of Generative AI

If a model is strong enough to learn the structure of data, then it can generate new data too.

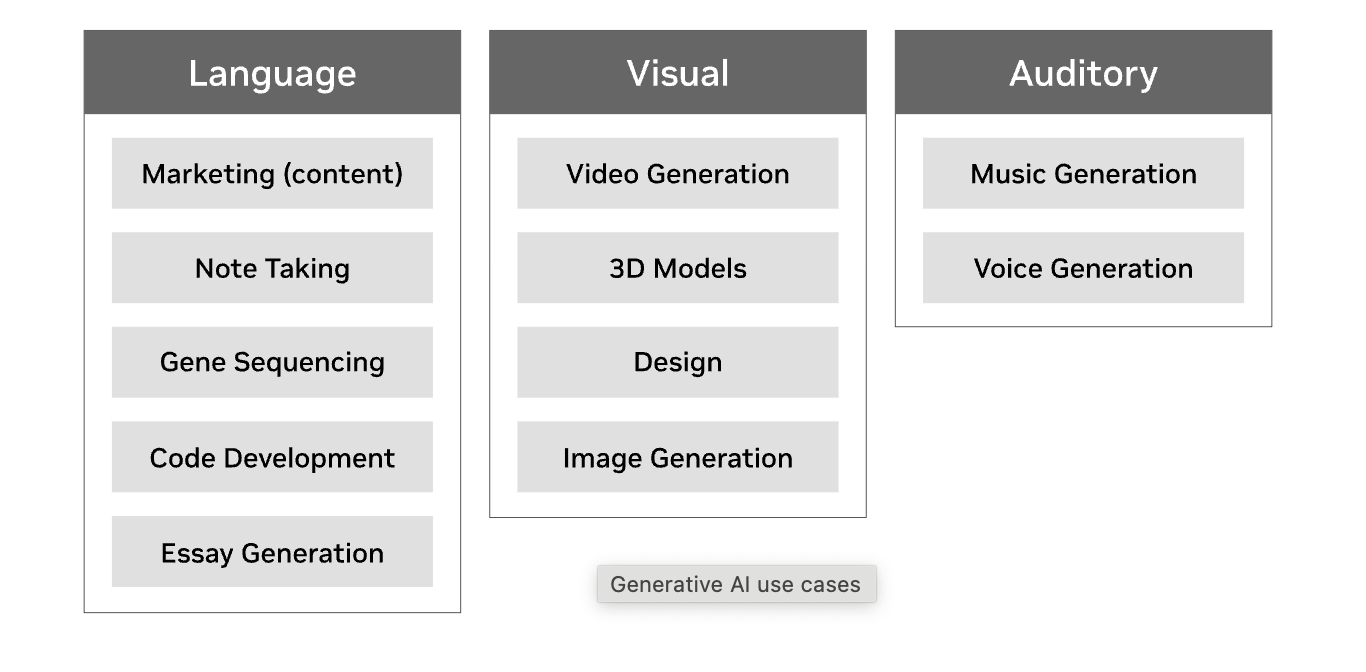

Generative AI has become one of the most exciting fields within artificial intelligence, transforming how we approach content creation across text, images, sound, animations, and even complex 3D models. Let’s dive into what generative AI is, its evolution, and some fascinating examples that show just how powerful it has become.

What is Generative AI?

Generative AI refers to systems that can create new content by recognising and understanding existing patterns in data. This process involves a variety of input-output combinations—be it text, images, sound, or animations. By leveraging advanced neural networks, these AI models can identify the structure in data, thereby enabling the creation of novel, yet coherent, outputs that feel original.

How Does Generative AI Work?

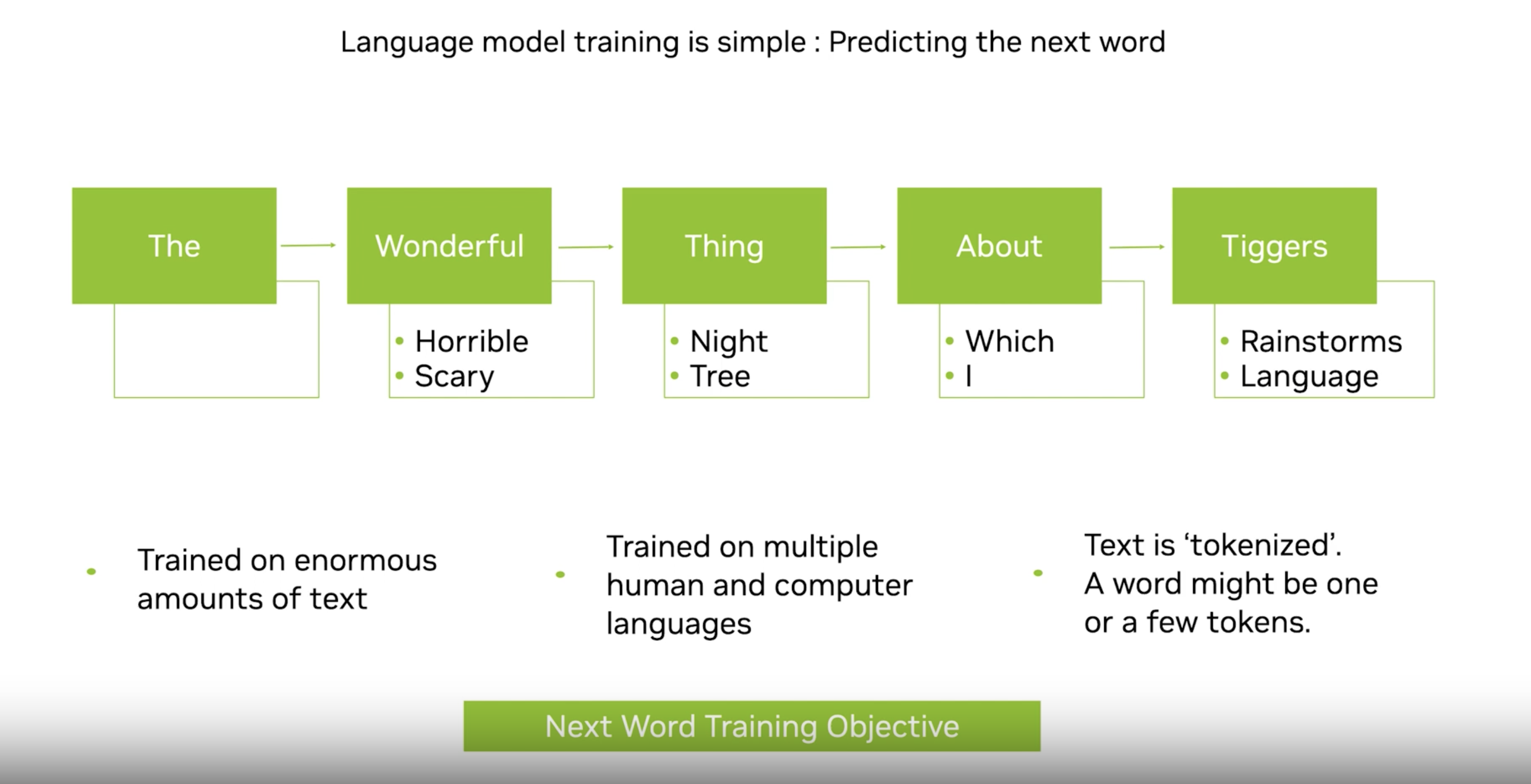

At the core of generative AI is machine learning, particularly neural networks that learn from vast datasets. These models detect patterns, uncover relationships, and construct probability distributions to predict and generate new instances that follow the patterns found in training data.

To make this process relatable, imagine your smartphone’s autocomplete feature. When you start typing, it predicts and suggests the next word based on its understanding of previous sentences you’ve written. Generative AI does something similar but on a grander scale, producing anything from realistic artwork to complex textual responses.
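The autocomplete analogy can be made concrete with a toy bigram model. It counts which word follows which in a tiny, made-up corpus, turns those counts into a probability distribution, and samples from that distribution to generate text. This is a minimal sketch for intuition only: real generative models replace the counting with neural networks trained on vast datasets, and the helper names here are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Normalize the follow-counts into a probability distribution."""
    following = counts.get(word.lower(), Counter())
    total = sum(following.values())
    return {w: c / total for w, c in following.items()} if total else {}


def suggest(counts, word):
    """Autocomplete: return the most likely next word, or None if the word is unseen."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None


def generate(counts, start, max_words=5, seed=0):
    """Generate text by repeatedly sampling the next word from the learned distribution."""
    rng = random.Random(seed)
    words = [start.lower()]
    for _ in range(max_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:  # no known continuation, so stop
            break
        words.append(rng.choices(list(probs), weights=list(probs.values()))[0])
    return " ".join(words)


# Made-up training data for illustration.
corpus = ["see you tomorrow", "see you soon", "see you tomorrow morning"]
model = train_bigram(corpus)
print(suggest(model, "you"))   # tomorrow (it followed "you" 2 times out of 3)
print(generate(model, "see"))  # a sequence sampled from the learned patterns
```

The two steps, learning a distribution from data and sampling from it, are the same ones larger generative models perform at a vastly greater scale.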

The Evolution of Generative AI

From Classification to Creation

Generative AI evolved from simpler classification models.

Initially, these models could only identify patterns and categorize data, such as distinguishing cats from dogs in an image.

The breakthrough came when researchers realized that, if models could learn the structure within data, they could potentially create new data as well.

For instance, when you show a model thousands of cat images, it learns to recognize pixel patterns that indicate a “cat.”

By reversing this process, the AI can create new images of cats based on these patterns, often generating realistic or artistically styled versions of cats it has never “seen” before.

Generative vs. Discriminative Models

Generative AI is different from discriminative AI, which focuses on distinguishing between categories. Discriminative models are trained to classify data—think spam vs. non-spam emails—while generative models go a step further by using the patterns they’ve learned to produce entirely new, plausible outputs.
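To make the distinction concrete, here is a toy comparison on made-up one-dimensional data. The discriminative classifier learns only a threshold between the two classes, while the generative one fits a Gaussian to each class (a simplifying assumption, similar in spirit to Gaussian naive Bayes), so it can both classify and sample new data points. It is a sketch for intuition, not how image models are actually built.

```python
import statistics

# Made-up 1-D feature values (e.g. a hypothetical "ear pointiness" score).
data = {
    "cat": [7.9, 8.4, 8.1, 7.6, 8.0],
    "dog": [4.2, 5.1, 4.8, 3.9, 4.5],
}

# Discriminative: learn only a boundary that separates the classes.
threshold = (max(data["dog"]) + min(data["cat"])) / 2


def classify_discriminative(x):
    return "cat" if x > threshold else "dog"


# Generative: model how each class's data is distributed (one Gaussian per class).
models = {label: statistics.NormalDist.from_samples(xs) for label, xs in data.items()}


def classify_generative(x):
    # With equal class priors, pick the class under which x is most likely.
    return max(models, key=lambda label: models[label].pdf(x))


def generate(label, n, seed=0):
    # Because the model describes the data itself, it can also sample new examples.
    return models[label].samples(n, seed=seed)


print(classify_discriminative(7.0), classify_generative(7.0))  # cat cat
print(generate("cat", 3))  # three new "cat" feature values not in the training data
```

Both models agree on classification here, but only the generative one can answer "what does a typical cat look like?" by producing new samples.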

Evolution with GPT Models

Each generation of GPT—starting from GPT-1 and advancing through GPT-2, GPT-3, and GPT-4—brought about remarkable improvements that not only pushed the boundaries of generative AI but also changed how we use AI in practical applications.

Here’s a detailed look at the evolution of these models, their impact on generative AI, and how each iteration has contributed to its capabilities.

1. GPT-1:

• Released: 2018

• Innovation: GPT-1 popularized transfer learning for language models: pre-train once on a large corpus of unlabeled text, then fine-tune for specific tasks. It used a transformer architecture, which is highly effective for processing sequential data like language. As a result, GPT-1 could generate coherent sentences, perform language tasks, and understand some level of context.

• Impact: Although limited in scope, GPT-1 demonstrated that training on vast amounts of general language data could be used for downstream language tasks, laying the groundwork for more complex models.

2. GPT-2:

• Released: 2019

• Innovation: GPT-2 was significantly larger, with 1.5 billion parameters, allowing it to generate much more sophisticated and nuanced text. GPT-2 could perform tasks without needing task-specific fine-tuning, displaying impressive “zero-shot” and “few-shot” learning capabilities.

• Impact: GPT-2 marked a leap in natural language generation. Its ability to create surprisingly coherent long-form content gained public attention, showcasing that generative AI could be used for realistic text-based applications. Concerns about misinformation and misuse also arose due to its power, highlighting ethical challenges.

3. GPT-3:

• Released: 2020

• Innovation: With 175 billion parameters, GPT-3 was one of the largest language models at the time of its release. It could produce coherent text across many domains, understand complex instructions, and even perform tasks like translation, question answering, and code generation.

• Impact: GPT-3’s scale and versatility opened up new applications, from customer support bots to content creation tools and educational assistants. It could handle various queries, create engaging dialogue, and adapt to different tones or styles. GPT-3 truly popularized generative AI in mainstream applications and influenced industries ranging from marketing to healthcare.

4. GPT-4:

• Released: 2023

• Innovation: GPT-4 introduced a multimodal capability, meaning it could process both text and image inputs. It improved upon GPT-3’s reasoning and language understanding and could better follow nuanced instructions, with refined accuracy, creativity, and context awareness.

• Impact: GPT-4’s multimodal abilities allowed it to assist with visual tasks (like analyzing images) and made it even more adaptable across industries. For instance, GPT-4 has been used in legal document analysis, medical image interpretation, and as a foundation for accessible AI-powered applications in creative and design fields. Its release underscored the potential for future multimodal models to tackle increasingly complex tasks.

How the Evolution of GPT Models Impacts Generative AI

The evolution of GPT models has significantly impacted generative AI, driving the field forward and introducing new possibilities:

1. Improved Text Generation Quality:

• Each GPT generation refined language generation, making outputs more natural, contextually accurate, and coherent. This led to applications in content creation, storytelling, customer service, and even news writing. Today’s models can generate detailed articles, marketing copy, or technical documentation, helping businesses save time and resources.

2. Better Understanding of Nuance and Context:

• Advanced versions like GPT-3 and GPT-4 can understand subtle instructions and adjust responses based on style or tone. This level of understanding has opened up new uses in personalized education, interactive gaming, and tailored therapy tools, where nuanced responses are essential.

3. Multimodal Capabilities:

• GPT-4’s ability to process both text and images marked a turning point, as it can now interpret visuals and generate descriptive text. This makes it ideal for image analysis in healthcare, educational tools, and even for creative brainstorming where images and descriptions are used in tandem.

4. Few-Shot and Zero-Shot Learning:

• GPT-2 and later models introduced few-shot and zero-shot learning, where the model could handle tasks it wasn’t specifically trained on, given only a few examples (few-shot) or none at all (zero-shot). This versatility makes generative AI adaptable across fields without needing massive datasets or task-specific training.

5. Catalyst for Innovation Across Industries:

• GPT models have become the backbone for applications in marketing, finance, customer service, healthcare, education, and more. The generative power of these models allows companies to build interactive AI experiences, automate workflows, and enhance customer experiences through personalized, context-aware interactions.

6. Ethical and Societal Considerations:

• The powerful abilities of GPT models to generate realistic and persuasive text raised concerns about misinformation, bias, and data privacy. Their evolution has driven essential conversations about responsible AI use, transparency, and fairness, leading to efforts toward AI governance and ethical frameworks.
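Zero-shot and few-shot use (item 4 above) differ only in what goes into the prompt; the model's weights are not updated. The sketch below builds both kinds of prompt for a hypothetical sentiment task. The `Input:/Output:` layout and the `build_prompt` helper are illustrative assumptions, and no model is called.

```python
def build_prompt(instruction, query, examples=()):
    """Build a zero-shot prompt (no examples) or a few-shot prompt (with examples).

    The "Input:/Output:" layout is just one illustrative convention.
    """
    parts = [instruction]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}")
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


instruction = "Classify the sentiment of the review as positive or negative."
query = "The battery died after a day."

zero_shot = build_prompt(instruction, query)
few_shot = build_prompt(
    instruction,
    query,
    examples=[("Great screen, fast delivery.", "positive"),
              ("Stopped working within a week.", "negative")],
)
print(few_shot)
```

The few-shot version simply shows the model the pattern it should continue, which is why no task-specific training data or fine-tuning is required.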

Applications of Generative AI

Generative AI has advanced from theory to practice, with applications impacting numerous industries. Here are some significant areas where generative AI is changing the game:

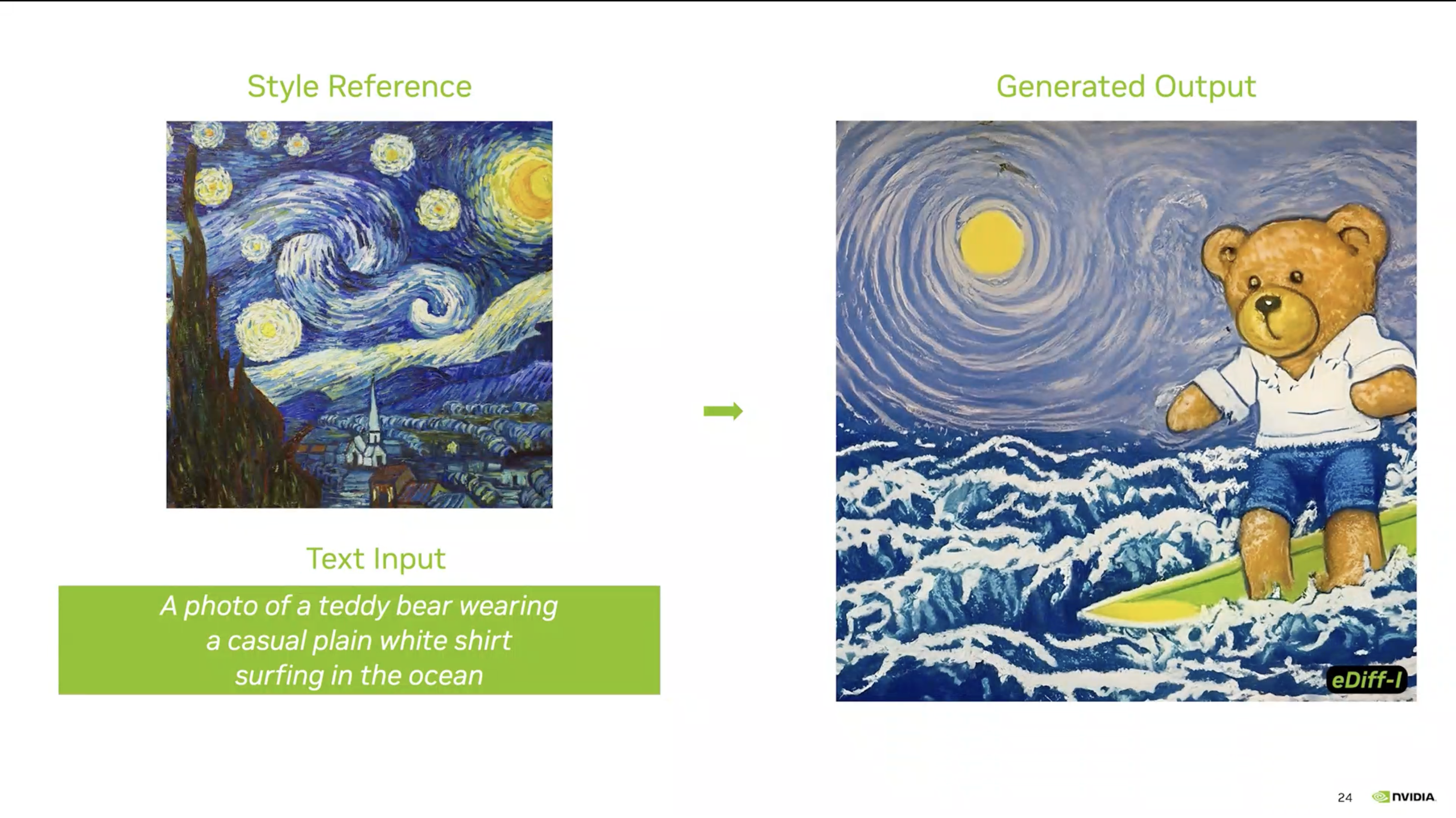

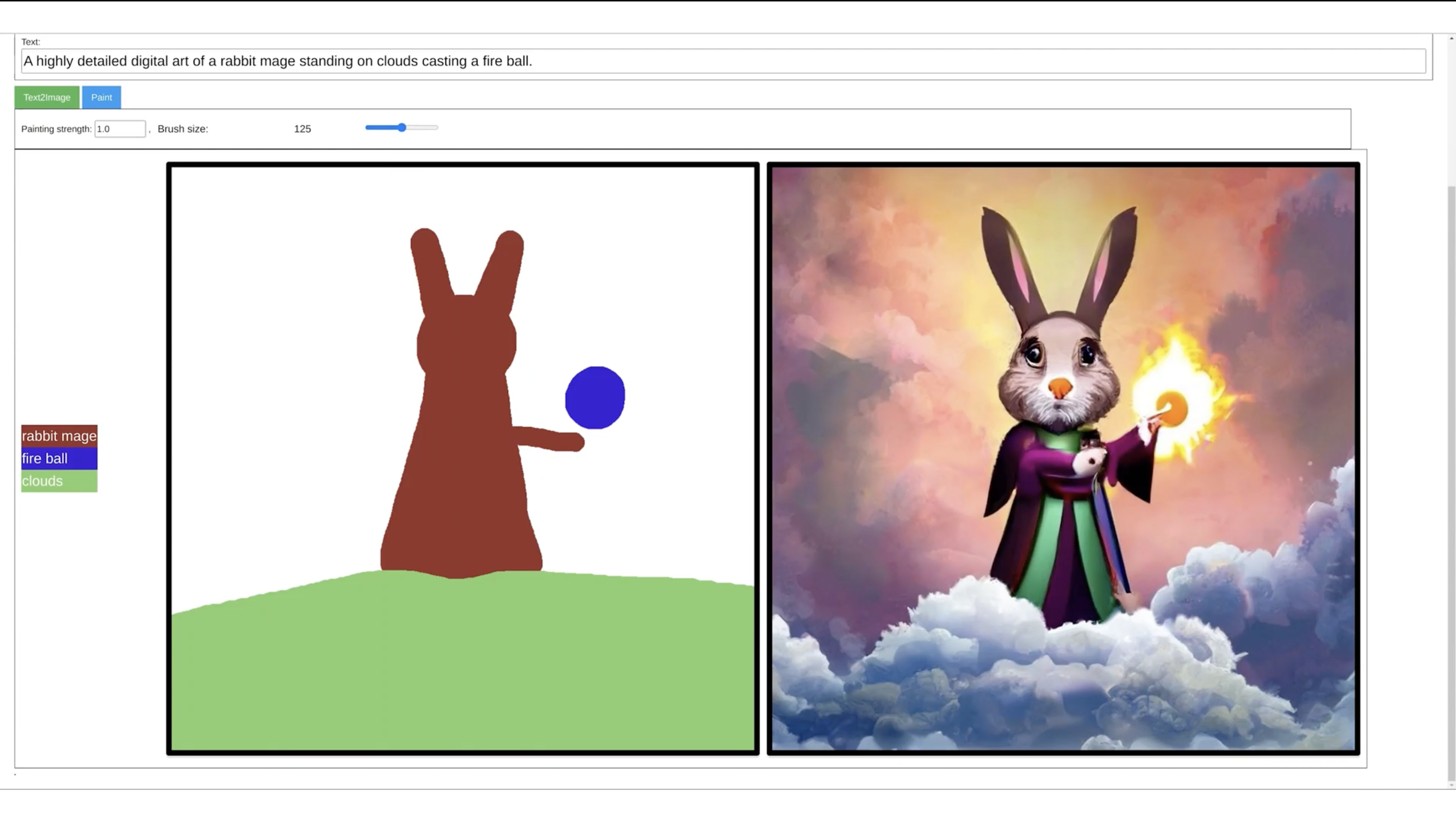

1. Content Creation in Media and Art

Tools like DALL-E and Midjourney can now generate intricate digital artwork based on text prompts. Artists and designers are using these tools for brainstorming and creating concept art, saving hours in manual work. Studies show that over 60% of creative professionals in certain fields, such as gaming and advertising, are exploring AI tools to enhance or expedite their work.

2. Personalized Content and Copywriting

With tools like ChatGPT, Jasper, and Copy.ai, marketers and content creators can produce tailored marketing content, blog posts, and even social media updates. This ability to create personalized content at scale has led 80% of businesses to explore AI-driven content solutions for improved customer engagement.

3. Voice and Sound Generation

Generative AI has also made significant strides in audio. With technologies like Adobe’s VoCo (a research prototype) and DeepMind’s WaveNet (used in Google’s text-to-speech), AI can create realistic voice recordings from text, which has wide applications in customer support, audiobooks, and even the gaming industry.

4. Virtual World and 3D Model Generation

In fields like gaming and virtual reality, generative AI is used to create realistic 3D models and landscapes, offering an immersive experience. Companies are leveraging this to create dynamic environments with less human input, contributing to a projected $42 billion virtual reality market by 2027.

5. Medical Research and Drug Discovery

Generative AI is also helping researchers create molecular structures for new drugs, potentially reducing the time it takes to bring new drugs to market. AI tools like DeepMind’s AlphaFold have even predicted protein structures that would have taken researchers years to determine, accelerating breakthroughs in disease treatment.

How Can We Use Generative AI in an Industry-Specific Project?

Developing a generative AI model from scratch is resource intensive. Companies looking to leverage generative AI can either use a model out of the box or fine-tune it to perform specific tasks, for example:

Collect a relatively small sample of demonstration data and use it to fine-tune an existing foundation model for specific tasks (supervised)

Use reinforcement learning with a reward system to improve model outputs (RL)

Enable human feedback such as rating or ranking the model outputs (HF)
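The human-feedback and RL steps above are usually linked by a reward model: human rankings of model outputs are turned into pairwise preferences, and the reward model is trained so that preferred outputs score higher. The sketch below shows only that preference-learning step, using a Bradley-Terry style loss (a common choice) on hypothetical response IDs. In practice the reward model is a neural network that scores text, and a reinforcement learning algorithm (for example PPO) then fine-tunes the language model to maximize that reward; neither is shown here.

```python
import math
from itertools import combinations


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def ranking_to_pairs(ranking):
    """A human ranking (best first) implies a (preferred, rejected) pair for every two items."""
    return list(combinations(ranking, 2))


def pairwise_loss(scores, pairs):
    """Bradley-Terry style loss: mean of -log sigmoid(r_preferred - r_rejected)."""
    return sum(-math.log(sigmoid(scores[w] - scores[l])) for w, l in pairs) / len(pairs)


def fit_reward_scores(pairs, steps=200, lr=0.1):
    """Learn one scalar reward per response by gradient descent on the pairwise loss."""
    scores = {item: 0.0 for pair in pairs for item in pair}
    for _ in range(steps):
        for preferred, rejected in pairs:
            # d(loss)/d(r_preferred) = -(1 - sigmoid(diff)); the rejected item gets the opposite sign.
            g = 1 - sigmoid(scores[preferred] - scores[rejected])
            scores[preferred] += lr * g
            scores[rejected] -= lr * g
    return scores


# Hypothetical: a rater ranked three model outputs for the same prompt.
pairs = ranking_to_pairs(["resp_a", "resp_b", "resp_c"])
scores = fit_reward_scores(pairs)
print(sorted(scores, key=scores.get, reverse=True))  # ['resp_a', 'resp_b', 'resp_c']
```

Working with pairwise comparisons rather than absolute ratings is a design choice: people tend to agree more on "which answer is better" than on an exact score.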

Underlying Mechanisms in Generative AI

Here are some quick notes on the main mechanisms.

Embeddings to represent high-dimensional, complex data

Variational Autoencoders (VAEs) use an encoder-decoder architecture to generate new data, typically for image and video generation

Generative Adversarial Networks (GANs) use a generator and a discriminator that compete with each other to generate new data, often in video generation

Diffusion models add noise to data and learn to remove it, which lets them generate high-quality images with high levels of detail

Transformer models power large language models such as GPT, LaMDA, and LLaMA

Neural Radiance Fields (NeRF) for generating 3D content from 2D images

Retrieval-Augmented Generation (RAG), combined with prompt engineering, grounds a model's answers in retrieved documents that are relevant to the user's instructions
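Embeddings and RAG fit together naturally: documents and the user's question are mapped to vectors, the most similar documents are retrieved, and they are inserted into the prompt. The sketch below uses a bag-of-words count vector as a stand-in for a learned embedding model (an assumption made to keep it dependency-free); the prompt wording and helper names are hypothetical, and no LLM is called.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: a bag-of-words count vector (real RAG uses a learned embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(query, documents, k=1):
    """Augment the user's question with retrieved context before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "NeRF builds 3D scenes from 2D images.",
    "A GAN trains a generator against a discriminator.",
    "Diffusion models learn to remove noise from images.",
]
print(build_rag_prompt("Which model uses a generator and a discriminator?", docs))
```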

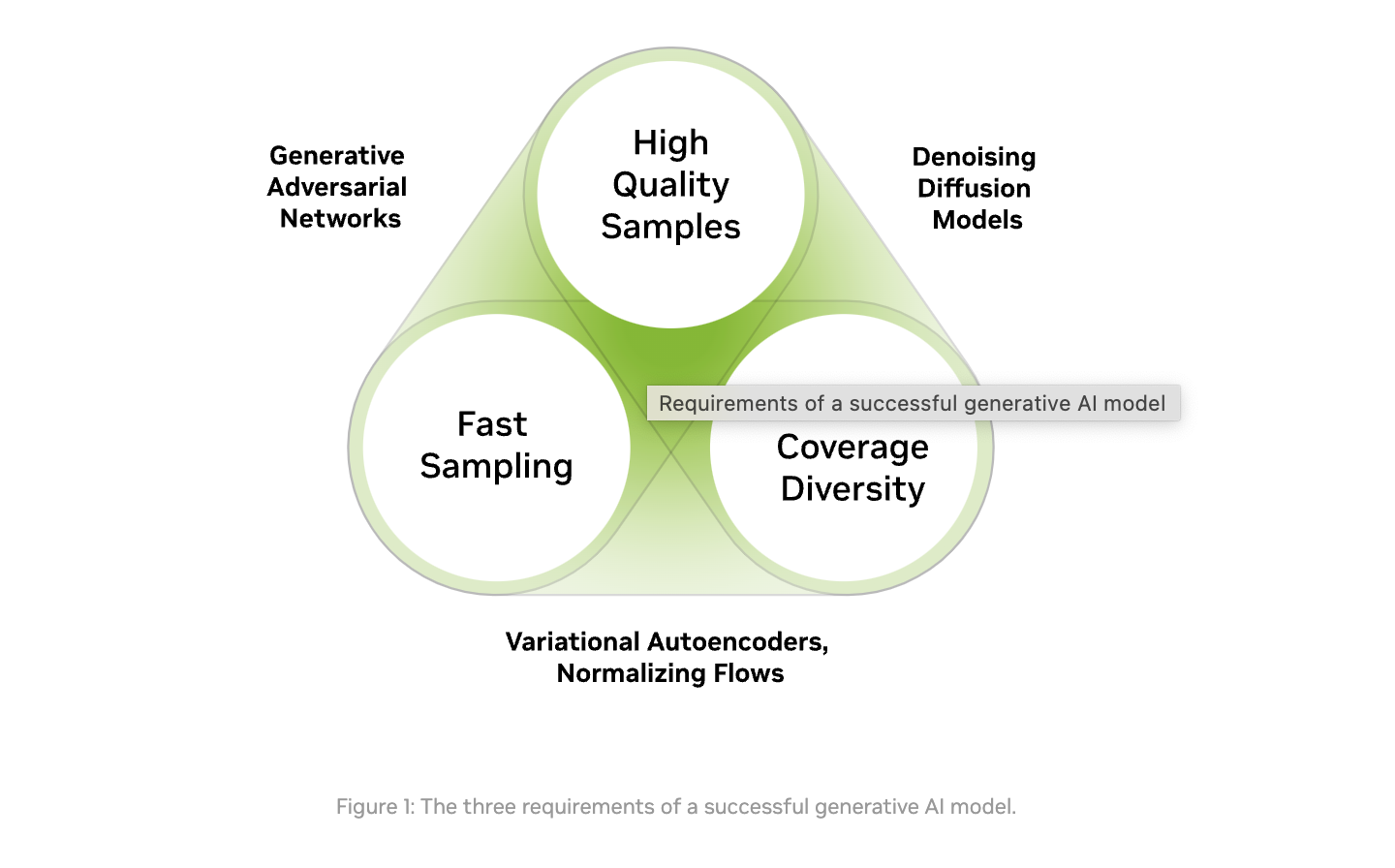
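The "add noise" half of a diffusion model is simple enough to write down. In the DDPM formulation, a clean sample x0 can be noised directly to any step t, and a neural network is then trained to predict the added noise from the noisy sample so the process can be run in reverse. The sketch below implements only this forward noising with a linear noise schedule; the schedule values are one common choice, the three-pixel "image" is made up, and the learned denoiser is omitted.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variance added at each of T steps, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: how much signal survives to step t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1 - a) * e for x, e in zip(x0, noise)]
    return xt, noise


alpha_bars = cumulative_alphas(linear_beta_schedule())
rng = random.Random(0)
x0 = [0.5, -0.2, 0.9]  # a tiny made-up "image" of three pixel values
for t in (0, 500, 999):
    xt, _ = add_noise(x0, t, alpha_bars, rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in xt])
```

By the last step almost no signal survives, so generation can start from pure noise and let the trained network remove it step by step.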

How to Evaluate Generative AI Models

Quality:

Especially for applications that interact directly with users, having high-quality generation outputs is key.

For example, in image generation, the desired outputs should be visually indistinguishable from natural images.

Diversity:

A good generative model captures the minority modes in its data distribution without sacrificing generation quality.

This helps reduce undesired biases in the learned models.

Quantity:

- A generative model should be usable as widely as possible, since generating content is cheap and easy

Speed:

- Many interactive applications require fast generation, such as real-time image editing to allow use in content creation workflows
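Diversity and speed, two of the criteria above, can be measured with very simple proxies. The sketch below uses distinct-n, a commonly used text-diversity score (the share of unique n-grams across a set of outputs), and the average wall-clock time per sample; the `generate` callable is a hypothetical stand-in for a real model. Quality usually needs human evaluation or task-specific metrics (for images, for example, FID) and is not covered here.

```python
import time


def distinct_n(outputs, n=2):
    """Fraction of n-grams across all outputs that are unique (higher = more diverse)."""
    ngrams = []
    for text in outputs:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def seconds_per_sample(generate, n_samples=20):
    """Average wall-clock time for one call to a generation function."""
    start = time.perf_counter()
    for _ in range(n_samples):
        generate()
    return (time.perf_counter() - start) / n_samples


repetitive = ["the cat sat", "the cat sat", "the cat sat"]
varied = ["the cat sat", "a dog ran", "birds fly south"]
print(distinct_n(repetitive), distinct_n(varied))  # 0.3333333333333333 1.0
print(seconds_per_sample(lambda: "placeholder output"))
```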

How to Improve Generative AI

Carefully consider how tools are deployed and build safeguards so that the models are more controllable

Align models with human preferences so that they are safer to use

Optimize training speed and cost through improvements in both hardware and software

Enable accessibility and flexibility for developers

Reduce time to generate outputs

Challenges of AI

Training

Biased training data

- may result in illogical or erroneous generated output.

Intellectual property ownership and attribution of AI-generated content

- Does the output affect the owners of the training data?

Training data is complicated and expensive to collect

Confidentiality of Private Data

Since LLMs need to be trained in data centers, the data has to be transferred to those cloud servers.

Build private LLMs

Construct industry-specific norms

Generated Output

Hallucination: false or fabricated output, which can result from biased training data.

Harmful applications and outputs.

Realistic-sounding content can make it difficult to identify inaccuracy

Resource-intensive: training models requires large compute infrastructure with high power consumption, and applications demand fast output generation (high sampling speed).

Leveraging Generative AI technology to solve problems requires expertise
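A back-of-the-envelope calculation shows why training is so resource intensive. Using the GPT-2 and GPT-3 parameter counts quoted earlier, it estimates memory from bytes per parameter: 2 bytes for 16-bit weights, and roughly 16 bytes per parameter for mixed-precision training with the Adam optimizer (weights, gradients and optimizer state; a commonly cited rule of thumb that ignores activations). The 80 GB per-GPU figure is an assumption for illustration.

```python
import math


def memory_gb(num_params, bytes_per_param):
    """Memory in gigabytes (1 GB = 1e9 bytes) needed to hold num_params values."""
    return num_params * bytes_per_param / 1e9


GPU_MEMORY_GB = 80  # assumption: a single 80 GB accelerator

for name, params in [("GPT-2", 1.5e9), ("GPT-3", 175e9)]:
    weights = memory_gb(params, 2)    # FP16/BF16 weights: 2 bytes per parameter
    training = memory_gb(params, 16)  # rough mixed-precision Adam rule of thumb, excluding activations
    print(f"{name}: weights ~{weights:,.0f} GB, training state ~{training:,.0f} GB, "
          f"at least {math.ceil(training / GPU_MEMORY_GB)} GPU(s) just for training state")
```

Even before counting activations or data, GPT-3-scale training state has to be split across dozens of GPUs, which is why scale-up and scale-out hardware matter.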

The Future of Generative AI: What’s Next?

Generative AI continues to evolve, with future advancements likely to enhance personalization, ethical content generation, and even human-like intelligence in creative fields. Experts estimate that AI-driven content could make up 10% of all generated content by 2030 across various media.

GPU computing that enables parallel processing of large datasets (scale up)

Network capabilities that enable distributed systems to work in parallel (scale out)

Software that enables developers to leverage powerful hardware

Cloud services that enable access to scarce and expensive hardware

Innovation in neural network architectures

Full Stack Optimization from networking to compute to frameworks & libraries.

- Nvidia invests heavily in frameworks such as JAX, PyTorch, and TensorFlow.

Final Thoughts

The size of the content matters less than the value of the idea.

We need to concentrate on a high level of abstraction rather than on quantity.

Think about it: there are a lot of models available. How do we make a model useful?
