Integrated AWS Workflows with SageMaker Notebooks

Sreekesh Iyer

I recently took part in a hackathon and went looking for a good cloud-hosted Jupyter environment for my ML project.

Soon enough, I landed on SageMaker Notebooks. However, it took me a while to figure out how powerful they truly can be.

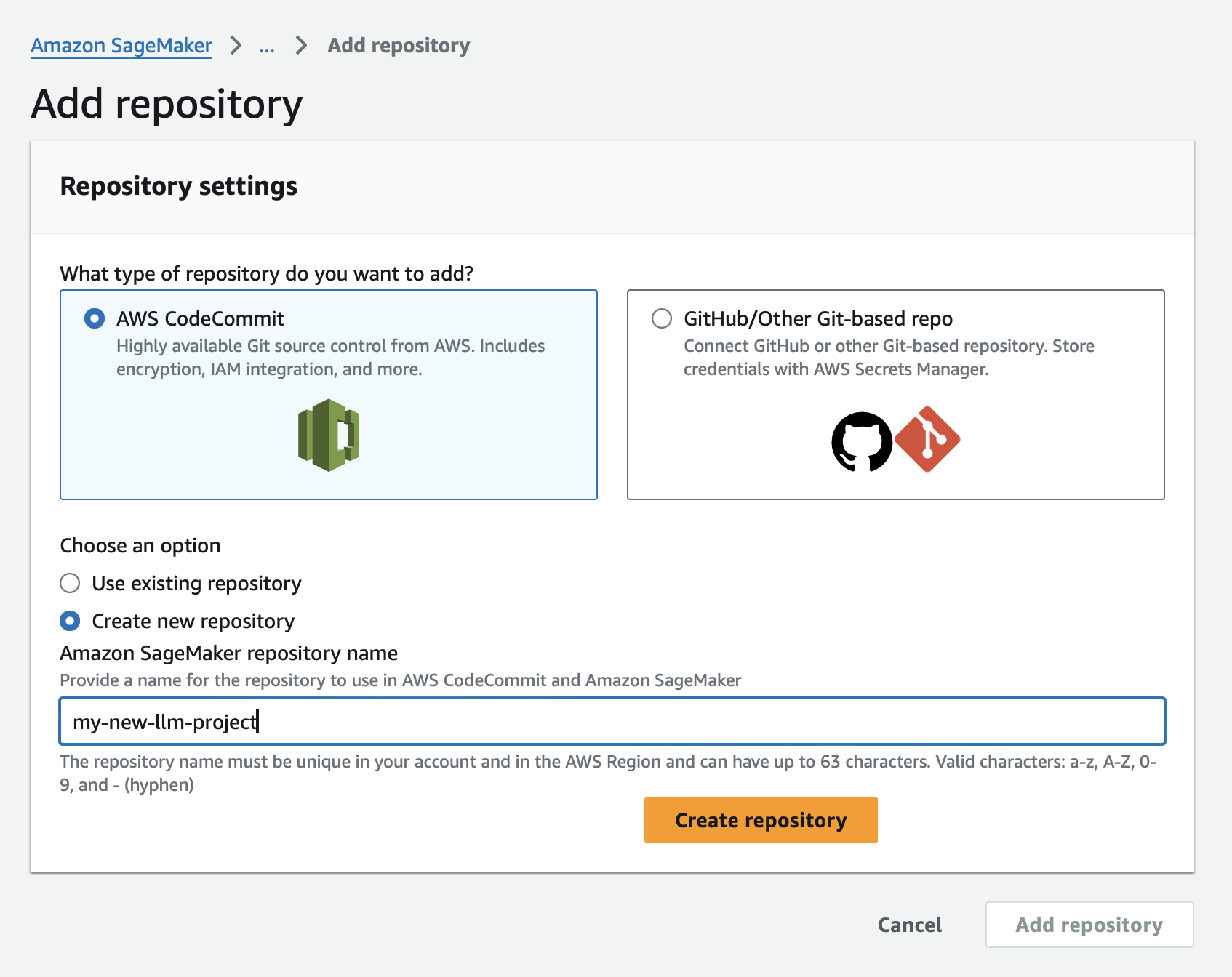

For starters, I was able to hook my notebook up to a Git repository within seconds, so I did not have to worry about versioning or sharing my work with teammates.

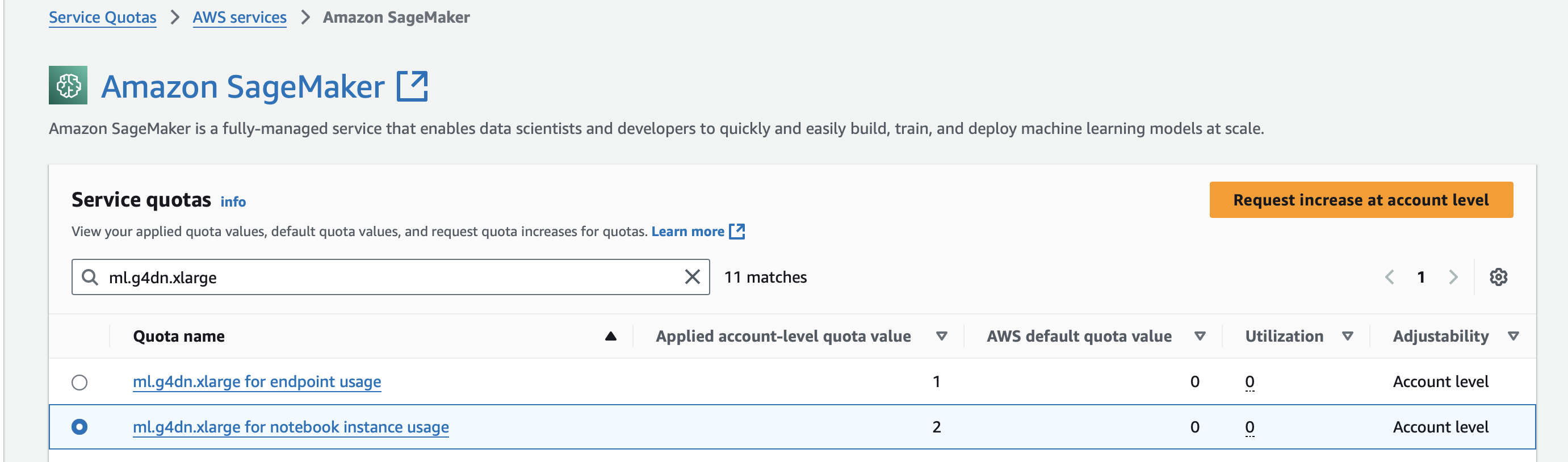

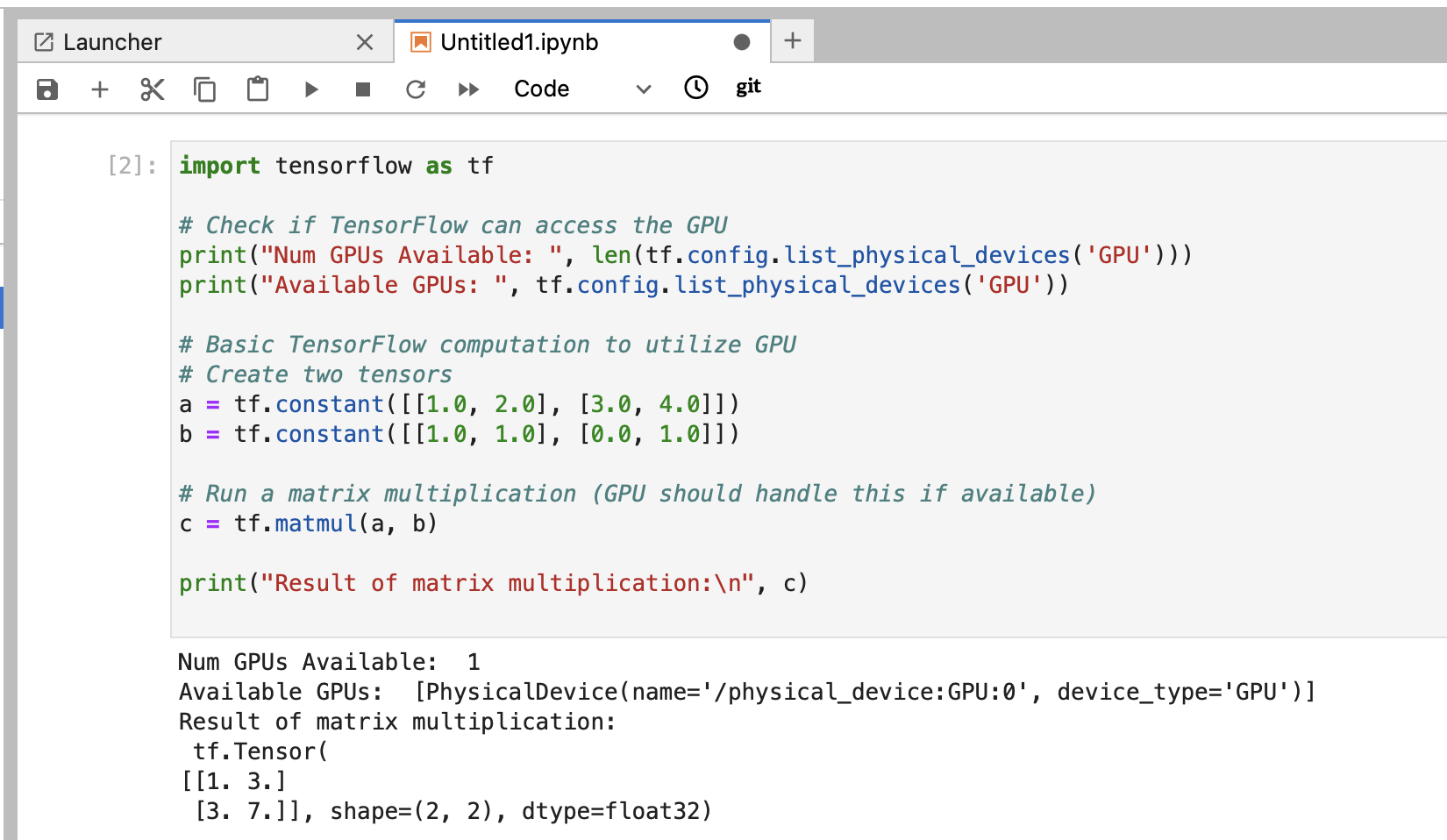

Direct access to GPU instances

You’ll need an additional approval to use the ml.g-series instances in SageMaker Notebooks, but it took no longer than a minute for me. And just like that, I had access to a Jupyter notebook with TensorFlow and a GPU.
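A quick way to confirm that the kernel actually sees the GPU is to ask TensorFlow for its visible devices. The sketch below assumes a TensorFlow 2.x kernel; `gpu_summary` is a hypothetical helper name of my own, and the import is done lazily so the cell still runs on a kernel without TensorFlow.

```python
def gpu_summary() -> str:
    """Describe the GPUs TensorFlow can see (hypothetical helper, not a SageMaker API)."""
    try:
        import tensorflow as tf  # assumption: a TensorFlow 2.x kernel is selected
    except ImportError:
        return "TensorFlow is not installed in this kernel"

    # Ask TensorFlow which physical GPU devices are visible to this process.
    gpus = tf.config.list_physical_devices("GPU")
    if not gpus:
        return "TensorFlow is installed, but no GPU is visible"

    names = ", ".join(gpu.name for gpu in gpus)
    return f"{len(gpus)} GPU(s) visible: {names}"


print(gpu_summary())
```

If this reports that no GPU is visible on an ml.g-series instance, the selected kernel is the first thing worth checking.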

Integrations with other AWS Services

This is not so much a SageMaker feature as it is an AWS IAM feature: the SageMaker Notebook runs with an IAM Role, so your Python shell can reach any AWS service that the role is permitted to use via client libraries like boto3.
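To see which role the notebook is actually running as, one option is to ask STS for the caller identity and read the role name out of the returned ARN. This is a minimal sketch, assuming boto3 is available in the kernel and the notebook's role credentials are picked up automatically; the helper names `role_name_from_arn`, `current_role_name`, and `notebook_role_name` are hypothetical.

```python
def role_name_from_arn(arn: str) -> str:
    """Extract the role name from an IAM role ARN or an STS assumed-role ARN."""
    # Expected shapes:
    #   arn:aws:sts::123456789012:assumed-role/MyRole/SessionName
    #   arn:aws:iam::123456789012:role/optional/path/MyRole
    resource = arn.split(":", 5)[5]
    parts = resource.split("/")
    if parts[0] == "assumed-role":
        return parts[1]
    if parts[0] == "role":
        return parts[-1]
    raise ValueError(f"not a role ARN: {arn}")


def current_role_name(sts_client) -> str:
    """Return the role name behind whatever credentials the STS client uses."""
    identity = sts_client.get_caller_identity()
    return role_name_from_arn(identity["Arn"])


def notebook_role_name() -> str:
    """Convenience wrapper for a notebook cell; imports boto3 lazily."""
    import boto3  # assumption: boto3 is installed in the notebook kernel

    return current_role_name(boto3.client("sts"))
```

Calling `notebook_role_name()` in a cell tells you which role to look up in the IAM console when you want to review or extend its permissions.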

You can control EC2 instances, invoke Lambda functions, send emails via SES, perform operations on S3 objects and so many other things programmatically through the same Jupyter notebook.
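As a rough illustration of two of these, here is a sketch that lists S3 object keys and invokes a Lambda function from a notebook cell. It assumes the notebook's role has the relevant S3 and Lambda permissions; the helper names, the bucket `my-hackathon-bucket`, and the function `my-function` are placeholders I made up, not anything from my project.

```python
import json


def list_keys(s3_client, bucket: str, prefix: str = "") -> list:
    """Return object keys under a prefix (first page only, up to 1,000 keys)."""
    response = s3_client.list_objects_v2(Bucket=bucket, Prefix=prefix)
    # "Contents" is absent from the response when nothing matches the prefix.
    return [obj["Key"] for obj in response.get("Contents", [])]


def invoke_json(lambda_client, function_name: str, payload: dict):
    """Invoke a Lambda function synchronously and decode its JSON response."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # The response payload is a stream; read it fully before decoding.
    return json.loads(response["Payload"].read())


# Usage in a notebook cell (placeholder names, adjust to your account):
#   import boto3
#   keys = list_keys(boto3.client("s3"), "my-hackathon-bucket", prefix="data/")
#   result = invoke_json(boto3.client("lambda"), "my-function", {"ping": 1})
```

Passing the client in as an argument keeps the helpers easy to try with a stub before pointing them at real resources.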

—

So far, it’s been fun watching folks work on SageMaker Notebooks, trying out different ways to automate workflows on AWS using raw Python. Looks like I’ve found my new provider for easy-access cloud notebooks.

Written by

Sreekesh Iyer

A few interesting things about me: I'm a Software Engineer @ JP Morgan Chase & Co. and an AWS Community Builder (2023). I love working with technology, and I enjoy playing video games 😄