The Ultimate Guide to Data Transformation Tools

Lucy Zhang

Originally published at The Ultimate Guide to Data Transformation Tools - NocoBase.

In the era of big data, businesses and organizations face the challenge of handling vast amounts of data. As applications become more complex and user needs evolve, development teams need to efficiently process large volumes of data to make quick decisions. However, how can they identify and effectively utilize the data that is critical for decision-making amid all this information?

Data transformation tools help development teams extract value from complex information by optimizing data structures and ensuring that data is used effectively.

In this guide, we cover the fundamental concepts, key steps, and importance of data transformation, and explain how to choose tools that fit your needs. We hope it helps your team use data transformation tools effectively, get more value from its data, and succeed in becoming data-driven.

What is Data Transformation?

Data transformation is the process of converting raw data into a clean, consistent, and usable form. In the broad sense used in this guide, it encompasses data extraction, cleaning, integration, format conversion, and loading, the workflow commonly called ETL (extract, transform, load). This process typically occurs across various stages of data storage, analysis, and visualization. The goal of data transformation is to enhance the quality and usability of data, making it suitable for different analytical needs and application scenarios.

Key Steps in Data Transformation

Data Extraction: Extracting data from various sources (e.g., databases, APIs, file systems).

Data Cleaning: Removing duplicate data, filling in missing values, correcting data formats, and handling outliers.

Data Integration: Merging data from different sources for unified analysis.

Data Transformation: Converting data into the required format, such as turning CSV into JSON or relational tables into a NoSQL document format.

Data Loading: Loading the transformed data into target systems or data warehouses for subsequent use.
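The five steps above can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a production pipeline: the sample CSV, the `CUSTOMERS` lookup, the function names, and the in-memory SQLite target are all assumptions made for the example, and outlier handling is left out.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample inputs standing in for real sources
# (for example a CSV export and an API response).
ORDERS_CSV = """order_id,customer_id,amount
1,c1,19.99
1,c1,19.99
2,c2,
3,c1, 5.00
"""
CUSTOMERS = {"c1": "Ada", "c2": "Grace"}


def extract(csv_text):
    """Extraction: read rows from a CSV source into dictionaries."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def clean(rows):
    """Cleaning: drop duplicate orders, fill missing amounts with 0.0, fix types."""
    seen, cleaned = set(), []
    for row in rows:
        if row["order_id"] in seen:
            continue  # duplicate order_id: keep only the first occurrence
        seen.add(row["order_id"])
        amount = row["amount"].strip()
        cleaned.append({
            "order_id": int(row["order_id"]),
            "customer_id": row["customer_id"],
            "amount": float(amount) if amount else 0.0,
        })
    return cleaned


def integrate(rows, customers):
    """Integration: enrich each order with the customer name from a second source."""
    return [{**row, "customer": customers.get(row["customer_id"], "unknown")}
            for row in rows]


def transform(rows):
    """Transformation: convert each record from tabular form to a JSON document."""
    return [json.dumps(row, sort_keys=True) for row in rows]


def load(documents, connection):
    """Loading: store the JSON documents in a target table."""
    connection.execute("CREATE TABLE IF NOT EXISTS orders (doc TEXT)")
    connection.executemany("INSERT INTO orders (doc) VALUES (?)",
                           [(doc,) for doc in documents])
    connection.commit()


def run_pipeline(connection):
    """Run all five steps and return the integrated rows for inspection."""
    rows = integrate(clean(extract(ORDERS_CSV)), CUSTOMERS)
    load(transform(rows), connection)
    return rows
```

Calling `run_pipeline(sqlite3.connect(":memory:"))` runs the whole chain against a throwaway database. Keeping each step as a separate function makes it easy to swap one of them, such as the loader, without touching the others.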

Importance of Data Transformation

Data transformation is critical for businesses for several reasons:

Improved Data Quality: Cleaning and integrating data enhances its accuracy and consistency.

Enhanced Data Accessibility: Formatting data for analysis makes it easier to access and use.

Support for Business Decisions: High-quality data supports deeper analysis, providing a solid basis for decision-making.

Compliance with Regulations: Data transformation helps ensure that data meets industry regulations and standards.

Criteria for Choosing Data Transformation Tools

When selecting data transformation tools, developers and teams should consider several key factors, particularly whether a tool is open source and can be self-hosted:

Open Source: Open source tools can be modified and optimized to meet specific needs, adapting to unique business processes. An active open source community supports continuous improvement and problem-solving.

Self-Hosted: Self-hosting allows users to run tools on their own servers, enhancing data security and privacy while improving control and flexibility to align with IT infrastructure and security policies.

Functionality: Whether the tool supports multiple data sources and formats to meet specific data transformation needs.

Performance: Efficiency and stability when handling large-scale data.

Usability: How user-friendly the interface is, and whether the learning curve suits team members' technical backgrounds.

Community and Support: Availability of an active community and good technical support for assistance and resources.

Cost: Whether the tool's costs fit the budget, including potential maintenance and expansion expenses.

Recommended Data Transformation Tools

NocoBase

Overview

GitHub: https://github.com/nocobase/nocobase

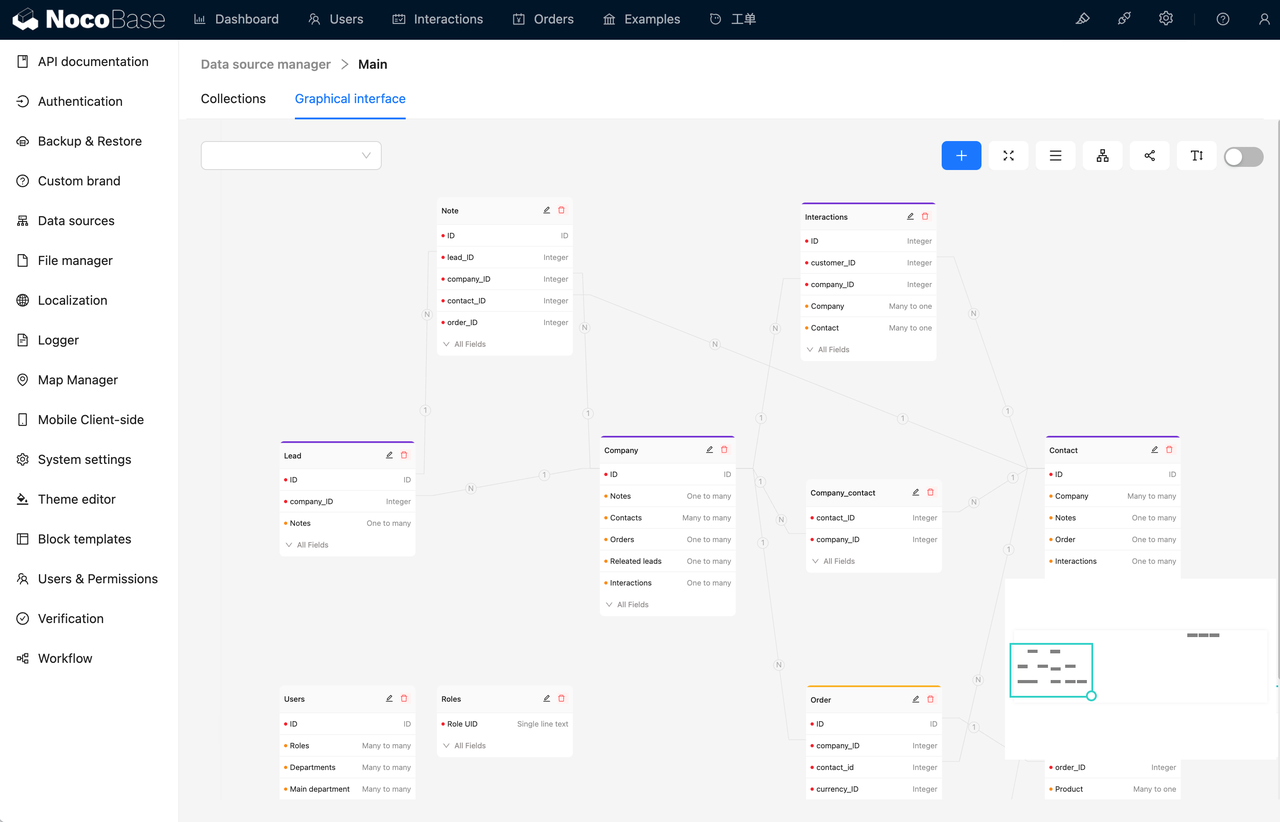

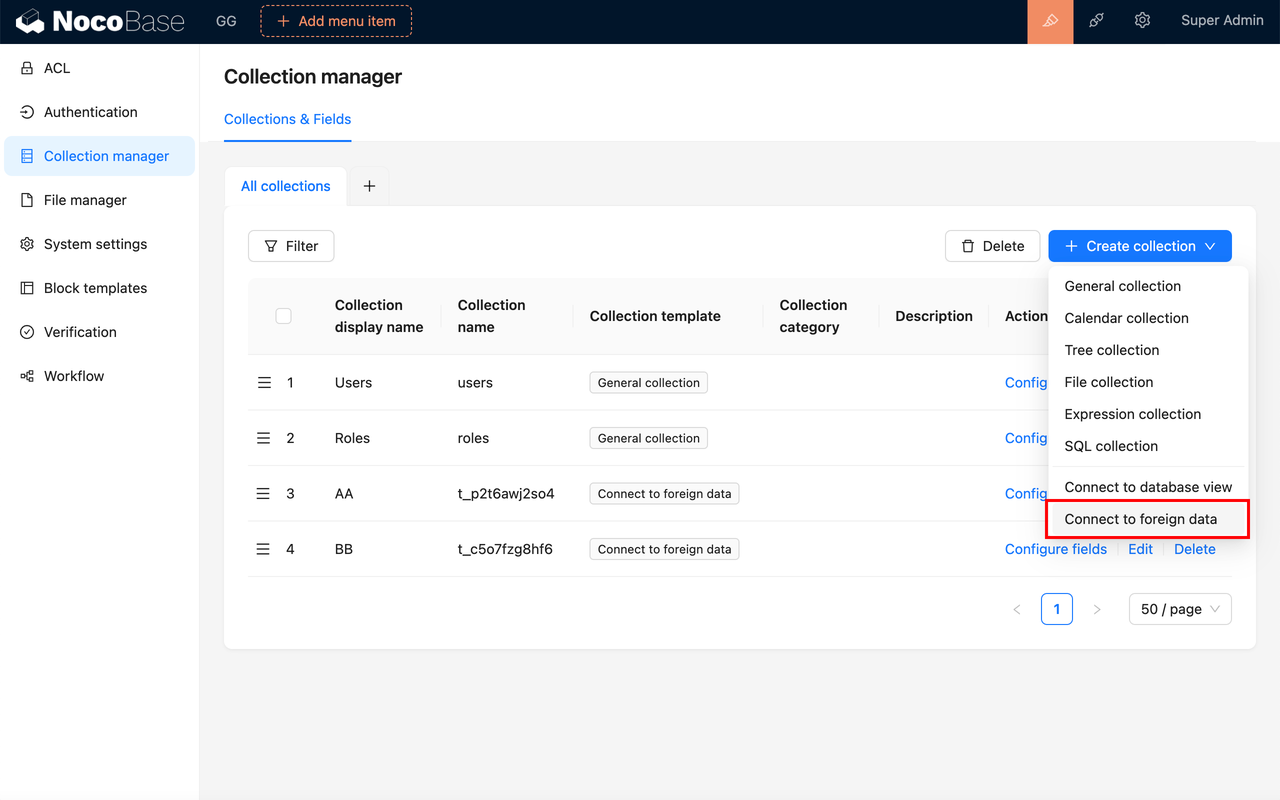

NocoBase is an open-source no-code/low-code development platform that efficiently integrates data from various sources and automates data cleaning, significantly reducing data governance costs. It helps users quickly build customized solutions and improve work efficiency.

💡 Dig deeper: UUL Saves 70% on Logistics System Upgrade with NocoBase

Features

WYSIWYG interface: Users can easily create data transformation workflows through a visual interface and simple logic.

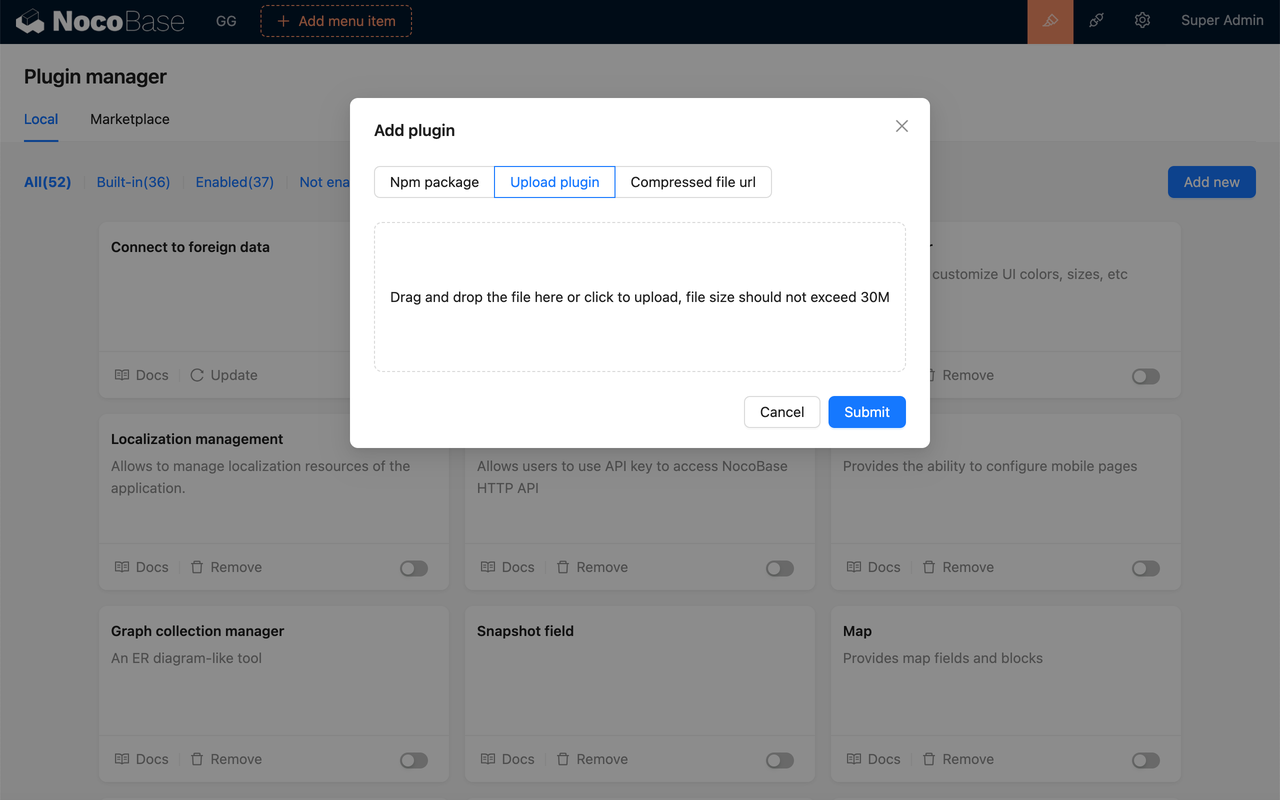

Plugin architecture: Users can customize and extend features according to their needs through plugins.

Support for multiple data sources: Works with a variety of data sources, including databases and APIs.

Pros and Cons

Pros: User-friendly, suitable for those without a deep technical background.

Cons: May not have as rich a feature set as more complex tools.

Price: Offers a free community version and a more professional commercial version.

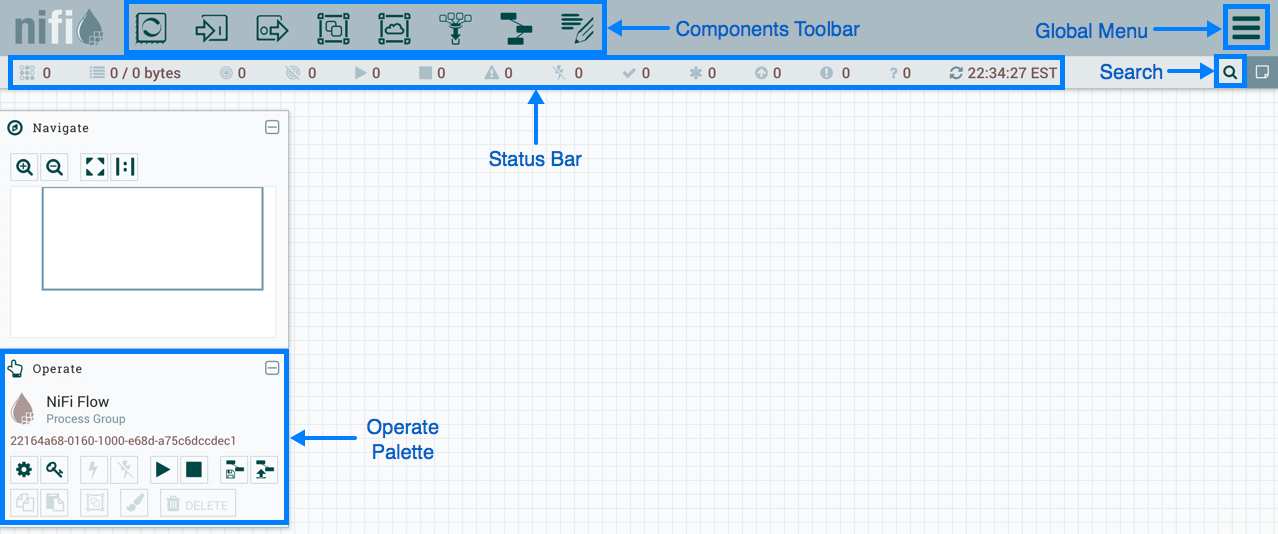

NiFi

Overview

GitHub: https://github.com/apache/nifi

NiFi is a powerful data flow management tool that automates data movement and transformation. It is known for a visual interface that lets users design data flows easily.

Features

Graphical user interface: Build data processing workflows through drag-and-drop without writing complex code.

Secure data handling: Offers multiple security mechanisms, including user authentication, authorization, and data encryption.

Rich connectors: Supports various data sources and targets, including databases, files, and APIs.

Pros and Cons

Pros: Highly flexible, suitable for various data processing needs.

Cons: The learning curve can be steep in complex scenarios.

Price: Open source and free to use.

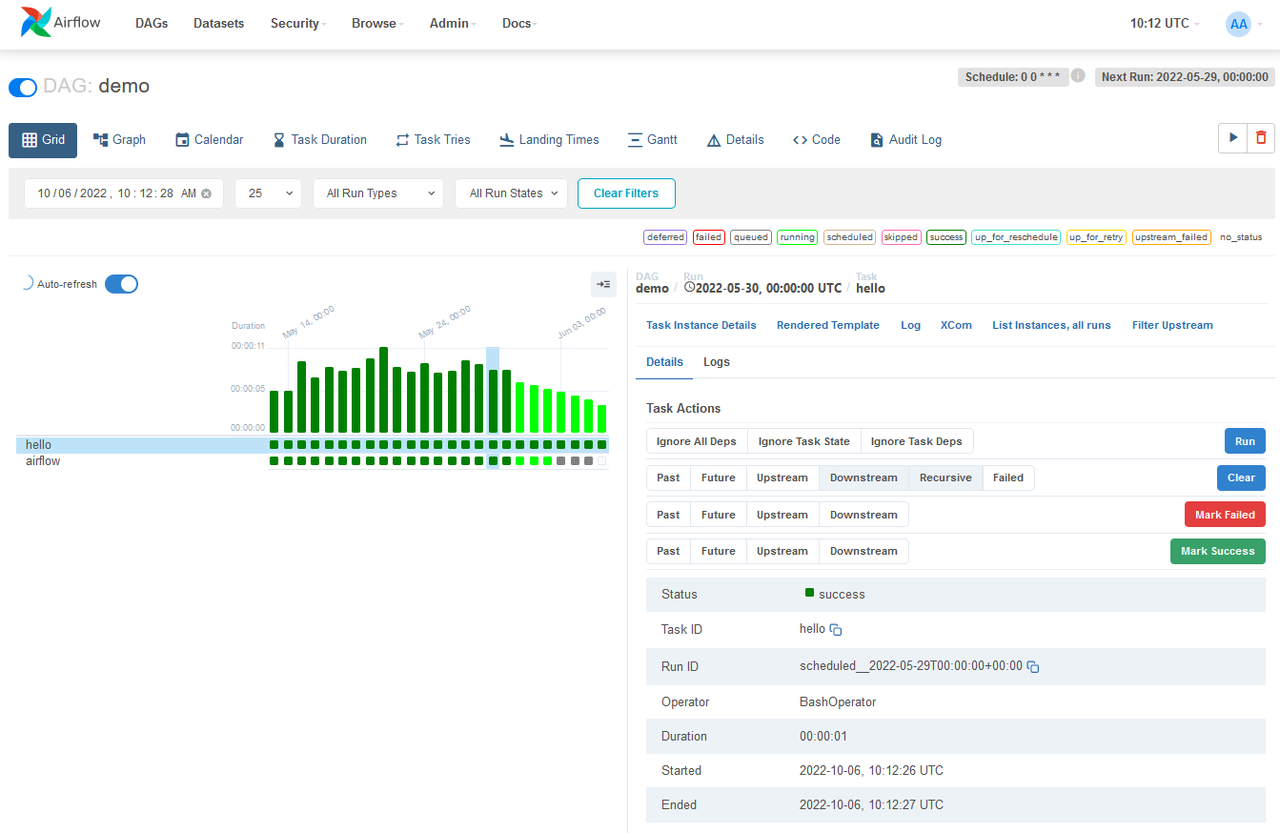

Airflow

Overview

GitHub: https://github.com/apache/airflow

Airflow is an open-source workflow management platform primarily used for orchestrating complex data processing and transformation tasks.

Features

Flexible scheduling: Parameterized workflows are built using the Jinja templating engine, accommodating various complex scheduling needs.

Extensibility: Operators can be easily defined, and all components are extensible for seamless integration into different systems.

Python scripting: Workflows can be created using standard Python functions, including date-time formatting and loops for dynamic task generation.
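Airflow describes a workflow as a directed acyclic graph (DAG) of tasks. The sketch below does not use Airflow's API. It only illustrates, with the standard library, the two ideas mentioned above: generating tasks in a loop and stamping them with a formatted run date, then ordering them so dependencies run first. The function names, task names, and table names are hypothetical.

```python
from datetime import date
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def build_workflow(run_date, tables):
    """Return (tasks, dependencies) for one run; all task names are hypothetical."""
    stamp = run_date.strftime("%Y-%m-%d")  # date-time formatting
    tasks = {"report": f"build report for {stamp}"}
    dependencies = {"report": set()}
    for table in tables:  # loop-driven dynamic task generation
        extract, transform = f"extract_{table}", f"transform_{table}"
        tasks[extract] = f"extract {table} for {stamp}"
        tasks[transform] = f"transform {table} for {stamp}"
        dependencies[extract] = set()          # no prerequisites
        dependencies[transform] = {extract}    # transform runs after extract
        dependencies["report"].add(transform)  # report waits for every transform
    return tasks, dependencies


def execution_order(dependencies):
    """Order tasks so that each one comes after everything it depends on."""
    return list(TopologicalSorter(dependencies).static_order())
```

For example, `build_workflow(date(2024, 1, 31), ["orders", "users"])` produces five tasks, and `execution_order` places each `extract_*` task before its `transform_*` task and `report` last. A real scheduler adds retries, monitoring, and time-based triggering on top of this ordering.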

Pros and Cons

Pros: Powerful scheduling and monitoring capabilities.

Cons: Requires some development experience to configure and use.

Price: Open source and free to use.

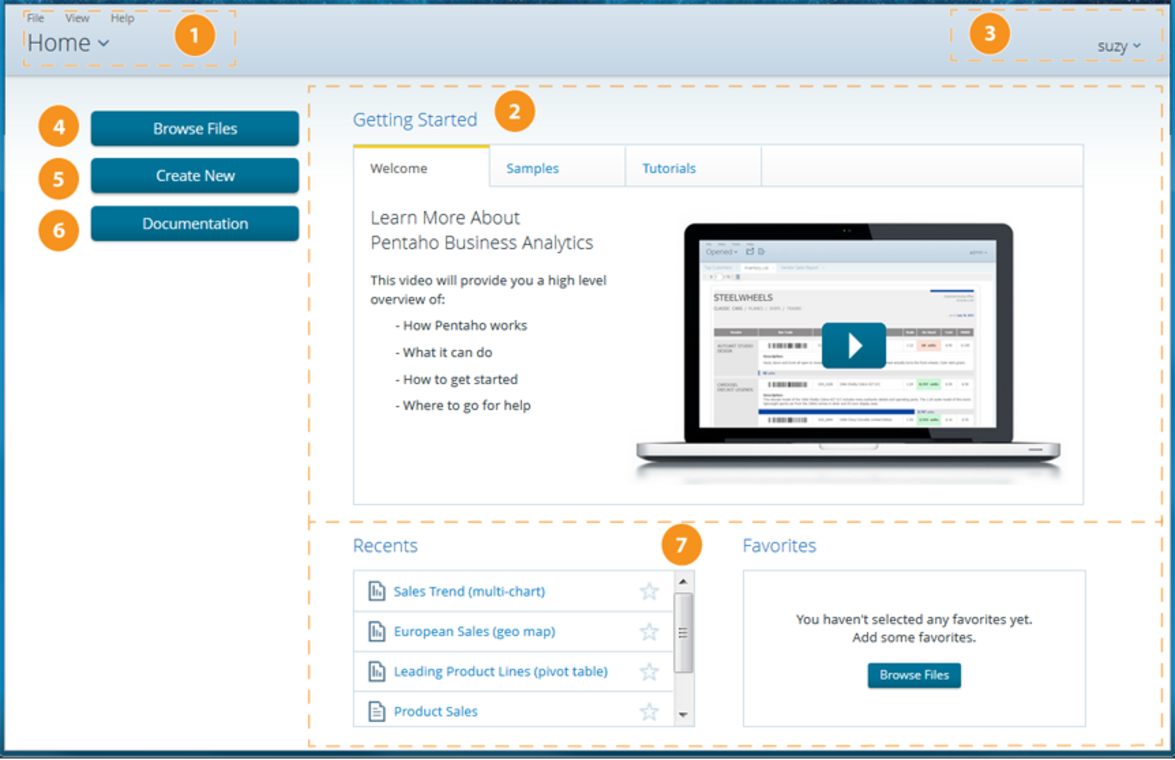

Pentaho

Overview

GitHub: https://github.com/pentaho/pentaho-kettle

Pentaho is an open-source ETL tool widely used for data transformation, cleaning, and loading.

Features

Drag-and-drop interface: Users can design data flows visually, reducing the difficulty of data integration.

Support for multiple data sources: Compatible with relational databases, NoSQL, and file systems.

Rich plugin support: Users can develop new plugins based on their needs.

Pros and Cons

Pros: Easy to use with comprehensive features.

Cons: Some advanced features require additional configuration and development.

Price: Offers a free version and a paid commercial version.

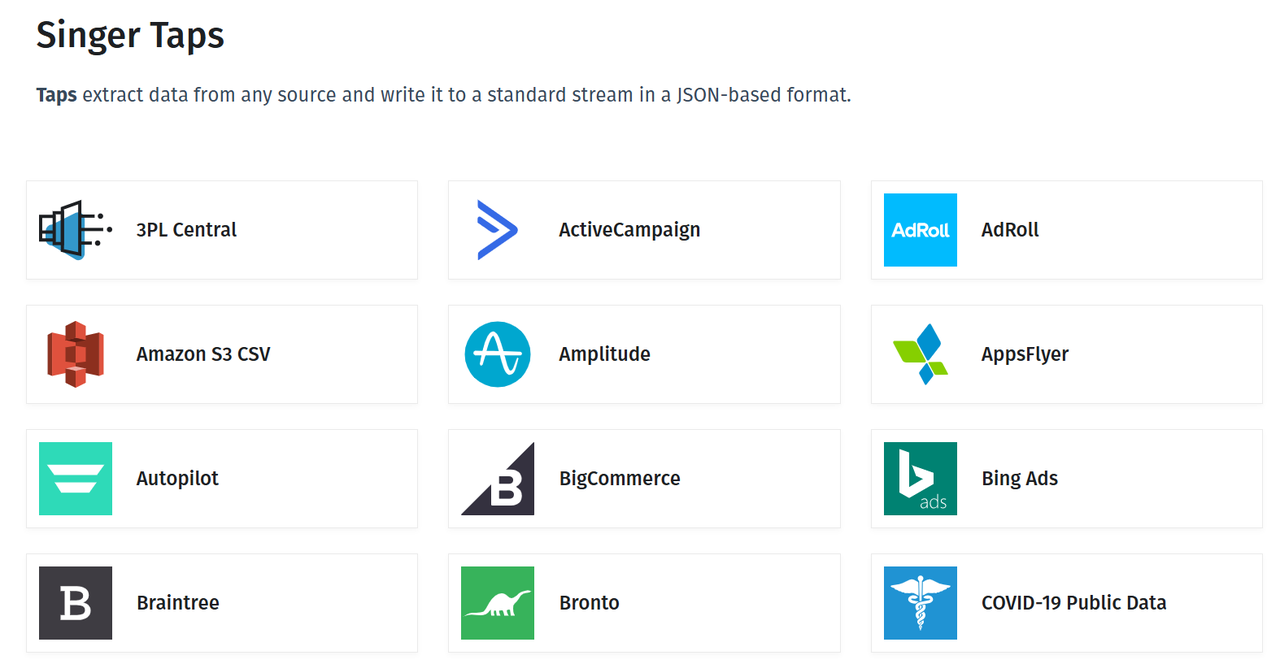

Singer

Overview

GitHub: https://github.com/singer-io

Singer is an open-source standard for writing data extraction and loading scripts, suitable for creating reusable ETL pipelines.

Features

Modular design: Defines data flows using "taps" and "targets," making it easy to extend.

High flexibility: Supports various data sources and targets, ideal for building customized solutions.

JSON-based: Singer applications communicate using JSON, which makes them easy to use and to implement in any programming language.
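To make the tap/target idea concrete, here is a minimal sketch of a toy tap and a toy target exchanging JSON messages, one per line. It follows the message types defined by the Singer specification (`SCHEMA`, `RECORD`, `STATE`), but the `users` stream, its fields, and the function names are assumptions for illustration, and the target simply collects records in memory instead of writing to a real destination.

```python
import io
import json
import sys


def run_tap(out=sys.stdout):
    """A toy tap: emits SCHEMA, RECORD, and STATE messages, one JSON object per line."""
    messages = [
        {"type": "SCHEMA", "stream": "users", "key_properties": ["id"],
         "schema": {"type": "object",
                    "properties": {"id": {"type": "integer"},
                                   "name": {"type": "string"}}}},
        {"type": "RECORD", "stream": "users", "record": {"id": 1, "name": "Ada"}},
        {"type": "RECORD", "stream": "users", "record": {"id": 2, "name": "Grace"}},
        # STATE carries a bookmark (here a hypothetical row count) for resuming later.
        {"type": "STATE", "value": {"users": 2}},
    ]
    for message in messages:
        out.write(json.dumps(message) + "\n")


def run_target(lines):
    """A toy target: collects records per stream and remembers the last state."""
    records, state = {}, None
    for line in lines:
        if not line.strip():
            continue  # ignore blank lines
        message = json.loads(line)
        if message["type"] == "RECORD":
            records.setdefault(message["stream"], []).append(message["record"])
        elif message["type"] == "STATE":
            state = message["value"]
    return records, state


def demo():
    """Connect the tap to the target through an in-memory buffer."""
    buffer = io.StringIO()
    run_tap(buffer)
    buffer.seek(0)
    return run_target(buffer)
```

In a real Singer setup the tap and the target are separate programs joined by a shell pipe, so the buffer in `demo()` stands in for that pipe. Because the only contract between the two sides is the JSON message format, either side can be replaced or written in another language.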

Pros and Cons

Pros: Highly flexible, suitable for building personalized data pipelines.

Cons: Requires some technical background for configuration and use.

Price: Open source and free to use.

Spark

Overview

GitHub: https://github.com/apache/spark

Spark is a unified analytics engine for large-scale data processing and transformation, supporting both batch and stream processing.

Features

Batch/stream processing: Unifies batch and streaming data processing in your preferred language (Python, SQL, Scala, Java, or R).

SQL analytics: Executes fast, distributed ANSI SQL queries for dashboards and ad-hoc reports.

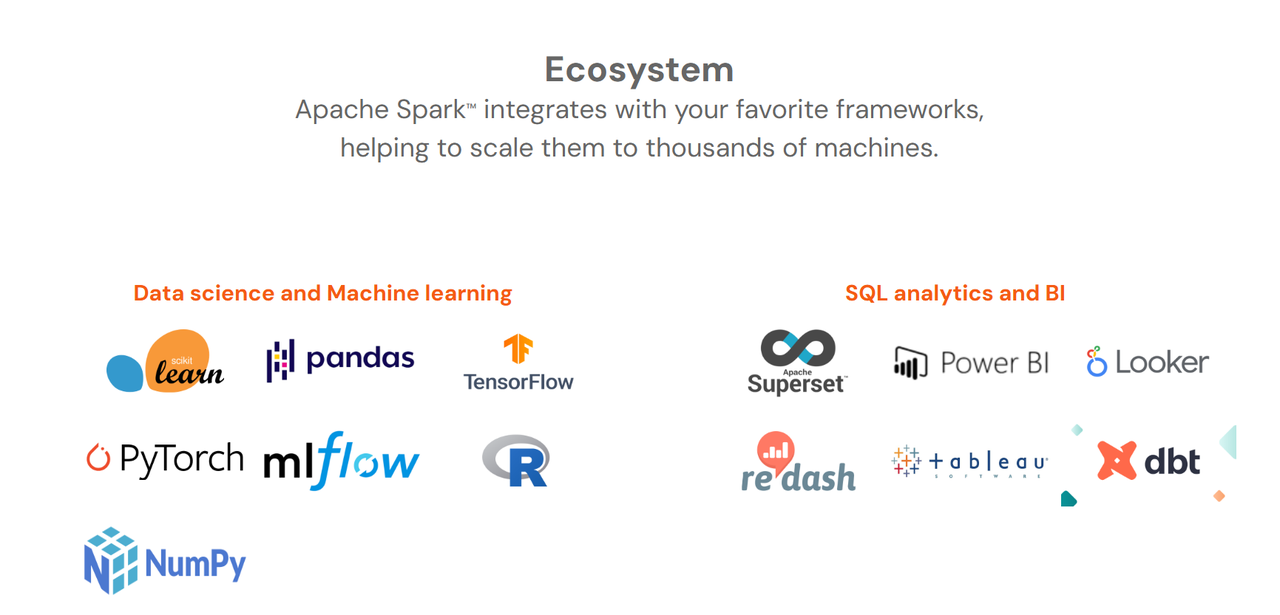

Rich ecosystem: Compatible with big data and machine learning tools.

Pros and Cons

Pros: Powerful performance and flexibility, suitable for various data processing scenarios.

Cons: Steeper learning curve for beginners.

Price: Open source and free to use.

Summary

NocoBase: A WYSIWYG interface and flexible plugin architecture simplify the complexity of data integration.

NiFi: A graphical interface and security mechanisms ensure efficient and secure data flow.

Airflow: Flexible scheduling and extensibility enhance the efficiency of orchestrating complex tasks.

Pentaho: Drag-and-drop design and rich plugin support lower the learning curve.

Singer: Modular design and flexibility facilitate the creation of reusable ETL pipelines.

Spark: Unified batch and stream processing capabilities meet large-scale data processing needs.

😊 We hope this guide helps you select the right data transformation tools to improve data processing efficiency and achieve data-driven business growth.
