CICD: Microservices Deployment on a Scalable Amazon EKS Cluster with Jenkins, ArgoCD, Terraform, Prometheus, and Grafana

Mwanthi Daniel

Mwanthi DanielTable of contents

- Introduction

- Execution Plan

- What You'll Achieve

- Core Tools and Techs Used

- Prerequisites

- Installations

- Clone the Microservices Source Code Repository

- Infrastructure Deployment (AWS VPC, EKS Cluster)

- Developing Jenkins CI Pipeline

- Setting Up Jenkins Master and Agent Nodes

- Jenkins Master Node

- Jenkins Agent Node

- Configure Authentication for Jenkins Master Node to Access Agent Node

- Preparing the Agent Node

- Add Jenkins Agent to the Master Node

- Installing plugins

- Developing the CI pipeline

- Understanding the Pipeline

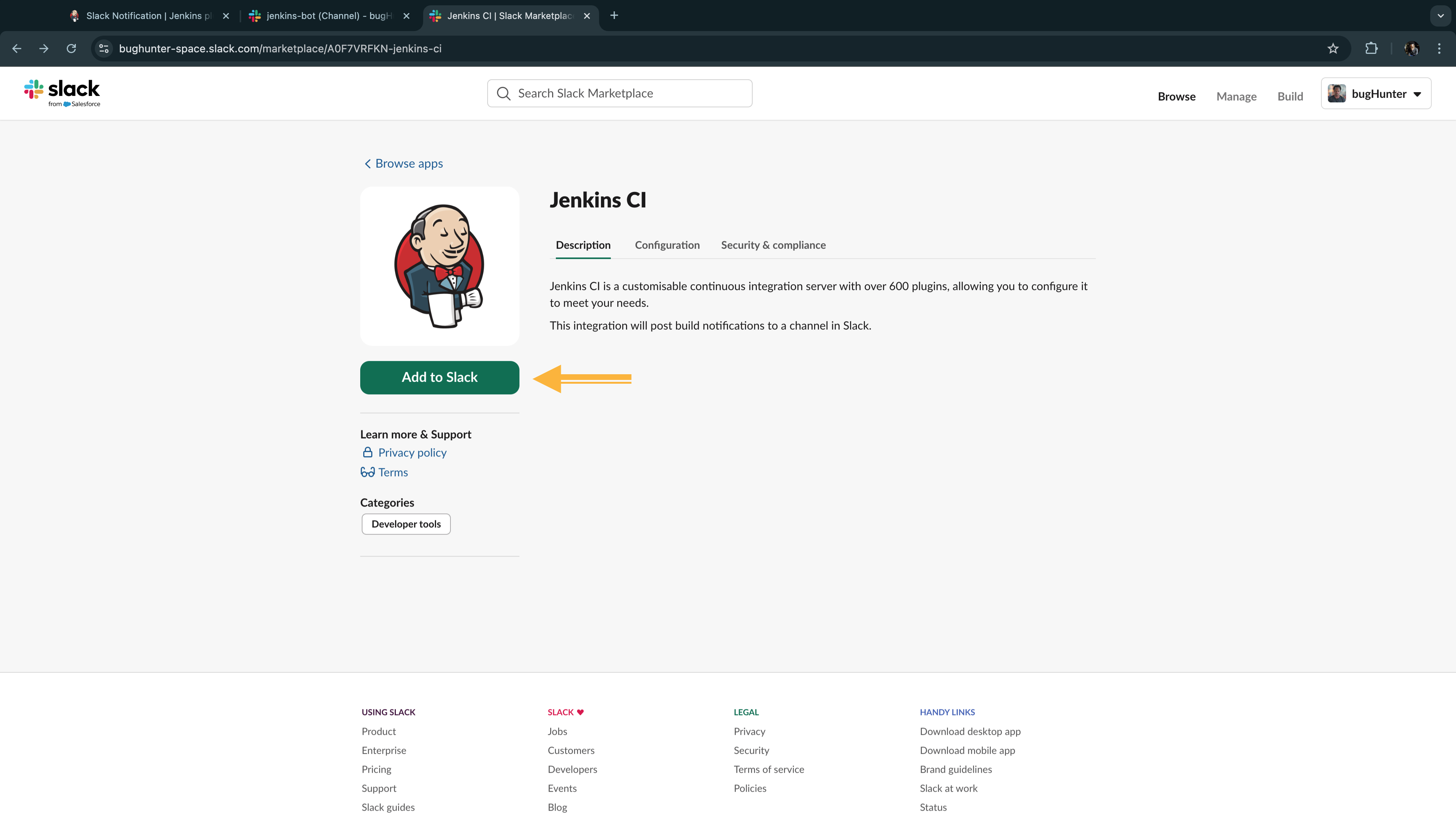

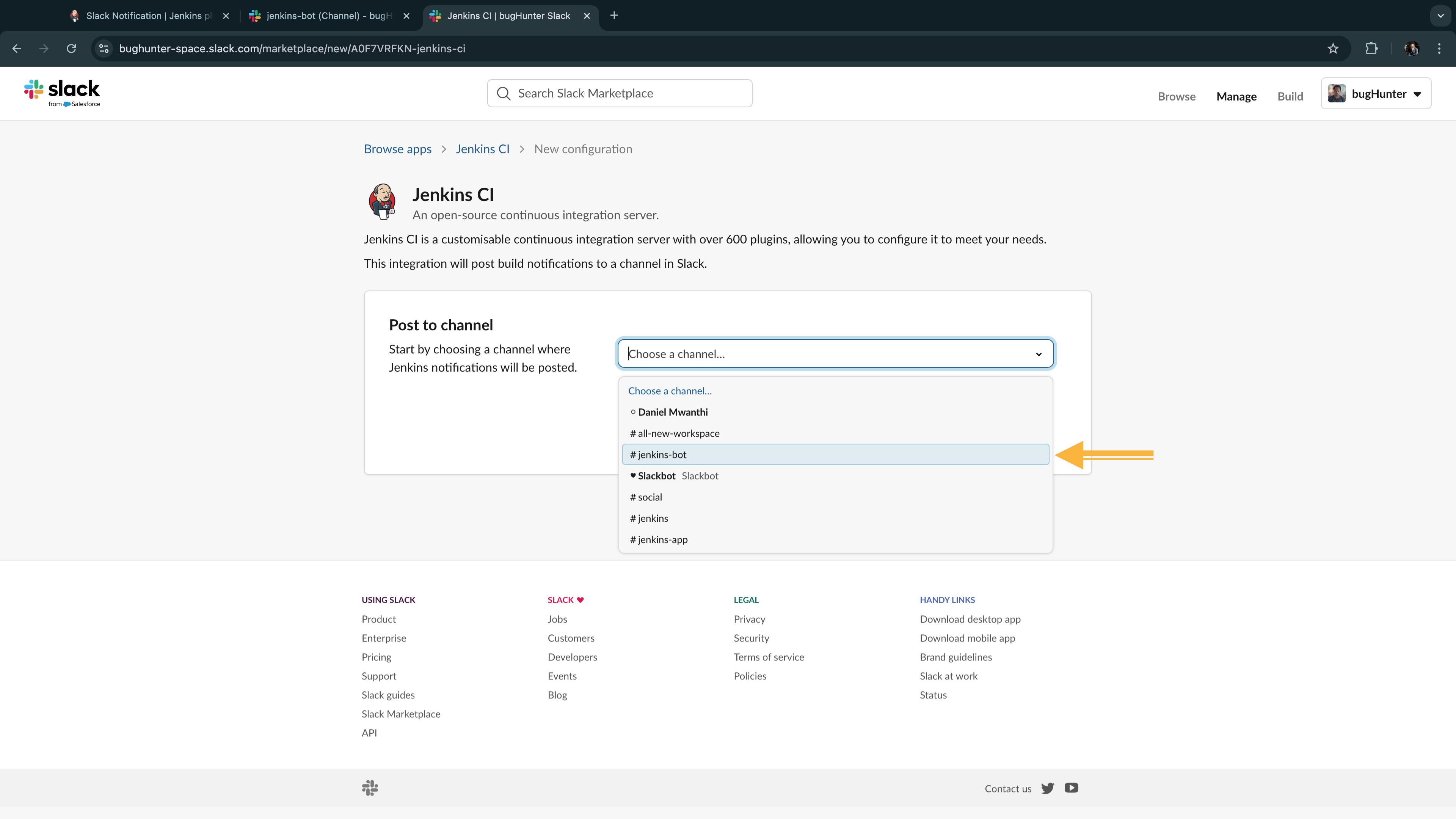

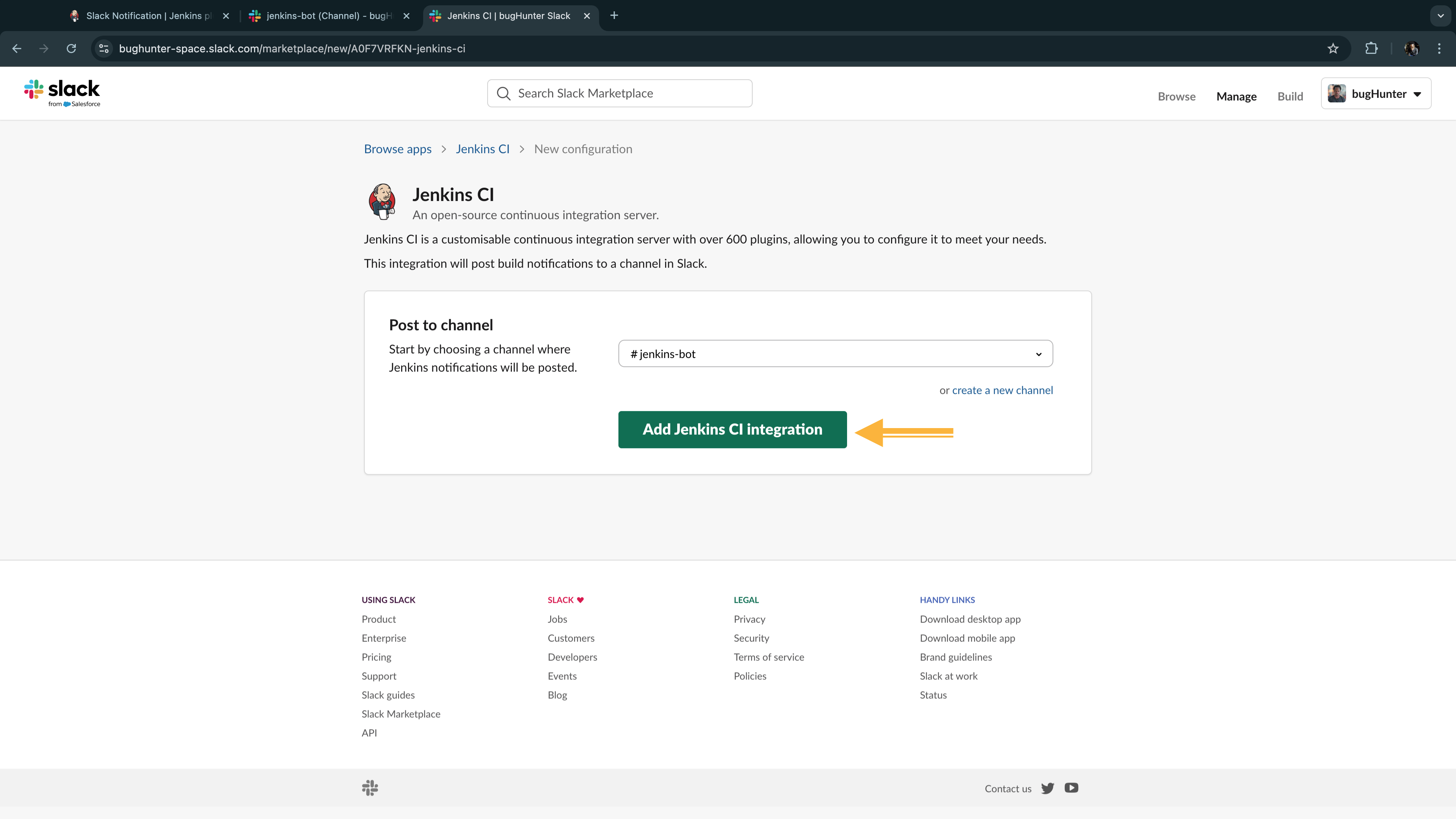

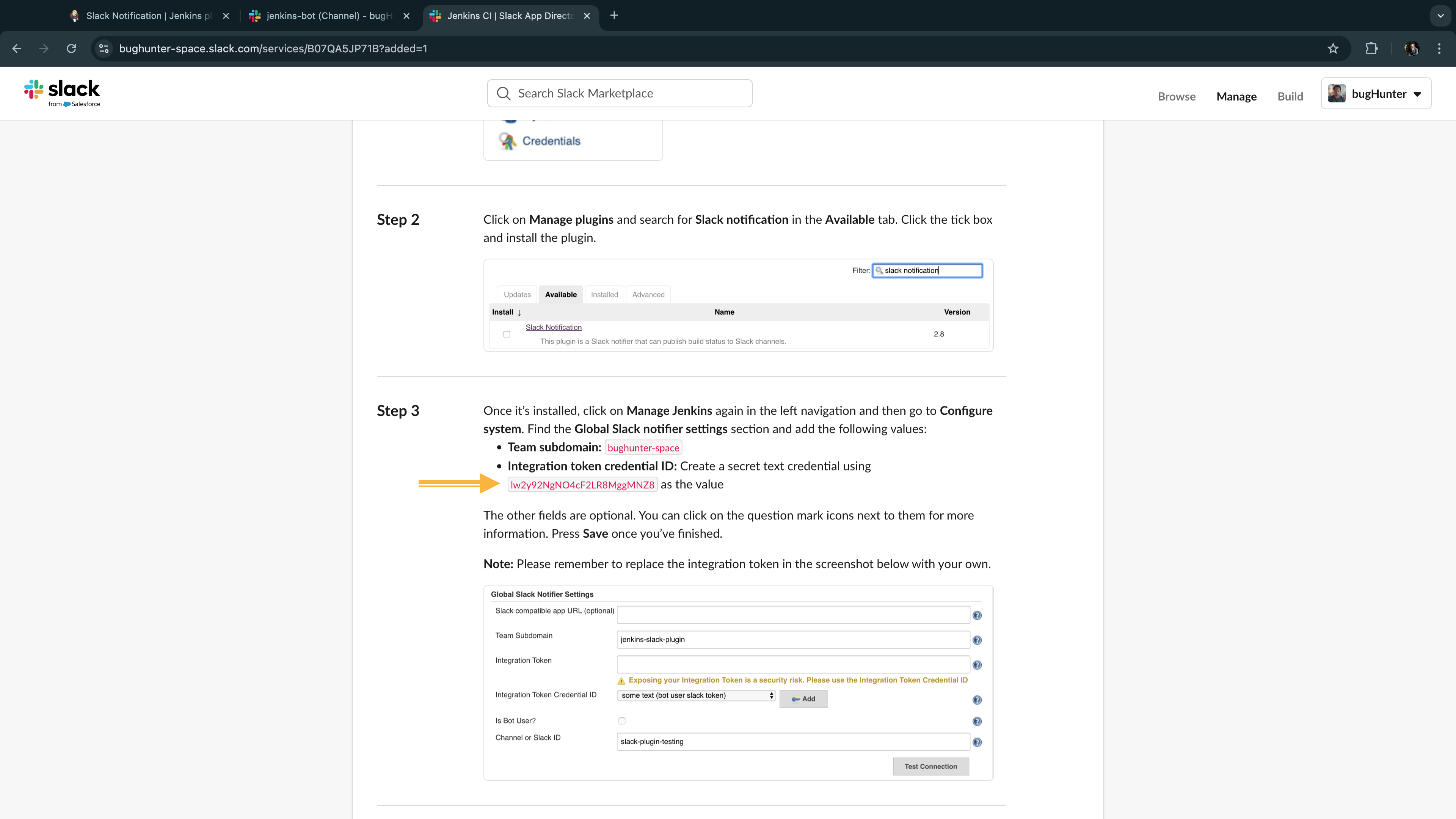

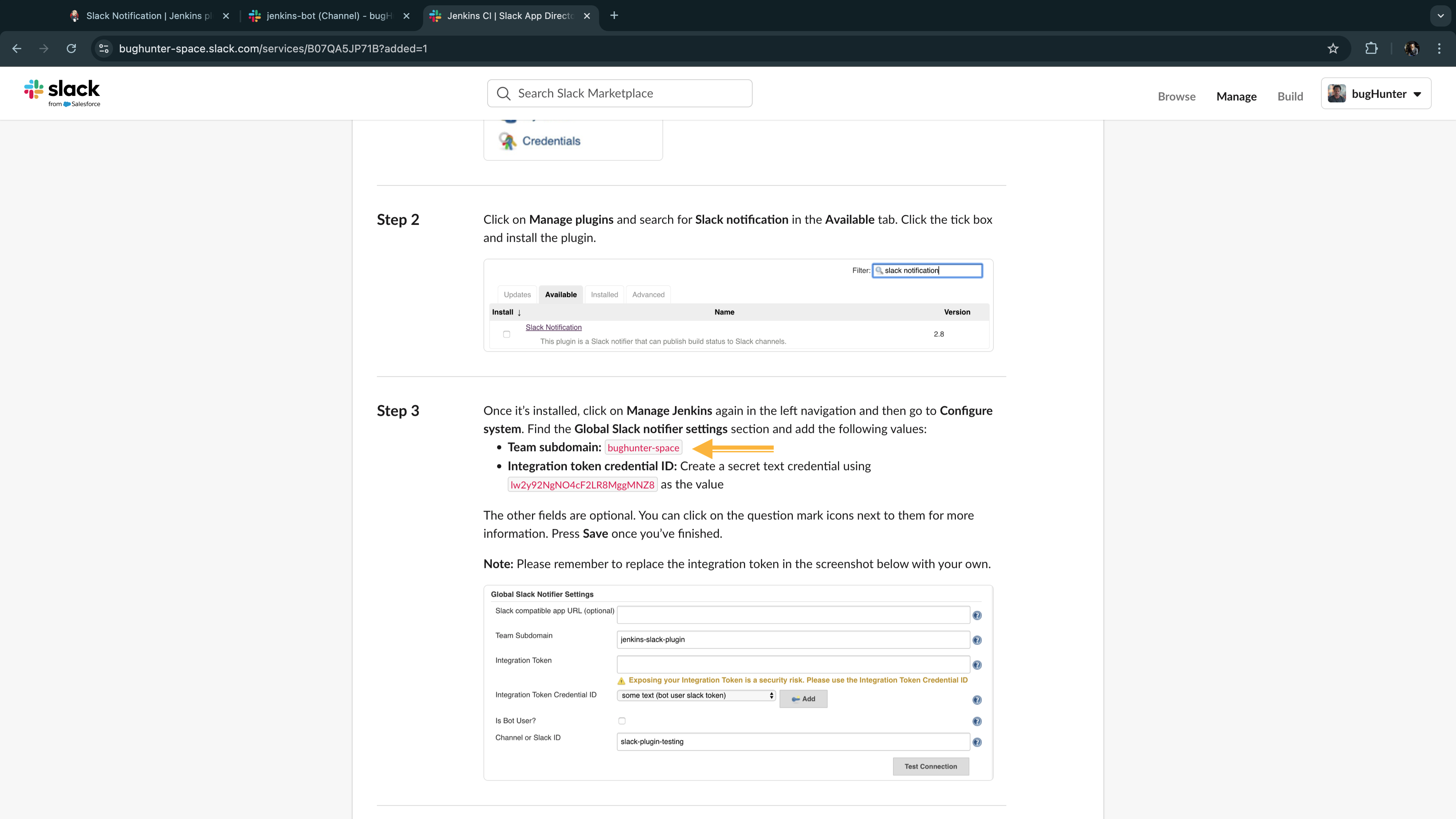

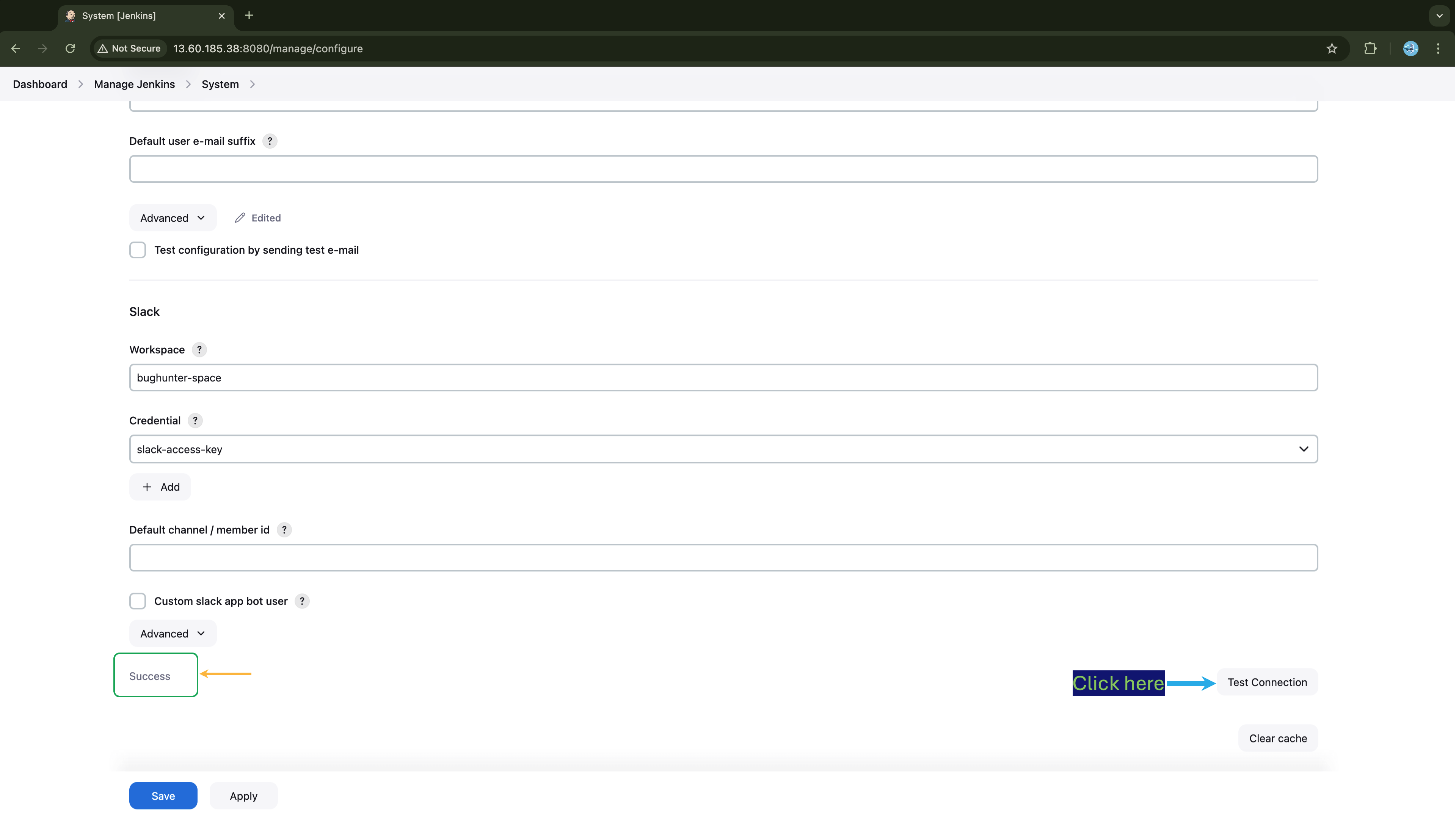

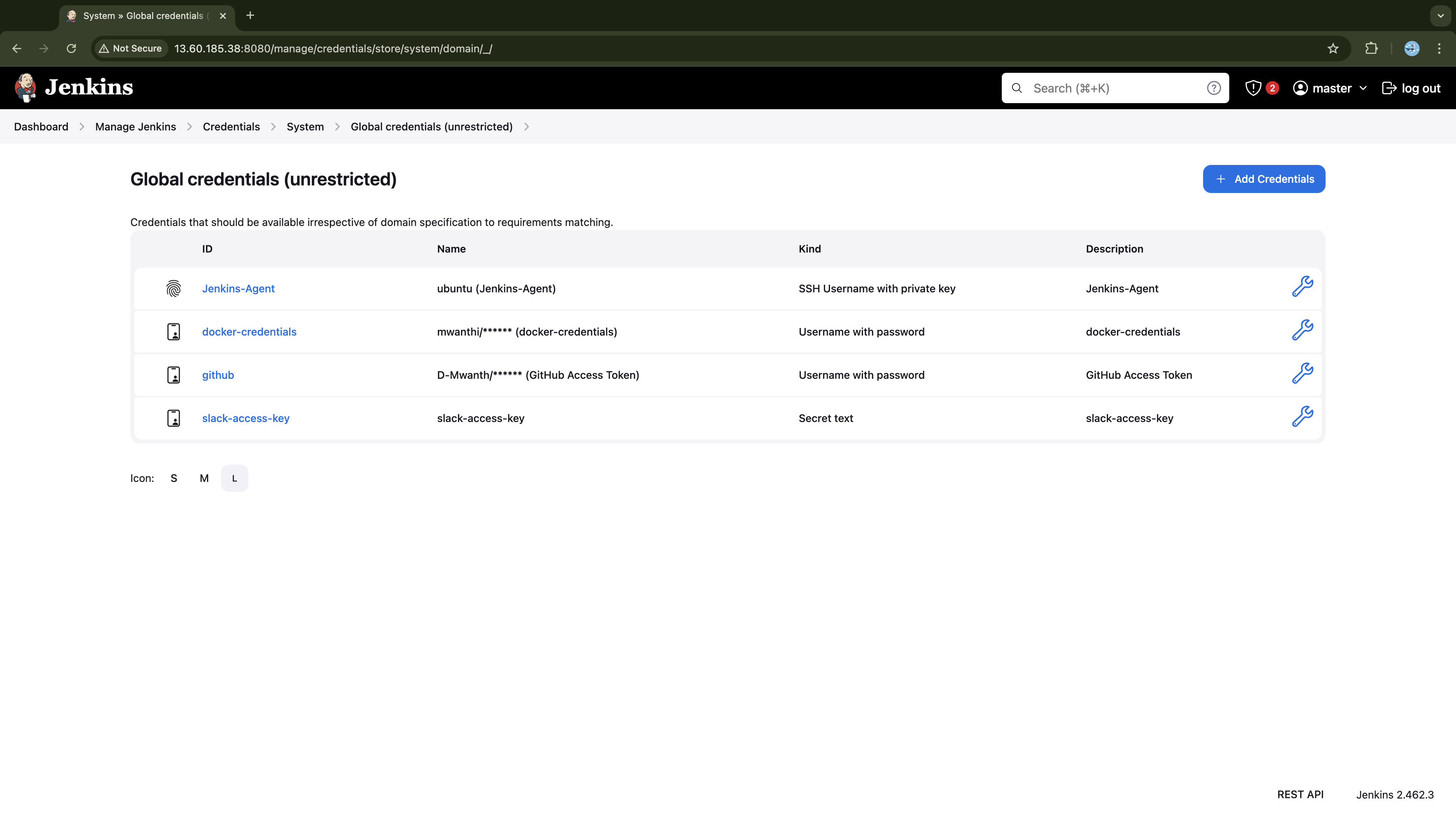

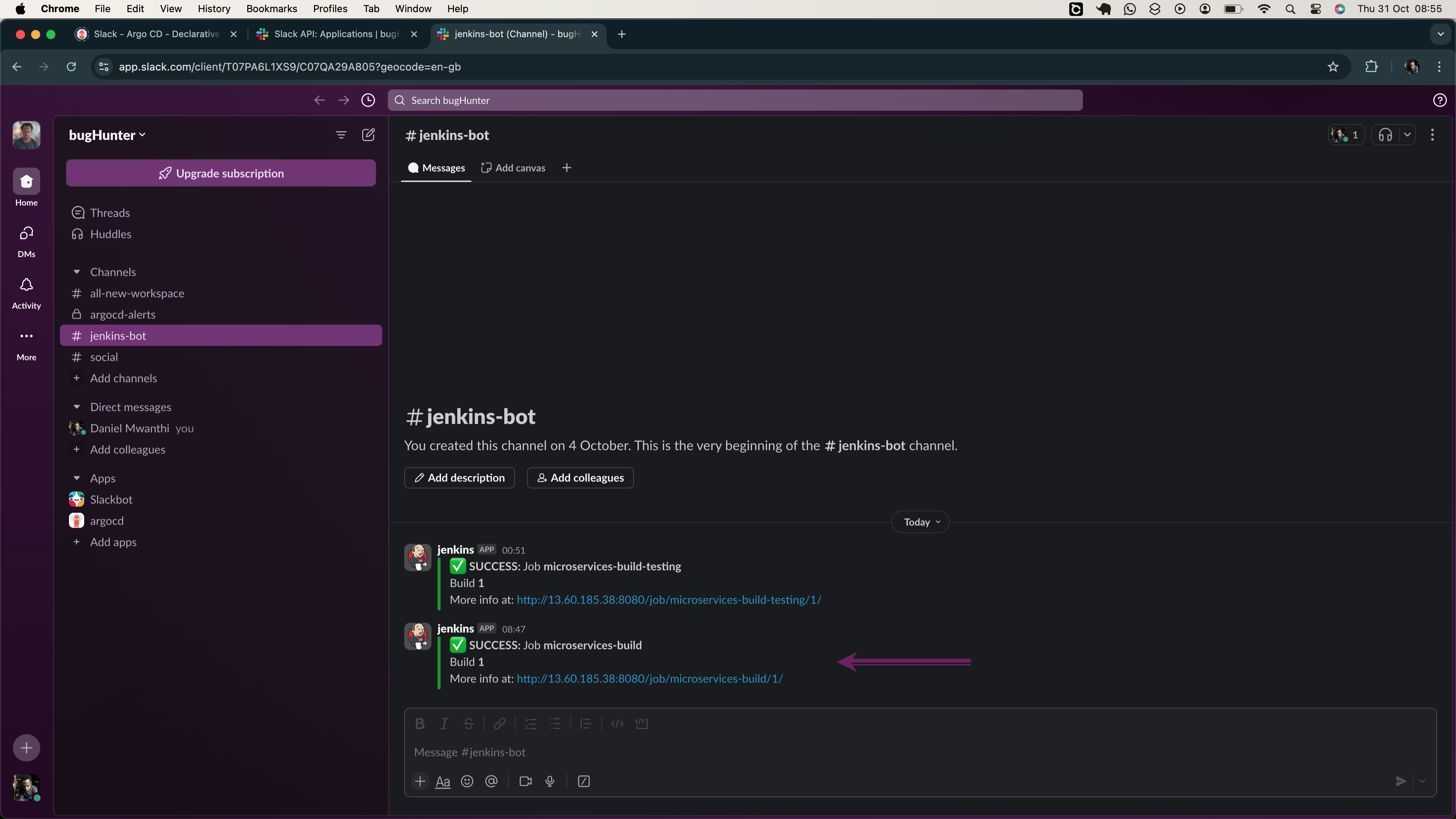

- Jenkins-Slack Integration

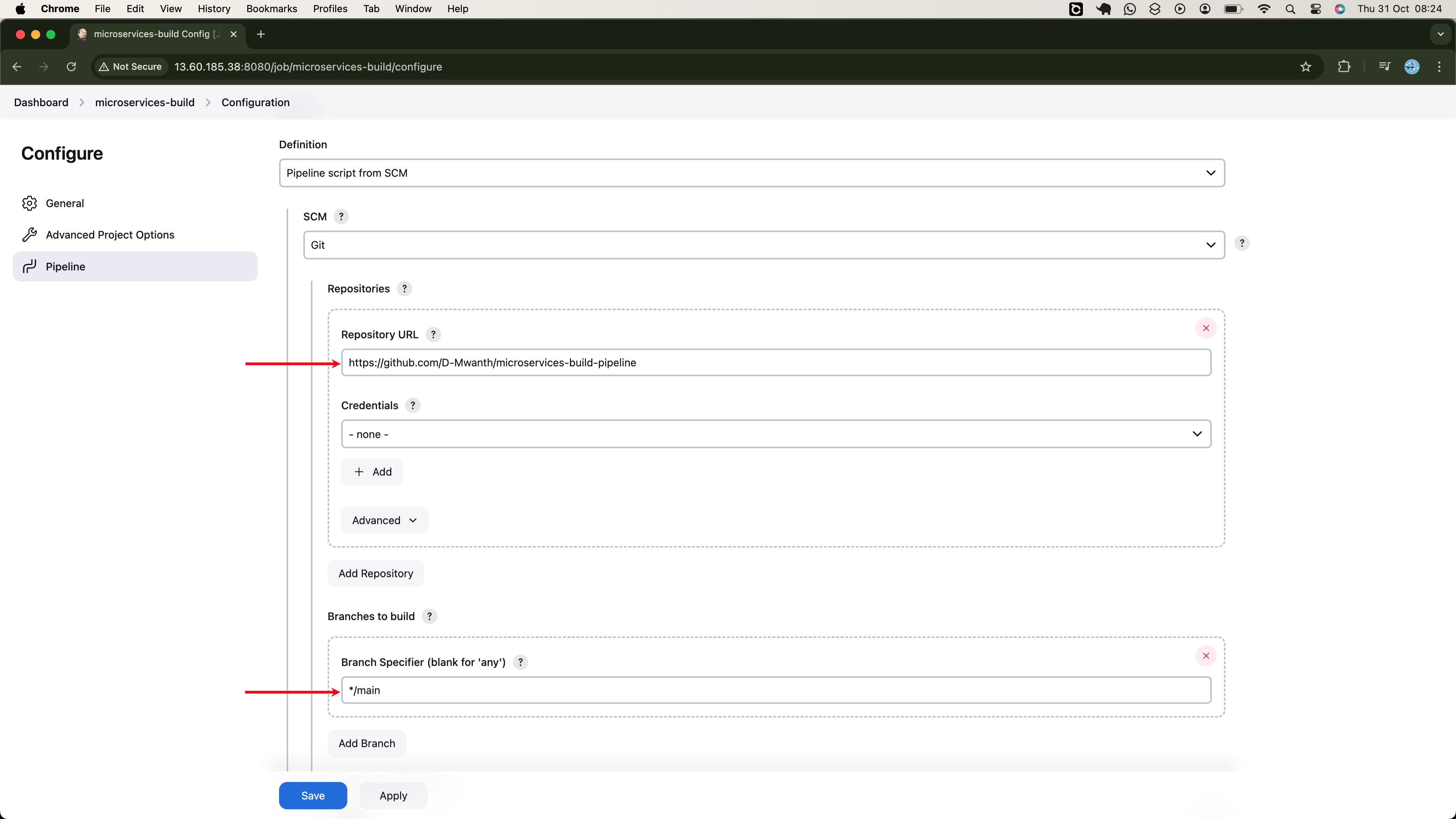

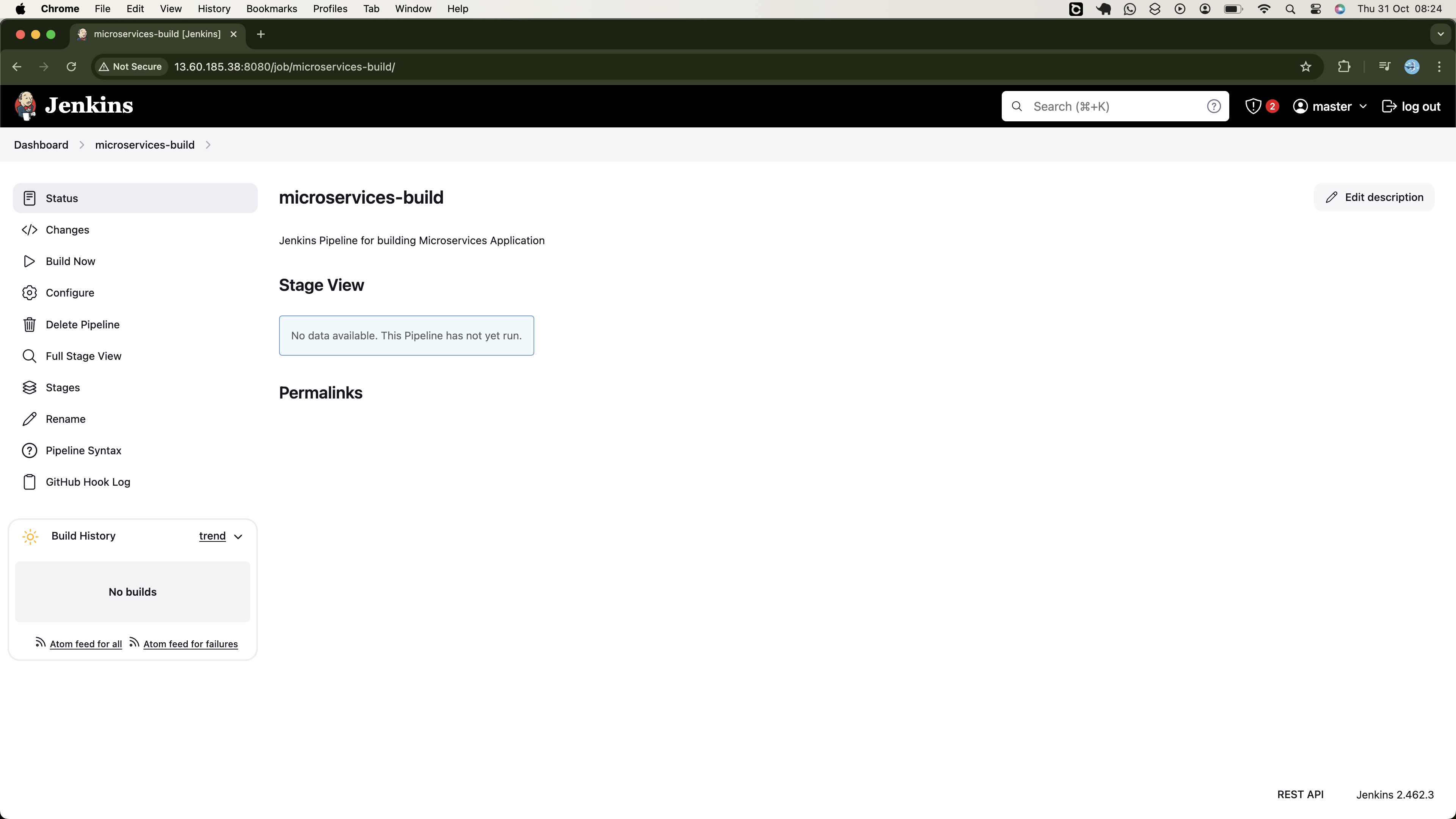

- Creating Jenkins Job

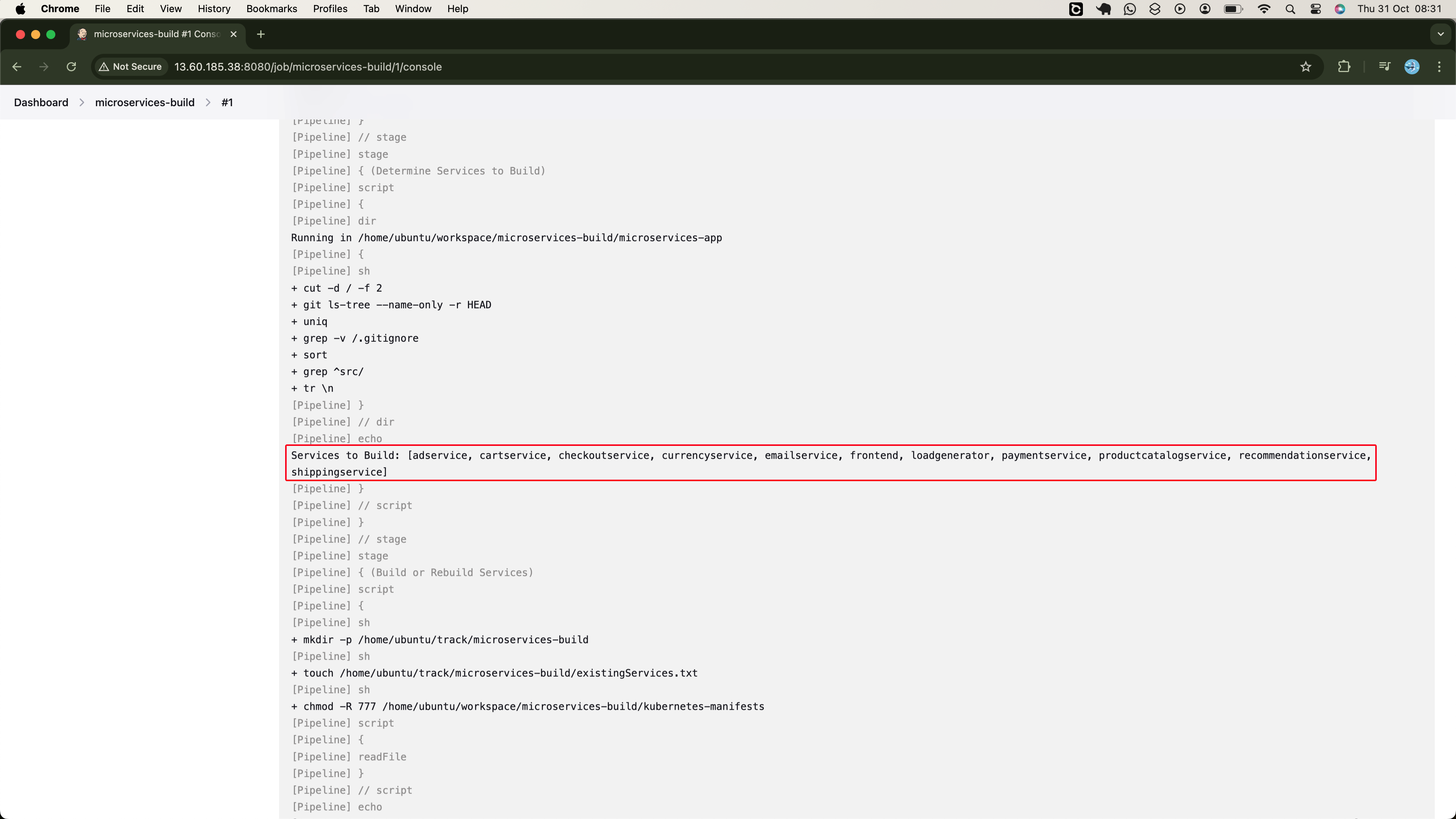

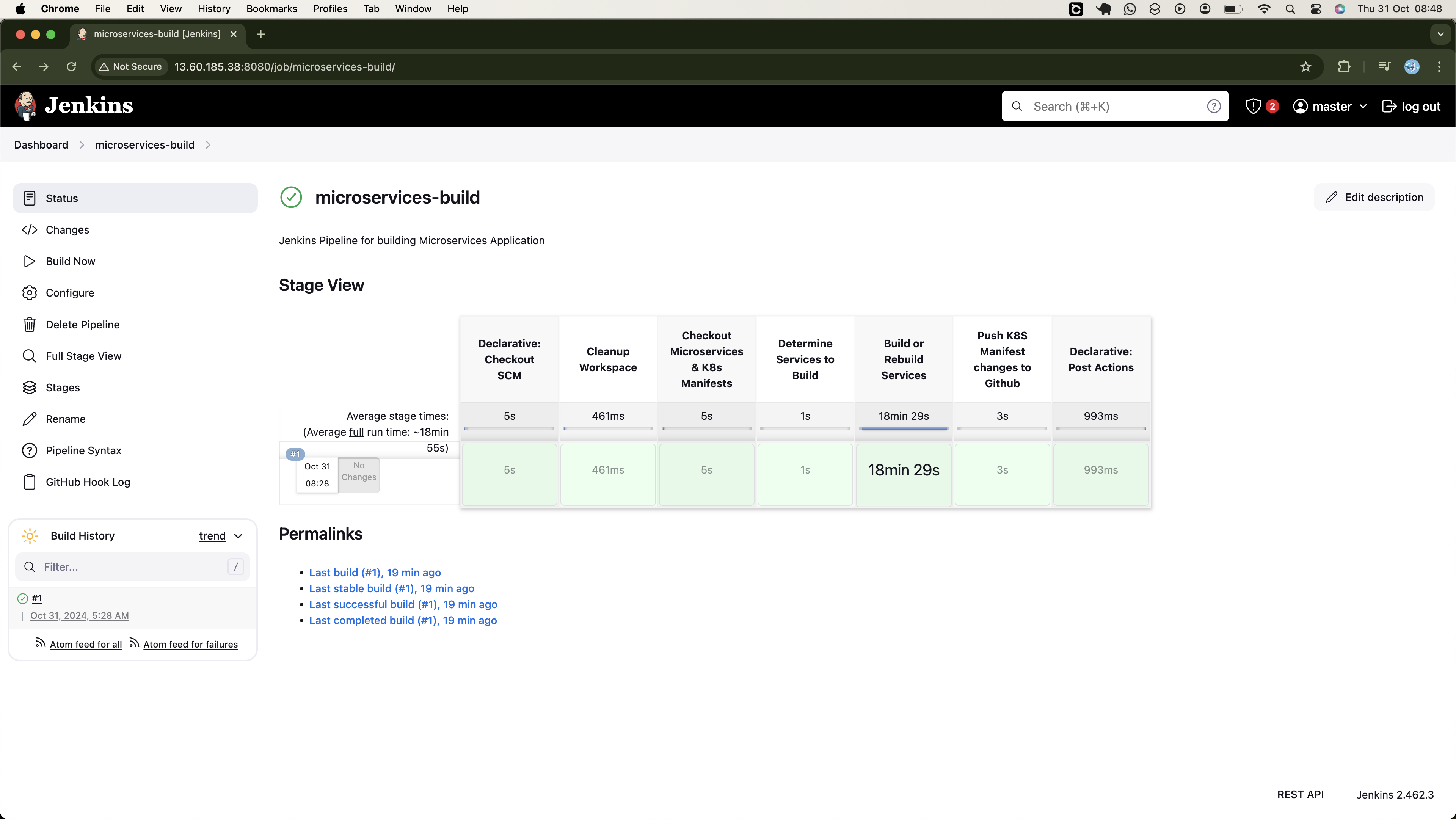

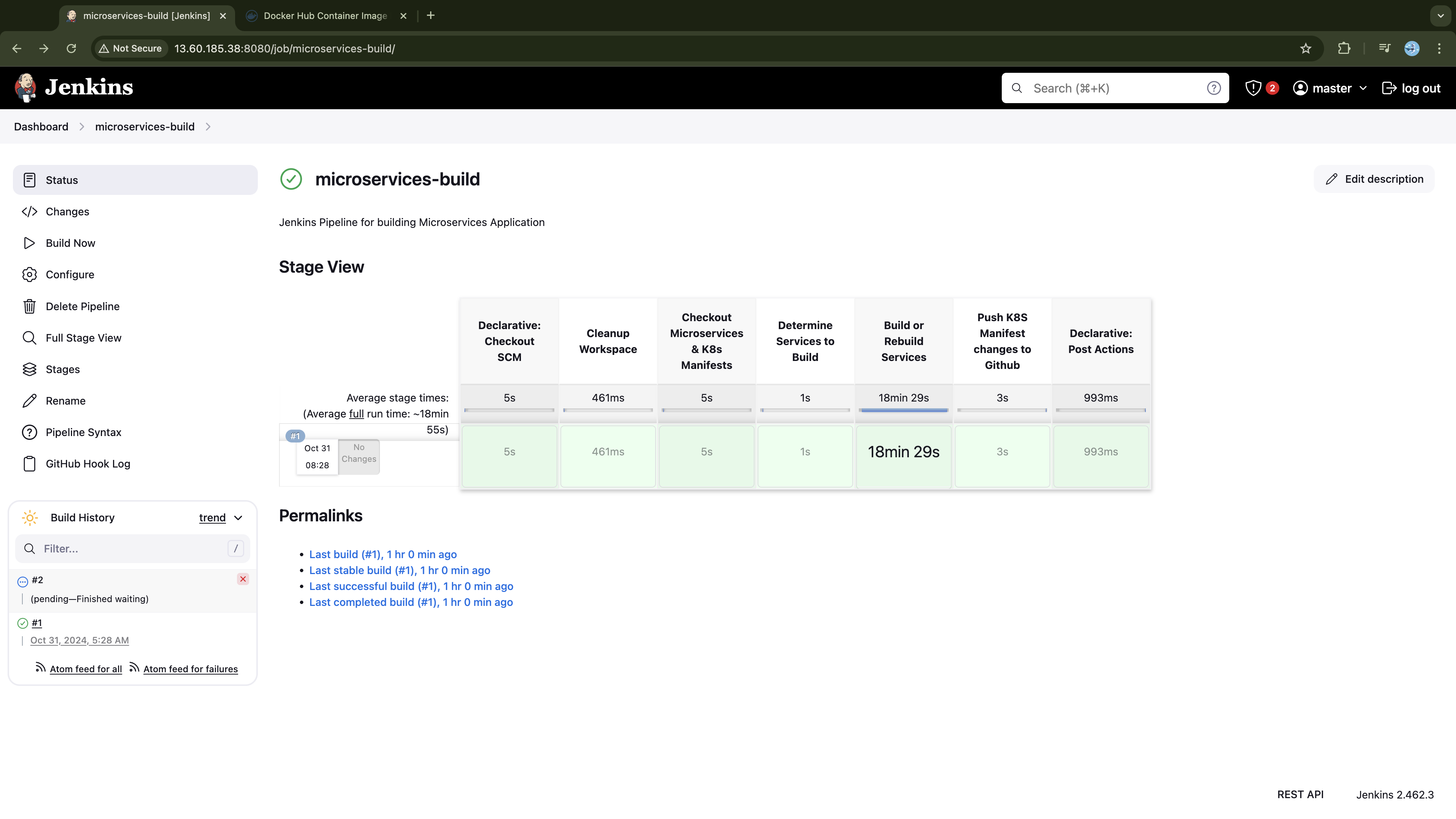

- Executing the Pipeline

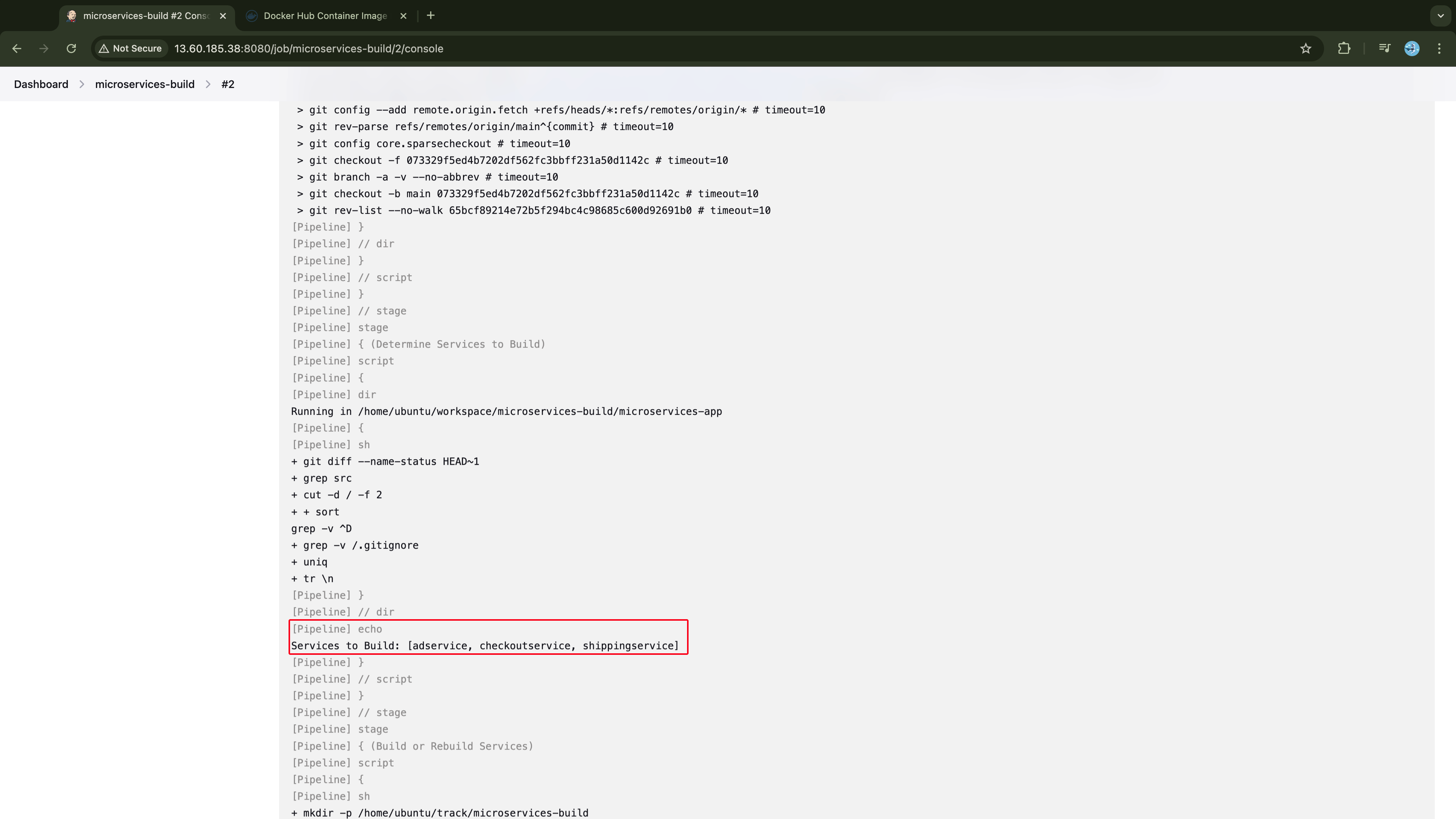

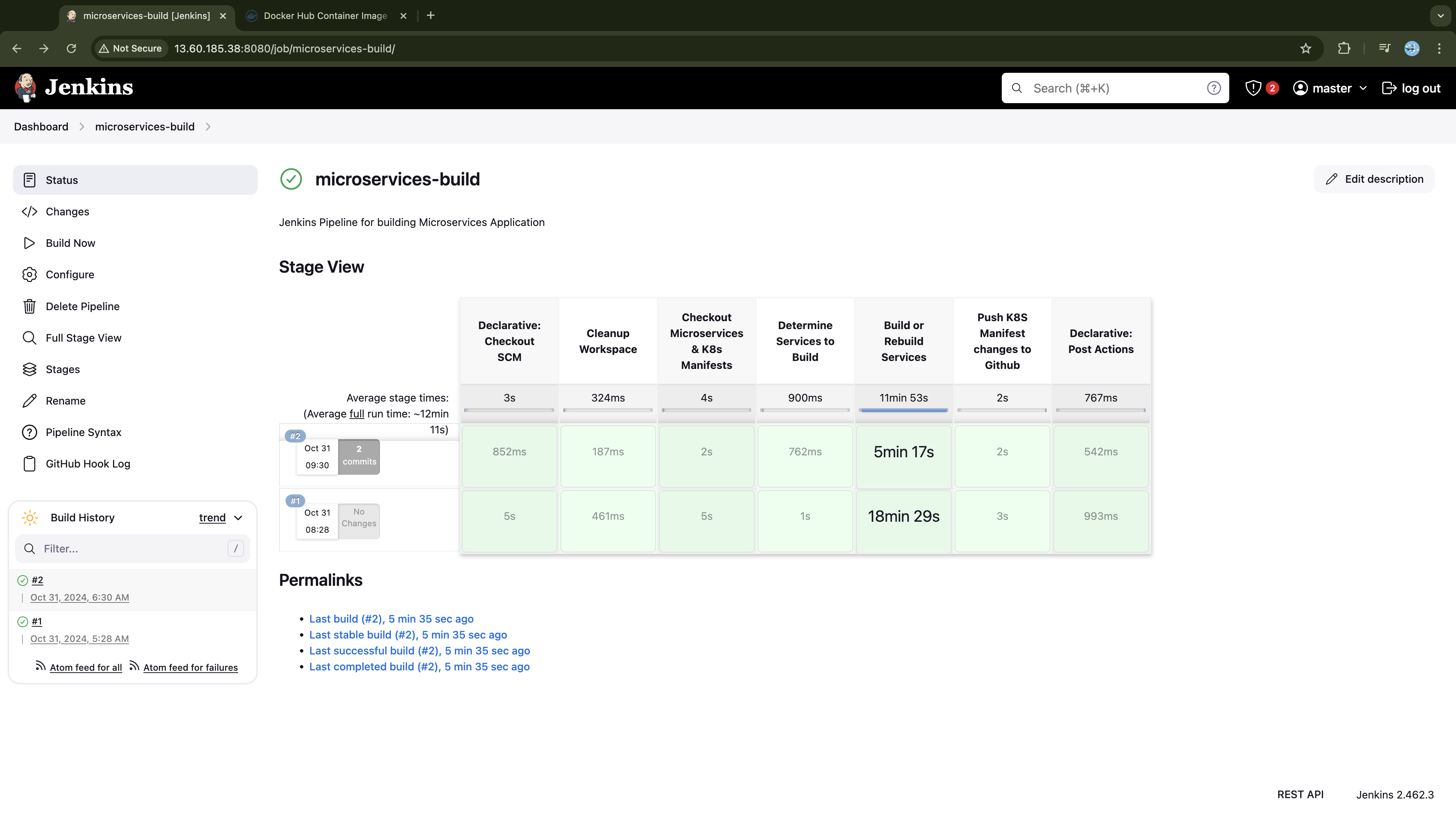

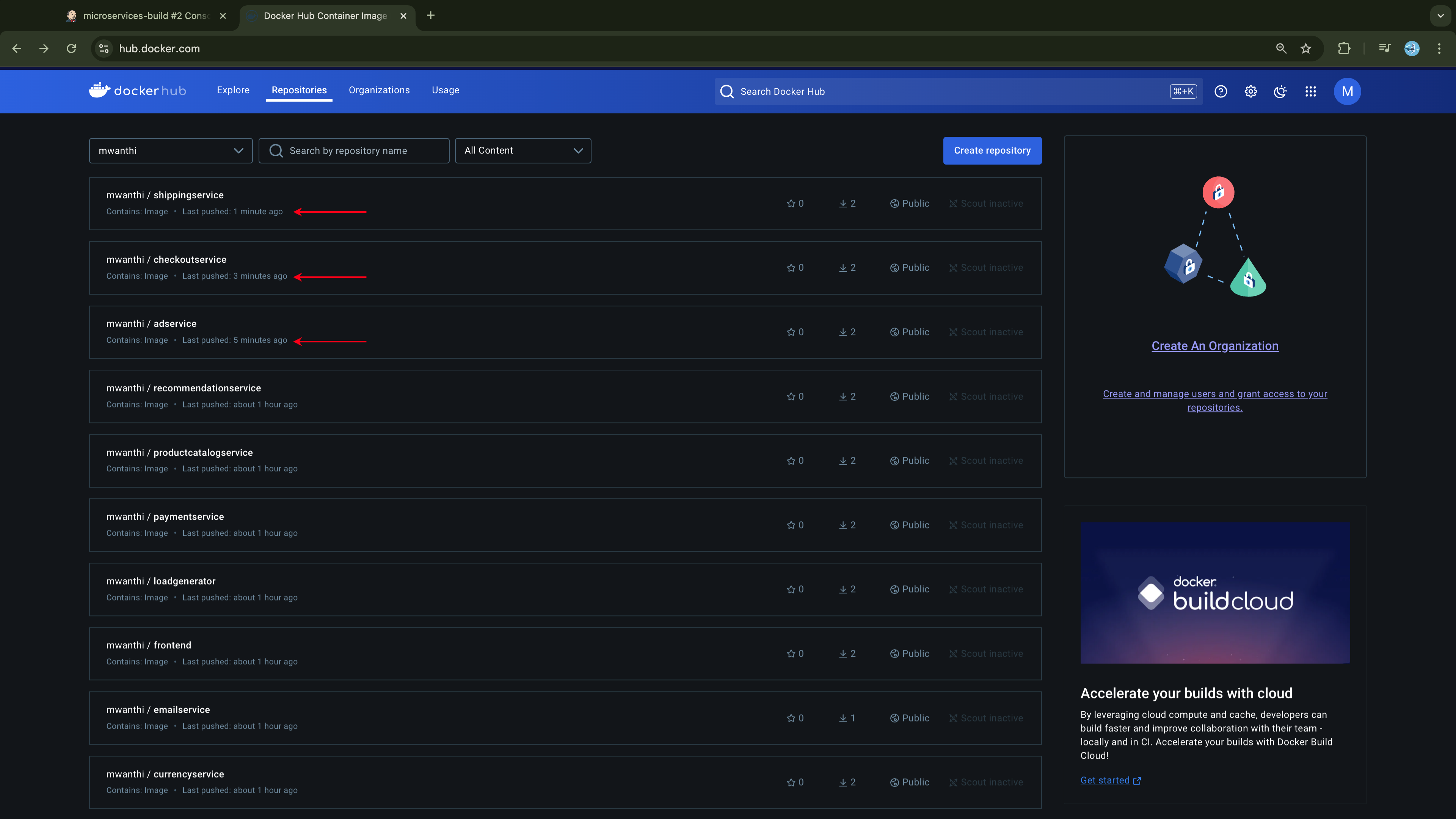

- Testing the Pipeline ability to selectively build services

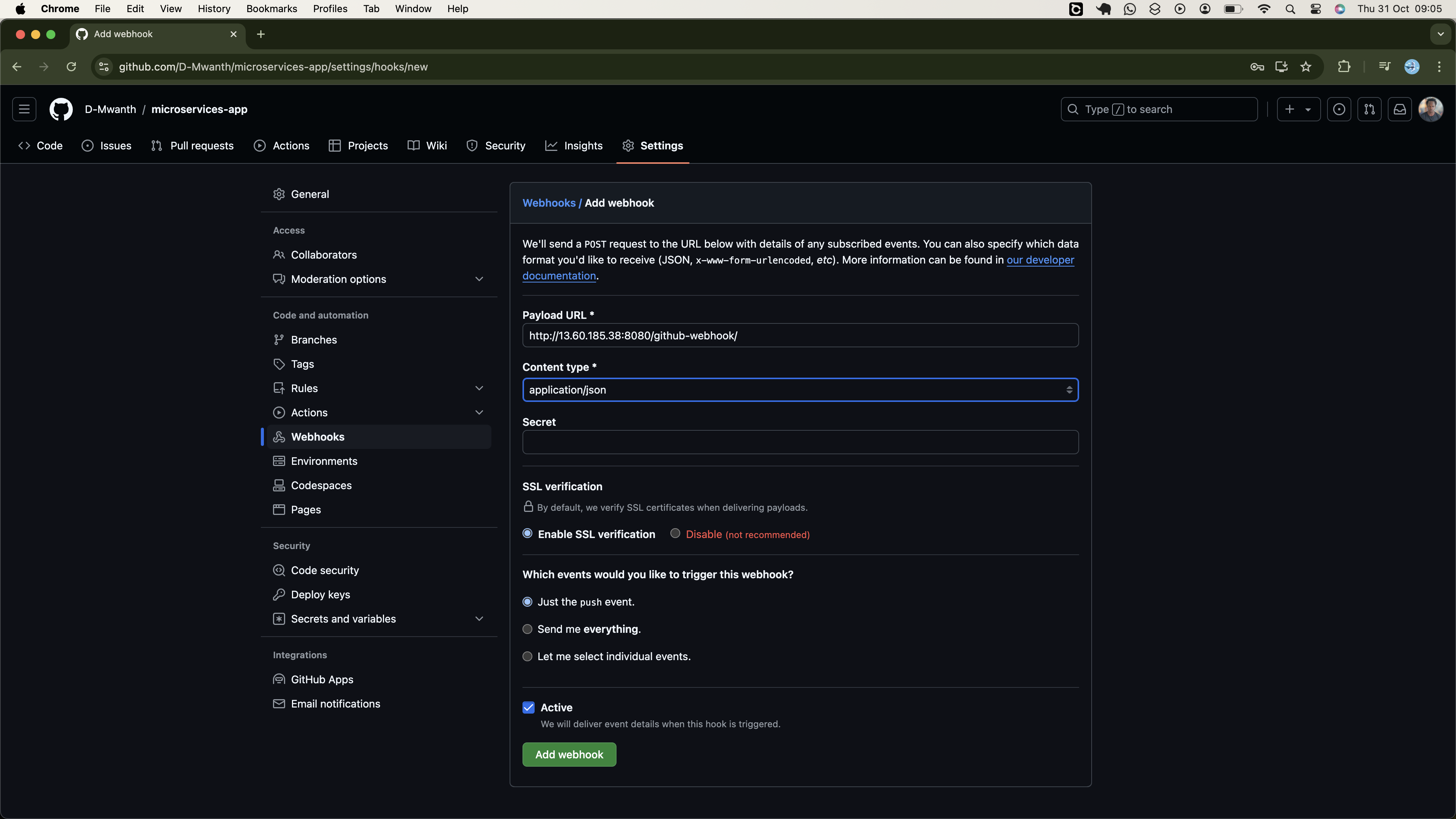

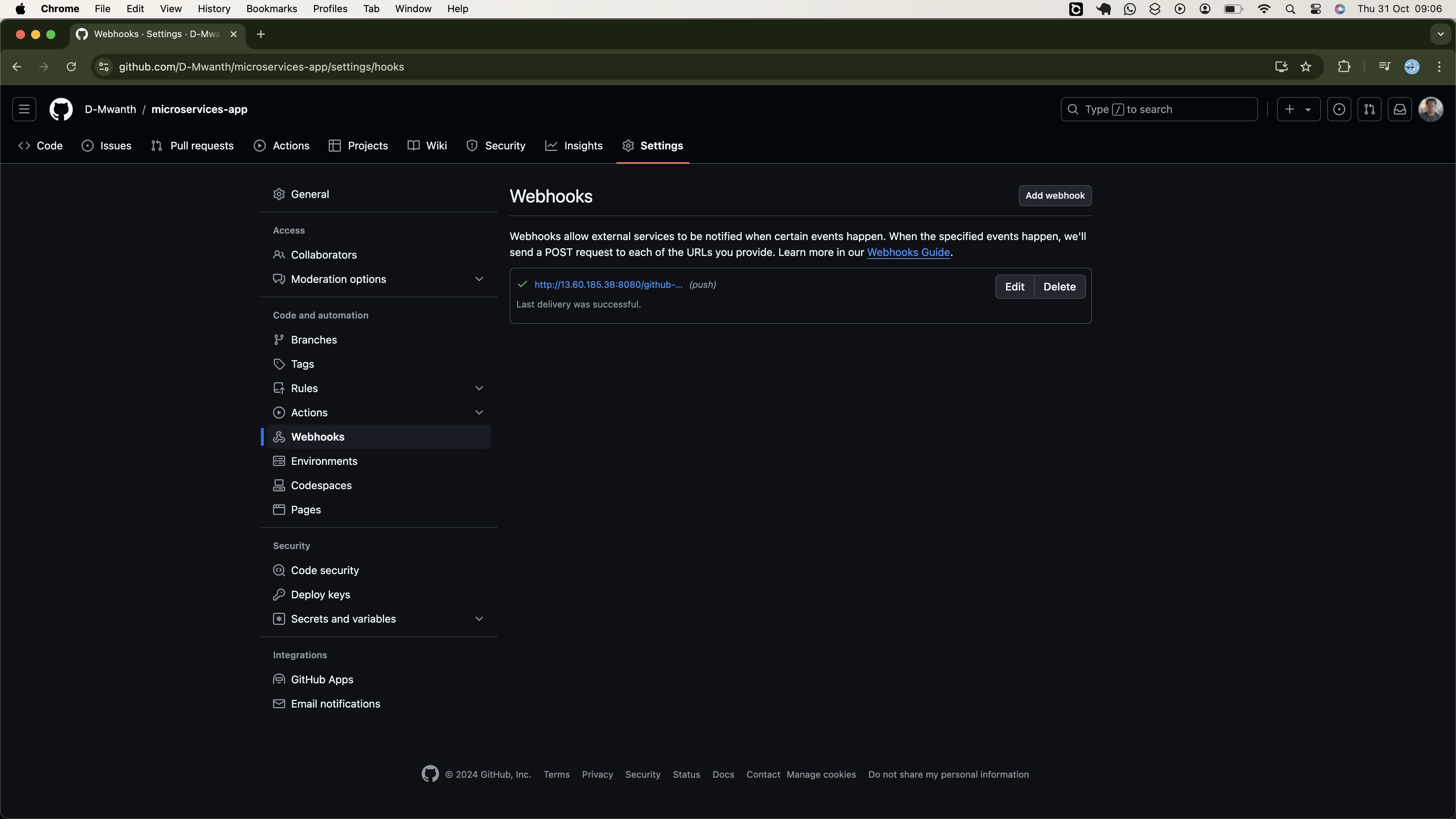

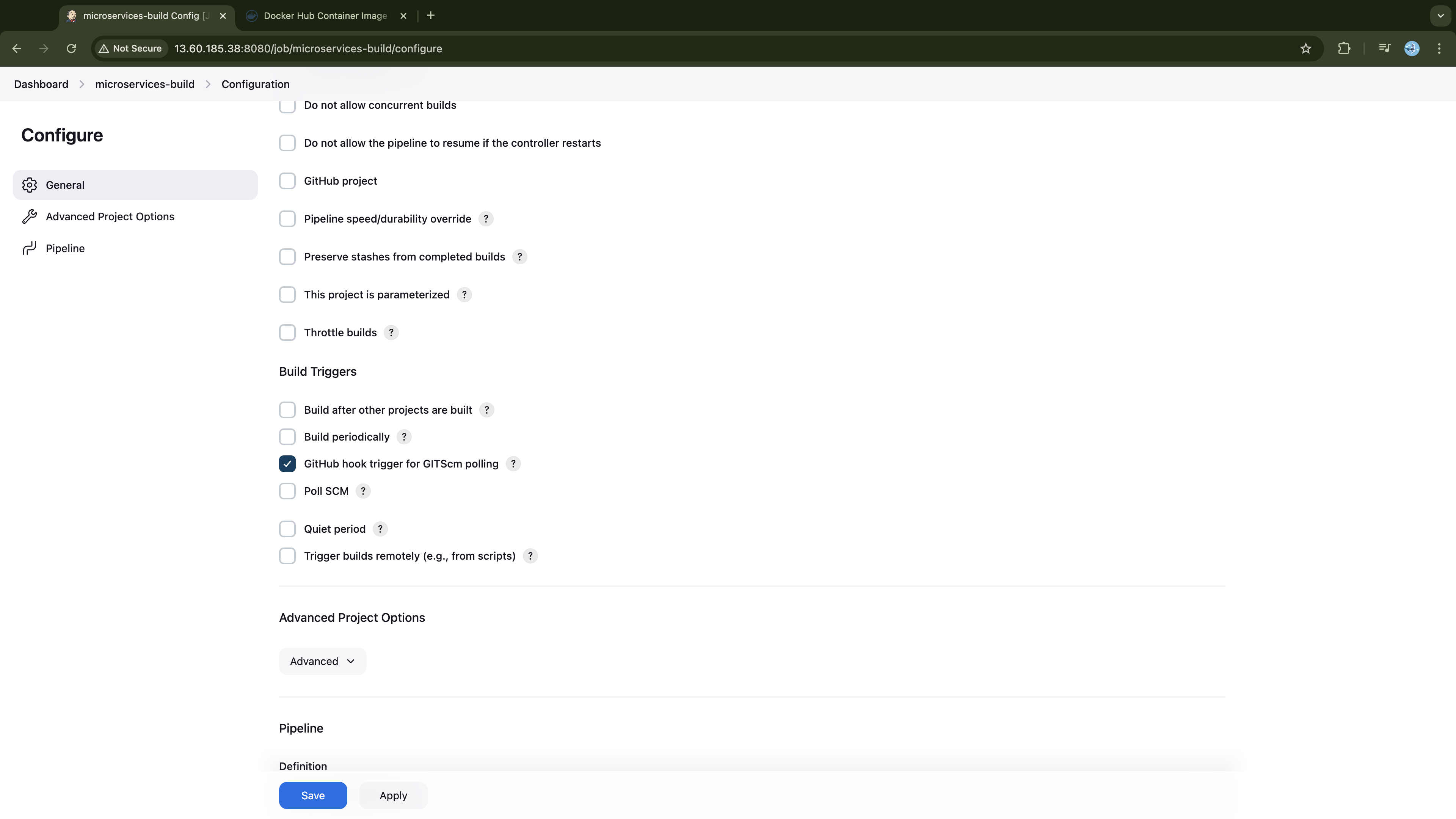

- Set Up Github Webhooks

- Triggering Jenkins Pipeline with Github WebHooks

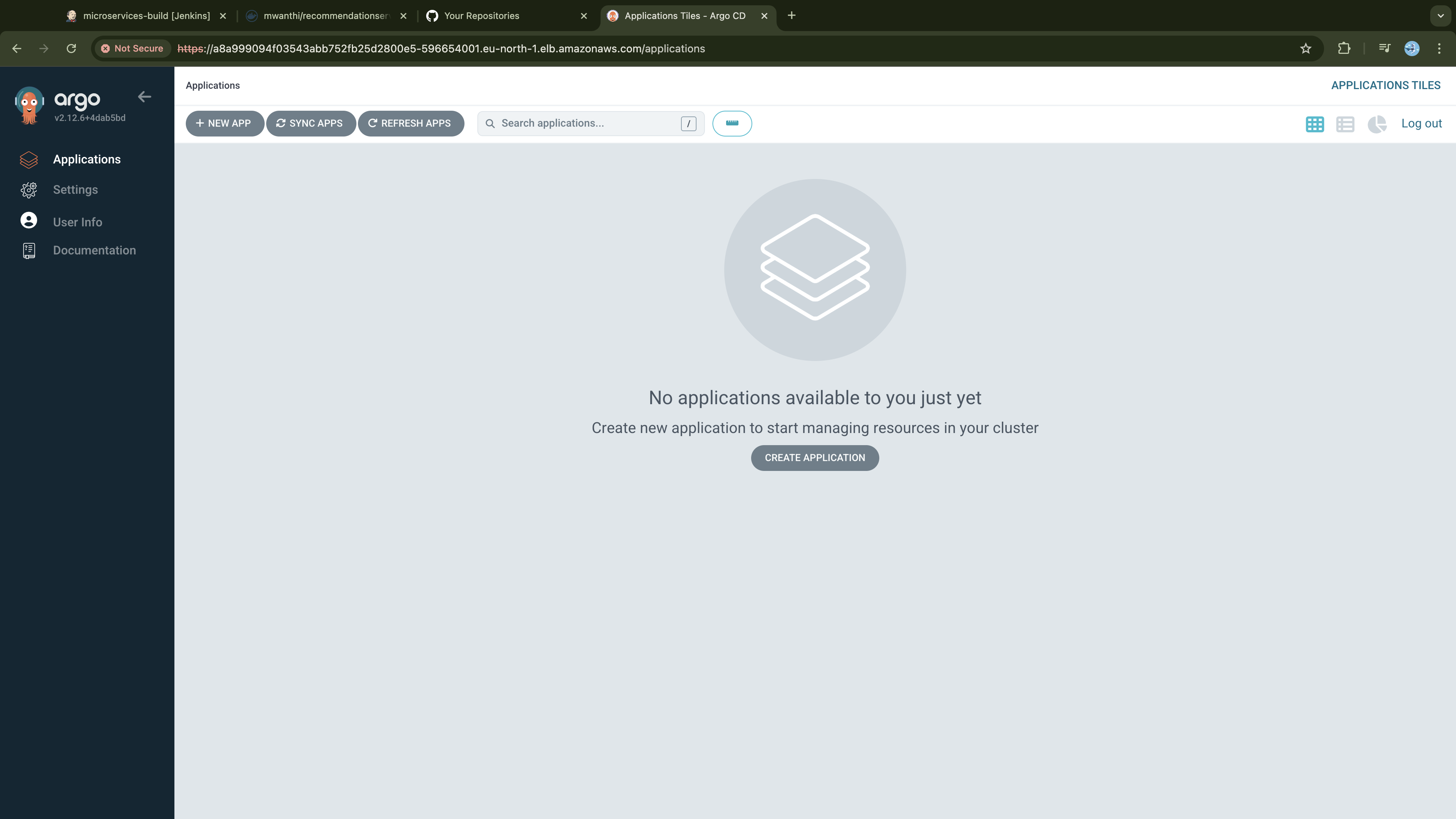

- GitOps: Continuous Delivery and Deployment with ArgoCD

- Exposing the Application for Public Accessibility

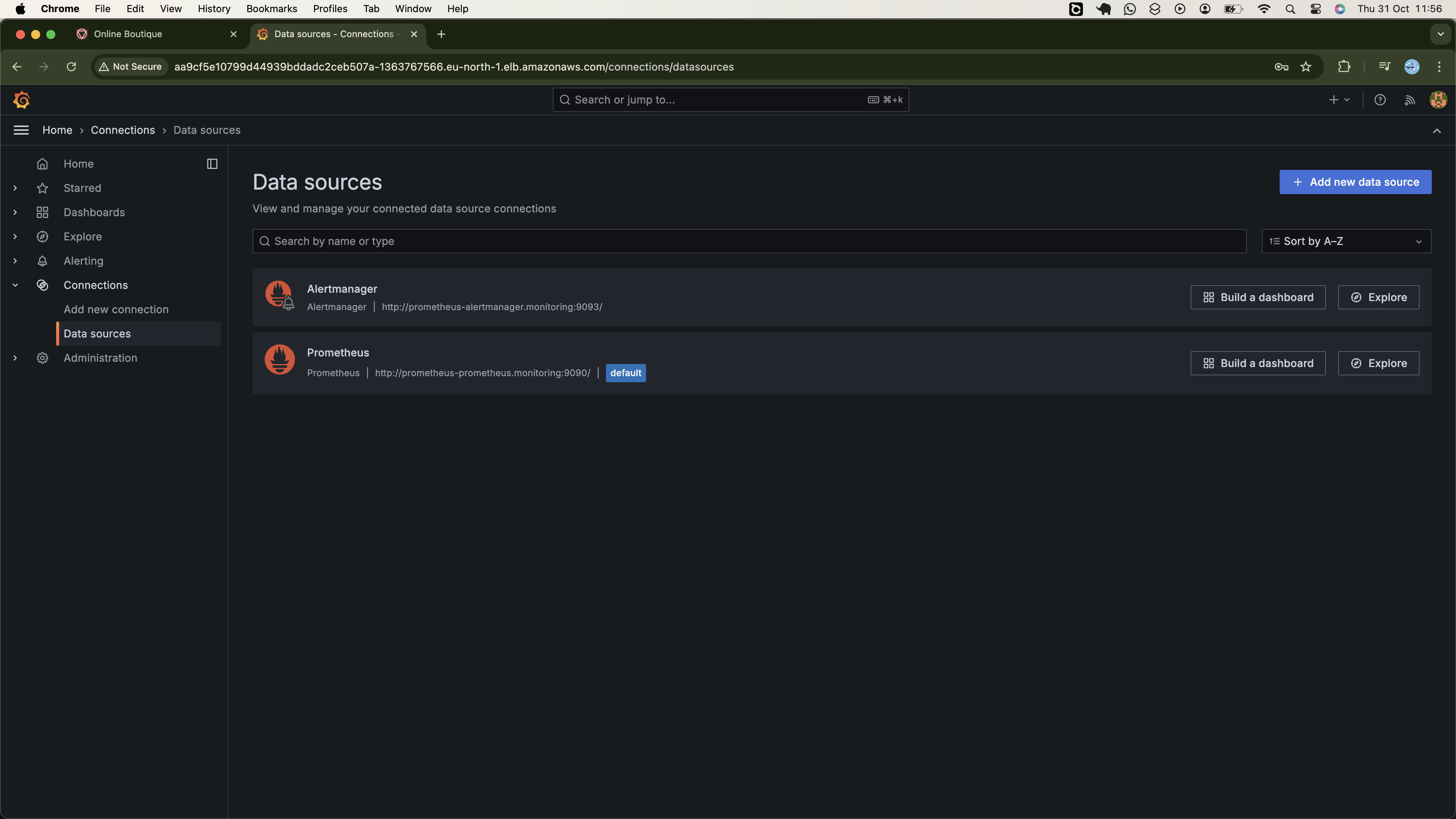

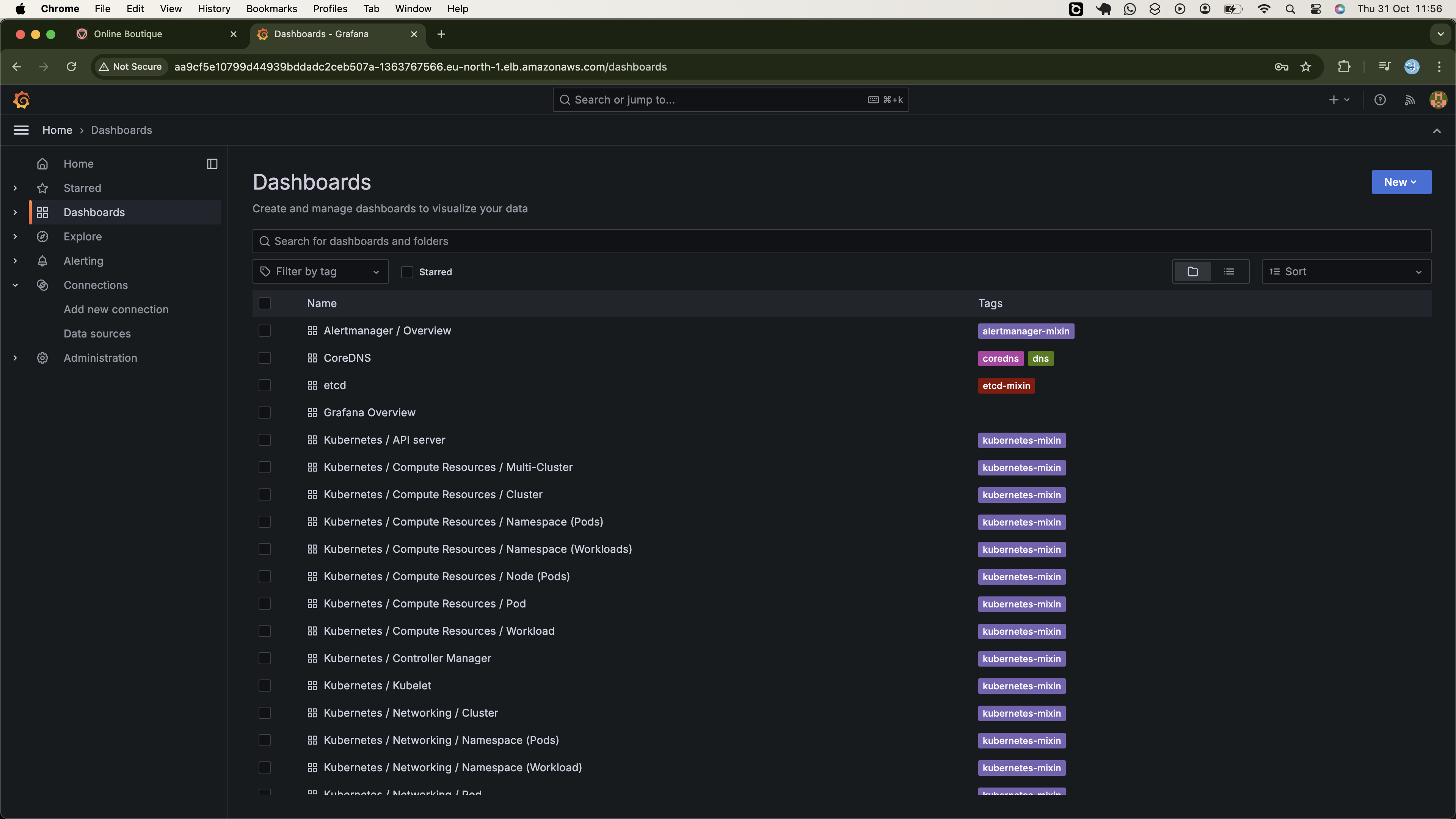

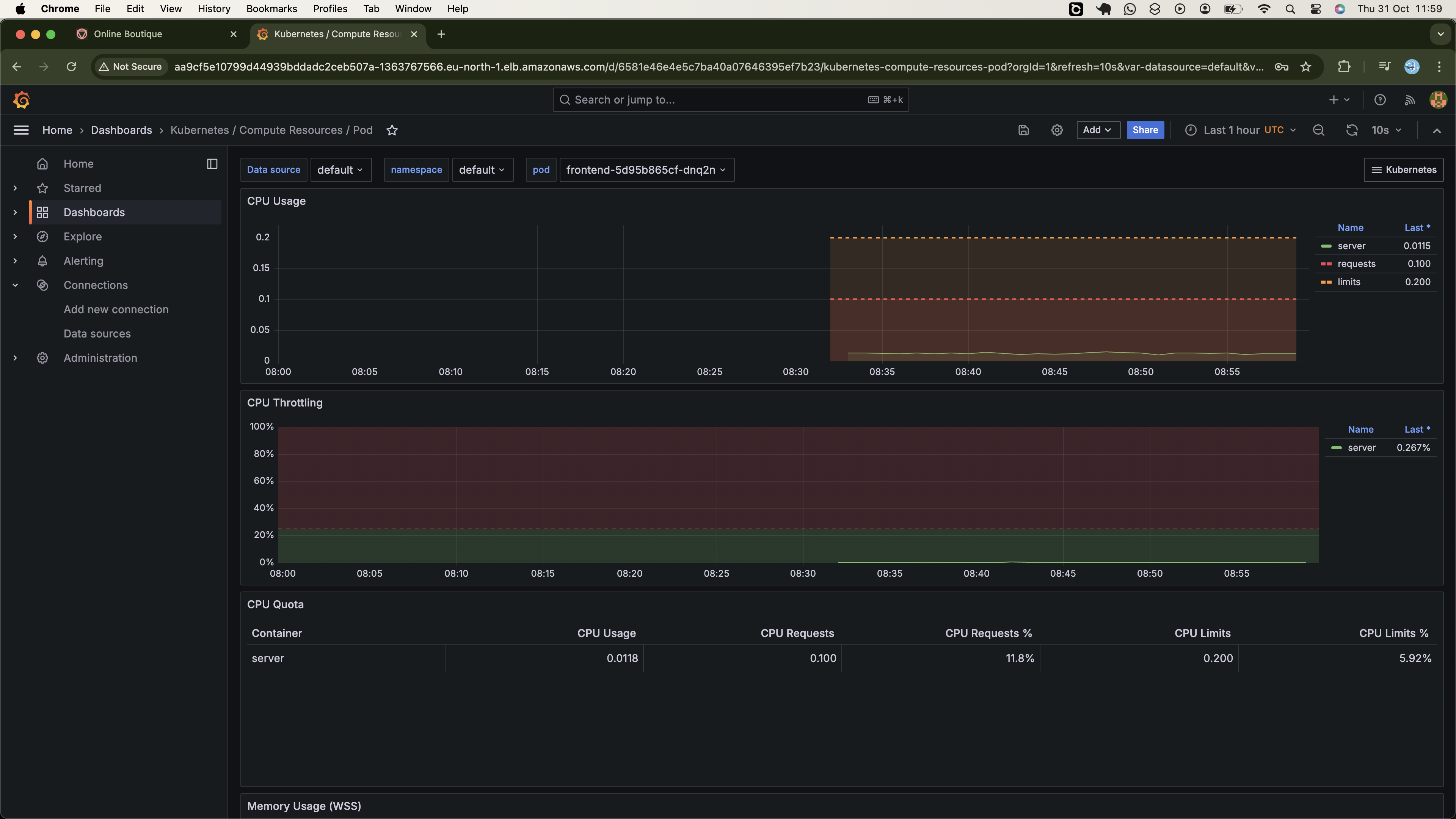

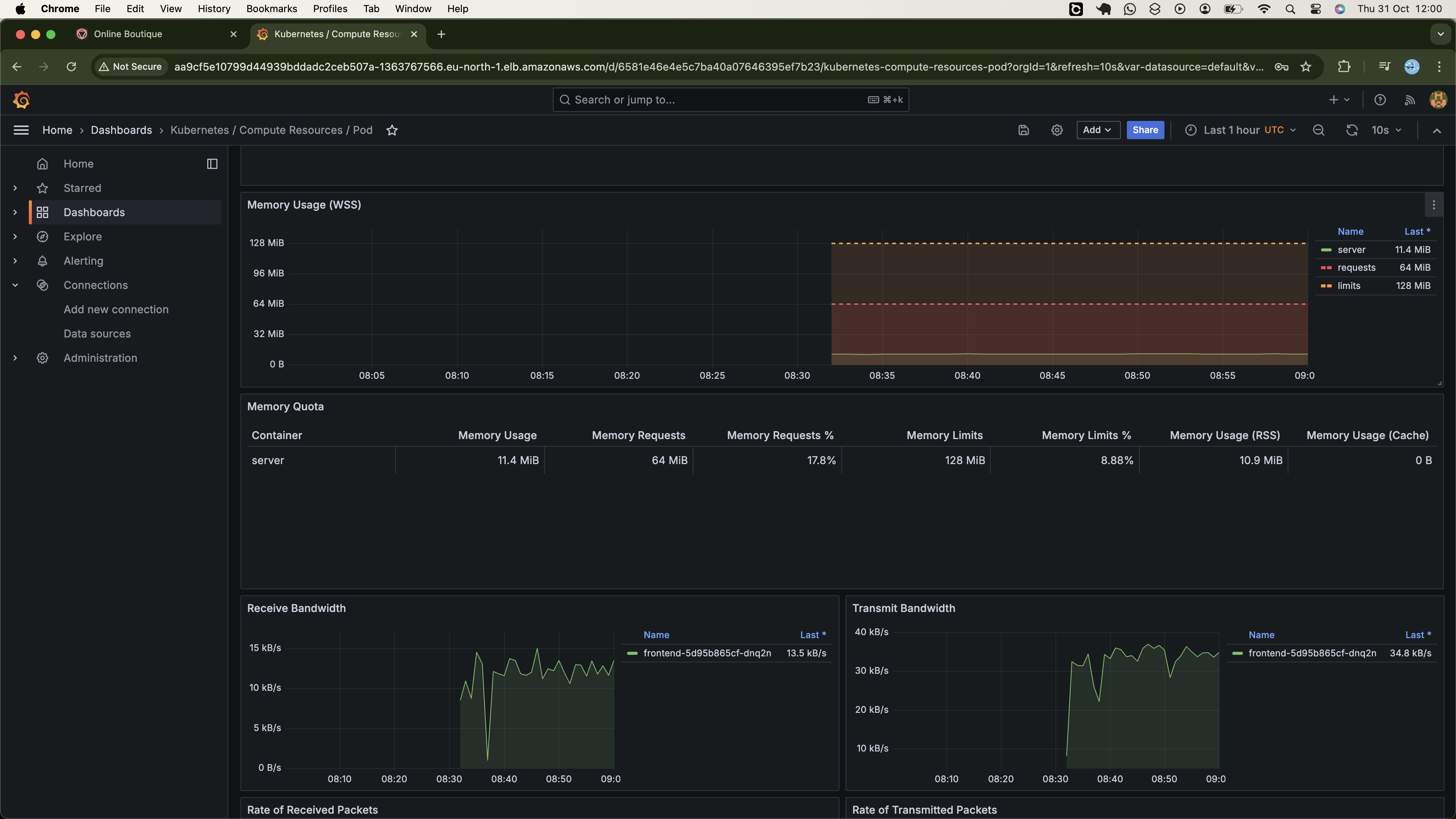

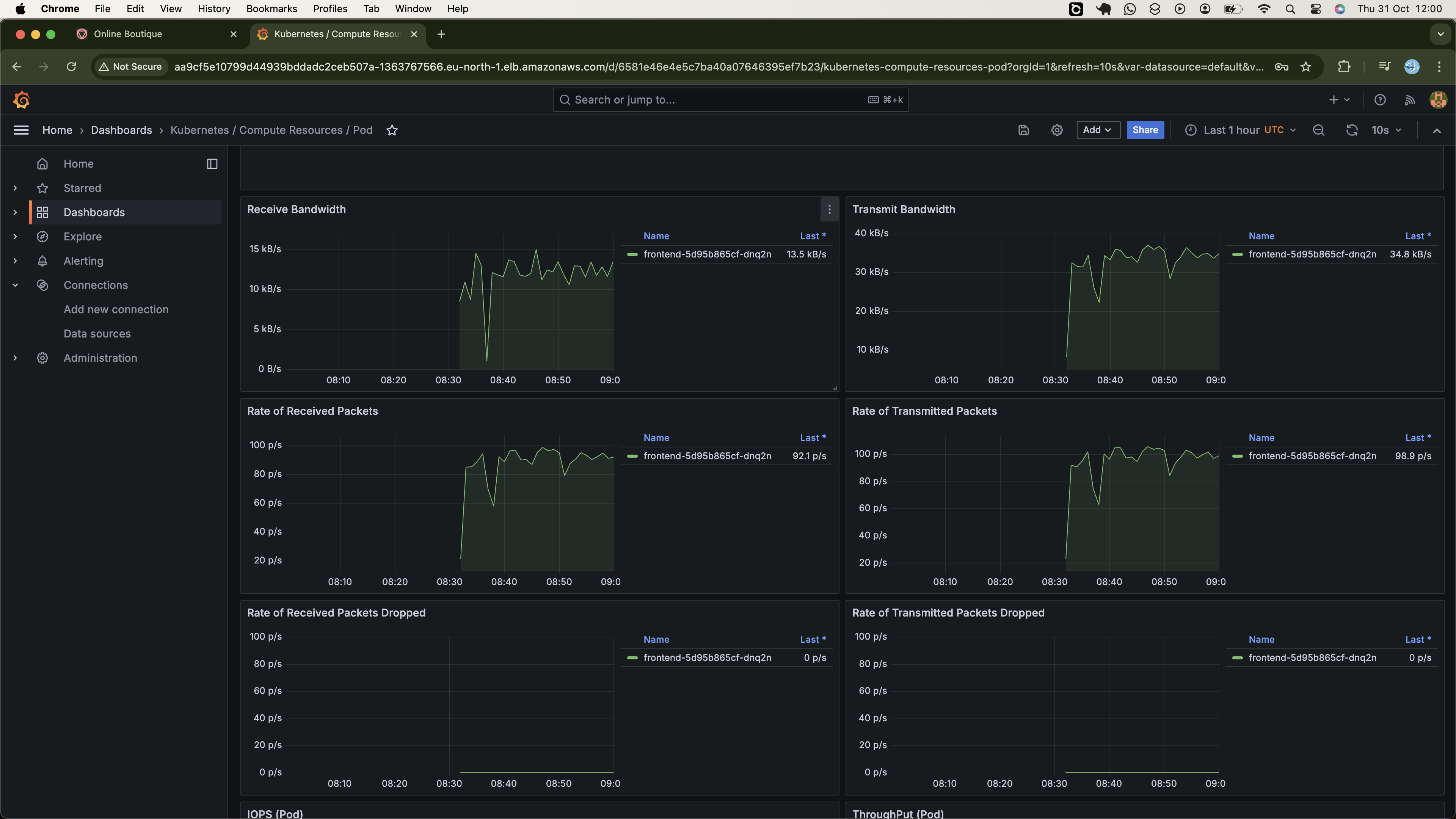

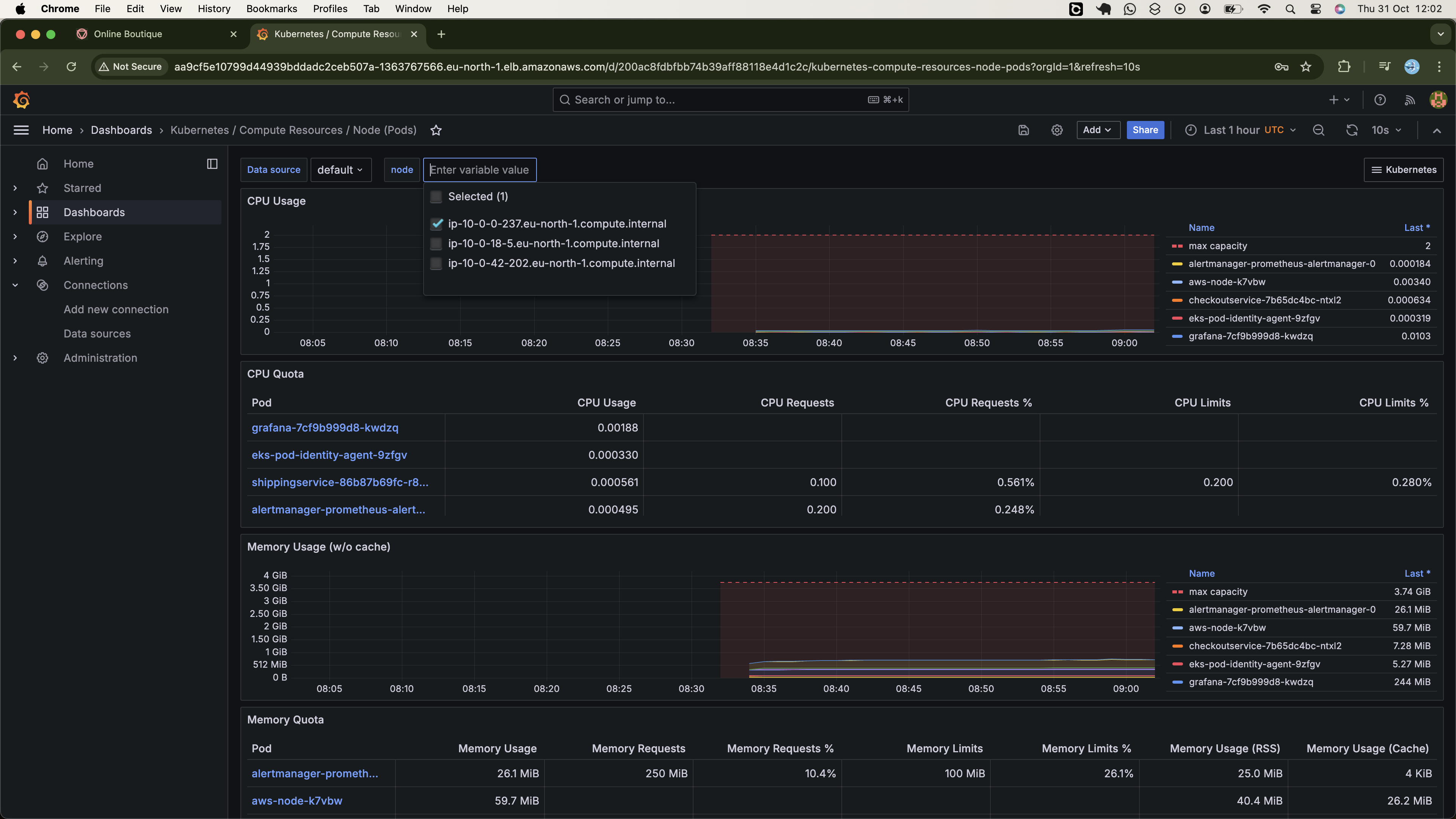

- Cluster Moniterting using Promethus and Grafana

- Clean Up

- Conclusion

Introduction

This project implements a fully automated workflow for building and deploying a monorepo-based microservices architecture. Using Jenkins and ArgoCD, we will develop an efficient CI/CD workflows to automate the building process of various services of a sample ecommerce microservices architecutre consisting of 12 services, and deploying them to a resilient, scalable Kubernetes cluster, that we will provision using Terraform.

To ensure secure access to our application, we will expose the frontend service via an Application load balancer (ALB) protected with SSL/TLS encryption to safeguard sensitive user data. Additionally, to provide visibility into our Kubernetes cluster's performance, we will integrate a monitoring solution using Prometheus and Grafana to track metrics and promptly identify issues.

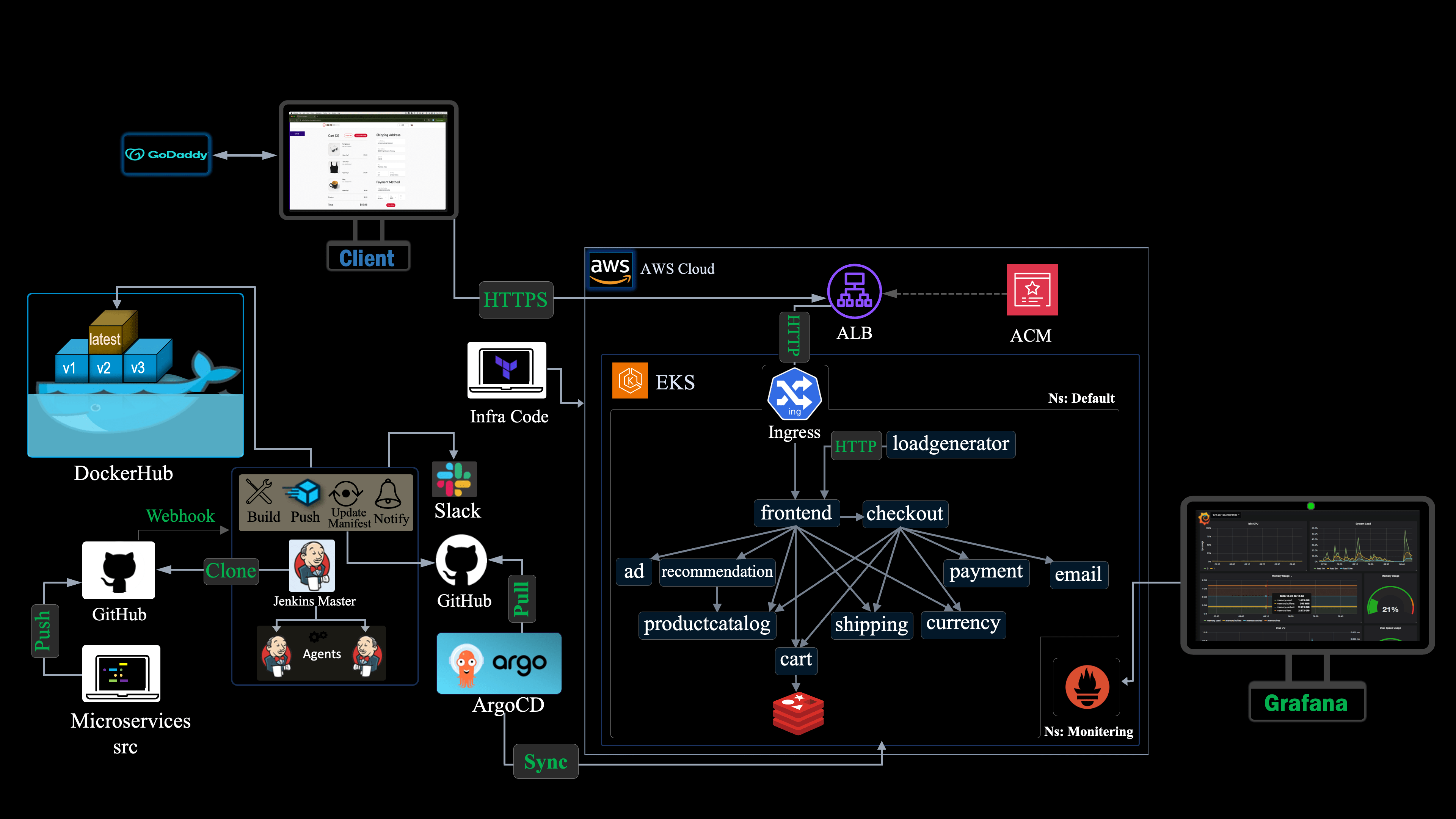

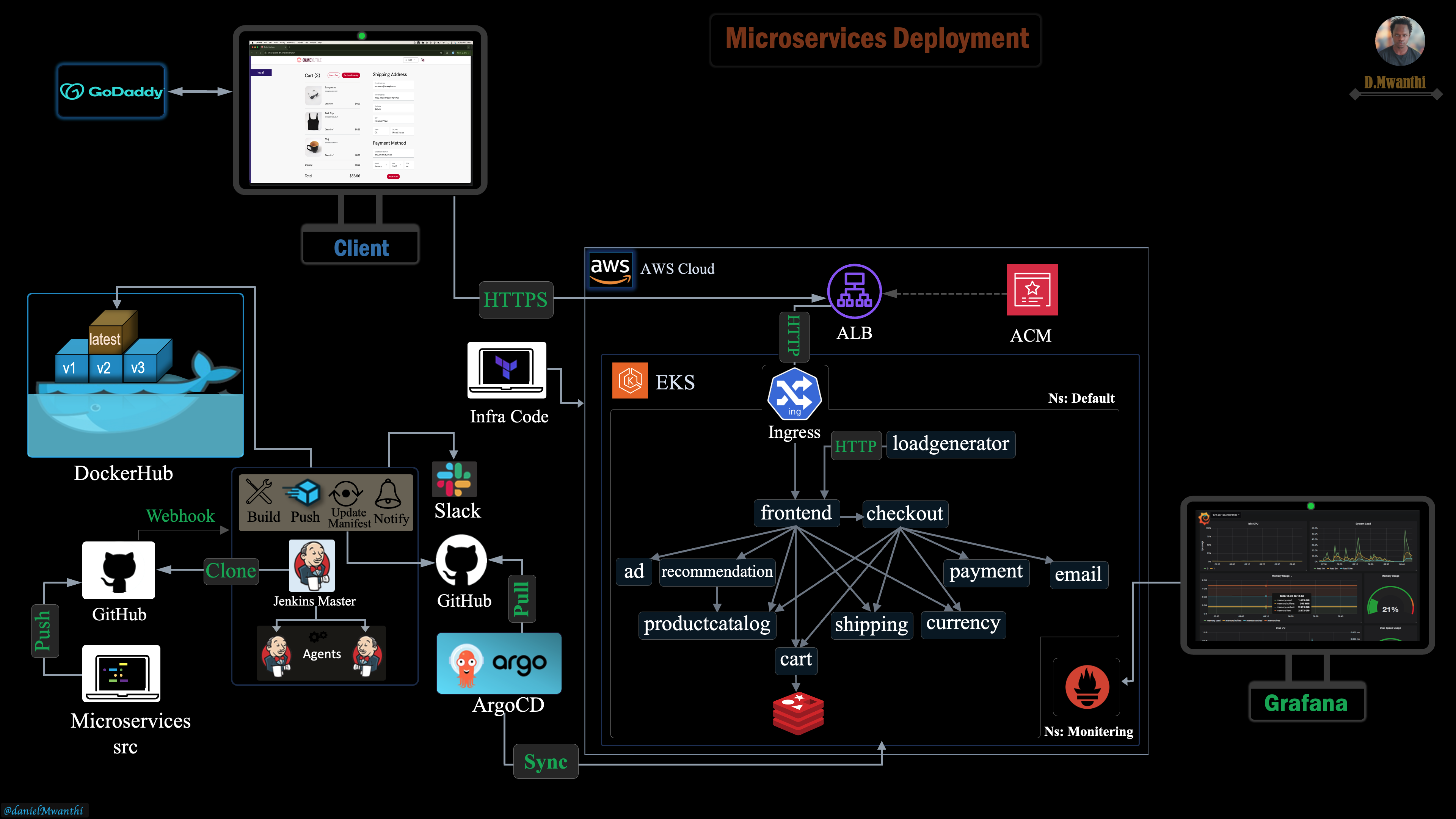

Below is the detailed architecture that we will implement from scratch:

Execution Plan

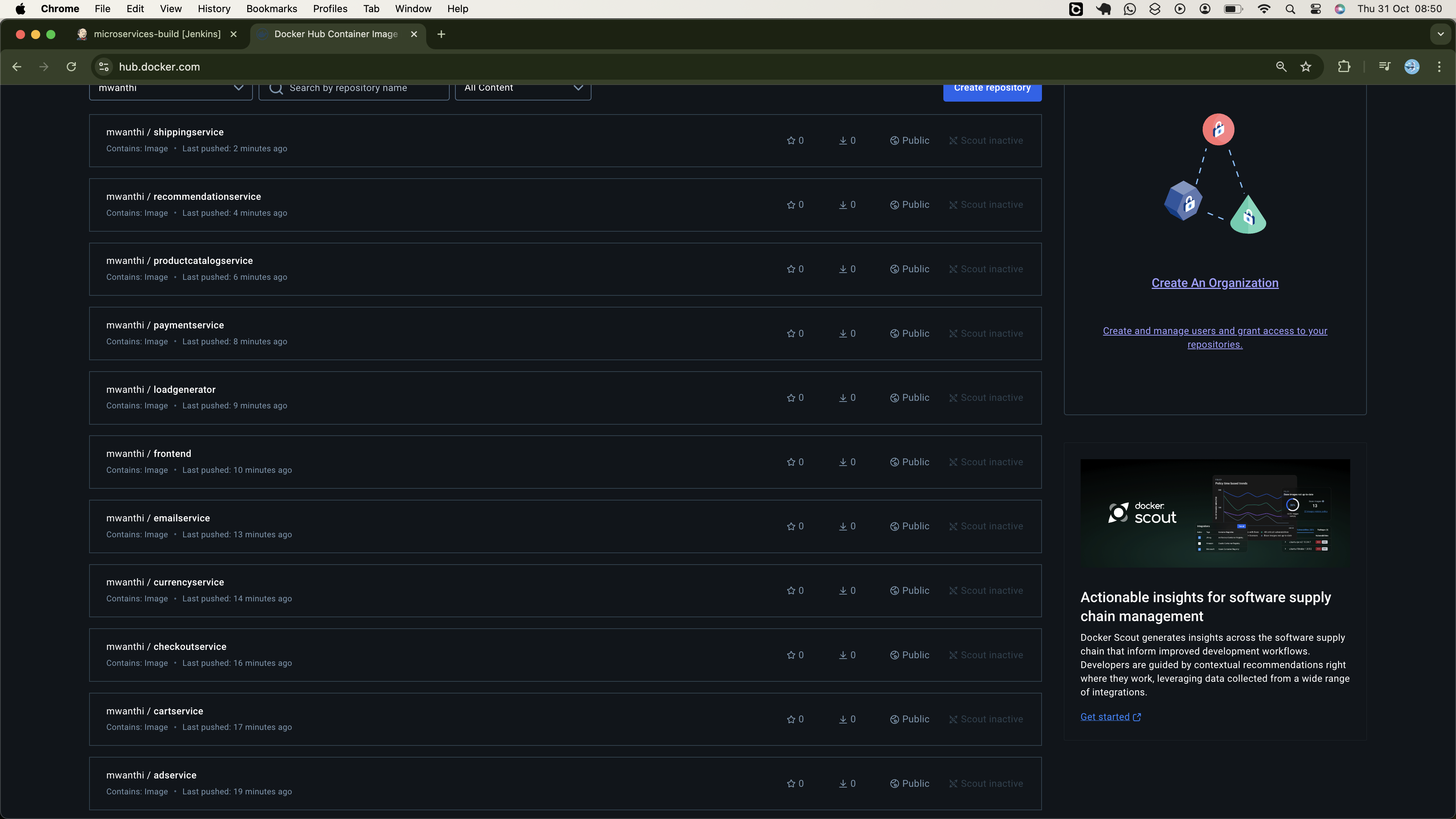

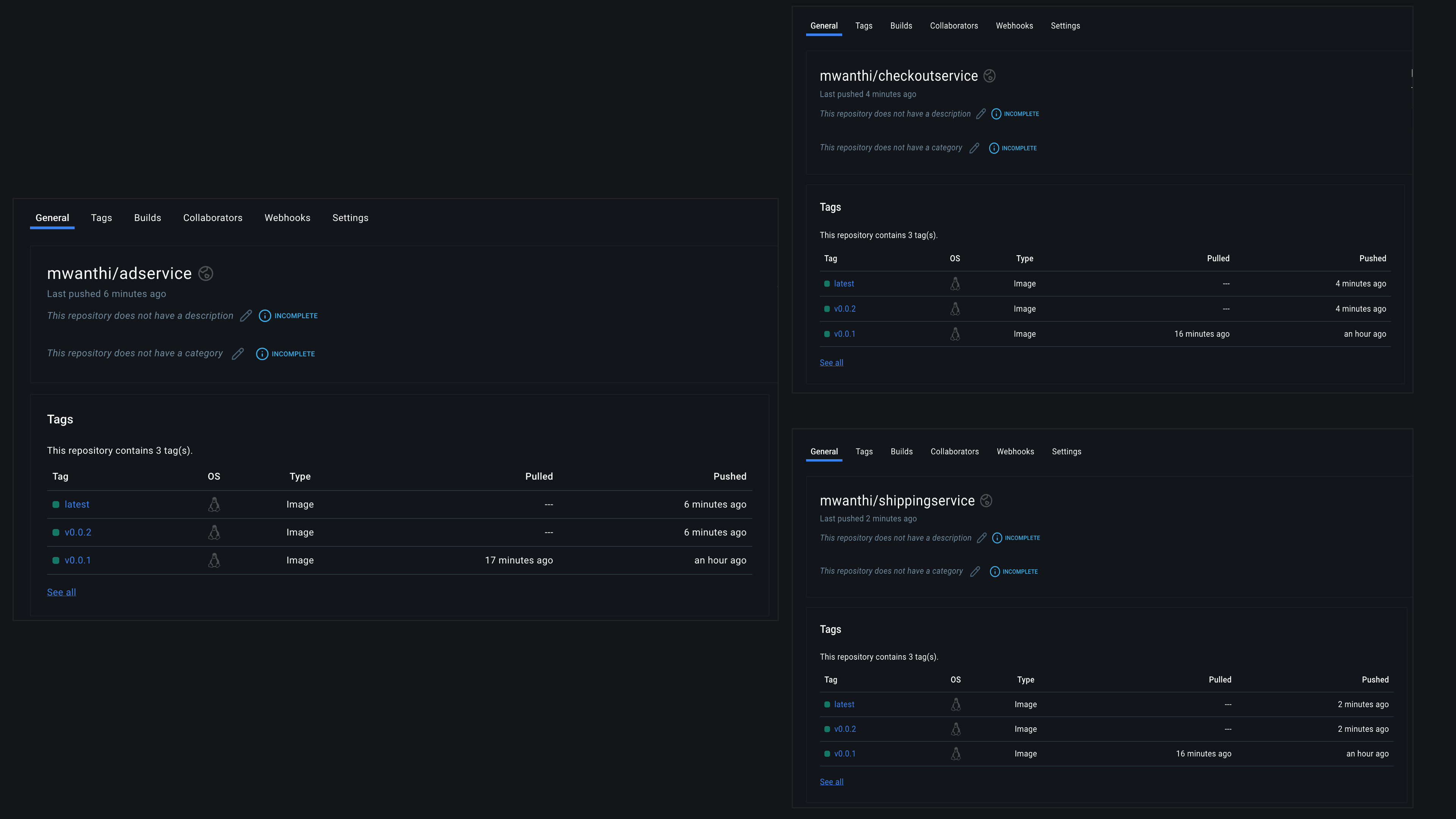

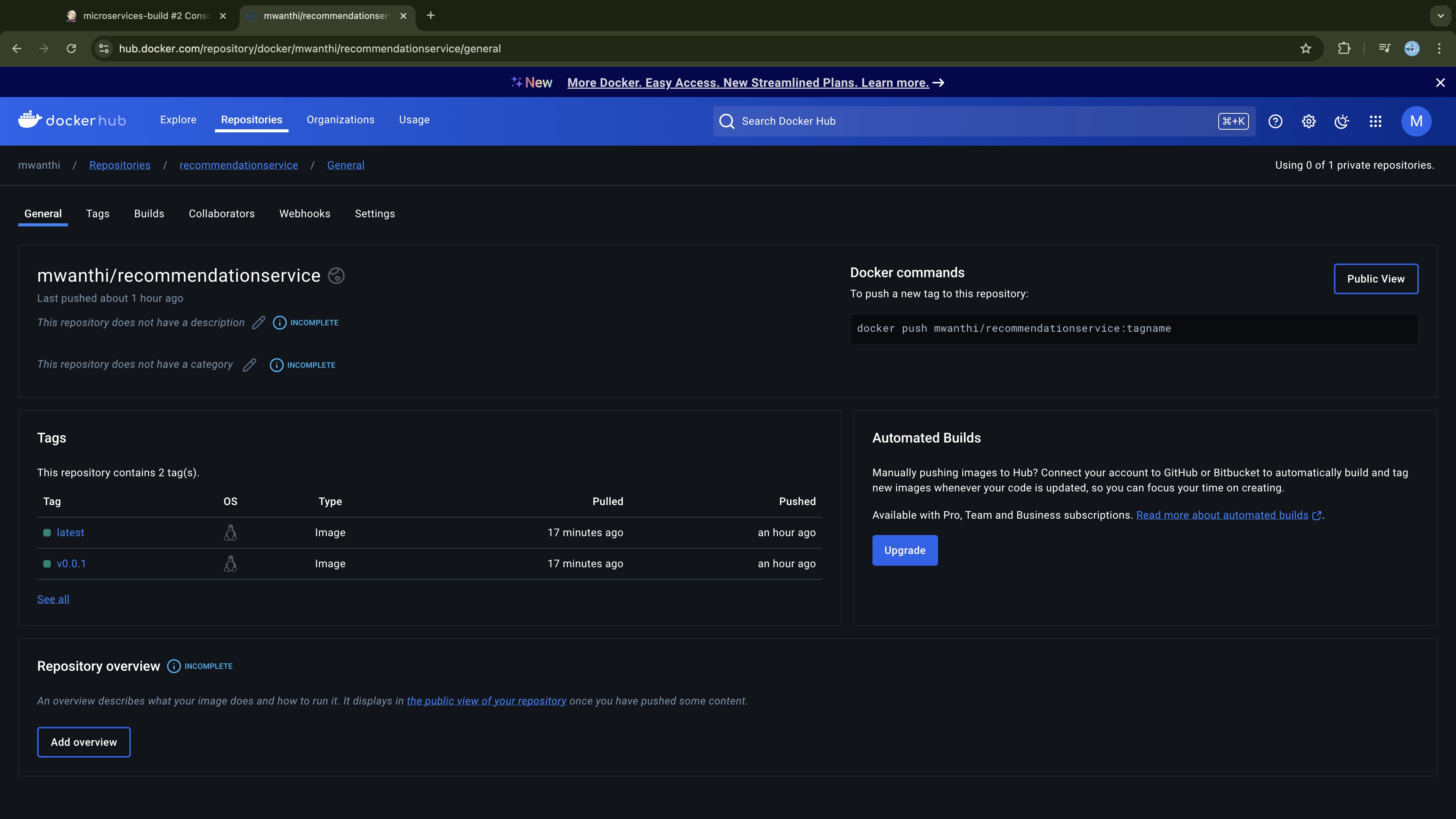

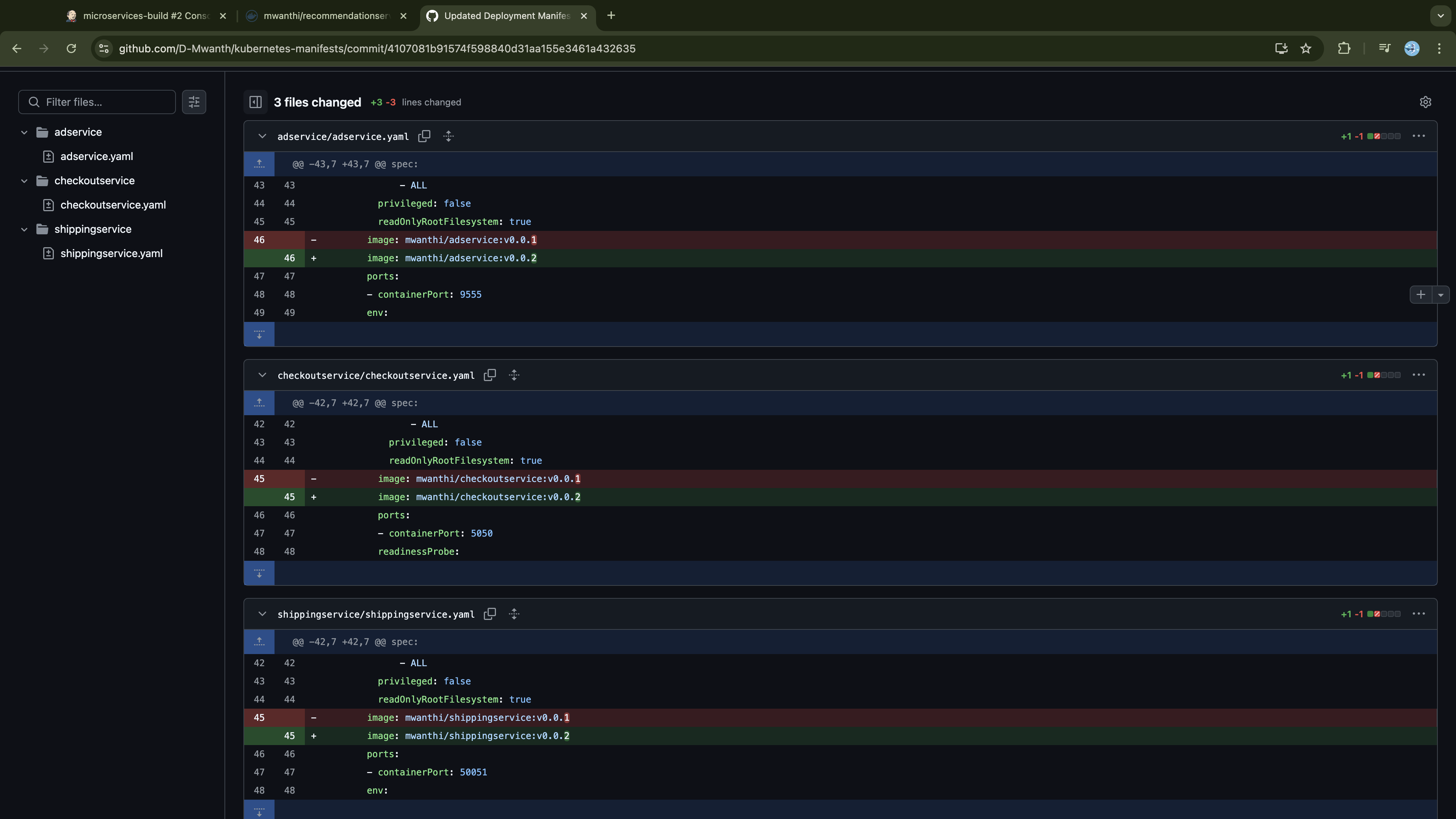

We will begin by provisioning a Kubernetes cluster on AWS using Amazon EKS within a custom VPC, utilizing Terraform for infrastructure management. Once the cluster is provisioned, we will implement a declarative Jenkins Continuous Integration (CI) pipeline. When executed, this pipeline will first checkout the microservices' source code and Kubernetes deployment manifests into separate repositories within the Jenkins workspace. From within the Jenkins server, it (pipeline) will build the container images for the targeted services, apply appropriate versioning tags to build images and update respective deployment manifests to reflect these tags. Finally, the pipeline will push the Docker images to DockerHub and the updated Kubernetes manifests to GitHub, ensuring consistent versioning and readiness for deployment. As a post-run job, the pipeline will send an alert to the designated Slack channel, notifying the team of the build outcome (Success or Failure) ensuring everyone stays updated for quick issues response.

Since all services reside in a mono repo, to optimize pipeline efficiency, the first run (Build no. 1) will build all services of our microservices architecture. For subsequent builds, the pipeline will detect and build only modified or newly added services since the last build, avoiding unnecessary builds of unchanged services. We’ll also configure a GitHub webhook to automatically trigger Jenkins Pipeline with each new repository update, removing the need for manual builds and ensuring timely execution.

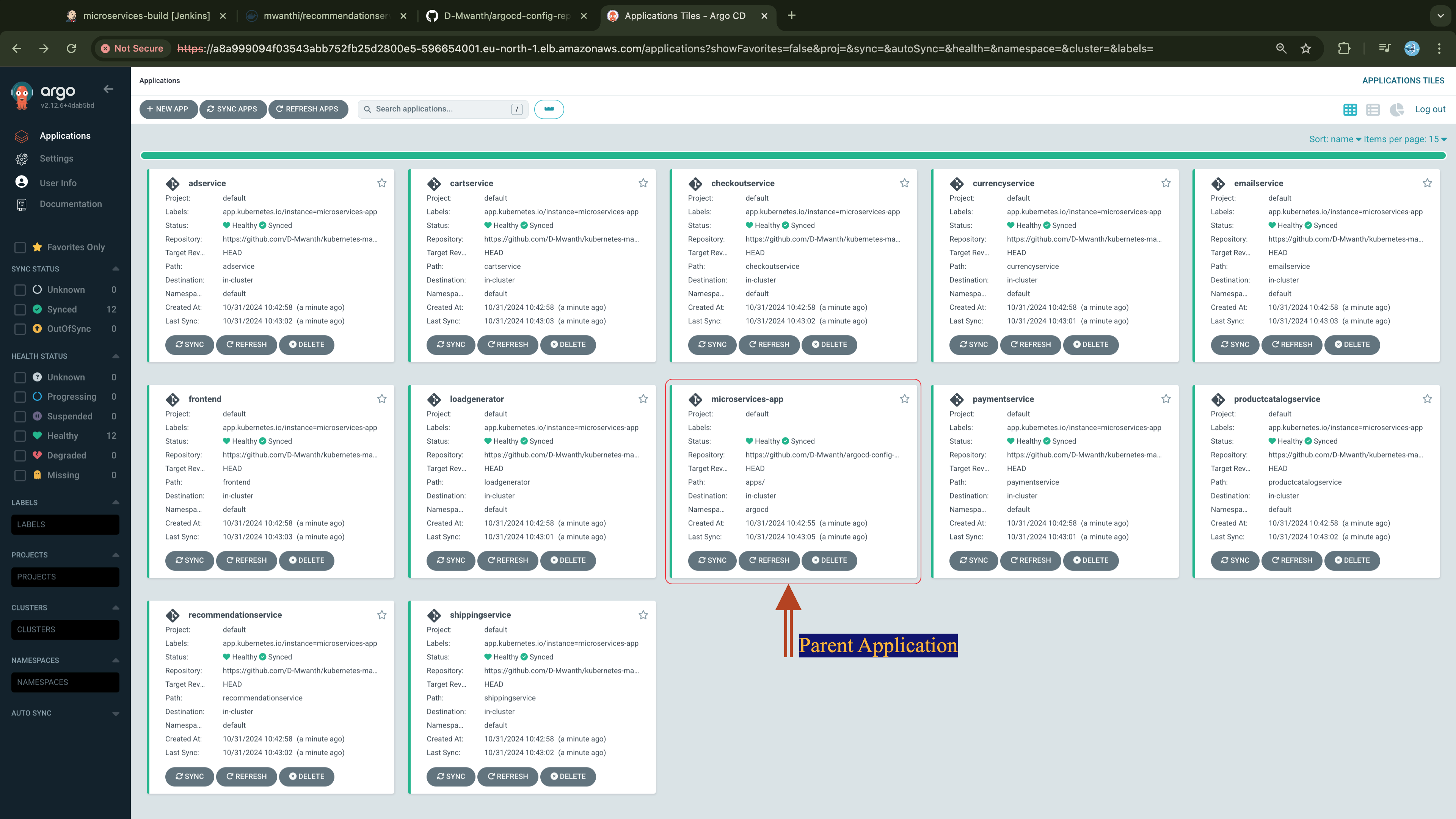

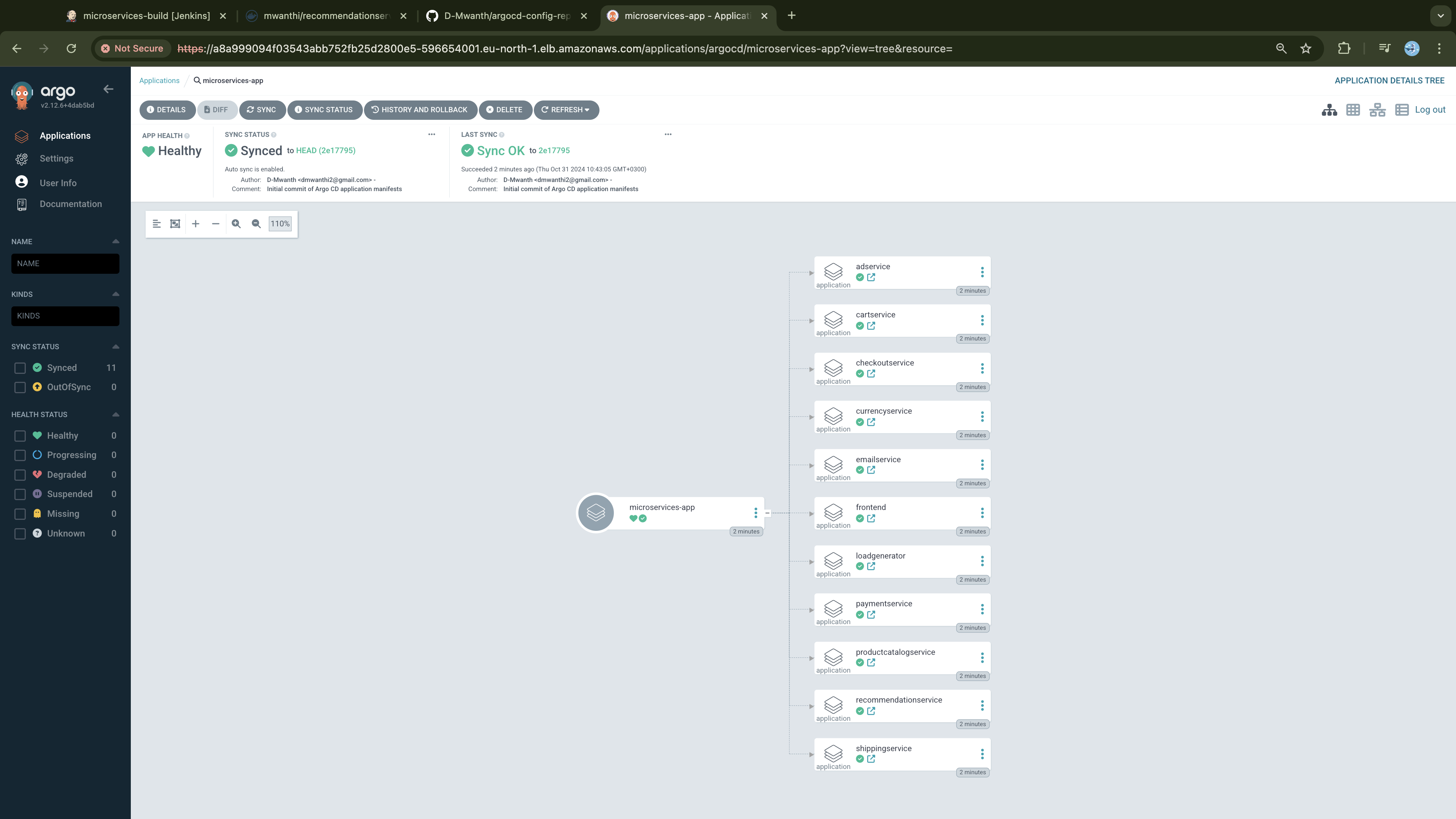

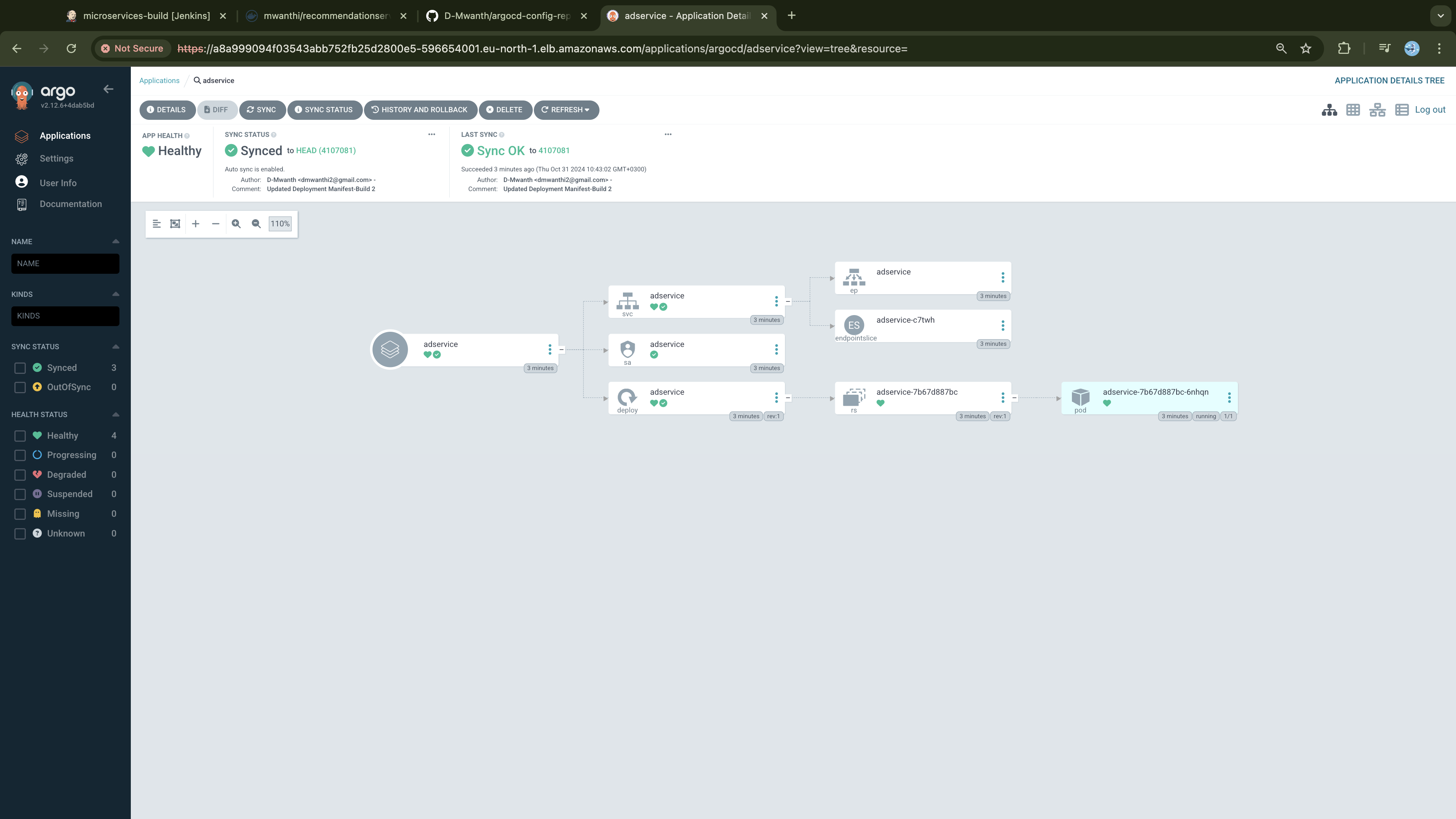

With the microservices built, container images pushed to DockerHub, and updated deployment manifest pushed to Github we will create a Continuous Delivery workflow following GitOps practices with ArgoCD. Adopting the App-of-Apps pattern, we will create an ArgoCD application (parent app) that manages 12 other applications (child apps), each responsible for deploying and managing one of the microservices we have in our project, we will detail this later.

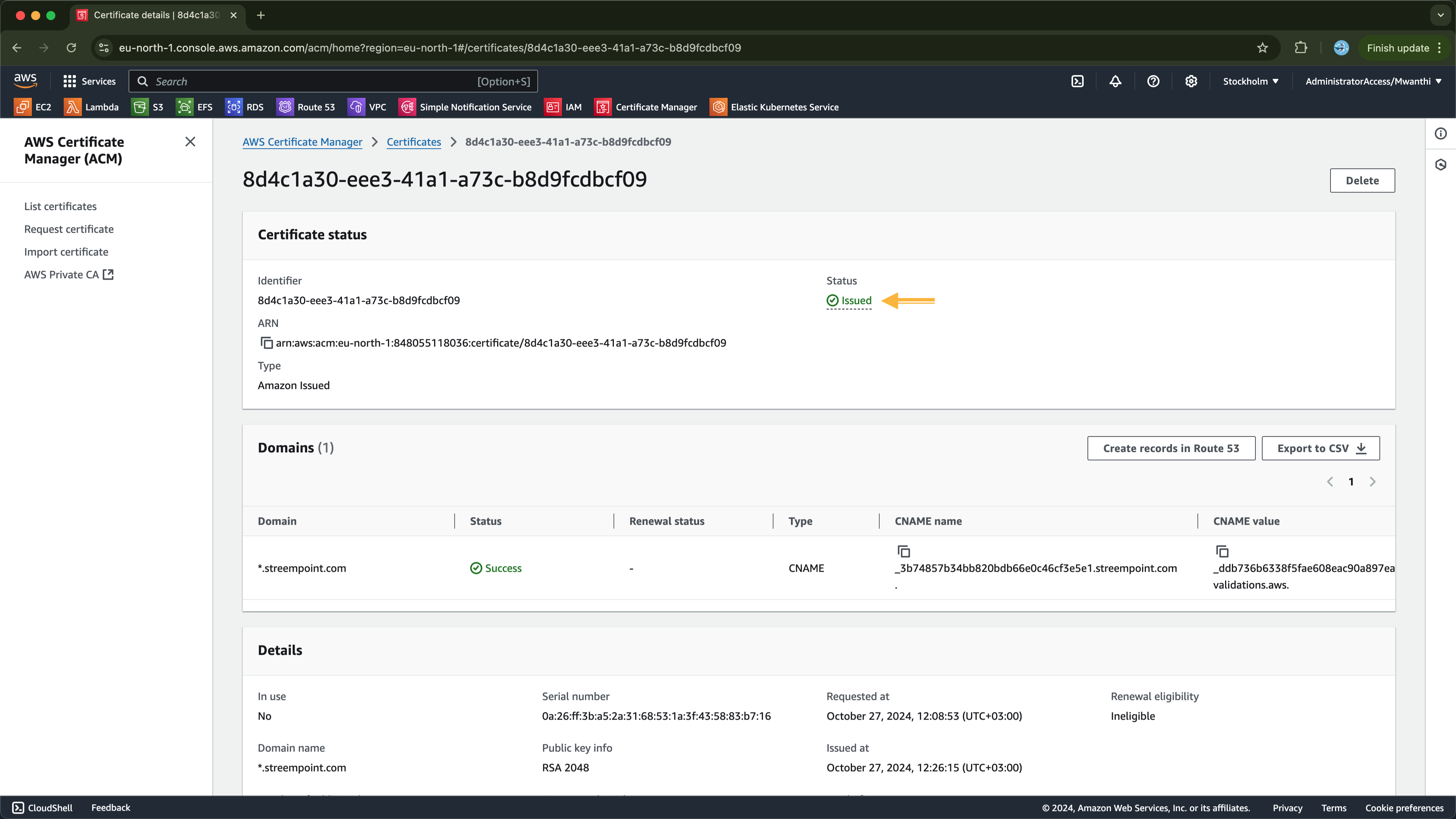

To securely expose our microservices to the internet, we will create an ALB Ingress resource on our Kubernetes cluster using the AWS Load Balancer Controller. This will provision an Application Load Balancer (ALB) on AWS, configured for secure SSL/TLS encryption with AWS Certificate Manager (ACM) provided through the Ingress annotations. After setting up the ALB, we will configure a CNAME record on GoDaddy to map our Ingress host to the ALB's DNS name, ensuring users can access our application through a custom and user-friendly URL.

Here’s a refined version of your statement for clarity and engagement:

Finally, we’ll explore how to monitor our cluster using Prometheus and Grafana.

What You'll Achieve

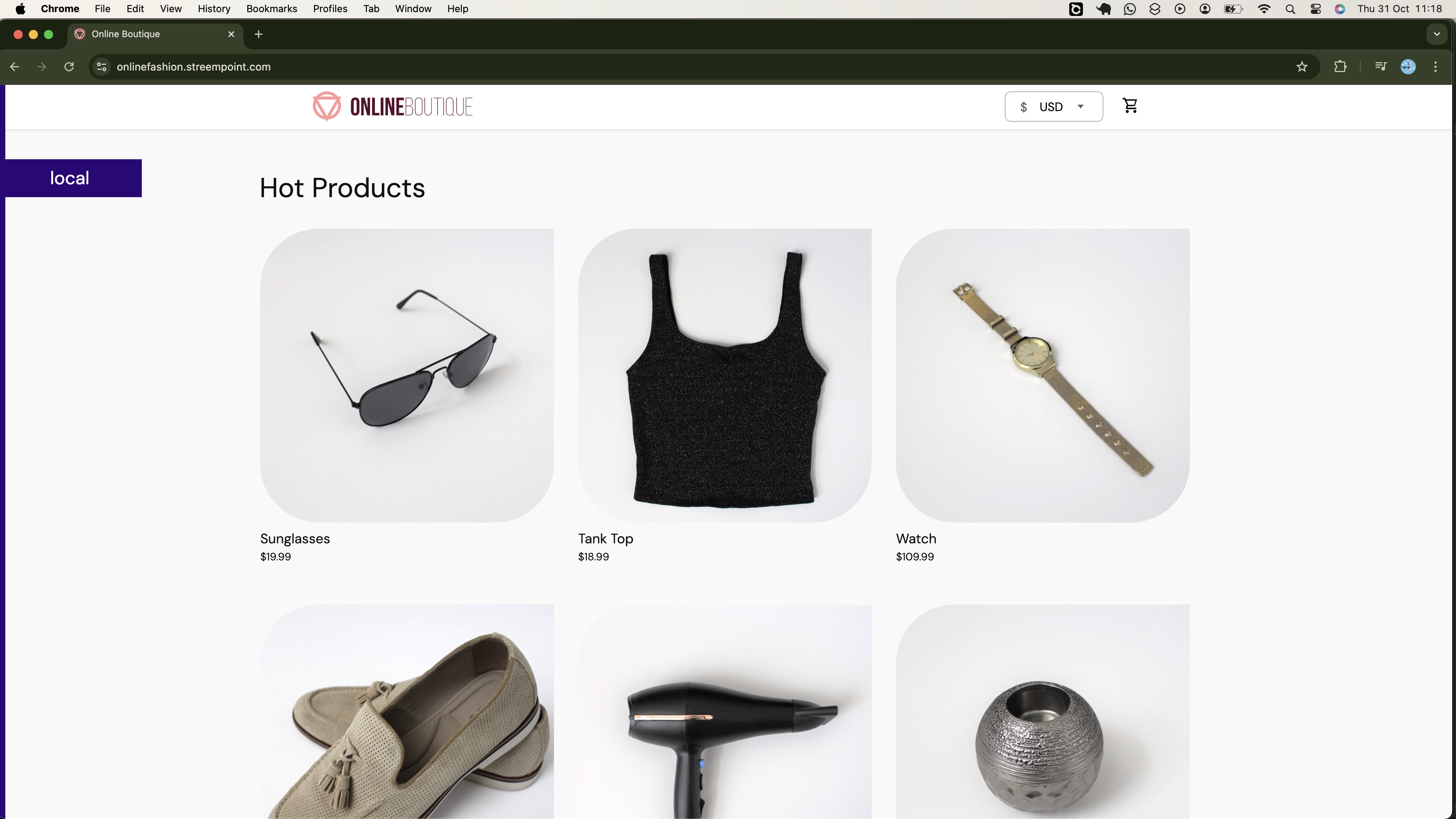

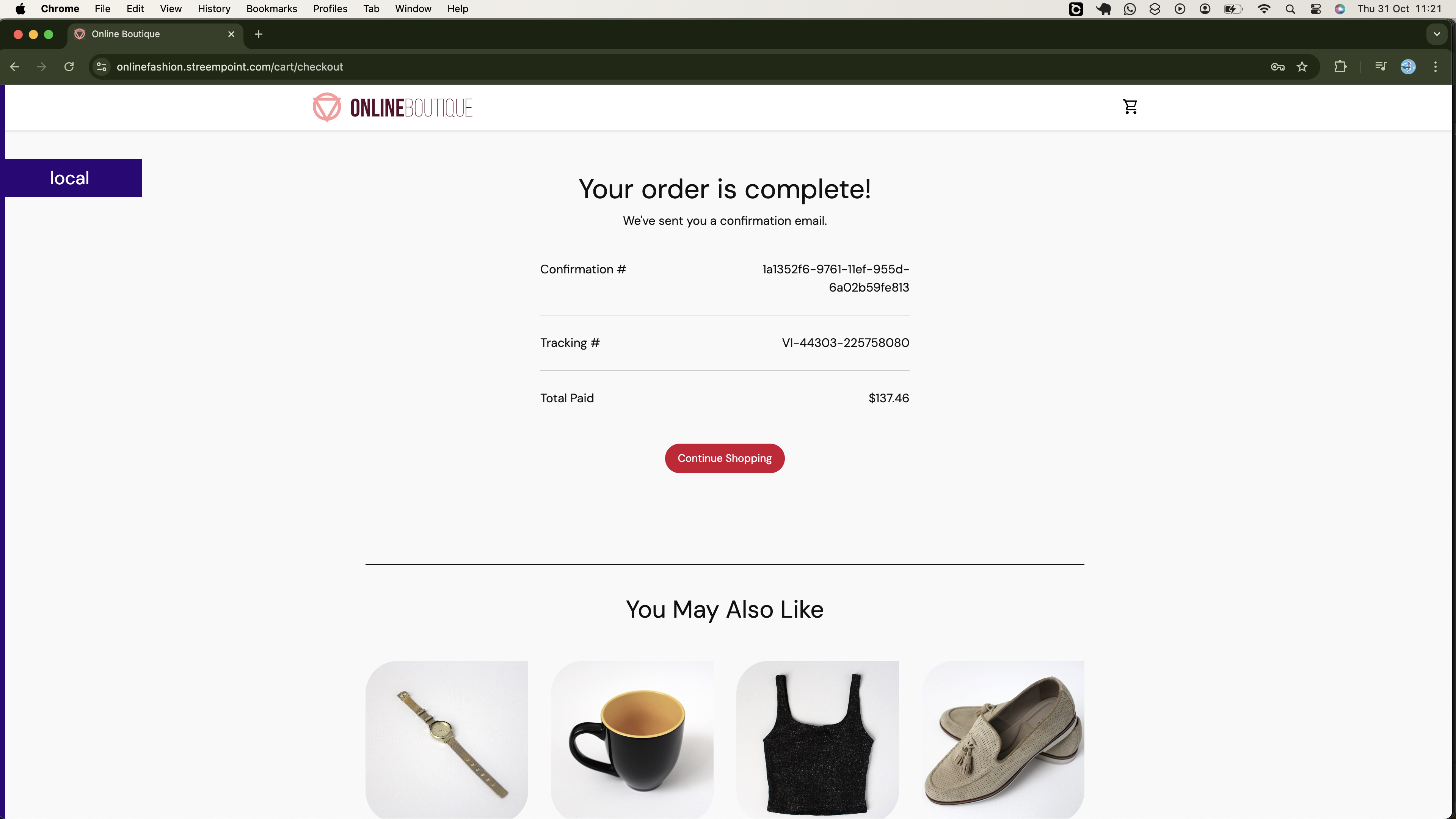

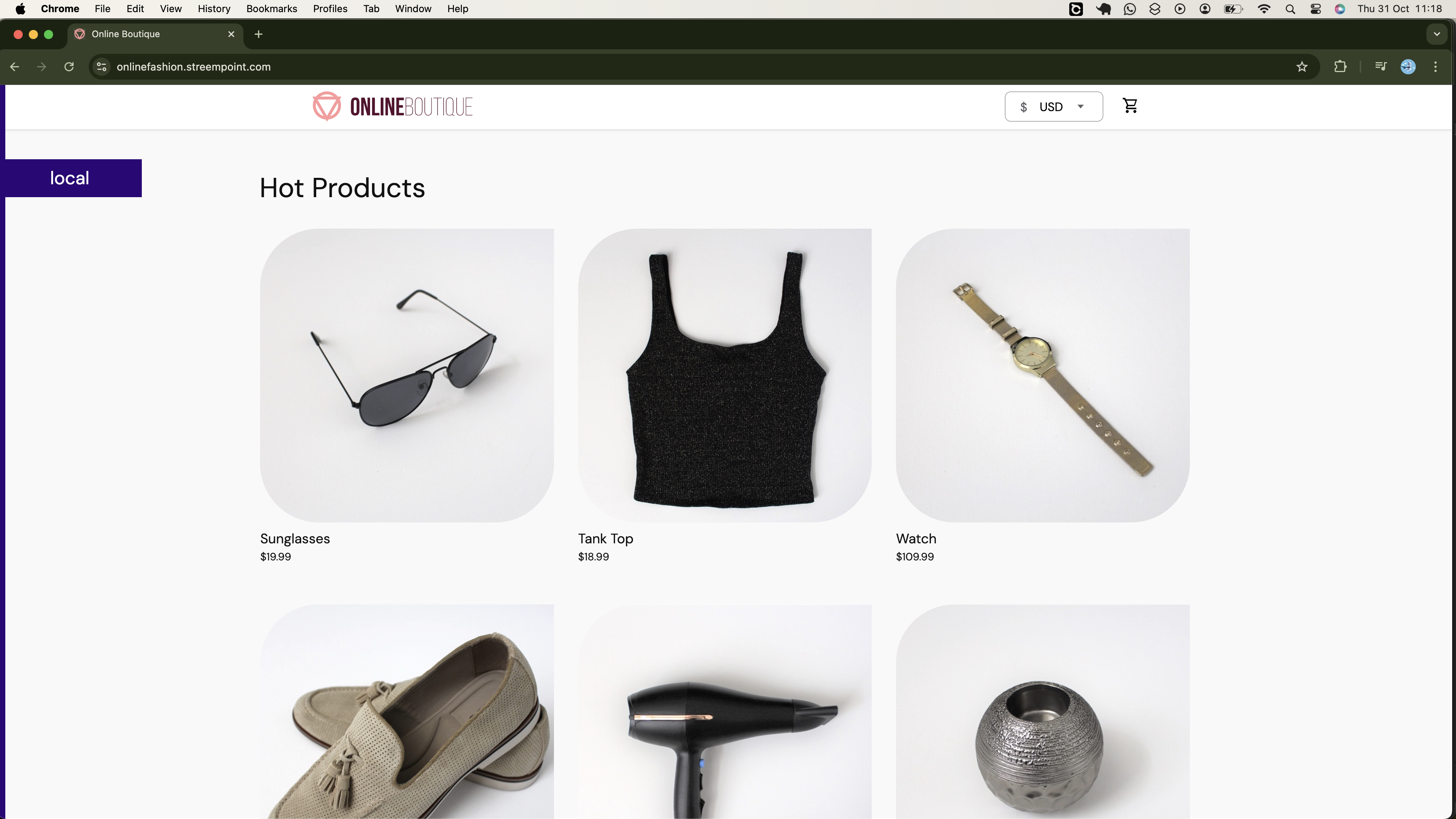

By the end of this project, you will be able to access your onlineboutique application running on remote infrastructure over the internet, as demonstrated below:

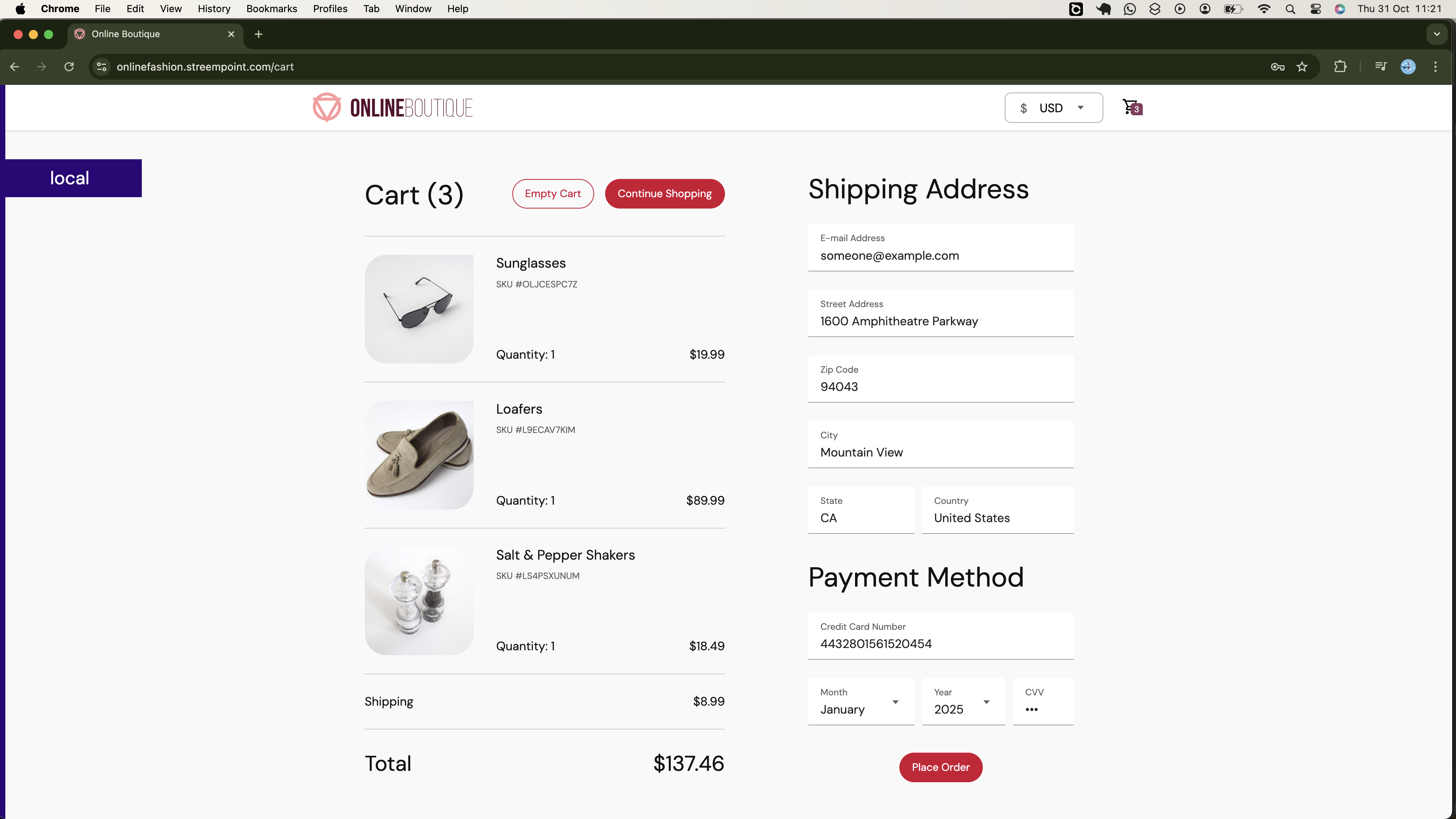

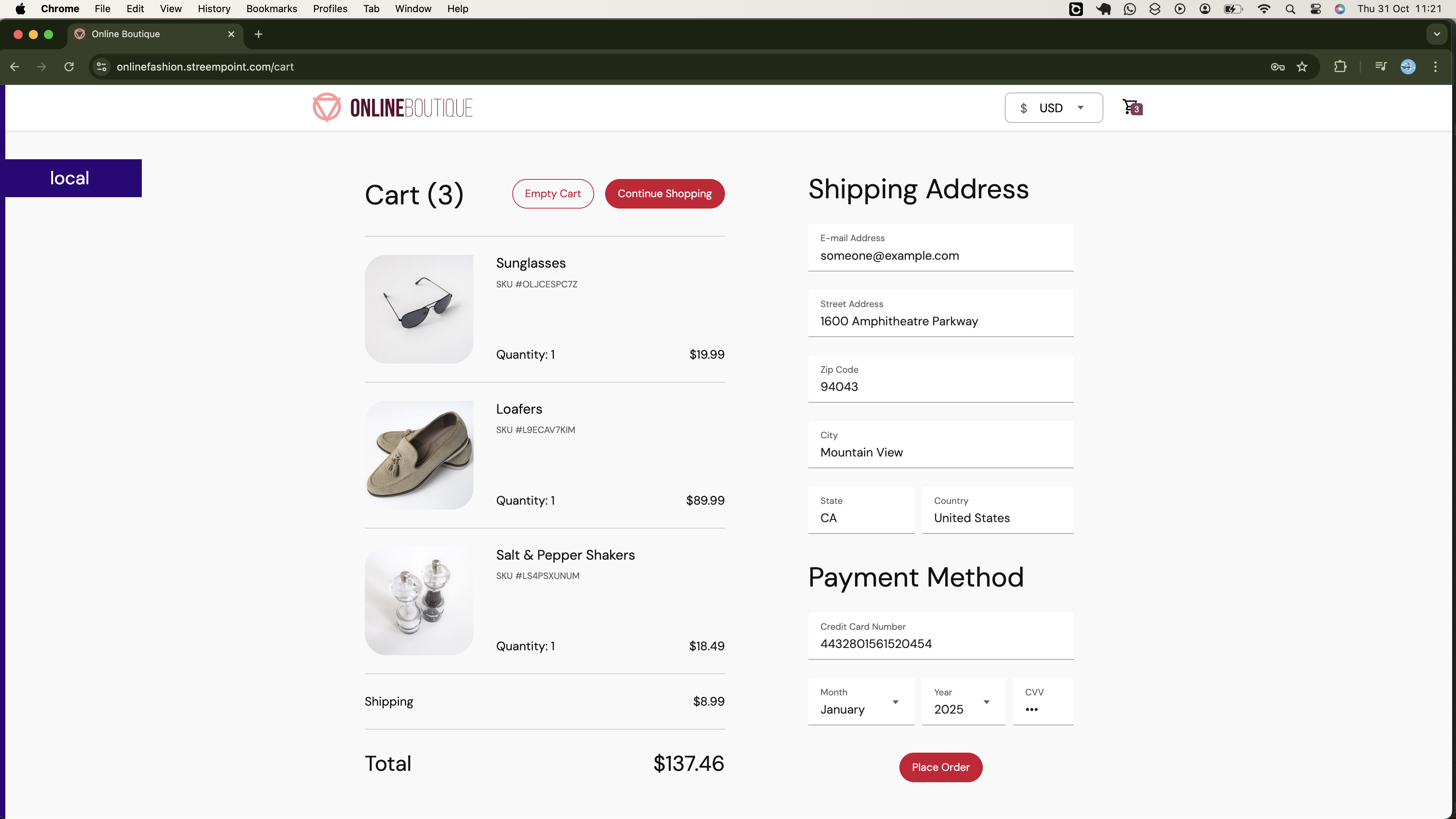

You will also have the capability to perform actions such as adding fashion products you are interested into the cart, as illustrated below:

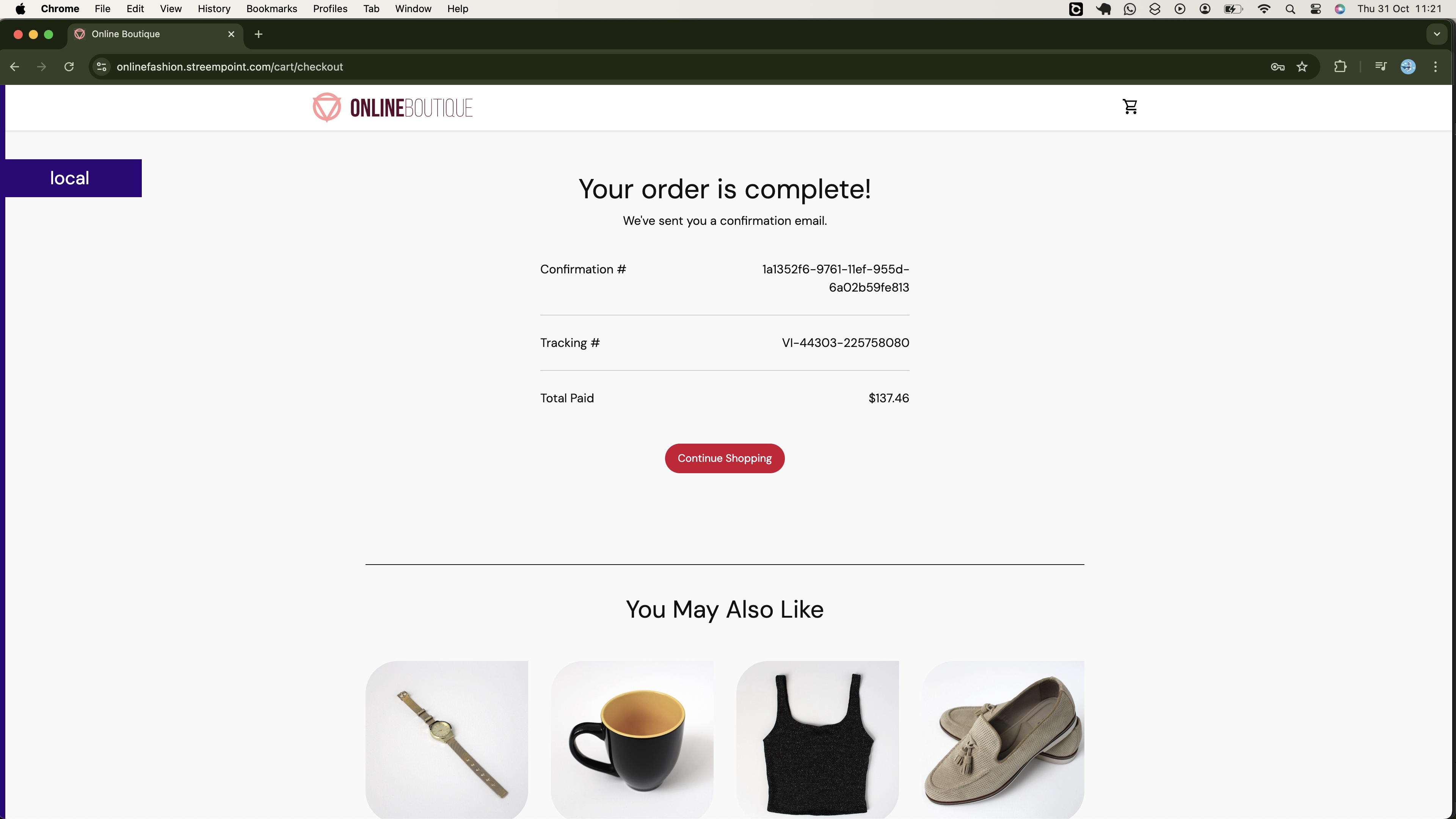

Also, you will be able to proceed and place your order, as demonstrated below:

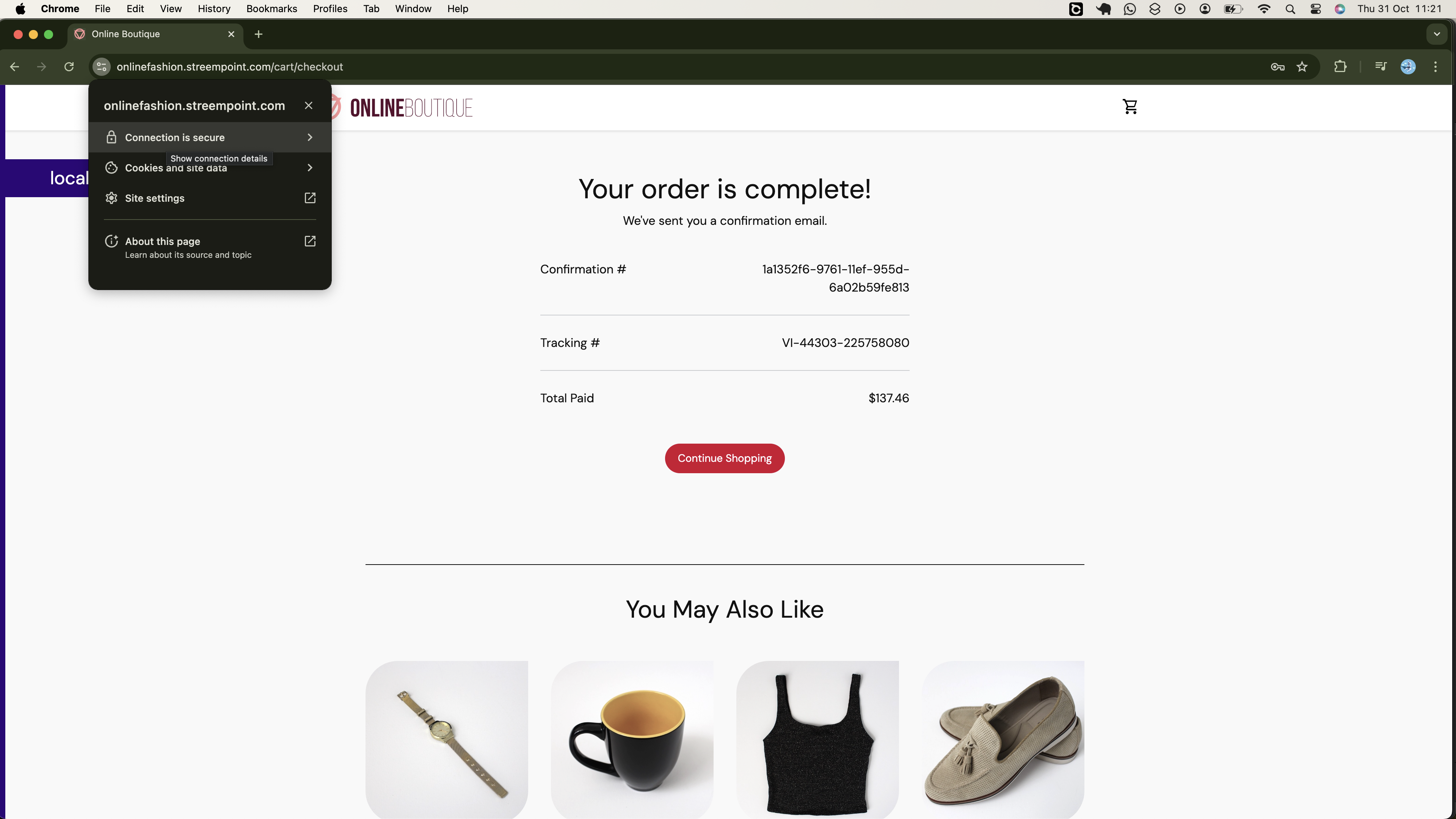

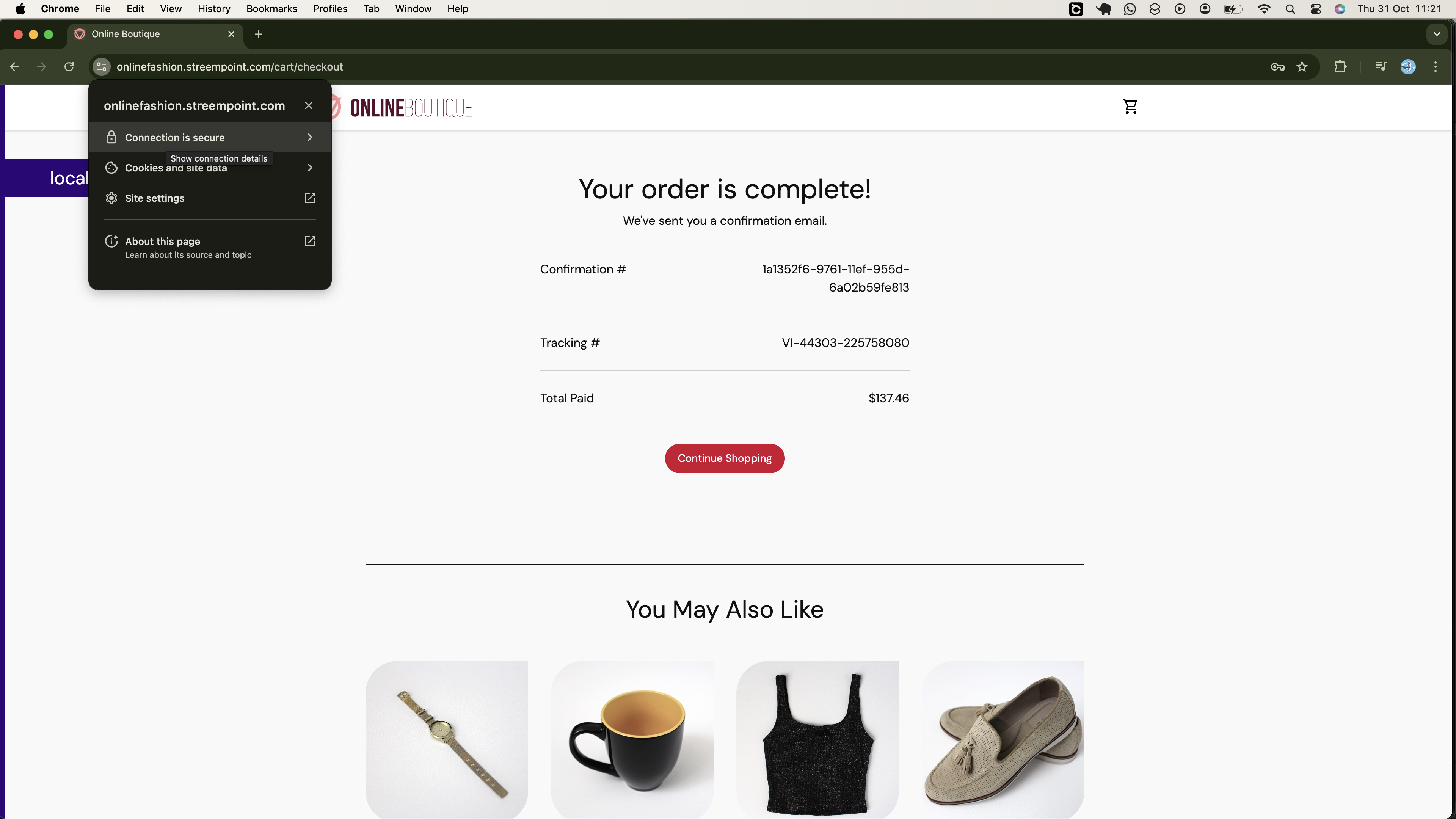

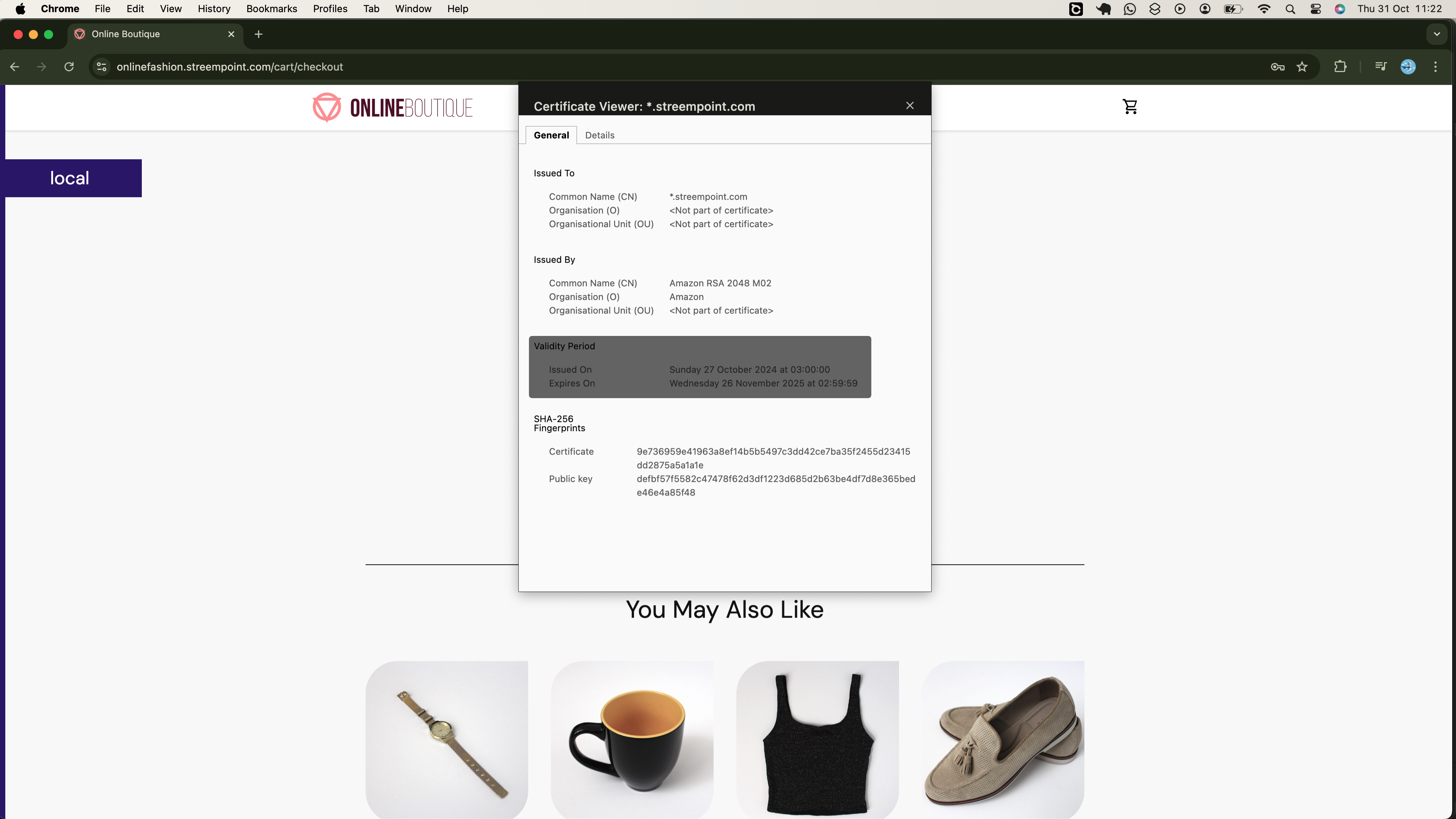

Additionally, as shown below, your connection to the onlinebotique app will be secure, therefore, you should not worry about the client's data being compromised:

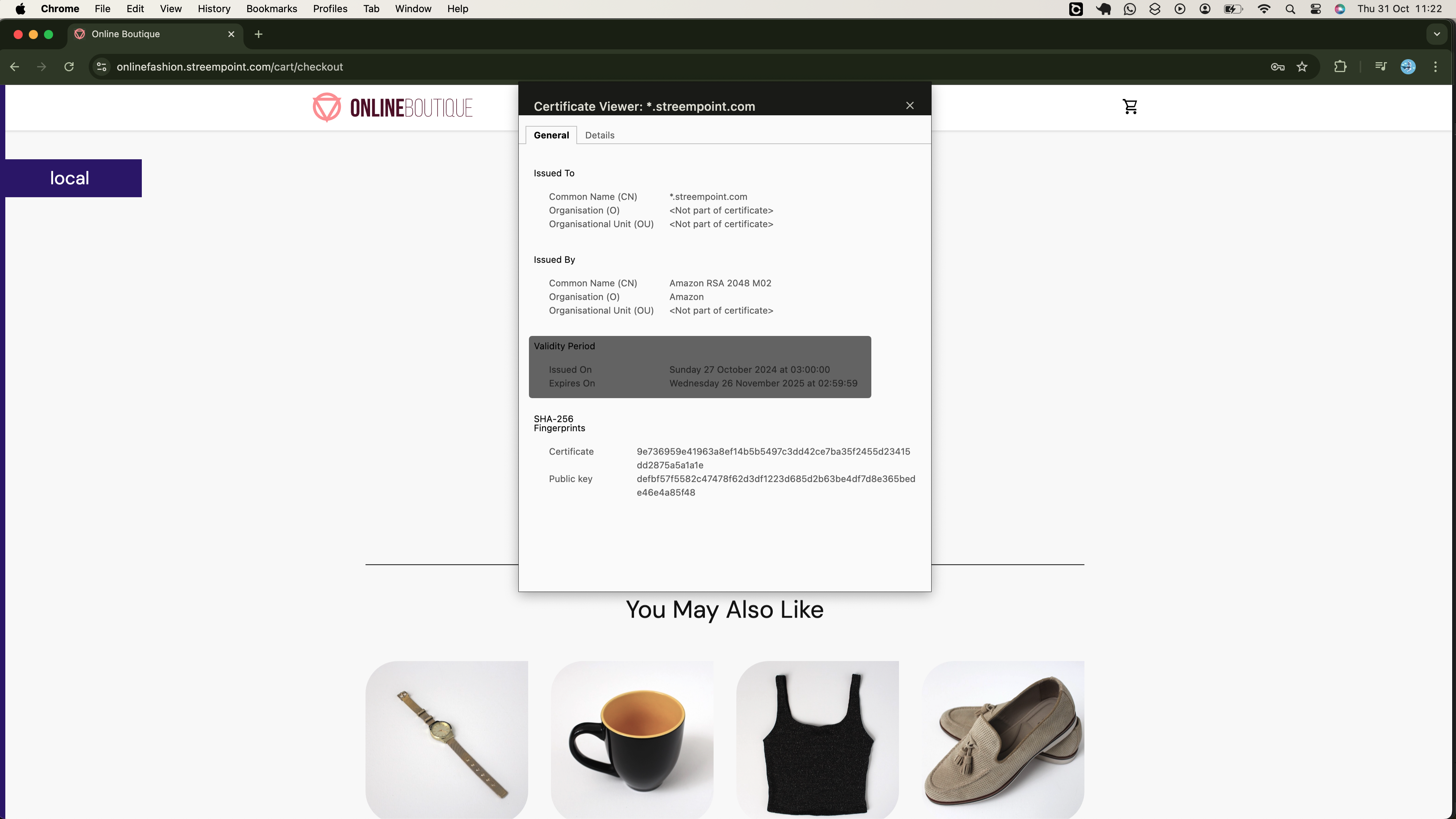

When you inspect the application, you will observe that encryption is managed using an AWS Certificate Manager (ACM) certificate, as illustrated below:

Later in the session, you will learn how to request this certificate using a custom domain.

This session will be comprehensive and packed with valuable insights, so I encourage you to stay with me until the end to fully benefit from it. Let’s dive in!

Core Tools and Techs Used

The following tools will be utilized in this project:

AWS Cloud: We will provision our infrastructure on AWS Cloud.

Terraform: Utilized to automate the infrastructure provisioning process, including a custom VPC (Virtual Private Cloud) and a scalable Kubernetes cluster (Amazon EKS).

Jenkins: Used to create a CI pipeline for building container images of the microservices, publishing them to DockerHub, and updating the Kubernetes manifests. It will also be integrated with Slack to notify us of successful or failed builds.

ArgoCD: Employed to develop a continuous delivery (CD) solution that ensures the Kubernetes cluster stays in sync with the deployment configurations hosted on GitHub. It will also be integrated with Slack to notify us of successful or failed deployments.

Prometheus: Prometheus will be deployed in the cluster to collect metrics visualized in Grafana, enhancing observability in our cluster.

Grafana: Used to visualize cluster metrics collected from Prometheus.

Slack: A messaging platform for team collaboration. We will integrate it with Jenkins for build status updates, ensuring timely action on pipeline failures.

Prerequisites

A solid understanding of the above tools is essential for effective participation. Additionally, you will need:

AWS Account: If you haven't registered yet, create a free account here. AWS offers a 12-month free tier for new users, which includes limited usage of various services. Note that AWS EKS is not included in the free tier, so there may be costs associated with this project. Be sure to review AWS's free tier offerings for more details on what is covered.

AWS CLI: The AWS CLI should be installed and configured on your local system.

Docker and Kubernetes: A basic knowledge of Docker and a solid understanding of Kubernetes are required.

Linux Environment: You should be comfortable working within a Linux environment.

To ensure we’re aligned throughout this session, let’s create a Linux EC2 instance on AWS and install all the packages required for our development purposes. Assuming you have the AWS CLI installed and configured on your local machine, we can efficiently set this up from the command line.

First, we need to create a key pair that will be attached to the instance. This will allow us to use the corresponding private key to securely SSH into the instance.

# Create a directory where we will be located as we do this initial setup

mkdir -p setup-site && cd setup-site

# Create key-pair on aws

aws ec2 create-key-pair --key-name online-fashion --region eu-north-1 --query 'KeyMaterial' --output text > online-fashion.pem

Since we will be fully operating within this instance, including creating resources in the cloud, it's essential for the instance to authenticate with the AWS APIs. While one straightforward approach would be to create an AWS user, generate access keys, and configure an AWS profile on the instance with these keys, this method is not best practice due to security concerns. Instead, we should use an instance profile for authentication, avoiding the need to create a dedicated user with long-term credentials. This approach enhances security by leveraging temporary credentials that are automatically managed by AWS. That said let's create an instance IAM profile.

First, we need an IAM role that includes the permissions required for the tasks you will perform on AWS. The instance should have permission to assume this IAM role. For this, we need a trust policy attached to the role. Run the script below to create a trust policy document:

cat <<EOF > trust-policy.json

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

EOF

Now, create an IAM role with the necessary permissions. For the time being, we will grant this role administrative privileges.

# Create IAM Role

aws iam create-role --role-name onlinefashion --assume-role-policy-document file://trust-policy.json

# Attach AdministratorAccess Policy to the Role (Not Good For Security Concerns)

aws iam attach-role-policy --role-name onlinefashion --policy-arn arn:aws:iam::aws:policy/AdministratorAccess

Now, let's create an instance profile and associate the previously created role with it.

# Create Instance Profile

aws iam create-instance-profile --instance-profile-name onlinefashion-InstanceProfile

# Add Role to Instance Profile

aws iam add-role-to-instance-profile --instance-profile-name onlinefashion-InstanceProfile --role-name onlinefashion

Also, we need a security group that allows us to SSH into the instance (open port 22).

# Create security

SG_ID=$(aws ec2 create-security-group \

--group-name onlinefashion-sg \

--description "Security group for SSH access" \

--query 'GroupId' \

--output text)

# Allow inbound SSH access (port 22) from any IP (0.0.0.0/0)

aws ec2 authorize-security-group-ingress \

--group-id $SG_ID \

--protocol tcp \

--port 22 \

--cidr 0.0.0.0/0

Now, let's launch an EC2 instance:

# Ensure the image id and instance type exists in the region you create the instance

# Also ensure SG_ID env stores the referenced sg id

aws ec2 run-instances \

--image-id ami-04cdc91e49cb06165 \

--count 1 \

--instance-type t3.xlarge \

--key-name online-fashion \

--iam-instance-profile Name=onlinefashion-InstanceProfile \

--security-group-ids $SG_ID \

--tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=Admin-Server}]' \

--block-device-mappings '[{"DeviceName":"/dev/sda1","Ebs":{"VolumeSize": 30}}]' \

--region eu-north-1

Now, connect to your instance:

# Ensure private key has read permission only for the owner (if on windows use appropriate command)

chmod 400 online-fashion.pem

# Replace the value of `instance-ids` with value of `InstanceId` returned by the instance launch command

ssh -i online-fashion.pem -o StrictHostKeyChecking=no ubuntu@$(aws ec2 describe-instances --instance-ids i-043fc9a3baaca5e4c --query "Reservations[*].Instances[*].PublicIpAddress" --output text)

Once connected, upgrade and update its system packages, and rename the instance's hostname.

# Upgrade and update system packages

sudo apt upgrade -y && sudo apt update -y

# Change the instance hostname

sudo hostnamectl set-hostname online-fashion

# Starts a new shell session.

bash

Installations

We need to install all the tools required to work comfortably from our instance.

Install AWS CLI

We will centralize our cloud activities, specifically creating resources such as ACM requests, in our instance. Therefore, we need the AWS CLI installed. Run the following commands to install it:

# Download and install AWS CLI <refer to the docs for the latest version>

sudo curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

sudo apt install unzip -y

sudo unzip awscliv2.zip

sudo ./aws/install

sudo rm awscliv2.zip

sudo rm -rf ./aws

With the AWS CLI installed, we can verify that the instance profile attached to our instance is functioning as expected. To do this, list the buckets in your account using the following command:

$ aws s3 ls

2024-10-27 06:49:01 infra-bucket-by-daniel

Indeed, we can authenticate successfully with the AWS API from within the AWS cloud environment.

Install Terraform

Check the Terraform installation instructions for your operating system on this page. Below are the commands for installing it on a Linux distribution (Ubuntu):

# Update the system and install the gnupg, software-properties-common, and curl packages

sudo apt-get update && sudo apt-get install -y gnupg software-properties-common

# Install the HashiCorp GPG key.

wget -O- https://apt.releases.hashicorp.com/gpg | \

gpg --dearmor | \

sudo tee /usr/share/keyrings/hashicorp-archive-keyring.gpg > /dev/null

# Verify the key's fingerprint

gpg --no-default-keyring \

--keyring /usr/share/keyrings/hashicorp-archive-keyring.gpg \

--fingerprint

# Add the official HashiCorp repository to your system

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] \

https://apt.releases.hashicorp.com $(lsb_release -cs) main" | \

sudo tee /etc/apt/sources.list.d/hashicorp.list

# Download the package information from HashiCorp.

sudo apt update -y

# Install Terraform from the new repository.

sudo apt-get install terraform

Install Terragrunt

We will use Terragrunt to manage our Terraform configurations. Although it is designed to streamline infrastructure provisioning across multiple environments, we will utilize it for a single environment:

# Downloading the binary for

curl -L https://github.com/gruntwork-io/terragrunt/releases/download/v0.62.3/terragrunt_linux_amd64 -o terragrunt

# Add execute permissions to the binary.

chmod u+x terragrunt

# Put the binary somewhere on your PATH

sudo mv terragrunt /usr/local/bin/terragrunt

Install kubectl (a utility for interacting with the Kubernetes cluster)

We'll use kubectl to interact with our clusters from our local system(online-fashion EC2 instance), therefore we need it installed.

# Download the binary

curl -O https://s3.us-west-2.amazonaws.com/amazon-eks/1.30.0/2024-05-12/bin/linux/amd64/kubectl

# Apply execute permissions to the binary.

chmod +x ./kubectl

# Copy the binary to a folder in your PATH

mkdir -p $HOME/bin && cp ./kubectl $HOME/bin/kubectl && export PATH=$HOME/bin:$PATH

rm kubectl

If there are any remaining requirements, we will address them as the need arises. Before we begin, please note that some of the cloud resources we'll create may incur costs, so be prepared to spend at least $10, depending on the speed of implementation. Also, we will not provide further details on infrastructure deployment, as our focus is solely on the CI/CD pipeline. For more information on infrastructure provisioning, check out our dedicated project on infrastructure provisioning.

Clone the Microservices Source Code Repository

We are working with a microservices application developed by Google for educational purposes. You can find the application repository here.

On your online-fashion server, create the project directory and navigate into it:

mkdir -p microservices-app && cd microservices-app

Next, clone the microservices project into this newly created directory:

git clone https://github.com/GoogleCloudPlatform/microservices-demo.git .

Explore and Prepare the Source Code for Deployment

First, list the content of the cloned project.

$ ls -l

total 68

-rw-rw-r-- 1 ubuntu ubuntu 1490 Oct 31 04:49 cloudbuild.yaml

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 docs

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 helm-chart

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 istio-manifests

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 kubernetes-manifests

drwxrwxr-x 5 ubuntu ubuntu 4096 Oct 31 04:49 kustomize

-rw-rw-r-- 1 ubuntu ubuntu 11358 Oct 31 04:49 LICENSE

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 protos

-rw-rw-r-- 1 ubuntu ubuntu 11812 Oct 31 04:49 README.md

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 release

-rw-rw-r-- 1 ubuntu ubuntu 3248 Oct 31 04:49 skaffold.yaml

drwxrwxr-x 14 ubuntu ubuntu 4096 Oct 31 04:49 src

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 terraform

The repository contains various files and directories, including a LICENSE, README.md, cloudbuild.yaml, source code in src directory, Helm charts, Kubernetes and Istio manifests, Terraform configurations, and additional resources for protos, kustomize, and release management.

For our purposes, we only require the source code for each microservice, the Dockerfile for building it, and the respective deployment Kubernetes manifests. Each microservice's source code and its Dockerfile are stored together within their own directory inside the src directory. The Kubernetes manifests are stored in the kubernetes-manifests directory. Therefore, we will focus on these two directories. While Helm charts are available, we'll stick to Kubernetes manifests for simplicity.

Let’s delete all content listed above, keeping only the microservices source code in the src directory, the Kubernetes manifests in the kubernetes-manifests directory, the README.md file, and the docs directory, which contains images referenced in the README.md file.

# Delete unnecessary files and directories

rm -rf LICENSE cloudbuild.yaml \

helm-chart istio-manifests \

kustomize protos release \

skaffold.yaml terraform .deploystack \

.github .editorconfig

Verify that the following three directories and README file were preserved:

$ ls -l

total 24

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 docs

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 kubernetes-manifests

-rw-rw-r-- 1 ubuntu ubuntu 11812 Oct 31 04:49 README.md

drwxrwxr-x 14 ubuntu ubuntu 4096 Oct 31 04:49 src

Now, let's explore the src and kubernetes-manifests directories to understand their contents.

First, let's list the contents of the src directory:

$ ls -l src

total 48

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 adservice

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 cartservice

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 checkoutservice

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 currencyservice

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 emailservice

drwxrwxr-x 7 ubuntu ubuntu 4096 Oct 31 04:49 frontend

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 loadgenerator

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 paymentservice

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 productcatalogservice

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 recommendationservice

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 shippingservice

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 shoppingassistantservice

There are 12 subdirectories in this directory, each containing the source code for a specific microservice, such as adservice, cartservice, and 10 others. These microservices are developed using different technologies, as indicated here.

As mentioned earlier, each microservice includes a Dockerfile that defines how to build its container image. This file is located at the root of each microservice directory (service-name/), except for the cartservice, which is located in /cartservice/src. It’s important to note this distinction, as we must specify the correct relative path to each microservice's Dockerfile when building its image in the Jenkins CI pipeline.

$ ls -l src/*

src/adservice:

total 44

-rw-rw-r-- 1 ubuntu ubuntu 3628 Oct 31 04:49 build.gradle

-rw-rw-r-- 1 ubuntu ubuntu 1431 Oct 31 04:49 Dockerfile

-rwxrwxr-x 1 ubuntu ubuntu 796 Oct 31 04:49 genproto.sh

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 gradle

-rw-rw-r-- 1 ubuntu ubuntu 8762 Oct 31 04:49 gradlew

-rw-rw-r-- 1 ubuntu ubuntu 2872 Oct 31 04:49 gradlew.bat

-rw-rw-r-- 1 ubuntu ubuntu 634 Oct 31 04:49 README.md

-rw-rw-r-- 1 ubuntu ubuntu 33 Oct 31 04:49 settings.gradle

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 src

src/cartservice:

total 12

-rw-rw-r-- 1 ubuntu ubuntu 2809 Oct 31 04:49 cartservice.sln

drwxrwxr-x 5 ubuntu ubuntu 4096 Oct 31 04:49 src

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 tests

src/checkoutservice:

total 64

-rw-rw-r-- 1 ubuntu ubuntu 1307 Oct 31 04:49 Dockerfile

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 genproto

-rwxrwxr-x 1 ubuntu ubuntu 905 Oct 31 04:49 genproto.sh

-rw-rw-r-- 1 ubuntu ubuntu 2101 Oct 31 04:49 go.mod

-rw-rw-r-- 1 ubuntu ubuntu 23390 Oct 31 04:49 go.sum

-rw-rw-r-- 1 ubuntu ubuntu 12493 Oct 31 04:49 main.go

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 money

-rw-rw-r-- 1 ubuntu ubuntu 123 Oct 31 04:49 README.md

src/currencyservice:

total 152

-rw-rw-r-- 1 ubuntu ubuntu 1826 Oct 31 04:49 client.js

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 data

-rw-rw-r-- 1 ubuntu ubuntu 1195 Oct 31 04:49 Dockerfile

-rwxrwxr-x 1 ubuntu ubuntu 787 Oct 31 04:49 genproto.sh

-rw-rw-r-- 1 ubuntu ubuntu 895 Oct 31 04:49 package.json

-rw-rw-r-- 1 ubuntu ubuntu 121516 Oct 31 04:49 package-lock.json

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 proto

-rw-rw-r-- 1 ubuntu ubuntu 5528 Oct 31 04:49 server.js

src/emailservice:

total 80

-rwxrwxr-x 1 ubuntu ubuntu 30091 Oct 31 04:49 demo_pb2_grpc.py

-rwxrwxr-x 1 ubuntu ubuntu 10536 Oct 31 04:49 demo_pb2.py

-rw-rw-r-- 1 ubuntu ubuntu 1227 Oct 31 04:49 Dockerfile

-rwxrwxr-x 1 ubuntu ubuntu 1238 Oct 31 04:49 email_client.py

-rwxrwxr-x 1 ubuntu ubuntu 6363 Oct 31 04:49 email_server.py

-rwxrwxr-x 1 ubuntu ubuntu 767 Oct 31 04:49 genproto.sh

-rwxrwxr-x 1 ubuntu ubuntu 1542 Oct 31 04:49 logger.py

-rw-rw-r-- 1 ubuntu ubuntu 302 Oct 31 04:49 requirements.in

-rw-rw-r-- 1 ubuntu ubuntu 3772 Oct 31 04:49 requirements.txt

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 templates

src/frontend:

total 112

-rw-rw-r-- 1 ubuntu ubuntu 1578 Oct 31 04:49 deployment_details.go

-rw-rw-r-- 1 ubuntu ubuntu 1339 Oct 31 04:49 Dockerfile

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 genproto

-rwxrwxr-x 1 ubuntu ubuntu 891 Oct 31 04:49 genproto.sh

-rw-rw-r-- 1 ubuntu ubuntu 2504 Oct 31 04:49 go.mod

-rw-rw-r-- 1 ubuntu ubuntu 25758 Oct 31 04:49 go.sum

-rw-rw-r-- 1 ubuntu ubuntu 19992 Oct 31 04:49 handlers.go

-rw-rw-r-- 1 ubuntu ubuntu 7762 Oct 31 04:49 main.go

-rw-rw-r-- 1 ubuntu ubuntu 2873 Oct 31 04:49 middleware.go

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 money

-rw-rw-r-- 1 ubuntu ubuntu 2074 Oct 31 04:49 packaging_info.go

-rw-rw-r-- 1 ubuntu ubuntu 116 Oct 31 04:49 README.md

-rw-rw-r-- 1 ubuntu ubuntu 4214 Oct 31 04:49 rpc.go

drwxrwxr-x 6 ubuntu ubuntu 4096 Oct 31 04:49 static

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 templates

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 validator

src/loadgenerator:

total 16

-rw-rw-r-- 1 ubuntu ubuntu 1047 Oct 31 04:49 Dockerfile

-rwxrwxr-x 1 ubuntu ubuntu 2462 Oct 31 04:49 locustfile.py

-rw-rw-r-- 1 ubuntu ubuntu 29 Oct 31 04:49 requirements.in

-rw-rw-r-- 1 ubuntu ubuntu 1502 Oct 31 04:49 requirements.txt

src/paymentservice:

total 232

-rw-rw-r-- 1 ubuntu ubuntu 2821 Oct 31 04:49 charge.js

-rw-rw-r-- 1 ubuntu ubuntu 1195 Oct 31 04:49 Dockerfile

-rwxrwxr-x 1 ubuntu ubuntu 785 Oct 31 04:49 genproto.sh

-rw-rw-r-- 1 ubuntu ubuntu 2258 Oct 31 04:49 index.js

-rw-rw-r-- 1 ubuntu ubuntu 848 Oct 31 04:49 logger.js

-rw-rw-r-- 1 ubuntu ubuntu 859 Oct 31 04:49 package.json

-rw-rw-r-- 1 ubuntu ubuntu 204525 Oct 31 04:49 package-lock.json

drwxrwxr-x 3 ubuntu ubuntu 4096 Oct 31 04:49 proto

-rw-rw-r-- 1 ubuntu ubuntu 2838 Oct 31 04:49 server.js

src/productcatalogservice:

total 76

-rw-rw-r-- 1 ubuntu ubuntu 4770 Oct 31 04:49 catalog_loader.go

-rw-rw-r-- 1 ubuntu ubuntu 1408 Oct 31 04:49 Dockerfile

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 genproto

-rwxrwxr-x 1 ubuntu ubuntu 917 Oct 31 04:49 genproto.sh

-rw-rw-r-- 1 ubuntu ubuntu 2721 Oct 31 04:49 go.mod

-rw-rw-r-- 1 ubuntu ubuntu 28257 Oct 31 04:49 go.sum

-rw-rw-r-- 1 ubuntu ubuntu 2691 Oct 31 04:49 product_catalog.go

-rw-rw-r-- 1 ubuntu ubuntu 2721 Oct 31 04:49 product_catalog_test.go

-rw-rw-r-- 1 ubuntu ubuntu 3847 Oct 31 04:49 products.json

-rw-rw-r-- 1 ubuntu ubuntu 1408 Oct 31 04:49 README.md

-rw-rw-r-- 1 ubuntu ubuntu 5576 Oct 31 04:49 server.go

src/recommendationservice:

total 76

-rwxrwxr-x 1 ubuntu ubuntu 1222 Oct 31 04:49 client.py

-rwxrwxr-x 1 ubuntu ubuntu 30091 Oct 31 04:49 demo_pb2_grpc.py

-rwxrwxr-x 1 ubuntu ubuntu 10536 Oct 31 04:49 demo_pb2.py

-rw-rw-r-- 1 ubuntu ubuntu 1253 Oct 31 04:49 Dockerfile

-rwxrwxr-x 1 ubuntu ubuntu 881 Oct 31 04:49 genproto.sh

-rwxrwxr-x 1 ubuntu ubuntu 1542 Oct 31 04:49 logger.py

-rwxrwxr-x 1 ubuntu ubuntu 5646 Oct 31 04:49 recommendation_server.py

-rw-rw-r-- 1 ubuntu ubuntu 255 Oct 31 04:49 requirements.in

-rw-rw-r-- 1 ubuntu ubuntu 3381 Oct 31 04:49 requirements.txt

src/shippingservice:

total 60

-rw-rw-r-- 1 ubuntu ubuntu 1340 Oct 31 04:49 Dockerfile

drwxrwxr-x 2 ubuntu ubuntu 4096 Oct 31 04:49 genproto

-rwxrwxr-x 1 ubuntu ubuntu 905 Oct 31 04:49 genproto.sh

-rw-rw-r-- 1 ubuntu ubuntu 1705 Oct 31 04:49 go.mod

-rw-rw-r-- 1 ubuntu ubuntu 18639 Oct 31 04:49 go.sum

-rw-rw-r-- 1 ubuntu ubuntu 5136 Oct 31 04:49 main.go

-rw-rw-r-- 1 ubuntu ubuntu 1256 Oct 31 04:49 quote.go

-rw-rw-r-- 1 ubuntu ubuntu 351 Oct 31 04:49 README.md

-rw-rw-r-- 1 ubuntu ubuntu 2392 Oct 31 04:49 shippingservice_test.go

-rw-rw-r-- 1 ubuntu ubuntu 1454 Oct 31 04:49 tracker.go

src/shoppingassistantservice:

total 24

-rw-rw-r-- 1 ubuntu ubuntu 1260 Oct 31 04:49 Dockerfile

-rw-rw-r-- 1 ubuntu ubuntu 146 Oct 31 04:49 requirements.in

-rw-rw-r-- 1 ubuntu ubuntu 5093 Oct 31 04:49 requirements.txt

-rwxrwxr-x 1 ubuntu ubuntu 5447 Oct 31 04:49 shoppingassistantservice.py

Verify the Docekerfile for cartservice is located at src/cartservice/src:

$ ls -l src/cartservice/src | grep Dockerfile | head -n 1

-rw-rw-r-- 1 ubuntu ubuntu 1427 Oct 31 04:49 Dockerfile

These files (Docker files) are already configured to build their respective microservices, so no modifications are needed. We will use them as-is to build our container images and push them to DockerHub. Feel free to explore them.

As an example, let's take a look at how the Dockerfile for the cartservice is crafted:

$ cat src/cartservice/src/Dockerfile

# Copyright 2021 Google LLC

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# https://mcr.microsoft.com/product/dotnet/sdk

FROM mcr.microsoft.com/dotnet/sdk:8.0.402-noble@sha256:96bd4e90b80d82f8e77512ec0893d7ae18b4d2af332b9e68ed575c9842cc175c AS builder

WORKDIR /app

COPY cartservice.csproj .

RUN dotnet restore cartservice.csproj \

-r linux-x64

COPY . .

RUN dotnet publish cartservice.csproj \

-p:PublishSingleFile=true \

-r linux-x64 \

--self-contained true \

-p:PublishTrimmed=true \

-p:TrimMode=full \

-c release \

-o /cartservice

# https://mcr.microsoft.com/product/dotnet/runtime-deps

FROM mcr.microsoft.com/dotnet/runtime-deps:8.0.8-noble-chiseled@sha256:7c86350773464d70bd15b2804c0e52f6c0f6b27d05d0fc607ff16abeb84dedd0

WORKDIR /app

COPY --from=builder /cartservice .

EXPOSE 7070

ENV DOTNET_EnableDiagnostics=0 \

ASPNETCORE_HTTP_PORTS=7070

USER 1000

ENTRYPOINT ["/app/cartservice"]

The file creates a container image for the cartservice microservice using a multi-stage build process. The first stage, named "builder", is responsible for compiling the application and publishing it as a self-contained file for Linux. Let's explore it in detail.

Builder Stage:

Base Image: Uses the .NET SDK image (version

8.0.402) for building the application. This image contains tools for compiling and building .NET applications.WORKDIR: The

WORKDIR /appcommand sets the working directory to/app. All subsequent commands will be executed from this directory.COPY: This copies the

cartservice.csprojfile from thecartservicedirectory to the container's/appdirectory.dotnet restore:: Restores the NuGet packages needed by the project, downloading dependencies for the project based on

cartservice.csproj. The-r linux-x64option specifies the runtime environment, targeting Linux on 64-bit architecture.COPY: This copies all the other files (source code, configurations, etc.) from the local directory to the container.

dotnet publish: This command compiles the source code and publishes the application.

-p:PublishSingleFile=true: Creates a single executable file for the application.-r linux-x64: Specifies the target runtime for Linux on x64 architecture.--self-contained true: Produces a self-contained deployment, meaning the .NET runtime will be packaged with the application.-p:PublishTrimmed=trueand-p:TrimMode=full: Enables trimming, which removes unused code to reduce the size of the published app.-c release: Specifies that the release configuration should be used, which typically includes optimizations.-o /cartservice: Specifies the output directory (/cartservice) where the published files will be stored.

The second stage creates a runtime environment by using the .NET runtime dependencies image, copying the published application, exposing port 7070, setting environment variables, and defining the entry point. Let's explore it in detail.

Runtime Stage:

Base Image: This stage uses the lightweight .NET runtime dependencies image (

runtime-deps:8.0.8-noble-chiseled). This image includes the necessary libraries to run the application but not to build it, resulting in a smaller final image.Set Working Directory: Sets the working directory to /app in the runtime container.

COPY --from=builder: Copies the built application from the

builderstage (/cartservice) into the current stage's/appdirectory. This includes the final executable and any necessary runtime files.EXPOSE 7070: Exposes port

7070to the host, meaning the container will listen on this port for HTTP traffic.Environment Variables:

DOTNET_EnableDiagnostics=0: Disables diagnostics to improve performance.ASPNETCORE_HTTP_PORTS=7070: Configures ASP.NET Core to use port7070.

USER 1000: Specifies that the application should run as user 1000 instead of the root user, which is a good security practice.

ENTRYPOINT: Defines the command to be executed when the container starts, which in this case is the

cartserviceexecutable located in/app/cartservice.

Using this builder pattern approach reduces the final image size and enhances security by eliminating unnecessary files and dependencies from the final Docker image.

You can explore the Dockerfiles of the other microservices to see how each application is built. This step is optional–if you're already familiar with Dockerfile structure, feel free to skip it, as all necessary instructions are already in place.

Let's list the contents of the kubernetes-manifests directory to verify that each microservice has a corresponding deployment manifest, except for the shoppingassistantservice.

$ ls -l ./kubernetes-manifests

total 52

-rw-rw-r-- 1 ubuntu ubuntu 2033 Oct 31 04:49 adservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 3391 Oct 31 04:49 cartservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2474 Oct 31 04:49 checkoutservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2043 Oct 31 04:49 currencyservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2046 Oct 31 04:49 emailservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 4032 Oct 31 04:49 frontend.yaml

-rw-rw-r-- 1 ubuntu ubuntu 1460 Oct 31 04:49 kustomization.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2767 Oct 31 04:49 loadgenerator.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2018 Oct 31 04:49 paymentservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2082 Oct 31 04:49 productcatalogservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 317 Oct 31 04:49 README.md

-rw-rw-r-- 1 ubuntu ubuntu 2228 Oct 31 04:49 recommendationservice.yaml

-rw-rw-r-- 1 ubuntu ubuntu 2016 Oct 31 04:49 shippingservice.yaml

As expected, we have manifest files for all microservices except for the shoppingassistantservice, a newly introduced microservice in the architecture. The deployment manifest for this microservice (shoppingassistantservice) can be found here. However, integrating this service into our application requires an access key for an LLM model, which I currently don’t have due to cost constraints. Therefore, I will exclude this microservice from the current deployment. Additionally, the kustomization.yaml configuration is not necessary, so we can remove it:

rm ./kubernetes-manifests/kustomization.yaml

Updating Kubernetes Manifests with Correct Image tags

In contrast to the Dockerfiles, which required no changes, these manifests need modifications. Examining each microservice's manifest—particularly the sections specifying the container image to be pulled—reveals that the correct image tags are not defined.

$ grep -h 'image' kubernetes-manifests/*.yaml | grep -v '#' | grep -v 'busybox' | grep -v 'redis'

image: adservice

image: cartservice

image: checkoutservice

image: currencyservice

image: emailservice

image: frontend

image: loadgenerator

image: paymentservice

image: productcatalogservice

image: recommendationservice

image: shippingservice

We will pull the container images from our DockerHub registry. Therefore, each microservice manifest must specify the registry hosting the respective container image, including the image name and version tag.

We will start by tagging all microservices with the release version v0.0.1. In future builds, our CI pipeline will subsequently update the version tags for any modified microservice to reflect the latest release. This will ensure that the CD pipeline is triggered, and the cluster is updated to run the most recent versions of our microservices.

Execute the following command in the kubernetes-manifests directory to update the image field in all the manifest files:

# Esure you are at the root of the project directory (microservices-app)

# also update the docker username (mwanthi) with your username

for file in kubernetes-manifests/*.yaml; do

service=$(basename "$file" .yaml)

dockerusername="mwanthi"

sed -i "s|image: ${service}|image: ${dockerusername}/${service}:v0.0.1|g" "$file"

done

Now, verify the image field in each manifest was appropriately updated, except for the redis and busybox, which do not need modification:

$ grep -h 'image' kubernetes-manifests/*.yaml | grep -v '#'

image: mwanthi/adservice:v0.0.1

image: mwanthi/cartservice:v0.0.1

image: redis:alpine

image: mwanthi/checkoutservice:v0.0.1

image: mwanthi/currencyservice:v0.0.1

image: mwanthi/emailservice:v0.0.1

image: mwanthi/frontend:v0.0.1

image: busybox:latest

image: mwanthi/loadgenerator:v0.0.1

image: mwanthi/paymentservice:v0.0.1

image: mwanthi/productcatalogservice:v0.0.1

image: mwanthi/recommendationservice:v0.0.1

image: mwanthi/shippingservice:v0.0.1

Separating Kubernetes manifests from the microservices source repository

To ensure that the Jenkins pipeline can easily update the version tag of specific manifest whenever the corresponding service is built in the future iterations (e.g., from v0.0.1 to v0.0.2, or v0.0.2 to v0.0.3), we will store the deployment manifests in a separate repository from the source code. This separation ensures that the changes to the source code repository trigger the Jenkins CI pipeline, while updates to the manifest files initiate the ArgoCD CD workflow. This approach will prevent potential pipeline loops that could arise if Jenkins-initiated commits are pushed back to the source code without careful management.

Let’s proceed with moving these configurations to a separate directory. Before doing so, it's worth noting that, each microservice deployment will be managed by a dedicated ArgoCD application. To facilitate this, we will store each microservice's deployment manifest in its own directory, which will later be targeted by the corresponding ArgoCD application for deploying and managing that microservice. Let's achieve this by executing the following commands.

# Cd to home directory and create manifest directory

cd ~/ && mkdir -p kubernetes-manifests

# Cd to kubernetes-manifests directory

# Create a list of microservices

microservices=(adservice cartservice checkoutservice currencyservice emailservice frontend loadgenerator paymentservice productcatalogservice recommendationservice shippingservice)

# Loop through each microservice

for microservice in "${microservices[@]}"; do

# Create a directory for each microservice inside the services-manifests directory

mkdir -p ~/kubernetes-manifests/$microservice

# Move the corresponding YAML file into the created directory

mv ~/microservices-app/kubernetes-manifests/${microservice}.yaml ~/kubernetes-manifests/$microservice

done

# Move the README.md file also

mv ~/microservices-app/kubernetes-manifests/README.md ~/kubernetes-manifests/

# Remove the kubernetes-manifests from the source code

rm -rf ~/microservices-app/kubernetes-manifests

Now, verify that the newly created kubernetes-manifests directory contains all Kubernetes manifest files organized into distinct subdirectories:

# First install the tree utility

sudo apt install tree

# Check directory structure

$ tree ~/kubernetes-manifests

/home/ubuntu/kubernetes-manifests

├── adservice

│ └── adservice.yaml

├── cartservice

│ └── cartservice.yaml

├── checkoutservice

│ └── checkoutservice.yaml

├── currencyservice

│ └── currencyservice.yaml

├── emailservice

│ └── emailservice.yaml

├── frontend

│ └── frontend.yaml

├── loadgenerator

│ └── loadgenerator.yaml

├── paymentservice

│ └── paymentservice.yaml

├── productcatalogservice

│ └── productcatalogservice.yaml

├── README.md

├── recommendationservice

│ └── recommendationservice.yaml

└── shippingservice

└── shippingservice.yaml

12 directories, 12 files

Also, verify the microservices-app directory contains only the source code.

$ ls ~/microservices-app/

docs README.md src

We have two project directories: one for the source code and another for the deployment manifest files. Let’s focus on the one containing the microservices' source code.

The microservices-app project directory has already been initialized with Git.

$ cd ~/microservices-app/ && ls -al

total 40

drwxrwxr-x 5 ubuntu ubuntu 4096 Oct 31 04:54 .

drwxr-x--- 12 ubuntu ubuntu 4096 Oct 31 04:54 ..

drwxrwxr-x 4 ubuntu ubuntu 4096 Oct 31 04:49 docs

drwxrwxr-x 8 ubuntu ubuntu 4096 Oct 31 04:49 .git

-rw-rw-r-- 1 ubuntu ubuntu 201 Oct 31 04:49 .gitattributes

-rw-rw-r-- 1 ubuntu ubuntu 310 Oct 31 04:49 .gitignore

-rw-rw-r-- 1 ubuntu ubuntu 11812 Oct 31 04:49 README.md

drwxrwxr-x 14 ubuntu ubuntu 4096 Oct 31 04:49 src

All changes made during the application's lifecycle are tracked in this repository.

# Check the number of commits

$ git rev-list --count HEAD

2279

There have been 2,279 commits made in this project. To start fresh, let’s delete this repository and initialize a new one.

# Make sure you are at the root of your project folder where .git is located

rm -rf .git && git init

Let's rename the default master branch to main:

git branch -m main

Now we can commit the changes. However, we need to configure the Git profile on the instance to enable committing:

# Replace with your GitHub username and email address

git config --global user.name "D-Mwanth"

git config --global user.email "dmwanthi2@gmail.com"

Also, before committing the changes, let's remove the shoppingassistantservice service since we won't deploy it.

rm -rf src/shoppingassistantservice

Now, stage the changes and make the initial commit:

git add . && git commit -m "initial commit"

If we check the number of commits now, there should be one:

$ git rev-list --count HEAD

1

Next, we need to push our code to GitHub, from where Jenkins will clone the repository to its workspace and build container mages for the targeted microservices.

Hosting Application code on GitHub

First, we need to create a remote repository. Let's create a public repository on Github:

# Ran this command from my local system (not from ec2 instance), where gh cli is configured

gh repo create microservices-app --public

Configure your local repository to set this GitHub repository as the remote origin.

git remote add origin git@github.com:D-Mwanth/microservices-app.git

We can now push local changes to GitHub; however, this will result in errors because our instance’s authentication with GitHub isn’t configured. Follow these steps to set up SSH access between the instance and GitHub.

- Generate an SSH Key Pair:

$ ssh-keygen -t rsa -b 4096

Generating public/private rsa key pair.

Enter file in which to save the key (/home/ubuntu/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/ubuntu/.ssh/id_rsa

Your public key has been saved in /home/ubuntu/.ssh/id_rsa.pub

The key fingerprint is:

SHA256:Ss2IGIKbyPJLQmEipUTFsXMYdnjcmFsn/SvU7W4IZrA ubuntu@online-fashion

The key's randomart image is:

+---[RSA 4096]----+

|.o+=+.+ . |

|oo.o== + o |

|*o.+..o o o . |

|*+.ooo = . o . |

|=o. . o S o |

|o. . E = . . |

|. o . o o o |

| o . . o |

| . . |

+----[SHA256]-----+

- Add the private SSH Key to the SSH Agent:

eval "$(ssh-agent -s)"

ssh-add ~/.ssh/id_rsa

- Copy the SSH public key:

cat ~/.ssh/id_rsa.pub

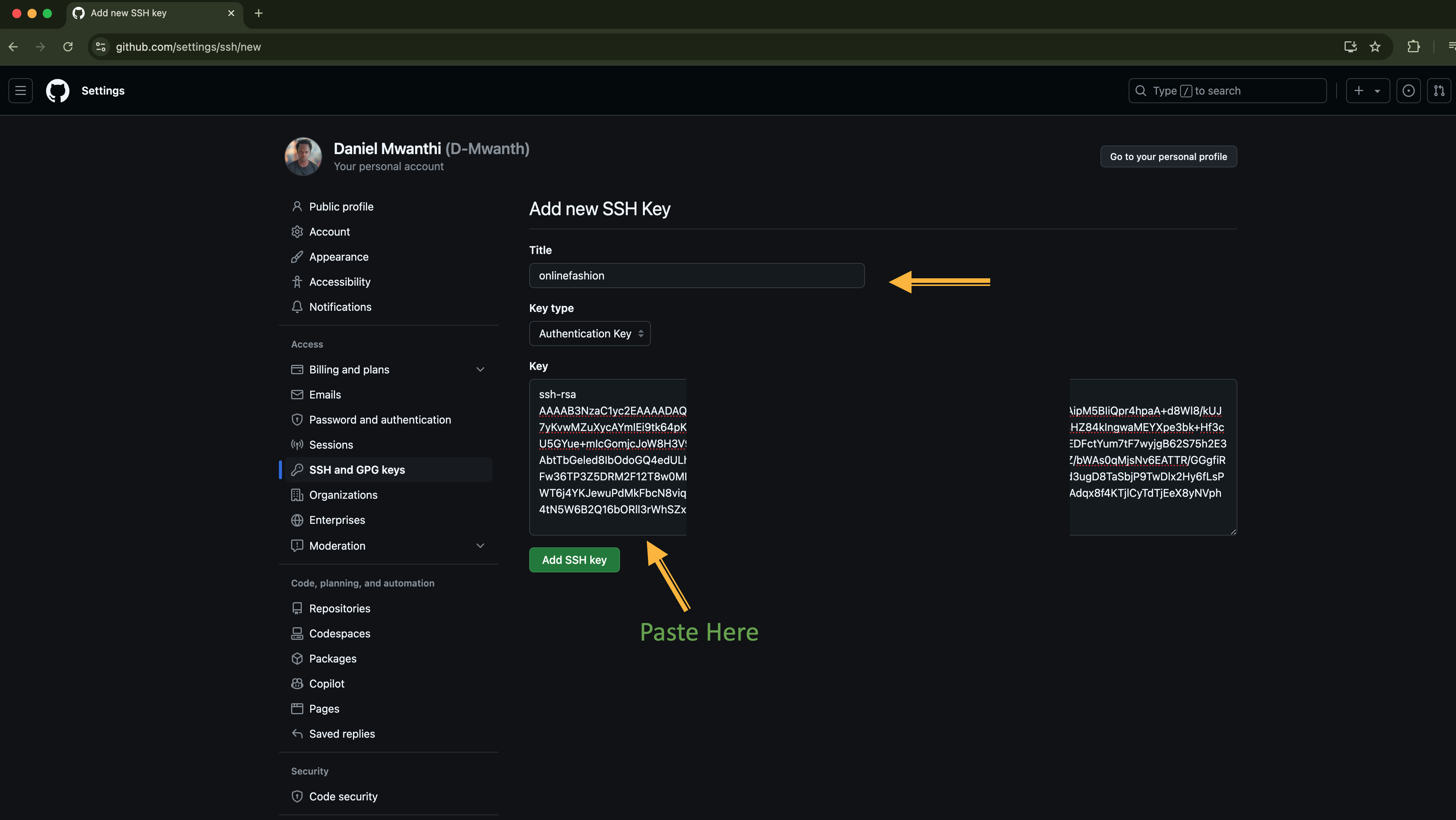

- Go to GitHub → Settings → SSH and GPG keys → New SSH key and paste the key and save: ⃗

To push your local commits to the remote repository, run the command below:

git push -u origin main

Finally, verify your code was pushed successfully.

# Replace username with yours (you need jq install to manipulate the returned JSON)

% gh api repos/D-Mwanth/microservices-app/contents | jq -r '.[] | select(.name | startswith(".") | not).name'

README.md

docs

src

Hosting Kubernetes Manifest on Github

- Create a public repository on Github:

gh repo create kubernetes-manifests --public

- Initialize your local manifest project using Git:

cd ~/kubernetes-manifests && git init

Let's rename the default master branch to main:

git branch -m main

- Configure this repository to use

kubernetes-manifestsGitHub repository as the remote origin.

git remote add origin git@github.com:D-Mwanth/kubernetes-manifests.git

- Stage the changes and make the initial commit:

git add . && git commit -m "Initial k8s manifests commit"

- Push local commits to your remote repository:

git push -u origin main

- Verify the changes were pushed:

# Replace username with yours (you need jq install to manipulate the returned JSON)

% gh api repos/D-Mwanth/kubernetes-manifests/contents | jq -r '.[] | select(.name | startswith(".") | not).name'

README.md

adservice

cartservice

checkoutservice

currencyservice

emailservice

frontend

loadgenerator

paymentservice

productcatalogservice

recommendationservice

shippingservice

Everything was successfully pushed.

Infrastructure Deployment (AWS VPC, EKS Cluster)

We will deploy an EKS cluster using custom Terraform modules developed in this project. Unlike in that project, where we provisioned multiple clusters, we will provision a single environment, as it is sufficient for our needs. We will install and configure a Cluster Autoscaler to ensure the cluster can scale and accommodate all deployments. Additionally, we’ll install an AWS Load Balancer Controller to manage an ingress resource that will expose the frontend deployment to the Internet. Version two of our Terraform add-on module is already configured to deploy these two components, thus we will use this version in our setup. Since our modules repository is publicly accessible, we can reference it directly via HTTPS without cloning.

Let's get started with infrastructure provisioning. Similar to our previous infrastructure provisioning project, we will use Terragrunt to apply these changes to save ourselves time. First, create an infrastructure directory and clone the Terragrunt configuration files into it:

Clone the Terragrunt configuration repository

cd ~/ && mkdir -p infrastructure && cd infrastructure

# Consider cloning via HTTPS as it's public repo

git clone git@github.com:D-Mwanth/live-infrastructure.git .

Check the structure of the cloned repository:

$ tree

.

├── dev

│ ├── eks

│ │ └── terragrunt.hcl

│ ├── env.hcl

│ ├── k8s-addons

│ │ └── terragrunt.hcl

│ └── vpc

│ └── terragrunt.hcl

├── prod

│ ├── eks

│ │ └── terragrunt.hcl

│ ├── env.hcl

│ ├── k8s-addons

│ │ └── terragrunt.hcl

│ └── vpc

│ └── terragrunt.hcl

├── staging

│ ├── eks

│ │ └── terragrunt.hcl

│ ├── env.hcl

│ ├── k8s-addons

│ │ └── terragrunt.hcl

│ └── vpc

│ └── terragrunt.hcl

└── terragrunt.hcl

13 directories, 13 files

From the output, we see these configurations are provisioning three cluster environments. As we just said, we only need one environment, specifically the dev cluster, which is set to run medium-sized instances. With that in mind, let's delete the two other environments and the GitHub Action workflow used to automate the release process in the original infrastructure project.

rm -rf prod staging .github

Check the directory structure to ensure that only the development environment configurations and the root Terragrunt file are retained:

$ tree

.

├── dev

│ ├── eks

│ │ └── terragrunt.hcl

│ ├── env.hcl

│ ├── k8s-addons

│ │ └── terragrunt.hcl

│ └── vpc

│ └── terragrunt.hcl

└── terragrunt.hcl

5 directories, 5 files

The root terragrunt.hcl file is configured to manage the Terraform backend, utilizing S3 for state storage and DynamoDB for state locking. Additionally, it generates a provider.tf file to define the AWS provider with the necessary version and region settings.

$ cat terragrunt.hcl

# terraform state configuration

remote_state {

backend = "s3"

generate = {

path = "backend.tf"

if_exists = "overwrite_terragrunt"

}

config = {

bucket = "infra-bucket-by-daniel"

key = "${path_relative_to_include()}/terraform.tfstate"

region = "eu-north-1"

encrypt = true

dynamodb_table = "infra-terra-lock"

}

}

# terraform provider configuration

generate "provider" {

path = "provider.tf"

if_exists = "overwrite_terragrunt"

contents = <<EOF

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.0"

}

}

}

provider "aws" {

region = "eu-north-1"

}

EOF

}

As you can see, it specifies the S3 bucket named infra-bucket-by-daniel and the associated key for storing the Terraform state file. Additionally, it includes the DynamoDB table named infra-terra-lock for state locking.

Currently, these resources do not exist in the cloud, as they were deleted in the original project. We need to recreate them to avoid issues when executing Terraform. Let’s quickly create them from the command line.

# Create s3 bucket

aws s3api create-bucket --bucket infra-bucket-by-daniel --region eu-north-1 --create-bucket-configuration LocationConstraint=eu-north-1

# Disable Block Public Access

aws s3api put-public-access-block --bucket infra-bucket-by-daniel --public-access-block-configuration '{

"BlockPublicAcls": false,

"IgnorePublicAcls": false,

"BlockPublicPolicy": false,

"RestrictPublicBuckets": false

}' --region eu-north-1

# Set bucket policy

aws s3api put-bucket-policy --bucket infra-bucket-by-daniel --policy '{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": "*",

"Action": "s3:GetObject",

"Resource": "arn:aws:s3:::infra-bucket-by-daniel/*"

}

]

}' --region eu-north-1

# Enable bucket versioning

aws s3api put-bucket-versioning --bucket infra-bucket-by-daniel --versioning-configuration Status=Enabled

# Ensure the table is created in the same region as your s3 bucket

# Create a Dynamodb table for state locking

aws dynamodb create-table \

--table-name infra-terra-lock \

--attribute-definitions \

AttributeName=LockID,AttributeType=S \

--key-schema \

AttributeName=LockID,KeyType=HASH \

--billing-mode PAY_PER_REQUEST \

--region eu-north-1

You can verify the creation of these resources using the following commands:

aws s3 ls | grep infra-bucket-by-daniel

# Verify DynamoDB table

aws dynamodb list-tables --region eu-north-1

Applying your Terragrunt configurations now may result in a Terraform modules' download error, as Terragrunt is set to pull modules from GitHub via SSH. Since you dont have SSH access to my Github repository, this will cause issues. To resolve this, clone the Terraform modules repostory from my GitHub account and host them in your GitHub account. This will allow you to continue using the SSH method without any problems. However, you will need to modify the GitHub username in the terraform block of the terragrunt.hcl file within each of the three Terragrunt module subdirectories (eks, k8s-addons, vpc) to match your GitHub username.

Apply the Terragrunt configuration

If everything is in place, run the following command to apply the configurations and get your Amazon EKS cluster provisioned:

#

cd ~/infrastructure && terragrunt run-all apply --auto-approve --terragrunt-non-interactive

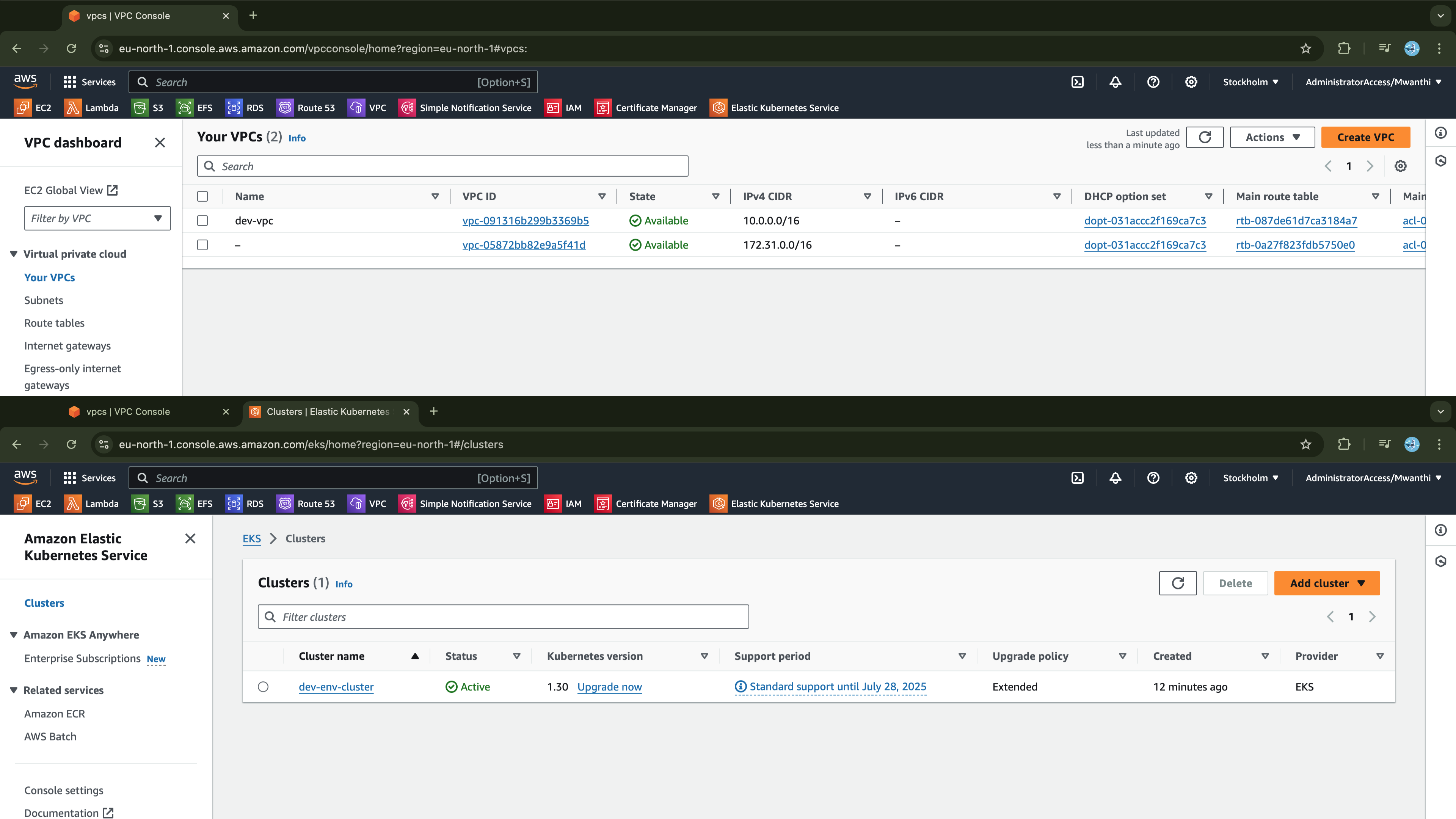

After successful execution, navigate to the AWS Management Console to verify that the Amazon VPC and an EKS cluster have been created and the cluster is active.

Accessing the EKS Cluster

Update kubectl with the cluster access credentials so you can connect to the cluster and verify it works:

# Update kubectl with cluster credentials

aws eks update-kubeconfig --name dev-env-cluster

To verify that kubectl is configured correctly, you can list cluster resources:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ip-10-0-18-5.eu-north-1.compute.internal Ready <none> 2m56s v1.30.4-eks-a737599

ip-10-0-42-202.eu-north-1.compute.internal Ready <none> 2m57s v1.30.4-eks-a737599

You should be able to list the nodes in your EKS cluster if everything is set up correctly.

Also, verify the Cluster Autoscaler and AWS Load Balancer Controller were installed and their pods are running:

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

autoscaler-aws-cluster-autoscaler-ffb695cd5-l8fs5 1/1 Running 0 2m28s

aws-load-balancer-controller-69668dbfd6-98tzc 1/1 Running 0 2m17s

aws-load-balancer-controller-69668dbfd6-rlgw9 1/1 Running 0 2m17s

aws-node-9wdsx 2/2 Running 0 3m53s

aws-node-f5z9b 2/2 Running 0 3m51s

coredns-75b6b75957-cv9pg 1/1 Running 0 5m46s

coredns-75b6b75957-kr8h7 1/1 Running 0 5m46s

eks-pod-identity-agent-l5hkm 1/1 Running 0 2m56s

eks-pod-identity-agent-ql5rb 1/1 Running 0 2m56s

kube-proxy-4hbgk 1/1 Running 0 3m53s

kube-proxy-fpg68 1/1 Running 0 3m51s

metrics-server-6b76f9fd75-xgp8m 1/1 Running 0 3m1s

Everything seems to be as expected.

Now that the cluster is operational, we can move forward and set up a Continuous Delivery (CD) workflow with ArgoCD to deploy our services to the cluster. Kubernetes will handle orchestration and management, ensuring the containers run efficiently at scale. However, before creating the CD workflow, we must establish a Continuous Integration pipeline, (CI), to build and publish the container images to DockerHub. Once the images are available, we can implement the CD pipeline to deploy them to the Kubernetes cluster.

Developing Jenkins CI Pipeline

To start, we need a dedicated host to run the Jenkins server. It's also essential to separate the Jenkins controller from job execution tasks. To achieve this, we will create a separate agent node that will handle the execution of all jobs, while the Jenkins controller manages the overall pipeline. Therefore, we will require at least two hosts: one for the Jenkins controller and another for the Jenkins agent.

Setting Up Jenkins Master and Agent Nodes

We will provision two EC2 instances on AWS: one for the Jenkins master node and another for the agent node. For the master node, we need to enable SSH access on port 22 and HTTP access on port 8080. We will attach a public key to the hosts to facilitate SSH access using the corresponding private key.

After provisioning these instances, we will connect to the master node to install Jenkins and access it via a browser for configuration. Let's begin by setting the Jenkins master node.

Jenkins Master Node

First, create the dedicated key pair to attach to both nodes:

# Am creating from my local system but you can do so from your EC2 instance if interested

aws ec2 create-key-pair --key-name jenkins-key --region eu-north-1 --query 'KeyMaterial' --output text > jenkins-key.pem

Next, create a security group to attach to the instance:

% aws ec2 create-security-group --group-name jenkins-security-group --description "Security group for Jenkins server access"

{

"GroupId": "sg-02b661121a154380b"

}

Create a list of all ingress rules in a JSON file.

# This file contains ingress rules for SSH and HTTP access

cat <<EOF > ingress-rules.json

{

"IpPermissions": [

{

"IpProtocol": "tcp",

"FromPort": 22,

"ToPort": 22,

"IpRanges": [

{

"CidrIp": "0.0.0.0/0"

}

]

},

{

"IpProtocol": "tcp",

"FromPort": 8080,

"ToPort": 8080,

"IpRanges": [

{

"CidrIp": "0.0.0.0/0"

}

]

}

]

}

EOF

Apply the ingress rules defined in the above JSON file to the security group:

# replace `sg-02b661121a154380b` with the group id return on your terminal

aws ec2 authorize-security-group-ingress --group-id sg-02b661121a154380b --cli-input-json file://ingress-rules.json

{

"Return": true,

"SecurityGroupRules": [

{

"SecurityGroupRuleId": "sgr-0b6989769b6e3cfaa",

"GroupId": "sg-02b661121a154380b",

"GroupOwnerId": "848055118036",

"IsEgress": false,

"IpProtocol": "tcp",

"FromPort": 22,

"ToPort": 22,

"CidrIpv4": "0.0.0.0/0"

},

{

"SecurityGroupRuleId": "sgr-0dd34f69c1c5f08a9",

"GroupId": "sg-02b661121a154380b",

"GroupOwnerId": "848055118036",

"IsEgress": false,

"IpProtocol": "tcp",

"FromPort": 8080,

"ToPort": 8080,

"CidrIpv4": "0.0.0.0/0"

}

]

}

Now, launch an EC2 instance:

# ensure the image id and instance type exists in the region you create the instance

# also use the sg id returned on your terminal: `sg-02b661121a154380b`

aws ec2 run-instances \

--image-id ami-07c8c1b18ca66bb07 \

--count 1 \

--instance-type t3.large \

--key-name jenkins-key \

--security-group-ids sg-02b661121a154380b \

--tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=Jenkins-Master-node}]' \

--block-device-mappings '[{"DeviceName":"/dev/sda1","Ebs":{"VolumeSize":30,"VolumeType":"gp3"}}]' \

--region eu-north-1

Let's SSH into the instance:

# ensure private key has read permission only for the owner

chmod 400 jenkins-key.pem

# Replace the value of `instance-ids` with value of `InstanceId` returned by the instance launch command

ssh -i jenkins-key.pem -o StrictHostKeyChecking=no ubuntu@$(aws ec2 describe-instances --instance-ids i-0613e9c1155a3bd87 --query "Reservations[*].Instances[*].PublicIpAddress" --output text)

Now, rename the instance hostname, update and upgrade the system packages:

# Update and upgrade system packages

sudo apt update -y && sudo apt upgrade -y

# Rename hostname

sudo hostnamectl set-hostname Jenkins-Master

# Start new shell session

bash

Now, let's install Jenkins. First, we need to install Java, as it is required to run Jenkins. Copy and run the following commands to install both Java and Jenkins:

# Install java

sudo apt install fontconfig openjdk-17-jre -y

# Add Jenkins repository key

sudo wget -O /usr/share/keyrings/jenkins-keyring.asc \

https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key

# Add Jenkins repository to sources

echo deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] \

https://pkg.jenkins.io/debian-stable binary/ | sudo tee \

/etc/apt/sources.list.d/jenkins.list > /dev/null

# Update system packages again

sudo apt-get update -y

# Install jenkins

sudo apt-get install jenkins -y

Verify that Jenkins is up and running by checking its status:

$ sudo systemctl status jenkins

● jenkins.service - Jenkins Continuous Integration Server

Loaded: loaded (/usr/lib/systemd/system/jenkins.service; enabled; preset: enabled)

Active: active (running) since Thu 2024-10-31 08:09:38 UTC; 7s ago

Main PID: 20928 (java)

Tasks: 49 (limit: 9367)

Memory: 913.4M (peak: 928.6M)

CPU: 23.686s

CGroup: /system.slice/jenkins.service

└─20928 /usr/bin/java -Djava.awt.headless=true -jar /usr/share/java/jenkins.war --webroot=/var/cache/jenkins/war --httpP>

Oct 31 08:09:31 Jenkins-Master jenkins[20928]: 18e18bff699a4ee49ebce3bfe493552e

Oct 31 08:09:31 Jenkins-Master jenkins[20928]: This may also be found at: /var/lib/jenkins/secrets/initialAdminPassword

Oct 31 08:09:31 Jenkins-Master jenkins[20928]: *************************************************************

Oct 31 08:09:31 Jenkins-Master jenkins[20928]: *************************************************************

Oct 31 08:09:31 Jenkins-Master jenkins[20928]: *************************************************************

Oct 31 08:09:38 Jenkins-Master jenkins[20928]: 2024-10-30 08:09:38.038+0000 [id=31] INFO jenkins.InitReactorRunner$1#on>

Oct 31 08:09:38 Jenkins-Master jenkins[20928]: 2024-10-30 08:09:38.071+0000 [id=24] INFO hudson.lifecycle.Lifecycle#onR>

Oct 31 08:09:38 Jenkins-Master systemd[1]: Started jenkins.service - Jenkins Continuous Integration Server.

Oct 31 08:09:38 Jenkins-Master jenkins[20928]: 2024-10-30 08:09:38.744+0000 [id=49] INFO h.m.DownloadService$Downloadab>

Oct 31 08:09:38 Jenkins-Master jenkins[20928]: 2024-10-30 08:09:38.745+0000 [id=49] INFO hudson.util.Retrier#start: Per>

lines 1-20/20 (END)

Jenkins is actively running and enabled, meaning we don't need to manually start it on system reboot.

Now, let’s access it in the browser and proceed with the setup:

# Retrieve the IP address of the master node (replace i-0613e9c1155a3bd87 with the instance ID displayed in the terminal when creating the master node)

aws ec2 describe-instances --instance-ids i-0613e9c1155a3bd87 --query "Reservations[*].Instances[*].PublicIpAddress" --output text

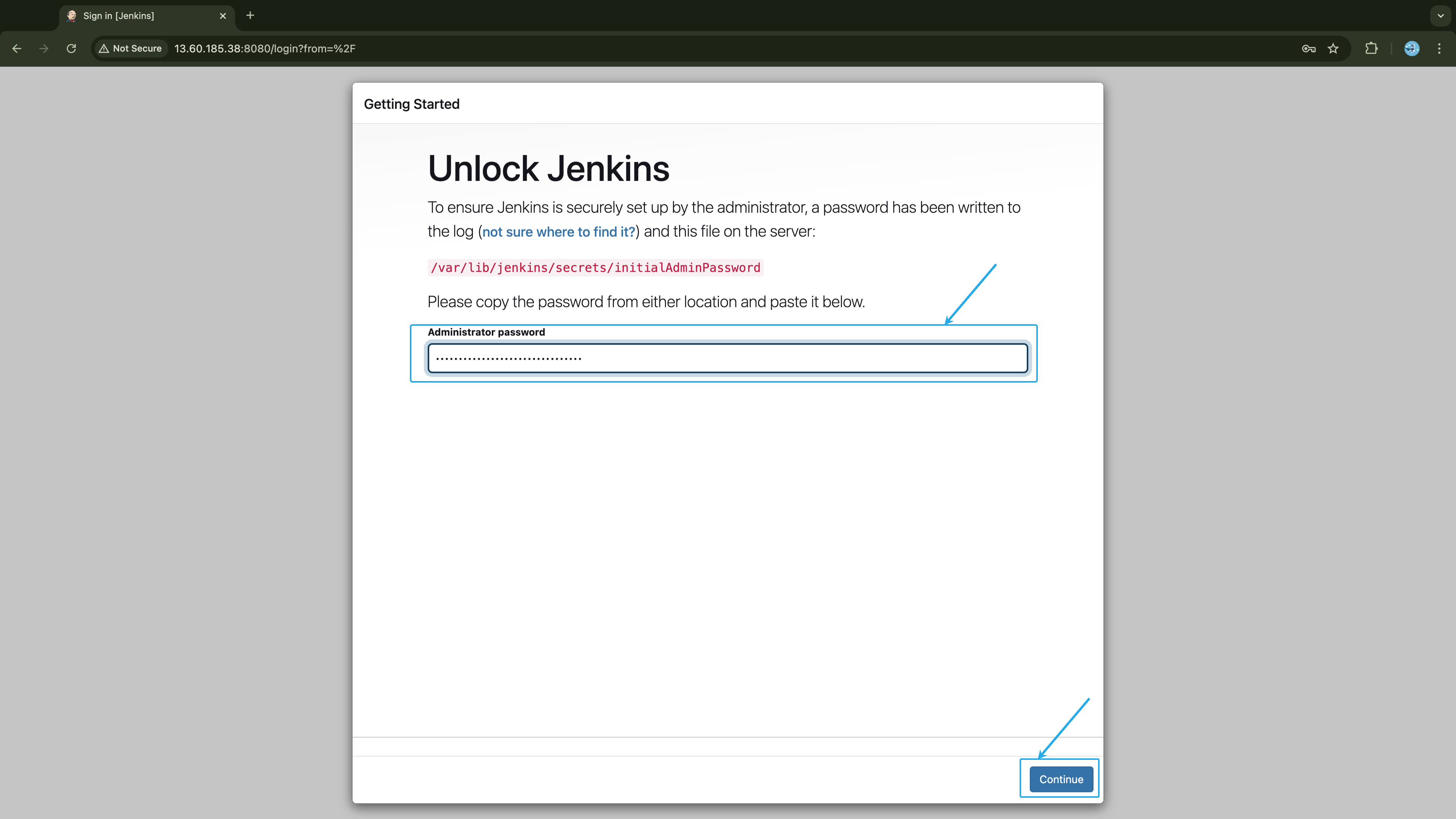

Open your web browser and navigate to http://<your-master-node-ip>:8080. Replace <your-master-node-ip> with the actual IP address of your Jenkins master node returned by the previous command. Below is my URL.

http://13.60.185.38:8080

You are required to enter the initial password in the Administrator password field. Run the command below on your master node and copy the output:

$ sudo cat /var/lib/jenkins/secrets/initialAdminPassword

18e18bff699a4ee49ebce3bfe493552e

Once you paste the password, press Enter or click the Continue button at the right-bottom corner.

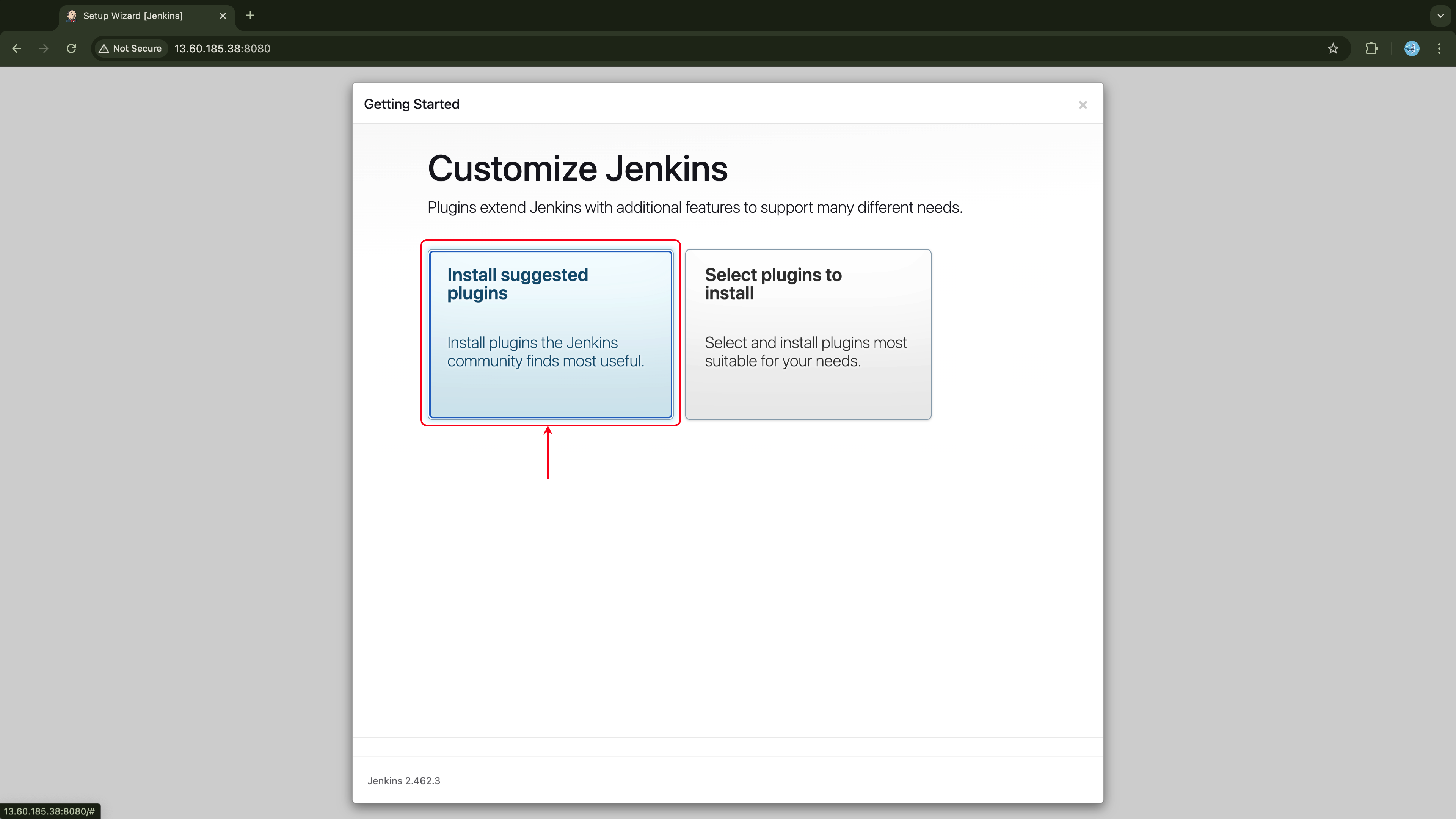

On the next page, click on Install suggested Plugins or simply press Enter/return to select the first option, usually chosen by default.

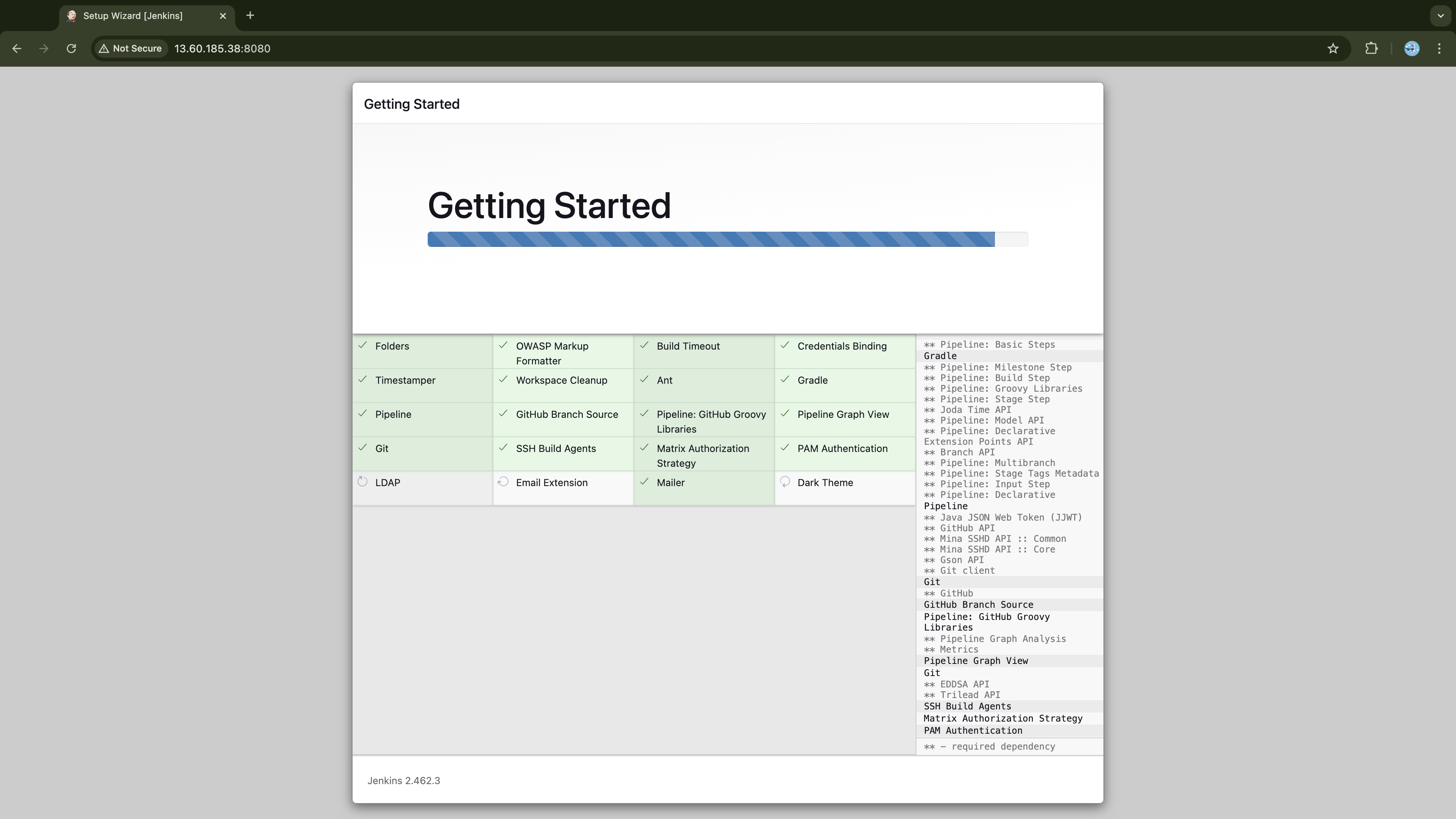

This will lead you to the plugin installation page as shown below:

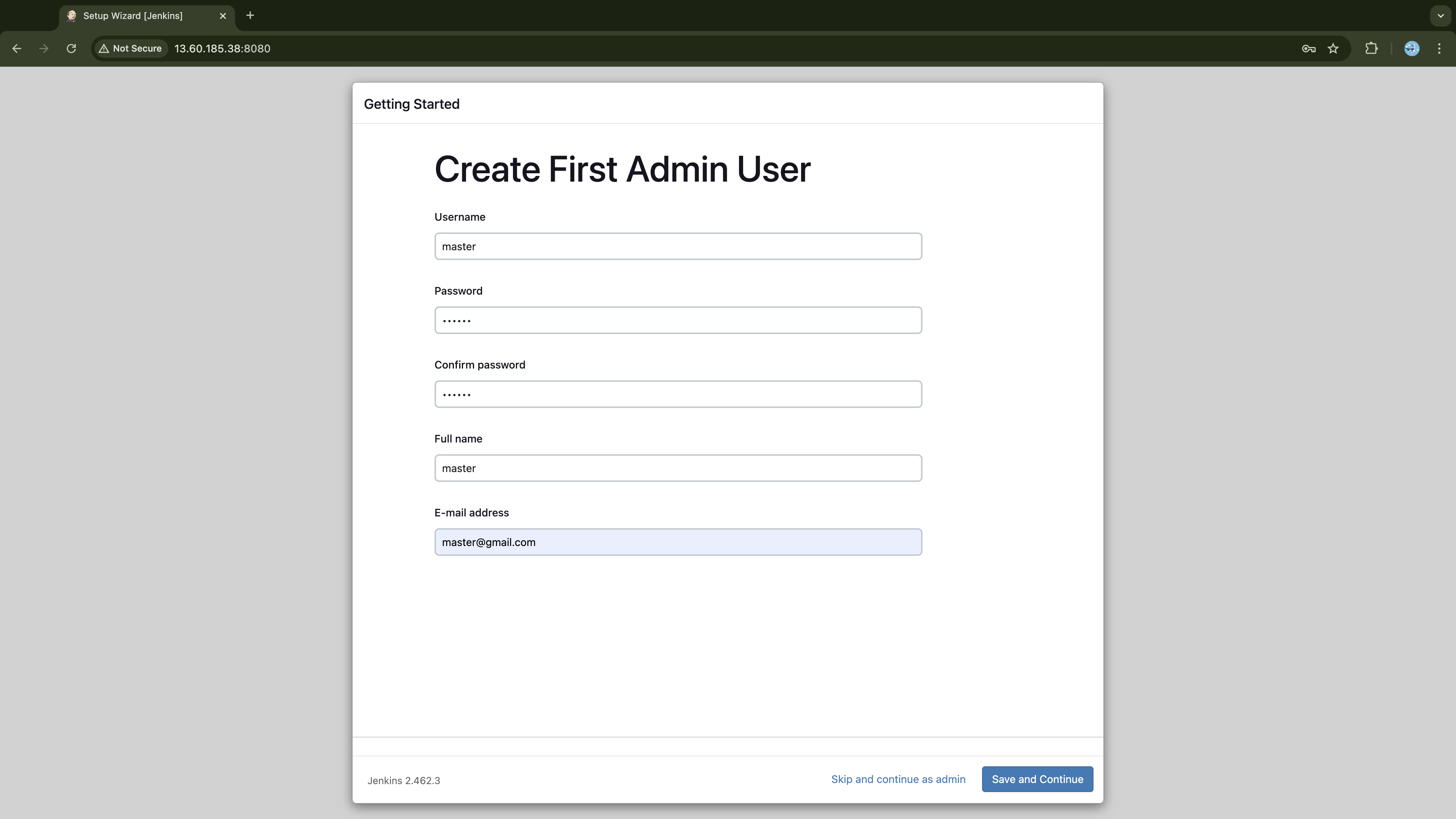

Wait until the installation process is complete. Once finished, you will automatically be taken to the next page, where you'll be provided with a form to create your admin user. Fill out the form and then click Save and Continue:

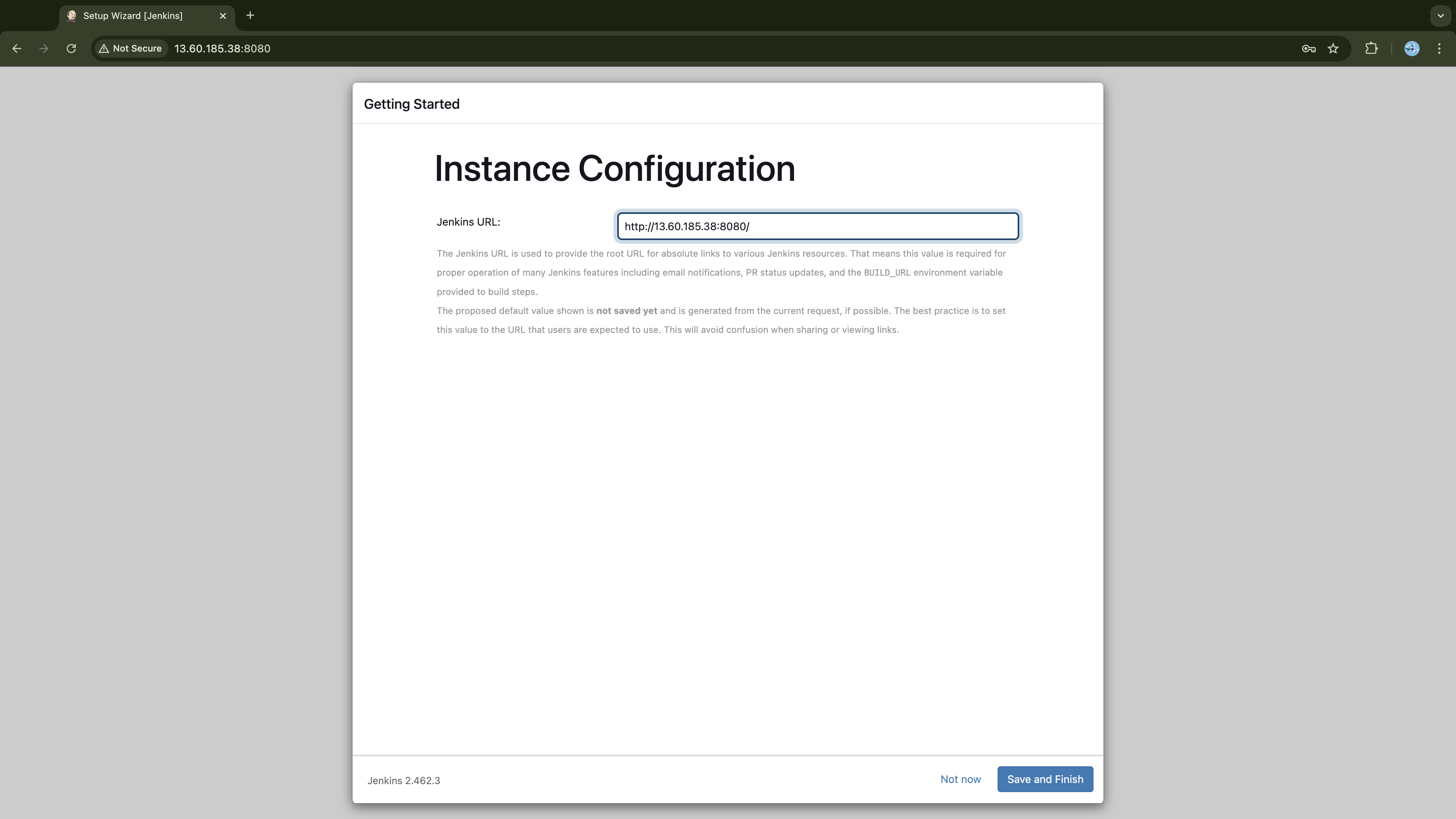

On the Instance configuration page, click the Save and Continue button at the bottom right.

To this point, Jenkins is fully set and you can start using it, just click, Start using Jenkins:

Now, let's create the agent node and attach it to the master node.

Jenkins Agent Node

Create an EC2 instance from the command line. We need to ensure this host is sufficiently powerful to serve its purpose. I’m using the t3.large instance type, which offers 2 vCPUs and 8 GiB of memory. Execute the following command from your local system (online-fashion ec2 instance) to get the instance created.

aws ec2 run-instances \

--image-id ami-07c8c1b18ca66bb07 \

--count 1 \

--instance-type t3.large \

--key-name jenkins-key \

--security-group-ids sg-02b661121a154380b \

--tag-specifications 'ResourceType=instance,Tags=[{Key=Name,Value=Jenkins-Agent}]' \

--block-device-mappings '[{"DeviceName":"/dev/sda1","Ebs":{"VolumeSize":30,"VolumeType":"gp3"}}]' \

--region eu-north-1

This instance uses the same key pair and security group as the Jenkins master. While the security group allows SSH and HTTP access, this agent node requires only SSH access. We are allocating 30 GB of EBS storage, which should be sufficient to store all necessary files.

Let’s SSH into this instance to configure it.

# Replace instance-ids with the value of InstanceId returned by the instance launch command

# Also ensure the path to the key is correct

ssh -i jenkins-key.pem ubuntu@$(aws ec2 describe-instances --instance-ids i-06415312fee6ac75a --query "Reservations[*].Instances[*].PublicIpAddress" --output text)

Once connected, first rename the instance's hostname:

sudo hostnamectl set-hostname Jenkins-Agent && bash

Now, update and upgrade the instance system packages:

# Update and upgrade system packages

sudo apt update -y && sudo apt upgrade -y

Configure Authentication for Jenkins Master Node to Access Agent Node

The Jenkins-Master needs to authenticate to the Jenkins-Agent via SSH. To achieve this, generate SSH key-pair on the Jenkins-Master node and then configure the Jenkins-Agent with the public key. This setup will allow the master node to connect with the corresponding private key.

# Generate ssh-keys from Jenkins master

$ ssh-keygen -t ed25519 -f ~/.ssh/jenkins-master

Generating public/private ed25519 key pair.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/ubuntu/.ssh/jenkins-master

Your public key has been saved in /home/ubuntu/.ssh/jenkins-master.pub

The key fingerprint is:

SHA256:OZ75pisPZZun43QYC3A0w5U4M3e8u4zWeYRZMWSoET4 ubuntu@Jenkins-Master

The key's randomart image is:

+--[ED25519 256]--+

| .+oo+ oo |

| .*++ +.o |

| . .=E+ . o |

| o .o. . |

| . S = |

| = X+ . |

| . X+o+ |

| .o+=* . |

| =*=.. |

+----[SHA256]-----+

The generated keys are stored in .ssh directory:

$ ls ~/.ssh/

authorized_keys jenkins-master jenkins-master.pub

The jenkins-master is the private key, while jenkins-master.pub is the public key. The authorized_keys file contains the public keys of authenticated clients, allowing clients with the corresponding private keys to connect to the system.

To authenticate the Jenkins-Master with the Jenkins-Agent over SSH, copy the public key we just created in the master node, jenkins-master.pub, and add it to the ~/.ssh/authorized_keys file on the Jenkins-Agent node.

# Display public key and manually copy it from the terminal file

cat ~/.ssh/jenkins-master.pub

On the Jenkins-Agent node, open the authorized_keys file and paste the content copied above in a new line:

nano ~/.ssh/authorized_keys

Save the changes (control + O + ⏎) and close the file (control + X).

Verify the key was successfully added:

$ cat ~/.ssh/authorized_keys

ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDdR/QvymrDQvgo6xyablSEK8ByLBHI4FjKcpsIyTNi6jkeusgrzvzXeQBeAUC6a5KC0gH7PiFRgA3Tb3TV1N//nougsViIGC0IO02FUqhMfy4KEm2EYJrFJv671wEJLnetzidIzoHKU672x8+MvIV8SqcxE12NlL5HLRbmC9AnhuN4Gk8XZTbCUp2dXKw8+KTJhmp/RFbYftwVMXaZEezQ84RBXUQaH+xUpc1YkJQmCvxGzxLdkOEEqo4kJSrEW/ebNMacSnj6BKEZdaKDaIXebPHRfQflVCewMjCGLASDrzhVCCNKa+qfXFMOWy3DT+cdaspSlNriDpJQP2TWlm2T jenkins-key

ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIHQVAZyFmcqoedTc8itUyHgbZRh2ohFJpkTE/uqebWUQ ubuntu@Jenkins-Master

Two keys should be displayed. The first key is the public key that allows us to SSH into the instance with the corresponding private-key from our local machine. The second key is the public key we just generated in the Jenkins-Master node, that we just added.

Before adding the Agent node to the Jenkins master, first, let's prepare it.

Preparing the Agent Node

We must install Java in the agent node as the master node uses it to manage builds and run jobs:

# Install java

sudo apt update -y

sudo apt install fontconfig openjdk-17-jre -y

Jenkins will be performing builds on this agent using Docker, so we need to install it as well:

# Install docker

sudo apt install docker.io -y

# Add your user (ubuntu) to docker group (this will grant user permission to execute docker command without admin privilages)

sudo usermod -aG docker $USER

# Reload the group membership

newgrp docker

These commands will install and add the Ubuntu user to the Docker group. This is necessary as Jenkins will assume Ubuntu user to execute tasks from the agent node.

Verify Docker is working as expected.

$ docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

c1ec31eb5944: Pull complete

Digest: sha256:d211f485f2dd1dee407a80973c8f129f00d54604d2c90732e8e320e5038a0348

Status: Downloaded newer image for hello-world:latest

Hello from Docker!

This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:

1. The Docker client contacted the Docker daemon.

2. The Docker daemon pulled the "hello-world" image from the Docker Hub.

(amd64)

3. The Docker daemon created a new container from that image which runs the

executable that produces the output you are currently reading.

4. The Docker daemon streamed that output to the Docker client, which sent it

to your terminal.

To try something more ambitious, you can run an Ubuntu container with:

$ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:

https://hub.docker.com/

For more examples and ideas, visit:

https://docs.docker.com/get-started/

The docker is working as expected.

Add Jenkins Agent to the Master Node

Login to Jenkins master from the browser and follow the following steps to add the Agent:

Go to, Manage Jenkins → Nodes → + New Node and then set:

Node Name: Jenkins-Agent

Type: Select

Permanent Agentand click Create.

Fill in the following information to the provided form:

Description: Jenkins Agent for performing build tasks.

Number of executors:

2, don't provide more than the cores of your instance (use lscpu to see executors your instance has).Remote root directory:

/home/ubuntuLabels:

Jenkins-AgentUsage: Go with,

Use this node as much as possible.Launch Method: Select

Launch Agent Via SSHfrom the dropdown.Host: Enter the public Ip address of the Jenkins-Agent, and use this command to get it (

aws ec2 describe-instances --instance-ids <agent-node-instance-id> --query "Reservations[*].Instances[*].PublicIpAddress" --output text)Credentials: Since not already set, click + Add button. When the

Jenkinsoption appears in the popup dialog, click it and follow the instructions below to set up your credential:Credentials Kind: SSH Username with private key

ID: Jenkins-Agent

Description: Jenkins_Agent

Username: ubuntu

Private Key: Select Enter directly, under Key, click

Addand paste thejenkins-masterprivate key in the provided field. You can run this command on the master node to print the private key (cat ~/.ssh/jenkins-master)Click Add to save the credential.

Now, under Credentials select the

ubuntu (Jenkins-Agent).Host Key Verification Strategy: Select

Non verifying Verifcation Strategyfrom the dropdown.Click the Save at the bottom of the page to save changes.

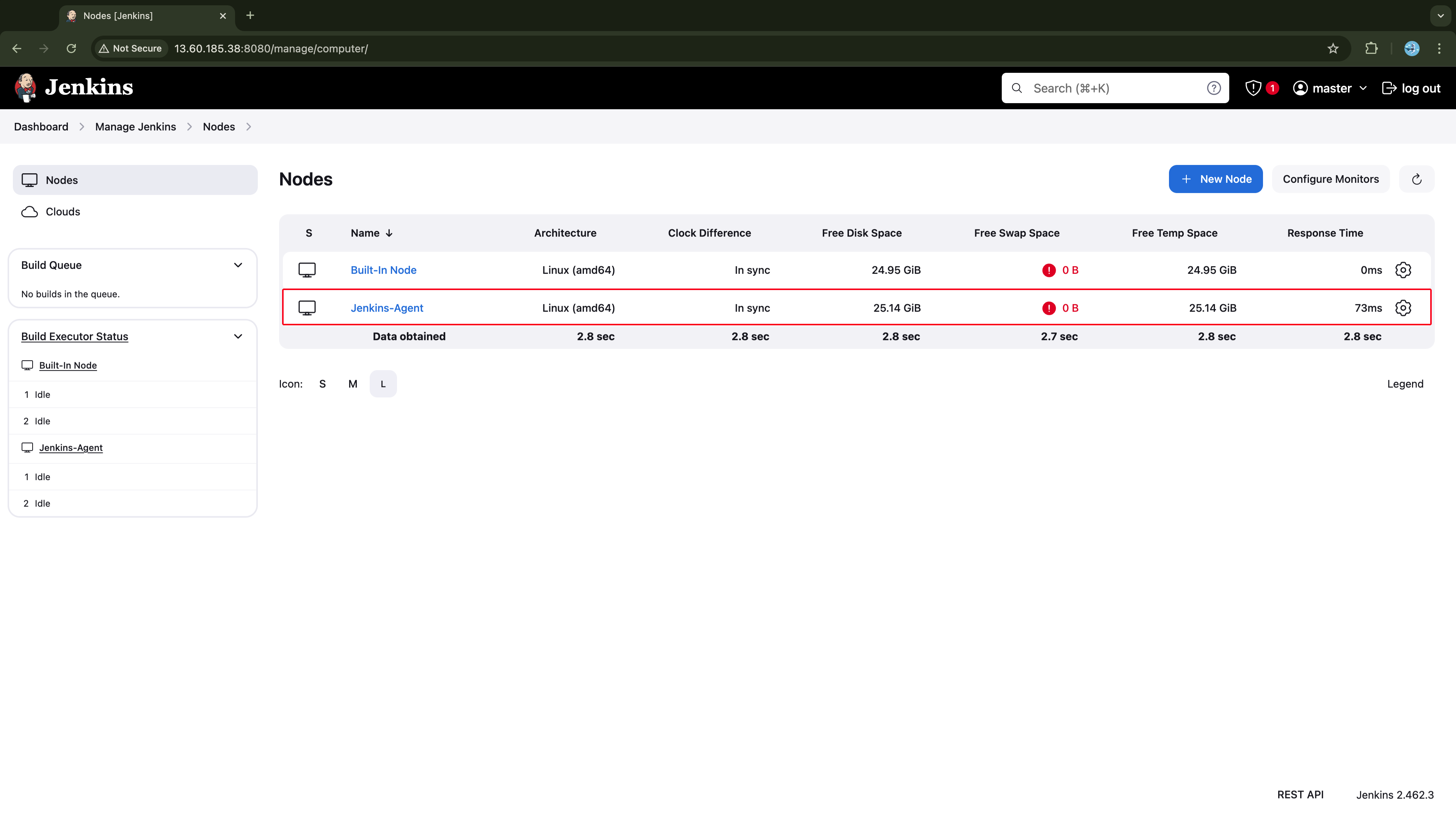

The agent node should be added to the Jenkins Master and listed under the Nodes as displayed in the screenshot below.

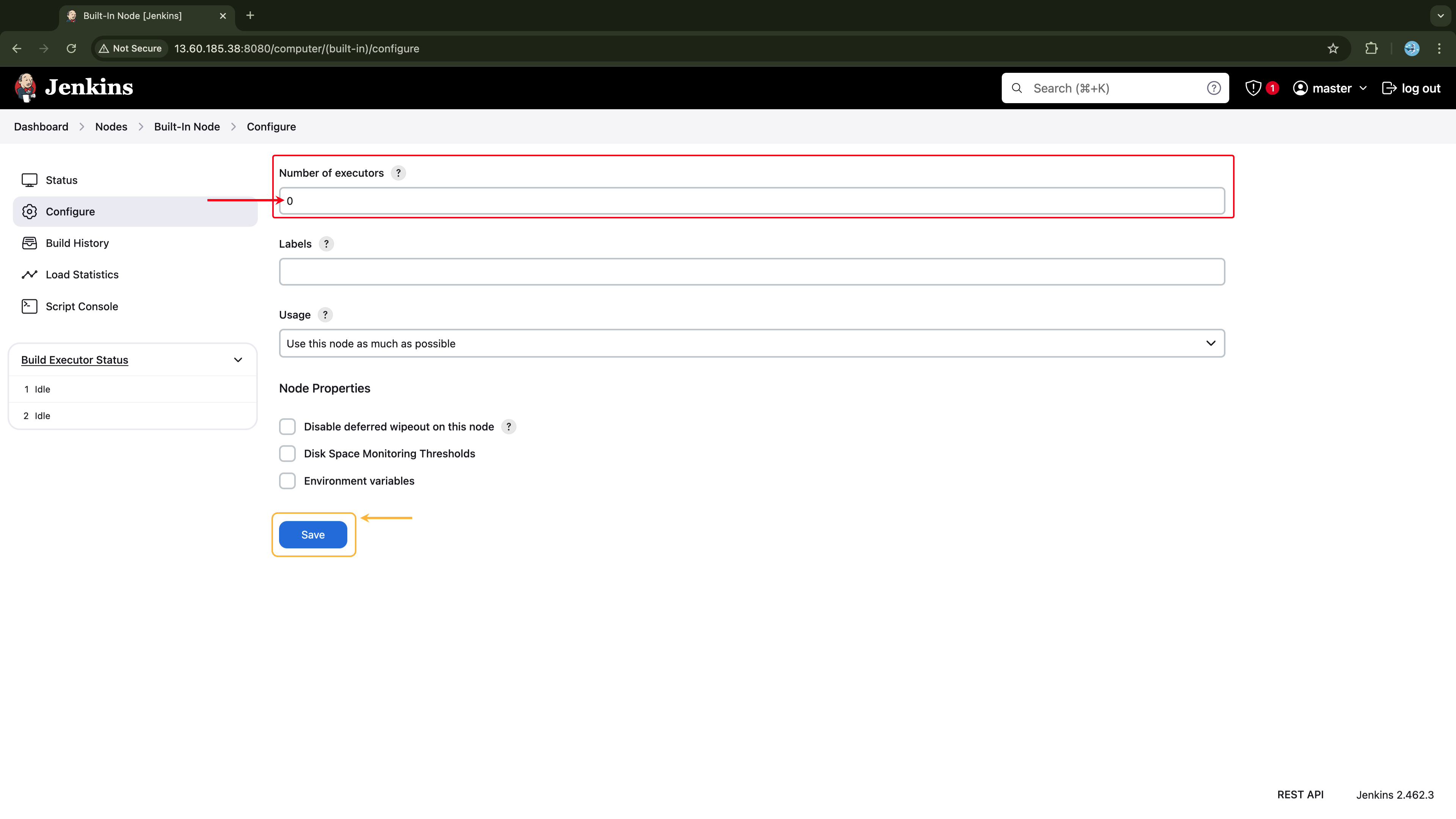

Since we don't want any jobs executed on the Jenkins-Master, let's allocate zero executors to the master node. To do this go to Manage Jenkins → Nodes → Built-In Node → Configure and set the Number of executors field to zero. Save the changes.

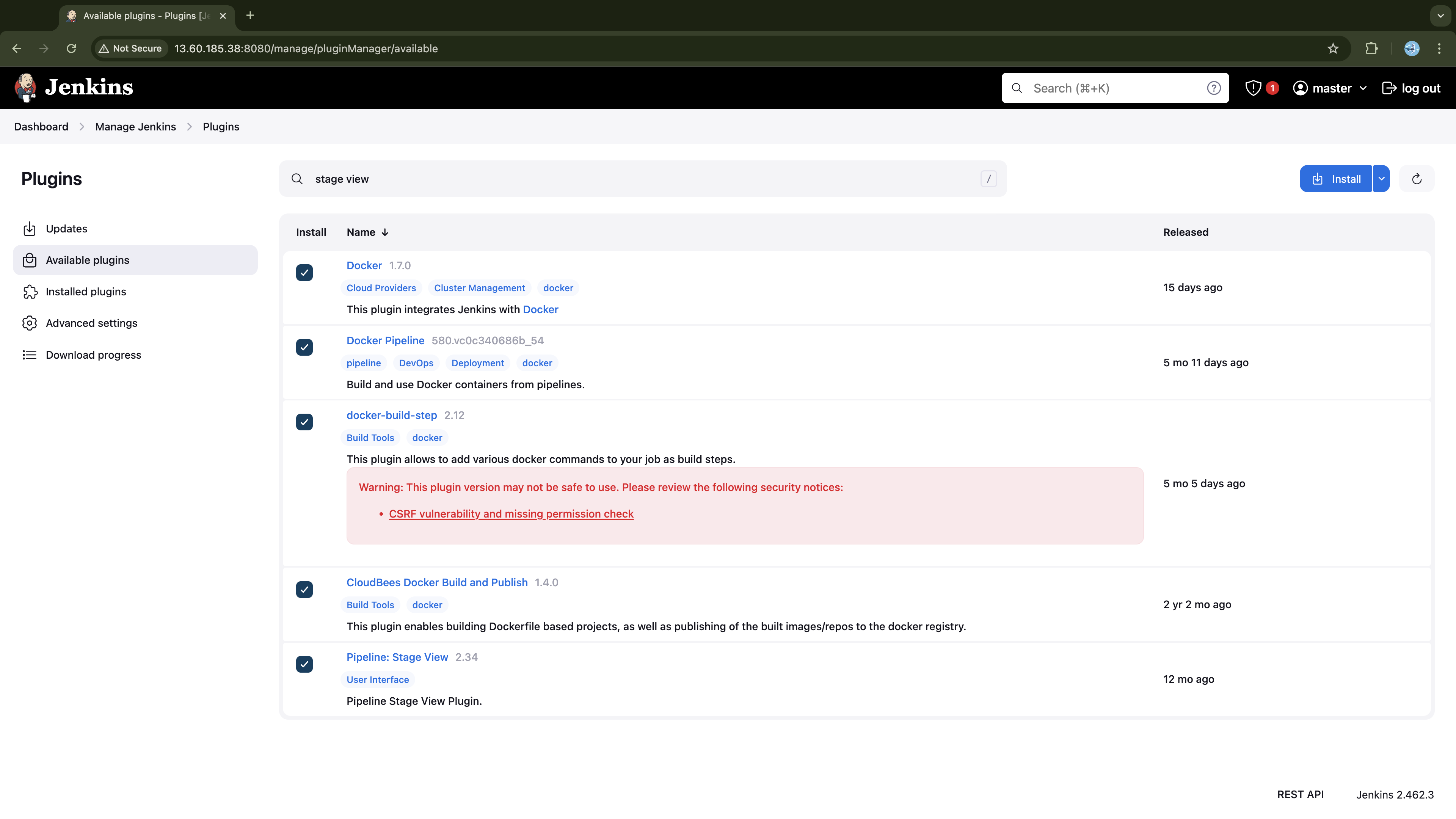

Installing plugins

Go to Manage Jenkins → Plugins → Available Plugins, search, select, and install the following plugins:

Docker

Docker Pipeline

docker-build-step

CloudBee Docker Build and Publish

Pipeline: Stage View

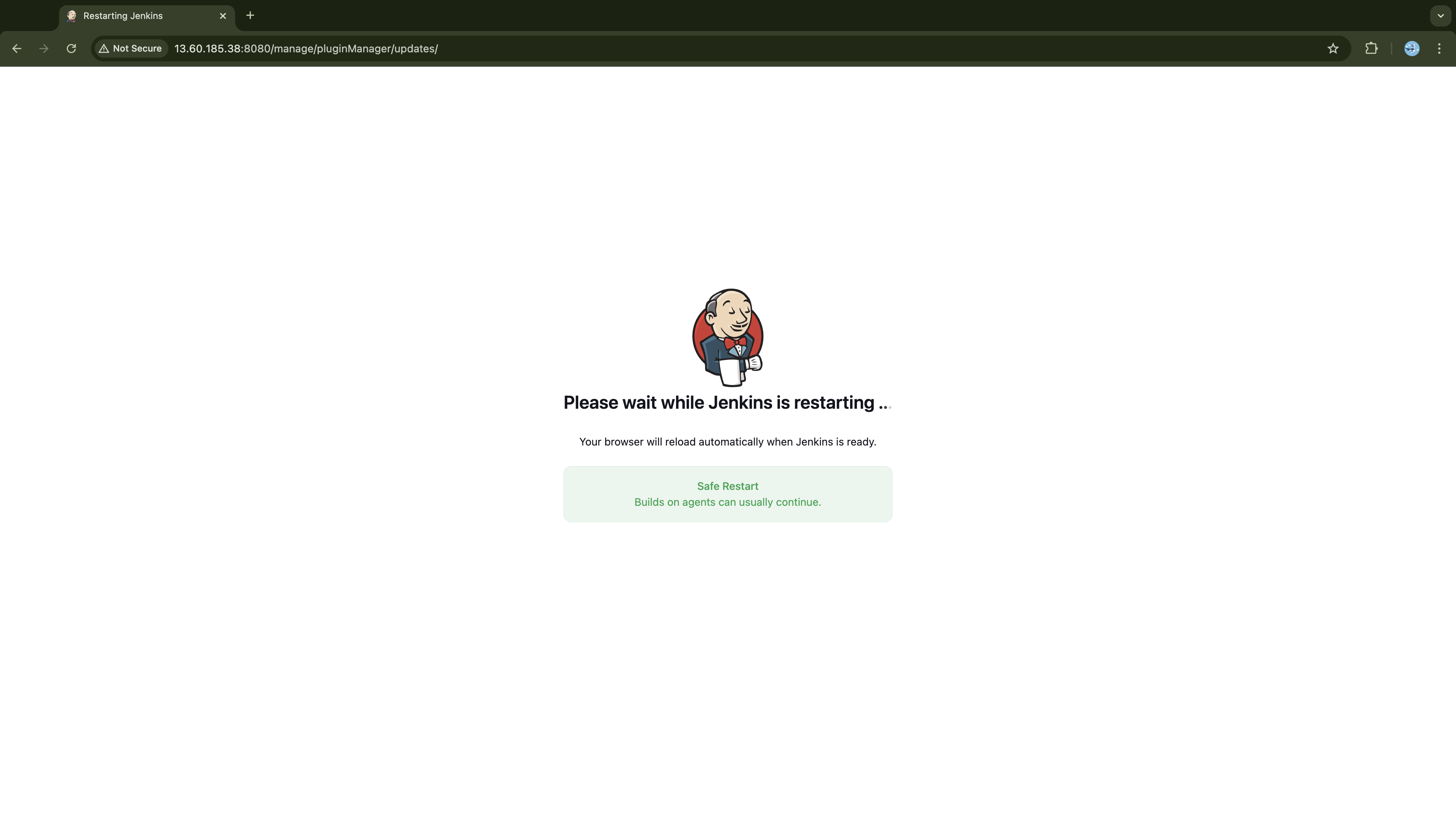

Once installed restart the Jenkins:

Now that plugins are installed, we need to configure some of them as Jenkins tools.

Configure Tools

Go to Manage Jenkins → Tools and configure Docker as a Jenkins tool by navigating to the Docker Installations section. In that section, click Add Docker and fill out the form with the following information:

Name:

dockerInstall automatically:

Download from docker.com

Docker version:

latest

Apply and Save the changes.

When our CI pipeline is run, Jenkins will require the following credentials.

DockerHub User Token: Required to push images to Docker Hub after a build.

GitHub Access Token: Needed for the pipeline to push updated Kubernetes manifests to GitHub.

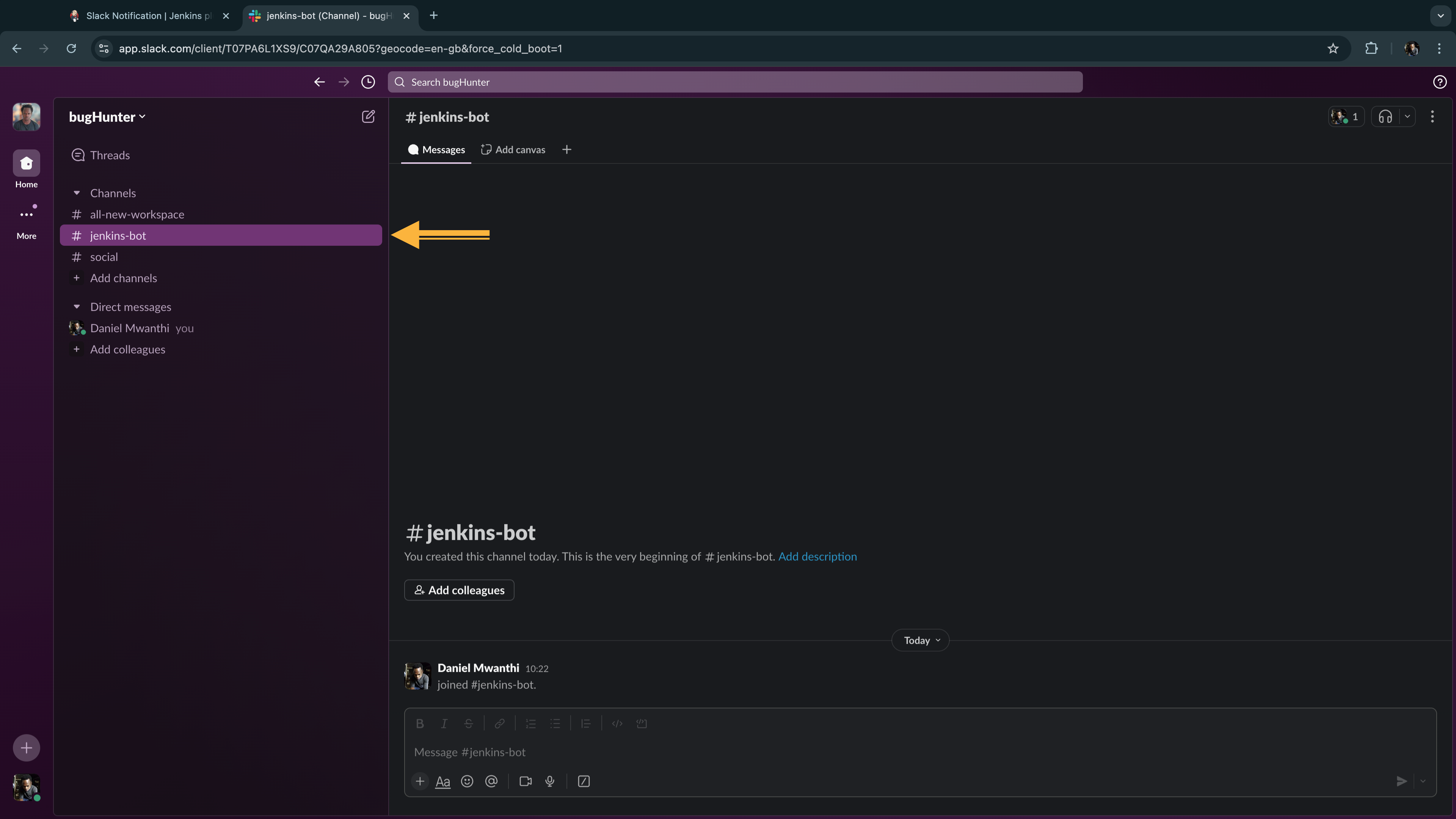

Slack Bot User Access Token: Required for the pipeline to send build status notifications to Slack.

We won't configure these now; instead, we'll address them when the need arises. For now, we're noting these pending configurations so we can proceed with an understanding that they are planned but not yet implemented.

Developing the CI pipeline

With Jenkins set up, we're ready to create a CI pipeline to build and publish our microservices' container images to DockerHub. To ensure the efficiency of our pipeline, it will manage the build process based on two scenarios: First Execution (Build No. 1) and Subsequent Executions (Build No. > 1).

First Execution (Build No = 1):

On the initial run, the pipeline will:

Clean the workspace.

Clone the repositories for microservices and Kubernetes manifests.

Identify all services in the microservices repository.

Build all identified services, tagging each image with the initial version

v0.0.1and adding them to the tracked list.Push the images to DockerHub.

Update the services' deployment manifests with the new version

v0.0.1(assuming the manifests exist).Commit and push updated manifests to the

kubernetes-manifestGitHub repository, triggering the ArgoCD to sync changes with the cluster.Send a Slack notification to the team with the pipeline’s exit status.

Subsequent Executions (Build No. > 1):

For all the following runs, the pipeline will:

Clean the workspace.

Clone the repositories for microservices and Kubernetes manifests.

Identify only the services affected by the latest commit. For each identified service:

New Service: If the service is new (not tracked), the pipeline will:

Build and tag the image with the initial version

v0.0.1.Add it to the tracked list.

Push the image to DockerHub.

Update its Kubernetes manifest to reflect the initial version

v0.0.1(assuming a manifest exists).

Updated Service: For an existing service (tracked), the pipeline will:

Retrieve its previous version and increment it (e.g., from

v0.0.1tov0.0.2).Build the service and tag with the new version.

Push the updated image to DockerHub.

Update its deployment manifest to reflect the incremented version.

Commit and push manifest updates to GitHub to trigger ArgoCD, which will sync these changes with the cluster.

Send a Slack notification with the pipeline’s exit status.

To ensure the pipeline remains concise and clear while incorporating these capabilities, we have created and organized the logic for coordinating these operations within a Jenkins shared library, which we’ll examine shortly.

Below is the Jenkins CI pipeline for building any microservice in our microservices application:

@Library("ci-library") _

pipeline {

agent { label 'Jenkins-Agent'}

environment {

INITIAL_VERSION = '0.0.1'

DOCKER_USER = 'mwanthi'

ROOT_DIR = '/home/ubuntu'

JOB_DIR = "${ROOT_DIR}/workspace/${env.JOB_NAME}"

MICRO_REPO = "${ROOT_DIR}/workspace/${env.JOB_NAME}/microservices-app"

SER_SRC_DIR = "${MICRO_REPO}/src"

KUBE_MANIFESTS_DIR = "${JOB_DIR}/kubernetes-manifests"

SER_TRACKING_DIR = "${ROOT_DIR}/track/${env.JOB_NAME}" // we could store this file externally (s3)

SER_TRACKING_FILE = "${SER_TRACKING_DIR}/existingServices.txt"

GITHUB_USER = 'D-Mwanth'

GITHUB_USER_EMAIL = 'dmwanthi2@gmail.com'

MICRO_REPO_URL = 'https://github.com/D-Mwanth/microservices-app.git'

MANIFESTS_REPO_URL = 'https://github.com/D-Mwanth/kubernetes-manifests.git'

BUILD_BRANCH = 'main'

}

stages {

stage('Cleanup Workspace') {

steps {

cleanWs()

}

}

stage('Checkout Microservices & K8s Manifests') {

steps {

script {

// Clone the microservices-app repository

dir('microservices-app') {

git branch: "${BUILD_BRANCH}", url: "${MICRO_REPO_URL}"

}

// Clone the kubernetes-manifests repository

dir('kubernetes-manifests') {

git branch: "${BUILD_BRANCH}", url: "${MANIFESTS_REPO_URL}"

}

}

}

}

stage('Determine Services to Build') {

steps {

script {

def servicesToBuild = determineServices(env.BUILD_NUMBER.toInteger(), MICRO_REPO)

echo "Services to Build: ${servicesToBuild}"

// Pass servicesToBuild to the environment for use in the next stage

env.SERVICES_TO_BUILD = servicesToBuild.join(',')

}

}

}

stage('Build or Rebuild Services') {

when {

expression { return env.SERVICES_TO_BUILD != '' }

}

steps {

script {

// Create services tracking dir and file if don't exist

sh "mkdir -p ${SER_TRACKING_DIR}"

sh "touch ${SER_TRACKING_FILE}"

// Set read, write, and execute permissions for all users on the manifest directory

sh 'chmod -R 777 ${KUBE_MANIFESTS_DIR}'