Introduction and Installation of Docker Container

Akash Sutar


Introduction

In this blog, we will explore Docker, a powerful containerization tool and a key development in application packaging. Docker changed the way applications are developed, shipped, and deployed by letting developers package code, dependencies, and configuration into isolated containers. This approach ensures that applications run consistently across diverse environments, from a developer's local machine to testing and production servers. By simplifying deployment and scaling, Docker has become a foundational technology in modern DevOps, helping teams build, deploy, and manage applications quickly and efficiently.

Before we delve into the ocean of containerization, let’s first understand some of the basics of virtualization.

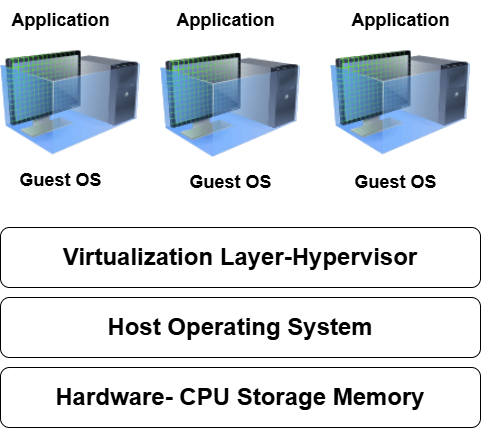

What is Virtualization?

Virtualization is a technology that lets us run multiple virtual machines (VMs) on top of a single physical machine. It divides the physical resources, such as CPU, storage, and RAM, among the VMs, each of which runs independently on the same hardware with its own operating system and applications.

How It Works:

Virtualization relies on a hypervisor, a software layer that sits between the hardware and virtual machines. The hypervisor allocates physical resources (like CPU, memory, and storage) to each VM and manages their operation. There are two main types of hypervisors:

Type 1 Hypervisors (Bare-metal): These run directly on the physical hardware. Examples include VMware ESXi, Microsoft Hyper-V, and KVM.

Type 2 Hypervisors (Hosted): These run on top of a host operating system. Examples include VirtualBox and VMware Workstation.
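Since we will later install Docker on an AWS EC2 instance, which (bare-metal instance types aside) is itself a VM running on a type 1 hypervisor, here is a small check we can run on any Linux machine to see whether it is a guest VM. This is a sketch under assumptions: the kernel reports the `hypervisor` CPU flag on x86 guests only (not, for example, on ARM-based instances), and `systemd-detect-virt` is available, as it is on systemd-based distributions such as Ubuntu.

```bash
#!/usr/bin/env bash
# Check whether this Linux machine is itself a virtual machine (e.g. an EC2 instance).
# Assumptions: x86 Linux for the CPU-flag check; systemd-detect-virt installed (Ubuntu ships it).
set -euo pipefail

# On x86, the kernel lists the "hypervisor" CPU flag when it runs as a guest.
if grep -qw hypervisor /proc/cpuinfo; then
  inside_vm=yes
else
  inside_vm=no
fi
echo "hypervisor CPU flag present: $inside_vm"

# Prints the detected virtualization technology, or "none" on bare metal
# (in which case it exits non-zero, hence the || true).
systemd-detect-virt || true
```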

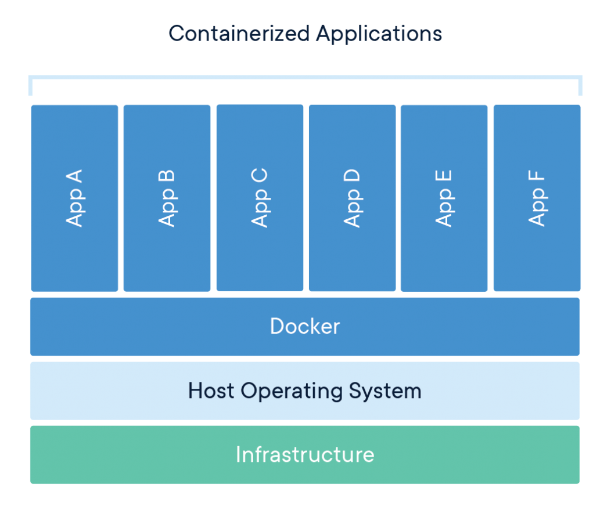

What is Containerization?

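Containerization is a form of OS-level virtualization used to deploy and run distributed applications without launching an entire virtual machine for each application. Instead of virtualizing hardware, containers share the host operating system's kernel and are isolated from one another by features of that kernel (on Linux, namespaces and cgroups).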

Benefits of using containers

Containers address one of the most common problems developers face when sharing code: code that runs perfectly in the developer's environment fails when run in another environment. With containers, the code is shared together with its dependencies in a very lightweight package, so it behaves the same wherever it runs.

Unlike virtual machines, containers do not each boot a full guest operating system, so they consume far less CPU, memory, and disk space.

They are lightweight and therefore easy to move between machines and environments.

What is Docker?

Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker's methodologies for shipping, testing, and deploying code, you can significantly reduce the delay between writing code and running it in production.

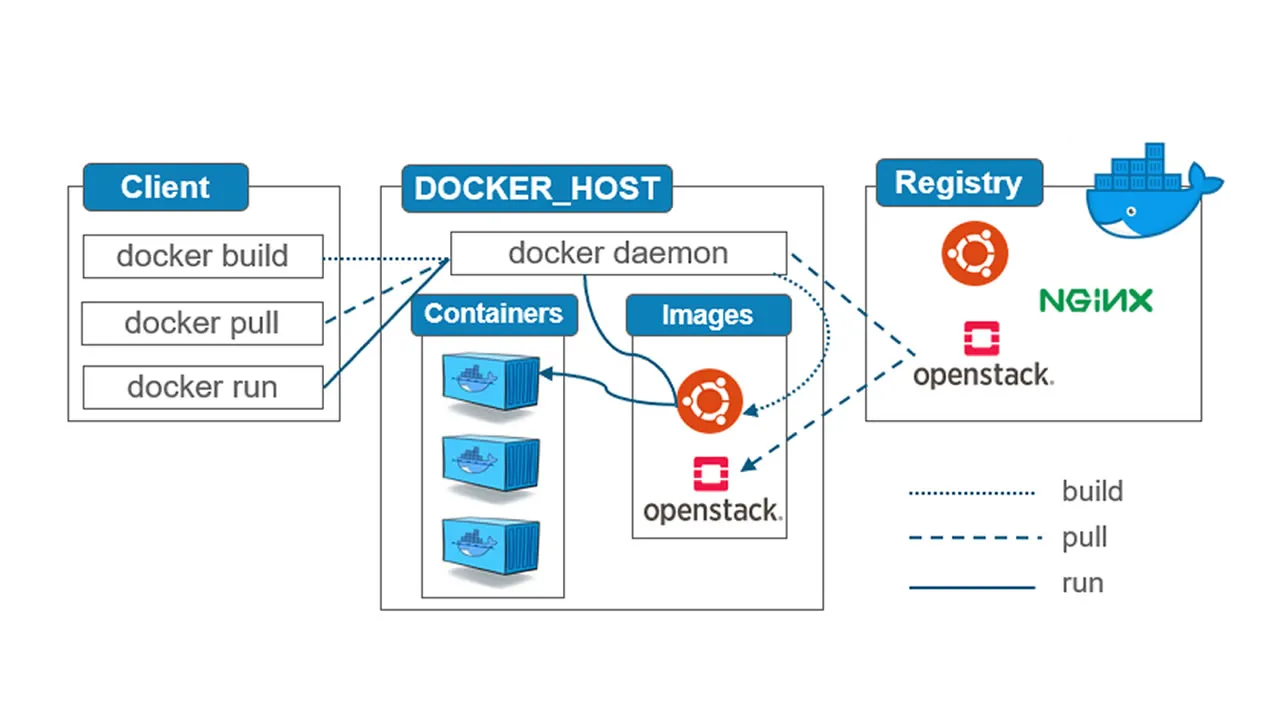

Docker Architecture

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing our Docker containers. The Docker client and daemon can run on the same system, or we can connect a Docker client to a remote Docker daemon. The Docker client and daemon communicate using a REST API, over UNIX sockets or a network interface. Another Docker client is Docker Compose, which lets us work with applications consisting of a set of containers.

Components of Docker

Docker Hub

Docker Hub is the central public registry for Docker images.

We can store our own custom images on it for free; however, images in public repositories are visible to everyone.

Docker Engine

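Docker Engine is the heart of the Docker ecosystem. It builds images and manages our container runtimes, and it relies on the kernel of the underlying host OS (on Linux, namespaces and cgroups) to isolate containers instead of running a separate OS for each one.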

Docker Images

Docker images are read-only templates that contain instructions for creating a container. A Docker image is a snapshot or blueprint of the libraries and dependencies required inside a container for an application to run.

Docker images are made up of multiple stacked layers, each changing something in the environment. For example, to build a web server image, we can start with an image that includes Ubuntu Linux, and then add packages like Apache and PHP on top.

Docker Containers

A Docker container is a lightweight, standalone package that contains everything an application needs to run, including code, libraries, system tools, and runtime. Docker containers are used to build, test, and deploy applications quickly. Each container is created from a Docker image.

Docker Volumes

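By default, data written inside a container lives in the container's writable layer and is lost when the container is removed. To persist data, we can use Docker volumes, which are stored outside the container's filesystem. A Docker volume can be mounted into multiple containers simultaneously. If a named volume referenced by a container does not exist yet, Docker creates it automatically when the container is created.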

Docker Daemon and Client (CLI)

The Docker Daemon is a background process running on our host machine that takes care of managing Docker objects, such as images, containers, networks, and volumes. It performs the heavy lifting involved in building, running, and managing Docker containers.

Role: The daemon handles all Docker commands and requests it receives from the Docker CLI or other clients of the Docker API.

Process: Known as `dockerd`, it’s responsible for creating and running containers, pulling images, and managing Docker resources.

Communication: The Docker Daemon exposes a REST API, by default on a UNIX socket and optionally on a network interface, allowing the Docker CLI or other clients to communicate with it.

The Docker CLI is the command-line interface we use to interact with Docker. It allows us to issue commands to manage containers, images, volumes, and networks with a simple and consistent syntax.

How They Work Together

Command Input: When we enter a command using the Docker CLI (e.g., `docker run`), the CLI translates this command into an API request.

Request Handling: The CLI sends the request to the Docker Daemon, which processes the command.

Execution: The Docker Daemon performs the requested actions, such as pulling an image, creating a container, or managing Docker resources.

Response: The Docker Daemon sends feedback to the CLI, which displays the output for us to see.

In short, the CLI is the user-facing component: it translates our commands into API calls and sends them to the Docker Daemon for processing.

Common Commands:

`docker run`: Creates and starts a container from a specified image.

`docker pull`: Downloads an image from Docker Hub or another registry.

`docker build`: Builds an image from a Dockerfile.

`docker ps`: Lists all running containers.

`docker stop` / `docker start`: Stops or starts containers.

Installation of Docker

We will install Docker on an AWS EC2 instance running an Ubuntu AMI.

The complete steps are available in Docker's official installation guide for Ubuntu.

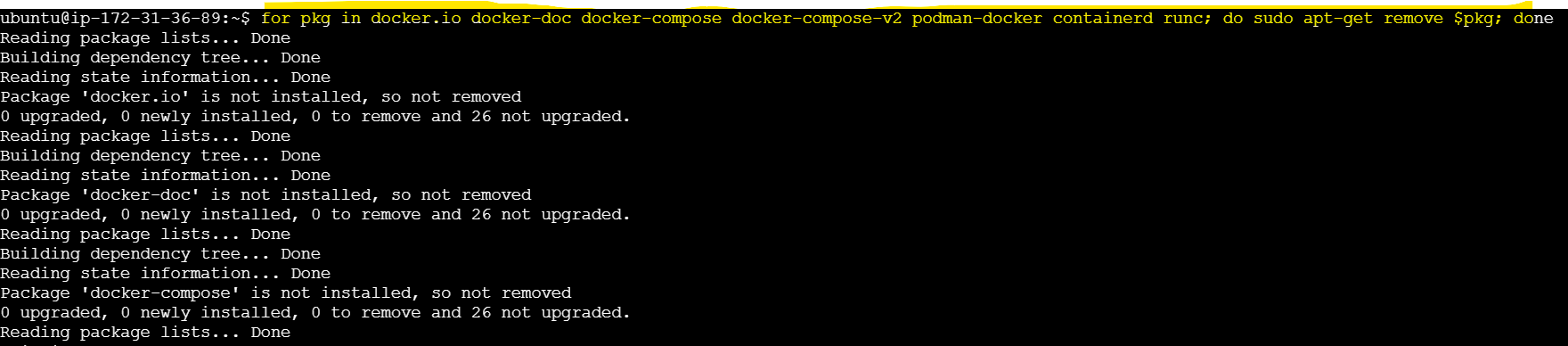

Uninstall older versions, if any:

```bash
for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done
```

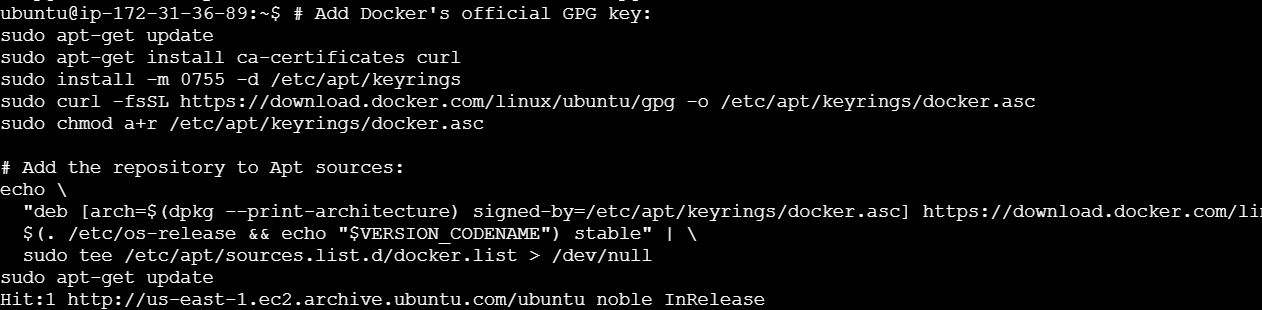

Setting up Docker's `apt` repository:

```bash
# Add Docker's official GPG key:
sudo apt-get update
sudo apt-get install ca-certificates curl
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update
```

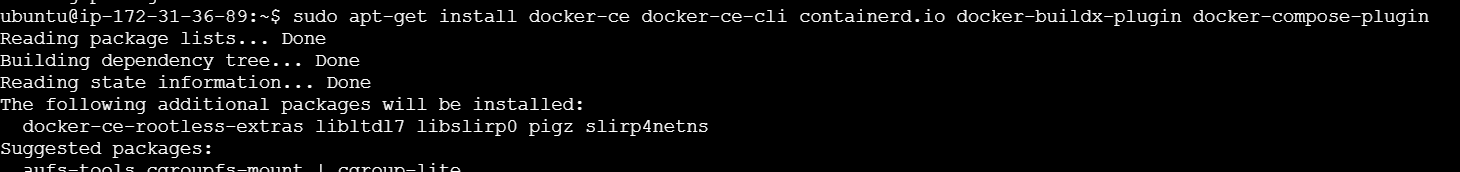

Install the Docker packages:

```bash
sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
```

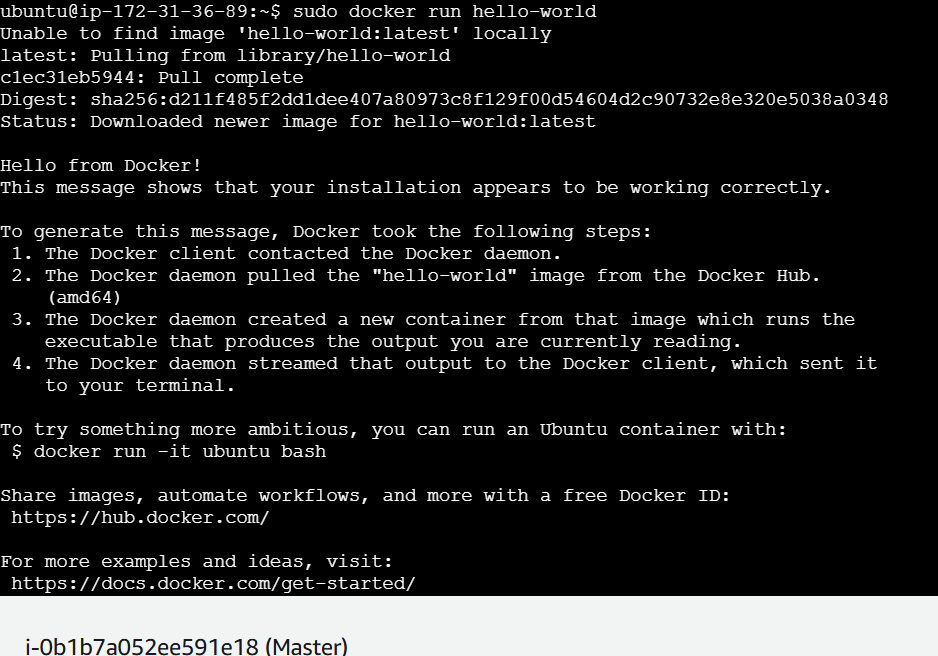

Verifying the Docker Engine installation

Common Docker Commands

The following are commonly used Docker commands:

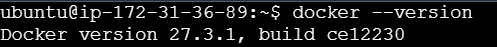

`docker --version`: Checks which version of Docker is installed.

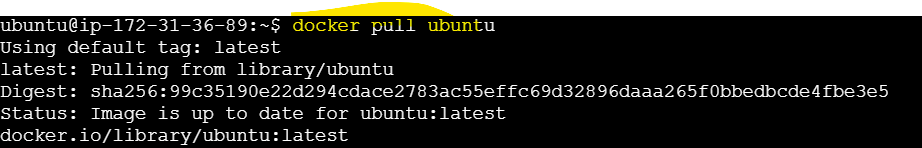

`docker pull <image_name>`: Pulls an image from a remote Docker registry (Docker Hub by default).

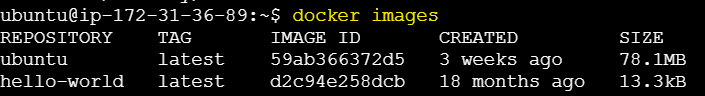

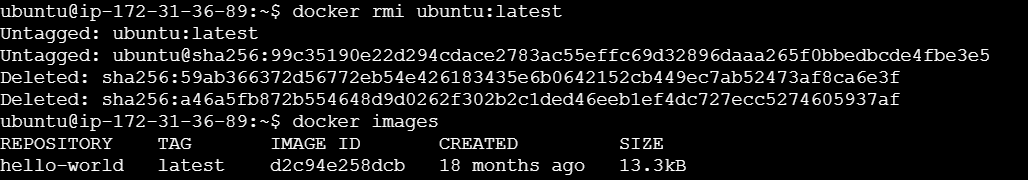

`docker images`: Lists all Docker images stored locally.

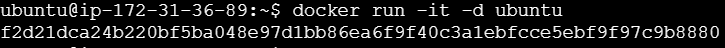

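`docker run -it -d ubuntu`: Runs a container from the `ubuntu` image in detached mode (`-d`). The `-it` flags keep an interactive terminal open, so the container's default shell stays alive in the background instead of exiting immediately.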

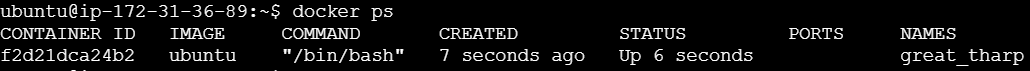

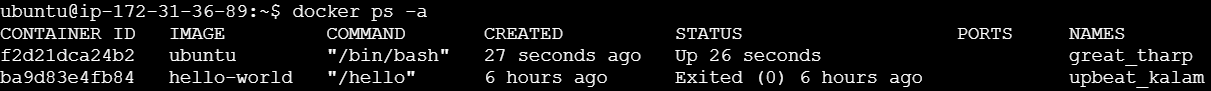

`docker ps`: Lists the running containers.

`docker ps -a`: Lists all containers, including stopped ones.

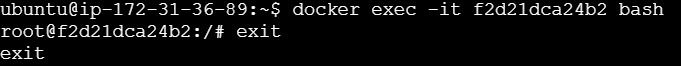

`docker exec -it f2d21dca24b2 bash`: Executes a command inside a running container; here it opens an interactive bash shell in the container with ID f2d21dca24b2.

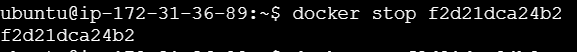

`docker stop <container_id>`: Stops a running container.

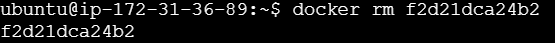

`docker rm <container_id>`: Removes a stopped container.

`docker rmi ubuntu:latest`: Removes an image from the system.

Conclusion

In this article, we have seen what containerization is and how containers can outperform traditional virtual machines in resource usage. Docker, one such containerization tool, helps us simplify application development. We covered key components such as Docker Hub, Docker Engine, Docker images, containers, volumes, and the Docker Daemon and CLI. Further, we saw how Docker is installed on an Ubuntu-based AWS EC2 instance. Docker is an essential technology in modern DevOps, offering consistent application behavior across environments. We will dive deeper into the containerization world in upcoming blogs.
