Important Cheatcode of AWS

Ashwin

Name 5 AWS services you have used and what are the use cases?

Here are five AWS services I have used and their use cases:

Amazon EC2 (Elastic Compute Cloud): It provides scalable compute capacity in the cloud. I have used it to launch and manage virtual machines (EC2 instances) for various purposes such as web hosting and data processing.

Amazon S3 (Simple Storage Service): It is an object storage service used to store and retrieve any amount of data from anywhere on the web. I have used it for hosting static websites, backup and archiving, and data lakes.

AWS IAM (Identity and Access Management): It is a web service that helps you securely control access to AWS resources. You use IAM to control who is authenticated (signed in) and authorized (has permissions) to use resources.

Amazon CloudWatch: It is a monitoring and observability service for AWS resources and applications. CloudWatch can be used to monitor services like EC2, RDS, S3, and Lambda, as well as custom metrics generated by your applications.

Amazon RDS (Relational Database Service): It is a managed database service that makes it easy to set up, operate, and scale a relational database in the cloud. I have used it to create and manage MySQL databases for web applications. It offers high availability and automated backups for your application's database.

The following database engines are available in RDS:

• Aurora

• Oracle

• MySQL

• PostgreSQL

• MariaDB

• SQL Server

What are the tools used to send logs to the cloud environment?

Commonly used tools for sending logs to the cloud include:

Amazon CloudWatch Logs: Collects and monitors log data.

AWS CloudTrail: Records AWS API calls for your account and delivers log files.

What are IAM Roles? How do you create /manage them?

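An IAM role is different from an IAM user: it has no long-term password or access keys of its own. Instead, it carries a set of permissions and is assumed by a trusted entity, which then receives temporary credentials. You typically use roles to grant permissions to AWS services, to workloads running outside of AWS, or to users and services in other AWS accounts.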

To create/manage IAM Roles:

Go to the IAM Console.

Choose "Roles" in the navigation pane.

Select "Create Role" and follow the wizard to define the role's permissions, trusted entities, and tags.
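As a rough illustration of what the wizard's "trusted entities" step produces, the choice ends up as a JSON trust policy attached to the role. The sketch below builds one for the EC2 service using only the standard library. The helper name `build_trust_policy` is hypothetical (it is not an AWS API), and actually creating the role through the console, CLI, or an SDK is left out.

```python
import json


def build_trust_policy(service_principal: str) -> dict:
    """Return a trust policy that lets one AWS service assume the role.

    `build_trust_policy` is an illustrative helper, not an AWS API.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service_principal},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Trust policy that lets EC2 instances assume the role.
trust_policy = build_trust_policy("ec2.amazonaws.com")
print(json.dumps(trust_policy, indent=2))
```

The permissions policy (what the role may do) is a separate document; the trust policy only answers who may assume the role.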

Can we recover the EC2 instance when we have lost the key?

If you have lost the key pair used to authenticate with an EC2 instance, you cannot recover or regain access to the instance using that key.

However, you can still regain access in several ways: -

Recover the Original Key Pair: If you have a backup of the private key or can retrieve the lost key file, you can use it to connect as before.

Create a New EC2 Instance: If you can't recover the original key pair, you can create an AMI of the instance and launch a new one from it with a new key pair.

Access via EC2 Instance Connect (Linux Instances): In some cases, for Linux instances that have EC2 Instance Connect set up and an IAM identity permitted to use it, you can push a temporary public key to the instance metadata and log in with the matching private key.

How do you upgrade or downgrade a system with zero downtime?

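To upgrade or downgrade a system with zero downtime, you can use techniques such as blue-green deployment, rolling deployment, or canary deployment. Blue-green deployment creates a duplicate environment, deploys the updated version there, and switches traffic over once it is healthy. Rolling deployment replaces instances in batches so some capacity is always serving. Canary deployment sends a small share of traffic to the new version first and gradually shifts the rest from the old environment to the new one.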
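The gradual traffic shift can be pictured as a schedule of routing weights. This is only a minimal sketch: `canary_schedule` is a made-up helper, the 25% step is an arbitrary assumption, and a real rollout would check health metrics between steps before moving on.

```python
def canary_schedule(step_percent: int) -> list[tuple[int, int]]:
    """Return (old_weight, new_weight) pairs that shift traffic in steps."""
    if not 0 < step_percent <= 100:
        raise ValueError("step_percent must be between 1 and 100")
    schedule = []
    new_weight = 0
    while new_weight < 100:
        # Never overshoot 100% on the final step.
        new_weight = min(100, new_weight + step_percent)
        schedule.append((100 - new_weight, new_weight))
    return schedule


for old, new in canary_schedule(25):
    print(f"old environment: {old}%  new environment: {new}%")
```

Rolling back at any step is just a matter of setting the weights back to 100/0, which is why the old environment is kept running until the shift completes.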

The main ways an application communicates with the outside world

🔹 1. Public IP or DNS

- Direct access via public IP or domain (e.g., app.example.com)

🔹 2. Load Balancer

- Routes external traffic to internal services (e.g., AWS ELB)

🔹 3. NAT Gateway

- Enables outbound internet access for private apps (no public IP)

🔹 4. Reverse Proxy / API Gateway

- Intercepts requests and forwards them (e.g., Nginx, AWS API Gateway)

🔹 5. Kubernetes Services

- NodePort, LoadBalancer, and Ingress expose pods to external traffic

🔹 6. Open Ports & Protocols

- App listens on specific TCP/UDP ports (e.g., 80 for HTTP, 443 for HTTPS)

🔹 7. DNS & Service Discovery

- Resolves names to IPs; used for locating services

🔹 8. Messaging Queues & Event Systems

- Communicates via Kafka, RabbitMQ, AWS SQS for async data transfer

🔹 9. CDN / Edge Services

- Distribute and cache content closer to users (e.g., CloudFront)

What is a load balancer? Give scenarios of each kind of balancer based on your experience.

A load balancer distributes incoming network traffic across multiple servers to ensure no single server bears too much load. There are Application Load Balancers (ALBs) for HTTP/HTTPS traffic and Network Load Balancers (NLBs) for TCP/UDP traffic.

Scenario:

ALB: Distributes web traffic to multiple EC2 instances for a scalable web application.

NLB: Ensures even distribution of TCP traffic for a reliable and fault-tolerant application.

GWLB (Gateway Load Balancer): Routes traffic through virtual appliances deployed in your VPC (Virtual Private Cloud), such as firewalls and intrusion detection systems.
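To make "distributes traffic across multiple servers" concrete, here is a toy round-robin distributor. It is a simplified sketch, not how any AWS load balancer is implemented: the instance IDs are hypothetical, and real load balancers also take health checks and other routing algorithms into account.

```python
from collections import Counter
from itertools import cycle


def round_robin(targets: list[str], request_count: int) -> list[str]:
    """Assign each incoming request to the next target in turn."""
    rotation = cycle(targets)
    return [next(rotation) for _ in range(request_count)]


# Hypothetical instance IDs used only for this illustration.
assignments = round_robin(["i-aaa", "i-bbb", "i-ccc"], 7)
print(Counter(assignments))
```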

If you're using load balancing in 2 availability zones, which load balancer should you use?

Use an Application Load Balancer (ALB) or a Network Load Balancer (NLB) depending on your needs:

ALB: For HTTP/HTTPS traffic with advanced routing features.

NLB: For high-performance TCP/UDP traffic with very low latency.

Both support cross-zone load balancing.

What is CloudFormation and why is it used?

AWS CloudFormation is a service that allows you to define and provision infrastructure as code, enabling you to create, update, and manage AWS resources in a declarative and automated way. You can spend less time managing resources and more time focusing on your applications. It's a tool that helps you create and manage all the different pieces of your cloud setup—like virtual servers, databases, storage, and more—without manually clicking around in the AWS console every time you need something new.
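For a feel of what "infrastructure as code" looks like, the sketch below assembles a minimal CloudFormation template as a Python dictionary and prints it as JSON. The logical ID `ExampleBucket` and the description are placeholders chosen for this example; deploying the template (console, CLI, or SDK) is not shown.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Minimal example: one S3 bucket",
    "Resources": {
        # "ExampleBucket" is a logical ID chosen for this sketch.
        "ExampleBucket": {
            "Type": "AWS::S3::Bucket",
            "Properties": {
                "VersioningConfiguration": {"Status": "Enabled"}
            },
        }
    },
}

template_body = json.dumps(template, indent=2)
print(template_body)
```

Because the template is declarative, changing a property and updating the stack lets CloudFormation work out what needs to change, rather than you clicking through the console.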

How do you access the data on EBS in AWS?

Data on EBS cannot be accessed directly through a graphical interface in AWS. To access it, you attach the EBS volume to an EC2 instance.

Once the volume is attached to an instance, either Windows or Unix, you can read from and write to it. You can also take a snapshot of a volume that holds data and create new volumes from that snapshot. By default, each EBS volume can be attached to only a single instance at a time.

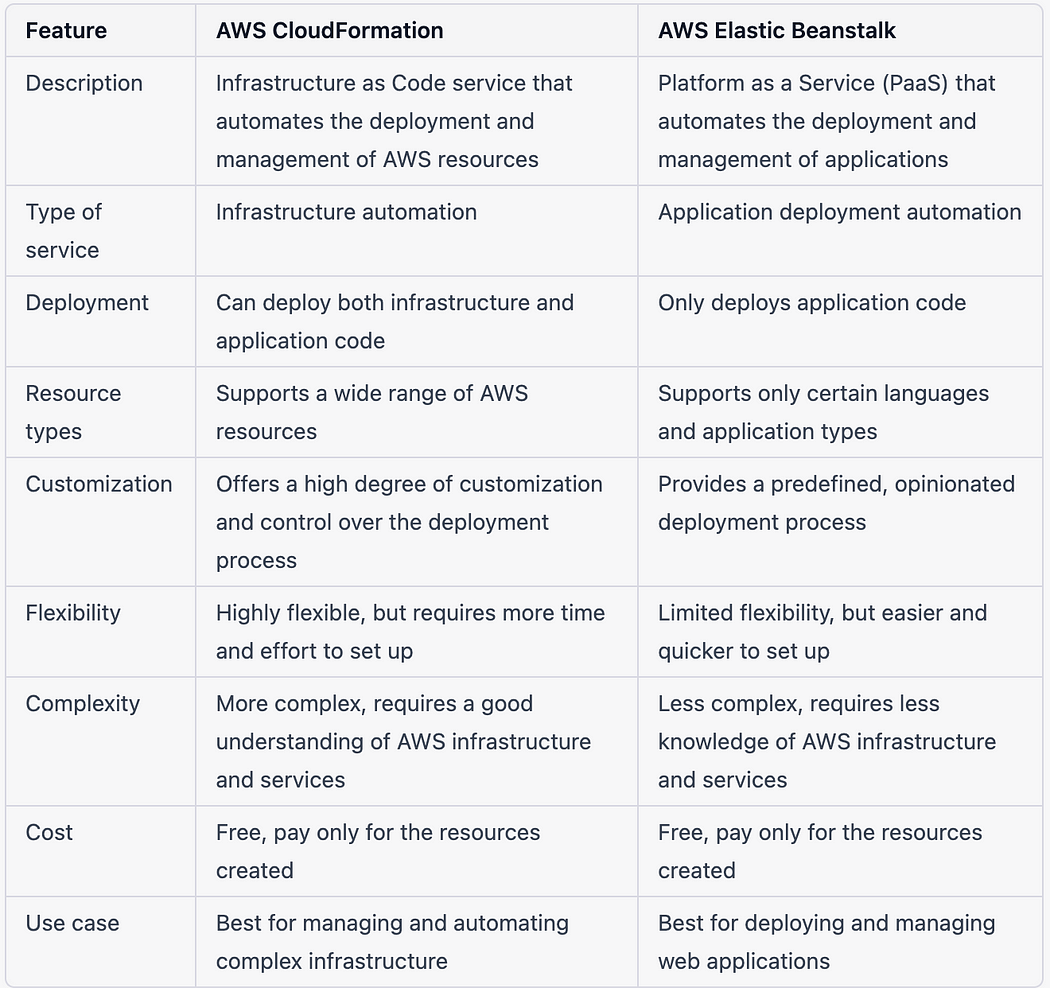

Difference between AWS CloudFormation and AWS Elastic Beanstalk?

CloudFormation: AWS CloudFormation is a service that allows you to define and provision infrastructure as code, enabling you to create, update, and manage AWS resources in a declarative and automated way. You can spend less time managing resources and more time focusing on your applications.

Elastic Beanstalk: AWS Elastic Beanstalk is a platform-as-a-service (PaaS) offering that simplifies application deployment and management. It handles infrastructure provisioning, deployment, monitoring, and scaling, allowing developers to focus on writing code.

It is the fastest and simplest way to deploy your application on AWS using either AWS Management Console, a Git repository, or an integrated development environment (IDE).

What are the kinds of security attacks that can occur on the cloud? And how can we minimize them?

Common security attacks include DDoS attacks, data breaches, and unauthorized access. To minimize them, employ measures such as:

* Regularly updating and patching systems.

* Using firewalls and security groups.

* Implementing encryption for data at rest and in transit.

* Monitoring and auditing activities with services like AWS CloudTrail.

How do you handle data encryption in AWS?

Data encryption in AWS can be handled using services like AWS Key Management Service (KMS), AWS CloudHSM for hardware-based key storage and cryptographic operations, and encryption features available in other AWS services such as Amazon S3, Amazon RDS, and Amazon EBS.

What is AWS API Gateway, and how is it used?

AWS API Gateway is a fully managed service for creating, deploying, and managing APIs at scale. It allows you to expose backend services as RESTful APIs, WebSocket APIs, or HTTP APIs and provides features such as request/response transformations, authorization, throttling, and monitoring.

What is a gateway?

In AWS, a gateway is a network component that connects different networks. For example, an Amazon VPC (Virtual Private Cloud) can be connected to an on-premises network through a virtual private gateway, the AWS side of a Site-to-Site VPN connection.

Are you only using CloudWatch for monitoring?

No, CloudWatch is one of several tools for monitoring in AWS. You can also use:

AWS X-Ray: For distributed tracing.

CloudTrail: For API call monitoring and auditing.

Third-party tools: Like Datadog, New Relic, or Prometheus for advanced monitoring and alerting.

What is AWS ECS (Elastic Container Service), and how does it compare to AWS EKS (Elastic Kubernetes Service)?

AWS ECS is a fully managed container orchestration service for Docker containers, allowing you to run, stop, and manage containerized applications. AWS EKS is a managed Kubernetes service that simplifies the deployment, management, and scaling of Kubernetes clusters. ECS is tightly integrated with other AWS services, while EKS provides more flexibility and compatibility with the Kubernetes ecosystem.

What is offered under Messaging services by Amazon?

Amazon offers various messaging services. They are:

Amazon Simple Notification Service (SNS): A fully managed, secure, highly available messaging service that helps decouple serverless applications, microservices, and distributed systems. You can get started with SNS within minutes from the AWS Management Console, the command-line interface, or a software development kit.

Amazon Simple Queue Service (SQS): A fully managed message queue for serverless applications, microservices, and distributed systems. SQS FIFO queues add exactly-once processing and preserve the exact order in which messages are sent.

Amazon Simple Email Service (SES): Sends and receives email for transactional, notification, and marketing correspondence, and is available to cloud customers through an SMTP interface.

Can we recover the EC2 instance when we have lost the key?

Yes, you can recover an EC2 instance when you have lost the key by creating an Amazon Machine Image (AMI) of the instance, launching a new instance from the AMI, and specifying a new key pair.

What is VPC Peering and how does it work?

VPC Peering is a networking connection between two Virtual Private Clouds (VPCs) that enables traffic routing between them using private IP addresses. VPC Peering allows resources in each VPC (like EC2, RDS) to communicate as if they are within the same network.

How it works:

You create a peering connection between two VPCs.

You must update the route tables of both VPCs to allow traffic to flow.

The traffic stays on AWS's private network; no public internet involved.

It is non-transitive — if A peers with B and B with C, A cannot access C.
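The non-transitive rule is easy to get wrong, so here is a tiny model of it. This is a sketch under simplifying assumptions: the VPC names are hypothetical, and route tables and security groups are assumed to already allow the traffic on every peering connection.

```python
def can_route(peerings: set[frozenset[str]], src: str, dst: str) -> bool:
    """Two VPCs can exchange traffic only over a direct peering connection."""
    return src == dst or frozenset({src, dst}) in peerings


# A peers with B, and B peers with C. There is no A-to-C peering.
peerings = {frozenset({"vpc-a", "vpc-b"}), frozenset({"vpc-b", "vpc-c"})}

print(can_route(peerings, "vpc-a", "vpc-b"))
print(can_route(peerings, "vpc-a", "vpc-c"))
```

Note that the function deliberately does not walk the graph: reaching C from A needs its own peering connection (or a hub such as a Transit Gateway).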

How an AWS CI/CD pipeline works

➤ 𝐒𝐨𝐮𝐫𝐜𝐞 𝐒𝐭𝐚𝐠𝐞

It all starts when developers push code to a repository like GitHub or CodeCommit. This triggers a pipeline via a webhook or CloudWatch event.

➤ 𝐁𝐮𝐢𝐥𝐝 𝐒𝐭𝐚𝐠𝐞

AWS CodeBuild (or Jenkins on EC2) kicks in. It compiles the code, runs unit tests, lints the project, and creates build artifacts. These artifacts are pushed to S3 or an artifact store like ECR if we’re building Docker images.

➤ 𝐓𝐞𝐬𝐭 𝐒𝐭𝐚𝐠𝐞

Optional but powerful. You can run integration or security tests here. Think of tools like SonarQube, Trivy, or Amazon Inspector. Fail fast, fix early.

➤ 𝐃𝐞𝐩𝐥𝐨𝐲 𝐒𝐭𝐚𝐠𝐞

Based on the environment (dev, staging, or prod), the pipeline uses AWS CodeDeploy, CloudFormation, or even CDK to deploy infrastructure and application code.

For container-based apps, ECS or EKS handles deployments. For serverless, it's Lambda and SAM.

➤ 𝐑𝐨𝐥𝐥𝐛𝐚𝐜𝐤 𝐒𝐭𝐫𝐚𝐭𝐞𝐠𝐲

Things break. Rollbacks are handled via deployment hooks, versioned artifacts, or blue-green/canary strategies in CodeDeploy or ECS.

➤ 𝐌𝐨𝐧𝐢𝐭𝐨𝐫𝐢𝐧𝐠 𝐚𝐧𝐝 𝐀𝐥𝐞𝐫𝐭𝐬

CloudWatch logs everything. Alarms can notify you via SNS or trigger rollbacks. X-Ray, Prometheus, and Grafana help trace and debug real-time issues.

➤ 𝐒𝐞𝐜𝐫𝐞𝐭𝐬 𝐚𝐧𝐝 𝐂𝐨𝐧𝐟𝐢𝐠

Secrets Manager or Parameter Store injects sensitive values safely at runtime. IAM roles ensure the least privilege across every stage.

Just wrapped up building a fully automated CI/CD pipeline using AWS services! Embracing Infrastructure as Code and automation has made our DevOps process more efficient and scalable. Always exciting to see how powerful cloud-native solutions can transform workflows!

Explain different AWS services to manage cost!

These services collectively help organizations monitor, analyze, and optimize their AWS costs.

1. AWS Cost Explorer: Visualizes and analyzes AWS spending patterns with forecasting and budgeting features.

2. AWS Budgets: Allows setting custom spending thresholds and sends alerts when exceeded.

3. AWS Trusted Advisor: Provides actionable recommendations for optimizing AWS infrastructure across various aspects.

4. AWS Cost and Usage Report (CUR): Offers detailed usage and cost data for in-depth analysis and reporting.

5. AWS Savings Plans: Flexible pricing models for significant savings on committed usage.

What is the use of Amazon ElastiCache?

Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory data store or cache in the cloud.

Which is more expensive: Lambda or EC2?

Ans: It depends.

I have designed an app where Lambda was more expensive than EC2. You can use the AWS Pricing Calculator to estimate the cost of both Lambda and EC2 for your expected traffic and make a better-informed decision.
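A back-of-the-envelope version of that comparison can be sketched as below. All rates here are placeholder assumptions picked for round numbers, not current AWS prices, and the model ignores free tiers, data transfer, storage, and load balancers. Plug in real figures from the pricing pages before drawing conclusions.

```python
def lambda_monthly_cost(requests, avg_duration_s, memory_gb,
                        price_per_million_requests, price_per_gb_second):
    """Request charge plus compute charge (GB-seconds consumed)."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = requests * avg_duration_s * memory_gb * price_per_gb_second
    return request_cost + compute_cost


def ec2_monthly_cost(instance_count, hourly_rate, hours=730):
    """Always-on instances billed per hour (about 730 hours in a month)."""
    return instance_count * hourly_rate * hours


# Placeholder rates (assumptions for illustration only).
RATES = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.00002}

low_traffic = lambda_monthly_cost(1_000_000, 0.2, 0.5, **RATES)
high_traffic = lambda_monthly_cost(500_000_000, 0.2, 0.5, **RATES)
ec2_cost = ec2_monthly_cost(instance_count=2, hourly_rate=0.05)

print(f"Lambda, low traffic:  {low_traffic:.2f}")
print(f"Lambda, high traffic: {high_traffic:.2f}")
print(f"EC2, two instances:   {ec2_cost:.2f}")
```

With these made-up numbers Lambda is far cheaper at low traffic and far more expensive at sustained high traffic, which is the "it depends" in practice.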

Is it possible to run any VM in AWS without creating any EC2 instance?

No, EC2 instances are the virtual machines (VMs) in AWS. However, you can run containers on Amazon ECS or Amazon EKS with AWS Fargate, which removes the need to manage the underlying EC2 instances. You could also use AWS Lambda for serverless workloads without managing VMs.

Describe the key differences between Amazon EC2 and AWS Lambda. When would you choose one over the other for a specific task?

Amazon EC2 provides virtual servers that you manage, while AWS Lambda runs code in response to events and scales automatically. Choose EC2 for long-running tasks or when you need more control over the environment. Example: hosting a website with specific software requirements or running a database server.

Choose Lambda for event-driven, short-lived tasks with automatic scaling. Example: processing event-driven actions such as file uploads, database updates, or API requests.

Your EC2 has a public IP and the port is open in the security group, but it's unreachable. Why?

Check the subnet's network ACL. If inbound or outbound rules are blocking traffic, the security group won't help. NACLs silently drop traffic with no message.
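The interaction between the two layers can be modelled in a few lines. This is a simplified sketch for inbound traffic only: the class and function names are invented for the example, rules match on port alone (no CIDR or protocol), and the return path is ignored even though NACLs are stateless and need an outbound rule too.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class NaclRule:
    number: int            # lower numbers are evaluated first
    port: Optional[int]    # None matches every port
    allow: bool


def nacl_allows(rules: Iterable[NaclRule], port: int) -> bool:
    """First matching rule wins; no match means the implicit deny applies."""
    for rule in sorted(rules, key=lambda r: r.number):
        if rule.port is None or rule.port == port:
            return rule.allow
    return False


def inbound_reachable(sg_open_ports: set, nacl_rules, port: int) -> bool:
    """Traffic must pass the subnet NACL and the security group."""
    return nacl_allows(nacl_rules, port) and port in sg_open_ports


security_group_ports = {443}
blocking_nacl = [NaclRule(100, 443, False), NaclRule(200, None, True)]
open_nacl = [NaclRule(100, None, True)]

print(inbound_reachable(security_group_ports, blocking_nacl, 443))
print(inbound_reachable(security_group_ports, open_nacl, 443))
```

The first case is the situation from the question: the security group is open, but a lower-numbered deny rule in the NACL wins before the allow-all rule is ever considered.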

You shared an AMI with another AWS account, but they still can't launch an instance from it. What's usually missed?

Sharing the AMI isn't enough when its EBS snapshots are encrypted. You also need to give the other account access to the customer managed KMS key that encrypts those snapshots. Without that, the AMI looks valid but fails at launch.

You created a VPC endpoint for S3 and now your Lambda can't call external APIs. Why?

If your Lambda is in a private subnet, it can't reach the internet without a NAT Gateway. An Internet Gateway only works when the subnet is public, the route table points to the IGW, and the resource has a public IP. Lambda does not get a public IP, so IGW alone won't help.

One of the most common interview topics and real-world project implementation is three-tier Architecture.

1. The first layer is the presentation layer. Customers consume the application using this layer. Generally, this is where the front end runs. For example - amazon.com website. This is implemented using an external facing load balancer distributing traffic to VMs (EC2s) running webserver.

2. The second layer is the application layer. This is where the business logic resides. Going with the previous example - you browsed your products on amazon.com, and now you found the product you like and then click "add to cart". The flow comes to the application layer, validates the availability, and then creates a cart. This layer is implemented with an internal facing load balancer and VMs running applications.

3. The last layer is the database layer. This is where information is stored. All the product information, your shopping cart, order history, etc. The application layer interacts with this layer for CRUD (Create, Read, Update, Delete) operations. This could be implemented using one or a mix of databases - SQL (e.g. Amazon Aurora), and/or NoSQL (DynamoDB)

Is it possible to configure communication between two servers that have private access?

Yes. Two servers with private access in the same VPC can communicate as long as their security groups (and network ACLs) allow traffic between them. If they are in different VPCs or networks, configure VPC peering, a VPN, or a Transit Gateway to allow routing between the instances.

What is the difference between Amazon RDS, DynamoDB, and Redshift?

Amazon RDS: Amazon Relational Database Service is a collection of managed database services that makes it simple to set up, operate, and scale databases in the cloud. It supports multiple database engines, including MySQL, PostgreSQL, Oracle, SQL Server, and MariaDB. I have used it to create a MySQL database for my application.

Advantages of RDS include managed backups, automated patching, and scaling. Disadvantages include less control over the database environment compared to managing it directly on EC2 instances.

DynamoDB: Fully managed NoSQL database service designed for high-performance applications.

Redshift: Fully managed data warehouse service designed for analytics queries on large datasets.

How do you choose the right database service in AWS for a specific application requirement?

RDS is ideal for applications that require a traditional relational database with standard SQL support, transactions, and complex queries.

Amazon DynamoDB suits applications needing a highly scalable, NoSQL database with fast, predictable performance at any scale. It's great for flexible data models and rapid development.

Amazon Redshift is best for analytical applications requiring complex queries over large datasets, offering fast query performance by using columnar storage and data warehousing technology

Explain AWS DevOps tools to build and deploy software in the cloud.

AWS DevOps tools to build and deploy software in the cloud include:

AWS Cloud Development Kit: It is an open-source software development framework for modeling and provisioning cloud application resources with popular programming languages.

AWS CodeBuild: It is a continuous integration service that runs multiple builds and tests code, scaling continuously as needed.

AWS CodeDeploy: It automates software deployments to compute targets such as Amazon EC2, AWS Fargate, AWS Lambda, and on-premises servers.

AWS CodePipeline: It is a continuous delivery service that automates release pipelines for rapid and reliable updates.

AWS CodeStar: It is a user interface that helps the DevOps team develop, build, and deploy applications on AWS.

AWS Device Farm: It works as a testing platform to test applications on different mobile devices and browsers.

What is offered under Migration services by Amazon?

Amazon offers various migration services. They are:

AWS Database Migration Service (DMS): A tool for quickly migrating data from an on-premises database to the AWS cloud. DMS supports RDBMS systems like Oracle, SQL Server, MySQL, and PostgreSQL, both on-premises and in the cloud.

AWS Server Migration Service (SMS): Helps migrate on-premises workloads to the AWS cloud. SMS migrates on-premises VMware virtual machines to cloud-based Amazon Machine Images (AMIs).

AWS Snowball: A data transport solution for data collection, machine learning, processing, and storage in low-connectivity environments.

Can a connection be made between a company's data center and the Amazon cloud? How?

Yes, a connection can be established between a company's data center and Amazon Web Services using

AWS Direct Connect: A dedicated, high-bandwidth link for a private connection.

Virtual Private Network: An encrypted connection over the internet for secure data transmission.

Explain the steps to set up a secured VPC with subnets and everything

1. Create VPC: Define VPC CIDR block and tenancy. Enable DNS support and DNS hostnames if needed.

2. Create Subnets: Allocate CIDR blocks for subnets. Spread subnets across availability zones for redundancy.

3. Configure Route Tables: Define routes for internet-bound traffic. Associate subnets with route tables.

4. Set Up NACLs: Configure inbound and outbound rules. Associate NACLs with subnets.

5. Implement Security Groups: Define inbound and outbound rules. Associate security groups with instances.

6. Add Internet Gateway (IGW): Attach IGW to VPC. Update route tables for internet access.

7. Optional - NAT Gateway/Instance: Set up in public subnet for private subnet internet access.

8. Enable Monitoring: Enable VPC Flow Logs for traffic analysis. Monitor with CloudWatch
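The CIDR planning in steps 1 and 2 is worth doing on paper (or in a few lines of code) before touching the console. The sketch below carves a VPC range into equally sized subnets, one per tier per availability zone. The `10.0.0.0/16` range, the `/24` subnet size, and the zone and tier names are all assumptions for illustration.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, new_prefix: int,
                 zones: list[str], tiers: list[str]) -> dict:
    """Assign consecutive subnet CIDR blocks to each (tier, zone) pair."""
    network = ipaddress.ip_network(vpc_cidr)
    blocks = network.subnets(new_prefix=new_prefix)
    plan = {}
    for tier in tiers:
        for zone in zones:
            plan[(tier, zone)] = str(next(blocks))
    return plan


plan = plan_subnets("10.0.0.0/16", 24, ["az-1", "az-2"], ["public", "private"])
for (tier, zone), cidr in plan.items():
    print(f"{tier:8} {zone}  {cidr}")
```

Public subnets would then get a route to the Internet Gateway, while private subnets route outbound traffic through a NAT Gateway, as in steps 3, 6, and 7.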

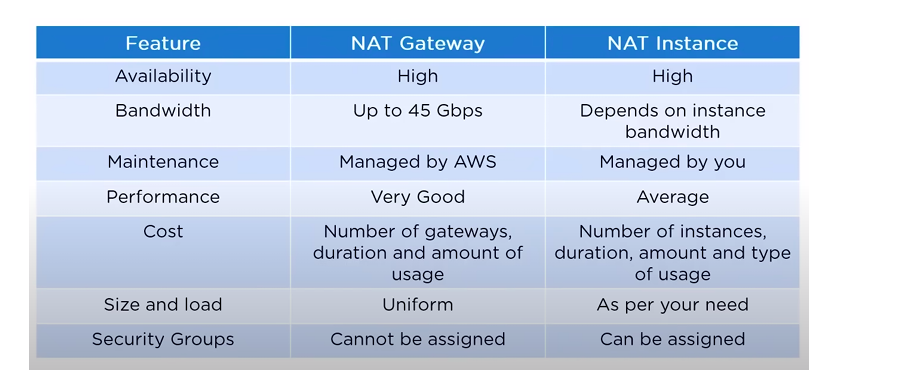

What is the difference between NAT gateways and NAT instances? why do we use them?

NAT Gateway: Managed by AWS, high performance, and availability, no administration needed.

NAT Instance: User-managed EC2 instance, requires manual scaling and administration, less scalable and available compared to NAT Gateway

Use Cases:

Enhance security by avoiding direct exposure of internal resources to the internet.

Facilitate compliance and address translation.

The choice depends on factors like performance, scalability, and management preferences

Both enable outbound internet access for resources in private subnets.

Do you prefer to host a website on S3? What’s the reason if your answer is either yes or no?

Yes, Host on S3:

Cost-Effective: Hosting a website on S3 is cost-effective, especially for static websites with low traffic. You pay only for the storage and data transfer you use.

Scalability: S3 can handle high traffic volumes and is automatically scalable. It's suitable for small to medium websites.

Simple Setup: Setting up a static website on S3 is straightforward, and AWS provides tools to simplify the process.

Security: S3 allows fine-grained control over access permissions, and you can integrate it with other AWS services for added security.

No, Don’t Host on S3:

Dynamic Content: If your website relies on dynamic content generated by a server, S3 alone is not suitable. You'd need a web server or serverless architecture to handle dynamic requests.

Database: If your website requires a database for user authentication, e-commerce functionality, or content management, S3 is not the best choice. You'd need a more comprehensive hosting solution.

Complexity: For complex websites with many features, interactivity, and databases, using S3 alone may become complex to manage, and other hosting solutions might be more appropriate.

What are the parameters for S3 pricing?

The following are the parameters for S3 pricing:

Transfer acceleration

Number of requests you make

Storage management

Data transfer

Storage used

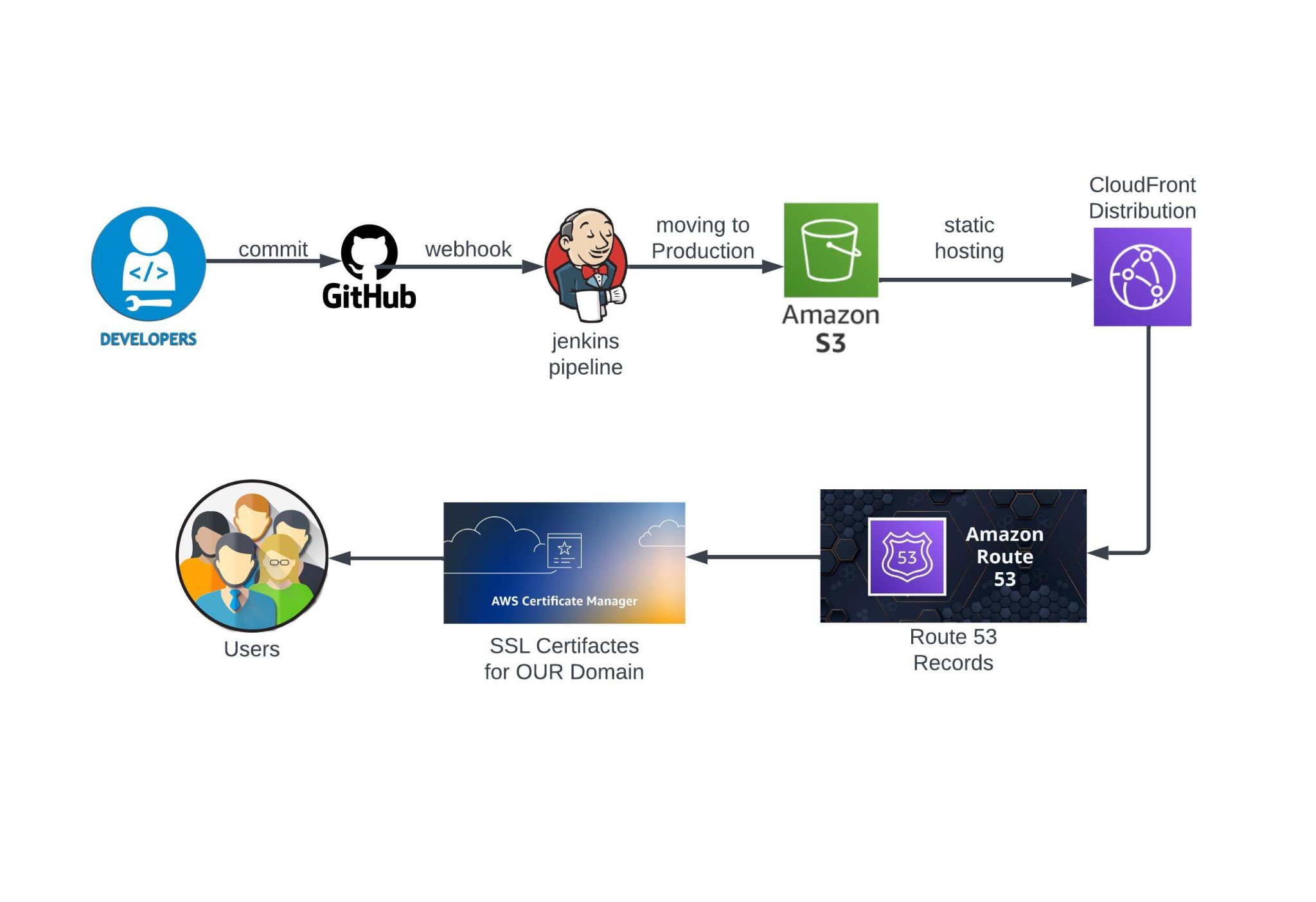

How to Build a Scalable and Secure Static Website with AWS and Jenkins?

GitHub Repository for Static Code: We stored our static website code in a GitHub repository, ensuring version control and collaboration efficiency.

Jenkins Pipeline for Automation: We created a Jenkins pipeline that uses GitHub webhooks. Whenever code is pushed to the repository, the webhook triggers the Jenkins pipeline to upload the latest code to an Amazon S3 bucket.

S3 Bucket Configuration: We enabled static website hosting and versioning on our S3 bucket, updating policies to manage access effectively.

CloudFront Distribution: We set up a CloudFront distribution to serve our S3 content globally, ensuring fast and reliable access. Post-setup, we updated the S3 bucket policy to allow CloudFront access.

Domain Purchase and Route 53 Setup: After purchasing a domain from providers like GoDaddy, we used Route 53 to create DNS records, mapping our domain to the CloudFront distribution.

SSL Certificates with AWS Certificate Manager: We created SSL certificates for our domain and mapped the records to ensure secure access over HTTPS.

Accessing the Application: Finally, we accessed our application through the newly configured domain, enjoying a seamless and secure user experience

What are the different ways to access AWS services?

AWS Management Console: Web-based interface for point-and-click management of AWS services

AWS Command Line Interface: The AWS CLI is a command-line tool provided by AWS for interacting with various AWS services. It allows users to manage resources, configure services, and automate tasks through a command-line interface, making it an essential tool for DevOps engineers.

Software Development Kits: SDK stands for software development kit. An SDK is a set of tools to build software for a particular platform. These tools also allow an app developer to build an app which can integrate with another program.

AWS CloudFormation: Infrastructure as Code for defining and provisioning AWS resources

🚀 AWS Service Limitations You Should Know 🚀

As cloud architects, developers, and DevOps engineers, understanding AWS service limitations is crucial for designing scalable and efficient systems. Here's a quick rundown of some important AWS limits to keep in mind when building on the cloud:

📌 Storage & Data

EBS Volume Size: Max 64TB

S3 Object Size: Max 5TB

RDS Storage: Max 64TB

DynamoDB Item Size: Max 400KB

ECR Image Size: Max 10GB

📌 Networking

VPC CIDR Blocks: Max 5 per VPC

VPC Peering: Max 125 per VPC

VPC Route Table Entries: Max 50 per route table

VPC Security Group Rules: Max 60 in/out per group

Subnet IP Limit: Based on CIDR (e.g., /28 = 11 usable IPs)
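The /28 figure comes from simple arithmetic: AWS reserves five addresses in every subnet (the first four and the last one). A quick way to check any subnet size, using a hypothetical helper name `usable_ips`:

```python
import ipaddress

# AWS reserves the first four addresses and the last address of each subnet.
AWS_RESERVED_PER_SUBNET = 5


def usable_ips(subnet_cidr: str) -> int:
    """Number of addresses left for your resources in an AWS subnet."""
    total = ipaddress.ip_network(subnet_cidr).num_addresses
    return total - AWS_RESERVED_PER_SUBNET


for cidr in ("10.0.0.0/28", "10.0.0.0/24"):
    print(cidr, usable_ips(cidr))
```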

📌 Compute & Application

Glue Job Timeout: Max 48 hours

Lambda Package Size: 50MB zipped, 250MB unzipped

ECS Task Definition Size: Max 64KB

EC2 Instance Limit: 20 per region (soft limit)

📌 Messaging & API

SNS Message Size: Max 256KB

SQS Message Size: 256KB (standard), 2GB (extended)

API Gateway Payload: Max 10MB (REST), 128KB (WebSocket message)

📌 Monitoring & Security

CloudWatch Log Event Size: Max 256KB

Secrets Manager Secret Size: Max 64KB

📌 Others

Kinesis Data Record: Max 1MB

CloudFront Distributions: Max 200 per account

ELB Target Groups: Max 100 per load balancer

Route 53 DNS Records: Max 10,000 per hosted zone

While these limits can often be increased by AWS upon request, it’s essential to design with these in mind to avoid surprises.

Tip: Always plan your architecture with scalability and these limits in mind.

What are the target groups for AWS?

Target groups route requests to individual registered targets, such as EC2 instances, using the protocol and port number that you specify

Your team is adopting Docker for containerization. How would you deploy and manage Docker containers on AWS?

Deploy Docker containers using Amazon Elastic Container Service (ECS) or Amazon Elastic Kubernetes Service (EKS). These services provide managed container orchestration, scaling, and integration with other AWS services

How do you monitor, and debug applications deployed on AWS?

Monitoring and debugging on AWS can be done using services like CloudWatch for monitoring metrics, logs, and events, X-Ray for tracing requests through distributed systems, and AWS Config for auditing and tracking changes to AWS resources.

How to get an SSL certificate in AWS?

To obtain an SSL certificate in AWS, you can use the AWS Certificate Manager (ACM). Here's a brief overview of the process:

Sign in to AWS Console: Log in to the AWS Management Console using your credentials.

Navigate to AWS Certificate Manager: Go to the ACM service by searching for "Certificate Manager" in the AWS Management Console.

Request a Certificate: Click on the "Request a certificate" button.

Enter Domain Names: Enter the domain names (e.g., example.com, www.example.com) for which you want to request SSL certificates. You can also add additional domain names (SANs) if needed.

Select Validation Method: Choose a validation method to prove that you own the domain. ACM supports DNS validation and email validation.

DNS Validation (Recommended): If you choose DNS validation, ACM will provide you with a DNS record that you need to add to your DNS configuration. Once added, ACM will verify the DNS record to validate your ownership of the domain.

Email Validation: If you choose email validation, ACM will send verification emails to the domain owner's email addresses listed in the WHOIS record or common administrative email addresses like admin@example.com, webmaster@example.com, etc.

Review and Confirm: Review the details of your certificate request and confirm.

Validation: Wait for the validation process to complete. This may take a few minutes or longer depending on the validation method chosen.

Certificate Issued: Once validation is successful, ACM will issue the SSL certificate for the requested domain names.

Deploy Certificate: After the certificate is issued, you can deploy it to your AWS resources, such as Elastic Load Balancers (ELB), CloudFront distributions, API Gateways, or directly to EC2 instances.

Configure Your AWS Resources: Update your AWS resources' configurations to use the newly issued SSL certificate for secure communication.

Explain the process of rolling back a failed deployment in AWS.

To roll back a failed deployment in AWS:

1. Identify Failure: Monitor the deployment process to pinpoint the failure.

2. Stop Deployment: Halt any ongoing deployment to prevent further changes.

3. Investigate Cause: Analyze logs and errors to understand the issue.

4. Rollback Plan: Create a plan to revert changes made during the failed deployment.

5. Execute Rollback: Implement the rollback plan to restore the previous state.

6. Verify: Ensure the rollback is successful and the system functions as expected.

7. Communicate: Keep stakeholders informed about the status and resolution.

8. Learn and Improve: Conduct a post-mortem analysis to learn from the failure and prevent future issues.

Deploy a static site from AWS S3

Create an S3 bucket:

Log in to your AWS Management Console, navigate to the S3 service, and create a new bucket. Name it according to your preference, keeping in mind that bucket names must be globally unique.

Upload HTML file to bucket:

After creating the bucket, upload your website's static files (HTML, CSS, JavaScript, images, etc.) into the bucket. Make sure to set the appropriate permissions for these files so they can be publicly accessible.

Example HTML file to upload:

    <!DOCTYPE html>
    <html lang="en">
    <head>
        <meta charset="UTF-8">
        <meta name="viewport" content="width=device-width, initial-scale=1.0">
        <title>Serving from S3 Bucket</title>
    </head>
    <body>
        <h1>Serving from S3 Bucket</h1>
        <p>This is a simple HTML file served from an S3 bucket.</p>
    </body>
    </html>

Turn on static web hosting:

In the bucket properties, find the "Static website hosting" section and click on "Edit". Select the option to enable static website hosting, and specify the index document (e.g., index.html)

Allow public traffic:

To allow public access to your website files, you'll need to set a bucket policy. Go to the bucket's permissions tab, click on "Bucket Policy", and add a policy allowing read access to all users ("*") for the resources in your bucket.
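The policy described above looks roughly like the document generated below. This is a sketch: `public_read_policy` is a helper invented for the example and `my-example-site` is a placeholder bucket name. The output is what you would paste into the bucket policy editor.

```python
import json


def public_read_policy(bucket_name: str) -> dict:
    """Bucket policy that lets anyone read objects in the bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The trailing /* applies the rule to every object in the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# "my-example-site" is a placeholder; use your own bucket name.
policy = public_read_policy("my-example-site")
print(json.dumps(policy, indent=2))
```

Keep in mind that the bucket's Block Public Access settings have to permit public policies, otherwise saving a policy like this one is rejected.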

Site should be live:

Once everything is set up, you can access your website using the endpoint provided in the "Static website hosting" section of your bucket properties.

Written by

Ashwin

I'm a DevOps magician, conjuring automation spells and banishing manual headaches. With Jenkins, Docker, and Kubernetes in my toolkit, I turn deployment chaos into a comedy show. Let's sprinkle some DevOps magic and watch the sparks fly!