APEX: OCI Speech Integration

Sydney Nurse

Sydney Nurse

The dominance of Generative AI + AI in conversations is undeniable, encroaching on daily work with the promise of replacing ineffective, inefficient, and cumbersome communication methods, like email or for the dinosaurs in the room a phone call.

As my engagements include exploring these new set services, much has stayed the same.

it’s a Service - so it costs $$ (dollar bills ya’ll)

it’s a set of REST APIs

it’s available in the Oracle Cloud set of Infrastructure Services

I’m no pundit nor will I pretend to be one. I’m just a simple consumer trying to understand the product I just brought and how to benefit from it in a meaningful manner.

Hence OCI Speech, like other AI services, Document Understanding, Language, or the new kid on the block , Generative AI; myself and others are seeking ways these services can be useful to us.

About this Post

In today’s post, I’ll share a little of my recent experience as a developer and consumer of the OCI Speech APIs and current development project.

Speech AI services transcribe spoken language from digital content using automatic speech recognition (ASR) technology to provide a grammatically correct transcription. These services will typically process media content or spoken speech, supporting multiple languages with a high degree of success.

So, something that would have taken a 1.X factor from listening to transcribing, no shorthand allowed, is now a few minutes.

Very useful indeed.

The team I have been asked to support is seeking to turn, meeting recordings from shelf-ware into a searchable, conversational knowledge base with the first stage being transcription.

What are we really going to talk about?

If you were expecting a deep dive into How-To consume this API, stop reading now!

In my previous articles I cover how you to start on this journey exploring the OCI API Documentation, so working with this API will not especially different.

What are we really going to talk about then? Well, I will attempt to share how I worked with and landed on parsing the response as a non-developer.

Understanding the API always starts with the documentation but receiving an actual response is better and changes everything.

Speech API Responses

The Transcription Job Create API launches the Speech analysis of media content. The mapping of the create transcription job details data type as an APEX REST data source Request Body Template can look this

{

"compartmentId": "#COMPARTMENT_ID#",

"displayName": "#OBJECT_NAME#",

"inputLocation":

{

"locationType": "OBJECT_LIST_INLINE_INPUT_LOCATION",

"objectLocations": [

{

"bucketName": "#IN_BUCKET_NAME#",

"namespaceName": "#NAMESPACE#",

"objectNames": ["#OBJECT_NAME#"]

}]

},

"outputLocation":

{

"bucketName": "#OUT_BUCKET_NAME#",

"namespaceName": "#NAMESPACE#",

"prefix": "#PREFIX#"

},

"modelDetails":

{

"languageCode": "#LANG_CODE#",

"modelType": "#MODEL_TYPE#",

"transcriptionSettings":

{

"diarization":

{

"isDiarizationEnabled": "true"

}

}

}

}

Use SQL/JSON functions in a query you can combine transactional data and generate the body document for the request. This will be much simpler with JSON Duality Views but not everyone has a running Oracle DB 23ai instance up and running.

Here is a sample

DECLARE

l_response clob;

l_body clob;

BEGIN

-- FILENAME bind variable is passed into this call

SELECT

JSON

{

'compartmentId' VALUE ac.compartment,

'displayName' VALUE REPLACE(:FILENAME,' ','-'),

'inputLocation' VALUE

{

'locationType' VALUE 'OBJECT_LIST_INLINE_INPUT_LOCATION',

'objectLocations' VALUE [

{

'bucketName' VALUE ac.input_location,

'namespaceName' VALUE ac.namespace,

'objectNames' VALUE [:FILENAME]

}]

},

'outputLocation' VALUE

{

'bucketName' VALUE ac.output_location,

'namespaceName' VALUE ac.namespace,

'prefix' VALUE 'whisper'

},

'modelDetails' VALUE

{

'languageCode' VALUE :LANG_CODE,

'modelType' VALUE ac.model,

'transcriptionSettings' VALUE

{

'diarization' VALUE

{

'isDiarizationEnabled' VALUE 'true'

}

}

}

}

INTO l_body

FROM ....

This service saves the transcription to the OCI Object Storage Bucket (outputLocation) of the request. Once the jobs is complete this is where we begin after downloading the contents.

If you are wondering were to start, have a look at Jon Dixon’s APEX_WEB_SERVICE The Definitive Guide where he has an example of fetching a blob file.

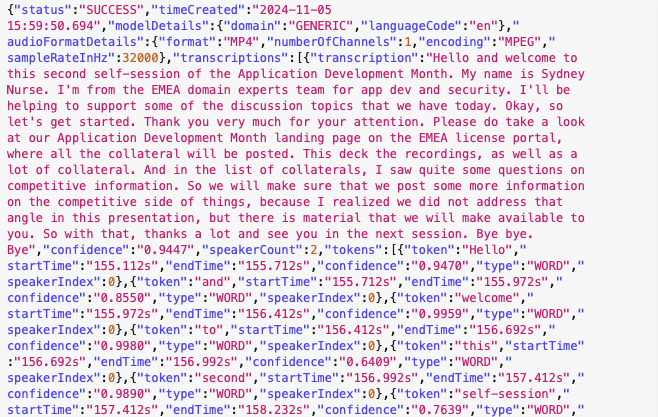

Response Snippet

{"status":"SUCCESS","timeCreated":"2024-11-05 15:59:50.694","modelDetails":{"domain":"GENERIC","languageCode":"en"},"audioFormatDetails":{"format":"MP4","numberOfChannels":1,"encoding":"MPEG","sampleRateInHz":32000},

"transcriptions":

[

{"transcription":"Hello and welcome to this second self-session of the Application Development Month. My name is Sydney Nurse. I'm from the EMEA domain experts team for app dev and security. I'll be helping to support some of the discussion topics that we have today. Okay, so let's get started. Thank you very much for your attention. Please do take a look at our Application Development Month landing page on the EMEA license portal, where all the collateral will be posted. This deck the recordings, as well as a lot of collateral. And in the list of collaterals, I saw quite some questions on competitive information. So we will make sure that we post some more information on the competitive side of things, because I realized we did not address that angle in this presentation, but there is material that we will make available to you. So with that, thanks a lot and see you in the next session. Bye bye. Bye",

"confidence":"0.9447",

"speakerCount":2,

"tokens":

[

{"token"::"Hello","startTime":"155.112s","endTime":"155.712s","confidence":"0.9470","type":"WORD","speakerIndex":0},

{"token"::"and","startTime":"155.712s","endTime":"155.972s","confidence":"0.8550","type":"WORD","speakerIndex":0},

{"token"::"welcome","startTime":"155.972s","endTime":"156.412s","confidence":"0.9959","type":"WORD","speakerIndex":0},

{"token"::"to","startTime":"156.412s","endTime":"156.692s","confidence":"0.9980","type":"WORD","speakerIndex":0},

{"token"::"this","startTime":"156.692s","endTime":"156.992s","confidence":"0.6409","type":"WORD","speakerIndex":0},

{"token"::"second","startTime":"156.992s","endTime":"157.412s","confidence":"0.9890","type":"WORD","speakerIndex":0},

{"token"::"self-session","startTime":"157.412s","endTime":"158.232s","confidence":"0.7639","type":"WORD","speakerIndex":0},

{"token"::"of","startTime":"158.232s","endTime":"158.652s","confidence":"0.9950","type":"WORD","speakerIndex":0},

{"token"::"the","startTime":"158.652s","endTime":"158.992s","confidence":"0.9940","type":"WORD","speakerIndex":0},

{"token"::"Application","startTime":"158.992s","endTime":"159.392s","confidence":"0.5210","type":"WORD","speakerIndex":0},

{"token"::"Development","startTime":"159.392s","endTime":"159.752s","confidence":"0.9900","type":"WORD","speakerIndex":0},

{"token"::"Month.","startTime":"159.752s","endTime":"160.032s","confidence":"0.9060","type":"WORD","speakerIndex":0},

{"token"::"My","startTime":"160.152s","endTime":"160.332s","confidence":"0.9940","type":"WORD","speakerIndex":0},

{"token"::"name","startTime":"160.332s","endTime":"160.532s","confidence":"0.9980","type":"WORD","speakerIndex":0},

{"token"::"is","startTime":"160.532s","endTime":"160.812s","confidence":"0.9990","type":"WORD","speakerIndex":0},

{"token"::"Sydney","startTime":"172.992s","endTime":"173.272s","confidence":"0.5460","type":"WORD","speakerIndex":0},

{"token"::"Nurse.","startTime":"173.272s","endTime":"173.692s","confidence":"0.7260","type":"WORD","speakerIndex":0},

...

...

...

{"token"::"Bye","startTime":"3419.352s","endTime":"3419.592s","confidence":"0.9890","type":"WORD","speakerIndex":1},

{"token"::"bye.","startTime":"3419.592s","endTime":"3420.892s","confidence":"0.4769","type":"WORD","speakerIndex":1},

{"token"::"Bye","startTime":"3421.372s","endTime":"3421.672s","confidence":"0.5099","type":"WORD","speakerIndex":1}

]

}

]}

The Goal

Our goal is to save the transcript, each sentence by speaker and the ability to find content related to a topic, tag or discover who are topic areas go to person.

The latter part of this graph style relationship will be supported by Vector embeddings & search with summaries and tags generated by Gen AI.

From the snippet above, I can save the entire transcript, easy, then either split this into sentences or use the Word tokens and re-build the sentences and collect the other data points such as speaker index, start, and end times.

Parsing the entire transcript is simple once you have the blob contents

l_transcription := apex_web_service.make_rest_request_b(

p_url => l_request_url,

p_http_method => 'GET',

p_credential_static_id => :OCI_CREDENTIAL

);

UPDATE ...MEDIA

SET

TRANBLOB = l_transcription, -- Blob response / file contents

TRANSCRIPT = (

SELECT jt.transcript

FROM JSON_TABLE (

l_transcription, '$.transcriptions[0]'

NULL ON EMPTY

COLUMNS

(

transcript clob path '$.transcription'

)

) jt

)

Parsing the Tokens into sentences required a bit of loop-ty-loop

FOR r IN (

SELECT jt.token, jt.startTime, jt.endTime, jt.confidence,jt.speakerIndex

FROM JSON_TABLE (

l_transcription, '$.transcriptions[0].tokens[*]'

NULL ON EMPTY

COLUMNS

(

token varchar2(4000) path '$.token',

startTime varchar2(4000) path '$.startTime',

endTime varchar2(4000) path '$.endTime',

confidence number path '$.confidence',

speakerIndex int path '$.speakerIndex'

)

) jt

)

LOOP

IF l_sentence = EMPTY_CLOB()

THEN l_sentence := r.token;

ELSE l_sentence := l_sentence || ' ' || r.token;

END IF;

IF l_startTime IS NULL THEN l_startTime := replace(r.startTime,'s');

END IF;

IF (instr(r.token,'.') > 0) THEN

BEGIN

INSERT INTO ...TRANSCRIPT_BLOCKS

(MEDIA_ID, SPEAKER_INDX, BLOCK, START_ORDER, END_ORDER)

VALUES

(:MEDIA_ID, r.speakerIndex, l_sentence, l_startTime, replace(r.endTime,'s'));

l_startTime := NULL;

l_sentence := EMPTY_CLOB();

COMMIT; -- commit as you like row by row or dataset

END;

END IF;

END LOOP;

As I’ve commented a few times, I’m not developer and like to have simple and readable code that I can come back to and understand with my limit experience.

I am sure more efficient version of this is out there using analytical functions but lucky me, I’m not delivering Production systems anymore. ;)

A short explanation:

Get all the tokens and loop over them, Word by Word

Speech adds punctuation, so each sentence ends with a '.', so use this to identify the end of the sentence.

Until then concatenate everything until the end of the sentence

At the start of the sentence, remember to capture the start time

At the end do the same

For good measure save the speaker index for the sentence to the database

Don’t forget to reset those variables in the scope

That’s it, and this response is typical for many of OCI’s AI services, so something similar can be applied to those as well.

Learnings

The importance of multiple test cycles was re-affirmed as this service took me through its paces. Starting small, 30Mb, or 5 minute recording was useful in getting the initial job requests to run and viewing the response.

Stepping up the content size to 50 minutes or 1hour+ helped me to identify the real data types potentials, blobs & clobs vs varchar2 even large ones.

For instance, the same code to create a json body for a sentence vs the entire transcript would fail as the default json return is a varchar2(4000).

That meant calling GenAI with a block of text like

json_object(

'role' value 'USER',

'content' value json_array (

json_object(

'type' value 'TEXT',

'text' value transcripts.BLOCK

)

)

)

happy days for sentences but unforeseen error for an hour long transcript, so

json_object(

'role' value 'USER',

'content' value json_array (

json_object(

'type' value 'TEXT',

'text' value transcripts.BLOCK

)

) RETURNING CLOB

manage return types appropriately.

In my short but ferocious battle, I made well over 2000 REST API calls! If you are using a credit based or pay-as-you-go model, rate limits and consumption will go through the roof.

APEX does offer some minimal safe guards with the MAX_WEBSERVICE_REQUESTS set by default to 1000 requests per 24 hours. This may be a pain but if costs is a concern during development and testing then set this appropriately.

I am now running at a 100K limit for 24 hours. What?!? I have a lot of files to process …

You can read about the APEX Instance parameters and setting them as the database admin using the APEX_INSTANCE_ADMIN.SET_PARAMETER procedure.

APEX_INSTANCE_ADMIN.SET_PARAMETER (

p_parameter IN VARCHAR2,

p_value IN VARCHAR2 DEFAULT 'N' )

Conclusion

There is a large potential for AI services over a wide set of use cases. The power of these will be how well we can combine them over very fast networks and scores processing services.

As use cases become more mainstream, so will better and better models and choosing the right one makes a big difference.

The most flexible, and funny enough, way to use these services is through custom coding, even in APEX, Oracle’s LowCode Platform.

The Speech API does support processing of multiple media in a single request. In this use case, even with multiple related recordings I’ve individual transcription jobs that could be re-submitted or have different job settings.

Note that the sample submits a single media file object with a transcript array with a single element. i.e. transcriptions[0]. Ensure that you consider this.

Subscribe to my newsletter

Read articles from Sydney Nurse directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Sydney Nurse

Sydney Nurse

I work with software but it does not define me and my constant is change and I live a life of evolution. Learning, adapting, forgetting, re-learning, repeating I am not a Developer, I simply use software tools to solve interesting challenges and implement different use cases.