Understanding Load Balancers: The Backbone of Scalable Systems

Chethana S V

In today’s digital era, systems need high availability, scalability, and efficient resource utilization. As a DevOps or cloud engineer, one of the most important components you'll encounter when building reliable systems is the load balancer. But what exactly is a load balancer, and why is it so important for building scalable architectures? Let’s dive into the functionality, types, and benefits of load balancers to understand their impact on modern infrastructure.

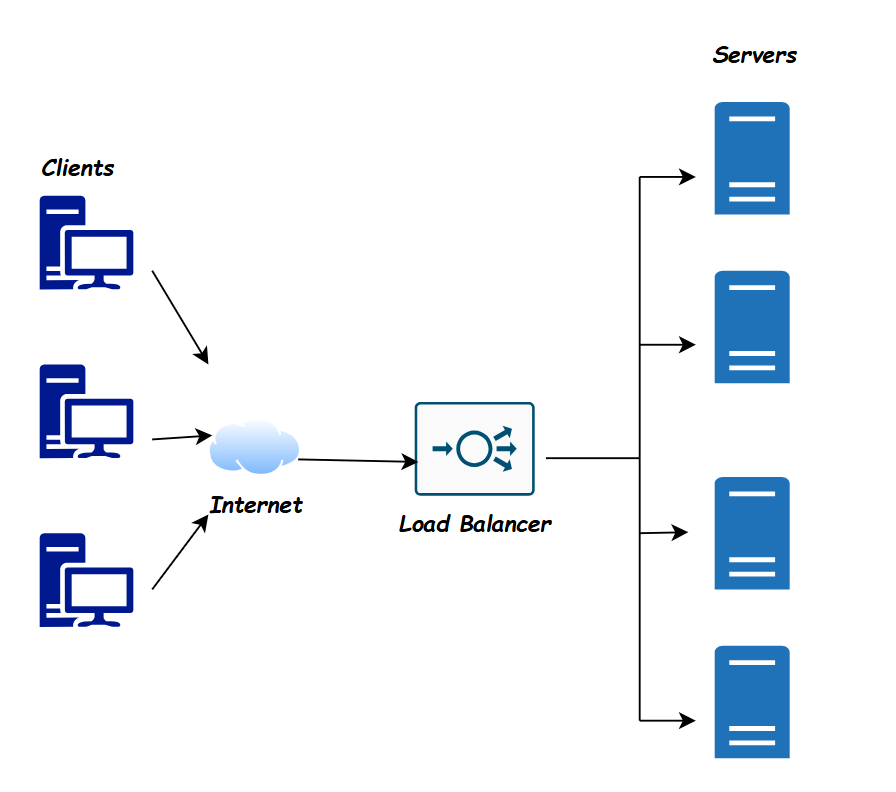

What exactly is a Load Balancer?

A load balancer is like a traffic cop for your network: it spreads incoming traffic across multiple servers so that no single server gets overloaded. It keeps things running smoothly, optimizing response times and using resources efficiently. With the proper setup, a load balancer helps keep your system fault-tolerant and minimizes downtime. Plus, it gives your users a seamless experience by keeping the application available even when the servers receive a huge amount of traffic.

Why should we use Load Balancers?

The purpose of load balancers extends beyond just dividing traffic. They also serve as a critical component in:

High Availability: By distributing requests across multiple servers, a load balancer ensures that even if one server fails, the application remains accessible.

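Scalability: Load balancers let you add servers to, or remove servers from, the pool as demand changes (often together with auto-scaling), so applications can scale efficiently without clients needing to know which servers exist.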

Optimized Performance: By directing traffic to the least busy servers, load balancers reduce latency and response times, which improves the end-user experience.

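Security: Many load balancers support SSL/TLS termination, decrypting HTTPS traffic at the load balancer so that certificates are managed in one place and backend servers are relieved of encryption work. Traffic between the load balancer and the backends can be re-encrypted when end-to-end encryption is required.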
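To make the High Availability point above concrete, here is a minimal Python sketch of how a load balancer might decide which backends are healthy enough to receive traffic. It assumes periodic probes (for example, a TCP connect or an HTTP request to a health endpoint) whose pass/fail results are fed in; the `HealthTracker` class, its threshold parameters, and their default values are hypothetical, loosely modeled on the healthy/unhealthy thresholds many load balancers expose.

```python
from typing import Sequence


class HealthTracker:
    """Track backend health from probe results (hypothetical helper)."""

    def __init__(self, servers: Sequence[str], unhealthy_threshold: int = 3,
                 healthy_threshold: int = 2):
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        # Every server starts healthy; `streak` counts consecutive probe
        # results that disagree with the server's current state.
        self.state = {server: True for server in servers}
        self.streak = {server: 0 for server in servers}

    def record(self, server: str, ok: bool) -> None:
        """Record one probe result; flip state only after a run of agreeing results."""
        if ok == self.state[server]:
            self.streak[server] = 0  # result agrees with the current state
            return
        self.streak[server] += 1
        needed = self.healthy_threshold if ok else self.unhealthy_threshold
        if self.streak[server] >= needed:
            self.state[server] = ok
            self.streak[server] = 0

    def healthy_servers(self) -> list:
        """Servers that should currently receive traffic."""
        return [server for server, up in self.state.items() if up]
```

Requiring several consecutive results before changing state keeps a single dropped probe from pulling a server out of rotation, while a server that keeps failing is removed and traffic flows only to the remaining ones.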

Types of Load Balancers

Load balancers are generally categorized by the OSI layer at which they operate, primarily the transport and application layers. Global server load balancing adds a geographic dimension on top of these.

1. Layer 4 Load Balancers (Transport Layer)

Layer 4 load balancers operate at the transport layer, routing traffic based on information such as IP addresses and TCP or UDP ports. Think of them as efficient traffic managers that make quick decisions without digging into the data itself. Because they don’t inspect the payload, they’re very fast and can handle large volumes of traffic. They are a great fit for applications where there is no need to look at what’s inside the packets: they just get the job done, and they get it done fast.
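The sketch below illustrates the Layer 4 idea in Python under simplifying assumptions: each connection is identified only by its 5-tuple (protocol, source and destination IP, source and destination port), a new connection is assigned a backend in round-robin order, and every later packet of that connection follows the stored mapping. The `FiveTuple` and `L4Balancer` names are hypothetical, and real L4 balancers may use other assignment methods, such as hashing the 5-tuple.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Dict, Sequence


@dataclass(frozen=True)  # frozen makes it hashable, so it can be a dict key
class FiveTuple:
    protocol: str  # "tcp" or "udp"
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class L4Balancer:
    def __init__(self, backends: Sequence[str]):
        self._rotation = cycle(backends)
        self._connections: Dict[FiveTuple, str] = {}  # connection -> backend

    def route(self, conn: FiveTuple, payload: bytes) -> str:
        # `payload` is deliberately ignored: the decision uses header fields only.
        if conn not in self._connections:
            self._connections[conn] = next(self._rotation)
        return self._connections[conn]
```

Because the payload never influences the decision, the balancer does almost no work per packet, which is where the speed of Layer 4 balancing comes from.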

2. Layer 7 Load Balancers (Application Layer)

Layer 7 load balancers work at the application layer. Instead of managing traffic based only on IP addresses and ports, they look at the request data itself, such as HTTP headers, cookies, and URLs. This lets them make more advanced decisions, like routing traffic based on a specific URL path or keeping users on the same server for the duration of a session. They’re the sophisticated traffic directors of the internet, sending each request exactly where it needs to go. This makes them a good fit for complex applications that need fine-grained control over routing.

Example: An e-commerce website might use a Layer 7 load balancer to direct image requests to dedicated image servers, enhancing resource efficiency and reducing load on the main application servers.
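A minimal Python sketch of that image-routing example, assuming image requests can be recognized by a hypothetical `/images/` path prefix; the pool and server names are placeholders. A real Layer 7 balancer would express this as routing rules in its configuration rather than in application code.

```python
from typing import List, Tuple
from urllib.parse import urlsplit

# Hypothetical routing table: (path prefix, backend pool).
ROUTES: List[Tuple[str, List[str]]] = [
    ("/images/", ["img-1", "img-2"]),
    ("/", ["app-1", "app-2", "app-3"]),
]


def choose_pool(url: str) -> List[str]:
    """Pick a backend pool by inspecting the request path (a Layer 7 decision)."""
    path = urlsplit(url).path or "/"
    # Check longer prefixes first so "/images/" wins over the catch-all "/".
    for prefix, pool in sorted(ROUTES, key=lambda route: len(route[0]), reverse=True):
        if path.startswith(prefix):
            return pool
    raise LookupError(f"no route for {path}")
```

Within the chosen pool, any of the distribution algorithms discussed in this article can then pick the individual server.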

3. Global Server Load Balancing (GSLB)

Global Server Load Balancing (GSLB) distributes traffic across servers located in different geographical regions. It’s a good fit for multi-region or global setups where low latency really matters. By directing users to the nearest or best-performing region, GSLB reduces response times and creates a smoother user experience. It also keeps the application accessible globally: if one region goes offline, traffic can be redirected to the remaining healthy regions.
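A simplified Python sketch of a GSLB decision: among regions that are currently healthy, pick the one with the lowest measured latency to the client. The region names and latency figures are hypothetical inputs; production GSLB is commonly implemented through DNS responses or anycast, using health checks together with geographic or latency data.

```python
from typing import Dict, Set


def pick_region(latency_ms: Dict[str, float], healthy: Set[str]) -> str:
    """Return the healthy region with the lowest latency to this client."""
    candidates = {region: ms for region, ms in latency_ms.items() if region in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)
```

If the closest region drops out of the healthy set, the same call simply returns the next-best region, which is how GSLB keeps the application reachable during a regional outage.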

Traffic Routing Techniques in Load Balancers

Load balancers use a variety of algorithms to determine how traffic should be distributed across servers. Here are some of the most common methods:

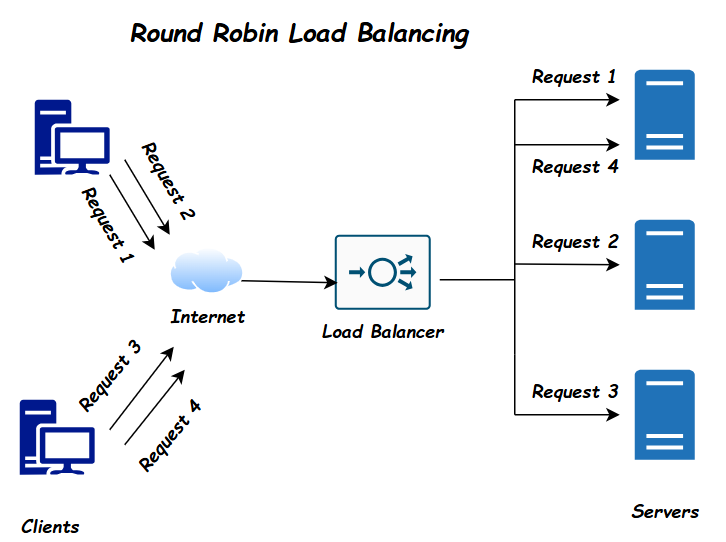

Round Robin: The Round Robin algorithm sends incoming requests to each server in turn, in a fixed circular order. It distributes traffic evenly when all servers have similar resources, but it may not be suitable for setups where server capacities or per-request loads vary.
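Round Robin fits in a few lines of Python. This sketch uses `itertools.cycle` to walk the server list in a fixed circular order; the function name and server names are placeholders.

```python
from itertools import cycle
from typing import Callable, Sequence


def make_round_robin(servers: Sequence[str]) -> Callable[[], str]:
    """Return a picker that hands out servers in a fixed circular order."""
    if not servers:
        raise ValueError("need at least one server")
    rotation = cycle(servers)
    return lambda: next(rotation)
```

For example, `pick = make_round_robin(["server-a", "server-b", "server-c"])` returns `server-a`, `server-b`, `server-c`, and then `server-a` again on successive calls, regardless of how busy each server is.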

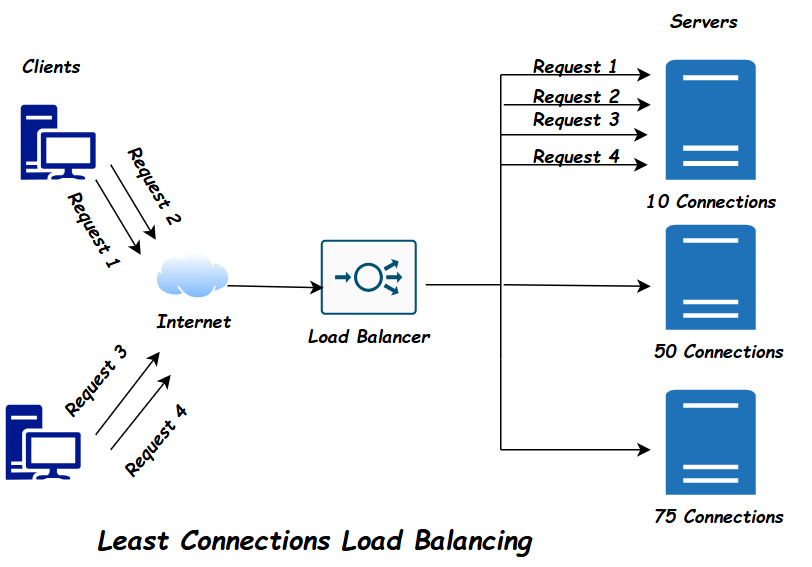

Least Connections: The Least Connections algorithm directs each new request to the server with the fewest active connections at that moment. This makes it more effective than Round Robin when request durations vary significantly, for example with long-lived connections, because busy servers naturally receive fewer new requests.
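A minimal Python sketch of Least Connections, assuming the balancer is told when a connection opens (`acquire`) and closes (`release`); the class and method names are hypothetical. Ties go to the first server in the list.

```python
from typing import Sequence


class LeastConnections:
    """Track active connections per server and pick the least loaded one."""

    def __init__(self, servers: Sequence[str]):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # min() returns the first server with the lowest count, so ties follow list order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Called when a connection to `server` closes.
        if self.active[server] > 0:
            self.active[server] -= 1
```

When one server is stuck with slow requests, its count stays high and new requests flow to the others, which a plain rotation would not do.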

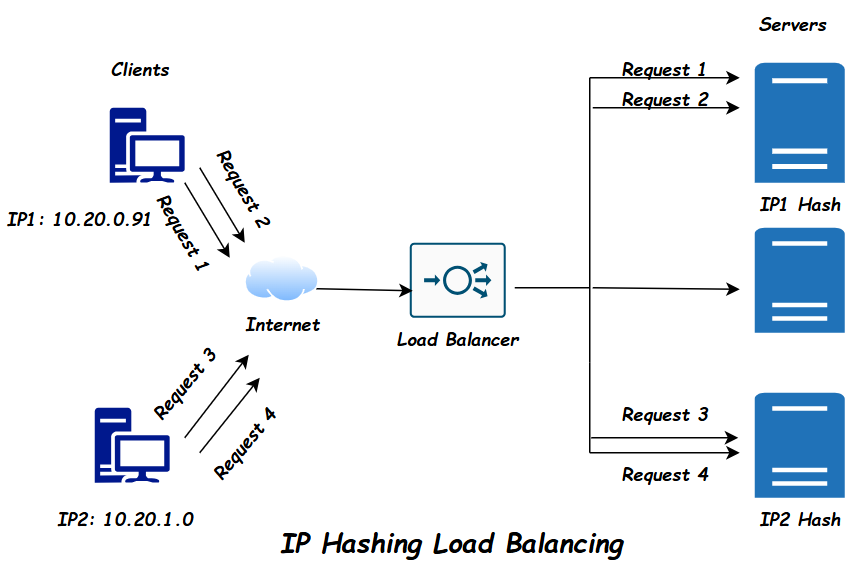

IP Hashing: IP Hashing routes incoming requests based on a hash of the client's IP address. This provides session persistence: a returning user with the same IP address is consistently directed to the same server, which is useful for applications that require session continuity. Keep in mind that many clients behind the same NAT or proxy share one IP address, and that adding or removing servers can remap clients to different servers.
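A minimal IP Hashing sketch in Python. It uses `zlib.crc32` as a stable hash, because Python's built-in `hash()` for strings is randomized per process and would break persistence across restarts; the choice of CRC32 and the function name are assumptions, not a specific product's algorithm.

```python
import zlib
from typing import Sequence


def ip_hash(client_ip: str, servers: Sequence[str]) -> str:
    """Map a client IP to a server; the same IP and server list always give the same result."""
    if not servers:
        raise ValueError("need at least one server")
    # crc32 is stable across processes, unlike Python's built-in hash() for str.
    index = zlib.crc32(client_ip.encode("ascii")) % len(servers)
    return servers[index]
```

Because the index is taken modulo the number of servers, changing the server list can move many clients to new servers; consistent hashing is a common way to limit that reshuffling.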

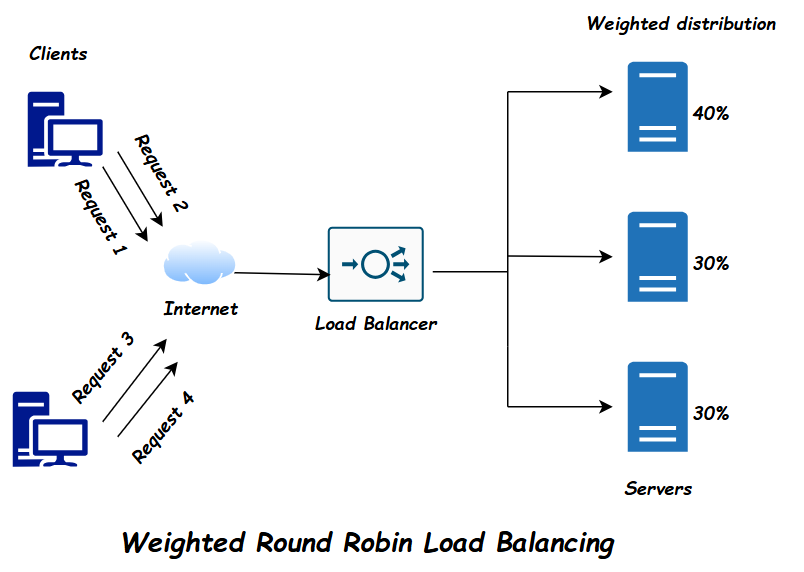

Weighted Round Robin: Weighted Round Robin extends Round Robin by assigning each server a weight based on its processing capacity. Servers with higher weights receive proportionally more requests, so traffic is distributed according to each server’s resources.
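One common way to implement Weighted Round Robin is the "smooth" variant sketched below in Python, which interleaves picks instead of sending a heavy server all of its requests in a row. The class name is hypothetical and the weights are illustrative.

```python
from typing import Dict


class SmoothWeightedRoundRobin:
    """Weighted round robin that interleaves picks instead of bunching them."""

    def __init__(self, weights: Dict[str, int]):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be positive")
        self.weights = dict(weights)
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def pick(self) -> str:
        # Every server earns credit equal to its weight...
        for server, weight in self.weights.items():
            self.current[server] += weight
        # ...the server with the most credit wins (ties go to dict order)...
        best = max(self.current, key=self.current.get)
        # ...and pays back the total weight, so heavier servers win more often.
        self.current[best] -= self.total
        return best
```

With weights `{"a": 5, "b": 1, "c": 1}`, seven consecutive picks return `a, a, b, a, c, a, a`: server `a` receives five of every seven requests, spread out rather than in one burst.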

Hardware vs. Software Load Balancers

Load balancers come in two main types: hardware-based and software-based, each having its own set of advantages.

Hardware Load Balancers are typically found in on-premises data centers. These are dedicated devices designed with specialized processing power, offering high performance. They often come with advanced features like SSL offloading and hardware acceleration, which are ideal for heavy-duty, high-traffic environments.

Software Load Balancers have become increasingly popular due to their cost-effectiveness and flexibility. They’re suitable for both cloud and on-premises setups: tools like NGINX and HAProxy, and managed cloud services such as AWS Elastic Load Balancing (ELB), can be deployed quickly and scaled across environments as needed.

In the world of modern DevOps, software load balancers are often the go-to choice. Their flexibility makes them a great fit for dynamic, CI/CD-driven workflows, allowing for faster deployment and easier scaling.

Load Balancer Use Cases

1. Web Applications

Load balancers are essential for any web application that needs to support a large number of concurrent users. By distributing HTTP requests across multiple servers, they help maintain response times during traffic spikes and protect against single points of failure.

2. Database Systems

In complex database environments, load balancers are often used to distribute queries across multiple database servers or replicas. This reduces query latency and spreads the load, typically by sending read queries to replicas while writes go to the primary.
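A simplified Python sketch of read/write splitting, assuming one primary and a list of replicas (all names hypothetical). Only plain `SELECT` statements go to replicas, in round-robin order; everything else, including `SELECT ... FOR UPDATE`, goes to the primary. Real setups also have to account for replication lag and transactions, which this sketch ignores.

```python
from itertools import cycle
from typing import Sequence


class ReadWriteRouter:
    """Route SQL statements: plain reads to replicas, everything else to the primary."""

    def __init__(self, primary: str, replicas: Sequence[str]):
        self.primary = primary
        # Round-robin over replicas; None when there are no replicas.
        self._replicas = cycle(replicas) if replicas else None

    def route(self, sql: str) -> str:
        statement = sql.lstrip().upper()
        is_plain_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        if is_plain_read and self._replicas is not None:
            return next(self._replicas)
        # Writes, locking reads, and anything unrecognized go to the primary.
        return self.primary
```

Defaulting unknown statements to the primary is the conservative choice: a misrouted read only costs some primary capacity, while a misrouted write would fail on a read-only replica.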

3. Microservices Architectures

Microservices architecture involves numerous small, independent services. Here, load balancers distribute incoming requests to the appropriate service, ensuring scalability and high availability of each service.

Advantages of Load Balancers

Enhanced Reliability: Load balancers prevent overloading of any single server, reducing the risk of failures.

Cost Efficiency: By distributing the workload, load balancers allow for optimized server usage, often enabling the use of smaller, cost-effective instances.

Scalability: Load balancers make it easier to scale applications by seamlessly adding or removing servers based on demand.

Traffic Management and Analytics: Many load balancers provide valuable insights into traffic patterns, helping to optimize resources and improve application performance.

Conclusion

Load balancers are a must-have when it comes to creating resilient and high-performing systems, especially in today’s world of distributed architectures. They don’t just make your setup more reliable and scalable; they also simplify how you manage traffic and add an extra layer of security. As you dive deeper into your DevOps journey, getting a solid understanding of how load balancers work and where to use them will help you design systems that can handle the real-world challenges of scaling and availability.

Whether you’re working on on-premises applications or building cloud-native services, load balancers are a key part of your toolkit. They keep things running smoothly by supporting both high availability and flexibility—essentials for any successful deployment.

Written by

Chethana S V

I’m a DevOps engineer passionate about automation, cloud infrastructure, and security. Currently, I’m diving into the world of DevSecOps, integrating security into the DevOps lifecycle. Always eager to learn and apply new technologies, I aim to build secure, scalable systems and share my experiences along the way through this blog.