Beyond Correlation: Giving LLMs a Symbolic Crutch with Graph-Based Logic

Gerard Sans

Large Language Models (LLMs) have achieved remarkable feats, generating human-quality text and even demonstrating some semblance of understanding. However, beneath the surface lies a fundamental limitation: they excel at correlation, not causation or true logical reasoning. LLMs are trained on massive text corpora using gradient descent optimization, learning to predict the next token in a sequence. This makes them masters of mimicking patterns and stylistic nuances, but it doesn't equip them with the tools for rigorous logical inference.

The Limits of Next-Token Prediction

The core problem is that LLMs rely heavily on statistical associations between words and phrases. They learn that certain words often follow others, but they don't grasp the underlying meaning or logical relationships between them. This makes them susceptible to errors in reasoning, especially when faced with tasks requiring:

Induction: Generalizing from specific examples to broader rules. LLMs may struggle to identify the underlying pattern if the training data doesn't explicitly cover it.

Abduction: Inferring the most plausible explanation for observed facts. LLMs may generate plausible-sounding but logically flawed explanations based on surface-level associations.

Deduction: Drawing logical conclusions from given premises. LLMs may struggle with multi-step deductions or situations requiring symbolic manipulation, as their internal representation is distributed and statistical rather than symbolic.

Graphs as a Symbolic Crutch

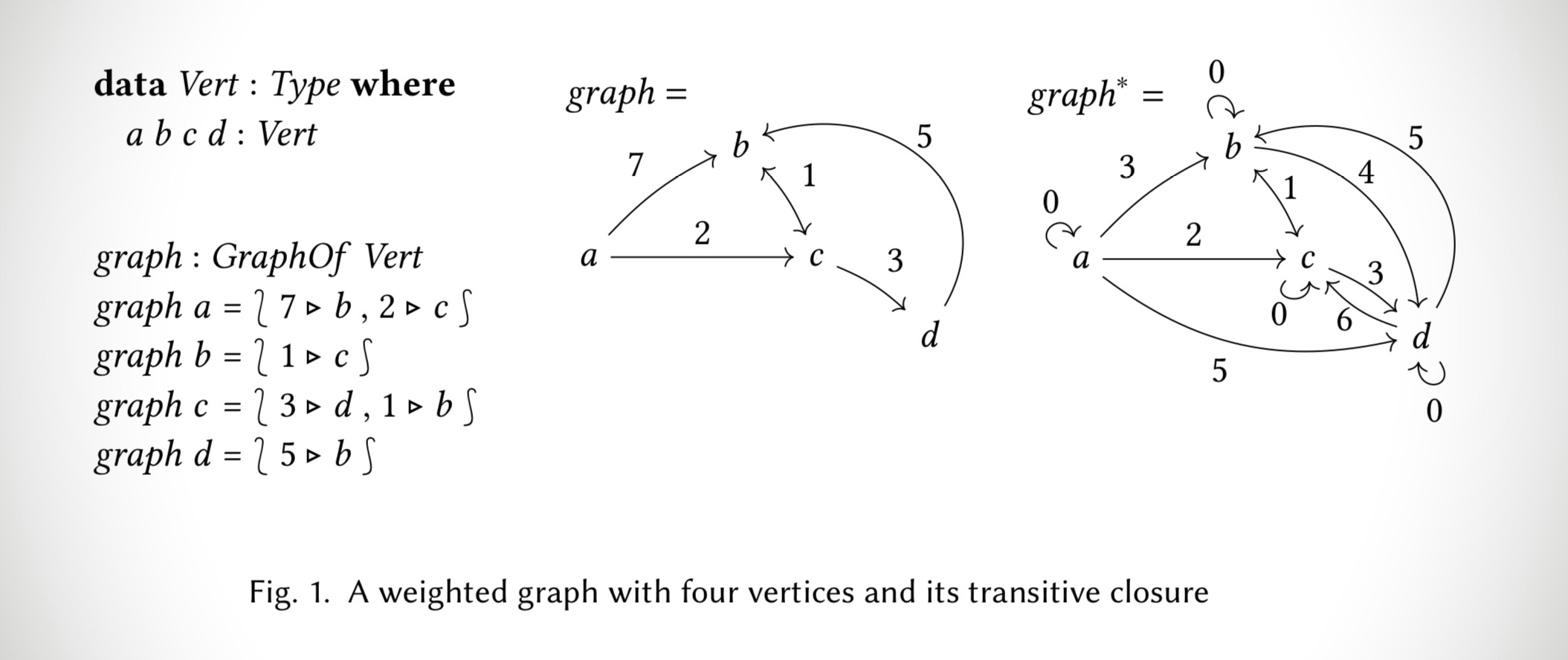

To overcome these limitations, we need to provide LLMs with a symbolic crutch – an external mechanism that explicitly represents logical relationships and supports symbolic manipulation. Graph-based logic offers a promising solution. We can represent knowledge and premises as a graph, where:

Vertices: Represent concepts, entities, or events.

Edges: Represent relationships or logical connections between vertices.

Weights: Represent the strength or certainty of these connections, or other relevant properties.

This graph becomes an external state accessible to the LLM, enabling a dynamic interaction between statistical and symbolic reasoning.

A Symbolic Dialogue

Imagine a conversation with an LLM augmented with a graph-based logic runtime:

Initial Context: The conversation begins with an initial graph representing background knowledge or a specific domain.

Premise Integration: As the conversation progresses, new information or premises are added to the graph as vertices and edges.

Query Processing: When the user poses a question or requests a logical deduction, the LLM translates the query into a graph traversal or manipulation problem.

External Runtime Inference: The graph-based logic runtime executes the corresponding graph algorithm (e.g., transitive closure, shortest path) to derive logical conclusions.

Response Generation: The LLM integrates the results from the external runtime into its response, providing a logically sound and grounded answer.

This symbolic crutch allows the LLM to offload the heavy lifting of logical inference to the external runtime, leveraging the runtime's formal guarantees and symbolic manipulation capabilities. The graph serves as a temporary scratchpad for each conversation, but it can also be extended, modified, and stored for later use. Curated subgraphs can be loaded on demand to provide specialized knowledge, and the graph can be queried directly for ad-hoc questions or fact retrieval.
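The persistence and reuse ideas can be made concrete with a small Python sketch. It assumes a graph is simply a set of `(subject, relation, object)` triples stored as JSON. The function names and file format are hypothetical choices for illustration, not a prescribed storage layer.

```python
import json
import os
import tempfile

# Assumption for this sketch: a graph is a set of (subject, relation, object) triples.
Triple = tuple[str, str, str]


def save_graph(triples: set[Triple], path: str) -> None:
    # Store the conversation scratchpad for later use (hypothetical JSON format).
    with open(path, "w", encoding="utf-8") as f:
        json.dump(sorted(triples), f)


def load_graph(path: str) -> set[Triple]:
    # JSON has no tuples, so convert each stored list back into a triple.
    with open(path, encoding="utf-8") as f:
        return {tuple(t) for t in json.load(f)}


def merge(scratchpad: set[Triple], subgraph: set[Triple]) -> set[Triple]:
    # Load a curated subgraph on demand: the union keeps all existing facts.
    return scratchpad | subgraph


def facts_about(triples: set[Triple], subject: str) -> list[Triple]:
    # Direct, ad-hoc fact retrieval without running any inference algorithm.
    return sorted(t for t in triples if t[0] == subject)


scratchpad = {("Socrates", "is_a", "man")}
curated = {("man", "is_a", "mortal"), ("Socrates", "born_in", "Athens")}
graph = merge(scratchpad, curated)

path = os.path.join(tempfile.mkdtemp(), "graph.json")
save_graph(graph, path)
print(facts_about(load_graph(path), "Socrates"))
# [('Socrates', 'born_in', 'Athens'), ('Socrates', 'is_a', 'man')]
```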

A Symbolic Riddle: Taming Gödel with Graphs

Let's illustrate the power of graph-based logic with a classic example that exposes the limitations of pure next-token prediction: the liar paradox, whose self-referential structure Gödel adapted (replacing truth with provability) in his incompleteness theorems. Consider the statement: "This statement is false."

If the statement is true, then it must be false (as it claims). But if it is false, then its claim is true, making the statement true. This self-contradictory loop highlights the challenges LLMs face when dealing with self-reference and logical paradoxes.
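The contradiction can be checked mechanically. In the Python sketch below, the function names are illustrative. It evaluates the liar's claim under each classical truth value and keeps only the assignments that agree with their own claim. None survive.

```python
def liar_claim(value_of_s: bool) -> bool:
    """Truth value of what S asserts ("S is false"), given a value for S."""
    return not value_of_s


def consistent_values() -> list[bool]:
    # A classical assignment is consistent only if S's value equals
    # the truth value of its own claim.
    return [v for v in (True, False) if liar_claim(v) == v]


for v in (True, False):
    print(f"assume S={v}: its claim evaluates to {liar_claim(v)}")
print("consistent assignments:", consistent_values())
# assume S=True: its claim evaluates to False
# assume S=False: its claim evaluates to True
# consistent assignments: []
```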

LLM Prompt and Response (Without Graph Support)

Prompt: Is the statement "This statement is false" true or false?

LLM (likely response): This statement is a classic paradox. It can't be definitively classified as true or false because it creates a self-contradictory loop.

While the LLM recognizes the paradox, its answer rests on matching a familiar pattern. It names the self-contradiction without exposing the logical structure that produces it.

LLM with Graph Support

Now, let's see how a graph-based logic runtime can help. We'll represent the statement and its truth value with a small graph.

Initial Graph: The graph starts empty.

Premise Representation: We introduce a vertex "S" representing the statement "This statement is false."

Truth Value Representation: We add a vertex "True" and a vertex "False" to represent the possible truth values.

Edge Creation: We add two edges:

S -> False (representing the statement's claim: "S is false")

False -> S (representing the implication "If S is false, then S is true": S asserts its own falsity, so if that assertion is false, S must be true).

This simple graph captures the self-referential nature of the statement and the resulting contradiction.

Prompt: Is the statement "This statement is false" true or false?

LLM (with graph support): Analyzing the statement's graph representation reveals a cycle between the statement vertex 'S' and the 'False' vertex. This cycle represents the self-contradictory loop inherent in the statement. Therefore, within the context of classical logic, the statement cannot be assigned a consistent truth value.

The LLM, guided by the graph, not only recognizes the paradox but also pinpoints the source of the contradiction: the cyclical structure. Read coinductively, the finite cycle S -> False -> S stands in for the infinite chain of evaluations "S is false, so S is true, so S is false, ...". The recursive structure can therefore be represented and analyzed without the analysis itself looping forever. The LLM now uses the graph as a tool for explicitly representing and analyzing the logical structure of the problem, moving beyond mere pattern recognition towards a more nuanced understanding of the underlying logic. More sophisticated algorithms can also be run over the same graph to analyze its logical structure and provide different kinds of responses or insights.
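Strongly connected component decomposition is one example of such an algorithm. It is our choice for illustration, not one prescribed here. It finds every self-referential cluster at once rather than a single cycle. The sketch below applies Tarjan's algorithm to the liar graph plus a hypothetical ordinary statement `Q` that simply points at `True`. Components with more than one vertex, or with a self-loop, are flagged as feedback loops.

```python
def tarjan_scc(g: dict[str, list[str]]) -> list[list[str]]:
    """Tarjan's algorithm: strongly connected components in one DFS pass."""
    index: dict[str, int] = {}
    low: dict[str, int] = {}
    on_stack: set[str] = set()
    stack: list[str] = []
    components: list[list[str]] = []
    counter = 0

    def strongconnect(v: str) -> None:
        nonlocal counter
        index[v] = low[v] = counter
        counter += 1
        stack.append(v)
        on_stack.add(v)
        for w in g.get(v, []):
            if w not in index:              # tree edge: recurse
                strongconnect(w)
                low[v] = min(low[v], low[w])
            elif w in on_stack:             # edge back into the current component
                low[v] = min(low[v], index[w])
        if low[v] == index[v]:              # v is the root of a component
            component = []
            while True:
                w = stack.pop()
                on_stack.discard(w)
                component.append(w)
                if w == v:
                    break
            components.append(sorted(component))

    for v in g:
        if v not in index:
            strongconnect(v)
    return components


def self_referential(g: dict[str, list[str]]) -> list[list[str]]:
    # A component is a feedback loop if it has several vertices or a self-loop.
    return [c for c in tarjan_scc(g) if len(c) > 1 or c[0] in g.get(c[0], [])]


graph = {
    "S": ["False"], "False": ["S"], "True": [],
    "Q": ["True"],  # hypothetical ordinary statement with no self-reference
}
print(tarjan_scc(graph))        # [['False', 'S'], ['True'], ['Q']]
print(self_referential(graph))  # [['False', 'S']]
```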

Formal Foundations: The Role of Coinductive Graphs

While not the primary focus, the paper "Formalising Graph Algorithms with Coinduction" plays a crucial role in this design by introducing the concept of coinductive graphs. These graphs can represent potentially infinite or cyclical relationships, which are often necessary for modeling complex temporal or logical dependencies. The paper's formal verification work also provides a robust basis for developing trustworthy and reliable logic runtimes, helping ensure that the implemented graph algorithms produce correct outputs.
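The Python sketch below is not the paper's formalisation. It is only an informal analogue of the intuition behind it. It assumes a graph is given solely by a successor function, so vertices are produced on demand and the graph may be infinite. A lazy generator can then explore any finite prefix of an infinite graph, and a visited set lets exploration of a finite cyclic graph terminate.

```python
from collections import deque
from itertools import islice
from typing import Callable, Iterator

# Assumption for this sketch: a graph is just a successor function, so it
# can be infinite and is only unfolded as far as a consumer demands.
Successors = Callable[[int], list[int]]


def reachable(start: int, successors: Successors) -> Iterator[int]:
    """Lazily yield reachable vertices in breadth-first order, each once."""
    seen = {start}
    frontier = deque([start])
    while frontier:
        v = frontier.popleft()
        yield v
        for w in successors(v):
            if w not in seen:
                seen.add(w)
                frontier.append(w)


# Infinite graph n -> n + 1: only the requested prefix is ever built.
print(list(islice(reachable(0, lambda n: [n + 1]), 5)))  # [0, 1, 2, 3, 4]

# Cyclic graph n -> (n + 1) % 3: the visited set makes full exploration finite.
print(list(reachable(0, lambda n: [(n + 1) % 3])))       # [0, 1, 2]
```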

The Uncharted Territory: Beyond Formal Systems

While formal symbolic systems, like the graph-based logic described above, offer a powerful tool for enhancing logical reasoning in LLMs, they also highlight a crucial limitation: the vast expanse of human knowledge and reasoning that lies outside the realm of formalization. Many aspects of human intelligence fall into this territory, including:

Common Sense: The vast, often unspoken, body of knowledge about the everyday world that humans implicitly rely on.

Axioms and Assumptions: The foundational beliefs and principles that underpin our reasoning, often taken for granted and not explicitly stated.

Tacit Knowledge: The "know-how" and practical skills that are difficult to articulate or formalize, often acquired through experience and embodied practice.

Embodied Experiences: The rich tapestry of sensory, emotional, and physical experiences that shape our understanding of the world and influence our reasoning. This includes psychological and intuitive processes, unconscious biases, and inherently anthropomorphic perspectives.

These aspects of human intelligence are challenging to capture in formal symbolic systems. While we can represent specific facts and relationships within a graph, the broader context, implicit assumptions, and embodied experiences that shape human reasoning are often left unformalized. This represents a significant challenge for building truly intelligent systems, as these unformalized premises play a crucial role in human thought and understanding. LLMs, through their statistical learning from vast amounts of text, implicitly capture some aspects of common sense and tacit knowledge. However, this capture is often superficial and unreliable, as it is based on correlation rather than true understanding.

The development of robust symbolic systems that can integrate and reason with these unformalized aspects of human intelligence remains an open challenge for the field of AI. Future research may explore hybrid approaches that combine the strengths of both statistical and symbolic AI, allowing LLMs to leverage both the richness of data-driven learning and the rigor of formal logical systems. Further, incorporating other forms of representation beyond graphs, such as those capable of capturing embodied or sensory information, could provide avenues for bridging the gap between formal systems and the uncharted territory of human experience.

Conclusion

LLMs are powerful tools, but their inherent limitations in logical reasoning hinder their potential. By providing them with a symbolic crutch in the form of graph-based logic, we can bridge this gap and unlock new possibilities for knowledge representation, reasoning, and explainability. The combination of statistical and symbolic AI promises a future where LLMs can truly understand and reason about the world, moving beyond mere correlation to achieve deeper insights and more reliable inferences.
