Integrating AI LLM in Rails with Ollama

Wilbert Aristo


Preface

Recently, my team held a 2-day hackathon to find and alleviate some pain points in our product. Developers were free to address these issues with whatever solutions they thought suitable. There were no product requirements or UI/UX specifications, which encouraged creative ideas and rapid building of an MVP to tackle each pain point.

Problem

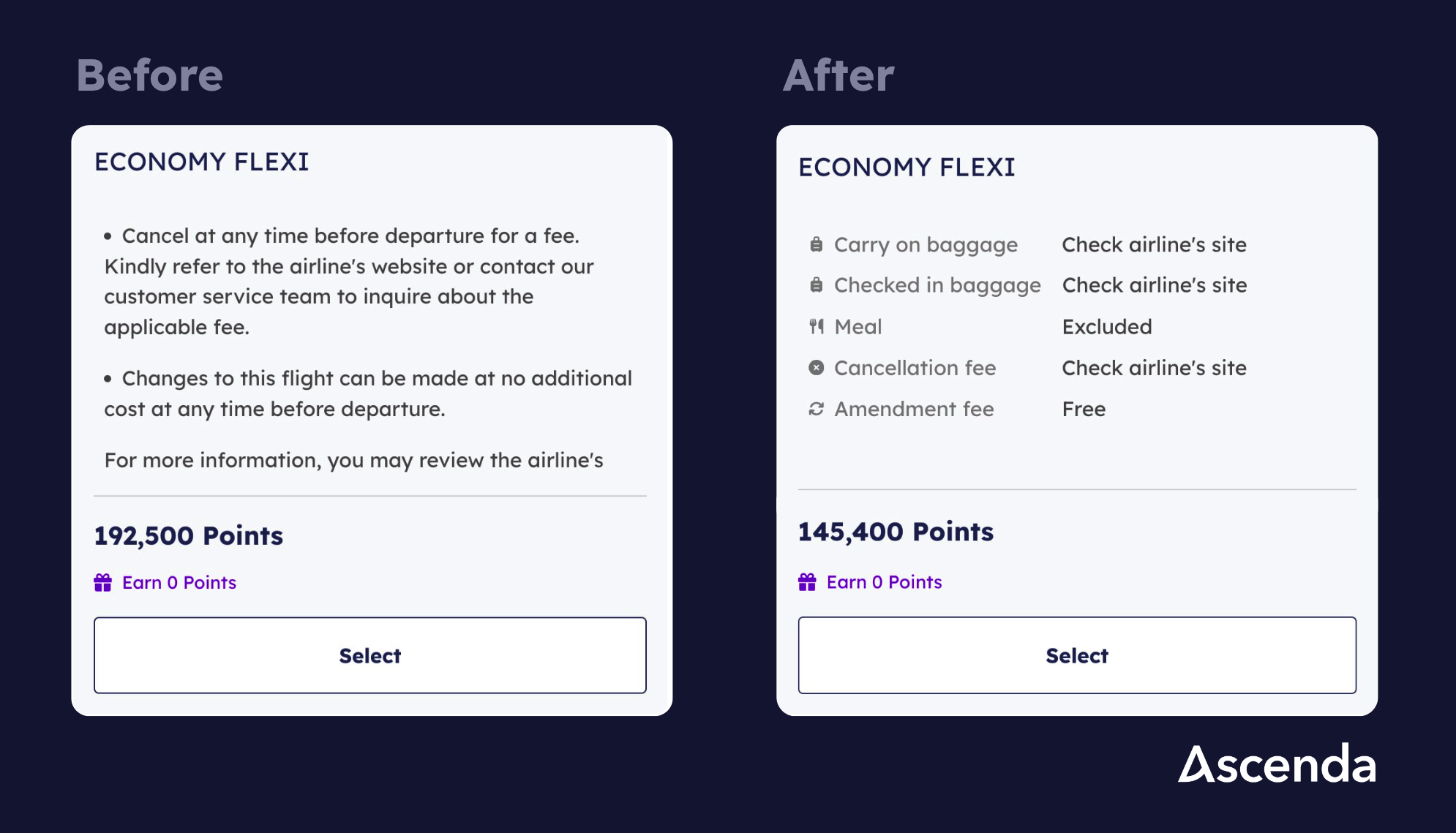

TLDR: Showing large blocks of text to explain fare type details makes it difficult for users to quickly capture important booking information at a glance.

My company’s travel product includes a flight booking portal where we collect flight options from various suppliers for our users to book. When choosing a flight, users can also select a fare type from their preferred airline. Each fare type provides different amenities and extras. For example, Singapore Airlines offers three economy fare types, each with different details on baggage allowance, seat selection, cancellation fees, and change fees.

The issue is that when we gather flight options from various airlines through different suppliers, the fare type details often come back to us as free-form, dynamic text instead of in a fixed format. For example, the details of a fare type might look like this:

This is not ideal because our users have to read long blocks of text to find key information about their booking. The team wants a setup similar to the Singapore Airlines example, where information is neatly categorized into sections like baggage allowance, meals, cancellation fees, and amendment fees. This way, users can quickly understand all the details at a glance, without being overwhelmed by text.

Solution

We know that AI models, especially LLMs, are good at summarizing key information from long text passages. We want to use this capability to create a pre-prompted model that consistently returns JSON in a fixed schema. This JSON will contain all the fare type details in an organized way.

Using Ollama, I integrated an LLM into our Rails app through the ollama-ai gem. After installing the gem, I added an initializer for Ollama, which calls a separate class to set up an Ollama client and create a custom model based on Mistral.

```ruby
# config/initializers/ollama.rb
require_relative '../../lib/ollama_app/client'

OllamaApp::Aristo.setup
```

```ruby
# lib/ollama_app/client.rb
require "ollama-ai"

module OllamaApp
  class Aristo
    def self.client
      @client ||= Ollama.new(
        credentials: { address: 'http://localhost:11434' },
        options: { server_sent_events: true }
      )
    end

    def self.setup
      client.pull({ name: 'mistral' })
      create_model
    end

    def self.create_model
      client.create({
        name: "aristo",
        modelfile: "FROM mistral\nPARAMETER temperature 0\nSYSTEM '''You are an expert in the airlines industry who is highly familiar with general terms and conditions surrounding flights. You will be given excerpts of airfare terms and conditions. You will provide a summary of these terms in a structured manner in JSON format with the following schema: {carry_on_baggage: string;checked_in_baggage: string;meal: boolean;cancellation:boolean;cancellation_fee: string;cancellation_fee_description: string;amendment:boolean;amendment_fee: string;amendment_fee_description: string;}. If 'carry_on_baggage' and 'checked_in_baggage' are not specified, change their values to empty string. If 'meal' is not specified, return false boolean. 'meal' must be true boolean if the excerpt mentions that food or meal and beverages are available. 'cancellation_fee' and 'amendment_fee' must only contain the amount of fees with their currencies, any other details must be moved to 'cancellation_fee_description' and 'amendment_fee_description' respectively. If 'cancellation_fee_description' and 'amendment_fee_description' contain descriptions indicating that the service is free, then their respective 'cancellation_fee' and 'amendment_fee' must be updated to 'Free'. If 'cancellation_fee_description' and 'amendment_fee_description' contain descriptions instructing to check airlines' website, then add 'cancellation_require_check' and 'amendment_require_check' as booleans to the result. Do not give explanations to your answer, strictly return in JSON format with the schema provided above.'''"
      })
    end
  end
end
```
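Because the initializer runs on every boot, it may be worth checking that the Ollama server is reachable before calling `setup`. The following is a minimal sketch and not part of the original setup: `ollama_reachable?` is a hypothetical helper that uses Ruby's standard `Net::HTTP` to request Ollama's `/api/tags` endpoint (which lists local models). It assumes a plain HTTP address such as the `http://localhost:11434` used above.

```ruby
require "net/http"
require "uri"

# Hypothetical helper (not from the original code): returns true only if
# the Ollama server at `address` answers GET /api/tags with a 2xx status.
# Assumes plain HTTP; any connection error or timeout counts as unreachable.
def ollama_reachable?(address, timeout: 2)
  uri = URI.join(address, "/api/tags")
  Net::HTTP.start(uri.host, uri.port, open_timeout: timeout, read_timeout: timeout) do |http|
    http.get(uri.path).is_a?(Net::HTTPSuccess)
  end
rescue StandardError
  false
end

# Possible use in the initializer (sketch):
#   OllamaApp::Aristo.setup if ollama_reachable?("http://localhost:11434")
```

With a guard like this, a stopped Ollama server would skip model setup instead of raising during Rails boot. The trade-off is that the custom model is then not created until the next successful boot.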

To ensure the model converts long text blocks into structured JSON every time, we need to pre-prompt our custom model with the JSON schema and specific constraints. More importantly, we must clearly instruct the model to never explain its answer and to return only a JSON object. Any explanatory text around the JSON would make the response invalid JSON, causing an error when we try to parse it later. I included the prompt in the create_model method above. Here’s a more readable version of the prompt that I used:

*Note: Remove any newlines from the prompt below when passing it into the modelfile argument of the create_model method above, as Ollama is sensitive to newline characters (e.g. \n). It should be one long string like the example above.*

```text
'''You are an expert in the airlines industry who is highly familiar
with general terms and conditions surrounding flights. You will be given
excerpts of airfare terms and conditions. You will provide a summary of
these terms in a structured manner in JSON format with the following
schema:
{
  carry_on_baggage: string;
  checked_in_baggage: string;
  meal: boolean;
  cancellation: boolean;
  cancellation_fee: string;
  cancellation_fee_description: string;
  amendment: boolean;
  amendment_fee: string;
  amendment_fee_description: string;
}
If 'carry_on_baggage' and 'checked_in_baggage' are not specified, change
their values to empty string. If 'meal' is not specified, return false
boolean. 'meal' must be true boolean if the excerpt mentions that food
or meal and beverages are available. 'cancellation_fee' and
'amendment_fee' must only contain the amount of fees with their
currencies, any other details must be moved to
'cancellation_fee_description' and 'amendment_fee_description'
respectively. If 'cancellation_fee_description' and
'amendment_fee_description' contain descriptions indicating that the
service is free, then their respective 'cancellation_fee' and
'amendment_fee' must be updated to 'Free'. If
'cancellation_fee_description' and 'amendment_fee_description' contain
descriptions instructing to check airlines' website, then add
'cancellation_require_check' and 'amendment_require_check' as booleans
to the result. Do not give explanations to your answer, strictly return
in JSON format with the schema provided above.'''
```
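One way to keep the prompt readable in source while still passing a single-line string is to collapse the whitespace in code. This is a minimal sketch under that assumption. `single_line` and `build_modelfile` are hypothetical helper names, and the prompt here is a shortened excerpt rather than the full text.

```ruby
# Hypothetical helpers for illustration; the names are not from the original code.

# Collapses every run of whitespace (spaces, tabs, newlines) into one space.
def single_line(text)
  text.gsub(/\s+/, " ").strip
end

# Assembles a Modelfile string in the same shape as the one passed to create_model:
# three directives separated by "\n", with the SYSTEM prompt on a single line.
def build_modelfile(base:, temperature:, system_prompt:)
  [
    "FROM #{base}",
    "PARAMETER temperature #{temperature}",
    "SYSTEM '''#{single_line(system_prompt)}'''"
  ].join("\n")
end

# Shortened excerpt of the prompt, kept readable with a heredoc.
readable_prompt = <<~PROMPT
  You are an expert in the airlines industry who is highly familiar
  with general terms and conditions surrounding flights.
  Do not give explanations to your answer, strictly return
  in JSON format with the schema provided above.
PROMPT

modelfile = build_modelfile(base: "mistral", temperature: 0, system_prompt: readable_prompt)
puts modelfile
```

The resulting string could then be passed as the `modelfile:` value, so the source file holds the readable version and the single-line form is derived from it.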

With this setup, we will have an Ollama client with our custom model ready whenever we start a Rails server. The final step is to use Ollama in a service or decorator that adds the fare type details, including a caching mechanism to avoid re-running inference on text we have already summarized. In my case, I created a new model for the cache to make the code cleaner.

```ruby
# decorators/ollama/infer_fare_type_text.rb
require "digest"
require "json"

module Ollama
  class InferFareTypeText
    # fare_class_description is an array of dynamic strings
    # containing fare type details from multiple suppliers
    def call(fare_class_description)
      raw_text = fare_class_description.join(", ")

      # Hash the text so identical descriptions share one cache entry
      fare_metadata_hash = Digest::MD5.hexdigest(raw_text)
      cached_fare_metadata = FareMetadataCache.find(fare_metadata_hash)
      return cached_fare_metadata unless cached_fare_metadata.blank?

      response = OllamaApp::Aristo.client.generate({
        model: "aristo",
        prompt: "Summarize '#{raw_text}'",
        stream: false
      })

      # The model's reply text is in the 'response' field of the first event
      json_result = JSON.parse(response[0]['response'])
      FareMetadataCache.save(fare_metadata_hash, json_result)
      json_result
    end
  end
end
```
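The `JSON.parse` call in the decorator raises `JSON::ParserError` if the model wraps its JSON in any extra prose. A more defensive parser might look like the sketch below. `parse_fare_json` and `expected_fare_keys` are hypothetical names, and the approach (take the span from the first `{` to the last `}`) is an assumption that would break if the surrounding prose itself contained braces.

```ruby
require "json"

# Hypothetical helpers, not from the original post.

# The nine keys named in the prompt's schema.
def expected_fare_keys
  %w[carry_on_baggage checked_in_baggage meal
     cancellation cancellation_fee cancellation_fee_description
     amendment amendment_fee amendment_fee_description]
end

# Returns the parsed Hash if `reply` contains a JSON object with all
# expected keys, or nil if no such object can be extracted.
def parse_fare_json(reply)
  start  = reply.index("{")
  finish = reply.rindex("}")
  return nil if start.nil? || finish.nil? || finish < start

  parsed = JSON.parse(reply[start..finish])
  return nil unless parsed.is_a?(Hash)

  (expected_fare_keys - parsed.keys).empty? ? parsed : nil
rescue JSON::ParserError
  nil
end
```

A caller could treat a `nil` result as "do not cache, fall back to the raw text". Ollama's generate endpoint also documents a `format: "json"` option that constrains the output to valid JSON. Whether and how the gem forwards that option is not something this sketch relies on.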

The FareMetadataCache model uses Rails' built-in caching library (Rails.cache):

```ruby
# models/ollama/fare_metadata_cache.rb
require "json"

module Ollama
  module FareMetadataCache
    extend self

    def save(hashed_fare_text, content)
      Rails.cache.write(key(hashed_fare_text), JSON.generate(content), expires_in: 1.week)
    end

    # Returns the cached Hash, or an empty Hash on a cache miss.
    # JSON.parse needs a String, so the miss is handled before parsing.
    def find(hashed_fare_text)
      cached = Rails.cache.read(key(hashed_fare_text))
      cached ? JSON.parse(cached) : {}
    end

    def key(hashed_fare_text)
      "fare-metadata-#{hashed_fare_text}"
    end
  end
end
```
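To see the caching flow without Rails or a running Ollama server, here is a minimal, self-contained sketch. It rests on stand-ins that are not part of the original code: a plain Hash plays the role of `Rails.cache`, a lambda plays the role of the model call, and `fetch_fare_metadata` is a hypothetical name.

```ruby
require "digest"
require "json"

# Hypothetical cache-aside helper. `store` is any Hash-like object standing
# in for Rails.cache; `infer` is a callable standing in for the Ollama call.
def fetch_fare_metadata(descriptions, store:, infer:)
  raw_text = descriptions.join(", ")
  key = "fare-metadata-#{Digest::MD5.hexdigest(raw_text)}"

  # Cache hit: return the stored JSON without calling the model.
  cached = store[key]
  return JSON.parse(cached) if cached

  # Cache miss: run inference once, then store the serialized result.
  result = infer.call(raw_text)
  store[key] = JSON.generate(result)
  result
end

calls = 0
fake_infer = lambda do |_text|
  calls += 1
  { "meal" => true, "amendment_fee" => "Free" }
end

store = {}
texts = ["Meal included", "Free changes"]
first  = fetch_fare_metadata(texts, store: store, infer: fake_infer)
second = fetch_fare_metadata(texts, store: store, infer: fake_infer)
puts "model calls: #{calls}"
```

The second lookup is served from the store, so the fake model is invoked only once for identical text. Unlike the Rails version, this stand-in has no expiry.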

With this setup, the frontend will always receive consistent fare type metadata in JSON format for each fare type, following the schema we previously defined for the model.

```json
{
  "carry_on_baggage": "Check airline's site",
  "checked_in_baggage": "Check airline's site",
  "meal": false,
  "cancellation": true,
  "cancellation_fee": "Check airline's site",
  "cancellation_fee_description": "Check airline's site",
  "amendment": true,
  "amendment_fee": "Free",
  "amendment_fee_description": "Allowed"
}
```

Since the fare type details are now returned in a fixed format, the frontend can easily render an organized UI using this structure. This means users get all the important information at a glance without being overwhelmed by too much text. Better UX leads to smoother bookings, resulting in happier users! 🧳💻

Conclusion

Tackling this problem in a hackathon setting allowed me to try something new and improve my product-thinking skills. It's exciting to see how accessible AI has become for developers, and I look forward to exploring its potential to create even better features.

If you have any suggestions or improvements, please leave a comment on this blog! You can also connect with me on LinkedIn if you’d like to chat more. See you in the next blog! :)


Written by

Wilbert Aristo


Hi! I am a senior software engineer based in sunny Singapore. ☀️ My current work revolves around the full-stack development of loyalty platforms for major banks and financial institutions across the globe at Ascenda. Outside of work, I am a Web3 enthusiast who closely follows developments around cryptocurrency, smart contracts, and NFTs. Oh, and sometimes I DJ for clubs and private events 🎧