The Evolution of AI Minds: Understanding Cognitive Architecture in Modern LLMs

Ali Pala

In the rapidly evolving landscape of artificial intelligence, one concept has emerged as a crucial framework for understanding how AI systems think and operate: cognitive architecture. As we push the boundaries of what Large Language Models (LLMs) can achieve, understanding their cognitive architecture becomes essential for building truly autonomous systems. Let's dive deep into this fascinating intersection of artificial intelligence and system design.

The Blueprint of Artificial Thought

Imagine building a house. You wouldn't start without architectural plans that detail every room, corridor, and connection. Similarly, cognitive architecture in AI systems serves as the blueprint for how they process information, make decisions, and interact with the world. The term itself comes from cognitive science; Flo Crivello is credited with applying it to LLM-based systems, where it captures their dual nature: they combine cognitive processes (the thinking part) with architectural design (the engineering part).

The Autonomy Spectrum: From Simple Scripts to Intelligent Agents

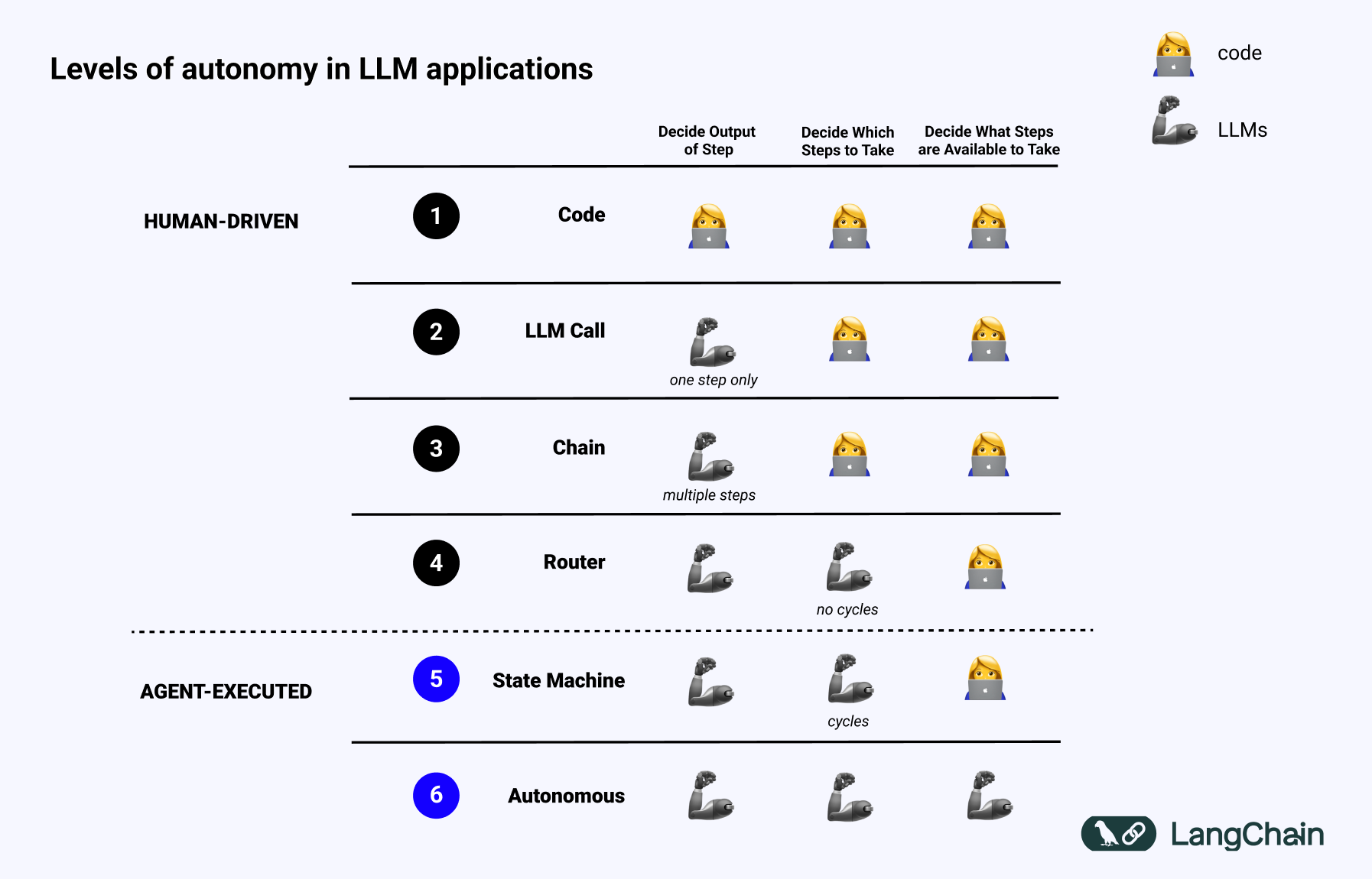

The journey from basic code to fully autonomous systems follows a fascinating progression, each level bringing new capabilities and challenges:

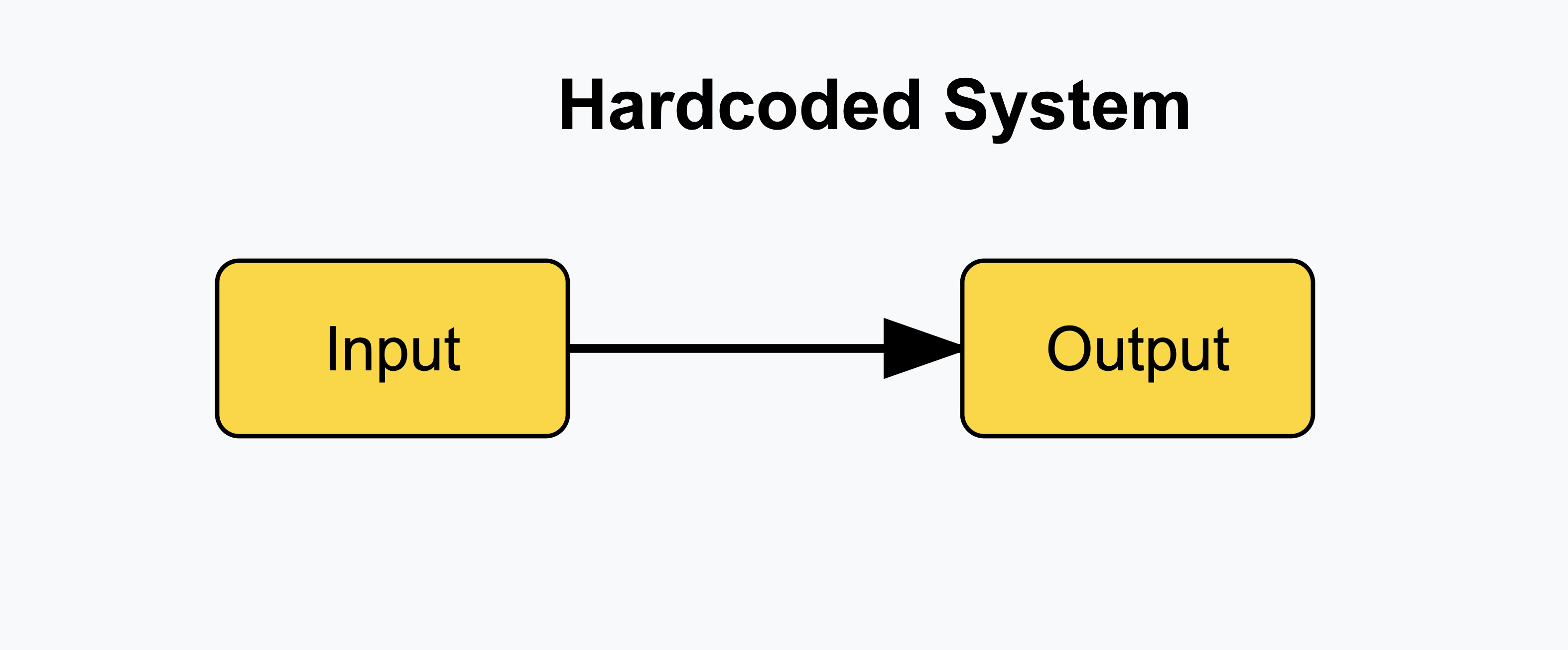

1. Hardcoded Systems: The Foundation

At the most basic level, we have systems that follow predetermined rules. Like a calculator, they're reliable but inflexible. There's no real "thinking" involved – just execution of predefined instructions.
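To make this level concrete, here is a minimal sketch of a hardcoded system. The ticket categories and the `route_ticket` name are illustrative assumptions, not something taken from a real product; the point is only that every decision is written by the developer ahead of time.

```python
def route_ticket(message: str) -> str:
    """Hardcoded rules: no model is involved, only predefined branches."""
    text = message.lower()
    if "refund" in text:
        return "billing"
    if "password" in text:
        return "account"
    # Anything the rules did not anticipate falls into a catch-all bucket.
    return "general"


if __name__ == "__main__":
    print(route_ticket("I forgot my password"))
```

The same input always yields the same output, which is exactly the reliability (and the inflexibility) described above.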

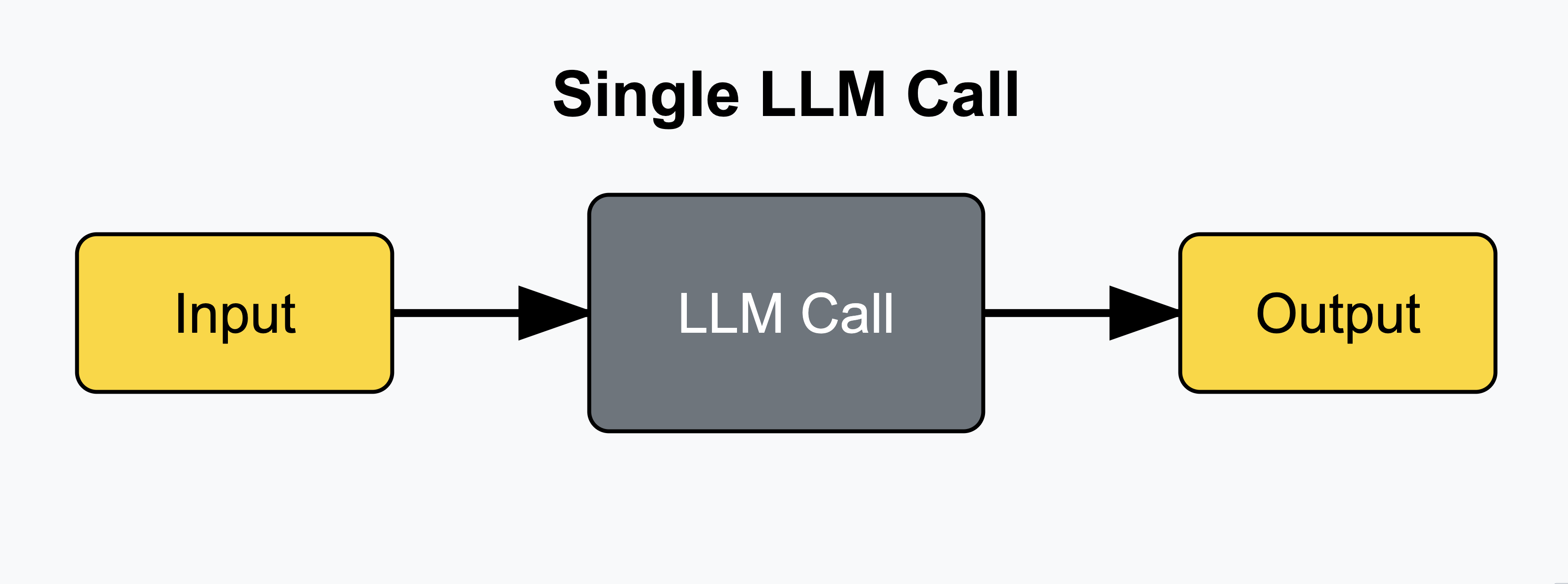

2. Single LLM Call: The First Step

This is where AI begins to show its potential. Think of it as a simple conversation – you ask a question, and the AI provides one thoughtful response. While basic, this architecture powers many of today's chatbots and simple AI applications.
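A single-call architecture might look like the sketch below. `stub_llm` is a hypothetical stand-in for a real model client (it is an assumption so the example runs offline); in practice you would pass in a function that calls your provider of choice.

```python
from typing import Callable

# Any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model; returns a canned reply."""
    return f"[model reply to: {prompt}]"


def answer(question: str, llm: LLM = stub_llm) -> str:
    """One prompt in, one completion out. The application code decides everything else."""
    prompt = f"Answer concisely.\n\nQuestion: {question}"
    return llm(prompt)


if __name__ == "__main__":
    print(answer("What is a cognitive architecture?"))
```

The model shapes the content of the response, but the control flow is still entirely fixed by the developer.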

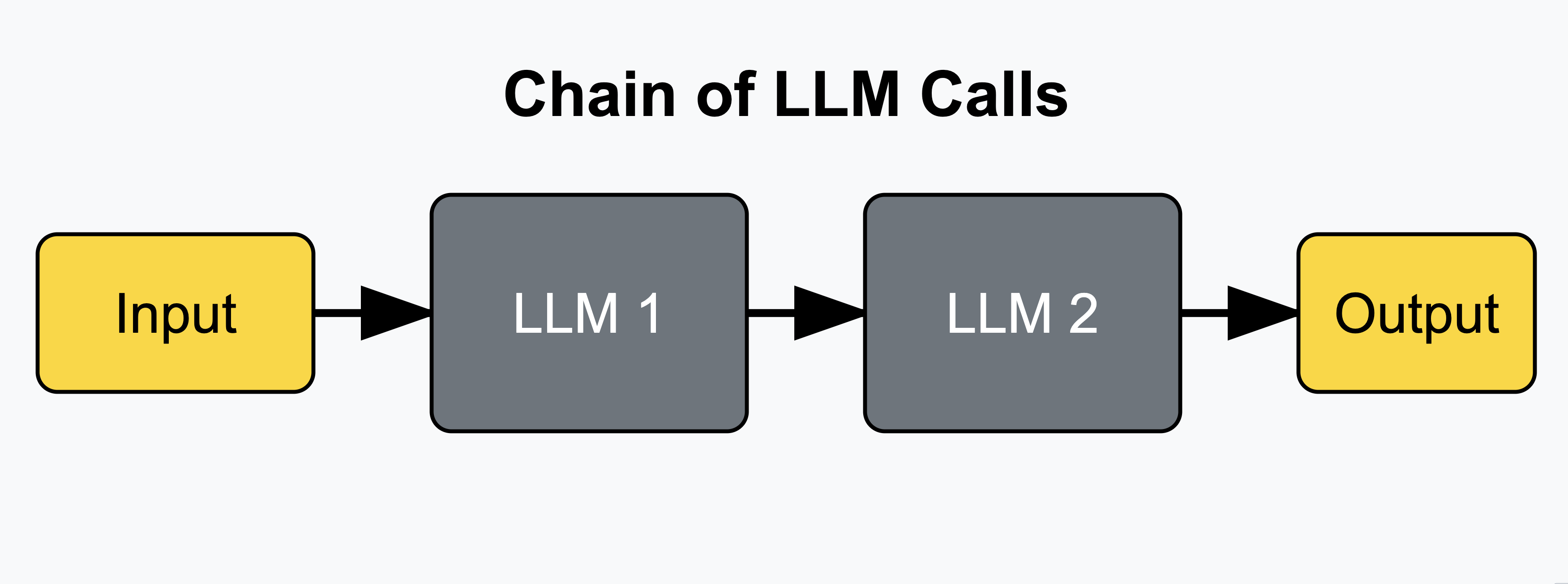

3. Chain of LLM Calls: The Orchestrator

Here, the system breaks down complex tasks into manageable steps. Like a well-orchestrated symphony, each LLM call builds upon the previous one, creating a more sophisticated response. This architecture excels in tasks requiring multiple stages of reasoning or different types of processing.
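As a rough illustration of a chain, the sketch below feeds the output of one call into the next. The summarize-then-translate task and the `stub_llm` function are assumptions chosen for the example; the stub simply wraps its input so the flow of data between steps is visible.

```python
from typing import Callable

LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model: wraps the body in the instruction name."""
    instruction, _, body = prompt.partition("\n")
    return f"{instruction}({body})"


def summarize_then_translate(document: str, llm: LLM = stub_llm) -> dict[str, str]:
    """Two fixed steps, always run in the same order."""
    summary = llm(f"Summarize\n{document}")
    # The second call consumes the first call's output, not the original document.
    translation = llm(f"Translate to French\n{summary}")
    return {"summary": summary, "translation": translation}


if __name__ == "__main__":
    print(summarize_then_translate("a long report"))
```

The sequence of steps is still decided by the developer; the model only fills in each step's output.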

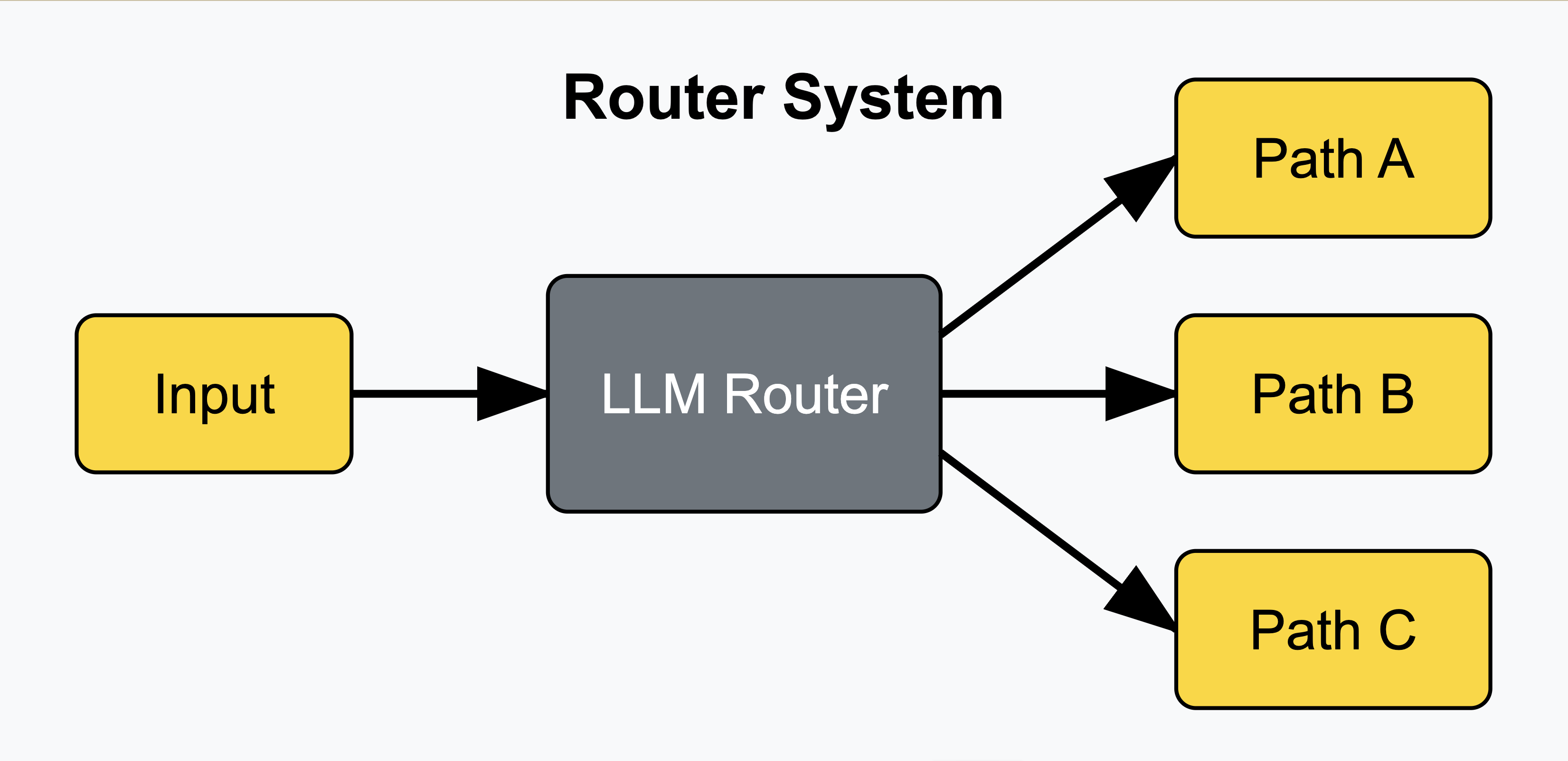

4. Router Systems: The Decision Maker

At this level, the AI gains the ability to choose its path. Instead of following a predetermined sequence, it can decide which steps to take based on the input it receives. This introduces an element of adaptability and unpredictability – key characteristics of more advanced AI systems.
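A router can be sketched as one classification call whose answer selects the branch to run. Everything named here (`stub_llm`, the two handlers, the labels) is a hypothetical example; the keyword check inside the stub only simulates what a real model would decide.

```python
from typing import Callable

LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model acting as a classifier."""
    return "billing" if "refund" in prompt.lower() else "technical"


def handle_billing(message: str) -> str:
    return f"billing team: {message}"


def handle_technical(message: str) -> str:
    return f"technical team: {message}"


HANDLERS: dict[str, Callable[[str], str]] = {
    "billing": handle_billing,
    "technical": handle_technical,
}


def route(message: str, llm: LLM = stub_llm) -> str:
    """The model's output, not the developer's code, picks the next step."""
    label = llm(f"Classify as one of {sorted(HANDLERS)}: {message}").strip().lower()
    # Guard against labels the model invents by falling back to a default handler.
    handler = HANDLERS.get(label, handle_technical)
    return handler(message)


if __name__ == "__main__":
    print(route("I need a refund"))
```

The fallback handler is one simple way to contain the unpredictability that comes with letting the model choose the path.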

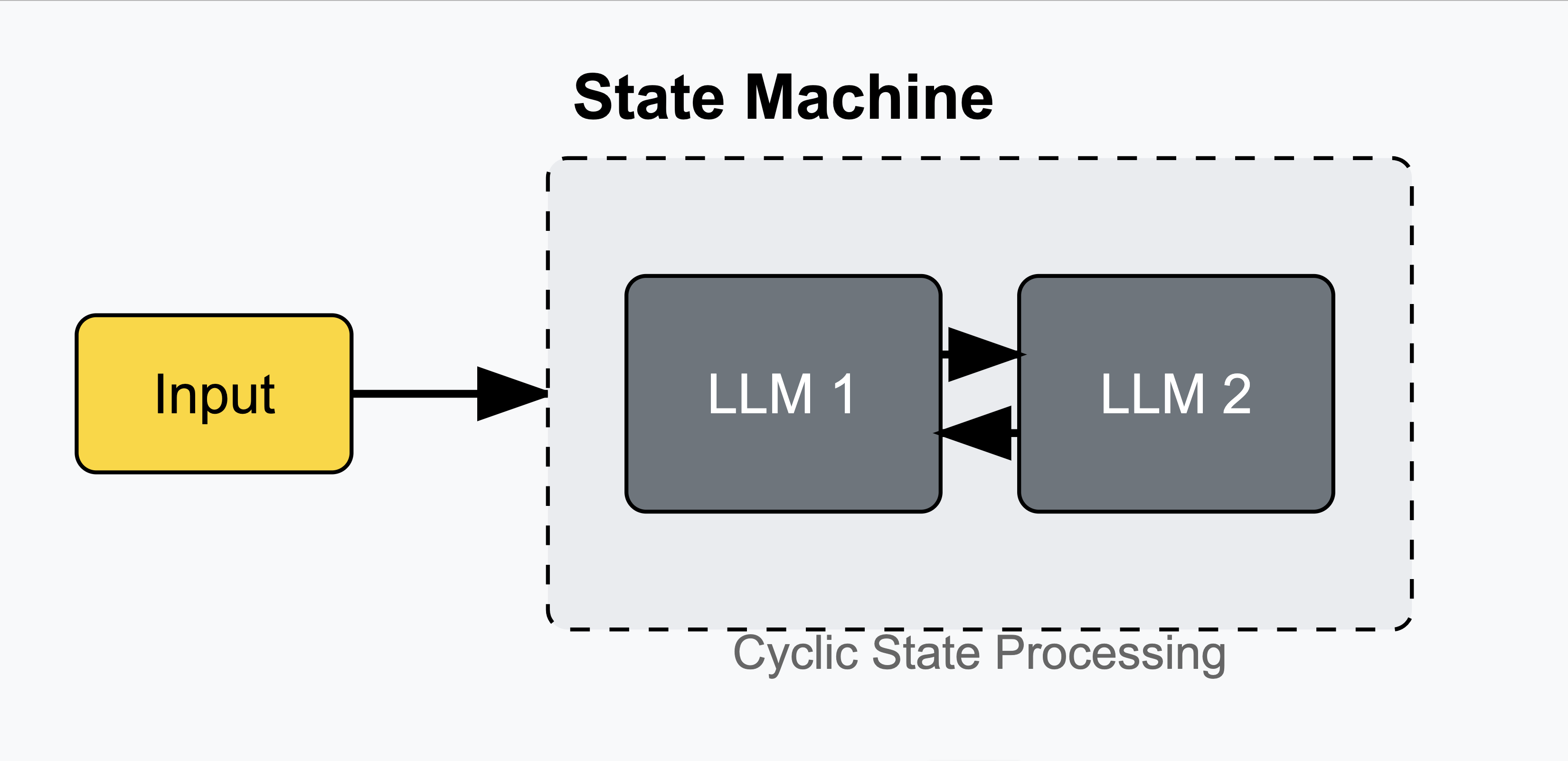

5. State Machines: The Strategic Thinker

Combining routing capabilities with loops creates a more dynamic system. Like a chess player thinking several moves ahead, these systems can maintain context and adjust their strategy over multiple iterations. The potential for unlimited LLM calls makes them powerful but also more complex to control.
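The sketch below shows one possible state machine: a write/review loop that keeps cycling until the reviewer approves. The states, the `make_stub_llm` helper, and the `max_steps` cap are all assumptions for illustration; the cap is there because a loop driven by model output could otherwise run indefinitely.

```python
from typing import Callable

LLM = Callable[[str], str]


def make_stub_llm() -> LLM:
    """Hypothetical stand-in for a model: the reviewer approves on its third review."""
    reviews = 0

    def llm(prompt: str) -> str:
        nonlocal reviews
        if prompt.startswith("REVIEW"):
            reviews += 1
            return "approve" if reviews >= 3 else "revise"
        # A "WRITE" call returns the draft with a marker appended.
        return prompt.split(":", 1)[1].strip() + "+"

    return llm


def write_with_review(task: str, llm: LLM, max_steps: int = 10) -> tuple[str, int]:
    """Loop between 'write' and 'review' until approved or the step budget runs out."""
    state, draft, steps = "write", task, 0
    while state != "done" and steps < max_steps:
        steps += 1
        if state == "write":
            draft = llm(f"WRITE: {draft}")
            state = "review"
        elif state == "review":
            verdict = llm(f"REVIEW: {draft}")
            # The model's verdict decides whether the loop continues.
            state = "done" if verdict == "approve" else "write"
    return draft, steps


if __name__ == "__main__":
    print(write_with_review("essay", make_stub_llm()))
```

The number of LLM calls is no longer known in advance, which is why an explicit budget such as `max_steps` is a common safeguard.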

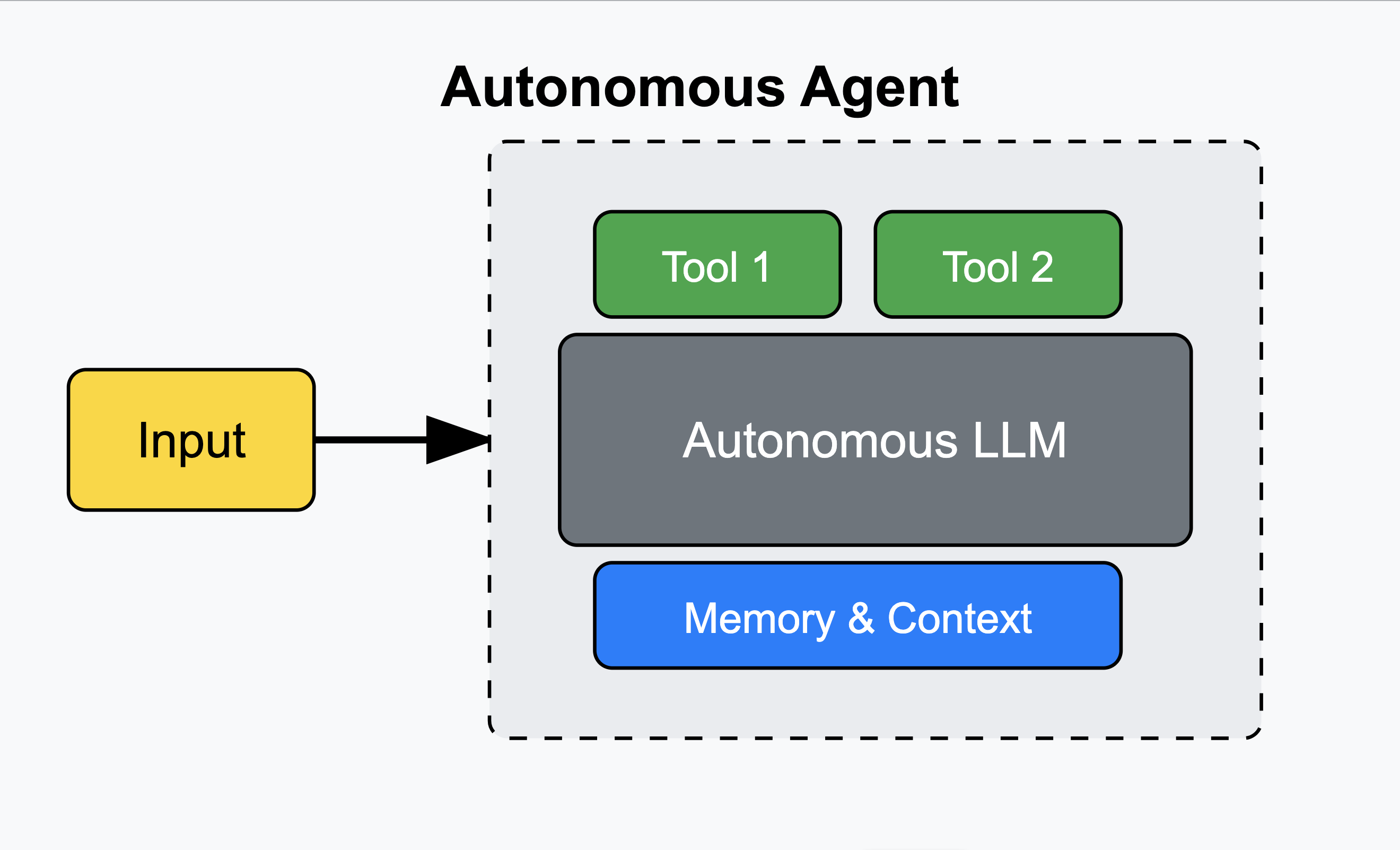

6. Autonomous Agents: The Independent Mind

At the pinnacle of current cognitive architectures, autonomous agents represent the highest level of AI independence. These systems not only decide their actions but can also modify their own instructions and available tools. In practical applications, they are arguably the closest we have come to artificial general intelligence.
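A very small sketch of this idea follows. It is a toy under stated assumptions, not how any particular framework implements agents: `scripted_policy` is a hypothetical stand-in for the model, and `TOOL_LIBRARY`, `enable_tool`, and `set_instructions` are made-up names. What it shows is an agent whose own decisions change its instructions and its tool set.

```python
from typing import Callable

Tool = Callable[[str], str]
# The policy stands in for the LLM: it sees the agent's state and returns (action, argument).
Policy = Callable[[str, list[str], str, list[str]], tuple[str, str]]

# Hypothetical library of tools the agent is allowed to enable for itself.
TOOL_LIBRARY: dict[str, Tool] = {"upper": str.upper}


class Agent:
    def __init__(self, policy: Policy, max_steps: int = 5) -> None:
        self.instructions = "Be helpful."
        self.tools: dict[str, Tool] = {"echo": lambda s: s}
        self.policy = policy
        self.max_steps = max_steps

    def run(self, goal: str) -> list[str]:
        log: list[str] = []
        for _ in range(self.max_steps):
            action, arg = self.policy(self.instructions, sorted(self.tools), goal, log)
            if action == "finish":
                break
            if action == "set_instructions":
                self.instructions = arg  # the agent rewrites its own prompt
            elif action == "enable_tool" and arg in TOOL_LIBRARY:
                self.tools[arg] = TOOL_LIBRARY[arg]  # the agent extends its own tool set
            elif action in self.tools:
                log.append(self.tools[action](arg))
        return log


def scripted_policy(instructions: str, tool_names: list[str], goal: str, log: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for a model's decisions, scripted so the example is deterministic."""
    if "upper" not in tool_names:
        return ("enable_tool", "upper")
    if "concise" not in instructions:
        return ("set_instructions", instructions + " Be concise.")
    if not log:
        return ("upper", goal)
    return ("finish", "")


if __name__ == "__main__":
    print(Agent(scripted_policy).run("hi"))
```

Restricting self-modification to an allowlist (`TOOL_LIBRARY`) and capping steps are two ways to keep such a system controllable while still letting it reshape itself.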

The Engineering Challenge: Why Cognitive Architecture Matters

Jeff Bezos has famously pointed to breweries that, in the early days of electrification, generated their own electricity: the effort did nothing to make their beer taste better. The same question applies here: does controlling cognitive architecture truly add value to AI applications? The answer, at least for now, is a resounding yes.

First, building complex agents that function reliably is incredibly challenging. Companies that master this capability gain a significant competitive advantage. Second, in many modern AI applications, the cognitive architecture isn't just a means to an end – it is the product. Whether it's AI-powered coding assistants or customer support agents, the way the system thinks directly determines its value.

Looking Ahead: The Future of Cognitive Architecture

As we continue to push the boundaries of AI capabilities, the importance of cognitive architecture will only grow. The challenge lies not just in building more powerful systems, but in creating architectures that are:

- Scalable and reliable in production environments
- Customizable to specific use cases
- Transparent and debuggable
- Independent yet controllable

Tools like LangChain and LangGraph are leading the way in providing flexible, powerful frameworks for experimenting with different cognitive architectures. They represent a shift from simple, off-the-shelf solutions to highly customizable, low-level orchestration frameworks that give developers full control over their AI systems' thinking processes.

Conclusion: Building Tomorrow's AI Today

Understanding cognitive architecture isn't just academic – it's crucial for anyone working with advanced AI systems. As we move toward more sophisticated AI applications, the ability to design and control these architectures will become a key differentiator between successful and unsuccessful implementations.

The future belongs to those who can master this delicate balance: building AI systems that are autonomous enough to be truly useful, yet controlled enough to be reliable and trustworthy. As we continue this journey, cognitive architecture will remain at the heart of AI innovation, shaping how we build and interact with artificial minds.
