Load Balancer-Basics

Subbu Tech Tutorials

A load balancer is a device or software component that distributes network traffic across multiple servers to improve the performance and reliability of an application or service. It acts as a single point of contact for clients, sitting between the user and the server group.

Load Balancer Workflow for example.com:

1. Client Request:

- Users request example.com via their browsers.

2. Load Balancer:

- Receives requests from clients.

- Checks backend servers for availability and load.

3. Select Backend Server:

- Chooses the optimal server (e.g., Server A, B, or C) based on load-balancing algorithms.

4. Process Request:

- Selected server processes the request and sends the response back to the load balancer.

5. Send Response to Client:

- Load balancer forwards the server’s response back to the client.

       Client 1       Client 2      Client 3
           |              |             |
           v              v             v
    +------------------------------------------+
    |              Load Balancer               |
    +------------------------------------------+
           |              |             |
           v              v             v
       Server A       Server B      Server C

The whole process is performed efficiently, with minimal processing time, so the end user is never aware of what is going on in the background and gets the best possible experience.

Key Roles of Load Balancers

1. Traffic Distribution:

A load balancer splits incoming requests among servers in a cluster.

For example, if you have an e-commerce website, the load balancer distributes shopper requests across several servers, ensuring no single server is overwhelmed and making the website faster for everyone.

2. High Availability:

Load balancers detect server issues. If one server fails, they redirect traffic to healthy servers.

Imagine a bank’s online portal: if one server goes down, the load balancer ensures users are rerouted to another server, so the service stays up without interruptions.

3. Scalability:

Load balancers support growth by allowing more servers to be added as traffic increases.

In high-demand scenarios like a flash sale, a load balancer can route traffic to additional servers to handle the surge, preventing crashes.

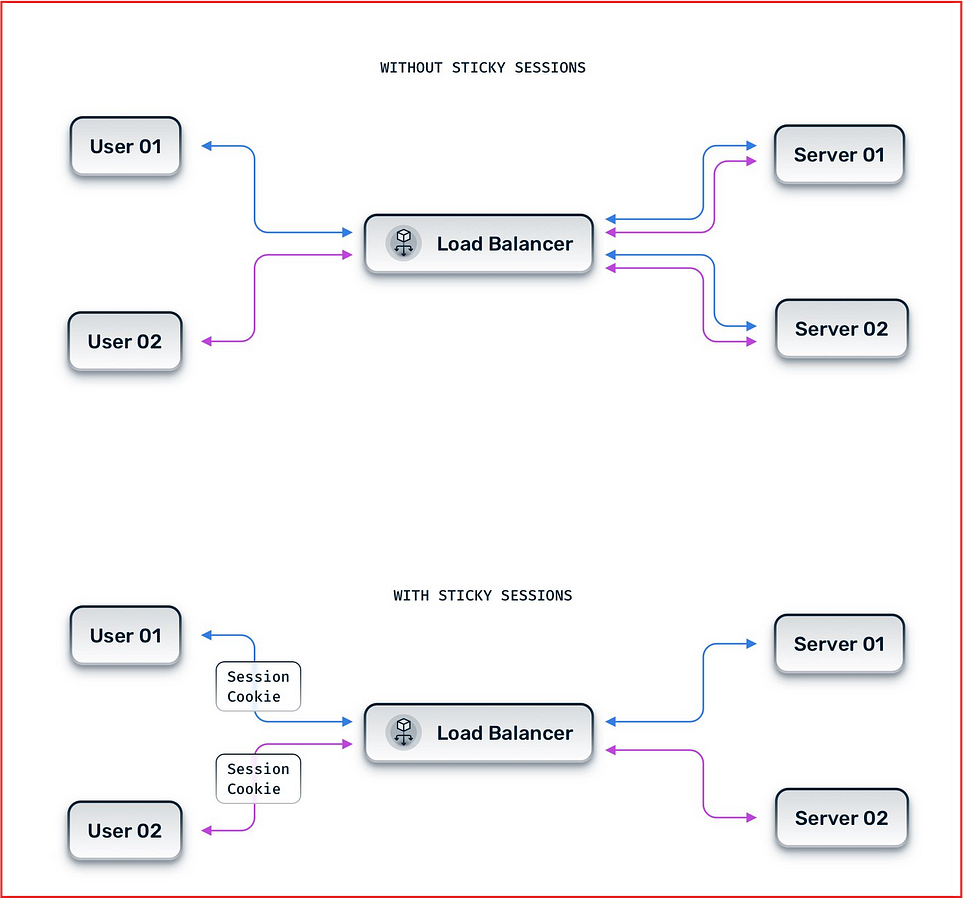

4. Session Persistence (Sticky Sessions):

Some applications require keeping a user’s session on the same server.

In online banking, sticky sessions ensure a user stays connected to the same server throughout their session. This keeps their login and transaction data consistent, so they don’t have to re-authenticate or lose session information while navigating their account.

Types of Load Balancers:

Layer 4 (Transport Layer) Load Balancers: Operate at the TCP/UDP layer, directing traffic based on source/destination IP addresses and ports. They are efficient and fast but lack visibility into application-level data.

Layer 7 (Application Layer) Load Balancers: Operate at the HTTP/HTTPS layer, directing traffic based on request details like URLs, headers, or cookies. These load balancers support more complex routing rules, making them ideal for web applications requiring advanced load distribution, session stickiness, and application-level monitoring.

Layer 7 load balancers can route traffic in a much more sophisticated way than Layer 4 load balancers, but inspecting each request takes more CPU, which can reduce performance and increase cost.

Load Balancing Algorithms:

Here’s a brief explanation of load balancing algorithms with simple examples, including sticky sessions:

1. Round Robin:

How it works: Sends requests to each server in turn, like taking turns in a line.

Example: If three servers (A, B, C) are available, requests are routed to A, then B, then C, and the cycle repeats. Great for evenly performing servers.
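The rotation described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product implements it; the class name `RoundRobinBalancer` and the server labels are assumptions made for the example.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order (hypothetical helper)."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        # cycle() repeats the sequence forever: A, B, C, A, B, C, ...
        self._rotation = cycle(servers)

    def next_server(self):
        # Each call advances the rotation by one position.
        return next(self._rotation)


balancer = RoundRobinBalancer(["A", "B", "C"])
picks = [balancer.next_server() for _ in range(6)]
print(picks)  # ['A', 'B', 'C', 'A', 'B', 'C']
```

The balancer keeps no information about how busy each server is, which is why this approach suits servers of similar capacity.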

2. Least Connections:

How it works: Routes traffic to the server with the fewest active connections.

Example: In a web application with two busy servers (A and B), if Server A has 10 active users and Server B has 5, the next request goes to Server B. Useful for varying workload sizes.
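As a rough sketch of the idea, the balancer only needs a counter per server. The class and method names below (`LeastConnectionsBalancer`, `acquire`, `release`) are illustrative assumptions, and the starting counts mirror the example above.

```python
class LeastConnectionsBalancer:
    """Tracks active connections per server (hypothetical helper)."""

    def __init__(self, servers):
        self.active = {name: 0 for name in servers}

    def acquire(self):
        # Pick the server with the fewest active connections.
        # On a tie, min() returns the first one in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lc = LeastConnectionsBalancer(["A", "B"])
lc.active.update({"A": 10, "B": 5})  # Server A has 10 users, Server B has 5

chosen = lc.acquire()
print(chosen, lc.active)  # B {'A': 10, 'B': 6}
```

Unlike Round Robin, this approach depends on the balancer learning when connections end, so `release` has to be called reliably.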

3. Weighted Round Robin:

How it works: Assigns weights to servers based on their capacity. Servers with more capacity handle more requests.

Example: If Server A is twice as powerful as Server B, Server A may receive 2 requests for every 1 request that Server B receives.
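One simple way to approximate this, shown here only as a sketch, is to repeat each server in the rotation as many times as its weight. The function name `weighted_rotation` and the weights are assumptions for illustration; real load balancers often interleave the picks more evenly than this naive version does.

```python
from itertools import cycle


def weighted_rotation(weights):
    """Build an endless rotation where each server appears `weight` times per round."""
    expanded = []
    for server, weight in weights.items():
        if weight < 1:
            raise ValueError(f"weight for {server} must be at least 1")
        expanded.extend([server] * weight)
    if not expanded:
        raise ValueError("at least one server is required")
    return cycle(expanded)


# Server A is assumed to be twice as powerful as Server B.
rotation = weighted_rotation({"A": 2, "B": 1})
wrr_picks = [next(rotation) for _ in range(6)]
print(wrr_picks)  # ['A', 'A', 'B', 'A', 'A', 'B']
```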

4. IP Hash:

How it works: Uses a hash of the client’s IP address to assign them to the same server each time.

Example: If Client X’s IP hash always points to Server A, Client X’s requests consistently go to Server A. Helps maintain session consistency, especially useful in gaming and video streaming.
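A minimal sketch of the mapping, assuming a SHA-256 hash and a simple modulo over the server list (actual products may use other hash functions). The function name `pick_by_ip` and the IP address are made up for the example. Python's built-in `hash()` is avoided because its result for strings changes between runs.

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Map a client IP to one server; the same IP always gets the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into an integer, then wrap it
    # around the number of servers.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["A", "B", "C"]
first = pick_by_ip("203.0.113.7", servers)
second = pick_by_ip("203.0.113.7", servers)
print(first == second)  # True
```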

5. Sticky Sessions (Session Persistence):

How it works: Routes a user’s requests to the same server during their entire session.

Example: In online shopping, a user’s session remains on Server B after initially assigned there. This keeps items in their cart accessible throughout their visit.
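The sketch below shows one common way to get this behaviour: a cookie that names the assigned server. The cookie name `lb_server`, the class `StickyBalancer`, and the use of Round Robin for the first assignment are all assumptions for illustration, and cookies are modelled as plain dictionaries rather than real HTTP headers.

```python
from itertools import cycle

COOKIE_NAME = "lb_server"  # hypothetical cookie name


class StickyBalancer:
    """Pins a client to a server using a cookie (hypothetical helper)."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._rotation = cycle(self._servers)

    def route(self, cookies):
        """Return (server, cookies_to_set) for a request carrying `cookies`."""
        pinned = cookies.get(COOKIE_NAME)
        if pinned in self._servers:
            # Returning visitor: keep them on the same server.
            return pinned, {}
        # New visitor (or the pinned server no longer exists): assign one
        # and ask the client to remember it.
        server = next(self._rotation)
        return server, {COOKIE_NAME: server}


sticky = StickyBalancer(["A", "B", "C"])
server, set_cookie = sticky.route({})          # first visit, no cookie yet
again, nothing_new = sticky.route(set_cookie)  # later request carries the cookie
print(server, again, nothing_new)  # A A {}
```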

The main difference between IP Hash and Sticky Session is how they route traffic:

IP Hash:

- Uses the client’s IP address to determine which server to route traffic to. This method provides a consistent mapping, so the same client will always be directed to the same server as long as the number of servers remains the same.

Sticky Session:

- Also known as Session Persistence, this method directs all requests from a user session to the same server. This is typically done by setting a cookie on the client, which the client then sends with every request so the load balancer can identify the assigned server.
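To see why the "as long as the number of servers remains the same" condition matters for IP Hash, the sketch below counts how many clients land on a different server after a fourth server is added. It assumes a SHA-256 hash with a plain modulo, and the function name `pick_by_ip` and the client addresses are invented for the example.

```python
import hashlib


def pick_by_ip(client_ip, servers):
    """Map a client IP to a server using hash modulo server count."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


clients = [f"198.51.100.{n}" for n in range(1, 201)]

before = {ip: pick_by_ip(ip, ["A", "B", "C"]) for ip in clients}
after = {ip: pick_by_ip(ip, ["A", "B", "C", "D"]) for ip in clients}

# Count the clients whose server changed once Server D joined the pool.
moved = sum(1 for ip in clients if before[ip] != after[ip])
print(f"{moved} of {len(clients)} clients changed server")
```

With a cookie-based sticky session, by contrast, a client pinned to Server B can stay on Server B after Server D is added, because the assignment is stored with the client rather than recomputed from the server list.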

For more in-depth information on Load Balancer Algorithms, check the URL below:

https://waytoeasylearn.com/learn/load-balancing-algorithms/

Types of Load Balancers by Deployment

1. Hardware Load Balancers:

- Specialized physical devices installed on-premises, offering robust performance, security features, and high-throughput capabilities. They are more expensive and require hardware setup.

2. Software Load Balancers:

- Load balancing software installed on virtual or physical servers, offering flexibility and lower cost. Examples include HAProxy, NGINX, and Apache HTTP Server. These can be installed in on-premises environments or cloud infrastructures.

3. Cloud-Based Load Balancers:

- Managed load balancing services offered by cloud providers, such as AWS Elastic Load Balancer (ELB), Azure Load Balancer, and Google Cloud Load Balancing. These are scalable, easily configurable, and integrate well with cloud-native applications.

Key Features of Load Balancers:

Health Checks: Monitors server health and removes unresponsive servers until they recover, using ping, TCP, or HTTP checks.

SSL Termination: Offloads SSL/TLS encryption to reduce backend server load, handling HTTPS and forwarding HTTP internally.

Traffic & Path-Based Routing: Routes traffic based on URL paths, headers, or HTTP methods, ideal for Layer 7 load balancers and customized service routing.

Global Server Load Balancing (GSLB): Distributes traffic across global locations to reduce latency, considering user location and server health.

DDoS Protection & Security: Guards against DDoS attacks with IP filtering, traffic rate limiting, and integration with firewalls, enhancing security.
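Of the features above, health checks are the easiest to illustrate. The sketch below uses a plain TCP connection attempt as the probe and removes a backend after a number of consecutive failures. The names `tcp_check` and `HealthTracker`, the threshold, and the addresses are assumptions for the example; the demo swaps in a fake probe so it runs without any real servers.

```python
import socket


def tcp_check(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within `timeout`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


class HealthTracker:
    """Counts consecutive failed checks per backend (hypothetical helper)."""

    def __init__(self, backends, probe=tcp_check, fail_threshold=3):
        self.backends = list(backends)
        self.probe = probe
        self.fail_threshold = fail_threshold
        self.failures = {backend: 0 for backend in self.backends}

    def run_checks(self):
        for host, port in self.backends:
            if self.probe(host, port):
                self.failures[(host, port)] = 0  # recovered: reset the counter
            else:
                self.failures[(host, port)] += 1

    def healthy(self):
        # A backend stays in rotation until it fails `fail_threshold` checks in a row.
        return [b for b in self.backends if self.failures[b] < self.fail_threshold]


# Demo with a fake probe instead of real network calls.
down = {("10.0.0.2", 8080)}
tracker = HealthTracker(
    [("10.0.0.1", 8080), ("10.0.0.2", 8080)],
    probe=lambda host, port: (host, port) not in down,
    fail_threshold=2,
)
tracker.run_checks()
tracker.run_checks()
print(tracker.healthy())  # [('10.0.0.1', 8080)]

down.clear()  # the second server recovers
tracker.run_checks()
print(tracker.healthy())  # [('10.0.0.1', 8080), ('10.0.0.2', 8080)]
```

Requiring several consecutive failures before removing a server is a design choice that avoids dropping a backend because of a single slow response.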

Common Load Balancing Scenarios

1. Web Application Load Balancing:

- Distributes HTTP/HTTPS traffic across web servers, ensuring high availability for websites and web applications.

2. Database Load Balancing:

- Manages traffic to multiple database instances, balancing read requests across replicas while maintaining consistency for write requests.

3. Microservices Load Balancing:

- Routes traffic to different microservices within an application, improving performance and resource usage by allowing each service to scale independently.

4. API Gateway Load Balancing:

- Routes requests to different backend services based on the API endpoint. This approach supports multi-tenant architectures and complex application setups.

Example of Load Balancer in Action:

In a microservices environment, a load balancer sits between clients and multiple backend services (e.g., authentication, data processing, user profile). Each service may have several instances. When a request arrives, the load balancer inspects the request path and routes it to the appropriate service, balancing the load across multiple instances of each service. If an instance becomes unhealthy, the load balancer stops directing traffic to it, maintaining the availability and performance of the application.
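The scenario above can be sketched as a small router that picks a service by path prefix, rotates across that service's instances, and skips any instance marked unhealthy. The class name `ServiceRouter`, the paths, and the instance names are all assumptions for illustration.

```python
from itertools import cycle


class ServiceRouter:
    """Routes by path prefix, then balances across healthy instances (hypothetical)."""

    def __init__(self, routes):
        # routes maps a path prefix to the list of instances for that service.
        self.routes = {prefix: list(instances) for prefix, instances in routes.items()}
        self.unhealthy = set()
        self._rotations = {p: cycle(i) for p, i in self.routes.items()}

    def mark_unhealthy(self, instance):
        self.unhealthy.add(instance)

    def route(self, path):
        matches = [prefix for prefix in self.routes if path.startswith(prefix)]
        if not matches:
            raise LookupError(f"no service handles {path}")
        prefix = max(matches, key=len)  # the longest matching prefix wins
        rotation = self._rotations[prefix]
        # Try each instance at most once per request, skipping unhealthy ones.
        for _ in range(len(self.routes[prefix])):
            instance = next(rotation)
            if instance not in self.unhealthy:
                return instance
        raise RuntimeError(f"no healthy instance for {prefix}")


router = ServiceRouter({
    "/auth": ["auth-1", "auth-2"],
    "/profile": ["profile-1"],
})
print(router.route("/auth/login"))   # auth-1
print(router.route("/auth/logout"))  # auth-2

router.mark_unhealthy("auth-1")
print(router.route("/auth/login"))   # auth-2
```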

Popular Load Balancer Solutions:

1. AWS Elastic Load Balancer (ELB):

A service managed by AWS that includes Application Load Balancer (ALB) for Layer 7 traffic, Network Load Balancer (NLB) for Layer 4 traffic, and Gateway Load Balancer for firewall integration.

2. NGINX and NGINX Plus:

A popular software load balancer, available as an open-source project (NGINX) and as an enterprise product (NGINX Plus). It offers both Layer 4 and Layer 7 capabilities and is widely used for HTTP load balancing.

3. HAProxy:

A high-performance, open-source TCP/HTTP load balancer with advanced features like connection limiting, health checks, and SSL offloading.

4. F5 BIG-IP:

A hardware-based and virtual load balancing solution with extensive Layer 4–7 capabilities, DDoS protection, and advanced security features.
