Leveling up KafkaOps with Conduktor Console

Farbod Ahmadian

As organizations embrace streaming data, Apache Kafka has emerged as the backbone for low-latency, high-throughput event processing. This distributed streaming platform enables companies to build real-time data pipelines and streaming applications. However, as Kafka-based applications scale, teams often grapple with the complexities of debugging and feature development due to Kafka's multi-layered architecture. Traditional observability and debugging tools fall short in ensuring reliable performance at scale, leading to the rise of KafkaOps.

KafkaOps - a growing approach to operationalizing Kafka management - encompasses monitoring, triaging, and troubleshooting Kafka workflows. It's become crucial for maintaining the health and performance of Kafka-based systems as they grow in complexity and scale.

In this blog post:

We will introduce Conduktor Console along with its primary benefits.

Next, we’ll show you how you can use Conduktor Console locally to develop a simple ksqlDB application.

Finally, we’ll reflect on how Conduktor Console can be deployed as a shared service within an organization that is looking for a way to tame larger Kafka deployments.

Why Conduktor Console?

Conduktor Console stands out by simplifying complex tasks like schema management, message replay, and access control within an intuitive UI. Unlike alternatives such as Confluent's Control Center or Provectus' KafkaUI, Conduktor Console offers unique capabilities that make it particularly valuable for both development and platform teams.

Let's explore three key features that have driven successful adoption within our team and partner organizations:

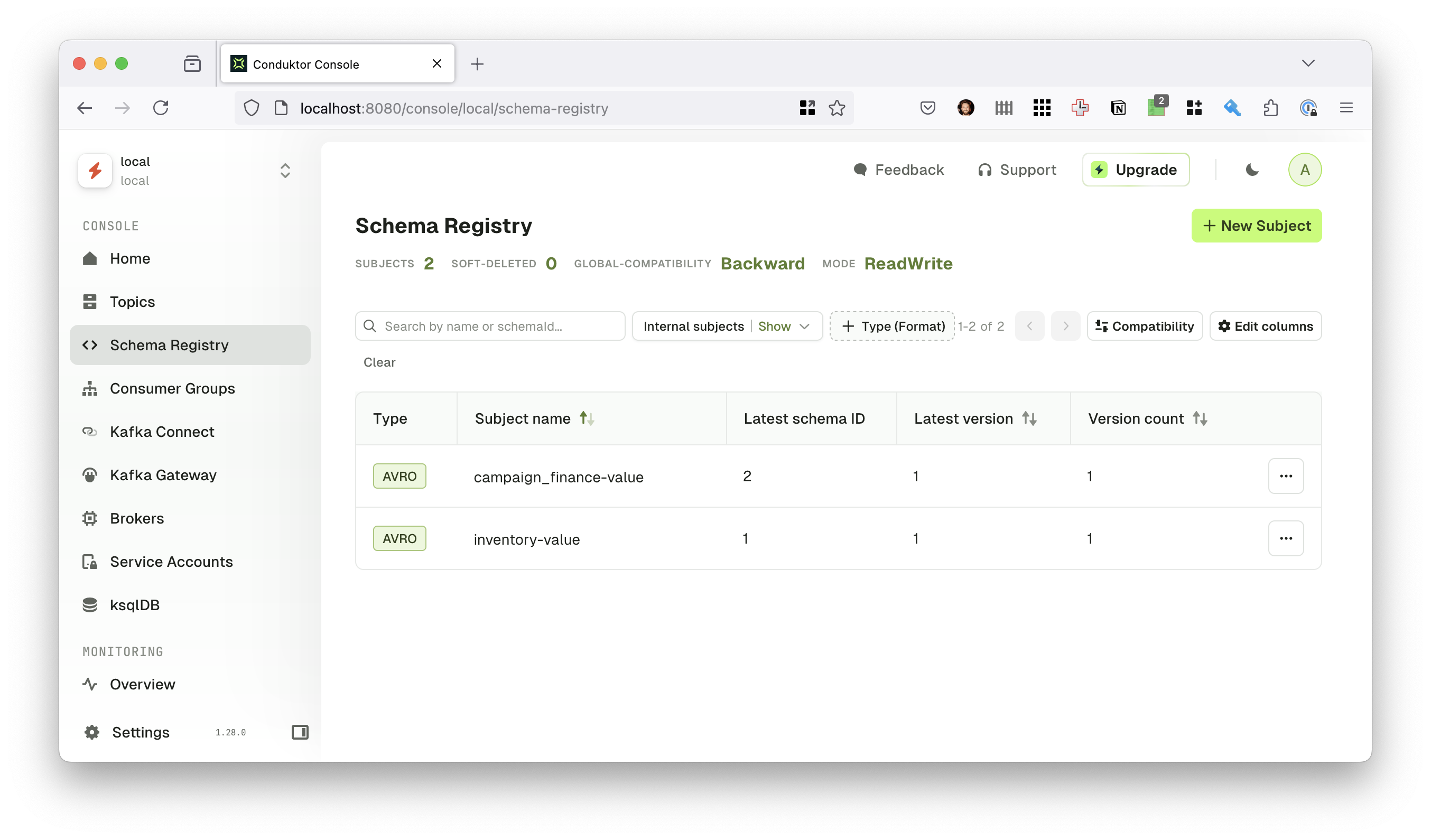

1. Seamless Schema Management

Schema management is crucial for maintaining data consistency, but it can become complex as systems evolve. Conduktor Console excels here by providing:

Integration with both AWS Glue and Confluent schema registries

Visual interface for managing schema compatibility

Simplified subject management across multiple registry types

One notable limitation: While Conduktor supports Azure Event Hubs through its Kafka protocol compatibility, it doesn't integrate with Azure's Schema Registry. This means teams using Azure Event Hubs won't be able to leverage schema-related features within Conduktor Console.
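For context, the compatibility checks that Console exposes in its UI correspond to operations on the registry itself. The following is a minimal sketch, assuming a Confluent-compatible Schema Registry at a hypothetical `http://localhost:8081` and a hypothetical subject named `orders-value`, of how a compatibility check could be issued against the registry's REST API. It illustrates the registry call, not Conduktor's own API, and it does not apply to AWS Glue.

```python
import json
import urllib.request

# Assumption: a local Confluent-compatible Schema Registry; adjust for your setup.
REGISTRY_URL = "http://localhost:8081"
CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"


def compatibility_request(
    subject: str, schema: dict, registry_url: str = REGISTRY_URL
) -> urllib.request.Request:
    """Build a request that tests `schema` against the latest version of `subject`."""
    # The registry expects the schema itself as a JSON-encoded string.
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        url=f"{registry_url}/compatibility/subjects/{subject}/versions/latest",
        data=body,
        headers={"Content-Type": CONTENT_TYPE},
        method="POST",
    )


def is_compatible(request: urllib.request.Request) -> bool:
    """Send the request and read the registry's verdict (needs a running registry)."""
    with urllib.request.urlopen(request) as response:
        return bool(json.load(response)["is_compatible"])
```

Building the request separately from sending it keeps the sketch easy to inspect without a running registry.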

2. Powerful Message Replay Capabilities

Message replay is perhaps Conduktor's most immediately valuable feature for developers. It enables:

Testing new code against production-like data

Reproducing specific scenarios for debugging

Simulating message flows without affecting production systems

This capability is particularly powerful for development teams working on new features or investigating production issues, as it allows them to work with realistic data in a controlled environment.

3. Enterprise-Grade Access Control

For platform teams, Conduktor's granular access control (available in the Enterprise Tier) provides:

Streamlined permissions management

Self-service access for developers

Enhanced security through role-based controls

Single Sign-On (SSO) integration for seamless authentication

While credential management remains a challenge for many IT departments, Conduktor's approach enables platform teams to focus on enabling self-service while maintaining security controls.

Implementing Conduktor Console in Your Workflow

Conduktor publishes a number of Docker images to Docker Hub that can be deployed to local container environments. In the following example, we’re going to build on a recent version of the Confluent Community docker-compose.yml file by adding Conduktor Console and its dependencies.

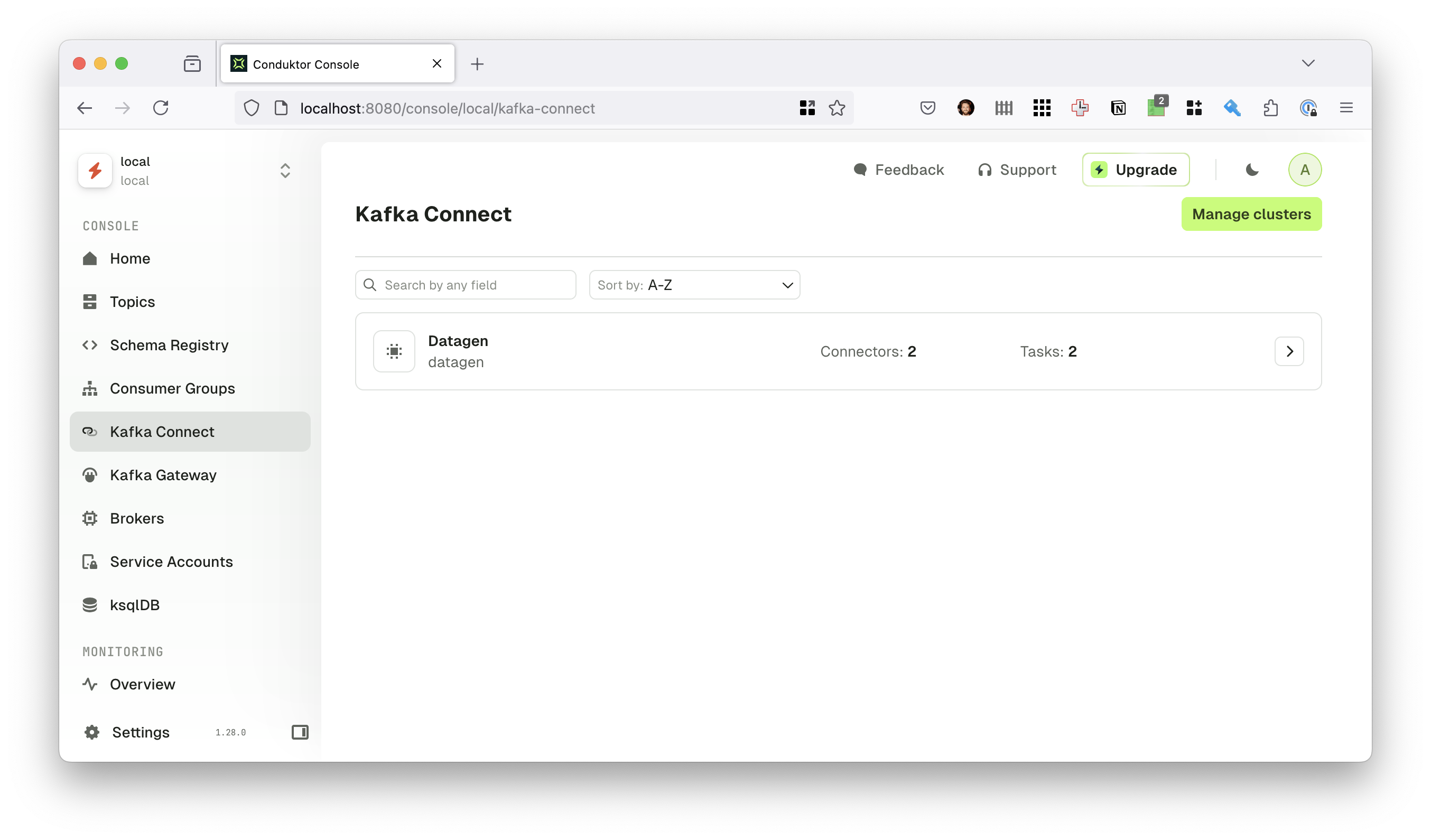

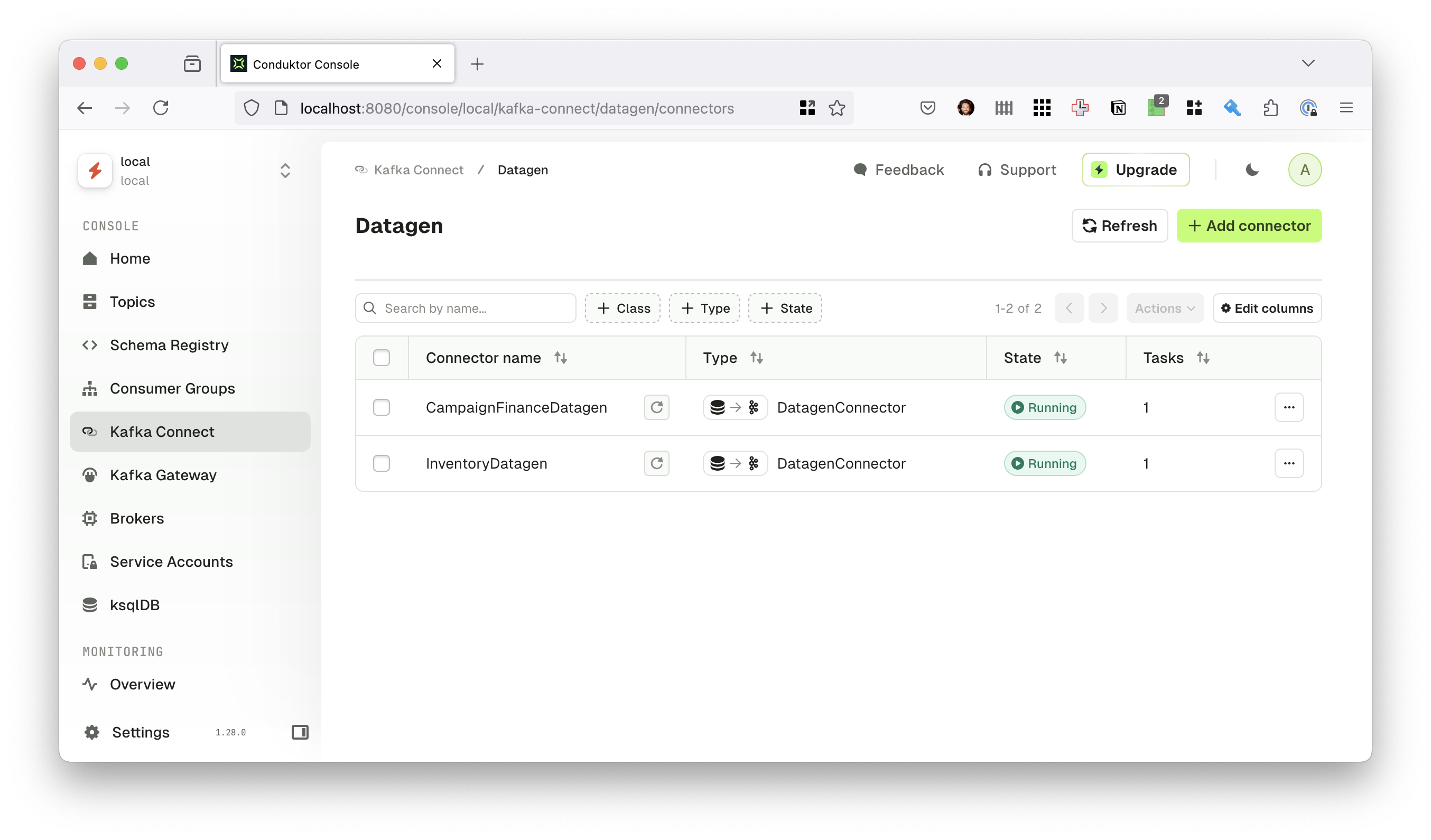

In addition to the Apache Kafka broker, Schema Registry, and ksqlDB server, we’re also including a Kafka Connect container that will be used to generate example data. For this example, we’ll use the Confluent Datagen Source Connector to generate random data to experiment with. For a list of predefined Datagen configurations, see the examples in the kafka-connect-datagen GitHub repository.
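As a rough illustration of what a Datagen connector definition looks like, here is a minimal sketch that builds one and prepares it for the Kafka Connect REST API. The connector name, topic, `http://localhost:8083` Connect address, and `http://schema-registry:8081` registry address are assumptions for a local setup and may differ from the compose file used here; `orders` is one of the predefined quickstart datasets.

```python
import json
import urllib.request

# Assumption: Kafka Connect's REST API is published on the default port locally.
CONNECT_URL = "http://localhost:8083"


def datagen_connector(name: str, topic: str, quickstart: str, max_interval_ms: int = 1000) -> dict:
    """Return a connector definition for the Confluent Datagen Source Connector."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.kafka.connect.datagen.DatagenConnector",
            "kafka.topic": topic,
            "quickstart": quickstart,  # predefined dataset, e.g. "orders"
            "max.interval": str(max_interval_ms),  # upper bound on delay between messages
            "tasks.max": "1",
            "key.converter": "org.apache.kafka.connect.storage.StringConverter",
            "value.converter": "io.confluent.connect.avro.AvroConverter",
            # Assumption: the registry's hostname inside the compose network.
            "value.converter.schema.registry.url": "http://schema-registry:8081",
        },
    }


def registration_request(connector: dict, connect_url: str = CONNECT_URL) -> urllib.request.Request:
    """Build the POST /connectors request that creates the connector."""
    return urllib.request.Request(
        url=f"{connect_url}/connectors",
        data=json.dumps(connector).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

With the environment running, passing the result of `registration_request(datagen_connector("datagen-orders", "orders", "orders"))` to `urllib.request.urlopen` would create the connector; the same definition can also be entered through the Kafka Connect view in Console.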

Local Development Setup

Conduktor publishes Docker images on Docker Hub, making it easy to deploy in local container environments. Here's how to get started:

Pull the latest Conduktor Console image from Docker Hub

Create a docker-compose.yml file, building on the Confluent Community version

Add Conduktor Console and its dependencies to the composition

Launch your local Kafka environment with Conduktor Console

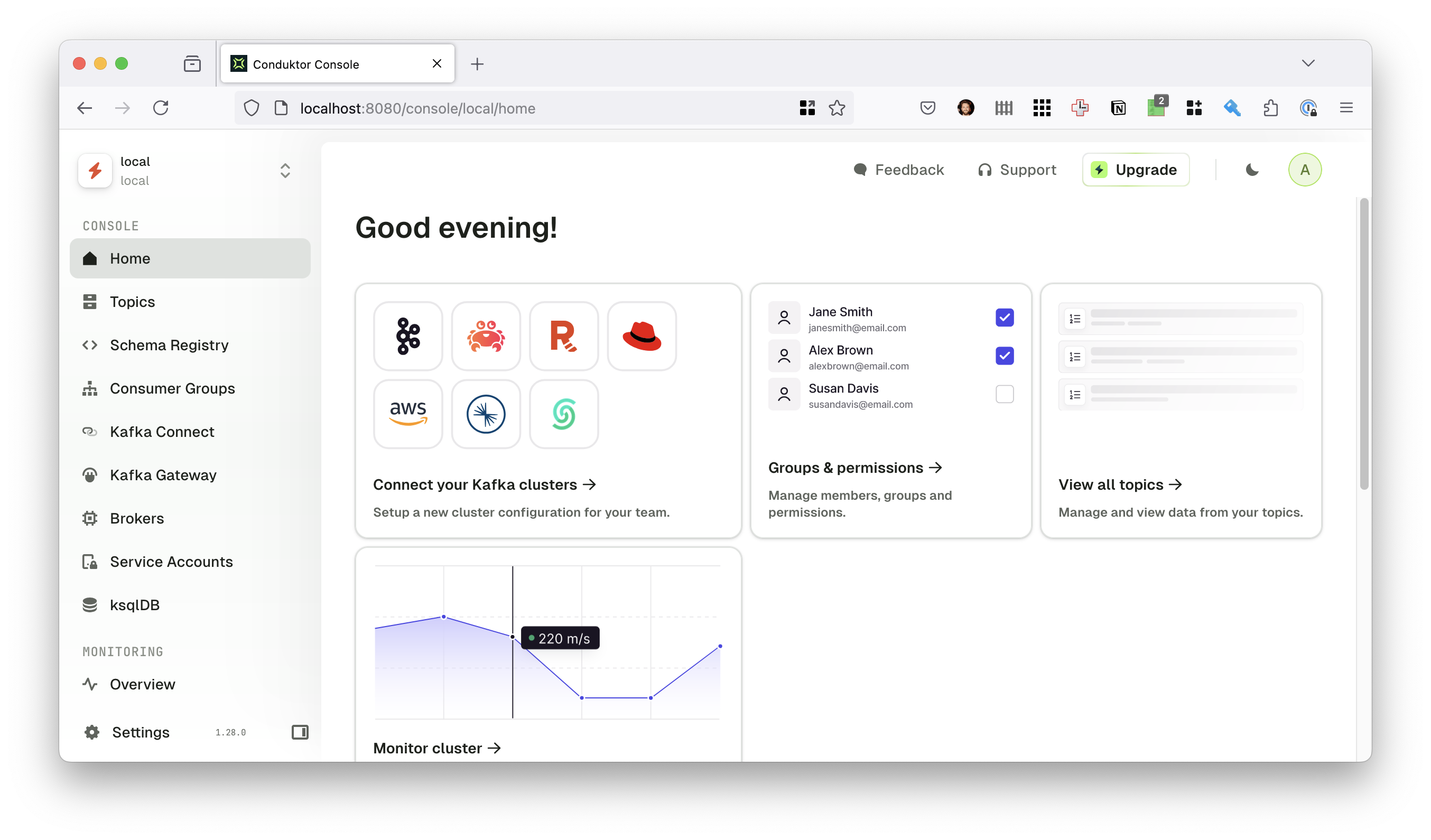

After spinning up the Docker Compose environment, you can navigate to Conduktor Console, which will be available at http://localhost:8080. The username and password for Conduktor Console are defined in the docker-compose.yml file above as the environment variables CDK_ADMIN_EMAIL and CDK_ADMIN_PASSWORD. Please update or override these values before deploying this Docker Compose environment anywhere other than a trusted local machine.
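One way to avoid leaving default credentials in place is to generate them into a `.env` file that Docker Compose reads for variable substitution. This is only a sketch: it assumes the compose file references `${CDK_ADMIN_EMAIL}` and `${CDK_ADMIN_PASSWORD}` instead of hardcoding the values, and the email address in the usage note is a placeholder.

```python
import secrets


def admin_env_lines(email: str, password_bytes: int = 24) -> list[str]:
    """Return .env lines with the given admin email and a random password."""
    # token_urlsafe yields only URL-safe characters, so no quoting is needed.
    password = secrets.token_urlsafe(password_bytes)
    return [
        f"CDK_ADMIN_EMAIL={email}",
        f"CDK_ADMIN_PASSWORD={password}",
    ]
```

Writing `"\n".join(admin_env_lines("admin@example.com"))` to a `.env` file next to docker-compose.yml, and keeping that file out of version control, gives each environment its own password.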

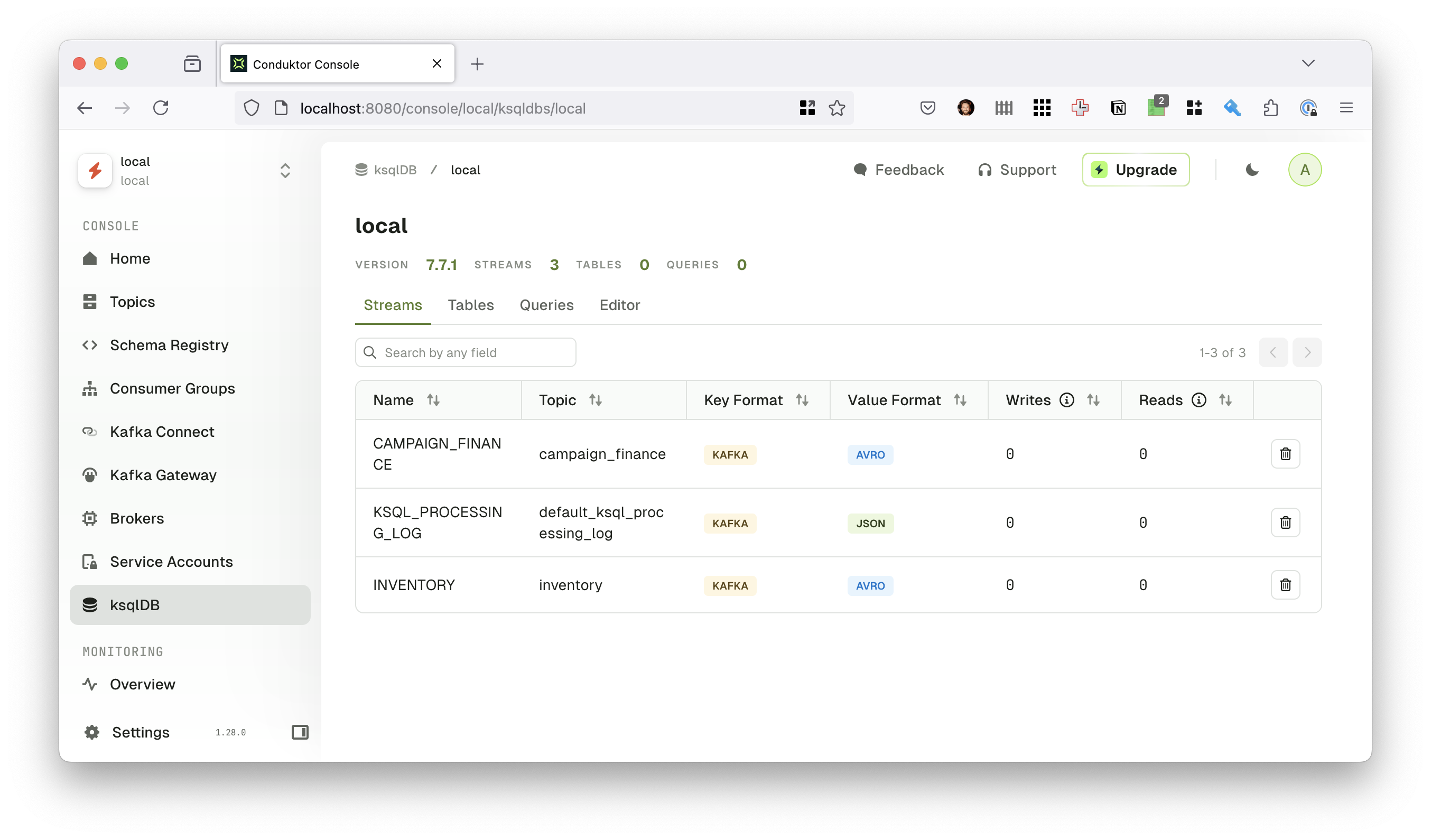

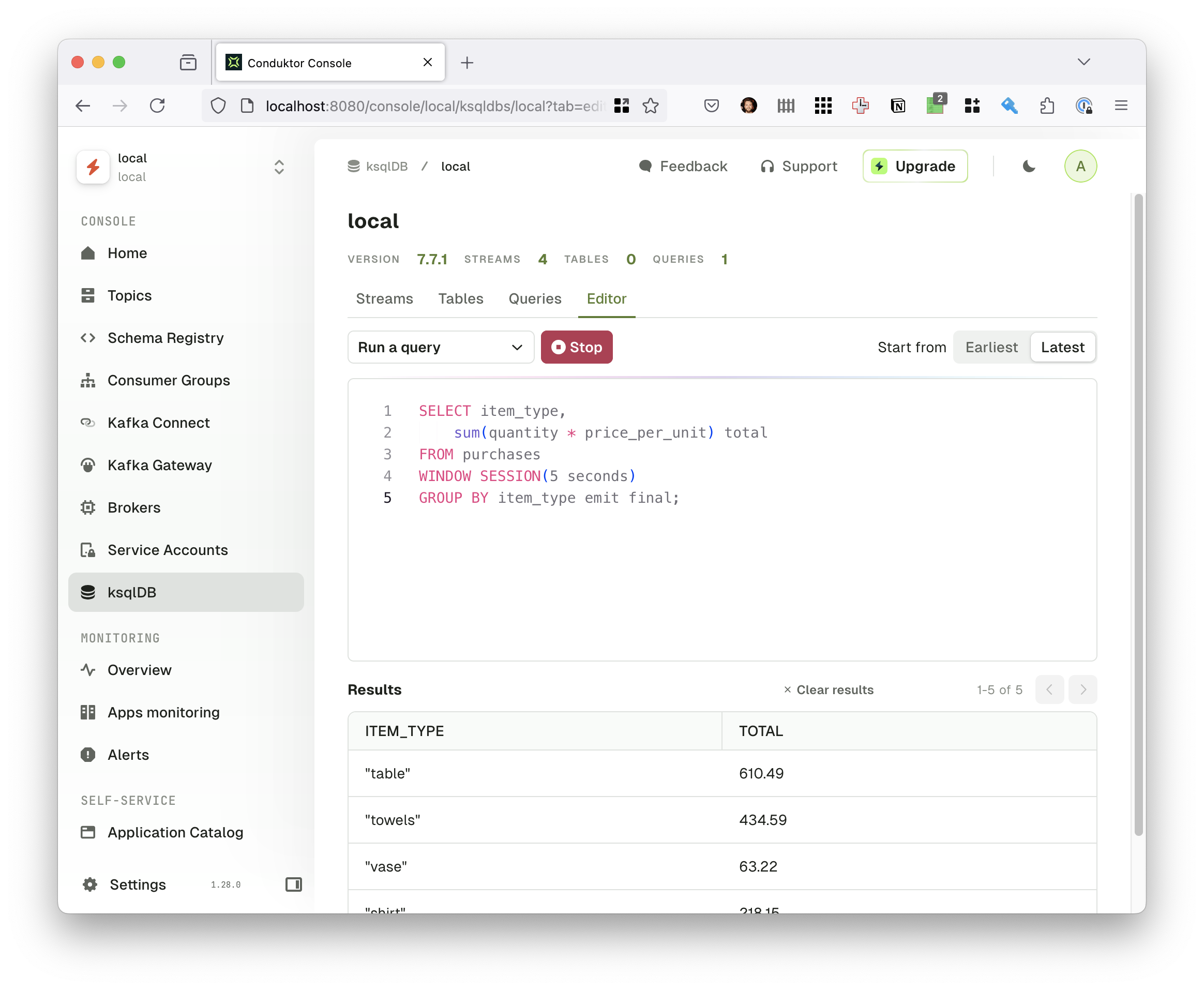

After configuring the Kafka cluster, the associated Schema Registry, the Kafka Connect workers, and the ksqlDB server, we can start executing ksqlDB statements in Conduktor Console and run stream processing jobs. The following shows a push query that emits the total revenue generated for various item types within a session window. To learn more about ksqlDB windowing options, please see the documentation on Time and Windows.
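For readers who want a text version of such a query, the sketch below renders a session-windowed revenue aggregation as a ksqlDB statement. The stream name `purchases`, the columns `item_type` and `price`, and the 60-second inactivity gap are hypothetical and are not taken from the example environment; substitute the names of your own stream.

```python
def session_revenue_query(stream: str, item_column: str, price_column: str, gap_seconds: int) -> str:
    """Render a push query that sums revenue per item type within session windows."""
    return (
        f"SELECT {item_column}, SUM({price_column}) AS total_revenue\n"
        f"FROM {stream}\n"
        f"WINDOW SESSION ({gap_seconds} SECONDS)\n"
        f"GROUP BY {item_column}\n"
        "EMIT CHANGES;"
    )


def query_stream_payload(sql: str) -> dict:
    """Wrap the statement in the JSON body shape used by ksqlDB's /query-stream endpoint."""
    return {"sql": sql, "properties": {}}


# Hypothetical names, for illustration only.
statement = session_revenue_query("purchases", "item_type", "price", 60)
```

The rendered statement can be pasted into the ksqlDB editor in Console. A session window closes after the stated period of inactivity for a key, so each item type accumulates revenue for as long as purchases keep arriving within the gap.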

Enterprise Deployment

For larger organizations, deploying Conduktor Console as a shared service can significantly enhance Kafka operations:

Centralized Management: Streamline workflows and reduce latency by having a single point of control

Consistent Monitoring: Ensure uniform monitoring and management across all Kafka clusters

Enhanced Collaboration: Enable teams to work together more effectively on Kafka-based projects

For AWS users, Conduktor Console's integration with services like MSK and Glue Schema Registry further enhances its functionality. Leveraging AWS IAM roles and policies facilitates secure, credential-less authentication, simplifying user management and reducing security risks.

Deploying in a Shared Environment

Centralizing Kafka management tools within your infrastructure can greatly enhance operational efficiency. By streamlining workflows and reducing latency, teams can collaborate more effectively and resolve issues faster. This centralized approach ensures consistent monitoring and management of Kafka clusters, leading to improved performance and reliability.

Additional requirements such as networking and security may restrict which resources Conduktor Console can access when it runs on a developer's machine. For this reason, organizations may want to host Conduktor Console on their own infrastructure. For our team’s internal use, we took extensive inspiration from the documentation on Deployment on AWS, which is based on ECS and RDS. This can be expanded upon to include SSL certificates via ACM and to route requests via a custom domain registered in Route 53.

For organizations using AWS Managed Streaming for Apache Kafka (MSK), Conduktor Console natively supports using IAM roles to authenticate with MSK and the Glue Schema Registry. AWS IAM roles and policies facilitate secure, credential-less authentication, simplifying user management and reducing the risk of credential exposure via long-lived API keys.
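To make the credential-less setup concrete, the sketch below renders the Kafka client properties that IAM authentication with MSK typically relies on. The class names come from the open-source aws-msk-iam-auth library; whether these need to be entered by hand or are set for you depends on the Console version and how the cluster is configured, so treat this as an illustration and not as Conduktor's documented procedure.

```python
# Standard client settings for IAM authentication against MSK.
# No username, password, or API key appears here: credentials are resolved
# from the IAM role attached to the environment running the client.
MSK_IAM_PROPERTIES = {
    "security.protocol": "SASL_SSL",
    "sasl.mechanism": "AWS_MSK_IAM",
    "sasl.jaas.config": "software.amazon.msk.auth.iam.IAMLoginModule required;",
    "sasl.client.callback.handler.class": (
        "software.amazon.msk.auth.iam.IAMClientCallbackHandler"
    ),
}


def render_properties(properties: dict) -> str:
    """Render key/value pairs in Java .properties format, one per line."""
    return "\n".join(f"{key}={value}" for key, value in properties.items())
```

Calling `render_properties(MSK_IAM_PROPERTIES)` produces four lines that can be reviewed alongside the IAM policy granting the role access to the cluster.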

Conclusion

Conduktor Console represents a significant leap forward in Kafka management, addressing the real-world challenges of operating Kafka at scale. By simplifying complex operations, enabling self-service capabilities, and providing robust security features, it allows teams to focus on building and maintaining robust streaming applications rather than wrestling with operational complexities.

Whether you're a platform team managing multiple clusters or a development team building Kafka-based applications, Conduktor Console provides the features and workflows needed to work effectively with Apache Kafka in a modern enterprise environment.

We encourage you to explore Conduktor Console for your Kafka operations. Start with a local setup to experience its benefits firsthand, and consider how it might enhance your team's productivity and your organization's Kafka management at scale.
