How to set up Langchain/Langchain Models Explained

Anix Lynch

Anix LynchTable of contents

What Is LangChain?

- Learn about LangChain as a framework that helps create complex applications using LLMs by combining different functionalities (e.g., agents, chains, memory) in a structured way.

Benefits of LangChain

Connect LLMs to external data sources, making applications more context-aware and capable of working with proprietary or dynamic data.

Create agents that can handle complex, multi-step tasks by deciding what actions to take based on prompts.

Chain together multiple LLMs or tools for sequential processing, enhancing flexibility and enabling complex workflows.

Prompt Templates

Understand and implement prompt templates, which allow dynamic prompts by substituting variables without changing the prompt structure. This helps with efficiency and adaptability, especially for complex projects.

Learn about Python F-strings for simple dynamic prompts, then explore LangChain’s prompt templates, which offer more functionality for building robust applications.

Basic Setup

- Initial setup instructions for LangChain, including connecting to OpenAI and setting up API keys.

Framework and LLM Overview

Introduction to frameworks as tools for efficiently managing repetitive tasks and leveraging reusable software environments.

Insight into LLMs, their capabilities, and the limitations they address in LangChain, like handling domain-specific or real-time data.

Advanced Capabilities and Use Cases

Explore LangChain’s advanced components:

Agents – Automate decisions within the model.

Chains – Sequential task handling.

Indexes, Memory, Prompts, Model Schema – Specific functionalities to enhance the model’s capabilities.

Practical applications include creating assistants, question-answering systems, chatbots, and more.

To set up LangChain

To run a sample demo project in Jupyter Notebook, follow these steps:

Step 1: Install Anaconda

Download and Install Anaconda

Go to Anaconda's official website and click on "Download".

Select the installer based on your OS (Windows, Mac, Linux) and install it.

Run the Installer

- Double-click on the installer, follow the instructions, and keep default settings. The setup might take a few minutes to complete.

Launch Anaconda Navigator

- Once installed, open Anaconda Navigator from your Start Menu (Windows) or Applications (Mac).

Open Jupyter Notebook

- In Anaconda Navigator, find and launch Jupyter Notebook to start creating and running notebooks.

Step 2: Set Up LangChain Project in Jupyter Notebook

Create a New Notebook

- Inside Jupyter Notebook, create a new notebook by selecting Python 3.

Install Required Libraries

- Use the following code to install the essential libraries:

!pip install langchain openai faiss-cpu

Initialize LangChain and OpenAI

- Import LangChain and OpenAI and set up your OpenAI API key. Replace

"your_openai_api_key"with your actual key.

- Import LangChain and OpenAI and set up your OpenAI API key. Replace

import os

from langchain.llms import OpenAI

os.environ["OPENAI_API_KEY"] = "your_openai_api_key"

Step 3: Run a Sample LangChain Demo

Define a Simple Query with LangChain

from langchain.chains import SimpleQAChain from langchain.indexes import SimpleIndex # Create a simple index for the document (e.g., a PDF or text file) document = "Example document text: 'Wu is a fictional character from Egypt who won five awards for humor.'" index = SimpleIndex(document) # Initialize the QA chain qa_chain = SimpleQAChain.from_index(index=index, llm=OpenAI()) # Query example: Asking the model about 'Wu' response = qa_chain.run("How many awards did Wu win?") print(response)

After running the code, you should see the response based on the provided document. This is a basic setup and demonstrates how LangChain can use external data sources (like PDFs) to answer questions based on recent or domain-specific information.

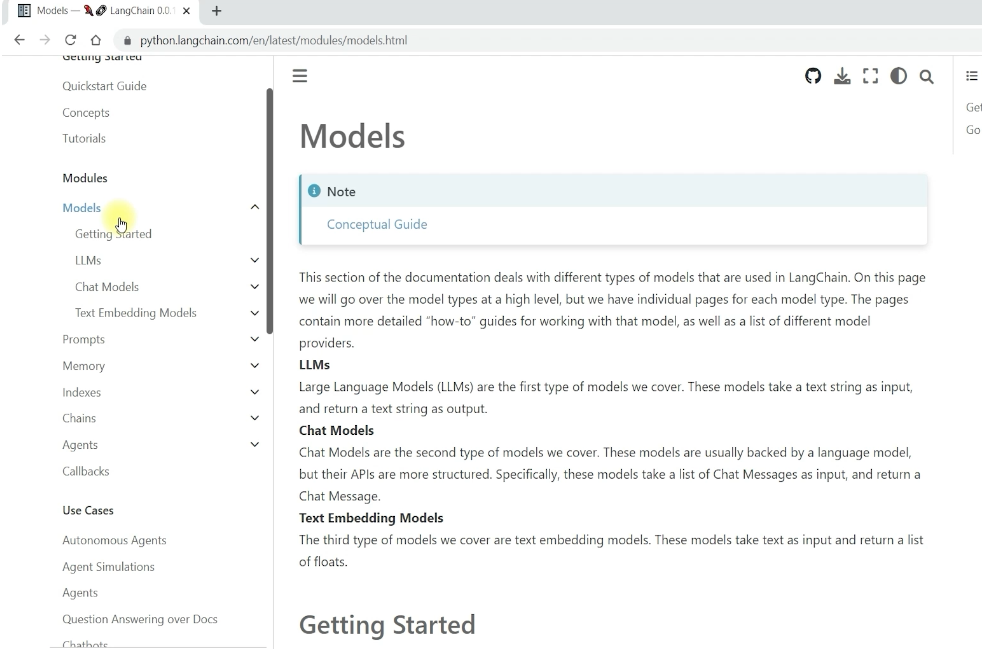

Langchain Models

LangChain provides a structured, team-based approach to building language-based applications. Each module acts like a team member in a well-organized business, each with a specific role to help the operation run efficiently. Here’s a combined view of the modules and their roles:

1. Models (The Specialist)

Models are the foundation in LangChain, representing the various LLMs and embedding models that can be integrated.

Code: Initialize the LLM (e.g., OpenAI model) to serve as the main expert

from langchain.llms import OpenAI llm = OpenAI(model_name="text-davinci-003")Types of Models:

LLMs (Large Language Models): Used for general-purpose language tasks.

Chat Models: Designed for conversational AI.

Text Embedding Models: Convert text into numerical representations for efficient similarity search and retrieval tasks.

Role: The Specialist is the core expert in the business, holding deep knowledge in their field, similar to a lawyer, doctor, or architect.

Function: Like LangChain’s models (LLMs, chat models, embeddings), the Specialist is the main source of knowledge, relied upon for accurate and detailed answers.

2. Prompt (The Communicator)

Code: Design a prompt template that will structure our question to get the best answer.

from langchain.prompts import PromptTemplate prompt_template = PromptTemplate(template="Answer the question based on the document: {question}")Components:

Prompt Templates: Predefined text structures with placeholders for dynamic content.

Prompt Engineering: Techniques to fine-tune prompts for better, more relevant model responses.

Role: The Communicator is skilled at asking the right questions, structuring conversations, and ensuring clear communication with the Specialist.

Function: LangChain’s prompts guide models on what to focus on, allowing the Communicator to extract exactly what’s needed from the Specialist through well-crafted prompts and templates.

3. Memory (The Assistant)

Code: Use memory to keep track of previous questions asked so we can provide context if the user asks follow-up questions.

from langchain.memory import ConversationMemory memory = ConversationMemory()Types of Memory:

Short-Term Memory: Retains context within a single session.

Long-Term Memory: Saves information across sessions for future reference.

Role: The Assistant remembers key details from past interactions, ensuring consistency in ongoing tasks and helping the team avoid repetitive work.

Function: LangChain’s memory module tracks past interactions, much like the Assistant, allowing the business to build on previously gathered information instead of starting from scratch.

4. Indexes (The Librarian)

Indexes are used for organizing and retrieving large collections of documents or data, making it easier for models to locate relevant information.

Code: Embed the PDF content into a searchable format, allowing the model to retrieve the relevant information.

from langchain.indexes import SimpleIndex document = "Wu is a fictional character from Egypt who won five awards." index = SimpleIndex(text=document)Common Index Types:

Vector Indexes: Use embeddings to efficiently search through large text datasets.

Keyword/Concept Indexes: Categorize information based on specific terms or themes.

Role: The Librarian organizes the business’s knowledge base, making sure relevant information is easily accessible when needed.

Function: Indexes in LangChain serve as the Librarian, storing and organizing data so the models can quickly find relevant information—enabling efficient and targeted retrieval, especially for large datasets.

5. Chains (The Project Manager)

Chains connect multiple models or processing steps in a sequence, allowing for complex workflows where the output of one step feeds into the next.

Code: Create a chain to process the question by feeding it to the model along with the prompt and memory. The chain will handle combining these elements and retrieving the final answer.

from langchain.chains import SimpleQAChain

qa_chain = SimpleQAChain(llm=llm, prompt=prompt_template, memory=memory, index=index)

Types of Chains:

Sequential Chains: Link multiple models or functions in a linear sequence.

Parallel Chains: Execute tasks simultaneously, useful for handling multi-part processes.

Role: The Project Manager structures tasks step-by-step, knowing who to involve at each stage and ensuring everything flows toward a successful completion.

Function: Chains in LangChain work similarly, connecting models and processes in a logical order. They allow different components to work sequentially or in parallel, guiding tasks smoothly from start to finish.

6. Agents (The Strategist)

Agents are sophisticated automation units that make decisions about which actions to take based on prompts or inputs.

Code: If this project requires multiple types of models or specific actions (e.g., summarizing and then answering), an agent decides which model to use and when to switch between tasks.

from langchain.agents import Agent agent = Agent(qa_chain)Agent Capabilities:

Task Management: Handle multi-step tasks with conditional logic.

Data Fusion: Combine information from various sources to produce comprehensive answers.

Role: The Strategist decides on the best actions, selecting which team members should handle each task and managing complex, multi-step scenarios.

Function: Agents in LangChain perform similar strategic functions by making decisions based on inputs. They analyze the situation, coordinating different models or tools as needed to solve complex problems effectively.

7. Callbacks (The Observer)

Callbacks provide a way to track the internal states, processes, and outputs of LangChain tasks, useful for logging and debugging.

Code: Set up callbacks to log or print out the process at each step, helping us monitor the actions being taken in the workflow.

def callback(status):

print(f"Status: {status}")

agent.add_callback(callback)

Use Cases:

Logging: Record each step of a process for analysis.

Error Tracking: Detect and manage issues within chains and agents.

Role: The Observer tracks the team’s progress, noting successes, errors, and opportunities for improvement to optimize operations.

Function: Callbacks in LangChain work like the Observer, monitoring internal processes, logging steps, and managing issues within chains and agents—act as observers, tracking progress, errors, or key results at each step. This is similar to how humans might check in or self-correct while multitasking.

How They Work Together

Input: The question is passed to the

Agent, which decides which chain and index to use.Prompt: The

Promptmodule prepares the question in the right format.Index: The

Indexmodule searches the document for relevant text.Memory: The

Memorymodule keeps track of past questions, providing context if needed.Model: The

Modelprocesses the prompt and indexed information to answer the question.Callback: Logs are printed by

Callbackto track each step, making debugging and tracking easier.Output: The

Chaincompiles everything, andAgentgives the final answer.

Subscribe to my newsletter

Read articles from Anix Lynch directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by