Langchain Project 3: Basic copy.ai clone

Anix Lynch

Table of contents

- Chunk 1: Imports and Environment Setup

- Chunk 2: Define the Main Function getLLMResponse

- Chunk 3: Define Examples by Age Group

- Chunk 4: Set Up Prompt Templates for the Example Selector

- Chunk 5: Define the Prefix and Suffix for the Prompt

- Chunk 6: Configure the Example Selector

- Chunk 7: Create a Few-Shot Prompt Template

- Chunk 8: Generate and Print the LLM Response

- Chunk 9: Streamlit UI Setup

- Chunk 10: Streamlit Input Widgets

- Chunk 11: Generate Button and Display Response

- Full Python Code

- Summary

This project is about creating a marketing tool that uses a language model to generate different types of content based on user input. The user provides a topic or question, selects the type of content they want (like a tweet, sales copy, or product description), and specifies the intended audience (e.g., kid, adult, senior). Based on this input, the tool generates customized content with a specific tone, style, and length.

Here’s the process in brief:

User Input: The user inputs a question or topic and chooses content type and target audience.

Prompt Engineering: Based on the selected audience and content type, the tool uses example selectors and prompt templates to generate prompts that tailor the language model’s response.

Content Generation: The model outputs content formatted according to the input criteria (e.g., a tweet for kids about laptops).

Streamlit Interface: The project includes a user interface built with Streamlit, allowing users to interactively input their preferences and view the generated content.

The output is dynamically generated marketing copy, intended to help content creators, marketing professionals, and social media managers draft engaging content.

Alright, let's break down the code in chunks with a detailed explanation for each, followed by the complete Python code at the end.

Chunk 1: Imports and Environment Setup

import streamlit as st
from langchain_openai import OpenAI
from langchain.prompts import PromptTemplate, FewShotPromptTemplate
from langchain.prompts.example_selector import LengthBasedExampleSelector
from dotenv import load_dotenv

load_dotenv()

Purpose: This section imports the necessary modules.

- streamlit: Used to create the web app interface.

- OpenAI: Connects to OpenAI's LLM.

- PromptTemplate, FewShotPromptTemplate, LengthBasedExampleSelector: LangChain tools to build prompts and select appropriate examples.

- load_dotenv(): Loads environment variables from a .env file, typically so the OpenAI API key stays out of the source code.
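Since `load_dotenv()` does not raise an error when the `.env` file is missing, it can help to fail early if the key never reached the environment. A minimal sketch, assuming the key is stored under `OPENAI_API_KEY`; the helper name `require_api_key` is hypothetical and not part of the original project:

```python
import os


def require_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key from `env`, or raise a clear error if it is missing."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; add it to your .env file.")
    return key
```

Calling `require_api_key()` right after `load_dotenv()` would surface a missing key at startup rather than on the first model call.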

Chunk 2: Define the Main Function getLLMResponse

def getLLMResponse(query, age_option, tasktype_option):
    # The 'text-davinci-003' model is deprecated, so we use OpenAI's recommended replacement
    llm = OpenAI(temperature=.9, model="gpt-3.5-turbo-instruct")

Purpose:

Defines the main function getLLMResponse, which generates a response based on the user input.

- query: User's input text (e.g., “What is a laptop?”).

- age_option: Selected age group (Kid, Adult, or Senior Citizen).

- tasktype_option: The type of content to generate (e.g., tweet, sales copy).

- Model Setup: Initializes the model (gpt-3.5-turbo-instruct) with a high temperature (0.9) for more creative output.

Chunk 3: Define Examples by Age Group

if age_option == "Kid":
    examples = [
        {"query": "What is a mobile?", "answer": "...fits in your pocket..."},
        {"query": "What are your dreams?", "answer": "...colorful adventures..."},
        {"query": "What math means to you?", "answer": "...a puzzle game..."},
        # More examples...
    ]
elif age_option == "Adult":
    examples = [
        {"query": "What is a mobile?", "answer": "...portable communication device..."},
        {"query": "What are your dreams?", "answer": "...quest for endless learning..."},
        # More examples...
    ]
elif age_option == "Senior Citizen":
    examples = [
        {"query": "What is a mobile?", "answer": "...portable device...seen mobiles become smaller..."},
        {"query": "What are your dreams?", "answer": "My dreams for my grandsons..."},
        # More examples...
    ]

Purpose:

Creates different sets of examples based on the selected age_option.

Examples are structured as question-answer pairs, with responses tailored to each demographic.
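The `if`/`elif` chain leaves `examples` undefined if `age_option` ever holds an unexpected value. One alternative is a dictionary lookup that fails with a clear message. This is a sketch, not the original author's code: `EXAMPLES_BY_AGE` and `examples_for` are hypothetical names, and the example lists are abbreviated to one entry each.

```python
# Abbreviated example sets; the full lists appear in the complete code.
EXAMPLES_BY_AGE = {
    "Kid": [
        {"query": "What is a mobile?", "answer": "...fits in your pocket..."},
    ],
    "Adult": [
        {"query": "What is a mobile?", "answer": "...portable communication device..."},
    ],
    "Senior Citizen": [
        {"query": "What is a mobile?", "answer": "...portable device...seen mobiles become smaller..."},
    ],
}


def examples_for(age_option):
    """Return the example list for an age group, failing loudly on unknown values."""
    try:
        return EXAMPLES_BY_AGE[age_option]
    except KeyError:
        raise ValueError(f"Unknown age group: {age_option!r}") from None
```

Inside `getLLMResponse`, `examples = examples_for(age_option)` would then replace the whole branch.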

Chunk 4: Set Up Prompt Templates for the Example Selector

example_template = """
Question: {query}
Response: {answer}
"""

example_prompt = PromptTemplate(
    input_variables=["query", "answer"],
    template=example_template
)

Purpose:

Sets up an example_template format for each example using PromptTemplate.

This template standardizes how each example is presented, so every example in the prompt given to the LLM has the same shape.
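To see what a single rendered example looks like, the same template string can be filled with plain `str.format`, which gives the same result as `PromptTemplate` for a simple template like this one. This is only an illustration in plain Python, not a call into LangChain:

```python
example_template = """
Question: {query}
Response: {answer}
"""

# Fill the two placeholders with one of the "Kid" examples.
rendered = example_template.format(
    query="What is a mobile?",
    answer="...fits in your pocket...",
)
print(rendered)
```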

Chunk 5: Define the Prefix and Suffix for the Prompt

prefix = """You are a {template_ageoption}, and {template_tasktype_option}:
Here are some examples:
"""

suffix = """
Question: {template_userInput}
Response: """

Purpose:

- prefix: Introduces the prompt with context about the age group and task type.

- suffix: Sets up the final question that the LLM will respond to.

Chunk 6: Configure the Example Selector

example_selector = LengthBasedExampleSelector(
    examples=examples,
    example_prompt=example_prompt,
    max_length=200
)

Purpose:

Uses LengthBasedExampleSelector to dynamically select examples based on length.

The max_length parameter caps the combined length of the user input and the selected examples (counted in words by default), so the prompt doesn’t exceed a certain length, making it more cost-effective and efficient.
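The selection idea can be approximated in a few lines of plain Python: subtract the length of the user input from the budget, then keep adding examples in order until the next one no longer fits. This is a simplified sketch of the concept, not LangChain's implementation; `word_count` and `select_by_length` are hypothetical names, and words are counted with a simple whitespace split.

```python
def word_count(text):
    """Count whitespace-separated words."""
    return len(text.split())


def select_by_length(examples, template, user_input, max_length=200):
    """Keep examples in order until the word budget runs out."""
    # The user's own input counts against the budget first.
    remaining = max_length - word_count(user_input)
    selected = []
    for example in examples:
        cost = word_count(template.format(**example))
        if cost > remaining:
            break
        selected.append(example)
        remaining -= cost
    return selected
```

A longer user query therefore leaves room for fewer examples, which is why the number of examples in the final prompt can vary from one request to the next.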

Chunk 7: Create a Few-Shot Prompt Template

new_prompt_template = FewShotPromptTemplate(
    example_selector=example_selector,
    example_prompt=example_prompt,
    prefix=prefix,
    suffix=suffix,
    input_variables=["template_userInput", "template_ageoption", "template_tasktype_option"],
    example_separator="\n"
)

Purpose:

Creates a few-shot prompt that combines examples, prefix, suffix, and user input.

example_separator="\n": Separates examples with new lines for readability.
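Conceptually, the few-shot prompt is just the prefix, the rendered examples, and the suffix joined by the separator. The plain-Python sketch below illustrates that assembly so the final prompt is easy to picture; `build_few_shot_prompt` is a hypothetical helper, not LangChain's API, and the example answer is made up for illustration.

```python
def build_few_shot_prompt(prefix, examples, example_template, suffix,
                          separator="\n", **variables):
    """Join the filled prefix, the rendered examples, and the filled suffix."""
    rendered = [example_template.format(**ex) for ex in examples]
    pieces = [prefix.format(**variables), *rendered, suffix.format(**variables)]
    # Skip empty pieces so they do not produce stray separators.
    return separator.join(piece for piece in pieces if piece)


prefix = "You are a {template_ageoption}, and {template_tasktype_option}:\nHere are some examples:\n"
suffix = "\nQuestion: {template_userInput}\nResponse: "
example_template = "\nQuestion: {query}\nResponse: {answer}\n"
examples = [{"query": "What is a mobile?", "answer": "A pocket playground."}]

prompt = build_few_shot_prompt(
    prefix, examples, example_template, suffix,
    template_userInput="What is a laptop?",
    template_ageoption="Kid",
    template_tasktype_option="Create a tweet",
)
print(prompt)
```

Printing the prompt this way also makes it clear that the first line reads "You are a Kid, and Create a tweet:", so the wording of the task options directly shapes the instruction the model sees.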

Chunk 8: Generate and Print the LLM Response

print(new_prompt_template.format(
    template_userInput=query,
    template_ageoption=age_option,
    template_tasktype_option=tasktype_option
))

response = llm.invoke(new_prompt_template.format(
    template_userInput=query,
    template_ageoption=age_option,
    template_tasktype_option=tasktype_option
))

print(response)

return response

Purpose:

Formats the prompt with user input and selected options, then invokes the model to get a response.

Prints the final prompt and response for debugging.

Returns the response to be displayed in the Streamlit UI.

new_prompt_template.format(...):

Purpose: Formats the template with the specific values we provide.

Details:

The format method takes in variables (template_userInput, template_ageoption, and template_tasktype_option) and replaces the corresponding placeholders in new_prompt_template.

These placeholders were declared in FewShotPromptTemplate as the input variables (input_variables=["template_userInput", "template_ageoption", "template_tasktype_option"]).

Values Passed:

- template_userInput -> query: This is the main text that the user entered, which could be a question or topic they want information about.

- template_ageoption -> age_option: This specifies the target audience age (e.g., “Kid,” “Adult,” or “Senior Citizen”).

- template_tasktype_option -> tasktype_option: Defines the action (e.g., “Write a sales copy,” “Create a tweet,” etc.).

llm.invoke(...):

Purpose: Sends the formatted prompt to the llm (Language Model) and gets the model's response.

Formatted Prompt: The same formatted prompt from the print statement is now sent to the llm. llm.invoke is a LangChain method that calls the LLM directly, so the LLM generates a response based on the input it receives.

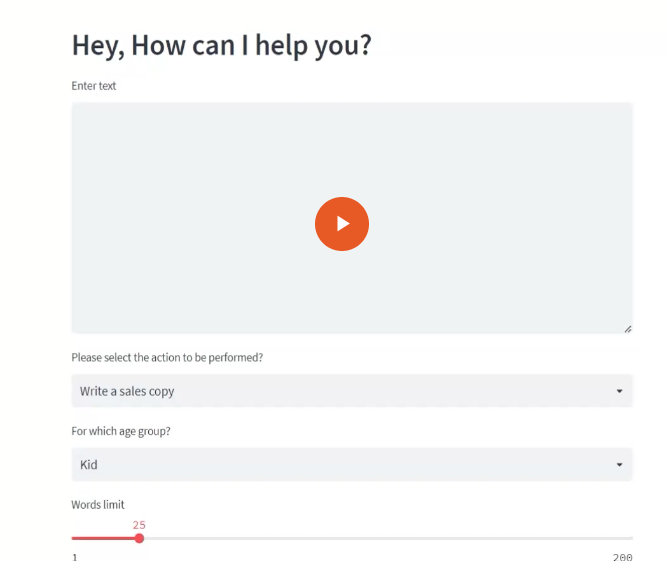
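Because `llm.invoke` makes a network call, it can be convenient to pass the model call in as a parameter so the surrounding logic can be exercised offline. A minimal sketch under that assumption: `generate_copy` and `fake_invoke` are hypothetical names, and in the real app the `invoke` argument would be `llm.invoke`.

```python
def generate_copy(prompt, invoke):
    """Send `prompt` through `invoke` and return the text without surrounding whitespace."""
    response = invoke(prompt)
    # Completion text may come back with leading or trailing whitespace.
    return response.strip()


def fake_invoke(prompt):
    """Stand-in for llm.invoke that returns a canned reply (for offline testing only)."""
    return "  Laptops are like magic notebooks!  \n"


print(generate_copy("Question: What is a laptop?\nResponse: ", fake_invoke))
```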

Chunk 9: Streamlit UI Setup

# UI Starts here
st.set_page_config(page_title="Marketing Tool",
                   page_icon='✅',
                   layout='centered',
                   initial_sidebar_state='collapsed')

st.header("Hey, How can I help you?")

- Purpose: Sets up the page configuration and header for the app using Streamlit.

Chunk 10: Streamlit Input Widgets

form_input = st.text_area('Enter text', height=275)

tasktype_option = st.selectbox(
    'Please select the action to be performed?',
    ('Write a sales copy', 'Create a tweet', 'Write a product description'), key=1
)

age_option = st.selectbox(
    'For which age group?',
    ('Kid', 'Adult', 'Senior Citizen'), key=2
)

numberOfWords = st.slider('Words limit', 1, 200, 25)

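Purpose:

Creates input widgets for user interaction:

- form_input: User enters text.

- tasktype_option and age_option: Drop-downs for task type and age group.

- numberOfWords: Slider to set the word limit for the response. Note that the code as shown does not pass this value to getLLMResponse, so it has no effect on the output yet.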
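One way to make the `numberOfWords` slider influence the output is to fold it into the task string before calling `getLLMResponse`, since the function as shown takes only three arguments. A minimal sketch of that idea; `with_word_limit` is a hypothetical helper, and the exact instruction wording is an assumption you may want to tune:

```python
def with_word_limit(tasktype_option, number_of_words):
    """Append a word-limit instruction to the task description."""
    if number_of_words < 1:
        raise ValueError("number_of_words must be at least 1")
    return f"{tasktype_option} in at most {number_of_words} words"


# Possible call site in the Streamlit app:
# getLLMResponse(form_input, age_option, with_word_limit(tasktype_option, numberOfWords))
print(with_word_limit("Create a tweet", 25))
```

Because the task string is inserted into the prefix, the limit ends up in the first line of the prompt without changing the function signature. The model treats it as guidance rather than a hard cap.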

Chunk 11: Generate Button and Display Response

submit = st.button("Generate")

if submit:
    st.write(getLLMResponse(form_input, age_option, tasktype_option))

Purpose:

- submit Button: When clicked, it calls the getLLMResponse function with the inputs.

- Display Response: Shows the response generated by the LLM in the Streamlit app.

Full Python Code

import streamlit as st
from langchain_openai import OpenAI
from langchain.prompts import PromptTemplate, FewShotPromptTemplate
from langchain.prompts.example_selector import LengthBasedExampleSelector
from dotenv import load_dotenv

load_dotenv()

def getLLMResponse(query, age_option, tasktype_option):
    llm = OpenAI(temperature=.9, model="gpt-3.5-turbo-instruct")

    # Define examples based on age group
    if age_option == "Kid":  # Examples for kids
        examples = [
            {"query": "What is a mobile?", "answer": "A magical device that fits in your pocket, like a mini-enchanted playground."},
            {"query": "What are your dreams?", "answer": "Adventures where I become a superhero and save the day!"},
            {"query": "What are your ambitions?", "answer": "To be a super funny comedian and spread laughter everywhere!"},
            {"query": "What happens when you get sick?", "answer": "It’s like a sneaky monster visits, but I bounce back with rest and love!"},
            {"query": "How much do you love your dad?", "answer": "To the moon and back, with sprinkles and unicorns on top!"},
            {"query": "Tell me about your friend?", "answer": "My friend is like a sunshine rainbow! We laugh, play, and have magical parties together."},
            {"query": "What math means to you?", "answer": "Math is like a puzzle game full of numbers and shapes."},
            {"query": "What is your fear?", "answer": "I’m scared of thunderstorms, but my teddy bear helps me feel brave!"}
        ]
    elif age_option == "Adult":  # Examples for adults
        examples = [
            {"query": "What is a mobile?", "answer": "A portable communication device that allows calls, internet access, and apps."},
            {"query": "What are your dreams?", "answer": "A quest for endless learning and innovation to empower individuals."},
            {"query": "What are your ambitions?", "answer": "To be a helpful companion, empowering others with knowledge and insights."},
            {"query": "What happens when you get sick?", "answer": "Symptoms arise, I seek care, and through rest and medicine, regain strength."},
            {"query": "Tell me about your friend?", "answer": "A shining star, bringing laughter, support, and unforgettable memories."},
            {"query": "What math means to you?", "answer": "Mathematics is a magical language, a tool to solve puzzles and unlock knowledge."},
            {"query": "What is your fear?", "answer": "The fear of not living up to potential, but it motivates me to embrace new experiences."}
        ]
    elif age_option == "Senior Citizen":  # Examples for senior citizens
        examples = [
            {"query": "What is a mobile?", "answer": "A device that allows calls, messages, internet, and more; it has evolved so much over the years."},
            {"query": "What are your dreams?", "answer": "For my grandkids to be happy and fulfilled, growing up with compassion and success."},
            {"query": "What happens when you get sick?", "answer": "I feel weak and tired, but with rest and care, I regain my strength."},
            {"query": "How much do you love your dad?", "answer": "My love for my late father transcends time, cherishing all he taught me."},
            {"query": "Tell me about your friend?", "answer": "A treasure found amidst the sands of time, with whom I’ve shared laughter and wisdom."},
            {"query": "What is your fear?", "answer": "The fear of being alone, but meaningful connections help dispel this feeling."}
        ]

    # Template for each example
    example_template = """
Question: {query}
Response: {answer}
"""

    example_prompt = PromptTemplate(
        input_variables=["query", "answer"],
        template=example_template
    )

    # Define prefix and suffix for the few-shot prompt
    prefix = """You are a {template_ageoption}, and {template_tasktype_option}:
Here are some examples:
"""

    suffix = """
Question: {template_userInput}
Response: """

    # Configure LengthBasedExampleSelector
    example_selector = LengthBasedExampleSelector(
        examples=examples,
        example_prompt=example_prompt,
        max_length=200
    )

    # Create Few-Shot Prompt Template
    new_prompt_template = FewShotPromptTemplate(
        example_selector=example_selector,
        example_prompt=example_prompt,
        prefix=prefix,
        suffix=suffix,
        input_variables=["template_userInput", "template_ageoption", "template_tasktype_option"],
        example_separator="\n"
    )

    # Format the prompt and get LLM response
    formatted_prompt = new_prompt_template.format(
        template_userInput=query,
        template_ageoption=age_option,
        template_tasktype_option=tasktype_option
    )
    print("Prompt sent to LLM:\n", formatted_prompt)

    # Generate response
    response = llm.invoke(formatted_prompt)
    print("LLM Response:\n", response)

    return response

# Streamlit UI Setup
st.set_page_config(page_title="Marketing Tool",
                   page_icon='✅',
                   layout='centered',
                   initial_sidebar_state='collapsed')

st.header("Hey, How can I help you?")

form_input = st.text_area('Enter text', height=275)

tasktype_option = st.selectbox(
    'Please select the action to be performed?',
    ('Write a sales copy', 'Create a tweet', 'Write a product description'), key=1
)

age_option = st.selectbox(
    'For which age group?',
    ('Kid', 'Adult', 'Senior Citizen'), key=2
)

numberOfWords = st.slider('Words limit', 1, 200, 25)

submit = st.button("Generate")

if submit:
    st.write(getLLMResponse(form_input, age_option, tasktype_option))

Summary

This expanded code includes all examples, setup, and UI logic.

The app is a marketing tool that tailors responses to specific user needs based on input, task type, and target audience.
