LiteLLM for Developers: Build a Universal LLM Interface in Minutes

Smaranjit Ghose


🛣️ Introduction

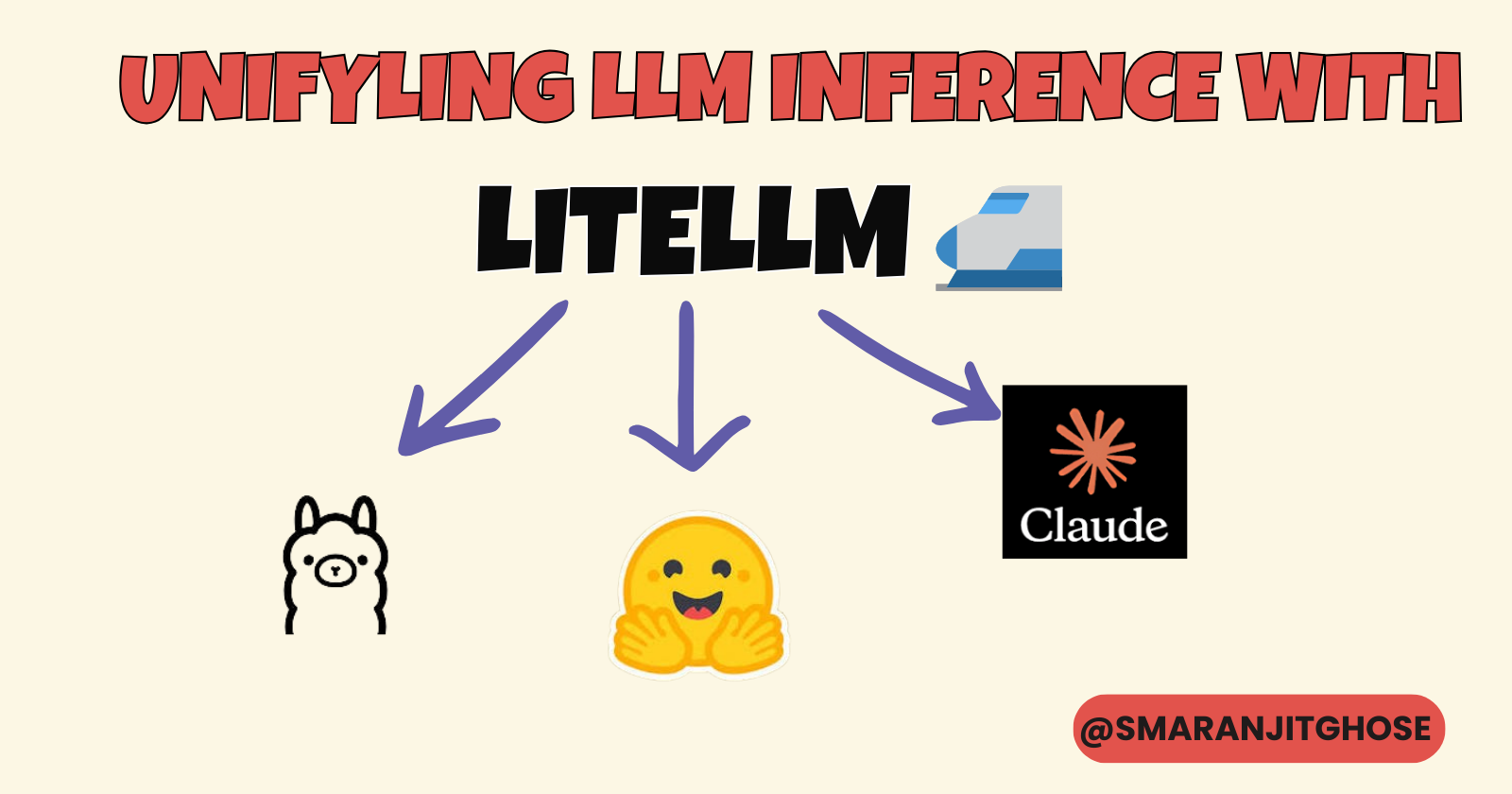

Since early 2024, the LLM landscape has grown increasingly fragmented: each provider ships its own API, SDK, and response format, so integrating several models into one application means maintaining several separate integrations. LiteLLM simplifies this by putting all of them behind one unified interface.

📙 What is LiteLLM?

LiteLLM is an open-source Python library (with an optional proxy server) that standardizes how developers call Large Language Models (LLMs). Think of it as a universal translator for LLMs: your application sends requests in one consistent, OpenAI-style format, and LiteLLM translates them for whichever supported model you choose. 🌐

Why Choose LiteLLM? 🤔

Universal Model Access 🌍

Seamlessly connect to 100+ LLMs from major providers

Use the same code for OpenAI, Anthropic, Ollama, and other providers

Switch between models without rewriting your application logic

Simplified Development 👨‍💻

Write once, deploy anywhere approach

Consistent, OpenAI-style response format across all models

Built-in error handling and retry mechanisms

Automatic model fallbacks for improved reliability
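LiteLLM's documentation describes `num_retries` and `fallbacks` arguments to `completion()` for exactly this behaviour. To make the idea concrete, here is a hand-rolled sketch of the retry-then-fall-back pattern; it is an illustration, not LiteLLM's own implementation, and `call_with_fallbacks` and the `flaky_call` stub are hypothetical names.

```python
import time
from typing import Callable, Optional, Sequence, Tuple


def call_with_fallbacks(
    call: Callable[[str], str],
    models: Sequence[str],
    num_retries: int = 2,
    backoff_seconds: float = 0.0,
) -> Tuple[str, str]:
    """Try each model in order, retrying each one up to num_retries extra times.

    Returns (model_that_succeeded, result); raises if every model fails.
    """
    last_error: Optional[Exception] = None
    for model in models:
        for attempt in range(num_retries + 1):
            try:
                return model, call(model)
            except Exception as exc:  # a real client would catch narrower errors
                last_error = exc
                if attempt < num_retries:
                    # exponential backoff between retries of the same model
                    time.sleep(backoff_seconds * (2 ** attempt))
    raise RuntimeError("all models failed") from last_error


# Stub standing in for an LLM call: the local model is "down", GPT-4 answers.
def flaky_call(model: str) -> str:
    if model.startswith("ollama/"):
        raise ConnectionError(f"{model} unavailable")
    return f"answer from {model}"


print(call_with_fallbacks(flaky_call, ["ollama/llama3.2:3b-instruct-fp16", "gpt-4"]))
```

With LiteLLM itself you would not write this loop; you would pass the options directly, for example `completion(model=..., messages=..., num_retries=2, fallbacks=["gpt-4"])`.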

Resource Management 📊

Monitor usage across different projects

Track costs for each model integration

Built-in load balancing capabilities

Configurable routing strategies for spreading traffic across deployments

Enterprise-Ready Features ⚡

Support for multiple API keys

Customizable retry logic

Detailed logging and monitoring

Production-grade reliability

🧱 Setup Guide

1. Install Ollama

```bash
curl -fsSL https://ollama.com/install.sh | sh
```

2. Install CUDA Drivers

```bash
sudo apt-get update && sudo apt-get install -y cuda-drivers
```

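This installs the NVIDIA driver packages Ollama needs for GPU acceleration. The `cuda-drivers` package comes from NVIDIA's CUDA apt repository, so that repository must already be configured on the machine; hosted GPU notebooks such as Colab usually ship with drivers preinstalled.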
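To confirm the driver actually sees a GPU, a quick Python check can call `nvidia-smi -L`, which prints one line per detected GPU. `gpu_visible` is a hypothetical helper name; the check only looks for the `nvidia-smi` tool and its output.

```python
import shutil
import subprocess


def gpu_visible() -> bool:
    """Return True if nvidia-smi is installed and reports at least one GPU."""
    if shutil.which("nvidia-smi") is None:
        return False  # driver utilities not installed or not on PATH
    # `nvidia-smi -L` lists the GPUs the driver can see, one per line
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU detected" if gpu_visible() else "No GPU detected; Ollama will run on CPU")
```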

3. Configure Environment

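```bash
echo 'debconf debconf/frontend select Noninteractive' | sudo debconf-set-selections
```

This switches debconf to its noninteractive frontend so that `apt-get` installs do not pause for configuration prompts; in a fresh environment it is worth running this before the driver install in step 2.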

4. Start Ollama Server

```bash
nohup ollama serve &
```

Note: This command:

Runs the Ollama server in the background (via &)

Keeps running after the terminal is closed (via nohup)

Remains active until explicitly terminated
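Before pointing LiteLLM at the server, it can help to confirm Ollama is actually listening. A minimal standard-library check, assuming the default address `http://localhost:11434`; `ollama_is_running` is a hypothetical helper name.

```python
import urllib.error
import urllib.request


def ollama_is_running(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            # Ollama's root endpoint replies with the text "Ollama is running"
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # connection refused, DNS failure, or timeout: no server there
        return False


print(ollama_is_running())
```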

5. Install LiteLLM 🛠️

```bash
pip install litellm
```

6. List Available Models

```bash
ollama list
```

On a fresh install, the output contains only the column headers:

```
NAME    ID    SIZE    MODIFIED
```

Browse available models at ollama.com/library.
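The same list is available over Ollama's REST API at `GET /api/tags`, which returns JSON with a `models` array. A sketch using only the standard library; `model_names` and `list_local_models` are hypothetical helper names, and the example call parses a hand-written payload so it runs without a server.

```python
import json
import urllib.request


def model_names(tags_payload: dict) -> list:
    """Extract model names from the JSON returned by Ollama's GET /api/tags."""
    # `or []` also covers a payload whose "models" field is missing or null
    return [m["name"] for m in (tags_payload.get("models") or [])]


def list_local_models(base_url: str = "http://localhost:11434") -> list:
    """Programmatic equivalent of `ollama list` (requires a running server)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return model_names(json.load(resp))


# Offline example using the shape of an /api/tags response
print(model_names({"models": [{"name": "llama3.2:3b-instruct-fp16"}]}))
```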

7. Pull a Model 🤖

For this tutorial, we'll use Llama 3.2:

```bash
ollama pull llama3.2:3b-instruct-fp16
```

Model Specifications:

3B-parameter model (about 3.2 billion parameters)

Instruction-tuned variant

FP16 (half-precision) weights: half the memory of FP32, but larger than Ollama's default quantized tags (roughly 6.4 GB of weights)

Fits on consumer GPUs with enough VRAM for the weights plus context (roughly 8 GB or more)
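As a rough rule, weight memory is the parameter count times the bytes stored per parameter (4 for FP32, 2 for FP16). A quick sketch of that arithmetic, assuming about 3.2 billion parameters; it covers weights only, ignoring the KV cache and runtime overhead, and real quantized files spend slightly more than the nominal bits per weight.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


# Assumption: ~3.2 billion parameters for the Llama 3.2 3B model
params = 3.2e9
for label, nbytes in [("FP32", 4), ("FP16", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    print(f"{label:>5}: ~{weight_memory_gb(params, nbytes):.1f} GB")
```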

8. Configure API Keys 🔑

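```python
import os

# google.colab is only available inside Google Colab: userdata.get() reads
# secrets saved in the notebook's Secrets panel. Elsewhere, export the
# variables in your shell instead.
from google.colab import userdata

# Set API keys as environment variables; LiteLLM reads them automatically.
# Local Ollama models do not need a key.
os.environ["ANTHROPIC_API_KEY"] = userdata.get("ANTHROPIC_API_KEY")
os.environ["OPENAI_API_KEY"] = userdata.get("OPENAI_API_KEY")
```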

9. Using Local Models (Ollama) 💻

```python
from litellm import completion

response = completion(
    model="ollama/llama3.2:3b-instruct-fp16",
    messages=[{
        "content": "Write an API in ExpressJS to get the latest weather stats for a city",
        "role": "user",
    }],
    api_base="http://localhost:11434",  # default address of the local Ollama server
)

print(response['choices'][0]['message']['content'])
```

10. Using Cloud Models ☁️

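```python
from litellm import completion  # also imported in step 9; repeated so this runs on its own

# OpenAI GPT-4 (reads OPENAI_API_KEY from the environment)
gpt_response = completion(
    model="gpt-4",
    messages=[{
        "content": "Write an API in ExpressJS to get the latest weather stats for a city",
        "role": "user",
    }],
)
print(gpt_response['choices'][0]['message']['content'])

# Anthropic Claude (reads ANTHROPIC_API_KEY from the environment)
claude_response = completion(
    model="claude-3-opus-20240229",
    messages=[{
        "content": "Write an API in ExpressJS to get the latest weather stats for a city",
        "role": "user",
    }],
)
print(claude_response['choices'][0]['message']['content'])
```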

