Complete Docker Guide: From Fundamentals to Production

Kanav Gathe

1. Introduction to Docker

What is Docker?

Docker is a platform for developing, shipping, and running applications in containers. Containers are lightweight, standalone, executable packages that include everything needed to run an application: code, runtime, system tools, libraries, and settings.

Why Docker?

Consistency: Same environment across development, testing, and production

Isolation: Applications run independently without conflicts

Portability: Run anywhere that supports Docker

Resource Efficiency: Containers share the host kernel, so they are lighter than traditional VMs

Scalability: Easy to scale applications horizontally

Version Control: Track changes in your application environment

Real-World Use Cases

Microservices architecture

Continuous Integration/Continuous Deployment (CI/CD)

Cloud-native applications

Development environments

Application testing

Legacy application modernization

2. Docker Architecture

Core Components

Docker Daemon

Manages Docker objects

Handles container lifecycle

Maintains images, networks, and volumes

Docker Client

Command-line interface

Communicates with Docker daemon via REST API

Can connect to remote Docker daemons

Docker Registry

Stores Docker images

Docker Hub (public registry)

Private registries

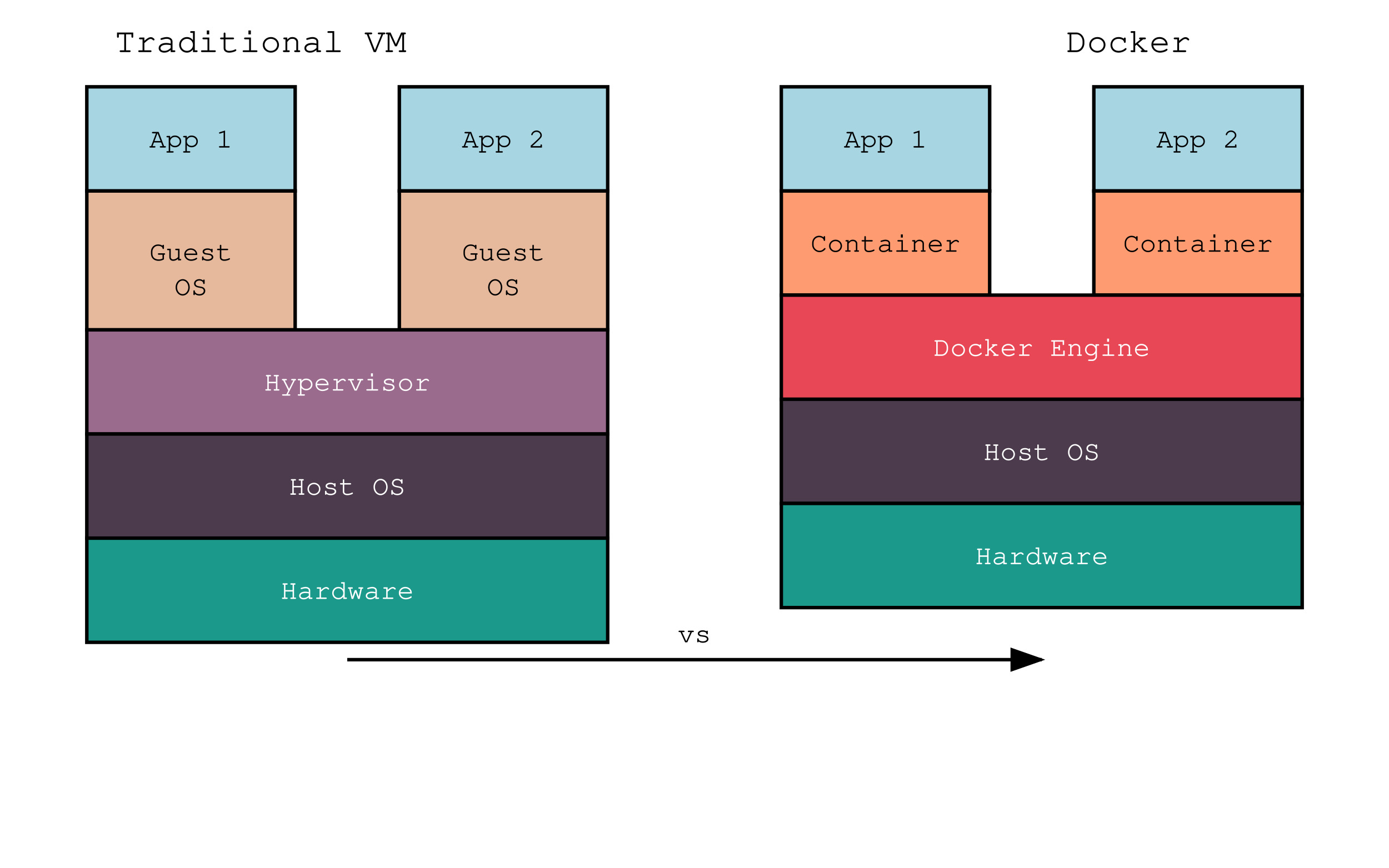

Container vs VM Architecture

3. Installation and Setup

Linux Installation (Ubuntu)

# Update package index

sudo apt-get update

# Install prerequisites

sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg \
    lsb-release

# Add Docker's official GPG key

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

# Set up stable repository

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

# Install Docker Engine

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io

# Verify installation

sudo docker run hello-world

Windows Installation

Download Docker Desktop for Windows from the official website

Run the installer

Enable WSL 2 (Windows Subsystem for Linux)

Configure resources in Docker Desktop

Test installation:

docker version

docker run hello-world

Post-installation Steps

# Add user to docker group (Linux)

sudo usermod -aG docker $USER

# Log out and back in (or run: newgrp docker) for the group change to take effect

# Configure Docker to start on boot

sudo systemctl enable docker

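# Configure Docker daemon: put the JSON below in /etc/docker/daemon.json

sudo nano /etc/docker/daemon.json

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

# Restart Docker so the new configuration takes effect

sudo systemctl restart docker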
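A malformed daemon.json can stop the Docker daemon from starting, so it can help to check the file before restarting. The sketch below is one way to do that in Python, not an official tool. The function name `check_daemon_config` is made up for this example. It checks that the text is valid JSON and that every `log-opts` value is a quoted string, which Docker requires even for numbers such as `"3"`.

```python
import json

# Same settings as the daemon.json shown above.
DAEMON_JSON = """
{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}
"""


def check_daemon_config(text: str) -> list[str]:
    """Return a list of problems found in a daemon.json document (empty if none)."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(config, dict):
        return ["top-level value must be a JSON object"]

    log_opts = config.get("log-opts", {})
    if not isinstance(log_opts, dict):
        return ['"log-opts" must be a JSON object']

    problems = []
    for key, value in log_opts.items():
        # Numbers and booleans must be quoted: "3", not 3.
        if not isinstance(value, str):
            problems.append(f'log-opts "{key}" must be a quoted string, got {value!r}')
    return problems


if __name__ == "__main__":
    print(check_daemon_config(DAEMON_JSON) or "daemon.json looks OK")
```

To check the real file, read `/etc/docker/daemon.json` and pass its contents to the function.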

4. Docker Core Concepts

Images

Definition: Read-only templates for containers

Layers: Instructions that change the filesystem (RUN, COPY, ADD) each create a layer; other instructions only add image metadata

Commands:

# List images

docker images

# Pull image

docker pull nginx:latest

# Remove image

docker rmi nginx:latest

# Build image

docker build -t myapp:1.0 .

# Tag image

docker tag myapp:1.0 username/myapp:1.0

# Push image

docker push username/myapp:1.0

Containers

Definition: Runnable instances of Docker images; a container can be running or stopped

Lifecycle: Created → Running → Stopped → Deleted

Commands:

# Run container

docker run -d -p 80:80 nginx

# List containers

docker ps

docker ps -a # Including stopped containers

# Stop container

docker stop container_id

# Start container

docker start container_id

# Remove container

docker rm container_id

# Execute command in container

docker exec -it container_id bash

Registries

Types:

Public (Docker Hub)

Private (Amazon ECR, Google Artifact Registry)

Self-hosted (Docker Registry)

Authentication:

# Login to registry

docker login

# Push to registry

docker push username/image:tag

# Pull from registry

docker pull username/image:tag
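Names such as `nginx:latest` and `username/myapp:1.0` are shorthand: Docker fills in a default registry and a default tag. The sketch below mirrors the commonly documented rules. The function `parse_image_ref` is hypothetical and deliberately simplified; it does not handle digests such as `name@sha256:...`.

```python
def parse_image_ref(ref: str) -> dict:
    """Split an image reference into registry, repository, and tag."""
    # A colon marks a tag only if it comes after the last slash;
    # otherwise it belongs to a registry port such as localhost:5000.
    name, tag = ref, "latest"
    if ref.rfind(":") > ref.rfind("/"):
        name, tag = ref.rsplit(":", 1)

    registry = "docker.io"  # Docker Hub is the default registry
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        # The first path component looks like a hostname, so it is the registry.
        registry, name = first, rest
    elif not sep:
        # Single-word names are official Docker Hub images under library/.
        name = f"library/{name}"
    return {"registry": registry, "repository": name, "tag": tag}


if __name__ == "__main__":
    for ref in ("nginx", "username/myapp:1.0", "localhost:5000/myapp"):
        print(ref, "->", parse_image_ref(ref))
```

This is why `docker push username/myapp:1.0` goes to Docker Hub, while a name that starts with a hostname goes to that registry instead.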

5. Networking

Network Types

Bridge: Default network driver

Isolated network on host

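Containers on the same bridge network can communicate; user-defined bridges also resolve container names through Docker's built-in DNS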

Port mapping for external access

Host: Removes network isolation

Uses host's network directly

Better network performance (no NAT or port mapping)

Less security isolation

None: Disables networking

Complete network isolation

Used for maximum security

Overlay: Multi-host networking

Connect containers across hosts

Used in Docker Swarm

Network Commands

# List networks

docker network ls

# Create network

docker network create mynetwork

# Connect container to network

docker network connect mynetwork container_id

# Disconnect container from network

docker network disconnect mynetwork container_id

# Inspect network

docker network inspect mynetwork

# Remove network

docker network rm mynetwork

Network Configuration Example

# Create custom network with specific subnet

docker network create \
    --driver bridge \
    --subnet 172.18.0.0/16 \
    --gateway 172.18.0.1 \
    mynetwork

# Run container with specific IP

docker run -d \
    --network mynetwork \
    --ip 172.18.0.10 \
    nginx
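A static `--ip` only works if the address belongs to the network's subnet and is not already taken, for example by the gateway. The sketch below checks the values from the example above with Python's standard `ipaddress` module. The helper `check_static_ip` is a made-up name, and the check is a sanity test, not a copy of Docker's own validation.

```python
import ipaddress


def check_static_ip(subnet: str, gateway: str, ip: str) -> str:
    """Return "ok" if ip is usable as a static address in subnet, else a reason."""
    network = ipaddress.ip_network(subnet)
    address = ipaddress.ip_address(ip)
    if address not in network:
        return f"{ip} is outside {subnet}"
    if address == ipaddress.ip_address(gateway):
        return f"{ip} is already used by the gateway"
    if address in (network.network_address, network.broadcast_address):
        return f"{ip} is a reserved address in {subnet}"
    return "ok"


if __name__ == "__main__":
    # Values taken from the docker network create / docker run example above.
    print(check_static_ip("172.18.0.0/16", "172.18.0.1", "172.18.0.10"))  # ok
    print(check_static_ip("172.18.0.0/16", "172.18.0.1", "172.19.0.10"))  # outside the subnet
```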

6. Volumes and Storage

Types of Storage

Volumes: Managed by Docker

Best practice for persistent data

Easy backup and migration

Can be shared between containers

Bind Mounts: Direct host filesystem mounting

Good for development

Host-dependent

Can be slower on Docker Desktop (macOS, Windows), where file access crosses a VM boundary

tmpfs: Memory-only storage

Temporary storage

High performance

Data lost on container stop

Volume Commands

# Create volume

docker volume create myvolume

# List volumes

docker volume ls

# Inspect volume

docker volume inspect myvolume

# Remove volume

docker volume rm myvolume

# Remove unused volumes

docker volume prune

Volume Usage Examples

# Run container with volume

docker run -d \
    -v myvolume:/data \
    nginx

# Run container with bind mount

docker run -d \
    -v /host/path:/container/path \
    nginx

# Run container with tmpfs

docker run -d \
    --tmpfs /tmp:rw,noexec,nosuid,size=100m \
    nginx

7. Dockerfile Deep Dive

Basic Structure

# Base image

FROM node:16-alpine

# Set working directory

WORKDIR /app

# Copy package files

COPY package*.json ./

# Install dependencies

RUN npm install

# Copy source code

COPY . .

# Build application

RUN npm run build

# Expose port

EXPOSE 3000

# Set environment variables

ENV NODE_ENV=production

# Define entry point

CMD ["npm", "start"]

Advanced Dockerfile Concepts

Multi-stage Builds

# Build stage

FROM node:16-alpine AS builder

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

# Production stage

FROM node:16-alpine

WORKDIR /app

COPY --from=builder /app/dist ./dist

COPY package*.json ./

RUN npm install --production

CMD ["npm", "start"]

Build Arguments

ARG NODE_VERSION=16

FROM node:${NODE_VERSION}-alpine

ARG PORT=3000

ENV PORT=${PORT}

EXPOSE ${PORT}

Health Checks

# Assumes curl is installed in the image; Alpine-based images do not include it by default
HEALTHCHECK --interval=30s --timeout=3s \
    CMD curl -f http://localhost:3000/health || exit 1

A Dockerfile is a script containing instructions to build a Docker image. The basic structure starts with a base image (FROM), sets up a working directory (WORKDIR), and then follows a pattern of copying files and running commands to set up the application. The example shows a Node.js application setup, where it first copies package files, installs dependencies, copies source code, builds the app, exposes a port, sets environment variables, and defines how to start the application.

The advanced concepts demonstrate multi-stage builds, which use multiple FROM instructions to create a smaller production image by building in one stage and copying only necessary files to the final stage. This is particularly useful for reducing image size by excluding build tools and dependencies from the final image. Build arguments (ARG) allow for flexibility during image building by passing variables that can be used in the Dockerfile. Health checks are used to monitor container health by periodically running commands to verify the application is working correctly.

Together, these patterns keep production images small, make builds configurable, and let Docker detect when a container has stopped working correctly.

8. Docker Compose

Basic Structure

version: '3.8'

services:
  web:
    build: ./web
    ports:
      - "3000:3000"
    environment:
      - NODE_ENV=production
    depends_on:
      - db
    networks:
      - mynetwork

  db:
    image: postgres:13
    volumes:
      - db-data:/var/lib/postgresql/data
    environment:
      - POSTGRES_PASSWORD=secret
    networks:
      - mynetwork

networks:
  mynetwork:

volumes:
  db-data:

Commands

# Start services (Compose V2 uses "docker compose" in place of "docker-compose")

docker-compose up -d

# Stop services

docker-compose down

# View logs

docker-compose logs -f

# Scale service

docker-compose up -d --scale web=3

# Execute command in service

docker-compose exec web npm run test

# View service status

docker-compose ps

Advanced Compose Features

version: '3.8'

services:
  web:
    build:
      context: ./web
      dockerfile: Dockerfile.prod
      args:
        - NODE_VERSION=16
    deploy:
      replicas: 3
      resources:
        limits:
          cpus: '0.5'
          memory: 512M
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:3000/health"]
      interval: 30s
      timeout: 3s
      retries: 3

Docker Compose is a tool for defining and running multi-container applications. The basic structure uses YAML format to define services, networks, and volumes. In the example, it sets up two services: a web application and a PostgreSQL database. Each service has its own configuration, including build instructions, port mappings, environment variables, dependencies (depends_on), and network settings. The services are connected through a custom network (mynetwork) and the database uses a named volume (db-data) for data persistence.

Common Docker Compose commands manage the application as a unit: 'up' creates and starts services, 'down' stops and removes their containers and networks, 'logs' shows output, and the '--scale' option of 'up' sets the number of containers for a service. A service that publishes a fixed host port such as "3000:3000" cannot be scaled past one container on a single host, because the host port can be bound only once. The advanced example adds resource limits (CPU and memory), a health check, and build arguments.

Compose lets you manage several containers as a single application, from a simple two-service setup to larger stacks of interconnected services.
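The `depends_on` entries form a dependency graph, and Compose starts services so that each one comes after the services it depends on. The sketch below shows that ordering with Python's standard `graphlib` module (Python 3.9+). The dictionary restates the `web` and `db` services from the basic example. The function `start_order` is a made-up name and is not how Compose is implemented.

```python
from graphlib import CycleError, TopologicalSorter

# service -> services it depends on, mirroring depends_on in the basic example
DEPENDS_ON = {"web": ["db"], "db": []}


def start_order(depends_on: dict) -> list:
    """Return service names ordered so that dependencies come first."""
    try:
        return list(TopologicalSorter(depends_on).static_order())
    except CycleError as exc:
        # exc.args[1] holds the list of services that form the cycle
        raise ValueError(f"circular depends_on: {exc.args[1]}") from exc


if __name__ == "__main__":
    print(start_order(DEPENDS_ON))  # ['db', 'web']
```

Start order is not readiness: the `db` container can be started while PostgreSQL inside it is still initializing.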

9. Security

Container Security

Image Security

Use official base images

Scan for vulnerabilities

Keep images updated

Runtime Security

Run as non-root user

Use read-only root filesystem

Limit capabilities

Network Security

Use user-defined networks

Implement network policies

Control exposed ports

Security Best Practices

# Use specific version

FROM node:16-alpine

# Create non-root user

RUN addgroup -S appgroup && adduser -S appuser -G appgroup

# Set working directory

WORKDIR /app

# Copy and install dependencies

COPY package*.json ./

RUN npm ci --only=production

# Copy application code

COPY --chown=appuser:appgroup . .

# Use non-root user

USER appuser

# Run application

CMD ["npm", "start"]

Security Configuration

version: '3.8'

services:
  web:
    security_opt:
      - no-new-privileges:true
    read_only: true
    tmpfs:
      - /tmp
    cap_drop:
      - ALL
    cap_add:
      - NET_BIND_SERVICE

10. Troubleshooting

Common Issues and Solutions

- Container Won't Start

# Check logs

docker logs container_id

# Inspect container

docker inspect container_id

# Check resource usage

docker stats

- Network Issues

# Check network connectivity

docker network inspect network_name

# Test container networking

docker exec container_id ping other_container

# View container IP

docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' container_id

- Volume Issues

# Check volume mounts

docker inspect -f '{{ .Mounts }}' container_id

# Verify permissions

docker exec container_id ls -la /path/to/volume

# Check volume data

docker volume inspect volume_name

Debugging Tools

# Interactive shell

docker exec -it container_id sh

# Process list

docker top container_id

# Resource usage

docker stats container_id

# System events

docker events

11. Best Practices

Image Building

- Use .dockerignore

node_modules

npm-debug.log

Dockerfile

.dockerignore

.git

.gitignore

- Layer Optimization

# Bad

COPY . /app

RUN npm install

# Good

COPY package*.json /app/

RUN npm install

COPY . /app

- Multi-stage Builds

# Build stage

FROM node:16-alpine AS builder

WORKDIR /app

COPY package*.json ./

RUN npm install

COPY . .

RUN npm run build

# Production stage

FROM nginx:alpine

COPY --from=builder /app/build /usr/share/nginx/html
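The "Bad" and "Good" orderings under Layer Optimization differ because a changed layer invalidates the cache for every layer after it. The sketch below is a toy model of that rule, not Docker's actual cache implementation. Each step's cache key is a hash of the previous key, the instruction text, and the content of the files it copies. The file names and version strings are invented for the illustration.

```python
import hashlib


def layer_keys(steps, file_versions):
    """Compute one cache key per build step, each chained to the previous key."""
    keys, parent = [], ""
    for instruction, copied_files in steps:
        digest = hashlib.sha256()
        digest.update(parent.encode())       # a changed parent changes this key too
        digest.update(instruction.encode())
        for name in copied_files:
            digest.update(file_versions[name].encode())
        parent = digest.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps, before, after):
    """List the instructions whose cache key differs between two builds."""
    old, new = layer_keys(steps, before), layer_keys(steps, after)
    return [step[0] for step, o, n in zip(steps, old, new) if o != n]


# (instruction, files it copies from the build context)
BAD = [("COPY . /app", ("package.json", "src")), ("RUN npm install", ())]
GOOD = [
    ("COPY package*.json /app/", ("package.json",)),
    ("RUN npm install", ()),
    ("COPY . /app", ("package.json", "src")),
]

before = {"package.json": "v1", "src": "v1"}
after = {"package.json": "v1", "src": "v2"}  # only application source changed

if __name__ == "__main__":
    print(rebuilt_steps(BAD, before, after))   # ['COPY . /app', 'RUN npm install']
    print(rebuilt_steps(GOOD, before, after))  # ['COPY . /app']
```

In the "Good" ordering, a source-only change leaves the `npm install` layer cached, which is usually the slowest step.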

Container Management

- Resource Limits

docker run -d \
    --memory="512m" \
    --cpus="0.5" \
    nginx

- Logging

# JSON logging configuration

{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

- Health Checks

healthcheck:
  test: ["CMD", "curl", "-f", "http://localhost/health"]
  interval: 30s
  timeout: 10s
  retries: 3
  start_period: 40s
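A health check like the one above needs an endpoint in the application that answers it. The sketch below shows one possible `/health` endpoint using only Python's standard library, plus a `probe` function that imitates `curl -f` (exit status 0 on success, non-zero on an HTTP error or connection failure). The names `HealthHandler` and `probe` and the JSON body are assumptions for this example; a real application would expose the route through its own web framework.

```python
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/health":
            body = b'{"status": "ok"}'
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep periodic probe requests out of the log output


def probe(url: str, timeout: float = 3.0) -> int:
    """Imitate `curl -f URL || exit 1`: 0 for a successful response, 1 otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 0 if response.status < 400 else 1
    except (urllib.error.URLError, OSError):
        return 1  # HTTP error status, refused connection, or timeout


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    port = server.server_address[1]
    print(probe(f"http://127.0.0.1:{port}/health"))   # 0
    print(probe(f"http://127.0.0.1:{port}/missing"))  # 1
    server.shutdown()
    server.server_close()
```

After `retries` consecutive failures, Docker marks the container as unhealthy. The port in the health check URL must match the port the application listens on inside the container.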

12. Hands-on Project: Task Manager

Project Overview

A Flask-based task management application with:

Backend: Python/Flask

Database: MongoDB

Docker Compose for orchestration

Volume persistence for data

Network isolation

Project Repository

The complete source code is available at: https://github.com/SlayerK15/Task-Manager

Implementation Details

Directory Structure

task-manager/

├── template/index.html

├── docker-compose.yml

├── Dockerfile

├── app.py

├── requirements.txt

└── README.md

Dockerfile Analysis

FROM python:3.9-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

EXPOSE 5000

CMD ["python", "app.py"]

Key points:

Uses Python 3.9 slim base image for smaller size

Sets up working directory

Installs dependencies first (layer caching)

Copies application code

Exposes port 5000

Uses simple Python command to run the app

Docker Compose Configuration

version: '3.8'

services:
  web:
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - "0.0.0.0:5000:5000"
    environment:
      - FLASK_APP=app.py
      - FLASK_ENV=development
      - MONGO_URI=mongodb://mongodb:27017/taskmanager
    volumes:
      - .:/app
    depends_on:
      - mongodb
    networks:
      - app-network
    restart: unless-stopped

  mongodb:
    image: mongo:latest
    ports:
      - "127.0.0.1:27017:27017"
    volumes:
      - mongodb_data:/data/db
    networks:
      - app-network
    restart: unless-stopped

networks:
  app-network:
    driver: bridge

volumes:
  mongodb_data:

Key features:

Services:

Web service (Flask application)

MongoDB service (Database)

Environment Configuration:

Flask environment settings

MongoDB connection URI

Development mode enabled

Networking:

Custom bridge network (app-network)

Internal service discovery

Port mappings for both services

Persistence:

Volume mounting for application code

Named volume for MongoDB data

Data persistence across container restarts

Dependencies:

Web service depends on MongoDB

The MongoDB container is started before the web service; depends_on alone does not wait for MongoDB to accept connections

Restart Policies:

Both services restart unless stopped

Containers come back automatically after a crash or a Docker daemon restart
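Because `depends_on` controls only start order, the web service may come up before MongoDB is listening. One common workaround is to wait for the database port at application startup. The sketch below is a minimal version of that idea. The function `wait_for_port` is a made-up helper and is not part of the Task Manager repository, whose `app.py` is not shown here.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Retry a TCP connection until it succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on host:port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # "mongodb" and 27017 are the service name and port from the Compose file above.
    if wait_for_port("mongodb", 27017, timeout=5.0):
        print("MongoDB port is open")
    else:
        print("MongoDB is not reachable yet")
```

An open port shows only that the server accepts TCP connections, so the application should still handle a failed first query. Compose also supports a long form of `depends_on` with `condition: service_healthy`, which waits for the dependency's health check instead.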

Running the Project

- Clone the repository:

git clone https://github.com/SlayerK15/Task-Manager

cd Task-Manager

- Build and start the services:

docker-compose up --build

- Access the application:

Web interface: http://localhost:5000

MongoDB (local access): localhost:27017

- Stop the services:

docker-compose down

Development Workflow

Local Development:

Code changes reflect immediately due to volume mounting

No need to rebuild for Python file changes

Hot-reloading enabled in development mode

Database Management:

MongoDB data persists in named volume

Access database directly through exposed port

Use MongoDB Compass or CLI tools

Troubleshooting:

# View logs

docker-compose logs -f

# Check service status

docker-compose ps

# Access container shell

docker-compose exec web bash

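# Open a MongoDB shell (current mongo images ship mongosh; the legacy mongo shell was removed in 6.0)

docker-compose exec mongodb mongosh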

Project Management:

Use docker-compose commands for service control

Easy environment variable configuration

Network isolation for security

This implementation demonstrates:

Proper Docker best practices

Service orchestration with Docker Compose

Development environment setup

Database integration

Volume management

Network configuration

Container security considerations

Connect & Learn More

Stay Connected

GitHub Repository: https://github.com/SlayerK15/Task-Manager

Star the repo if you found it helpful!

Feel free to fork and contribute

Report issues or suggest improvements

Watch for updates and new features

LinkedIn: www.linkedin.com/in/gathekanav

Connect for more tech content

Discussions on Docker, DevOps, and cloud technologies

Professional networking opportunities

Updates on new projects and tutorials

Share & Contribute

Share this guide with your network

Drop a star on GitHub if you found it helpful

Feel free to fork the project and make it your own

Issues and pull requests are welcome!

Thank you for following along with this comprehensive Docker guide! If you found it helpful, please consider:

Following on GitHub and LinkedIn

Sharing with others who might benefit

Contributing to make it even better

Let's learn and grow together! 🚀
