Building an Email Spam Classifier Using Naive Bayes

Roemai

Roemai

In this post, we will walk through the process of building an email spam classifier using the Naive Bayes algorithm. The goal is to classify email messages as either spam or not spam (ham) by processing text data and training a model. We'll cover all the necessary steps from data loading, preprocessing, vectorization, model training, to evaluation.

Objectives

Preprocess text data: Convert raw text into a suitable format for machine learning.

Train a Naive Bayes classifier: Use a Naive Bayes model to classify emails as spam or not.

Evaluate model performance: Assess the model using metrics such as accuracy, precision, and recall.

Skills

This project will involve the following skills:

Text Preprocessing: Cleaning and transforming raw text data for machine learning.

Machine Learning Basics: Using Naive Bayes or Logistic Regression to perform classification.

Model Evaluation: Understanding performance metrics like accuracy, precision, and recall.

Tools

For this project, we will be using:

Python: The primary programming language.

Scikit-Learn: A machine learning library to build the model.

NLTK (Natural Language Toolkit): A toolkit for natural language processing tasks like tokenization and stop word removal.

Step-by-Step Process

Here’s the complete Python code for building a spam classifier. This code covers everything from loading the dataset to model training, evaluation, and sample prediction.

import pandas as pd

import numpy as np

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score, precision_score, recall_score

import nltk

from nltk.corpus import stopwords

import re

# Download stopwords if you haven't already

nltk.download('stopwords')

# Step 1: Load the dataset

data = pd.read_csv("spam.csv", encoding="latin-1")

data = data[['v1', 'v2']]

data.columns = ['label', 'message']

# Step 2: Preprocess the text data

stop_words = set(stopwords.words('english'))

def preprocess_text(text):

text = re.sub(r'\W', ' ', text) # Remove non-word characters

text = text.lower() # Convert to lowercase

text = re.sub(r'\s+', ' ', text) # Remove extra whitespace

text = ' '.join([word for word in text.split() if word not in stop_words])

return text

data['message'] = data['message'].apply(preprocess_text)

# Step 3: Convert text data to numerical data

vectorizer = CountVectorizer()

X = vectorizer.fit_transform(data['message']).toarray()

y = data['label'].apply(lambda x: 1 if x == 'spam' else 0)

#Complete Code on our Github Repo

Check complete code on Github here

Explanation of Each Step

Load Dataset:

- The dataset is loaded using

pandas. We assume the file is namedspam.csv. The dataset contains two columns: one for the label (spam or not) and one for the message.

- The dataset is loaded using

Text Preprocessing:

- We clean the text by removing non-alphabetical characters and converting all text to lowercase. We also remove stopwords, which are common words like "the" and "and" that do not contribute much to the model's performance.

Text Vectorization:

- We use

CountVectorizerfrom Scikit-Learn to convert the cleaned text data into a format suitable for machine learning (i.e., a bag-of-words model). This transforms the text into a numerical array where each feature represents the count of a particular word in the message.

- We use

Data Splitting:

- The data is split into training and testing sets using

train_test_split. 80% of the data is used for training, and 20% is reserved for testing the model.

- The data is split into training and testing sets using

Model Training:

- We use the Naive Bayes algorithm (

MultinomialNB) to train the model. This algorithm works well with text classification tasks, especially for spam classification.

- We use the Naive Bayes algorithm (

Model Evaluation:

- After training the model, we evaluate its performance using accuracy, precision, and recall. These metrics help us understand how well the model is distinguishing between spam and non-spam messages.

Test Message Classification:

- Finally, we use the trained model to classify a sample message (e.g., "Congratulations! You've won a free ticket.") as spam or not.

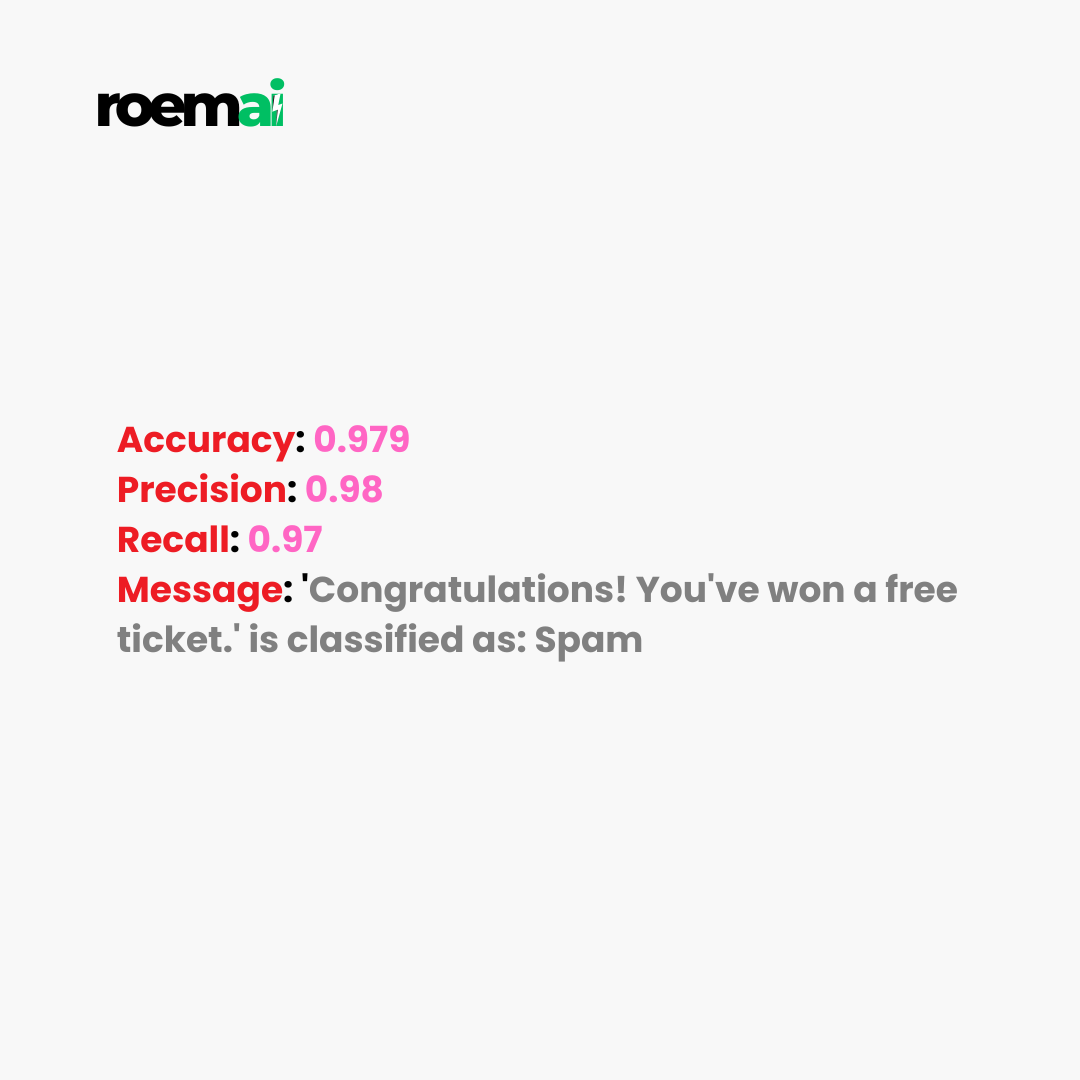

Expected Output

Running this code will produce the following results:

Model Accuracy: The overall accuracy of the model, showing how many predictions were correct.

Precision: The proportion of positive (spam) predictions that were actually spam.

Recall: The proportion of actual spam messages that were correctly identified by the model.

For example:

Conclusion

This spam classifier demonstrates how to preprocess text data, vectorize it into a machine-readable format, train a Naive Bayes classifier, and evaluate the model. By understanding the steps involved in building such classifiers, you can apply similar techniques to other text classification problems.

Let me know if you have any questions or need further assistance with this project!

Subscribe to my newsletter

Read articles from Roemai directly inside your inbox. Subscribe to the newsletter, and don't miss out.

Written by

Roemai

Roemai

At Roemai we are empowering individuals through education, innovation, and technology solutions with robotics, embedded systems, and AI.