What is ETL in Data Engineering?

Harvey Ducay

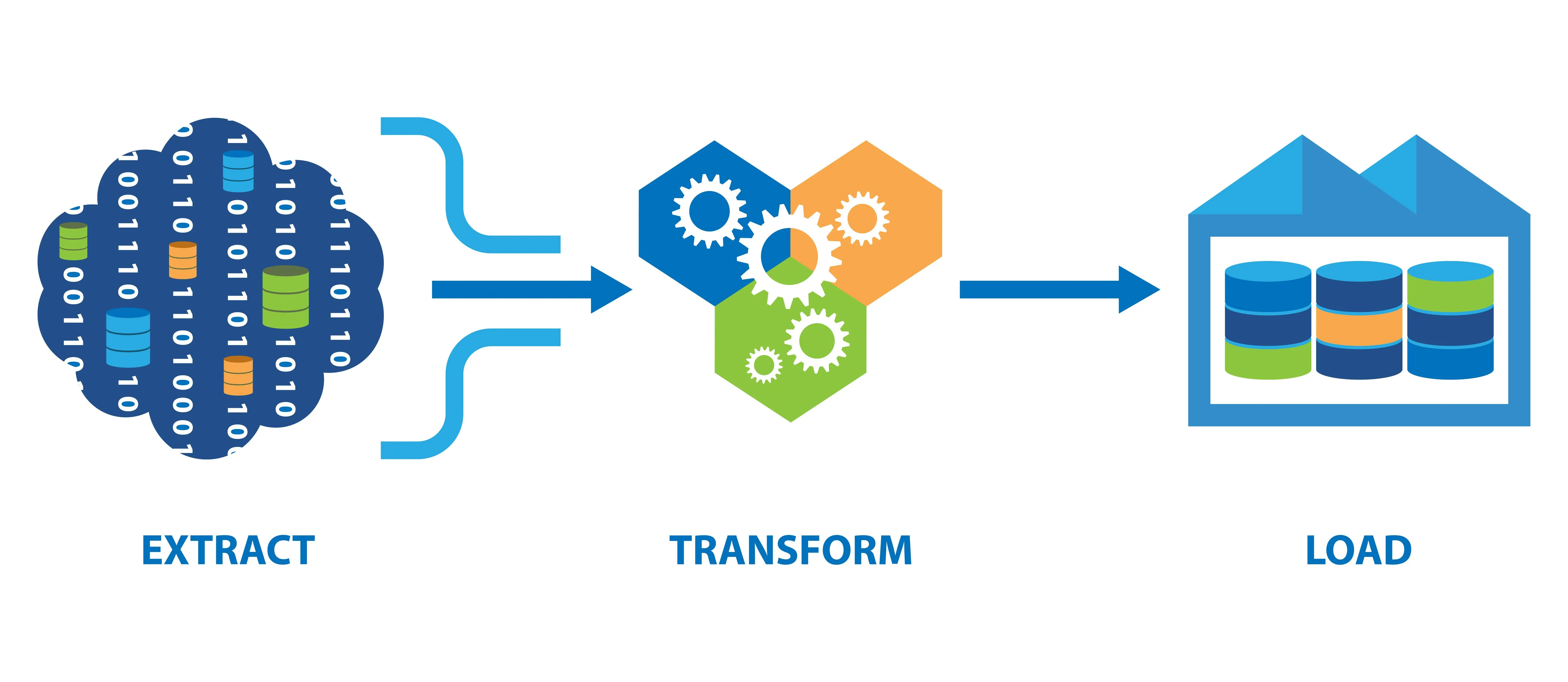

So imagine you're making a smoothie - that's basically what ETL is in the data world. First, you Extract all your ingredients (data) from different places, like grabbing berries from the fridge, bananas from the counter, and yogurt from the store - just like pulling data from different systems and files. Then comes the Transform part, where you wash the fruit, cut it up, and make sure everything's ready to blend - similar to cleaning up messy data, fixing errors, and making sure everything fits together nicely. Finally, you Load it all into your blender (or in data terms, your final database or warehouse) where it becomes something useful that everyone can consume!

“E-T-L” meaning

Extract

Data extraction is the first crucial step in the ETL process, involving the gathering of data from various source systems. This phase focuses on pulling raw data from multiple sources and preparing it for the transformation phase. The extraction process can be as simple as copying data from a single database or as complex as gathering information from dozens of disparate systems.
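To make that concrete, here's a minimal sketch of extraction from two kinds of sources using only Python's standard library. Everything named here is an assumption for illustration: the `ORDERS_CSV` export, the `customers` table, and the helper functions are hypothetical stand-ins, not part of any real system.

```python
import csv
import io
import sqlite3

# Hypothetical source 1: a CSV export, inlined so the sketch runs on its own.
ORDERS_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
"""


def extract_from_csv(text):
    """Return each CSV row as a dict of raw, untyped strings."""
    return list(csv.DictReader(io.StringIO(text)))


def make_demo_source_db():
    """Build a throwaway in-memory database that plays the role of a source system."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (name TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO customers VALUES (?, ?)",
        [("alice", "PH"), ("bob", "US")],
    )
    conn.commit()
    return conn


def extract_from_db(conn):
    """Return each row of the hypothetical `customers` table as a dict."""
    conn.row_factory = sqlite3.Row
    rows = conn.execute(
        "SELECT name, country FROM customers ORDER BY name"
    ).fetchall()
    return [dict(row) for row in rows]


# Extraction only copies the data out; nothing is cleaned or retyped yet.
raw_orders = extract_from_csv(ORDERS_CSV)
raw_customers = extract_from_db(make_demo_source_db())
```

Notice that the amounts are still strings at this point. Extraction just gets the data out; making it usable is the next phase's job.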

Transform

The transformation phase is where raw data becomes valuable business information. This critical stage involves converting extracted data into a format that's suitable for analysis and storage. During transformation, data undergoes various operations to ensure it meets business rules, quality standards, and technical requirements of the target system.
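As a rough illustration, a transform step often boils down to a function that takes raw rows and returns clean ones plus a pile of rejects. The sketch below assumes a hypothetical order record with `order_id`, `customer`, and `amount` fields, and the rules it applies (no blank customers, no negative amounts, no duplicate IDs) are made-up examples of business rules.

```python
def transform(raw_rows):
    """Clean raw order rows: cast types, normalize text, reject bad rows, drop duplicates."""
    clean, rejected, seen_ids = [], [], set()
    for row in raw_rows:
        try:
            order_id = int(row["order_id"])
            amount = round(float(row["amount"]), 2)
            customer = row["customer"].strip().lower()
        except (KeyError, ValueError, TypeError, AttributeError):
            # Missing field or a value that cannot be parsed: set it aside.
            rejected.append(row)
            continue
        # Example business rules (assumptions for this sketch).
        if not customer or amount < 0 or order_id in seen_ids:
            rejected.append(row)
            continue
        seen_ids.add(order_id)
        clean.append({"order_id": order_id, "customer": customer, "amount": amount})
    return clean, rejected


sample = [
    {"order_id": "1", "customer": " Alice ", "amount": "19.99"},
    {"order_id": "2", "customer": "bob", "amount": "n/a"},       # unparseable amount
    {"order_id": "1", "customer": "alice", "amount": "19.99"},   # duplicate order_id
]
clean_rows, rejected_rows = transform(sample)
```

Keeping the rejected rows instead of silently dropping them is a deliberate choice: it lets you see how much data failed the rules and why.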

Load

The loading phase represents the final step where transformed data is written into the target system. This phase requires careful planning to ensure data is loaded efficiently while maintaining system performance and data integrity.
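Here's one way the load step might look, sketched with SQLite from the standard library standing in for a real warehouse. The `orders` table and the `load` function are hypothetical. The two ideas it tries to show are loading inside a single transaction (so a failure leaves nothing half-written) and making the load safe to re-run.

```python
import sqlite3


def load(conn, rows):
    """Write transformed rows into a hypothetical `orders` table; return the table's row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT NOT NULL, amount REAL NOT NULL)"
    )
    # `with conn:` commits if the block succeeds and rolls back if it raises.
    with conn:
        # INSERT OR REPLACE keyed on order_id means re-running the job
        # overwrites existing rows instead of duplicating them.
        conn.executemany(
            "INSERT OR REPLACE INTO orders (order_id, customer, amount) "
            "VALUES (:order_id, :customer, :amount)",
            rows,
        )
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]


target = sqlite3.connect(":memory:")
rows = [
    {"order_id": 1, "customer": "alice", "amount": 19.99},
    {"order_id": 2, "customer": "bob", "amount": 5.0},
]
first_run = load(target, rows)
second_run = load(target, rows)  # same input again, same final state
```

A real warehouse would have its own bulk-load mechanism, so treat this as the shape of the idea, not a recipe.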

The Three Critical Pillars of ETL's Business Impact

1. Data-Driven Decision Making

Strategic Advantage

Consolidates data from multiple sources into a single source of truth

Integrates sales, marketing, financial, and operational data

Enables timely (near-real-time, when loads run frequently) business intelligence and reporting

Business Impact

Faster, more accurate decision making

Reduced analysis time and effort

Better resource allocation based on actual data

Improved forecasting and planning capabilities

2. Operational Excellence

Process Optimization

Automates manual data processing tasks

Standardizes data handling across the organization

Eliminates redundant data entry and processing

Ensures consistent data quality and formatting

Cost Benefits

Reduces manual labor costs by 40-60% on average

Minimizes errors and associated correction costs

Improves employee productivity

Shortens time-to-market for data-dependent projects

3. Customer Experience Enhancement

Customer Understanding

Creates comprehensive 360-degree customer views

Combines data from all customer touchpoints

Enables personalized marketing and service delivery

Supports predictive customer behavior analysis

Business Growth

Improves customer retention through better service

Increases cross-selling and upselling opportunities

Enables targeted marketing campaigns

Supports customer satisfaction monitoring and improvement

How can ETL go wrong?

Data extraction fails when source systems unexpectedly change formats or experience downtime, breaking automated processes. Legacy systems with outdated security protocols can become inaccessible, forcing rushed workarounds. Poor connectivity leads to incomplete datasets.
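Two common defenses against these extraction failures are checking the incoming schema and retrying on connection trouble. The sketch below is one possible shape for that, not a standard API: `EXPECTED_COLUMNS`, `SchemaDriftError`, `check_schema`, and `extract_with_retry` are all hypothetical names, and `fetch` is any function you supply that pulls rows from a source.

```python
import time

EXPECTED_COLUMNS = {"order_id", "customer", "amount"}  # assumed schema for this sketch


class SchemaDriftError(Exception):
    """Raised when a source row no longer has the columns the pipeline expects."""


def check_schema(rows, expected=EXPECTED_COLUMNS):
    """Fail loudly if any row is missing an expected column."""
    for index, row in enumerate(rows):
        missing = expected - set(row)
        if missing:
            raise SchemaDriftError(f"row {index} is missing columns: {sorted(missing)}")
    return rows


def extract_with_retry(fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(), retrying on ConnectionError with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return check_schema(fetch())
        except ConnectionError:
            if attempt == attempts:
                raise  # out of retries: surface the failure instead of hiding it
            sleep(base_delay * 2 ** (attempt - 1))
```

Schema drift is deliberately not retried here. If the source changed its format, waiting a few seconds won't fix it, so the job should stop and tell someone.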

Transformation can silently corrupt data when business rules change but the transformation code doesn't, because the job still runs without errors and nobody notices the output is wrong. Faulty loading processes can create database deadlocks, and multiple competing ETL jobs can bring production systems to a halt during peak hours, causing business disruption.
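One way to catch silent corruption before it reaches the warehouse is a reconciliation check between phases. This is a minimal sketch under assumptions: the `reconcile` function is hypothetical, and it assumes the source system can give you a row count and a control total for the `amount` field to compare against.

```python
import math


class ReconciliationError(Exception):
    """Raised when transformed data does not add up against the source."""


def reconcile(source_count, clean_rows, rejected_rows, expected_total, tolerance=0.01):
    """Check that every extracted row is accounted for and the amounts still add up."""
    accounted = len(clean_rows) + len(rejected_rows)
    if accounted != source_count:
        raise ReconciliationError(
            f"{source_count} rows extracted but {accounted} accounted for"
        )
    actual_total = math.fsum(row["amount"] for row in clean_rows)
    if abs(actual_total - expected_total) > tolerance:
        raise ReconciliationError(
            f"amount total {actual_total:.2f} differs from expected {expected_total:.2f}"
        )
    return actual_total
```

Running a check like this between transform and load turns a silent problem into a loud one: the job fails before bad numbers land in a report. It doesn't address deadlocks or competing jobs, which usually come down to scheduling and how the target database handles locking.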

Conclusion

ETL, or Extract, Transform, Load, is a data integration process that consolidates data from multiple sources into a central repository so organizations can run analytics and make informed decisions. ETL brings real advantages, such as better data quality, automation, and stronger business intelligence, but it can also run into trouble: sources that change or go down, transformations that quietly produce wrong results, and loads that compete for the same systems. To reduce these risks, build in robust error handling, validate data at each phase, and automate the pipeline so it runs the same way every time.

P.S. Let's Build Something Cool Together!

Drowning in data? Pipelines giving you a headache? I've been there, and I actually enjoy fixing these things. I'm that data engineer who:

- Makes ETL pipelines behave
- Turns data warehouse chaos into zen
- Gets ML models from laptop to production

If you find this blog interesting, connect with me on LinkedIn and make sure to leave a message!
